I. Installation reference

1. Ubuntu 18.04 + TensorRT configuration guide

Ubuntu 18.04 + TensorRT configuration guide (Jianshu): http://events.jianshu.io/p/147aecd1e084

II. Problems encountered

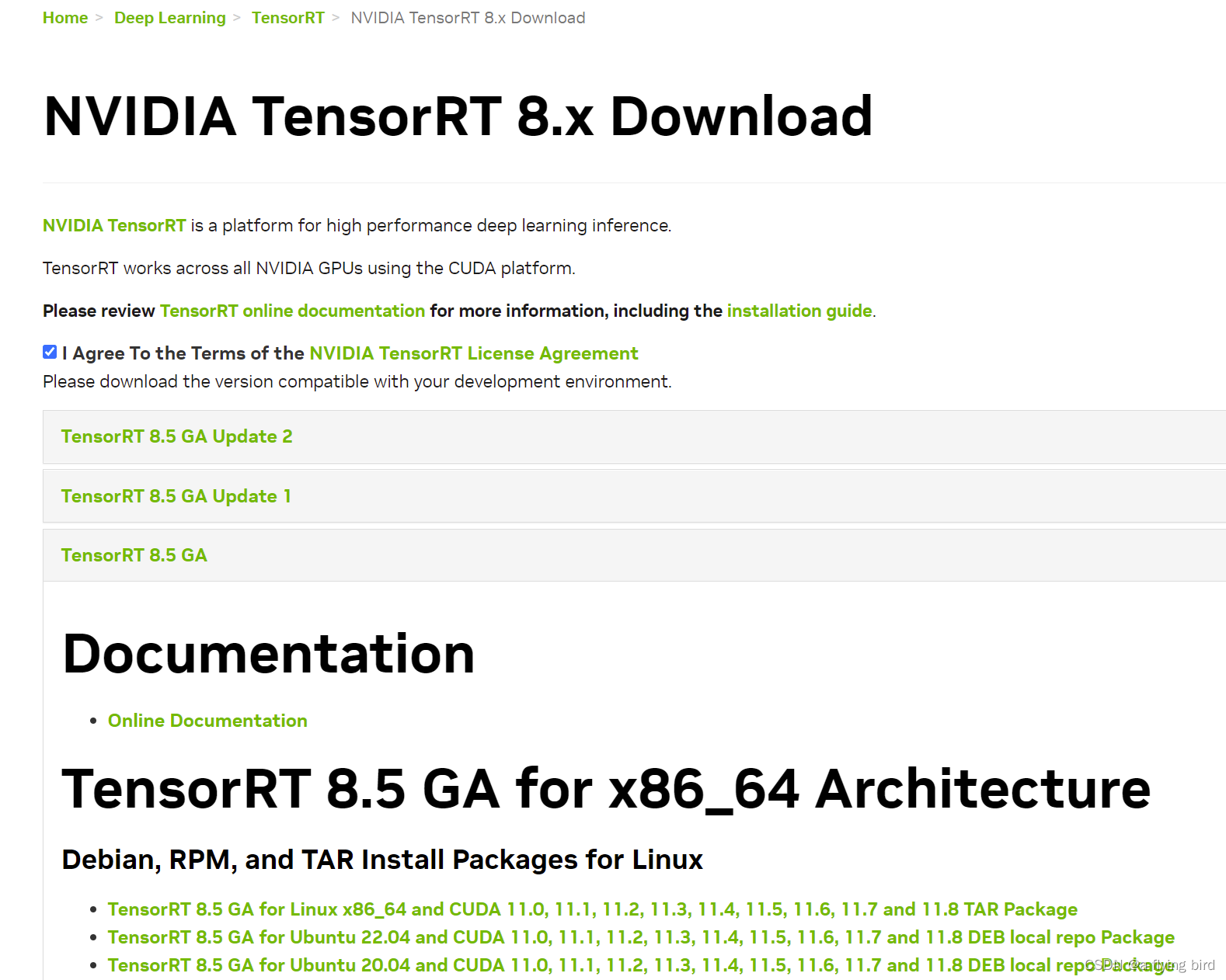

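1. Check the official site for the CUDA and cuDNN versions that match your TensorRT release, and install them. For the installation steps, see the previous article.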
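To compare what is already installed against the versions listed on the official site, a small check like the following can help. It is only a sketch: it assumes the CUDA toolkit is under `/usr/local/cuda` (or wherever `CUDA_HOME` points) and that the cuDNN headers sit in one of the usual include directories. The helper names `parse_cuda_release` and `parse_cudnn_version` are made up for this example.

```shell
# Print the installed CUDA toolkit and cuDNN versions.
# Assumption: the toolkit lives under /usr/local/cuda unless CUDA_HOME is set.
CUDA_HOME="${CUDA_HOME:-/usr/local/cuda}"

# Read `nvcc --version` output on stdin and print the "X.Y" after "release".
parse_cuda_release() {
    sed -n 's/.*release \([0-9][0-9]*\.[0-9][0-9]*\).*/\1/p' | head -n 1
}

# Print MAJOR.MINOR.PATCH from a cuDNN header file passed as $1.
parse_cudnn_version() {
    awk '/^#define CUDNN_MAJOR/      {maj=$3}
         /^#define CUDNN_MINOR/      {min=$3}
         /^#define CUDNN_PATCHLEVEL/ {pat=$3}
         END { if (maj != "") print maj "." min "." pat }' "$1"
}

if [ -x "$CUDA_HOME/bin/nvcc" ]; then
    echo "CUDA:  $("$CUDA_HOME/bin/nvcc" --version | parse_cuda_release)"
else
    echo "CUDA:  nvcc not found under $CUDA_HOME"
fi

# cuDNN 8 keeps the version macros in cudnn_version.h; older releases use cudnn.h.
for header in "$CUDA_HOME/include/cudnn_version.h" /usr/include/cudnn_version.h \
              "$CUDA_HOME/include/cudnn.h" /usr/include/cudnn.h; do
    if [ -f "$header" ] && grep -q '^#define CUDNN_MAJOR' "$header"; then
        echo "cuDNN: $(parse_cudnn_version "$header")"
        break
    fi
done
```

If either line comes back empty or "not found", the toolkit is probably installed somewhere else; set `CUDA_HOME` accordingly and run the check again.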

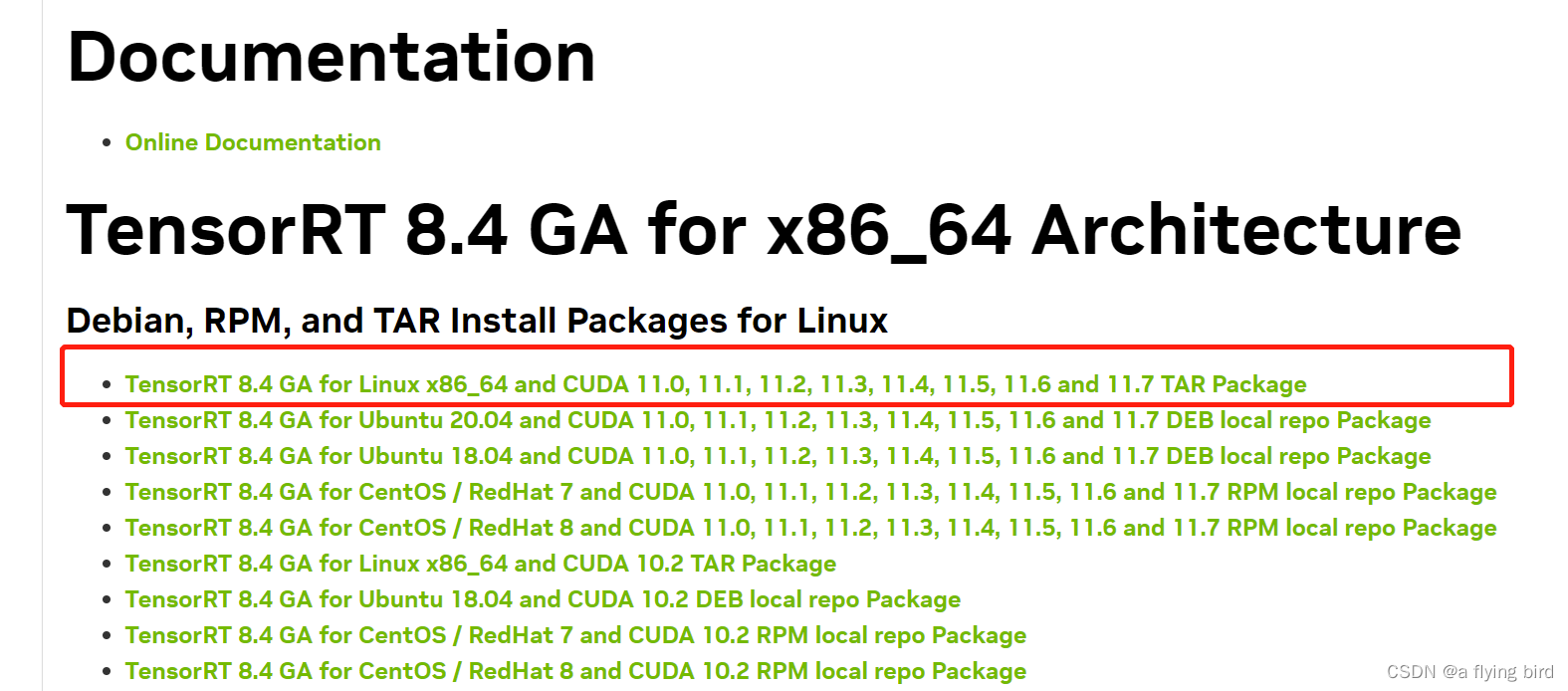

https://developer.nvidia.com/nvidia-tensorrt-8x-download

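Note: TensorRT 8 is published as GA and EA releases. EA (Early Access) is a pre-release that may be unstable; GA (General Availability) is the fully tested stable release.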

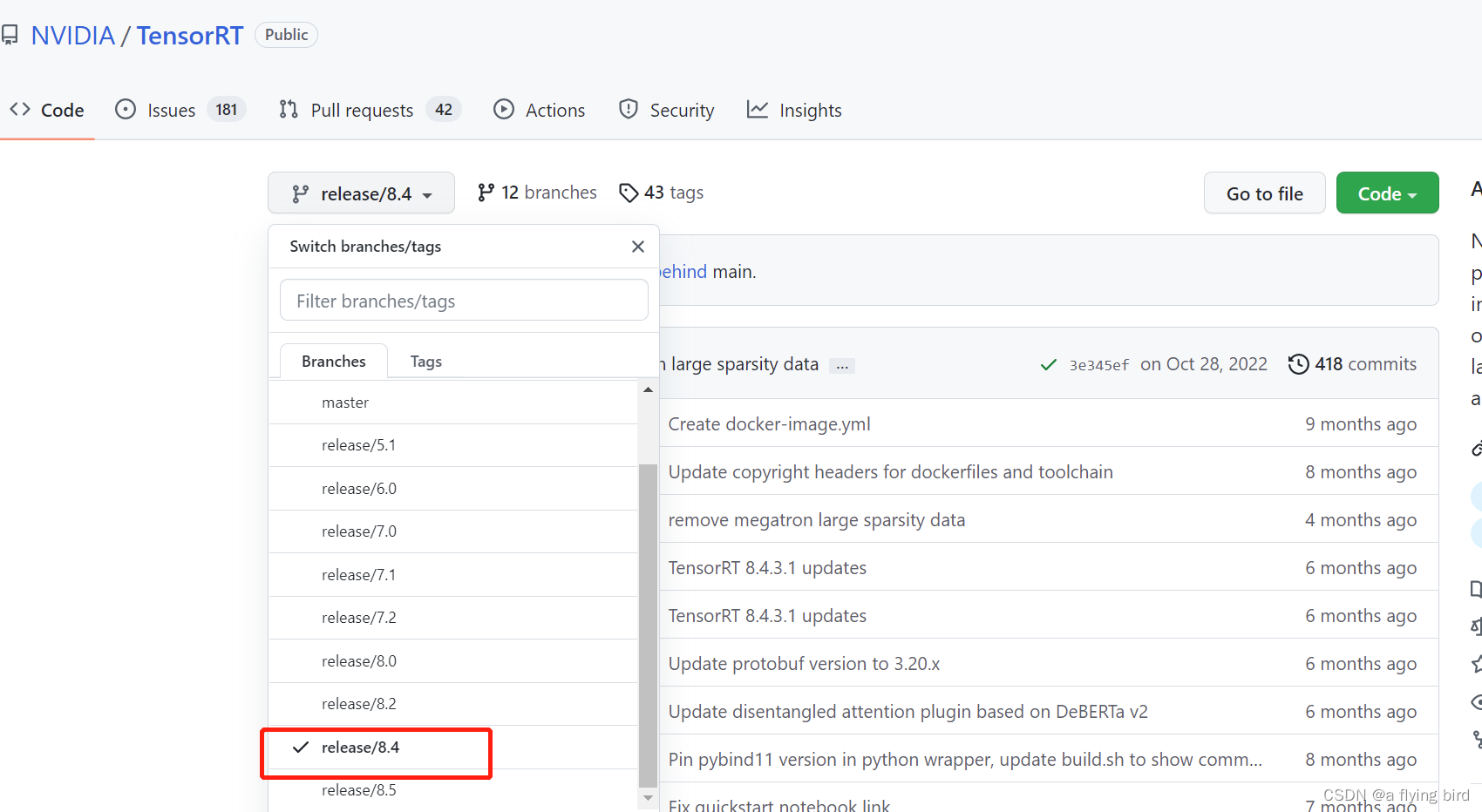

2. If you downloaded TensorRT 8.4, the source checked out from Git must also be 8.4.

NVIDIA/TensorRT on GitHub: https://github.com/nvidia/TensorRT
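One way to keep the source in step with the downloaded package is to check out the matching release branch when cloning. The sketch below assumes the repository names its branches `release/<major>.<minor>`; confirm this with `git branch -r` before relying on it. The helpers `trt_branch` and `clone_trt_source` are hypothetical names, and `8.4.1.5` is only an example version string.

```shell
# Map a TensorRT package version to a release branch name,
# e.g. 8.4.1.5 -> release/8.4 (branch naming is an assumption; verify it).
trt_branch() {
    echo "release/$(echo "$1" | cut -d. -f1,2)"
}

# Clone the source at the branch matching the given package version,
# then fetch the submodules the build depends on.
clone_trt_source() {
    branch=$(trt_branch "$1")
    git clone -b "$branch" https://github.com/NVIDIA/TensorRT.git &&
    (cd TensorRT && git submodule update --init --recursive)
}

# Usage (needs network access):
#   clone_trt_source 8.4.1.5
```

Cloning the default branch and pairing it with an older package is a common cause of header and library mismatches at build time, which is why the branch is pinned here.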

3. CMake error: No CMAKE_CUDA_COMPILER could be found.

See luckwsm's CSDN post on this error. It describes running cmake and getting "The CUDA compiler identification is unknown", followed by "CMake Error at CMakeLists.txt:4 (enable_language): No CMAKE_CUDA_COMPILER could be found." https://blog.csdn.net/luckwsm/article/details/120866609
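This error usually means CMake cannot locate `nvcc`. A minimal sketch of one fix is to find `nvcc` and export it through the `CUDACXX` environment variable, which CMake reads when it first configures a build tree. The search locations (`PATH`, then `CUDA_HOME`, then `/usr/local/cuda`) are assumptions about a typical install, and `find_nvcc` is a helper name invented for this example.

```shell
# Print the path to nvcc, searching PATH first, then CUDA_HOME, then /usr/local/cuda.
find_nvcc() {
    if command -v nvcc >/dev/null 2>&1; then
        command -v nvcc
        return 0
    fi
    for dir in "${CUDA_HOME:-}" /usr/local/cuda; do
        if [ -n "$dir" ] && [ -x "$dir/bin/nvcc" ]; then
            echo "$dir/bin/nvcc"
            return 0
        fi
    done
    return 1
}

if nvcc_path=$(find_nvcc); then
    # CMake picks up CUDACXX on the first configure of a build directory.
    export CUDACXX="$nvcc_path"
    export PATH="$(dirname "$nvcc_path"):$PATH"
    echo "Using CUDA compiler: $CUDACXX"
else
    echo "nvcc not found; install the CUDA toolkit or set CUDA_HOME" >&2
fi
```

After exporting the variable, delete the old build directory (or its `CMakeCache.txt`) and rerun cmake, since a cached failed detection is not retried. Passing `-DCMAKE_CUDA_COMPILER=<path to nvcc>` on the cmake command line is an equivalent alternative.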

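4. make install fails

This was an environment variable problem; reconfigure the variables. Alternatively, clear the environment variables involved and redo the setup from scratch.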
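Clearing the variables can be sketched as follows. The variable names are the ones exported in the environment setup at the end of this article. The helpers `reset_trt_env` and `strip_ld_path` are hypothetical names for this example.

```shell
# Unset the TensorRT-related variables so they can be exported again cleanly.
reset_trt_env() {
    unset TRT_RELEASE TENSORRT_LIBRARY_INFER TENSORRT_LIBRARY_INFER_PLUGIN TENSORRT_LIBRARY_MYELIN
}

# Remove every LD_LIBRARY_PATH entry containing the substring $1,
# so stale TensorRT directories do not shadow the new ones.
strip_ld_path() {
    LD_LIBRARY_PATH=$(printf '%s' "${LD_LIBRARY_PATH:-}" | tr ':' '\n' | grep -v -F -- "$1" | paste -sd: -)
    export LD_LIBRARY_PATH
}

# Usage:
#   reset_trt_env
#   strip_ld_path TensorRT
```

Run both in the same shell that will run the build, then export the variables again and rerun cmake from an empty build directory.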

III. Related references:

1. Building the TensorRT source on Ubuntu (adding a custom plugin)

Building the TensorRT source on Ubuntu (adding a custom plugin), baobei0112's CSDN blog: https://blog.csdn.net/baobei0112/article/details/122680910. The post uses TensorRT7.0.0.11-cuda10.2-cudnn7.6-ubuntu18.04 as its example: download the official tar package that matches the installed CUDA version, then clone the source from https://github.com/nvidia/TensorRT and update its submodules.

Environment variable setup:

cd TRT_ROOT

export TRT_RELEASE=`pwd`/TensorRT-7.0.0.11

export TENSORRT_LIBRARY_INFER=$TRT_RELEASE/targets/x86_64-linux-gnu/lib/libnvinfer.so.7

export TENSORRT_LIBRARY_INFER_PLUGIN=$TRT_RELEASE/targets/x86_64-linux-gnu/lib/libnvinfer_plugin.so.7

export TENSORRT_LIBRARY_MYELIN=$TRT_RELEASE/targets/x86_64-linux-gnu/lib/libmyelin.so

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:$TRT_RELEASE/lib
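Before running cmake, it can be worth confirming that each exported variable points at a file that actually exists. A typo here tends to surface much later as a link or install failure. The function below is a sketch, and `check_trt_env` is a name invented for this example.

```shell
# Check that each TensorRT library variable is set and points to an existing file.
# Prints one line per variable and returns non-zero if any check fails.
check_trt_env() {
    status=0
    for name in TENSORRT_LIBRARY_INFER TENSORRT_LIBRARY_INFER_PLUGIN TENSORRT_LIBRARY_MYELIN; do
        eval "value=\${$name:-}"   # indirect lookup of the variable named in $name
        if [ -z "$value" ]; then
            echo "MISSING  $name is not set"
            status=1
        elif [ ! -e "$value" ]; then
            echo "MISSING  $name=$value (no such file)"
            status=1
        else
            echo "OK       $name=$value"
        fi
    done
    return $status
}

# Usage, after the exports above:
#   check_trt_env
```

The library file names depend on the TensorRT release. The `.so.7` suffix above belongs to the 7.x package used in the referenced post, so list the package's `lib` directory and adjust the names for the version you downloaded.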