1. Background

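Note: "Static Pod" here is not the Kubernetes Static Pod. It means repackaging the program you want to debug into a new image together with Delve, so the Pod gets recreated. There is another scenario in which the running Pod must not be restarted, and the name is only meant to distinguish this approach from that one.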

I recently ran into a problem. Here is a brief background.

After the virtual machines were restarted, Redis Operator could no longer form the Redis cluster. It failed with: [ERR] Node 10.233.75.71:6379 is not empty. Either the node already knows other nodes (check with CLUSTER NODES) or contains some key in database 0.

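This is a typical error, and a quick search turns up the fix. After the VMs restart, the Pods are recreated and their IPs change, but nodes.conf is persisted, so it still records the previous cluster's topology. The fix is therefore simple: delete the aof/rdb/nodes.conf files. For details, see the article "Operator: Redis Cannot Rebuild the Cluster After Restarting the VMs".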

To let Redis Operator handle this automatically, I modified its source code: if forming the cluster fails with the error above, it deletes the aof/rdb/nodes.conf files and rebuilds the cluster.

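After the change, Redis Operator kept getting stuck somewhere. According to the Redis Operator source code, even if the RedisCluster resource does not change, the operator reconciles again every 10s, and even when a reconcile returns an error, it reconciles again after 120s. The logs below, however, simply stop: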

```text
{"level":"info","ts":1671086466.020392,"logger":"controllers.RedisCluster","msg":"Will reconcile redis cluster operator in again 10 seconds","Request.Namespace":"redis-system","Request.Name":"redis"}
{"level":"info","ts":1671086476.0212002,"logger":"controllers.RedisCluster","msg":"Reconciling opstree redis Cluster controller","Request.Namespace":"redis-system","Request.Name":"redis"}
{"level":"info","ts":1671086476.0256453,"logger":"controller_redis","msg":"Redis statefulset get action was successful","Request.StatefulSet.Namespace":"redis-system","Request.StatefulSet.Name":"redis-leader"}
{"level":"info","ts":1671086476.0356286,"logger":"controller_redis","msg":"Reconciliation Complete, no Changes required.","Request.StatefulSet.Namespace":"redis-system","Request.StatefulSet.Name":"redis-leader"}
{"level":"info","ts":1671086476.0390885,"logger":"controller_redis","msg":"Redis service get action is successful","Request.Service.Namespace":"redis-system","Request.Service.Name":"redis-leader-headless"}
{"level":"info","ts":1671086476.0410442,"logger":"controller_redis","msg":"Redis service is already in-sync","Request.Service.Namespace":"redis-system","Request.Service.Name":"redis-leader-headless"}
{"level":"info","ts":1671086476.0445807,"logger":"controller_redis","msg":"Redis service get action is successful","Request.Service.Namespace":"redis-system","Request.Service.Name":"redis-leader"}
{"level":"info","ts":1671086476.0464318,"logger":"controller_redis","msg":"Redis service is already in-sync","Request.Service.Namespace":"redis-system","Request.Service.Name":"redis-leader"}
{"level":"info","ts":1671086476.0476692,"logger":"controller_redis","msg":"Redis PodDisruptionBudget get action failed","Request.PodDisruptionBudget.Namespace":"redis-system","Request.PodDisruptionBudget.Name":"redis-leader"}
{"level":"info","ts":1671086476.047689,"logger":"controller_redis","msg":"Reconciliation Successful, no PodDisruptionBudget Found.","Request.PodDisruptionBudget.Namespace":"redis-system","Request.PodDisruptionBudget.Name":"redis-leader"}
{"level":"info","ts":1671086476.0511396,"logger":"controller_redis","msg":"Redis statefulset get action was successful","Request.StatefulSet.Namespace":"redis-system","Request.StatefulSet.Name":"redis-leader"}
{"level":"info","ts":1671086476.05468,"logger":"controller_redis","msg":"Redis statefulset get action was successful","Request.StatefulSet.Namespace":"redis-system","Request.StatefulSet.Name":"redis-follower"}
{"level":"info","ts":1671086476.0869293,"logger":"controller_redis","msg":"Reconciliation Complete, no Changes required.","Request.StatefulSet.Namespace":"redis-system","Request.StatefulSet.Name":"redis-follower"}
{"level":"info","ts":1671086476.0903852,"logger":"controller_redis","msg":"Redis service get action is successful","Request.Service.Namespace":"redis-system","Request.Service.Name":"redis-follower-headless"}
{"level":"info","ts":1671086476.0920627,"logger":"controller_redis","msg":"Redis service is already in-sync","Request.Service.Namespace":"redis-system","Request.Service.Name":"redis-follower-headless"}
{"level":"info","ts":1671086476.0965445,"logger":"controller_redis","msg":"Redis service get action is successful","Request.Service.Namespace":"redis-system","Request.Service.Name":"redis-follower"}
{"level":"info","ts":1671086476.0986211,"logger":"controller_redis","msg":"Redis service is already in-sync","Request.Service.Namespace":"redis-system","Request.Service.Name":"redis-follower"}
{"level":"info","ts":1671086476.099773,"logger":"controller_redis","msg":"Redis PodDisruptionBudget get action failed","Request.PodDisruptionBudget.Namespace":"redis-system","Request.PodDisruptionBudget.Name":"redis-follower"}
{"level":"info","ts":1671086476.0997992,"logger":"controller_redis","msg":"Reconciliation Successful, no PodDisruptionBudget Found.","Request.PodDisruptionBudget.Namespace":"redis-system","Request.PodDisruptionBudget.Name":"redis-follower"}
{"level":"info","ts":1671086476.1040905,"logger":"controller_redis","msg":"Redis statefulset get action was successful","Request.StatefulSet.Namespace":"redis-system","Request.StatefulSet.Name":"redis-follower"}
{"level":"info","ts":1671086476.104127,"logger":"controllers.RedisCluster","msg":"Creating redis cluster by executing cluster creation commands","Request.Namespace":"redis-system","Request.Name":"redis","Leaders.Ready":"3","Followers.Ready":"3"}
{"level":"info","ts":1671086476.1103818,"logger":"controller_redis","msg":"Successfully got the ip for redis","Request.RedisManager.Namespace":"redis-system","Request.RedisManager.Name":"redis-leader-0","ip":"10.233.74.86"}
{"level":"info","ts":1671086476.1114752,"logger":"controller_redis","msg":"Redis cluster nodes are listed","Request.RedisManager.Namespace":"redis-system","Request.RedisManager.Name":"redis","Output":"cf0291a193357d03c6c2e6a67cf012689840affe 10.233.74.86:6379@16379 myself,master - 0 1671078114882 1 connected\n"}
{"level":"info","ts":1671086476.1115515,"logger":"controller_redis","msg":"Total number of redis nodes are","Request.RedisManager.Namespace":"redis-system","Request.RedisManager.Name":"redis","Nodes":"1"}
{"level":"info","ts":1671086476.1184812,"logger":"controller_redis","msg":"Successfully got the ip for redis","Request.RedisManager.Namespace":"redis-system","Request.RedisManager.Name":"redis-leader-0","ip":"10.233.74.86"}
{"level":"info","ts":1671086476.1197286,"logger":"controller_redis","msg":"Redis cluster nodes are listed","Request.RedisManager.Namespace":"redis-system","Request.RedisManager.Name":"redis","Output":"cf0291a193357d03c6c2e6a67cf012689840affe 10.233.74.86:6379@16379 myself,master - 0 1671078114882 1 connected\n"}
{"level":"info","ts":1671086476.1197934,"logger":"controller_redis","msg":"Number of redis nodes are","Request.RedisManager.Namespace":"redis-system","Request.RedisManager.Name":"redis","Nodes":"1","Type":"leader"}
{"level":"info","ts":1671086476.1198037,"logger":"controllers.RedisCluster","msg":"Not all leader are part of the cluster...","Request.Namespace":"redis-system","Request.Name":"redis","Leaders.Count":1,"Instance.Size":3}
{"level":"info","ts":1671086476.1235414,"logger":"controller_redis","msg":"Successfully got the ip for redis","Request.RedisManager.Namespace":"redis-system","Request.RedisManager.Name":"redis-leader-0","ip":"10.233.74.86"}
{"level":"info","ts":1671086476.1272135,"logger":"controller_redis","msg":"Successfully got the ip for redis","Request.RedisManager.Namespace":"redis-system","Request.RedisManager.Name":"redis-leader-1","ip":"10.233.97.165"}
{"level":"info","ts":1671086476.1303189,"logger":"controller_redis","msg":"Successfully got the ip for redis","Request.RedisManager.Namespace":"redis-system","Request.RedisManager.Name":"redis-leader-2","ip":"10.233.75.68"}
{"level":"info","ts":1671086476.130354,"logger":"controller_redis","msg":"Redis Add Slots command for single node cluster is","Request.RedisManager.Namespace":"redis-system","Request.RedisManager.Name":"redis","Command":["redis-cli","--cluster","create","10.233.74.86:6379","10.233.97.165:6379","10.233.75.68:6379","--cluster-yes"]}
{"level":"info","ts":1671086476.133076,"logger":"controller_redis","msg":"Redis cluster creation command is","Request.RedisManager.Namespace":"redis-system","Request.RedisManager.Name":"redis","Command":["redis-cli","--cluster","create","10.233.74.86:6379","10.233.97.165:6379","10.233.75.68:6379","--cluster-yes","-a","T1XmQh9U"]}
{"level":"info","ts":1671086476.1361492,"logger":"controller_redis","msg":"Pod Counted successfully","Request.RedisManager.Namespace":"redis-system","Request.RedisManager.Name":"redis","Count":0,"Container Name":"redis-leader"}
```

```shell
root@lvs-229:~# date +'%Y-%m-%d %H:%M:%S' -d "@1671086476.1361492"
2022-12-15 14:41:16
root@lvs-229:~#
```

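As you can see, the last log line was written 20 minutes earlier, which is very strange. The program stopped around `Pod Counted successfully`, yet after going through the source code I found nothing that could block there. So what made the program "hang"? Because of how a Redis cluster is formed, I cannot reproduce this on my local Windows 10 machine. Redis Operator only runs properly inside a K8S cluster. So I needed a way to debug my modified Redis Operator inside a K8S Pod.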

2. Building the Source

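To debug the source code, the binary must be built with compiler optimizations and inlining disabled. The Go compiler optimizes and inlines by default, which makes breakpoints and variable inspection unreliable.

```shell
go build -gcflags=all="-N -l" -o manager ./cmd/operator/main.go
```

As shown above, `-N` disables optimizations and `-l` disables inlining. The `all=` prefix applies these flags to every package, including dependencies.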

3. Dockerfile

可以Debug的二进制程序准备好了,下面我们就可以准备Dockerfile,上传镜像了,需要注意的是,我们需要在启动的时候通过delve来启动,才能让我们的IDEA连接到Pod当中

FROM golang:1.19.4

MAINTAINER skyguard-bigdata

WORKDIR /

USER root

COPY manager .

RUN chmod +x /manager

RUN go env -w GO111MODULE=on && \

go env -w GOPROXY=https://goproxy.cn,direct && \

go install github.com/go-delve/delve/cmd/dlv@latest

EXPOSE 12345

CMD dlv --listen=:12345 --headless=true --api-version=2 --accept-multiclient exec manager -- --leader-elect

Build the image:

```shell
[root@9a394601b73f redis-operator]# docker build -t redis-operator:0.13.2-debug -f Dockerfile-Debug .
Sending build context to Docker daemon 188.4MB
Step 1/9 : FROM golang:1.19.4
 ---> 180567aa84db
Step 2/9 : MAINTAINER skyguard-bigdata
 ---> Using cache
 ---> 5750b24ad20d
Step 3/9 : WORKDIR /
 ---> Using cache
 ---> 1c31e39cec1d
Step 4/9 : USER root
 ---> Using cache
 ---> a059bffdeaf7
Step 5/9 : COPY manager .
 ---> Using cache
 ---> fec9465515d8
Step 6/9 : RUN chmod +x /manager
 ---> Using cache
 ---> 19e9cabec7f7
Step 7/9 : RUN go env -w GO111MODULE=on && go env -w GOPROXY=https://goproxy.cn,direct && go install github.com/go-delve/delve/cmd/dlv@latest
 ---> Running in 5dfa25abd876
go: downloading github.com/go-delve/delve v1.20.1
go: downloading github.com/sirupsen/logrus v1.6.0
go: downloading github.com/spf13/cobra v1.1.3
go: downloading gopkg.in/yaml.v2 v2.4.0
go: downloading github.com/mattn/go-isatty v0.0.3
go: downloading github.com/cosiner/argv v0.1.0
go: downloading github.com/derekparker/trie v0.0.0-20200317170641-1fdf38b7b0e9
go: downloading github.com/go-delve/liner v1.2.3-0.20220127212407-d32d89dd2a5d
go: downloading github.com/google/go-dap v0.6.0
go: downloading go.starlark.net v0.0.0-20220816155156-cfacd8902214
go: downloading golang.org/x/sys v0.0.0-20220908164124-27713097b956
go: downloading github.com/hashicorp/golang-lru v0.5.4
go: downloading golang.org/x/arch v0.0.0-20190927153633-4e8777c89be4
go: downloading github.com/cpuguy83/go-md2man/v2 v2.0.0
go: downloading github.com/spf13/pflag v1.0.5
go: downloading github.com/mattn/go-runewidth v0.0.13
go: downloading github.com/cilium/ebpf v0.7.0
go: downloading github.com/russross/blackfriday/v2 v2.0.1
go: downloading github.com/rivo/uniseg v0.2.0
go: downloading github.com/shurcooL/sanitized_anchor_name v1.0.0
Removing intermediate container 5dfa25abd876
 ---> 0397bab71dd9
Step 8/9 : EXPOSE 12345
 ---> Running in 3e585f70d0a6
Removing intermediate container 3e585f70d0a6
 ---> 3dd708e29767
Step 9/9 : CMD dlv --listen=:12345 --headless=true --api-version=2 --accept-multiclient exec manager
 ---> Running in 0d8181e63ea7
Removing intermediate container 0d8181e63ea7
 ---> 77f30b173d34
Successfully built 77f30b173d34
Successfully tagged redis-operator:0.13.2-debug
[root@9a394601b73f redis-operator]# docker images
REPOSITORY       TAG            IMAGE ID       CREATED          SIZE
redis-operator   0.13.2-debug   77f30b173d34   10 seconds ago   1.21GB
```

Then push the image to your registry.

4. Service

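Redis Operator previously did not serve anything, so it had no Service. Here we need to expose port 12345.

Note: if you can reach the K8S cluster directly, you can expose the port with a NodePort Service instead and skip the Apisix step.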

```yaml
kind: Service
apiVersion: v1
metadata:
  name: redis-operator-debug
  namespace: redis-system
spec:
  type: ClusterIP
  ports:
    - name: delve
      port: 12345
      targetPort: 12345
  selector:
    control-plane: redis-operator
```

```shell
root@lvs-229:~# kubectl get svc -n redis-system
NAME                      TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)             AGE
redis-follower            ClusterIP   10.233.5.220   <none>        6379/TCP,9121/TCP   28h
redis-follower-headless   ClusterIP   None           <none>        6379/TCP            28h
redis-leader              ClusterIP   10.233.0.234   <none>        6379/TCP,9121/TCP   28h
redis-leader-headless     ClusterIP   None           <none>        6379/TCP            28h
redis-operator-debug      ClusterIP   10.233.19.68   <none>        12345/TCP           19s
root@lvs-229:~#
```
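The Service only forwards traffic to Pods whose labels contain every key/value pair in `spec.selector`, so the operator Pod must carry the `control-plane: redis-operator` label. The sketch below models that equality-based matching. The Pod labels in the example are hypothetical, and `kubectl get endpoints redis-operator-debug -n redis-system` is the practical way to confirm that the Service actually picked up the Pod.

```go
package main

import "fmt"

// selectorMatches models equality-based Service selectors: every key in the
// selector must exist on the Pod with the same value. A Service without a
// selector gets no automatically managed endpoints, so an empty selector
// is treated as matching nothing here.
func selectorMatches(selector, podLabels map[string]string) bool {
	if len(selector) == 0 {
		return false
	}
	for k, v := range selector {
		if got, ok := podLabels[k]; !ok || got != v {
			return false
		}
	}
	return true
}

func main() {
	selector := map[string]string{"control-plane": "redis-operator"}
	// Hypothetical labels of the operator Pod.
	operatorPod := map[string]string{"control-plane": "redis-operator", "pod-template-hash": "546fdc76b7"}
	redisPod := map[string]string{"app": "redis-leader"}
	fmt.Println(selectorMatches(selector, operatorPod), selectorMatches(selector, redisPod))
}
```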

5. Apisix

Our K8S cluster is exposed to the outside through the Apisix gateway, so we also need to add a layer-4 (stream) proxy route in Apisix. Only then can an external Delve client reach the Pod inside K8S.

```http
PUT {{ApisixManagerAddr}}/apisix/admin/stream_routes/redis-operator-debug
Content-Type: {{ContentType}}
X-API-KEY: {{ApisixKey}}

{
  "server_port": 9107,
  "upstream": {
    "type": "roundrobin",
    "nodes": {
      "10.233.19.68:12345": 1
    }
  }
}
```

In-cluster DNS resolution currently has some issues, so the upstream uses the Service's ClusterIP (10.233.19.68) instead of its DNS name.

6. Deployment

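Change the image of the Redis Operator Deployment to the one pushed earlier, and run the container as privileged (`privileged: true`).

Make sure to grant the container these privileges. Otherwise debugging will run into problems, because Delve relies on ptrace to control the debugged process.

```yaml
securityContext:
  privileged: true
```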
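If debugging still misbehaves, one quick check is whether the process inside the container actually has the `CAP_SYS_PTRACE` capability (number 19 in `linux/capability.h`) in its effective set. The sketch below parses the `CapEff` line of `/proc/self/status`. Capabilities are only part of the picture (seccomp or AppArmor profiles can also block ptrace), which is presumably why `privileged: true` is the simplest fix. The helper name is hypothetical.

```go
package main

import (
	"bufio"
	"fmt"
	"os"
	"strconv"
	"strings"
)

const capSysPtrace = 19 // CAP_SYS_PTRACE in linux/capability.h

// hasPtraceCap parses the text of /proc/<pid>/status and reports whether
// CAP_SYS_PTRACE is present in the effective capability set (CapEff).
func hasPtraceCap(status string) (bool, error) {
	sc := bufio.NewScanner(strings.NewReader(status))
	for sc.Scan() {
		line := sc.Text()
		if !strings.HasPrefix(line, "CapEff:") {
			continue
		}
		hex := strings.TrimSpace(strings.TrimPrefix(line, "CapEff:"))
		mask, err := strconv.ParseUint(hex, 16, 64)
		if err != nil {
			return false, err
		}
		return mask&(1<<capSysPtrace) != 0, nil
	}
	return false, fmt.Errorf("CapEff line not found")
}

func main() {
	data, err := os.ReadFile("/proc/self/status")
	if err != nil {
		fmt.Println("cannot read /proc/self/status:", err)
		return
	}
	ok, err := hasPtraceCap(string(data))
	fmt.Println("CAP_SYS_PTRACE effective:", ok, err)
}
```

For reference, a commonly seen value for Docker's default capability set is `00000000a80425fb`, which does not include bit 19, while a privileged container typically shows all bits set.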

```shell
root@lvs-229:~# kubectl logs -f --tail 100 -n redis-system redis-operator-546fdc76b7-lqz9b
2022-12-15T08:23:44Z error layer=debugger could not create config directory: mkdir .config: permission denied
2022-12-15T08:23:44Z warning layer=rpc Listening for remote connections (connections are not authenticated nor encrypted)
API server listening at: [::]:12345
```

After updating the Deployment, wait for Kubernetes to start the new Pod and check its logs as shown above. The Pod is waiting for a Delve client to connect. Next, start IDEA.

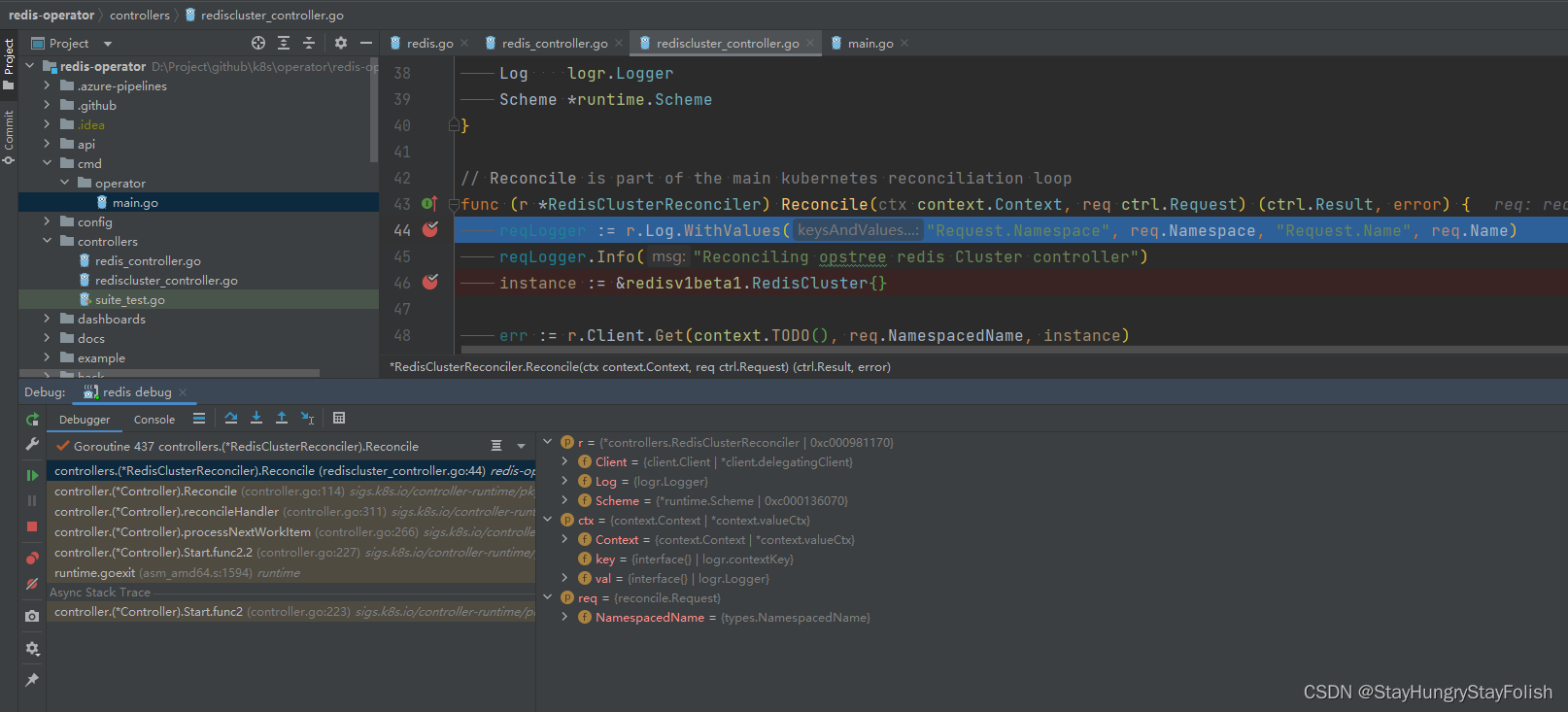

7. IDEA

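Note: set your breakpoints before connecting. Otherwise the program starts running as soon as IDEA connects, and it may already have passed the code you want to debug.

That's it. Happy debugging!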
