ChatGLM2-6B repository: https://github.com/THUDM/ChatGLM2-6B

Model weights: https://huggingface.co/THUDM/chatglm2-6b

The detailed steps are the same as in the earlier post: "ChatGLM-6B P-Tuning Fine-Tuning: Detailed Steps and Result Verification".

The environment set up for ChatGLM-6B (see the deployment tutorial mentioned above) can be reused as-is, namely:

Python 3.8.10

CUDA Version: 12.0

torch 2.0.1

transformers 4.27.1

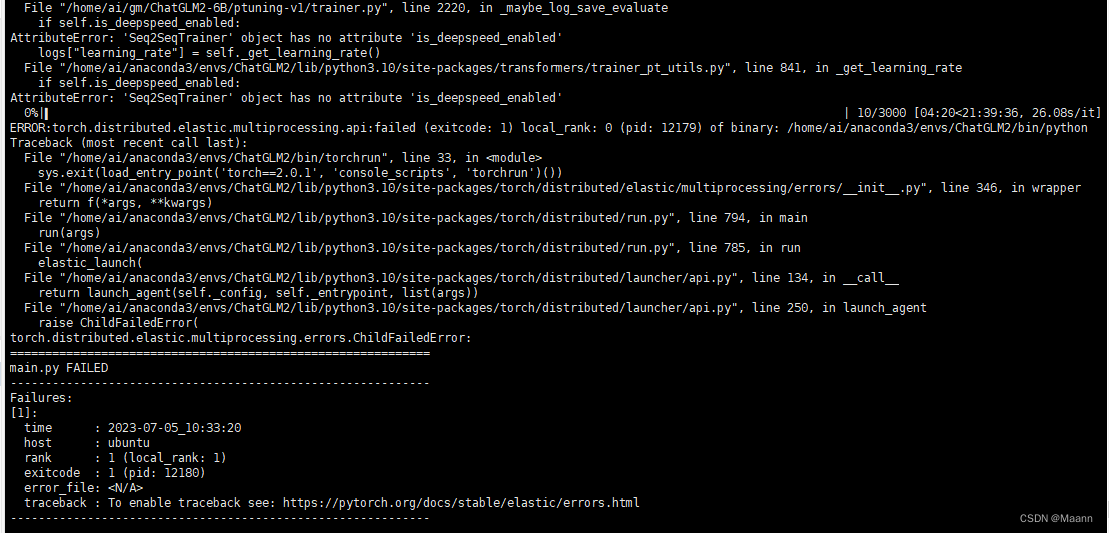
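Before launching a fine-tuning run, it can help to confirm that the active environment really matches the versions listed above. The following is a minimal sketch, not part of the ChatGLM2-6B repository: the `EXPECTED` table and the helpers `installed_version` and `find_mismatches` are hypothetical names introduced here, and only `torch` and `transformers` are checked.

```python
import sys
from importlib import metadata

# Versions listed above; treated here as the expected environment (assumption).
EXPECTED = {"torch": "2.0.1", "transformers": "4.27.1"}


def installed_version(package):
    """Return the installed version string of a package, or None if absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def find_mismatches(expected, lookup=installed_version):
    """Return {package: (expected, found)} for every package that differs."""
    mismatches = {}
    for package, wanted in expected.items():
        found = lookup(package)
        # Drop any local build suffix so "2.0.1+cu118" still matches "2.0.1".
        if found is None or found.split("+")[0] != wanted:
            mismatches[package] = (wanted, found)
    return mismatches


if __name__ == "__main__":
    print("Python", sys.version.split()[0])
    for package, (wanted, found) in find_mismatches(EXPECTED).items():
        print(f"{package}: expected {wanted}, found {found}")
```

If the script prints nothing after the Python version line, the two packages match the versions above. The CUDA version is not checked here; it is reported by `nvidia-smi`.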

Note: with the environment specified in the official ChatGLM2-6B repository, P-Tuning fine-tuning fails with the following errors:

AttributeError: 'Seq2SeqTrainer' object has no attribute 'is_deepspeed_enabl

torch.distributed.elastic.multiprocessing.errors.ChildFailedError: