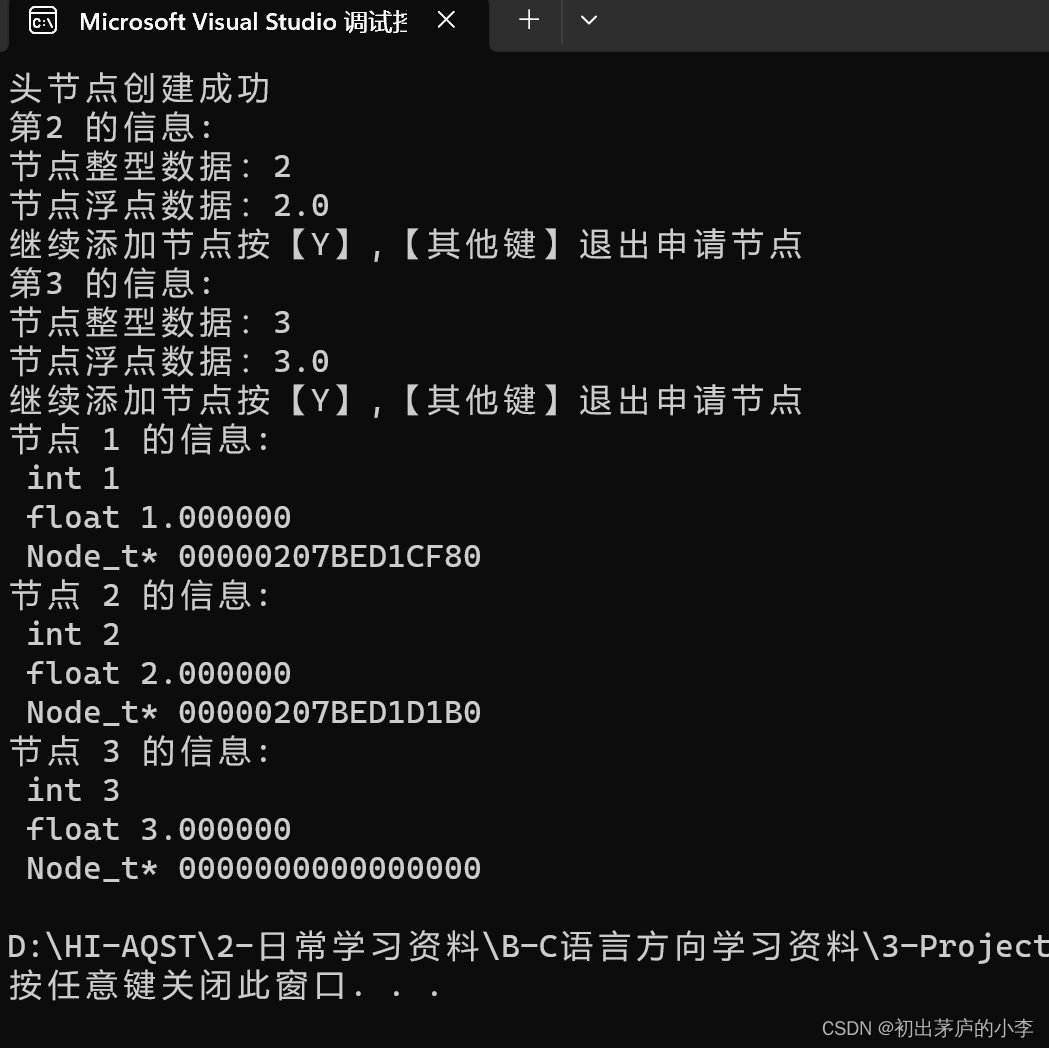

dict结构

dictEntry即键值对,每个桶就是dictEntry连接的链表

typedef struct dictEntry {

void *key;

union {

void *val; // 自定义类型

uint64_t u64;

int64_t s64;

double d;

} v;

struct dictEntry *next;

} dictEntry;

数据真正指向的地方

typedef struct dictht {

dictEntry **table;

unsigned long size;

// 计算hash key。sizemask = size-1

unsigned long sizemask;

unsigned long used;

} dictht;

dict结构

typedef struct dict {

dictType *type;

void *privdata;

dictht ht[2]; // two hash table for incremental rehashing

long rehashidx; // rehashing not in progress if rehashidx == -1

unsigned long iterators; // number of iterators currently running

} dict;

什么时候rehash

扩容条件

扩容发生于打开resizing的情况下超出了使用量/容量的阈值比率(通常是1),或者超出强制扩容比率(5)

// Source: https://github.com/redis/redis/blob/b8c67ce41b51247c02a639929e4fab20189afa1e/src/dict.c#L1030

static long _dictKeyIndex(dict *d, const void *key, uint64_t hash, dictEntry **existing)

{

unsigned long idx, table;

/* Expand the hash table if needed */

if (_dictExpandIfNeeded(d) == DICT_ERR)

return -1;

for (table = 0; table <= 1; table++) {

// a bunch of operations going on, we are not interested

}

return idx;

}

// Source: https://github.com/redis/redis/blob/b8c67ce41b51247c02a639929e4fab20189afa1e/src/dict.c#L54

/* Using dictEnableResize() / dictDisableResize() we make possible to

* enable/disable resizing of the hash table as needed. This is very important

* for Redis, as we use copy-on-write and don't want to move too much memory

* around when there is a child performing saving operations.

*

* Note that even when dict_can_resize is set to 0, not all resizes are

* prevented: a hash table is still allowed to grow if the ratio between

* the number of elements and the buckets > dict_force_resize_ratio. */

static int dict_can_resize = 1;

static unsigned int dict_force_resize_ratio = 5;

// Source: https://github.com/redis/redis/blob/b8c67ce41b51247c02a639929e4fab20189afa1e/src/dict.c#L987

/* Expand the hash table if needed */

static int _dictExpandIfNeeded(dict *d)

{

/* Incremental rehashing already in progress. Return. */

if (dictIsRehashing(d)) return DICT_OK;

/* If the hash table is empty expand it to the initial size. */

if (d->ht[0].size == 0) return dictExpand(d, DICT_HT_INITIAL_SIZE);

/* If we reached the 1:1 ratio, and we are allowed to resize the hash

* table (global setting) or we should avoid it but the ratio between

* elements/buckets is over the "safe" threshold, we resize doubling

* the number of buckets. */

if (d->ht[0].used >= d->ht[0].size &&

(dict_can_resize ||

d->ht[0].used/d->ht[0].size > dict_force_resize_ratio) &&

dictTypeExpandAllowed(d))

{

return dictExpand(d, d->ht[0].used + 1);

}

return DICT_OK;

}

有时,如果大量内存被分配超出了可分配内存限制可能会导致redis无法响应,并且可能会驱逐大量的key

// Source: https://github.com/redis/redis/blob/c4b52fc7c9e77fb1f2719d2cb5b9977b90698721/src/server.h#L164

#define HASHTABLE_MAX_LOAD_FACTOR 1.618 /* Maximum hash table load factor. */

// Source: https://github.com/redis/redis/blob/c4b52fc7c9e77fb1f2719d2cb5b9977b90698721/src/dict.c#L977

/* Because we may need to allocate huge memory chunk at once when dict

* expands, we will check this allocation is allowed or not if the dict

* type has expandAllowed member function. */

static int dictTypeExpandAllowed(dict *d) {

if (d->type->expandAllowed == NULL) return 1;

return d->type->expandAllowed(

_dictNextPower(d->ht[0].used + 1) * sizeof(dictEntry*),

(double)d->ht[0].used / d->ht[0].size);

}

// Source: https://github.com/redis/redis/blob/c4b52fc7c9e77fb1f2719d2cb5b9977b90698721/src/server.c#L1314

/* Return 1 if currently we allow dict to expand. Dict may allocate huge

* memory to contain hash buckets when dict expands, that may lead redis

* rejects user's requests or evicts some keys, we can stop dict to expand

* provisionally if used memory will be over maxmemory after dict expands,

* but to guarantee the performance of redis, we still allow dict to expand

* if dict load factor exceeds HASHTABLE_MAX_LOAD_FACTOR. */

// 若使用比率小于等于HASHTABLE_MAX_LOAD_FACTOR,就回检查释放超出可分配最大内存

int dictExpandAllowed(size_t moreMem, double usedRatio) {

if (usedRatio <= HASHTABLE_MAX_LOAD_FACTOR) {

return !overMaxmemoryAfterAlloc(moreMem);

} else {

return 1;

}

}

缩容条件

若hash table大于DICT_HT_INITIAL_SIZE = 4并且负载因子小于0.1,那么就会进行缩容

/* This is the initial size of every hash table */

#define DICT_HT_INITIAL_SIZE 4

// Source: https://github.com/redis/redis/blob/b8c67ce41b51247c02a639929e4fab20189afa1e/src/server.h#L162

/* Hash table parameters */

#define HASHTABLE_MIN_FILL 10 /* Minimal hash table fill 10% */

// Source: https://github.com/redis/redis/blob/b8c67ce41b51247c02a639929e4fab20189afa1e/src/dict.h#L147

#define dictSlots(d) ((d)->ht[0].size+(d)->ht[1].size)

#define dictSize(d) ((d)->ht[0].used+(d)->ht[1].used)

// Source: https://github.com/redis/redis/blob/b8c67ce41b51247c02a639929e4fab20189afa1e/src/server.c#L1498

int htNeedsResize(dict *dict) {

long long size, used;

size = dictSlots(dict);

used = dictSize(dict);

return (size > DICT_HT_INITIAL_SIZE &&

(used*100/size < HASHTABLE_MIN_FILL));

}

// Source: https://github.com/redis/redis/blob/c4b52fc7c9e77fb1f2719d2cb5b9977b90698721/src/dict.c#L133

/* Resize the table to the minimal size that contains all the elements,

* but with the invariant of a USED/BUCKETS ratio near to <= 1 */

int dictResize(dict *d)

{

unsigned long minimal;

if (!dict_can_resize || dictIsRehashing(d)) return DICT_ERR;

minimal = d->ht[0].used;

if (minimal < DICT_HT_INITIAL_SIZE)

minimal = DICT_HT_INITIAL_SIZE;

return dictExpand(d, minimal);

}

“虚假”的rehash

在满足rehash条件之后会进行rehash,但这个rehash并不是真正的搬迁过程

/* Expand or create the hash table,

* when malloc_failed is non-NULL, it'll avoid panic if malloc fails (in which case it'll be set to 1).

* Returns DICT_OK if expand was performed, and DICT_ERR if skipped. */

int _dictExpand(dict *d, unsigned long size, int* malloc_failed)

{

if (malloc_failed) *malloc_failed = 0;

/* the size is invalid if it is smaller than the number of

* elements already inside the hash table */

// 若使用元素数量大于容量,或者已经rehash,报错

if (dictIsRehashing(d) || d->ht[0].used > size)

return DICT_ERR;

dictht n; /* the new hash table */

// 按照原容量两倍扩展新容量

unsigned long realsize = _dictNextPower(size);

/* Rehashing to the same table size is not useful. */

// rehash的容量等于原容量是没有意义的,直接返回

if (realsize == d->ht[0].size) return DICT_ERR;

/* Allocate the new hash table and initialize all pointers to NULL */

// 初始化新hash table

n.size = realsize;

n.sizemask = realsize-1;

if (malloc_failed) {

n.table = ztrycalloc(realsize*sizeof(dictEntry*));

*malloc_failed = n.table == NULL;

if (*malloc_failed)

return DICT_ERR;

} else

n.table = zcalloc(realsize*sizeof(dictEntry*));

// 初始化新hash table使用量为0

n.used = 0;

/* Is this the first initialization? If so it's not really a rehashing

* we just set the first hash table so that it can accept keys. */

// 若dict本来是空的,那么直接将初始化的新hash table赋予给dict

if (d->ht[0].table == NULL) {

d->ht[0] = n;

return DICT_OK;

}

/* Prepare a second hash table for incremental rehashing */

// 赋予新hash table给dict的第二个hash table(也就是rehash hash table),并标记开始rehash

d->ht[1] = n;

d->rehashidx = 0;

return DICT_OK;

}

/* return DICT_ERR if expand was not performed */

int dictExpand(dict *d, unsigned long size) {

return _dictExpand(d, size, NULL);

}

真正rehash过程

在每次对dict进行添加、删除、查找或者更新操作时会执行真正的rehash

/* Performs N steps of incremental rehashing. Returns 1 if there are still

* keys to move from the old to the new hash table, otherwise 0 is returned.

*

* Note that a rehashing step consists in moving a bucket (that may have more

* than one key as we use chaining) from the old to the new hash table, however

* since part of the hash table may be composed of empty spaces, it is not

* guaranteed that this function will rehash even a single bucket, since it

* will visit at max N*10 empty buckets in total, otherwise the amount of

* work it does would be unbound and the function may block for a long time. */

// n代表至多有n个桶被rehash

int dictRehash(dict *d, int n) {

int empty_visits = n*10; /* Max number of empty buckets to visit. */

// 若未处于rehash过程中,返回

if (!dictIsRehashing(d)) return 0;

// 遍历dict直到使用量为0或者达到本次最大遍历桶数量

while(n-- && d->ht[0].used != 0) {

dictEntry *de, *nextde;

/* Note that rehashidx can't overflow as we are sure there are more

* elements because ht[0].used != 0 */

assert(d->ht[0].size > (unsigned long)d->rehashidx);

// 跳过空桶,若超过可允许访问的空桶数量也返回

while(d->ht[0].table[d->rehashidx] == NULL) {

d->rehashidx++;

if (--empty_visits == 0) return 1;

}

// de指向当前rehash节点

de = d->ht[0].table[d->rehashidx];

/* Move all the keys in this bucket from the old to the new hash HT */

while(de) {

uint64_t h;

// 记录当前桶节点下一个

nextde = de->next;

/* Get the index in the new hash table */

// 获取搬迁新桶索引

h = dictHashKey(d, de->key) & d->ht[1].sizemask;

// 当前桶节点指向新桶头节点

de->next = d->ht[1].table[h];

// 搬迁当前桶节点到新桶

d->ht[1].table[h] = de;

// 原桶使用量-1,新桶+1

d->ht[0].used--;

d->ht[1].used++;

// 更新遍历的桶节点

de = nextde;

}

//清空当前搬迁桶,并更新搬迁索引

d->ht[0].table[d->rehashidx] = NULL;

d->rehashidx++;

}

/* Check if we already rehashed the whole table... */

// 若全部搬迁完毕,则清空原hash table,并将新hash table置为当前table,清空搬迁状态

if (d->ht[0].used == 0) {

zfree(d->ht[0].table);

d->ht[0] = d->ht[1];

_dictReset(&d->ht[1]);

d->rehashidx = -1;

return 0;

}

/* More to rehash... */

return 1;

}

此外redis在空闲的时候也会充分利用起来进行rehash

/* Our hash table implementation performs rehashing incrementally while

* we write/read from the hash table. Still if the server is idle, the hash

* table will use two tables for a long time. So we try to use 1 millisecond

* of CPU time at every call of this function to perform some rehashing.

*

* The function returns 1 if some rehashing was performed, otherwise 0

* is returned. */

// 当其他内存繁重的进程(比如将当前数据库状态保存到磁盘)无法运行时,所有 redis 数据库的后台 cron 作业将一个接一个地调用 IncrementallyRehash () ,并且在配置中打开 activerhash 标志。

int incrementallyRehash(int dbid) {

/* Keys dictionary */

if (dictIsRehashing(server.db[dbid].dict)) {

dictRehashMilliseconds(server.db[dbid].dict,1);

return 1; /* already used our millisecond for this loop... */

}

/* Expires */

if (dictIsRehashing(server.db[dbid].expires)) {

dictRehashMilliseconds(server.db[dbid].expires,1);

return 1; /* already used our millisecond for this loop... */

}

return 0;

}

// Source: https://github.com/redis/redis/blob/b8c67ce41b51247c02a639929e4fab20189afa1e/src/dict.c#L263

/* Rehash in ms+"delta" milliseconds. The value of "delta" is larger

* than 0, and is smaller than 1 in most cases. The exact upper bound

* depends on the running time of dictRehash(d,100).*/

int dictRehashMilliseconds(dict *d, int ms) {

if (d->iterators > 0) return 0;

long long start = timeInMilliseconds();

int rehashes = 0;

// 在ms时间内运行rehash

while(dictRehash(d,100)) {

rehashes += 100;

if (timeInMilliseconds()-start > ms) break;

}

return rehashes;

}

总结

redis rehash利用了和golang类似的方式,将真正的rehash操作放置到了dict增删改除中,有效地分摊了rehash性能消耗。

不同之处在于

-

更进一步作为一个数据库应用产品利用了闲暇时间去进行rehash。

-

为有效利用宝贵内存空间,添加了缩容,还添加可以在较高的使用率下的内存回收

-

扩容前预估是否超出内存限制

-

出于对响应时间的敏感性,redis还设置了最大空桶访问次数

-

redis没有考虑到桶overflow太多的情况

Ref

- https://codeburst.io/a-closer-look-at-redis-dictionary-implementation-internals-3fd815aae535