Contents

Delay Control

Timer Operations

Low-Resolution Timers

High-Resolution Timers

Exercises

Delay Control

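Delays come up constantly when operating hardware. Typical cases include:

- how long a chip must be held in reset;
- how long to wait after reset before the chip may be accessed;
- the sequencing of a chip's power-up steps.

For these purposes the kernel provides a set of delay functions.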

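During boot, the kernel calculates the value of a global variable, loops_per_jiffy. This value records how many iterations a busy-wait loop must run to delay for one jiffy (jiffies are explained later). The following message is printed while the FS4412 target board performs this calculation at boot: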

[    0.045000] Calibrating delay loop... 1992.29 BogoMIPS (lpj=4980736)

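From loops_per_jiffy the kernel can work out how many loop iterations are needed to delay one microsecond or one millisecond. On that basis it defines a set of macros and functions that delay by looping: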

void ndelay(unsigned long x);

udelay(n)

mdelay(n)

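ndelay, udelay and mdelay provide nanosecond-, microsecond- and millisecond-level delays respectively. On the ARM architecture, both ndelay and mdelay are built on udelay. A nanosecond delay is essentially udelay's loop count divided by 1000, so if a one-microsecond udelay takes fewer than 1000 loop iterations, nanosecond delays cannot be accurate.

All of these are busy-wait delays: they obtain the delay by burning CPU time. Avoid them for long delays unless there is a specific reason, such as being in interrupt context or holding a spinlock, where sleeping is not allowed.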

If the delay is long and there is no special constraint, use a sleeping delay instead.

void msleep(unsigned int msecs);

long msleep_interruptible(unsigned int msecs);

void ssleep(unsigned int seconds);

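The functions and their parameters are self-explanatory. msleep_interruptible can be woken early by a signal, in which case it returns the remaining time in milliseconds. By contrast, msleep and ssleep cannot be interrupted by signals; they return only after the full sleep time has elapsed.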

Timer Operations

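Sometimes an operation must be carried out automatically when a set time expires; this is what a timer does. Timers come in two kinds:

- One-shot: the operation is executed only once when the time expires.
- Periodic: the operation is executed when the time expires, the timer is then restarted, and the operation runs again at the next expiry, and so on.

As Linux evolved, two kinds of timer appeared: the classic low-resolution timer and the high-resolution timer.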

Low-Resolution Timers

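Before discussing low-resolution timers, let us look at two global kernel variables, jiffies and jiffies_64.

The classic timer is based on a hardware timer that raises an interrupt periodically. The interrupt rate is configurable; on the FS4412 target board it is 200 per second. The kernel source represents this configured value with the macro HZ, so the hardware timer generates HZ interrupts every second.

The number of interrupts generated since boot is recorded in the global variable jiffies, which usually starts from an offset initial value rather than from 0. On a 32-bit system jiffies is 32 bits wide, so it overflows after a foreseeable time: at HZ = 200, 2^32 ticks last about 248.5 days. The kernel therefore also defines a 64-bit global variable, jiffies_64, which will not overflow on any current or foreseeable computer system.

With the linker's help, jiffies and jiffies_64 share the same 4 bytes (jiffies is the low 32 bits of jiffies_64), which makes the two convenient to work with. The kernel provides a set of functions and macros that operate on jiffies.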

u64 get_jiffies_64(void);

time_after(a,b)

time_before(a,b)

time_after_eq(a,b)

time_before_eq(a,b)

time_in_range(a,b,c)

time_after64(a,b)

time_before64(a,b)

time_after_eq64(a,b)

time_before_eq64(a,b)

time_in_range64(a,b, c)

unsigned int jiffies_to_msecs(const unsigned long j);

unsigned int jiffies_to_usecs(const unsigned long j);

u64 jiffies_to_nsecs(const unsigned long j);

unsigned long msecs_to_jiffies(const unsigned int m);

unsigned long usecs_to_jiffies(const unsigned int u);

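get_jiffies_64: returns the value of jiffies_64, reading it safely even on 32-bit systems where a 64-bit read is not atomic.

time_after: returns true if time a is after time b. The other macros follow the same pattern:

- the "64" suffix compares 64-bit values;
- the "eq" suffix also returns true when the two values are equal;
- time_in_range(a, b, c) returns true if a lies between b and c, inclusive.

jiffies_to_msecs: converts j to the corresponding number of milliseconds; the other conversion functions follow the same pattern.

time_after and time_before can also be used to build long delays. However, more convenient functions were already introduced above, so this is not covered further here.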

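With jiffies covered, we can look at the kernel's low-resolution timers. The timer object type is defined in the following header (include/linux/timer.h).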

#ifndef _LINUX_TIMER_H

#define _LINUX_TIMER_H

#include <linux/list.h>

#include <linux/ktime.h>

#include <linux/stddef.h>

#include <linux/debugobjects.h>

#include <linux/stringify.h>

struct tvec_base;

struct timer_list {

/*

* All fields that change during normal runtime grouped to the

* same cacheline

*/

struct list_head entry;

unsigned long expires;

struct tvec_base *base;

void (*function)(unsigned long);

unsigned long data;

int slack;

#ifdef CONFIG_TIMER_STATS

int start_pid;

void *start_site;

char start_comm[16];

#endif

#ifdef CONFIG_LOCKDEP

struct lockdep_map lockdep_map;

#endif

};

extern struct tvec_base boot_tvec_bases;

#ifdef CONFIG_LOCKDEP

/*

* NB: because we have to copy the lockdep_map, setting the lockdep_map key

* (second argument) here is required, otherwise it could be initialised to

* the copy of the lockdep_map later! We use the pointer to and the string

* "<file>:<line>" as the key resp. the name of the lockdep_map.

*/

#define __TIMER_LOCKDEP_MAP_INITIALIZER(_kn) \

.lockdep_map = STATIC_LOCKDEP_MAP_INIT(_kn, &_kn),

#else

#define __TIMER_LOCKDEP_MAP_INITIALIZER(_kn)

#endif

/*

* Note that all tvec_bases are at least 4 byte aligned and lower two bits

* of base in timer_list is guaranteed to be zero. Use them for flags.

*

* A deferrable timer will work normally when the system is busy, but

* will not cause a CPU to come out of idle just to service it; instead,

* the timer will be serviced when the CPU eventually wakes up with a

* subsequent non-deferrable timer.

*

* An irqsafe timer is executed with IRQ disabled and it's safe to wait for

* the completion of the running instance from IRQ handlers, for example,

* by calling del_timer_sync().

*

* Note: The irq disabled callback execution is a special case for

* workqueue locking issues. It's not meant for executing random crap

* with interrupts disabled. Abuse is monitored!

*/

#define TIMER_DEFERRABLE 0x1LU

#define TIMER_IRQSAFE 0x2LU

#define TIMER_FLAG_MASK 0x3LU

#define __TIMER_INITIALIZER(_function, _expires, _data, _flags) { \

.entry = { .prev = TIMER_ENTRY_STATIC }, \

.function = (_function), \

.expires = (_expires), \

.data = (_data), \

.base = (void *)((unsigned long)&boot_tvec_bases + (_flags)), \

.slack = -1, \

__TIMER_LOCKDEP_MAP_INITIALIZER( \

__FILE__ ":" __stringify(__LINE__)) \

}

#define TIMER_INITIALIZER(_function, _expires, _data) \

__TIMER_INITIALIZER((_function), (_expires), (_data), 0)

#define TIMER_DEFERRED_INITIALIZER(_function, _expires, _data) \

__TIMER_INITIALIZER((_function), (_expires), (_data), TIMER_DEFERRABLE)

#define DEFINE_TIMER(_name, _function, _expires, _data) \

struct timer_list _name = \

TIMER_INITIALIZER(_function, _expires, _data)

void init_timer_key(struct timer_list *timer, unsigned int flags,

const char *name, struct lock_class_key *key);

#ifdef CONFIG_DEBUG_OBJECTS_TIMERS

extern void init_timer_on_stack_key(struct timer_list *timer,

unsigned int flags, const char *name,

struct lock_class_key *key);

extern void destroy_timer_on_stack(struct timer_list *timer);

#else

static inline void destroy_timer_on_stack(struct timer_list *timer) { }

static inline void init_timer_on_stack_key(struct timer_list *timer,

unsigned int flags, const char *name,

struct lock_class_key *key)

{

init_timer_key(timer, flags, name, key);

}

#endif

#ifdef CONFIG_LOCKDEP

#define __init_timer(_timer, _flags) \

do { \

static struct lock_class_key __key; \

init_timer_key((_timer), (_flags), #_timer, &__key); \

} while (0)

#define __init_timer_on_stack(_timer, _flags) \

do { \

static struct lock_class_key __key; \

init_timer_on_stack_key((_timer), (_flags), #_timer, &__key); \

} while (0)

#else

#define __init_timer(_timer, _flags) \

init_timer_key((_timer), (_flags), NULL, NULL)

#define __init_timer_on_stack(_timer, _flags) \

init_timer_on_stack_key((_timer), (_flags), NULL, NULL)

#endif

#define init_timer(timer) \

__init_timer((timer), 0)

#define init_timer_deferrable(timer) \

__init_timer((timer), TIMER_DEFERRABLE)

#define init_timer_on_stack(timer) \

__init_timer_on_stack((timer), 0)

#define __setup_timer(_timer, _fn, _data, _flags) \

do { \

__init_timer((_timer), (_flags)); \

(_timer)->function = (_fn); \

(_timer)->data = (_data); \

} while (0)

#define __setup_timer_on_stack(_timer, _fn, _data, _flags) \

do { \

__init_timer_on_stack((_timer), (_flags)); \

(_timer)->function = (_fn); \

(_timer)->data = (_data); \

} while (0)

#define setup_timer(timer, fn, data) \

__setup_timer((timer), (fn), (data), 0)

#define setup_timer_on_stack(timer, fn, data) \

__setup_timer_on_stack((timer), (fn), (data), 0)

#define setup_deferrable_timer_on_stack(timer, fn, data) \

__setup_timer_on_stack((timer), (fn), (data), TIMER_DEFERRABLE)

/**

* timer_pending - is a timer pending?

* @timer: the timer in question

*

* timer_pending will tell whether a given timer is currently pending,

* or not. Callers must ensure serialization wrt. other operations done

* to this timer, eg. interrupt contexts, or other CPUs on SMP.

*

* return value: 1 if the timer is pending, 0 if not.

*/

static inline int timer_pending(const struct timer_list * timer)

{

return timer->entry.next != NULL;

}

extern void add_timer_on(struct timer_list *timer, int cpu);

extern int del_timer(struct timer_list * timer);

extern int mod_timer(struct timer_list *timer, unsigned long expires);

extern int mod_timer_pending(struct timer_list *timer, unsigned long expires);

extern int mod_timer_pinned(struct timer_list *timer, unsigned long expires);

extern void set_timer_slack(struct timer_list *time, int slack_hz);

#define TIMER_NOT_PINNED 0

#define TIMER_PINNED 1

/*

* The jiffies value which is added to now, when there is no timer

* in the timer wheel:

*/

#define NEXT_TIMER_MAX_DELTA ((1UL << 30) - 1)

/*

* Return when the next timer-wheel timeout occurs (in absolute jiffies),

* locks the timer base and does the comparison against the given

* jiffie.

*/

extern unsigned long get_next_timer_interrupt(unsigned long now);

/*

* Timer-statistics info:

*/

#ifdef CONFIG_TIMER_STATS

extern int timer_stats_active;

#define TIMER_STATS_FLAG_DEFERRABLE 0x1

extern void init_timer_stats(void);

extern void timer_stats_update_stats(void *timer, pid_t pid, void *startf,

void *timerf, char *comm,

unsigned int timer_flag);

extern void __timer_stats_timer_set_start_info(struct timer_list *timer,

void *addr);

static inline void timer_stats_timer_set_start_info(struct timer_list *timer)

{

if (likely(!timer_stats_active))

return;

__timer_stats_timer_set_start_info(timer, __builtin_return_address(0));

}

static inline void timer_stats_timer_clear_start_info(struct timer_list *timer)

{

timer->start_site = NULL;

}

#else

static inline void init_timer_stats(void)

{

}

static inline void timer_stats_timer_set_start_info(struct timer_list *timer)

{

}

static inline void timer_stats_timer_clear_start_info(struct timer_list *timer)

{

}

#endif

extern void add_timer(struct timer_list *timer);

extern int try_to_del_timer_sync(struct timer_list *timer);

#ifdef CONFIG_SMP

extern int del_timer_sync(struct timer_list *timer);

#else

# define del_timer_sync(t) del_timer(t)

#endif

#define del_singleshot_timer_sync(t) del_timer_sync(t)

extern void init_timers(void);

extern void run_local_timers(void);

struct hrtimer;

extern enum hrtimer_restart it_real_fn(struct hrtimer *);

unsigned long __round_jiffies(unsigned long j, int cpu);

unsigned long __round_jiffies_relative(unsigned long j, int cpu);

unsigned long round_jiffies(unsigned long j);

unsigned long round_jiffies_relative(unsigned long j);

unsigned long __round_jiffies_up(unsigned long j, int cpu);

unsigned long __round_jiffies_up_relative(unsigned long j, int cpu);

unsigned long round_jiffies_up(unsigned long j);

unsigned long round_jiffies_up_relative(unsigned long j);

#endif

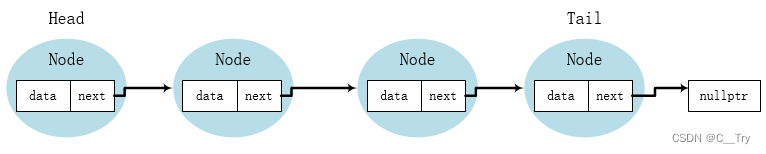

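entry: a doubly linked list node, used to link the timer into a list.

expires: the jiffies value at which the timer expires.

function: the function executed when the timer expires.

data: the argument passed to the timer function, usually a pointer cast to unsigned long.

The functions for operating on low-resolution timers are: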

init_timer(timer)

void add_timer(struct timer_list *timer);

int mod_timer(struct timer_list *timer, unsigned long expires) ;

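int del_timer(struct timer_list * timer);

init_timer: initializes a timer object.

add_timer: adds the timer to the kernel's timer list.

mod_timer: sets the timer's expires member and activates the timer, whether or not it is currently pending. It returns 0 if the timer was inactive and 1 if it was pending.

del_timer: removes the timer from the kernel's list, whatever its current state. It returns 0 if the timer was inactive and 1 if it was pending. It does not wait for a callback that is already running on another CPU; del_timer_sync does.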

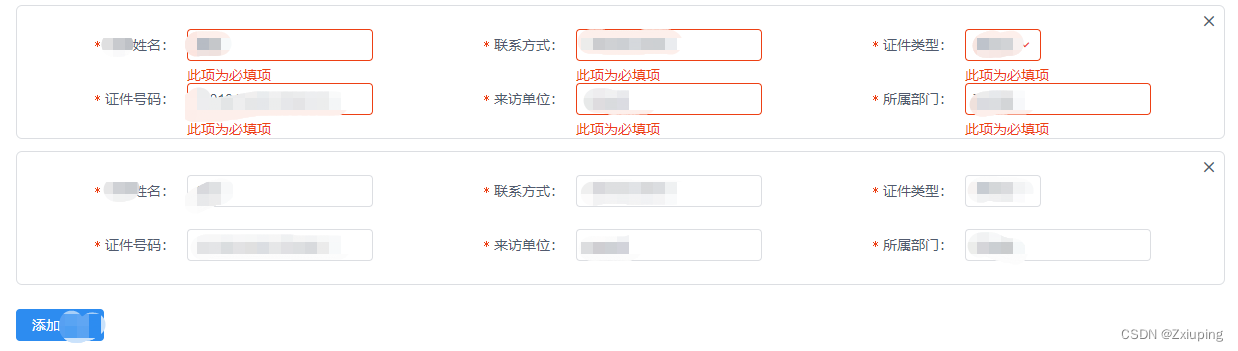

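Implementing a timer in a driver takes the following steps.

(1) Construct a timer object, call init_timer to initialize it, and assign its expires, function and data members.

(2) Use add_timer to add the timer object to the kernel's timer list.

(3) When the time expires, the timer function is called automatically. For a periodic timer, call mod_timer inside the timer function to update expires.

(4) When the timer is no longer needed, delete it with del_timer.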

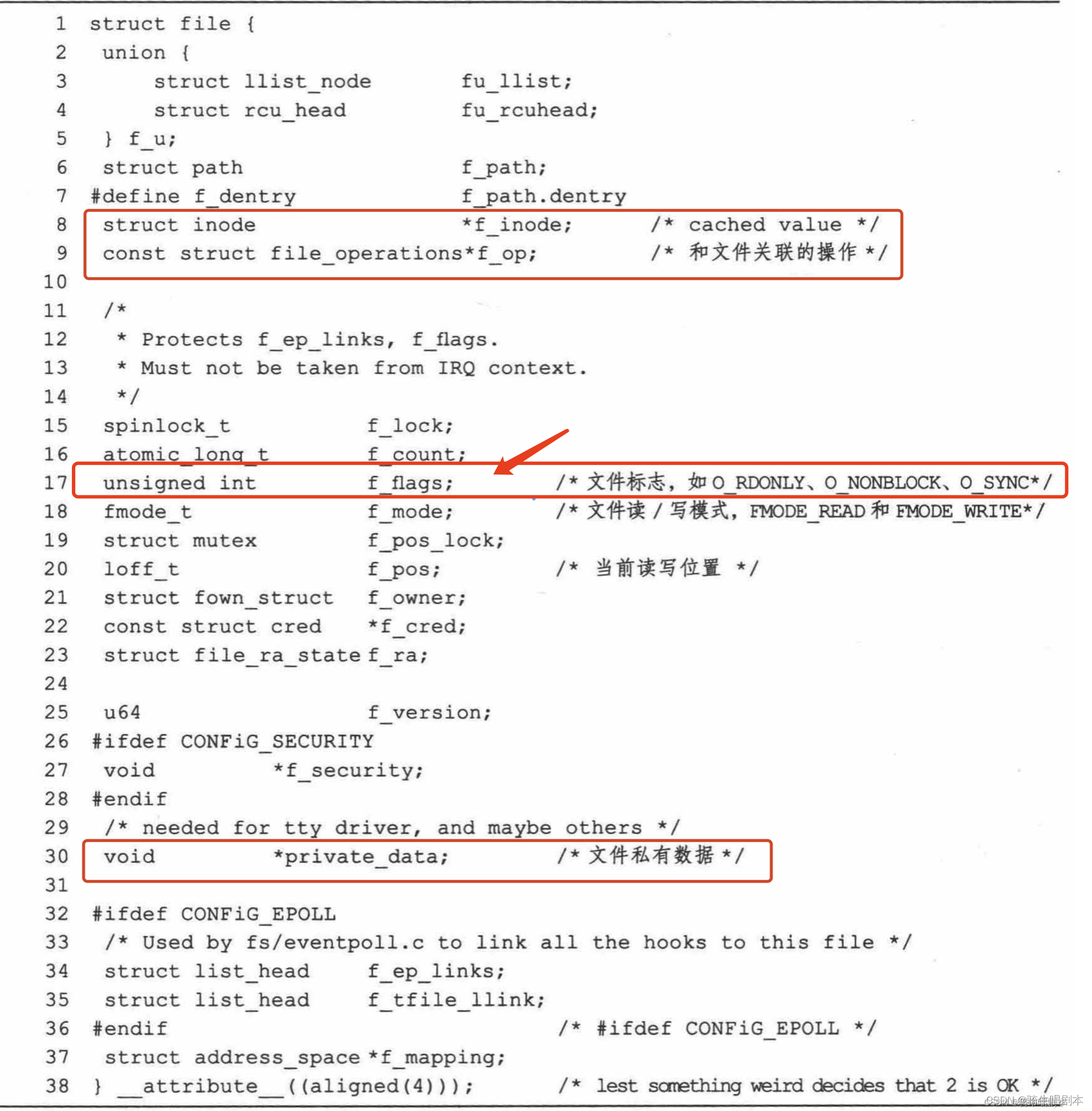

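The kernel processes these timers in the softirq bottom half of the timer interrupt. It walks the timers in its lists, and any timer whose expires value has been reached (the current jiffies is equal to or later than expires) has its function executed. The timer function therefore runs in interrupt (softirq) context and must not sleep. To manage timers efficiently, the kernel groups them by expiry time, so it only needs to walk the timers that are about to expire. The following is the virtual serial port driver with a timer added.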

#include <linux/init.h>

#include <linux/kernel.h>

#include <linux/module.h>

#include <linux/fs.h>

#include <linux/cdev.h>

#include <linux/kfifo.h>

#include <linux/ioctl.h>

#include <linux/uaccess.h>

#include <linux/wait.h>

#include <linux/sched.h>

#include <linux/poll.h>

#include <linux/aio.h>

#include <linux/random.h>

#include <linux/timer.h>

#include "vser.h"

#define VSER_MAJOR 256

#define VSER_MINOR 0

#define VSER_DEV_CNT 1

#define VSER_DEV_NAME "vser"

struct vser_dev {

unsigned int baud;

struct option opt;

struct cdev cdev;

wait_queue_head_t rwqh;

wait_queue_head_t wwqh;

struct fasync_struct *fapp;

struct timer_list timer;

};

DEFINE_KFIFO(vsfifo, char, 32);

static struct vser_dev vsdev;

static int vser_fasync(int fd, struct file *filp, int on);

static int vser_open(struct inode *inode, struct file *filp)

{

return 0;

}

static int vser_release(struct inode *inode, struct file *filp)

{

vser_fasync(-1, filp, 0);

return 0;

}

static ssize_t vser_read(struct file *filp, char __user *buf, size_t count, loff_t *pos)

{

int ret;

unsigned int copied = 0;

if (kfifo_is_empty(&vsfifo)) {

if (filp->f_flags & O_NONBLOCK)

return -EAGAIN;

if (wait_event_interruptible_exclusive(vsdev.rwqh, !kfifo_is_empty(&vsfifo)))

return -ERESTARTSYS;

}

ret = kfifo_to_user(&vsfifo, buf, count, &copied);

if (!kfifo_is_full(&vsfifo)) {

wake_up_interruptible(&vsdev.wwqh);

kill_fasync(&vsdev.fapp, SIGIO, POLL_OUT);

}

return ret == 0 ? copied : ret;

}

static ssize_t vser_write(struct file *filp, const char __user *buf, size_t count, loff_t *pos)

{

int ret;

unsigned int copied = 0;

if (kfifo_is_full(&vsfifo)) {

if (filp->f_flags & O_NONBLOCK)

return -EAGAIN;

if (wait_event_interruptible_exclusive(vsdev.wwqh, !kfifo_is_full(&vsfifo)))

return -ERESTARTSYS;

}

ret = kfifo_from_user(&vsfifo, buf, count, &copied);

if (!kfifo_is_empty(&vsfifo)) {

wake_up_interruptible(&vsdev.rwqh);

kill_fasync(&vsdev.fapp, SIGIO, POLL_IN);

}

return ret == 0 ? copied : ret;

}

static long vser_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)

{

if (_IOC_TYPE(cmd) != VS_MAGIC)

return -ENOTTY;

switch (cmd) {

case VS_SET_BAUD:

vsdev.baud = arg;

break;

case VS_GET_BAUD:

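/* copy the value back through the user pointer (assumes the caller passes an unsigned int *) */

if (put_user(vsdev.baud, (unsigned int __user *)arg))

return -EFAULT;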

break;

case VS_SET_FFMT:

if (copy_from_user(&vsdev.opt, (struct option __user *)arg, sizeof(struct option)))

return -EFAULT;

break;

case VS_GET_FFMT:

if (copy_to_user((struct option __user *)arg, &vsdev.opt, sizeof(struct option)))

return -EFAULT;

break;

default:

return -ENOTTY;

}

return 0;

}

static unsigned int vser_poll(struct file *filp, struct poll_table_struct *p)

{

int mask = 0;

poll_wait(filp, &vsdev.rwqh, p);

poll_wait(filp, &vsdev.wwqh, p);

if (!kfifo_is_empty(&vsfifo))

mask |= POLLIN | POLLRDNORM;

if (!kfifo_is_full(&vsfifo))

mask |= POLLOUT | POLLWRNORM;

return mask;

}

static ssize_t vser_aio_read(struct kiocb *iocb, const struct iovec *iov, unsigned long nr_segs, loff_t pos)

{

size_t read = 0;

unsigned long i;

ssize_t ret;

for (i = 0; i < nr_segs; i++) {

ret = vser_read(iocb->ki_filp, iov[i].iov_base, iov[i].iov_len, &pos);

if (ret < 0)

break;

read += ret;

}

return read ? read : -EFAULT;

}

static ssize_t vser_aio_write(struct kiocb *iocb, const struct iovec *iov, unsigned long nr_segs, loff_t pos)

{

size_t written = 0;

unsigned long i;

ssize_t ret;

for (i = 0; i < nr_segs; i++) {

ret = vser_write(iocb->ki_filp, iov[i].iov_base, iov[i].iov_len, &pos);

if (ret < 0)

break;

written += ret;

}

return written ? written : -EFAULT;

}

static int vser_fasync(int fd, struct file *filp, int on)

{

return fasync_helper(fd, filp, on, &vsdev.fapp);

}

static void vser_timer(unsigned long arg)

{

char data;

unsigned char rnd;

struct vser_dev *dev = (struct vser_dev *)arg;

/* an unsigned byte keeps rnd % 26 in 0..25 even where char is signed */

get_random_bytes(&rnd, sizeof(rnd));

data = 'A' + rnd % 26;

if (!kfifo_is_full(&vsfifo))

if(!kfifo_in(&vsfifo, &data, sizeof(data)))

printk(KERN_ERR "vser: kfifo_in failure\n");

if (!kfifo_is_empty(&vsfifo)) {

wake_up_interruptible(&vsdev.rwqh);

kill_fasync(&vsdev.fapp, SIGIO, POLL_IN);

}

mod_timer(&dev->timer, jiffies + msecs_to_jiffies(1000));

}

static struct file_operations vser_ops = {

.owner = THIS_MODULE,

.open = vser_open,

.release = vser_release,

.read = vser_read,

.write = vser_write,

.unlocked_ioctl = vser_ioctl,

.poll = vser_poll,

.aio_read = vser_aio_read,

.aio_write = vser_aio_write,

.fasync = vser_fasync,

};

static int __init vser_init(void)

{

int ret;

dev_t dev;

dev = MKDEV(VSER_MAJOR, VSER_MINOR);

ret = register_chrdev_region(dev, VSER_DEV_CNT, VSER_DEV_NAME);

if (ret)

goto reg_err;

cdev_init(&vsdev.cdev, &vser_ops);

vsdev.cdev.owner = THIS_MODULE;

vsdev.baud = 115200;

vsdev.opt.datab = 8;

vsdev.opt.parity = 0;

vsdev.opt.stopb = 1;

/* initialize the wait queues before cdev_add() makes the device visible */

init_waitqueue_head(&vsdev.rwqh);

init_waitqueue_head(&vsdev.wwqh);

ret = cdev_add(&vsdev.cdev, dev, VSER_DEV_CNT);

if (ret)

goto add_err;

init_timer(&vsdev.timer);

vsdev.timer.expires = jiffies + msecs_to_jiffies(1000);

vsdev.timer.function = vser_timer;

vsdev.timer.data = (unsigned long)&vsdev;

add_timer(&vsdev.timer);

return 0;

add_err:

unregister_chrdev_region(dev, VSER_DEV_CNT);

reg_err:

return ret;

}

static void __exit vser_exit(void)

{

dev_t dev;

dev = MKDEV(VSER_MAJOR, VSER_MINOR);

/* wait for a running vser_timer() to finish; it re-arms itself */

del_timer_sync(&vsdev.timer);

cdev_del(&vsdev.cdev);

unregister_chrdev_region(dev, VSER_DEV_CNT);

}

module_init(vser_init);

module_exit(vser_exit);

MODULE_LICENSE("GPL");

MODULE_AUTHOR("name <E-mail>");

MODULE_DESCRIPTION("A simple character device driver");

MODULE_ALIAS("virtual-serial");

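The code adds a low-resolution timer member, timer, to struct vser_dev.

- vser_init initializes the timer, sets its expires, function and data members, and adds it to the kernel.
- vser_exit deletes the timer when the module is unloaded.
- vser_timer is the timer function. Its final mod_timer call moves the expiry one second forward, which turns the timer into a periodic one.

As a result, the driver pushes one random uppercase letter into the FIFO every second. The build and test commands are the same as for the interrupt example.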

High-Resolution Timers

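Because low-resolution timers count in jiffies, their precision is limited by the system's HZ, which is usually not high. With HZ = 200, one jiffy is 5 ms, so the timer precision is 5 ms. For devices such as sound cards that need precise timing, this is not good enough, so kernel developers added high-resolution timers.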

High-resolution timers express time with ktime_t, which is defined as follows (include/linux/ktime.h).

/*

* include/linux/ktime.h

*

* ktime_t - nanosecond-resolution time format.

*

* Copyright(C) 2005, Thomas Gleixner <tglx@linutronix.de>

* Copyright(C) 2005, Red Hat, Inc., Ingo Molnar

*

* data type definitions, declarations, prototypes and macros.

*

* Started by: Thomas Gleixner and Ingo Molnar

*

* Credits:

*

* Roman Zippel provided the ideas and primary code snippets of

* the ktime_t union and further simplifications of the original

* code.

*

* For licencing details see kernel-base/COPYING

*/

#ifndef _LINUX_KTIME_H

#define _LINUX_KTIME_H

#include <linux/time.h>

#include <linux/jiffies.h>

/*

* ktime_t:

*

* On 64-bit CPUs a single 64-bit variable is used to store the hrtimers

* internal representation of time values in scalar nanoseconds. The

* design plays out best on 64-bit CPUs, where most conversions are

* NOPs and most arithmetic ktime_t operations are plain arithmetic

* operations.

*

* On 32-bit CPUs an optimized representation of the timespec structure

* is used to avoid expensive conversions from and to timespecs. The

* endian-aware order of the tv struct members is chosen to allow

* mathematical operations on the tv64 member of the union too, which

* for certain operations produces better code.

*

* For architectures with efficient support for 64/32-bit conversions the

* plain scalar nanosecond based representation can be selected by the

* config switch CONFIG_KTIME_SCALAR.

*/

union ktime {

s64 tv64;

#if BITS_PER_LONG != 64 && !defined(CONFIG_KTIME_SCALAR)

struct {

# ifdef __BIG_ENDIAN

s32 sec, nsec;

# else

s32 nsec, sec;

# endif

} tv;

#endif

};

typedef union ktime ktime_t; /* Kill this */

/*

* ktime_t definitions when using the 64-bit scalar representation:

*/

#if (BITS_PER_LONG == 64) || defined(CONFIG_KTIME_SCALAR)

/**

* ktime_set - Set a ktime_t variable from a seconds/nanoseconds value

* @secs: seconds to set

* @nsecs: nanoseconds to set

*

* Return: The ktime_t representation of the value.

*/

static inline ktime_t ktime_set(const long secs, const unsigned long nsecs)

{

#if (BITS_PER_LONG == 64)

if (unlikely(secs >= KTIME_SEC_MAX))

return (ktime_t){ .tv64 = KTIME_MAX };

#endif

return (ktime_t) { .tv64 = (s64)secs * NSEC_PER_SEC + (s64)nsecs };

}

/* Subtract two ktime_t variables. rem = lhs -rhs: */

#define ktime_sub(lhs, rhs) \

({ (ktime_t){ .tv64 = (lhs).tv64 - (rhs).tv64 }; })

/* Add two ktime_t variables. res = lhs + rhs: */

#define ktime_add(lhs, rhs) \

({ (ktime_t){ .tv64 = (lhs).tv64 + (rhs).tv64 }; })

/*

* Add a ktime_t variable and a scalar nanosecond value.

* res = kt + nsval:

*/

#define ktime_add_ns(kt, nsval) \

({ (ktime_t){ .tv64 = (kt).tv64 + (nsval) }; })

/*

* Subtract a scalar nanosecod from a ktime_t variable

* res = kt - nsval:

*/

#define ktime_sub_ns(kt, nsval) \

({ (ktime_t){ .tv64 = (kt).tv64 - (nsval) }; })

/* convert a timespec to ktime_t format: */

static inline ktime_t timespec_to_ktime(struct timespec ts)

{

return ktime_set(ts.tv_sec, ts.tv_nsec);

}

/* convert a timeval to ktime_t format: */

static inline ktime_t timeval_to_ktime(struct timeval tv)

{

return ktime_set(tv.tv_sec, tv.tv_usec * NSEC_PER_USEC);

}

/* Map the ktime_t to timespec conversion to ns_to_timespec function */

#define ktime_to_timespec(kt) ns_to_timespec((kt).tv64)

/* Map the ktime_t to timeval conversion to ns_to_timeval function */

#define ktime_to_timeval(kt) ns_to_timeval((kt).tv64)

/* Convert ktime_t to nanoseconds - NOP in the scalar storage format: */

#define ktime_to_ns(kt) ((kt).tv64)

#else /* !((BITS_PER_LONG == 64) || defined(CONFIG_KTIME_SCALAR)) */

/*

* Helper macros/inlines to get the ktime_t math right in the timespec

* representation. The macros are sometimes ugly - their actual use is

* pretty okay-ish, given the circumstances. We do all this for

* performance reasons. The pure scalar nsec_t based code was nice and

* simple, but created too many 64-bit / 32-bit conversions and divisions.

*

* Be especially aware that negative values are represented in a way

* that the tv.sec field is negative and the tv.nsec field is greater

* or equal to zero but less than nanoseconds per second. This is the

* same representation which is used by timespecs.

*

* tv.sec < 0 and 0 >= tv.nsec < NSEC_PER_SEC

*/

/* Set a ktime_t variable to a value in sec/nsec representation: */

static inline ktime_t ktime_set(const long secs, const unsigned long nsecs)

{

return (ktime_t) { .tv = { .sec = secs, .nsec = nsecs } };

}

/**

* ktime_sub - subtract two ktime_t variables

* @lhs: minuend

* @rhs: subtrahend

*

* Return: The remainder of the subtraction.

*/

static inline ktime_t ktime_sub(const ktime_t lhs, const ktime_t rhs)

{

ktime_t res;

res.tv64 = lhs.tv64 - rhs.tv64;

if (res.tv.nsec < 0)

res.tv.nsec += NSEC_PER_SEC;

return res;

}

/**

* ktime_add - add two ktime_t variables

* @add1: addend1

* @add2: addend2

*

* Return: The sum of @add1 and @add2.

*/

static inline ktime_t ktime_add(const ktime_t add1, const ktime_t add2)

{

ktime_t res;

res.tv64 = add1.tv64 + add2.tv64;

/*

* performance trick: the (u32) -NSEC gives 0x00000000Fxxxxxxx

* so we subtract NSEC_PER_SEC and add 1 to the upper 32 bit.

*

* it's equivalent to:

* tv.nsec -= NSEC_PER_SEC

* tv.sec ++;

*/

if (res.tv.nsec >= NSEC_PER_SEC)

res.tv64 += (u32)-NSEC_PER_SEC;

return res;

}

/**

* ktime_add_ns - Add a scalar nanoseconds value to a ktime_t variable

* @kt: addend

* @nsec: the scalar nsec value to add

*

* Return: The sum of @kt and @nsec in ktime_t format.

*/

extern ktime_t ktime_add_ns(const ktime_t kt, u64 nsec);

/**

* ktime_sub_ns - Subtract a scalar nanoseconds value from a ktime_t variable

* @kt: minuend

* @nsec: the scalar nsec value to subtract

*

* Return: The subtraction of @nsec from @kt in ktime_t format.

*/

extern ktime_t ktime_sub_ns(const ktime_t kt, u64 nsec);

/**

* timespec_to_ktime - convert a timespec to ktime_t format

* @ts: the timespec variable to convert

*

* Return: A ktime_t variable with the converted timespec value.

*/

static inline ktime_t timespec_to_ktime(const struct timespec ts)

{

return (ktime_t) { .tv = { .sec = (s32)ts.tv_sec,

.nsec = (s32)ts.tv_nsec } };

}

/**

* timeval_to_ktime - convert a timeval to ktime_t format

* @tv: the timeval variable to convert

*

* Return: A ktime_t variable with the converted timeval value.

*/

static inline ktime_t timeval_to_ktime(const struct timeval tv)

{

return (ktime_t) { .tv = { .sec = (s32)tv.tv_sec,

.nsec = (s32)(tv.tv_usec *

NSEC_PER_USEC) } };

}

/**

* ktime_to_timespec - convert a ktime_t variable to timespec format

* @kt: the ktime_t variable to convert

*

* Return: The timespec representation of the ktime value.

*/

static inline struct timespec ktime_to_timespec(const ktime_t kt)

{

return (struct timespec) { .tv_sec = (time_t) kt.tv.sec,

.tv_nsec = (long) kt.tv.nsec };

}

/**

* ktime_to_timeval - convert a ktime_t variable to timeval format

* @kt: the ktime_t variable to convert

*

* Return: The timeval representation of the ktime value.

*/

static inline struct timeval ktime_to_timeval(const ktime_t kt)

{

return (struct timeval) {

.tv_sec = (time_t) kt.tv.sec,

.tv_usec = (suseconds_t) (kt.tv.nsec / NSEC_PER_USEC) };

}

/**

* ktime_to_ns - convert a ktime_t variable to scalar nanoseconds

* @kt: the ktime_t variable to convert

*

* Return: The scalar nanoseconds representation of @kt.

*/

static inline s64 ktime_to_ns(const ktime_t kt)

{

return (s64) kt.tv.sec * NSEC_PER_SEC + kt.tv.nsec;

}

#endif /* !((BITS_PER_LONG == 64) || defined(CONFIG_KTIME_SCALAR)) */

/**

* ktime_equal - Compares two ktime_t variables to see if they are equal

* @cmp1: comparable1

* @cmp2: comparable2

*

* Compare two ktime_t variables.

*

* Return: 1 if equal.

*/

static inline int ktime_equal(const ktime_t cmp1, const ktime_t cmp2)

{

return cmp1.tv64 == cmp2.tv64;

}

/**

* ktime_compare - Compares two ktime_t variables for less, greater or equal

* @cmp1: comparable1

* @cmp2: comparable2

*

* Return: ...

* cmp1 < cmp2: return <0

* cmp1 == cmp2: return 0

* cmp1 > cmp2: return >0

*/

static inline int ktime_compare(const ktime_t cmp1, const ktime_t cmp2)

{

if (cmp1.tv64 < cmp2.tv64)

return -1;

if (cmp1.tv64 > cmp2.tv64)

return 1;

return 0;

}

static inline s64 ktime_to_us(const ktime_t kt)

{

struct timeval tv = ktime_to_timeval(kt);

return (s64) tv.tv_sec * USEC_PER_SEC + tv.tv_usec;

}

static inline s64 ktime_to_ms(const ktime_t kt)

{

struct timeval tv = ktime_to_timeval(kt);

return (s64) tv.tv_sec * MSEC_PER_SEC + tv.tv_usec / USEC_PER_MSEC;

}

static inline s64 ktime_us_delta(const ktime_t later, const ktime_t earlier)

{

return ktime_to_us(ktime_sub(later, earlier));

}

static inline ktime_t ktime_add_us(const ktime_t kt, const u64 usec)

{

return ktime_add_ns(kt, usec * NSEC_PER_USEC);

}

static inline ktime_t ktime_add_ms(const ktime_t kt, const u64 msec)

{

return ktime_add_ns(kt, msec * NSEC_PER_MSEC);

}

static inline ktime_t ktime_sub_us(const ktime_t kt, const u64 usec)

{

return ktime_sub_ns(kt, usec * NSEC_PER_USEC);

}

extern ktime_t ktime_add_safe(const ktime_t lhs, const ktime_t rhs);

/**

* ktime_to_timespec_cond - convert a ktime_t variable to timespec

* format only if the variable contains data

* @kt: the ktime_t variable to convert

* @ts: the timespec variable to store the result in

*

* Return: %true if there was a successful conversion, %false if kt was 0.

*/

static inline __must_check bool ktime_to_timespec_cond(const ktime_t kt,

struct timespec *ts)

{

if (kt.tv64) {

*ts = ktime_to_timespec(kt);

return true;

} else {

return false;

}

}

/*

* The resolution of the clocks. The resolution value is returned in

* the clock_getres() system call to give application programmers an

* idea of the (in)accuracy of timers. Timer values are rounded up to

* this resolution values.

*/

#define LOW_RES_NSEC TICK_NSEC

#define KTIME_LOW_RES (ktime_t){ .tv64 = LOW_RES_NSEC }

/* Get the monotonic time in timespec format: */

extern void ktime_get_ts(struct timespec *ts);

/* Get the real (wall-) time in timespec format: */

#define ktime_get_real_ts(ts) getnstimeofday(ts)

static inline ktime_t ns_to_ktime(u64 ns)

{

static const ktime_t ktime_zero = { .tv64 = 0 };

return ktime_add_ns(ktime_zero, ns);

}

static inline ktime_t ms_to_ktime(u64 ms)

{

static const ktime_t ktime_zero = { .tv64 = 0 };

return ktime_add_ms(ktime_zero, ms);

}

#endif

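ktime is a union. On a 64-bit system the time is held simply in tv64. On a 32-bit system (unless CONFIG_KTIME_SCALAR is selected), it is held as sec and nsec, the seconds and nanoseconds parts. This shows that high-resolution timers can reach nanosecond resolution.

A ktime_t is usually initialized with ktime_set, typically like this: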

ktime_t t = ktime_set(secs,nsecs);

The high-resolution timer structure is defined as follows (include/linux/hrtimer.h).

/*

* include/linux/hrtimer.h

*

* hrtimers - High-resolution kernel timers

*

* Copyright(C) 2005, Thomas Gleixner <tglx@linutronix.de>

* Copyright(C) 2005, Red Hat, Inc., Ingo Molnar

*

* data type definitions, declarations, prototypes

*

* Started by: Thomas Gleixner and Ingo Molnar

*

* For licencing details see kernel-base/COPYING

*/

#ifndef _LINUX_HRTIMER_H

#define _LINUX_HRTIMER_H

#include <linux/rbtree.h>

#include <linux/ktime.h>

#include <linux/init.h>

#include <linux/list.h>

#include <linux/wait.h>

#include <linux/percpu.h>

#include <linux/timer.h>

#include <linux/timerqueue.h>

struct hrtimer_clock_base;

struct hrtimer_cpu_base;

/*

* Mode arguments of xxx_hrtimer functions:

*/

enum hrtimer_mode {

HRTIMER_MODE_ABS = 0x0, /* Time value is absolute */

HRTIMER_MODE_REL = 0x1, /* Time value is relative to now */

HRTIMER_MODE_PINNED = 0x02, /* Timer is bound to CPU */

HRTIMER_MODE_ABS_PINNED = 0x02,

HRTIMER_MODE_REL_PINNED = 0x03,

};

/*

* Return values for the callback function

*/

enum hrtimer_restart {

HRTIMER_NORESTART, /* Timer is not restarted */

HRTIMER_RESTART, /* Timer must be restarted */

};

/*

* Values to track state of the timer

*

* Possible states:

*

* 0x00 inactive

* 0x01 enqueued into rbtree

* 0x02 callback function running

* 0x04 timer is migrated to another cpu

*

* Special cases:

* 0x03 callback function running and enqueued

* (was requeued on another CPU)

* 0x05 timer was migrated on CPU hotunplug

*

* The "callback function running and enqueued" status is only possible on

* SMP. It happens for example when a posix timer expired and the callback

* queued a signal. Between dropping the lock which protects the posix timer

* and reacquiring the base lock of the hrtimer, another CPU can deliver the

* signal and rearm the timer. We have to preserve the callback running state,

* as otherwise the timer could be removed before the softirq code finishes the

* the handling of the timer.

*

* The HRTIMER_STATE_ENQUEUED bit is always or'ed to the current state

* to preserve the HRTIMER_STATE_CALLBACK in the above scenario. This

* also affects HRTIMER_STATE_MIGRATE where the preservation is not

* necessary. HRTIMER_STATE_MIGRATE is cleared after the timer is

* enqueued on the new cpu.

*

* All state transitions are protected by cpu_base->lock.

*/

#define HRTIMER_STATE_INACTIVE 0x00

#define HRTIMER_STATE_ENQUEUED 0x01

#define HRTIMER_STATE_CALLBACK 0x02

#define HRTIMER_STATE_MIGRATE 0x04

/**

* struct hrtimer - the basic hrtimer structure

* @node: timerqueue node, which also manages node.expires,

* the absolute expiry time in the hrtimers internal

* representation. The time is related to the clock on

* which the timer is based. Is setup by adding

* slack to the _softexpires value. For non range timers

* identical to _softexpires.

* @_softexpires: the absolute earliest expiry time of the hrtimer.

* The time which was given as expiry time when the timer

* was armed.

* @function: timer expiry callback function

* @base: pointer to the timer base (per cpu and per clock)

* @state: state information (See bit values above)

* @start_site: timer statistics field to store the site where the timer

* was started

* @start_comm: timer statistics field to store the name of the process which

* started the timer

* @start_pid: timer statistics field to store the pid of the task which

* started the timer

*

* The hrtimer structure must be initialized by hrtimer_init()

*/

struct hrtimer {

struct timerqueue_node node;

ktime_t _softexpires;

enum hrtimer_restart (*function)(struct hrtimer *);

struct hrtimer_clock_base *base;

unsigned long state;

#ifdef CONFIG_TIMER_STATS

int start_pid;

void *start_site;

char start_comm[16];

#endif

};

/**

* struct hrtimer_sleeper - simple sleeper structure

* @timer: embedded timer structure

* @task: task to wake up

*

* task is set to NULL, when the timer expires.

*/

struct hrtimer_sleeper {

struct hrtimer timer;

struct task_struct *task;

};

/**

* struct hrtimer_clock_base - the timer base for a specific clock

* @cpu_base: per cpu clock base

* @index: clock type index for per_cpu support when moving a

* timer to a base on another cpu.

* @clockid: clock id for per_cpu support

* @active: red black tree root node for the active timers

* @resolution: the resolution of the clock, in nanoseconds

* @get_time: function to retrieve the current time of the clock

* @softirq_time: the time when running the hrtimer queue in the softirq

* @offset: offset of this clock to the monotonic base

*/

struct hrtimer_clock_base {

struct hrtimer_cpu_base *cpu_base;

int index;

clockid_t clockid;

struct timerqueue_head active;

ktime_t resolution;

ktime_t (*get_time)(void);

ktime_t softirq_time;

ktime_t offset;

};

enum hrtimer_base_type {

HRTIMER_BASE_MONOTONIC,

HRTIMER_BASE_REALTIME,

HRTIMER_BASE_BOOTTIME,

HRTIMER_BASE_TAI,

HRTIMER_MAX_CLOCK_BASES,

};

/*

* struct hrtimer_cpu_base - the per cpu clock bases

* @lock: lock protecting the base and associated clock bases

* and timers

* @active_bases: Bitfield to mark bases with active timers

* @clock_was_set: Indicates that clock was set from irq context.

* @expires_next: absolute time of the next event which was scheduled

* via clock_set_next_event()

* @hres_active: State of high resolution mode

* @hang_detected: The last hrtimer interrupt detected a hang

* @nr_events: Total number of hrtimer interrupt events

* @nr_retries: Total number of hrtimer interrupt retries

* @nr_hangs: Total number of hrtimer interrupt hangs

* @max_hang_time: Maximum time spent in hrtimer_interrupt

* @clock_base: array of clock bases for this cpu

*/

struct hrtimer_cpu_base {

raw_spinlock_t lock;

unsigned int active_bases;

unsigned int clock_was_set;

#ifdef CONFIG_HIGH_RES_TIMERS

ktime_t expires_next;

int hres_active;

int hang_detected;

unsigned long nr_events;

unsigned long nr_retries;

unsigned long nr_hangs;

ktime_t max_hang_time;

#endif

struct hrtimer_clock_base clock_base[HRTIMER_MAX_CLOCK_BASES];

};

static inline void hrtimer_set_expires(struct hrtimer *timer, ktime_t time)

{

timer->node.expires = time;

timer->_softexpires = time;

}

static inline void hrtimer_set_expires_range(struct hrtimer *timer, ktime_t time, ktime_t delta)

{

timer->_softexpires = time;

timer->node.expires = ktime_add_safe(time, delta);

}

static inline void hrtimer_set_expires_range_ns(struct hrtimer *timer, ktime_t time, unsigned long delta)

{

timer->_softexpires = time;

timer->node.expires = ktime_add_safe(time, ns_to_ktime(delta));

}

static inline void hrtimer_set_expires_tv64(struct hrtimer *timer, s64 tv64)

{

timer->node.expires.tv64 = tv64;

timer->_softexpires.tv64 = tv64;

}

static inline void hrtimer_add_expires(struct hrtimer *timer, ktime_t time)

{

timer->node.expires = ktime_add_safe(timer->node.expires, time);

timer->_softexpires = ktime_add_safe(timer->_softexpires, time);

}

static inline void hrtimer_add_expires_ns(struct hrtimer *timer, u64 ns)

{

timer->node.expires = ktime_add_ns(timer->node.expires, ns);

timer->_softexpires = ktime_add_ns(timer->_softexpires, ns);

}

static inline ktime_t hrtimer_get_expires(const struct hrtimer *timer)

{

return timer->node.expires;

}

static inline ktime_t hrtimer_get_softexpires(const struct hrtimer *timer)

{

return timer->_softexpires;

}

static inline s64 hrtimer_get_expires_tv64(const struct hrtimer *timer)

{

return timer->node.expires.tv64;

}

static inline s64 hrtimer_get_softexpires_tv64(const struct hrtimer *timer)

{

return timer->_softexpires.tv64;

}

static inline s64 hrtimer_get_expires_ns(const struct hrtimer *timer)

{

return ktime_to_ns(timer->node.expires);

}

static inline ktime_t hrtimer_expires_remaining(const struct hrtimer *timer)

{

return ktime_sub(timer->node.expires, timer->base->get_time());

}

#ifdef CONFIG_HIGH_RES_TIMERS

struct clock_event_device;

extern void hrtimer_interrupt(struct clock_event_device *dev);

/*

* In high resolution mode the time reference must be read accurate

*/

static inline ktime_t hrtimer_cb_get_time(struct hrtimer *timer)

{

return timer->base->get_time();

}

static inline int hrtimer_is_hres_active(struct hrtimer *timer)

{

return timer->base->cpu_base->hres_active;

}

extern void hrtimer_peek_ahead_timers(void);

/*

* The resolution of the clocks. The resolution value is returned in

* the clock_getres() system call to give application programmers an

* idea of the (in)accuracy of timers. Timer values are rounded up to

* this resolution values.

*/

# define HIGH_RES_NSEC 1

# define KTIME_HIGH_RES (ktime_t) { .tv64 = HIGH_RES_NSEC }

# define MONOTONIC_RES_NSEC HIGH_RES_NSEC

# define KTIME_MONOTONIC_RES KTIME_HIGH_RES

extern void clock_was_set_delayed(void);

#else

# define MONOTONIC_RES_NSEC LOW_RES_NSEC

# define KTIME_MONOTONIC_RES KTIME_LOW_RES

static inline void hrtimer_peek_ahead_timers(void) { }

/*

* In non high resolution mode the time reference is taken from

* the base softirq time variable.

*/

static inline ktime_t hrtimer_cb_get_time(struct hrtimer *timer)

{

return timer->base->softirq_time;

}

static inline int hrtimer_is_hres_active(struct hrtimer *timer)

{

return 0;

}

static inline void clock_was_set_delayed(void) { }

#endif

extern void clock_was_set(void);

#ifdef CONFIG_TIMERFD

extern void timerfd_clock_was_set(void);

#else

static inline void timerfd_clock_was_set(void) { }

#endif

extern void hrtimers_resume(void);

extern ktime_t ktime_get(void);

extern ktime_t ktime_get_real(void);

extern ktime_t ktime_get_boottime(void);

extern ktime_t ktime_get_monotonic_offset(void);

extern ktime_t ktime_get_clocktai(void);

extern ktime_t ktime_get_update_offsets(ktime_t *offs_real, ktime_t *offs_boot,

ktime_t *offs_tai);

DECLARE_PER_CPU(struct tick_device, tick_cpu_device);

/* Exported timer functions: */

/* Initialize timers: */

extern void hrtimer_init(struct hrtimer *timer, clockid_t which_clock,

enum hrtimer_mode mode);

#ifdef CONFIG_DEBUG_OBJECTS_TIMERS

extern void hrtimer_init_on_stack(struct hrtimer *timer, clockid_t which_clock,

enum hrtimer_mode mode);

extern void destroy_hrtimer_on_stack(struct hrtimer *timer);

#else

static inline void hrtimer_init_on_stack(struct hrtimer *timer,

clockid_t which_clock,

enum hrtimer_mode mode)

{

hrtimer_init(timer, which_clock, mode);

}

static inline void destroy_hrtimer_on_stack(struct hrtimer *timer) { }

#endif

/* Basic timer operations: */

extern int hrtimer_start(struct hrtimer *timer, ktime_t tim,

const enum hrtimer_mode mode);

extern int hrtimer_start_range_ns(struct hrtimer *timer, ktime_t tim,

unsigned long range_ns, const enum hrtimer_mode mode);

extern int

__hrtimer_start_range_ns(struct hrtimer *timer, ktime_t tim,

unsigned long delta_ns,

const enum hrtimer_mode mode, int wakeup);

extern int hrtimer_cancel(struct hrtimer *timer);

extern int hrtimer_try_to_cancel(struct hrtimer *timer);

static inline int hrtimer_start_expires(struct hrtimer *timer,

enum hrtimer_mode mode)

{

unsigned long delta;

ktime_t soft, hard;

soft = hrtimer_get_softexpires(timer);

hard = hrtimer_get_expires(timer);

delta = ktime_to_ns(ktime_sub(hard, soft));

return hrtimer_start_range_ns(timer, soft, delta, mode);

}

static inline int hrtimer_restart(struct hrtimer *timer)

{

return hrtimer_start_expires(timer, HRTIMER_MODE_ABS);

}

/* Query timers: */

extern ktime_t hrtimer_get_remaining(const struct hrtimer *timer);

extern int hrtimer_get_res(const clockid_t which_clock, struct timespec *tp);

extern ktime_t hrtimer_get_next_event(void);

/*

* A timer is active, when it is enqueued into the rbtree or the

* callback function is running or it's in the state of being migrated

* to another cpu.

*/

static inline int hrtimer_active(const struct hrtimer *timer)

{

return timer->state != HRTIMER_STATE_INACTIVE;

}

/*

* Helper function to check, whether the timer is on one of the queues

*/

static inline int hrtimer_is_queued(struct hrtimer *timer)

{

return timer->state & HRTIMER_STATE_ENQUEUED;

}

/*

* Helper function to check, whether the timer is running the callback

* function

*/

static inline int hrtimer_callback_running(struct hrtimer *timer)

{

return timer->state & HRTIMER_STATE_CALLBACK;

}

/* Forward a hrtimer so it expires after now: */

extern u64

hrtimer_forward(struct hrtimer *timer, ktime_t now, ktime_t interval);

/* Forward a hrtimer so it expires after the hrtimer's current now */

static inline u64 hrtimer_forward_now(struct hrtimer *timer,

ktime_t interval)

{

return hrtimer_forward(timer, timer->base->get_time(), interval);

}

/* Precise sleep: */

extern long hrtimer_nanosleep(struct timespec *rqtp,

struct timespec __user *rmtp,

const enum hrtimer_mode mode,

const clockid_t clockid);

extern long hrtimer_nanosleep_restart(struct restart_block *restart_block);

extern void hrtimer_init_sleeper(struct hrtimer_sleeper *sl,

struct task_struct *tsk);

extern int schedule_hrtimeout_range(ktime_t *expires, unsigned long delta,

const enum hrtimer_mode mode);

extern int schedule_hrtimeout_range_clock(ktime_t *expires,

unsigned long delta, const enum hrtimer_mode mode, int clock);

extern int schedule_hrtimeout(ktime_t *expires, const enum hrtimer_mode mode);

/* Soft interrupt function to run the hrtimer queues: */

extern void hrtimer_run_queues(void);

extern void hrtimer_run_pending(void);

/* Bootup initialization: */

extern void __init hrtimers_init(void);

#if BITS_PER_LONG < 64

extern u64 ktime_divns(const ktime_t kt, s64 div);

#else /* BITS_PER_LONG < 64 */

# define ktime_divns(kt, div) (u64)((kt).tv64 / (div))

#endif

/* Show pending timers: */

extern void sysrq_timer_list_show(void);

#endif

Among these members, the one that matters most to a driver is function, which points to the function executed when the timer expires.
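Besides function, struct hrtimer carries two expiry times. _softexpires is the earliest moment the timer may fire. node.expires is the latest, and hrtimer_set_expires_range() sets it to _softexpires plus a slack delta. The userspace sketch below models only that bookkeeping. struct hrtimer_model and the *_model functions are hypothetical names. The saturating add clamps at INT64_MAX as a simplification, whereas the kernel's ktime_add_safe() clamps to its own maximum timeout.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical model of the two expiry fields in struct hrtimer;
 * times are signed nanosecond counts. */
struct hrtimer_model {
    int64_t softexpires;   /* earliest time the timer may fire */
    int64_t expires;       /* latest time: softexpires + slack */
};

/* Saturating add standing in for ktime_add_safe(); clamps at INT64_MAX. */
static int64_t add_safe_model(int64_t a, int64_t b)
{
    assert(a >= 0 && b >= 0);
    return (b > INT64_MAX - a) ? INT64_MAX : a + b;
}

/* Like hrtimer_set_expires_range(): soft = time, hard = time + delta. */
static void set_expires_range_model(struct hrtimer_model *t, int64_t time, int64_t delta)
{
    t->softexpires = time;
    t->expires = add_safe_model(time, delta);
}

/* Like the first half of hrtimer_start_expires(): the slack it passes on
 * to hrtimer_start_range_ns() is hard - soft. */
static int64_t start_expires_delta_model(const struct hrtimer_model *t)
{
    return t->expires - t->softexpires;
}
```

With no slack, as set by hrtimer_set_expires(), both fields are equal. In that case hrtimer_start_expires() passes a range of 0 to hrtimer_start_range_ns().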

The most commonly used high-resolution timer functions are listed below.

void hrtimer_init(struct hrtimer *timer, clockid_t clock_id, enum hrtimer_mode mode);

int hrtimer_start(struct hrtimer *timer, ktime_t tim, const enum hrtimer_mode mode);

static inline u64 hrtimer_forward_now(struct hrtimer *timer, ktime_t interval);

int hrtimer_cancel(struct hrtimer *timer);

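hrtimer_init: initializes a struct hrtimer object. clock_id selects the clock type. There are many clock types; the commonly used CLOCK_MONOTONIC is a monotonically increasing time counted since system boot. mode selects how times are interpreted: HRTIMER_MODE_ABS means absolute time, and HRTIMER_MODE_REL means relative time (an interval counted from the moment the timer is started).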
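Userspace has no hrtimer_init(), but POSIX clock_nanosleep() exposes the same two choices. You pick a clock id (CLOCK_MONOTONIC below), and you pick relative or absolute time (flag 0 versus TIMER_ABSTIME). The sketch below is only an analogy and assumes a POSIX system such as Linux with glibc. The helper names are hypothetical.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <errno.h>
#include <stdint.h>
#include <time.h>

#define NS_PER_SEC 1000000000LL

/* Current CLOCK_MONOTONIC time in nanoseconds. */
static int64_t mono_now_ns(void)
{
    struct timespec ts;

    clock_gettime(CLOCK_MONOTONIC, &ts);
    return (int64_t)ts.tv_sec * NS_PER_SEC + ts.tv_nsec;
}

static struct timespec to_timespec(int64_t ns)
{
    struct timespec ts;

    assert(ns >= 0);
    ts.tv_sec = (time_t)(ns / NS_PER_SEC);
    ts.tv_nsec = (long)(ns % NS_PER_SEC);
    return ts;
}

/* Relative time: an interval counted from now (compare HRTIMER_MODE_REL). */
static int sleep_relative(int64_t ns)
{
    struct timespec req = to_timespec(ns);

    return clock_nanosleep(CLOCK_MONOTONIC, 0, &req, NULL);
}

/* Absolute time: a point on the CLOCK_MONOTONIC time line
 * (compare HRTIMER_MODE_ABS). Retrying after EINTR needs no adjustment. */
static int sleep_until(int64_t deadline_ns)
{
    struct timespec req = to_timespec(deadline_ns);
    int ret;

    do {
        ret = clock_nanosleep(CLOCK_MONOTONIC, TIMER_ABSTIME, &req, NULL);
    } while (ret == EINTR);
    return ret;                /* 0 on success, else an error number */
}
```

Absolute mode is what keeps periodic loops from drifting, and it lets sleep_until() simply retry after a signal. A relative sleep would have to recompute the remaining time before retrying.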

hrtimer_start: starts the timer. tim is the expiry time, and mode has the same meaning as the mode parameter of hrtimer_init.
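In this kernel version tim is a ktime_t, and the driver builds it with ktime_set(secs, nsecs). The userspace sketch below models only the arithmetic: ktime_set(1, 1000) denotes 1000001000 ns. The *_model names and NSEC_PER_SEC_MODEL are hypothetical, and the sketch leaves out the kernel's clamping of very large second counts.

```c
#include <assert.h>
#include <stdint.h>

#define NSEC_PER_SEC_MODEL 1000000000LL

/* Hypothetical model of the value ktime_set(secs, nsecs) stands for, in ns. */
static int64_t ktime_set_model(long secs, unsigned long nsecs)
{
    return (int64_t)secs * NSEC_PER_SEC_MODEL + (int64_t)nsecs;
}

/* Split a non-negative nanosecond count back into seconds and nanoseconds. */
static void ktime_split_model(int64_t ns, int64_t *secs, long *nsecs)
{
    assert(ns >= 0);
    *secs = ns / NSEC_PER_SEC_MODEL;
    *nsecs = (long)(ns % NSEC_PER_SEC_MODEL);
}
```

Read back, ktime_set(1, 1000) is 1 s plus 1000 ns (1 µs), so a timer started with it expires just over one second later.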

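hrtimer_forward_now: moves the expiry time forward so that the timer next expires after the current time. The expiry is advanced in whole multiples of interval, starting from the previous expiry rather than from the current time. The return value is the number of intervals added. A callback that calls this function and then returns HRTIMER_RESTART therefore gets a periodic timer whose period does not drift.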
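The forwarding rule can be checked with a small userspace model. In exact integer arithmetic it computes the same result as hrtimer_forward(): the smallest n >= 1 such that expires + n * interval > now. If the timer has not expired yet, nothing changes and 0 is returned. This is a hypothetical sketch. forward_model is an invented name, and all times are plain nanosecond counts. It also omits two kernel checks: the clamp of interval to the clock resolution, and the check that the timer is not enqueued.

```c
#include <assert.h>
#include <stdint.h>

/* Userspace model of what hrtimer_forward() computes; times in ns.
 * Returns the number of intervals added (the overrun count) and moves
 * *expires so that it lies strictly after now. */
static uint64_t forward_model(int64_t *expires, int64_t now, int64_t interval)
{
    int64_t delta = now - *expires;
    int64_t orun;

    assert(interval > 0);
    if (delta < 0)                  /* not expired yet: leave it alone */
        return 0;

    /* smallest n >= 1 with expires + n * interval > now */
    orun = delta / interval + 1;
    *expires += orun * interval;
    return (uint64_t)orun;
}
```

Take expires = 1000 and interval = 100. With now = 1050, the model returns 1 and sets expires to 1100 (the old expiry plus one interval), not 1150 (now plus one interval). With now = 1350, it returns 4 and sets expires to 1400.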

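hrtimer_cancel: cancels the timer. If the callback is currently running, it waits for the callback to finish. It returns 0 if the timer was not active and 1 if it was.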
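The value returned by function decides whether a timer is single-shot or periodic, matching the two kinds of timing described earlier. The simulation below is a hypothetical userspace sketch of that decision only. The *_model names are invented, and expiry handling is reduced to a loop over simulated time. The periodic callback's `expires += period` is a simplified stand-in for hrtimer_forward_now().

```c
#include <assert.h>
#include <stdint.h>

/* Mirrors the two values of enum hrtimer_restart. */
enum restart_model { NORESTART_MODEL, RESTART_MODEL };

struct timer_model {
    int64_t expires;                                     /* ns */
    enum restart_model (*function)(struct timer_model *);
    int fired;                                           /* callback runs so far */
    int64_t period;                                      /* used by periodic() */
};

/* Simulated expiry processing up to time `end`: call the callback each time
 * the expiry is reached, and keep the timer armed only on RESTART_MODEL.
 * Moving expires forward is the callback's job, as in the vser driver. */
static void run_until_model(struct timer_model *t, int64_t end)
{
    int armed = 1;

    while (armed && t->expires <= end) {
        t->fired++;
        armed = (t->function(t) == RESTART_MODEL);
    }
}

/* Single-shot: run once, then the timer stays inactive. */
static enum restart_model one_shot(struct timer_model *t)
{
    (void)t;
    return NORESTART_MODEL;
}

/* Periodic: step the expiry by one period and ask to be re-armed. */
static enum restart_model periodic(struct timer_model *t)
{
    assert(t->period > 0);
    t->expires += t->period;
    return RESTART_MODEL;
}
```

Take expires = 100 and period = 100, and simulate up to time 1000. periodic() runs ten times (at 100, 200, ..., 1000) and leaves expires at 1100, while one_shot() runs exactly once.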

The virtual serial port driver, reworked to use a high-resolution timer, is shown below.

#include <linux/init.h>

#include <linux/kernel.h>

#include <linux/module.h>

#include <linux/fs.h>

#include <linux/cdev.h>

#include <linux/kfifo.h>

#include <linux/ioctl.h>

#include <linux/uaccess.h>

#include <linux/wait.h>

#include <linux/sched.h>

#include <linux/poll.h>

#include <linux/aio.h>

#include <linux/random.h>

#include <linux/ktime.h>

#include <linux/hrtimer.h>

#include "vser.h"

#define VSER_MAJOR 256

#define VSER_MINOR 0

#define VSER_DEV_CNT 1

#define VSER_DEV_NAME "vser"

struct vser_dev {

unsigned int baud;

struct option opt;

struct cdev cdev;

wait_queue_head_t rwqh;

wait_queue_head_t wwqh;

struct fasync_struct *fapp;

struct hrtimer timer;

};

DEFINE_KFIFO(vsfifo, char, 32);

static struct vser_dev vsdev;

static int vser_fasync(int fd, struct file *filp, int on);

static int vser_open(struct inode *inode, struct file *filp)

{

return 0;

}

static int vser_release(struct inode *inode, struct file *filp)

{

vser_fasync(-1, filp, 0);

return 0;

}

static ssize_t vser_read(struct file *filp, char __user *buf, size_t count, loff_t *pos)

{

int ret;

unsigned int copied = 0;

if (kfifo_is_empty(&vsfifo)) {

if (filp->f_flags & O_NONBLOCK)

return -EAGAIN;

if (wait_event_interruptible_exclusive(vsdev.rwqh, !kfifo_is_empty(&vsfifo)))

return -ERESTARTSYS;

}

ret = kfifo_to_user(&vsfifo, buf, count, &copied);

if (!kfifo_is_full(&vsfifo)) {

wake_up_interruptible(&vsdev.wwqh);

kill_fasync(&vsdev.fapp, SIGIO, POLL_OUT);

}

return ret == 0 ? copied : ret;

}

static ssize_t vser_write(struct file *filp, const char __user *buf, size_t count, loff_t *pos)

{

int ret;

unsigned int copied = 0;

if (kfifo_is_full(&vsfifo)) {

if (filp->f_flags & O_NONBLOCK)

return -EAGAIN;

if (wait_event_interruptible_exclusive(vsdev.wwqh, !kfifo_is_full(&vsfifo)))

return -ERESTARTSYS;

}

ret = kfifo_from_user(&vsfifo, buf, count, &copied);

if (!kfifo_is_empty(&vsfifo)) {

wake_up_interruptible(&vsdev.rwqh);

kill_fasync(&vsdev.fapp, SIGIO, POLL_IN);

}

return ret == 0 ? copied : ret;

}

static long vser_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)

{

if (_IOC_TYPE(cmd) != VS_MAGIC)

return -ENOTTY;

switch (cmd) {

case VS_SET_BAUD:

vsdev.baud = arg;

break;

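case VS_GET_BAUD:

/* arg is assumed to be a user-space pointer to an unsigned int; assigning to arg itself would only change a local copy */

if (put_user(vsdev.baud, (unsigned int __user *)arg))

return -EFAULT;

break;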

case VS_SET_FFMT:

if (copy_from_user(&vsdev.opt, (struct option __user *)arg, sizeof(struct option)))

return -EFAULT;

break;

case VS_GET_FFMT:

if (copy_to_user((struct option __user *)arg, &vsdev.opt, sizeof(struct option)))

return -EFAULT;

break;

default:

return -ENOTTY;

}

return 0;

}

static unsigned int vser_poll(struct file *filp, struct poll_table_struct *p)

{

int mask = 0;

poll_wait(filp, &vsdev.rwqh, p);

poll_wait(filp, &vsdev.wwqh, p);

if (!kfifo_is_empty(&vsfifo))

mask |= POLLIN | POLLRDNORM;

if (!kfifo_is_full(&vsfifo))

mask |= POLLOUT | POLLWRNORM;

return mask;

}

static ssize_t vser_aio_read(struct kiocb *iocb, const struct iovec *iov, unsigned long nr_segs, loff_t pos)

{

size_t read = 0;

unsigned long i;

ssize_t ret;

for (i = 0; i < nr_segs; i++) {

ret = vser_read(iocb->ki_filp, iov[i].iov_base, iov[i].iov_len, &pos);

if (ret < 0)

break;

read += ret;

}

return read ? read : -EFAULT;

}

static ssize_t vser_aio_write(struct kiocb *iocb, const struct iovec *iov, unsigned long nr_segs, loff_t pos)

{

size_t written = 0;

unsigned long i;

ssize_t ret;

for (i = 0; i < nr_segs; i++) {

ret = vser_write(iocb->ki_filp, iov[i].iov_base, iov[i].iov_len, &pos);

if (ret < 0)

break;

written += ret;

}

return written ? written : -EFAULT;

}

static int vser_fasync(int fd, struct file *filp, int on)

{

return fasync_helper(fd, filp, on, &vsdev.fapp);

}

static enum hrtimer_restart vser_timer(struct hrtimer *timer)

{

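char data;

unsigned char rnd;

/* use an unsigned random byte: plain char may be signed, which would let data % 26 go negative */

get_random_bytes(&rnd, sizeof(rnd));

data = 'A' + rnd % 26;

/* runs in interrupt context: only push when the FIFO has room */

if (!kfifo_is_full(&vsfifo))

if (!kfifo_in(&vsfifo, &data, sizeof(data)))

printk(KERN_ERR "vser: kfifo_in failure\n");

if (!kfifo_is_empty(&vsfifo)) {

wake_up_interruptible(&vsdev.rwqh);

kill_fasync(&vsdev.fapp, SIGIO, POLL_IN);

}

/* move the expiry forward by 1 s + 1000 ns and keep the timer running */

hrtimer_forward_now(timer, ktime_set(1, 1000));

return HRTIMER_RESTART;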

}

static struct file_operations vser_ops = {

.owner = THIS_MODULE,

.open = vser_open,

.release = vser_release,

.read = vser_read,

.write = vser_write,

.unlocked_ioctl = vser_ioctl,

.poll = vser_poll,

.aio_read = vser_aio_read,

.aio_write = vser_aio_write,

.fasync = vser_fasync,

};

static int __init vser_init(void)

{

int ret;

dev_t dev;

dev = MKDEV(VSER_MAJOR, VSER_MINOR);

ret = register_chrdev_region(dev, VSER_DEV_CNT, VSER_DEV_NAME);

if (ret)

goto reg_err;

cdev_init(&vsdev.cdev, &vser_ops);

vsdev.cdev.owner = THIS_MODULE;

vsdev.baud = 115200;

vsdev.opt.datab = 8;

vsdev.opt.parity = 0;

vsdev.opt.stopb = 1;

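/* initialize the wait queues before cdev_add() makes the device visible to user space */

init_waitqueue_head(&vsdev.rwqh);

init_waitqueue_head(&vsdev.wwqh);

ret = cdev_add(&vsdev.cdev, dev, VSER_DEV_CNT);

if (ret)

goto add_err;

/* relative mode on the monotonic clock: first expiry 1 s + 1000 ns from now */

hrtimer_init(&vsdev.timer, CLOCK_MONOTONIC, HRTIMER_MODE_REL);

vsdev.timer.function = vser_timer;

hrtimer_start(&vsdev.timer, ktime_set(1, 1000), HRTIMER_MODE_REL);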

return 0;

add_err:

unregister_chrdev_region(dev, VSER_DEV_CNT);

reg_err:

return ret;

}

static void __exit vser_exit(void)

{

dev_t dev;

dev = MKDEV(VSER_MAJOR, VSER_MINOR);

hrtimer_cancel(&vsdev.timer);

cdev_del(&vsdev.cdev);

unregister_chrdev_region(dev, VSER_DEV_CNT);

}

module_init(vser_init);

module_exit(vser_exit);

MODULE_LICENSE("GPL");

MODULE_AUTHOR("name <E-mail>");

MODULE_DESCRIPTION("A simple character device driver");

MODULE_ALIAS("virtual-serial");

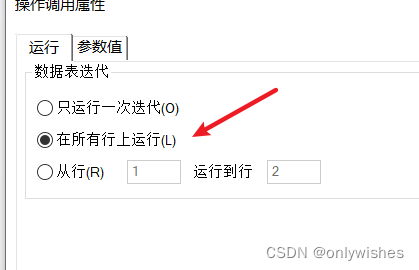

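The driver adds a high-resolution timer member, timer, to struct vser_dev. vser_init initializes this timer with hrtimer_init (CLOCK_MONOTONIC, relative mode), sets vsdev.timer.function to vser_timer and starts it with hrtimer_start. vser_exit cancels the timer with hrtimer_cancel when the module is unloaded. When the timer expires, vser_timer is called. It pushes a randomly generated character into the FIFO, moves the expiry forward with hrtimer_forward_now, and returns HRTIMER_RESTART so that the timer is restarted. Because ktime_set(1, 1000) is 1 s plus 1000 ns, a new character arrives roughly once per second. The driver behaves the same way as the low-resolution timer example.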
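vser_timer pushes a character only while vsfifo has room, and DEFINE_KFIFO(vsfifo, char, 32) gives the FIFO 32 bytes. The userspace sketch below models that behavior together with the byte-to-letter mapping. It follows the classic kfifo idea of a power-of-two size with free-running in/out counters. It is a hypothetical sketch, not the kernel's kfifo API, and it has no locking.

```c
#include <assert.h>

/* Hypothetical model of DEFINE_KFIFO(vsfifo, char, 32): a power-of-two ring
 * with free-running unsigned in/out counters, so the fill level is in - out
 * even after the counters wrap around. No locking. */
#define FIFO_SIZE 32u
static_assert((FIFO_SIZE & (FIFO_SIZE - 1)) == 0, "FIFO_SIZE must be a power of two");

struct fifo_model {
    unsigned int in;
    unsigned int out;
    char buf[FIFO_SIZE];
};

static unsigned int fifo_len(const struct fifo_model *f)
{
    return f->in - f->out;
}

/* Copy up to n bytes in; returns how many fit (0 when the FIFO is full). */
static unsigned int fifo_put(struct fifo_model *f, const char *src, unsigned int n)
{
    unsigned int avail = FIFO_SIZE - fifo_len(f);
    unsigned int i;

    if (n > avail)
        n = avail;
    for (i = 0; i < n; i++)
        f->buf[(f->in + i) & (FIFO_SIZE - 1)] = src[i];
    f->in += n;
    return n;
}

/* Copy up to n bytes out; returns how many were available. */
static unsigned int fifo_get(struct fifo_model *f, char *dst, unsigned int n)
{
    unsigned int len = fifo_len(f);
    unsigned int i;

    if (n > len)
        n = len;
    for (i = 0; i < n; i++)
        dst[i] = f->buf[(f->out + i) & (FIFO_SIZE - 1)];
    f->out += n;
    return n;
}

/* Map a random byte to 'A'..'Z'; unsigned input keeps rnd % 26 in 0..25. */
static char random_letter(unsigned char rnd)
{
    return (char)('A' + rnd % 26);
}
```

Suppose nothing reads or writes the device. The timer adds about one letter per second, so after roughly 32 seconds the FIFO is full. From then on, new letters are simply dropped until a reader drains it.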

As the timer keeps firing, the printed output grows longer and longer.

Exercises

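1. Which of the following statements about interrupt handlers is wrong? (C)

[A] It should finish as quickly as possible

[B] It must not call functions that may cause the process to sleep

[C] If the interrupt is shared, the kernel decides which driver's interrupt service routine to call

[D] It runs in interrupt context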

2. The bottom-half mechanisms for interrupts include (ABC).

[A] Softirqs

[B] Tasklets

[C] Workqueues

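3. Which statement about bottom-half mechanisms is correct? (D)

[A] They reduce the total processing time

[B] They improve CPU utilization

[C] None of the bottom-half mechanisms runs in interrupt context

[D] New hardware interrupts can be serviced while a bottom half is running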

4. Which bottom-half mechanism runs in process context? (C)

[A] Softirq

[B] Tasklet

[C] Workqueue

5. Which function is used to modify the expires member of a low-resolution timer? (C)

[A] init_timer

[B] add_timer

[C] mod_timer

[D] del_timer

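6. Which statement about low-resolution timers is wrong? (D)

[A] Their resolution depends on HZ

[B] The function pointed to by the function member runs in interrupt context

[C] mod_timer can be used to implement a periodic timer

[D] All timers are kept in a single group, so traversing the whole list is very time-consuming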

7. High-resolution timers express time using (C).

[A] HZ

[B] jiffies

[C] ktime_t