基于Scrapy框架实现POST请求爬虫

前言

本文中介绍 如何基于 Scrapy 框架实现 POST 请求爬虫,并以抓取指定城市的 KFC 门店信息为例进行展示

正文

1、Scrapy框架处理POST请求方法

Scrapy框架 提供了 FormRequest() 方法来发送 POST 请求;

FormRequest() 方法 相比于 Request() 方法多了 formdata 参数,接受包含表单数据的字典或者可迭代的元组,并将其转化为请求的 body。

POST请求:yield scrapy.FormRequest(url=post_url,formdata={},meta={},callback=...)

注意:使用 FormRequest() 方法发送 POST 请求一定要重写 start_requests() 方法

2、Scrapy框架处理POST请求案例

-

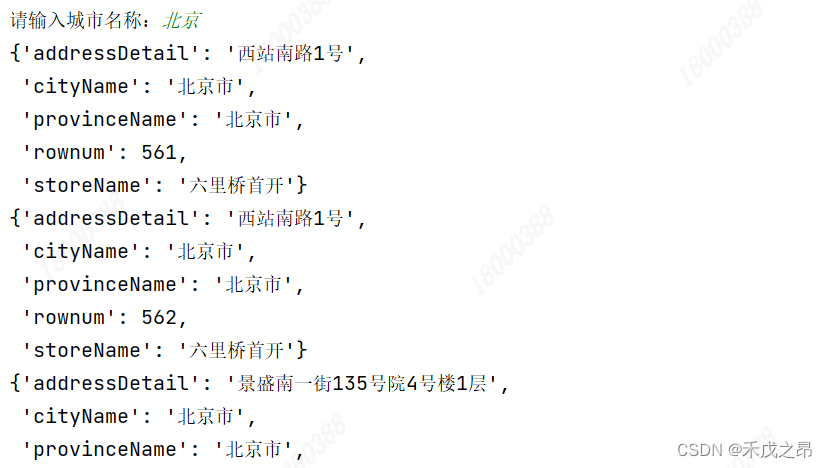

项目需求:抓取指定城市的 KFC 门店信息。终端提示,请输入城市:xx ,将所有 xx 市的 KFC 门店数据抓取下来。

-

所需数据:门店编号、门店名称、门店地址、所属城市、所属省份

-

url 地址:http://www.kfc.com.cn/kfccda/storelist/index.aspx

-

POST请求url地址:http://www.kfc.com.cn/kfccda/ashx/GetStoreList.ashx?op=cname

-

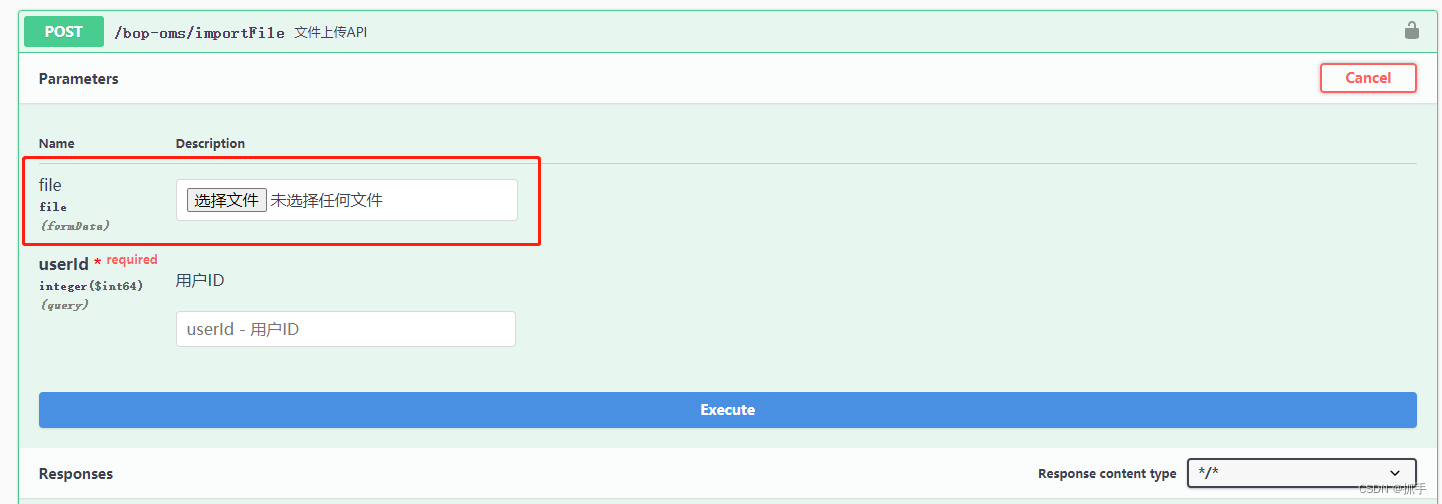

F12抓包分析:找到需要爬取的数据,获取门店信息,获取门店总数

-

获取form表单:获取 form 表单数据

-

创建Scrapy项目:编写items.py文件

import scrapy class KfcspiderItem(scrapy.Item): # 门店编号 rownum = scrapy.Field() # 门店名称 storeName = scrapy.Field() # 门店地址 addressDetail = scrapy.Field() # 所属城市 cityName = scrapy.Field() # 所属省份 provinceName = scrapy.Field() -

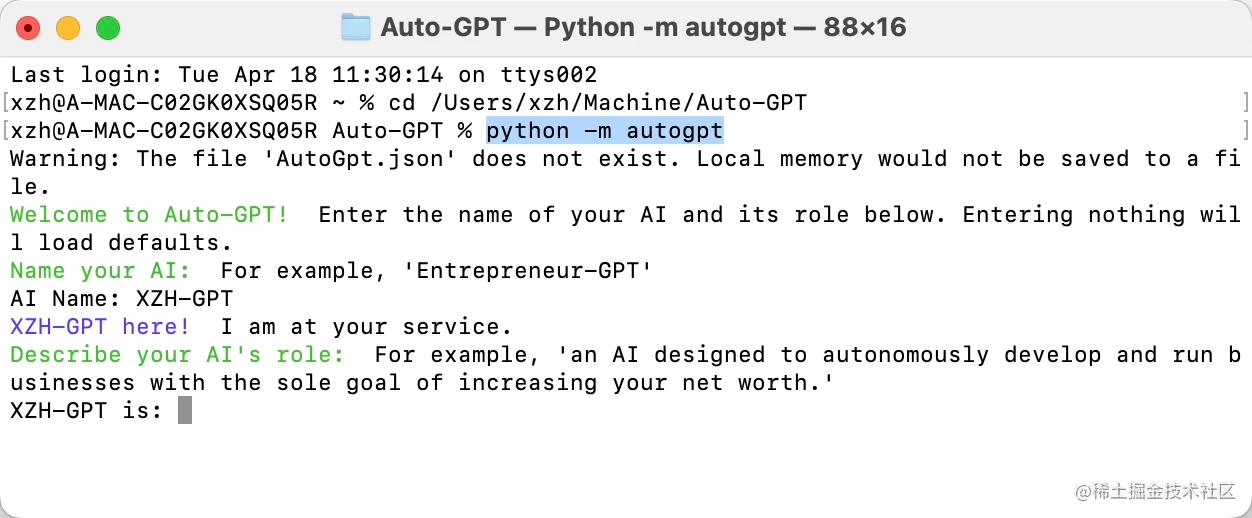

编写爬虫文件

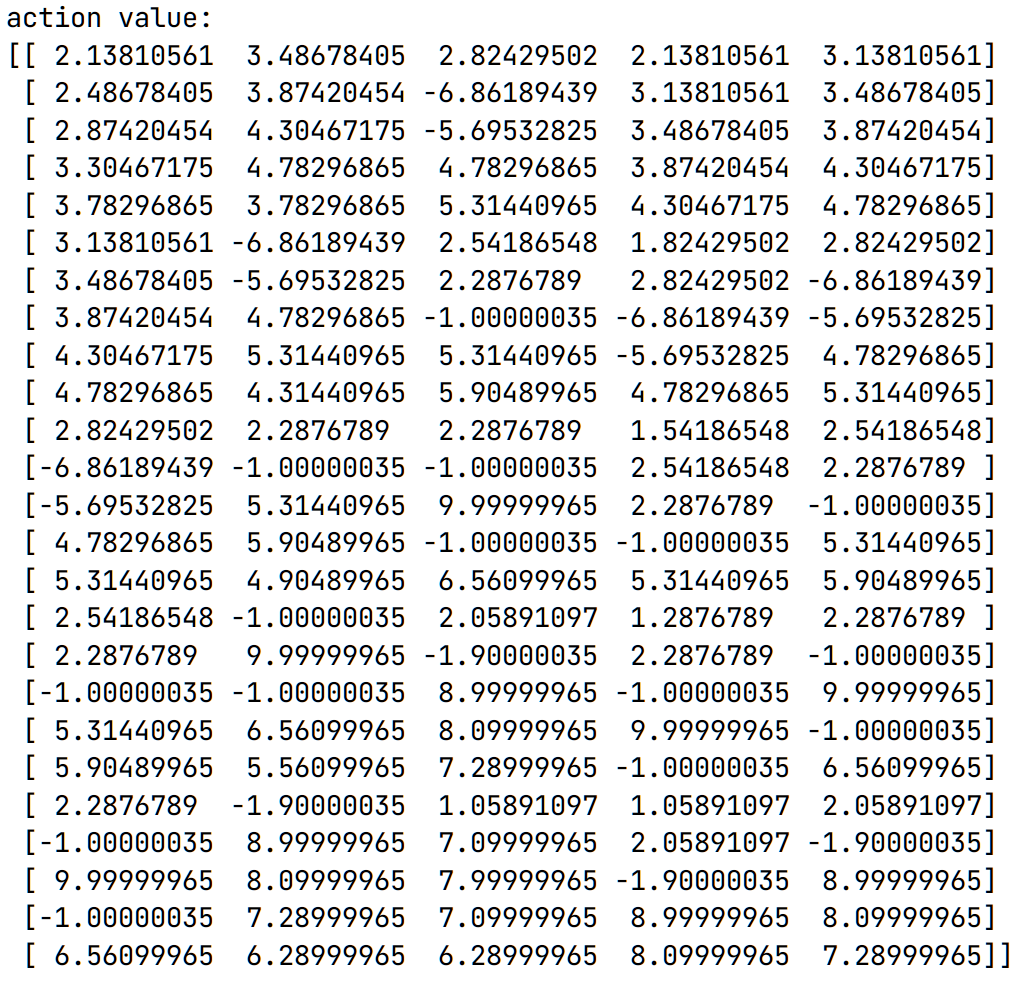

import scrapy import json from ..items import KfcspiderItem class KfcSpider(scrapy.Spider): name = "kfc" allowed_domains = ["www.kfc.com.cn"] post_url = 'http://www.kfc.com.cn/kfccda/ashx/GetStoreList.ashx?op=cname' city_name = input("请输入城市名称:") # start_urls = ["http://www.kfc.com.cn/"] def start_requests(self): """ 重写start_requests()方法,获取某个城市的KFC门店总数量 :return: """ formdata = { "cname": self.city_name, "pid": "", "pageIndex": '1', "pageSize": '10' } yield scrapy.FormRequest(url=self.post_url, formdata=formdata, callback=self.get_total,dont_filter=True) def parse(self, response): """ 解析提取具体的门店数据 :param response: :return: """ html=json.loads(response.text) for one_shop_dict in html["Table1"]: item=KfcspiderItem() item["rownum"]=one_shop_dict['rownum'] item["storeName"]=one_shop_dict['storeName'] item["addressDetail"]=one_shop_dict['addressDetail'] item["cityName"]=one_shop_dict['cityName'] item["provinceName"]=one_shop_dict['provinceName'] #一个完整的门店数据提取完成,交给数据管道 yield item def get_total(self, response): """ 获取总页数,并交给调度器入队列 :param response: :return: """ html = json.loads(response.text) count = html['Table'][0]['rowcount'] total_page = count // 10 if count % 10 == 0 else count // 10 + 1 # 将所有页的url地址交给调度器入队列 for page in range(1, total_page + 1): formdata = { "cname": self.city_name, "pid": "", "pageIndex": str(page), "pageSize": '10' } # 交给调度器入队列 yield scrapy.FormRequest(url=self.post_url, formdata=formdata, callback=self.parse) -

编写设置文件:

BOT_NAME = "KFCSpider" SPIDER_MODULES = ["KFCSpider.spiders"] NEWSPIDER_MODULE = "KFCSpider.spiders" # Obey robots.txt rules ROBOTSTXT_OBEY = False # See https://docs.scrapy.org/en/latest/topics/settings.html#download-delay # See also autothrottle settings and docs DOWNLOAD_DELAY = 1 # Override the default request headers: DEFAULT_REQUEST_HEADERS = { "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8", "Accept-Language": "en", "User-Agent": "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/535.1 (KHTML, like Gecko)" } # 设置日志级别:DEBUG < INFO < WARNING < ERROR < CARITICAL LOG_LEVEL = 'INFO' # 保存日志文件 LOG_FILE = 'KFC.log' # Configure item pipelines # See https://docs.scrapy.org/en/latest/topics/item-pipeline.html ITEM_PIPELINES = { "KFCSpider.pipelines.KfcspiderPipeline": 300, } # Set settings whose default value is deprecated to a future-proof value REQUEST_FINGERPRINTER_IMPLEMENTATION = "2.7" TWISTED_REACTOR = "twisted.internet.asyncioreactor.AsyncioSelectorReactor" FEED_EXPORT_ENCODING = "utf-8" -

在管道文件中直接打印 item

-

创建run.py文件运行爬虫:

from scrapy import cmdline cmdline.execute("scrapy crawl kfc".split()) -

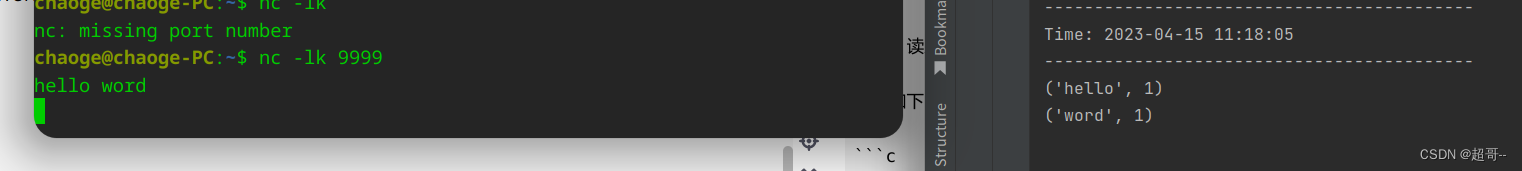

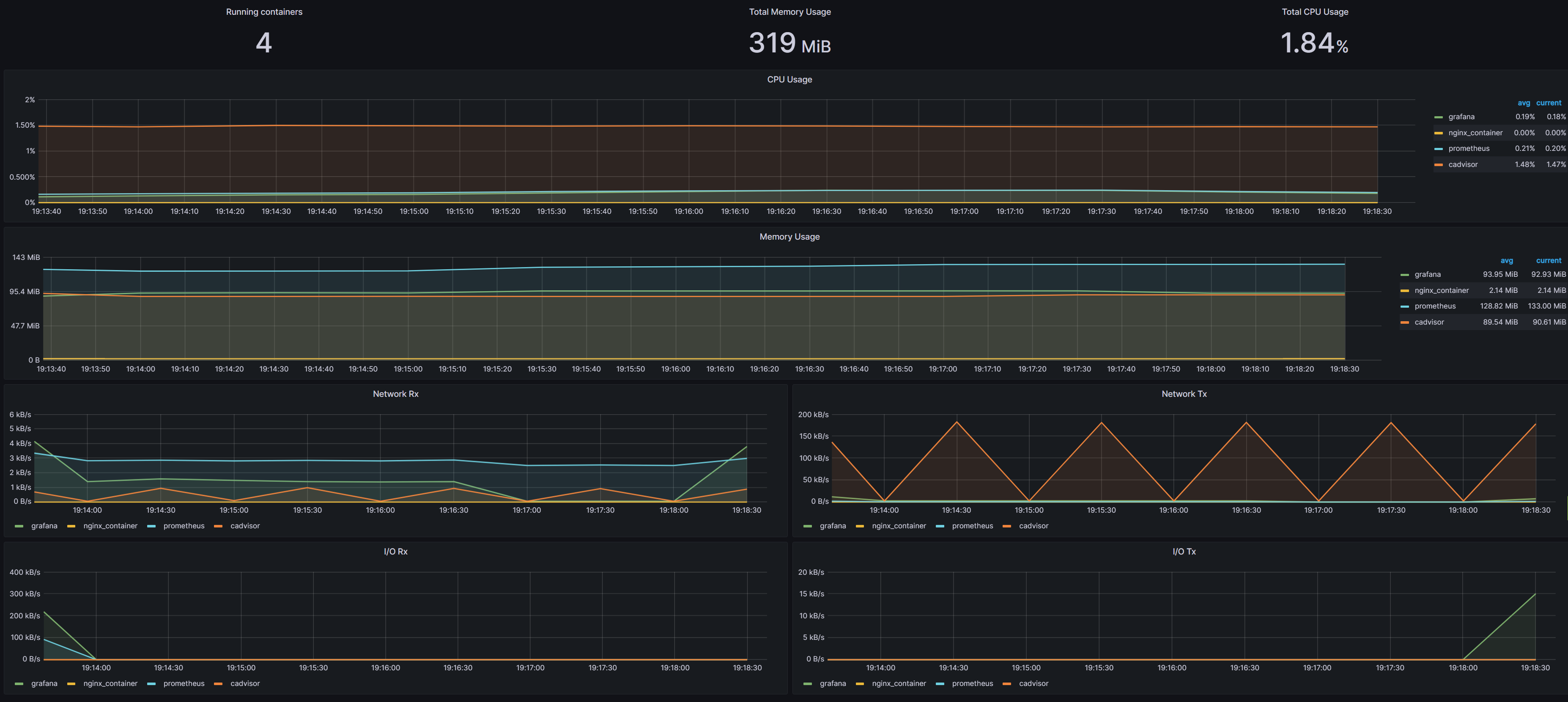

运行效果