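If you test storage professionally, knowing servers and disks is not enough: you also need to know the test tool fio inside out. If you work at an OEM or at an HDD/SSD vendor, you will often see that the developers' test scripts run fio in minimal (terse) mode, because that output is easy to parse. Below I explain what each field of the minimal output means, and how to write a shell script that runs fio on many HDDs at once.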

I. Open the official fio documentation: https://fio.readthedocs.io/en/latest/fio_doc.html#threads-processes-and-job-synchronization

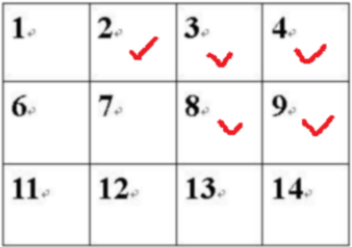

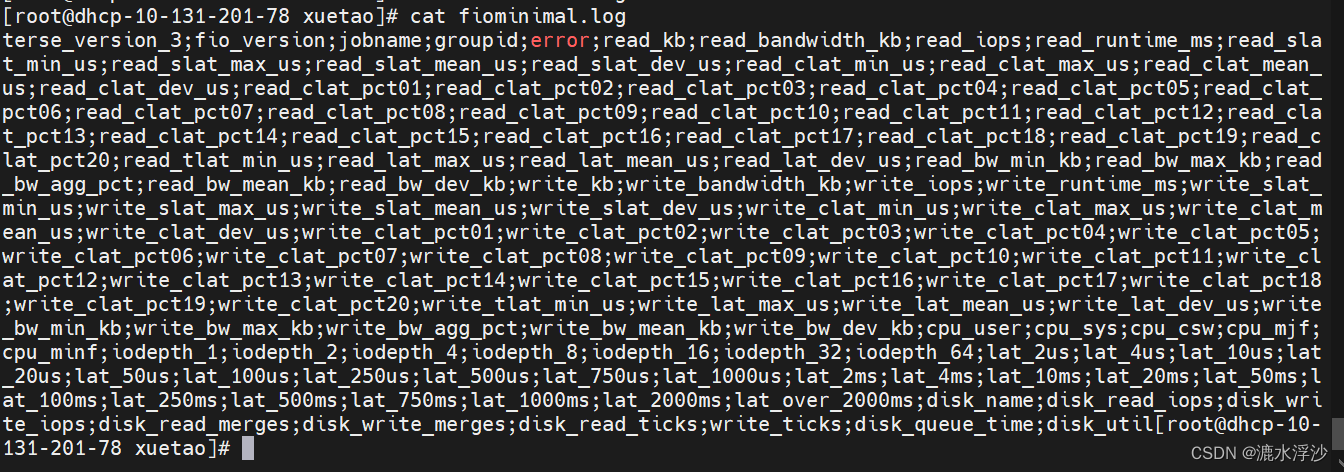

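Search the page for `minimal`, as shown in the screenshots. Copy the detailed field list (the fields are separated by semicolons) into a file named `fiominimal.log`. Then split it into one field per line so it is easier to read: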

```bash
# one field name per line, then number the lines
cat fiominimal.log | tr -s ";" "\n" > fiominimal.txt
cat -n fiominimal.txt
```

The result is:

```text
1 terse_version_3
2 fio_version
3 jobname
4 groupid
5 error
6 read_kb
7 read_bandwidth_kb
8 read_iops
9 read_runtime_ms
10 read_slat_min_us
11 read_slat_max_us
12 read_slat_mean_us
13 read_slat_dev_us
14 read_clat_min_us
15 read_clat_max_us
16 read_clat_mean_us
17 read_clat_dev_us
18 read_clat_pct01
19 read_clat_pct02
20 read_clat_pct03
21 read_clat_pct04
22 read_clat_pct05
23 read_clat_pct06
24 read_clat_pct07
25 read_clat_pct08
26 read_clat_pct09
27 read_clat_pct10
28 read_clat_pct11
29 read_clat_pct12
30 read_clat_pct13
31 read_clat_pct14
32 read_clat_pct15
33 read_clat_pct16
34 read_clat_pct17
35 read_clat_pct18
36 read_clat_pct19
37 read_clat_pct20
38 read_tlat_min_us
39 read_lat_max_us
40 read_lat_mean_us
41 read_lat_dev_us
42 read_bw_min_kb
43 read_bw_max_kb
44 read_bw_agg_pct
45 read_bw_mean_kb
46 read_bw_dev_kb
47 write_kb
48 write_bandwidth_kb
49 write_iops
50 write_runtime_ms
51 write_slat_min_us
52 write_slat_max_us
53 write_slat_mean_us
54 write_slat_dev_us
55 write_clat_min_us
56 write_clat_max_us
57 write_clat_mean_us
58 write_clat_dev_us
59 write_clat_pct01
60 write_clat_pct02
61 write_clat_pct03
62 write_clat_pct04
63 write_clat_pct05
64 write_clat_pct06
65 write_clat_pct07
66 write_clat_pct08
67 write_clat_pct09
68 write_clat_pct10
69 write_clat_pct11
70 write_clat_pct12
71 write_clat_pct13
72 write_clat_pct14
73 write_clat_pct15
74 write_clat_pct16
75 write_clat_pct17
76 write_clat_pct18
77 write_clat_pct19
78 write_clat_pct20
79 write_tlat_min_us
80 write_lat_max_us
81 write_lat_mean_us
82 write_lat_dev_us
83 write_bw_min_kb
84 write_bw_max_kb
85 write_bw_agg_pct
86 write_bw_mean_kb
87 write_bw_dev_kb
88 cpu_user
89 cpu_sys
90 cpu_csw
91 cpu_mjf
92 cpu_minf
93 iodepth_1
94 iodepth_2
95 iodepth_4
96 iodepth_8
97 iodepth_16
98 iodepth_32
99 iodepth_64
100 lat_2us
101 lat_4us
102 lat_10us
103 lat_20us
104 lat_50us
105 lat_100us
106 lat_250us
107 lat_500us
108 lat_750us
109 lat_1000us
110 lat_2ms
111 lat_4ms
112 lat_10ms
113 lat_20ms
114 lat_50ms
115 lat_100ms
116 lat_250ms
117 lat_500ms
118 lat_750ms
119 lat_1000ms
120 lat_2000ms
121 lat_over_2000ms
122 disk_name
123 disk_read_iops
124 disk_write_iops
125 disk_read_merges
126 disk_write_merges
127 disk_read_ticks
128 write_ticks
129 disk_queue_time
130 disk_util
```
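You can also put each value of a real log next to its field name in one step, so you do not have to count lines by eye. The following is a minimal sketch. It assumes that `fiominimal.log` holds the semicolon-separated field list copied from the docs (possibly wrapped over several lines), and that the second file holds `fio --minimal` output. The function name `terse_label` is hypothetical.

```bash
# terse_label NAMES_FILE LOG_FILE
# Print "index name value" for every field of the last line in LOG_FILE.
# NAMES_FILE: semicolon-separated field names copied from the fio docs.
# LOG_FILE:   output of fio --minimal (one terse line per job).
terse_label() {
    awk -F ';' '
        NR == FNR {                          # first file: collect field names
            for (i = 1; i <= NF; i++) {
                f = $i
                gsub(/[ \t\r]/, "", f)       # drop blanks / CR left by copy-paste
                if (f != "") name[++n] = f
            }
            next
        }
        { last = $0 }                        # second file: keep the newest line
        END {
            m = split(last, v, ";")
            for (i = 1; i <= m; i++)
                printf "%d %s %s\n", i, ((i in name) ? name[i] : "?"), v[i]
        }' "$1" "$2"
}
```

For example, `terse_label fiominimal.log hdd_minimal.log | sed -n '7p;8p'` should print `7 read_bandwidth_kb 104380` and `8 read_iops 203` for the log shown below.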

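The terse output has one section for reads and one for writes. If a job only writes, every read field is 0; for a mixed workload, both sections carry real values. Let's run fio and look at the log: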

```bash
fio --name=test --filename=/dev/sdb --ioengine=libaio --direct=1 --thread=1 --numjobs=1 --iodepth=16 --rw=rw --bs=512k --runtime=10 --time_based=1 --size=100% --group_reporting --minimal >hdd_minimal.log
```

Let's check the resulting log:

```text
3;fio3.19;test;0;0;1047040;104380;203;10031;8;37;12.673943;3.985395;1098;584833;63616.460889;99932.659453;1.000000%=1122;5.000000%=1138;10.000000%=1171;20.000000%=2244;30.000000%=4227;40.000000%=7897;50.000000%=13172;60.000000%=21626;70.000000%=37486;80.000000%=131596;90.000000%=231735;95.000000%=278921;99.000000%=425721;99.500000%=455081;99.900000%=497025;99.950000%=583008;99.990000%=583008;0%=0;0%=0;0%=0;1107;584841;63629.220231;99932.575911;51200;137216;100.000000%;104784.473684;22888.752809;1104896;110148;215;10031;8;211;19.042607;6.974656;1103;282660;14041.808021;25284.538987;1.000000%=1122;5.000000%=1122;10.000000%=1236;20.000000%=2146;30.000000%=3063;40.000000%=4489;50.000000%=6651;60.000000%=9109;70.000000%=12910;80.000000%=18219;90.000000%=29229;95.000000%=50593;99.000000%=125304;99.500000%=204472;99.900000%=256901;99.950000%=258998;99.990000%=283115;0%=0;0%=0;0%=0;1116;282685;14060.939651;25284.967437;48128;162816;99.910025%;110048.894737;28420.579353;0.219342%;0.488534%;4204;0;1;0.1%;0.1%;0.1%;0.2%;99.6%;0.0%;0.0%;0.00%;0.00%;0.00%;0.00%;0.00%;0.00%;0.00%;0.00%;0.00%;0.00%;18.18%;14.78%;20.77%;17.39%;12.18%;3.43%;9.61%;3.62%;0.05%;0.00%;0.00%;0.00%;sdb;2074;2152;0;0;127406;30211;157617;99.16%
```
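You can also check the read/write rule above mechanically. The following is a minimal sketch. It assumes one terse v3 line and uses `read_kb` (field 6) and `write_kb` (field 47) from the list above. The function name `terse_mode` is hypothetical. It also prints the read share of the transferred data, which gives a quick way to see whether a mixed job followed its `--rwmixread` ratio.

```bash
# terse_mode LINE
# Classify a terse v3 line as read / write / mix using read_kb ($6) and
# write_kb ($47), and print the read share of the transferred data.
terse_mode() {
    printf '%s\n' "$1" | awk -F ';' '{
        r = $6 + 0; w = $47 + 0
        if (r > 0 && w > 0) mode = "mix"
        else if (w > 0)     mode = "write"
        else if (r > 0)     mode = "read"
        else                mode = "idle"
        share = (r + w > 0) ? r / (r + w) * 100 : 0
        printf "%s %.2f%%\n", mode, share
    }'
}
```

For the log above (`read_kb` 1047040, `write_kb` 1104896), this prints `mix 48.66%`. That is close to the 50/50 split fio uses for `--rw=rw` when `--rwmixread` is not given.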

Now pick out individual fields:

```text
[root@dhcp-10-131-201-78 xuetao]# cat hdd_minimal.log |tr -s ";" "\n" |sed -n 7p
104380
[root@dhcp-10-131-201-78 xuetao]# cat hdd_minimal.log |tr -s ";" "\n" |sed -n 8p
203
[root@dhcp-10-131-201-78 xuetao]# cat hdd_minimal.log |tr -s ";" "\n" |sed -n 38p
1107
[root@dhcp-10-131-201-78 xuetao]# cat hdd_minimal.log |tr -s ";" "\n" |sed -n 40p
63629.220231
[root@dhcp-10-131-201-78 xuetao]# cat hdd_minimal.log |tr -s ";" "\n" |sed -n 39p
584841
[root@dhcp-10-131-201-78 xuetao]# cat hdd_minimal.log |tr -s ";" "\n" |sed -n 30p
99.000000%=425721
[root@dhcp-10-131-201-78 xuetao]# cat hdd_minimal.log |tr -s ";" "\n" |sed -n 32p
99.900000%=497025
[root@dhcp-10-131-201-78 xuetao]# cat hdd_minimal.log |tr -s ";" "\n" |sed -n 34p
99.990000%=583008
[root@dhcp-10-131-201-78 xuetao]# cat hdd_minimal.log |tr -s ";" "\n" |sed -n 48p
110148
[root@dhcp-10-131-201-78 xuetao]# cat hdd_minimal.log |tr -s ";" "\n" |sed -n 49p
215
[root@dhcp-10-131-201-78 xuetao]# cat hdd_minimal.log |tr -s ";" "\n" |sed -n 79p
1116
[root@dhcp-10-131-201-78 xuetao]# cat hdd_minimal.log |tr -s ";" "\n" |sed -n 81p
14060.939651
[root@dhcp-10-131-201-78 xuetao]# cat hdd_minimal.log |tr -s ";" "\n" |sed -n 80p
282685
[root@dhcp-10-131-201-78 xuetao]# cat hdd_minimal.log |tr -s ";" "\n" |sed -n 71p
99.000000%=125304
[root@dhcp-10-131-201-78 xuetao]# cat hdd_minimal.log |tr -s ";" "\n" |sed -n 73p
99.900000%=256901
[root@dhcp-10-131-201-78 xuetao]# cat hdd_minimal.log |tr -s ";" "\n" |sed -n 75p
99.990000%=283115
```

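So lines 7, 8, 38, 40, 39, 30, 32, 34 (read) and lines 48, 49, 79, 81, 80, 71, 73, 75 (write) give, in that order:

- bandwidth (KiB/s) and IOPS;
- total latency min, avg and max (µs);
- the 99%, 99.9% and 99.99% completion-latency (clat) percentiles (µs).

The percentile positions assume fio's default `percentile_list`. If a job sets its own list, the positions shift. With this mapping, we can write the multi-disk fio script.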
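The `tr | sed -n Np` pipeline above can be folded into a single awk call. This version also selects a line by its exact job name (field 3), so a log with several jobs is handled. The following is a minimal sketch. The function name `terse_field` is hypothetical, and it assumes fio `--minimal` output with `;` separators. If several lines share the job name, the last (newest) one is used.

```bash
# terse_field LOGFILE JOBNAME N
# Print field N of the newest terse line whose jobname (field 3) is JOBNAME.
# Percentile fields such as "99.000000%=425721" are reduced to the value
# after "=", the same thing the later scripts do with awk -F "=".
terse_field() {
    awk -F ';' -v job="$2" -v n="$3" '
        $3 == job { v = $n }
        END {
            sub(/^.*=/, "", v)   # strip a "99.000000%=" style prefix, if any
            print v
        }' "$1"
}
```

With the log above, `terse_field hdd_minimal.log test 8` should print `203`, and `terse_field hdd_minimal.log test 30` should print `425721`.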

II. Write the multi-disk script

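1. First, write the fio test cases. The loops expect `$hdd` (the device name, e.g. `sdb`) and `$time` (the runtime in seconds) to be set already. The complete script in step 3 sets both. Note that `sync` is a synchronous engine, so `--iodepth` above 1 has no effect for it: the `sync` runs are effectively QD1, even though their job names say 16.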

```bash
date
echo " sequential random write read test start"
for ioengine in libaio sync
do
    for rw in write read randwrite randread
    do
        for bs in 128k
        do
            for iodepth in 16
            do
                for jobs in 1
                do
                    date
                    echo "$hdd $rw $bs iodepth=$iodepth numjobs=$jobs test start"
                    job_name="${ioengine}_${bs}B_${rw}_${jobs}job_${iodepth}QD"
                    fio --name=$job_name --filename=/dev/$hdd --ioengine=${ioengine} --direct=1 --thread=1 --numjobs=${jobs} --iodepth=${iodepth} --rw=${rw} --bs=${bs} --runtime=$time --time_based=1 --size=100% --group_reporting --minimal >>${hdd}_rw.log
                done
            done
        done
    done
done

echo " sequential random mix test"
date
for ioengine in libaio sync
do
    for rw in rw randrw
    do
        for mixread in 80
        do
            for blk_size in 1024k
            do
                for jobs in 1
                do
                    for queue_depth in 16
                    do
                        job_name="${ioengine}_${rw}_${blk_size}B_mix_read${mixread}_${jobs}job_QD${queue_depth}"
                        echo "$hdd $job_name test start"
                        # use the loop's engine, so the "sync" cases really run with sync
                        fio --name=${job_name} --filename=/dev/$hdd --ioengine=${ioengine} --direct=1 --thread=1 --numjobs=${jobs} --iodepth=${queue_depth} --rw=${rw} --bs=${blk_size} --rwmixread=$mixread --runtime=$time --time_based=1 --size=100% --group_reporting --minimal >> "$hdd"_mix_data.log
                    done
                done
            done
        done
    done
done
```
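Before pointing these loops at a real disk, it can help to print the test matrix without running anything. The following is a minimal sketch of such a dry run. It assumes the same loop values as above. `DEV` and `RUNTIME` stand in for the script's `$hdd` and `$time`, and `print_matrix` is a hypothetical helper name. The single-value loops are collapsed into plain variables.

```bash
# print_matrix DEV RUNTIME
# Dry run of the two loops above: print each fio command line instead of
# running it, so the matrix can be reviewed before any disk is written.
print_matrix() {
    local dev=$1 runtime=$2
    local ioengine rw name
    local bs=128k blk_size=1024k iodepth=16 jobs=1 mixread=80
    local common="--direct=1 --thread=1 --numjobs=$jobs --iodepth=$iodepth"
    for ioengine in libaio sync; do
        for rw in write read randwrite randread; do
            name="${ioengine}_${bs}B_${rw}_${jobs}job_${iodepth}QD"
            echo "fio --name=$name --filename=/dev/$dev --ioengine=$ioengine $common --rw=$rw --bs=$bs --runtime=$runtime --time_based=1 --size=100% --group_reporting --minimal"
        done
    done
    for ioengine in libaio sync; do
        for rw in rw randrw; do
            name="${ioengine}_${rw}_${blk_size}B_mix_read${mixread}_${jobs}job_QD${iodepth}"
            echo "fio --name=$name --filename=/dev/$dev --ioengine=$ioengine $common --rw=$rw --bs=$blk_size --rwmixread=$mixread --runtime=$runtime --time_based=1 --size=100% --group_reporting --minimal"
        done
    done
}
```

`print_matrix sdb 60` prints 12 command lines: 8 read/write cases and 4 mixed cases. This makes it easy to spot a wrong engine or block size, or a job-name collision, before a long run.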

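2. Add the log-parsing step: after each fio run, call `fiocsv` (defined in the complete script in step 3) to append one CSV row for that job.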

```bash
date
echo " sequential random write read test start"
for ioengine in libaio sync
do
    for rw in write read randwrite randread
    do
        for bs in 128k
        do
            for iodepth in 16
            do
                for jobs in 1
                do
                    date
                    echo "$hdd $rw $bs iodepth=$iodepth numjobs=$jobs test start"
                    job_name="${ioengine}_${bs}B_${rw}_${jobs}job_${iodepth}QD"
                    fio --name=$job_name --filename=/dev/$hdd --ioengine=${ioengine} --direct=1 --thread=1 --numjobs=${jobs} --iodepth=${iodepth} --rw=${rw} --bs=${bs} --runtime=$time --time_based=1 --size=100% --group_reporting --minimal >>${hdd}_rw.log
                    # write jobs report in the write section of the terse line, read jobs in the read section
                    if [ "$rw" = "write" ] || [ "$rw" = "randwrite" ]; then
                        fiocsv $bs ${ioengine}_$rw $jobs $iodepth $job_name ${hdd}_rw.log "write" $ioengine
                    else
                        fiocsv $bs ${ioengine}_$rw $jobs $iodepth $job_name ${hdd}_rw.log "read" $ioengine
                    fi
                done
            done
        done
    done
done

echo " sequential random mix test"
date
for ioengine in libaio sync
do
    for rw in rw randrw
    do
        for mixread in 80
        do
            for blk_size in 1024k
            do
                for jobs in 1
                do
                    for queue_depth in 16
                    do
                        job_name="${ioengine}_${rw}_${blk_size}B_mix_read${mixread}_${jobs}job_QD${queue_depth}"
                        echo "$hdd $job_name test start"
                        fio --name=${job_name} --filename=/dev/$hdd --ioengine=${ioengine} --direct=1 --thread=1 --numjobs=${jobs} --iodepth=${queue_depth} --rw=${rw} --bs=${blk_size} --rwmixread=$mixread --runtime=$time --time_based=1 --size=100% --group_reporting --minimal >> "$hdd"_mix_data.log
                        # the queue depth of this loop is $queue_depth
                        fiocsv $blk_size ${ioengine}_${rw}_mixread=${mixread} $jobs $queue_depth $job_name ${hdd}_mix_data.log "mix"
                    done
                done
            done
        done
    done
done
```
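Each `fiocsv` call in the script runs sixteen `grep | tr | sed` pipelines over the log. The same eight numbers per direction can be taken in one awk pass. The following is a minimal sketch with the same field positions as above. The function name `terse_metrics` is hypothetical, and it assumes the job name sits in field 3 of the terse line.

```bash
# terse_metrics LOGFILE JOBNAME read|write
# Print "bw,iops,lat_min,lat_avg,lat_max,clat99,clat99.9,clat99.99" for one job,
# from the newest terse line whose jobname (field 3) equals JOBNAME.
terse_metrics() {
    local cols
    case $3 in
        read)  cols="7 8 38 40 39 30 32 34" ;;
        write) cols="48 49 79 81 80 71 73 75" ;;
        *) echo "usage: terse_metrics LOG JOB read|write" >&2; return 2 ;;
    esac
    awk -F ';' -v job="$2" -v cols="$cols" '
        $3 == job { line = $0 }
        END {
            if (line == "") exit 1          # job not found in the log
            split(line, f, ";")
            n = split(cols, c, " ")
            for (i = 1; i <= n; i++) {
                v = f[c[i]]
                sub(/^.*=/, "", v)          # "99.000000%=425721" -> "425721"
                printf "%s%s", v, (i < n ? "," : "\n")
            }
        }' "$1"
}
```

With the log from section I, `terse_metrics hdd_minimal.log test read` should print `104380,203,1107,63629.220231,584841,425721,497025,583008`.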

3. All HDDs are tested in parallel. The script starts one background run for every HDD except the OS disk:

```bash
#!/bin/bash
# Author: xiao xuetao
# date: 2022 11 18

if [ $# -ne 1 ]; then
    echo "You must input the time of each testcase (unit is second)"
    exit 1
fi
time=$1

# fiocsv BS IOTYPE JOBS QD JOBNAME LOGFILE read|write|mix
# Append one CSV row for JOBNAME, taken from its newest terse line in LOGFILE.
function fiocsv(){
    local line
    line=$(awk -F ';' -v job="$5" '$3 == job' "$6" | tail -n 1)
    # field N of that line; percentile fields look like "99.000000%=425721",
    # so keep only the part after "=" (the other fields contain no "=")
    field(){ echo "$line" | tr ';' '\n' | sed -n "${1}p" | awk -F "=" '{print $NF}'; }
    readbw=$(field 7)
    readiops=$(field 8)
    readlatmin=$(field 38)
    readlatavg=$(field 40)
    readlatmax=$(field 39)
    readclat99=$(field 30)
    readclat999=$(field 32)
    readclat9999=$(field 34)
    writebw=$(field 48)
    writeiops=$(field 49)
    writelatmin=$(field 79)
    writelatavg=$(field 81)
    writelatmax=$(field 80)
    writeclat99=$(field 71)
    writeclat999=$(field 73)
    writeclat9999=$(field 75)
    if [ "$7" = "read" ]; then
        echo "$1,$2,$3,$4,$readbw,$readiops,$readlatmin,$readlatavg,$readlatmax,$readclat99,$readclat999,$readclat9999" >> "${hdd}_re.csv"
    elif [ "$7" = "write" ]; then
        echo "$1,$2,$3,$4,$writebw,$writeiops,$writelatmin,$writelatavg,$writelatmax,$writeclat99,$writeclat999,$writeclat9999" >> "${hdd}_re.csv"
    elif [ "$7" = "mix" ]; then
        echo "$1,$2,$3,$4,read,$readbw,$readiops,$readlatmin,$readlatavg,$readlatmax,$readclat99,$readclat999,$readclat9999,write,$writebw,$writeiops,$writelatmin,$writelatavg,$writelatmax,$writeclat99,$writeclat999,$writeclat9999" >> "${hdd}_mix.csv"
    fi
}

# hddtest DEV: run the whole test matrix on /dev/DEV and collect its logs and CSVs
function hddtest(){
    local hdd=$1    # fiocsv, called from here, also sees this value (bash dynamic scoping)
    echo "Blocksize,IO type,Jobs,QueueDepth,Bandwidth(kB/s),IOPS,lat_min(us),lat_avg(us),lat_max(us),Qos(us)99%,Qos(us)99_9%,Qos(us)99_99%" > "${hdd}_re.csv"
    echo "Blocksize,IO type,Jobs,QueueDepth,model,Bandwidth(kB/s),IOPS,lat_min(us),lat_avg(us),lat_max(us),Qos(us)99%,Qos(us)99_9%,Qos(us)99_99%,model,Bandwidth(kB/s),IOPS,lat_min(us),lat_avg(us),lat_max(us),Qos(us)99%,Qos(us)99_9%,Qos(us)99_99%" > "${hdd}_mix.csv"
    date
    echo " sequential random write read test start"
    for ioengine in libaio sync
    do
        for rw in write read randwrite randread
        do
            for bs in 128k
            do
                for iodepth in 16
                do
                    for jobs in 1
                    do
                        date
                        echo "$hdd $rw $bs iodepth=$iodepth numjobs=$jobs test start"
                        job_name="${ioengine}_${bs}B_${rw}_${jobs}job_${iodepth}QD"
                        fio --name=$job_name --filename=/dev/$hdd --ioengine=${ioengine} --direct=1 --thread=1 --numjobs=${jobs} --iodepth=${iodepth} --rw=${rw} --bs=${bs} --runtime=$time --time_based=1 --size=100% --group_reporting --minimal >>${hdd}_rw.log
                        if [ "$rw" = "write" ] || [ "$rw" = "randwrite" ]; then
                            fiocsv $bs ${ioengine}_$rw $jobs $iodepth $job_name ${hdd}_rw.log "write" $ioengine
                        else
                            fiocsv $bs ${ioengine}_$rw $jobs $iodepth $job_name ${hdd}_rw.log "read" $ioengine
                        fi
                    done
                done
            done
        done
    done

    echo " sequential random mix test"
    date
    for ioengine in libaio sync
    do
        for rw in rw randrw
        do
            for mixread in 80
            do
                for blk_size in 1024k
                do
                    for jobs in 1
                    do
                        for queue_depth in 16
                        do
                            job_name="${ioengine}_${rw}_${blk_size}B_mix_read${mixread}_${jobs}job_QD${queue_depth}"
                            echo "$hdd $job_name test start"
                            fio --name=${job_name} --filename=/dev/$hdd --ioengine=${ioengine} --direct=1 --thread=1 --numjobs=${jobs} --iodepth=${queue_depth} --rw=${rw} --bs=${blk_size} --rwmixread=$mixread --runtime=$time --time_based=1 --size=100% --group_reporting --minimal >> "$hdd"_mix_data.log
                            fiocsv $blk_size ${ioengine}_${rw}_mixread=${mixread} $jobs $queue_depth $job_name ${hdd}_mix_data.log "mix"
                        done
                    done
                done
            done
        done
    done

    # -p: no error if the directories already exist from an earlier run
    mkdir -p "$hdd/test_data" "$hdd/test_log"
    mv "$hdd"_*.log "$hdd/test_log"
    mv "$hdd"_*.csv "$hdd/test_data"
}

echo "start all hdds fio test"
# OS disk: the whole-disk name behind the /boot partition (sdX, or nvmeXnY)
bootdisk=`df -h | grep -i boot|awk '{print $1}' | grep -iE "/dev/sd" | sed 's/[0-9]//g' |sort -u|awk -F "/" '{print $NF}'`
if test -z "$bootdisk"
then
    bootdisk=`df -h |grep -i boot| awk '{print $1}' | grep -iE "/dev/nvme" | sed 's/p[0-9]*$//' |sort -u|awk -F "/" '{print $NF}'`
    echo "os disk is $bootdisk"
else
    echo "os disk is $bootdisk"
fi

# one background hddtest per data disk
for hdd in `lsscsi |grep -i sd |grep -vw "$bootdisk"|awk -F "/" '{print $NF}'`
do
    hddtest "$hdd" &
done
wait    # keep the script running until every disk has finished
```
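The OS-disk detection above strips digits from the `/boot` device name and then filters the `lsscsi` output. You can try that logic without touching real disks if you isolate it in two small functions. The following is a minimal sketch. `parent_disk` and `data_disks` are hypothetical names, and the `lsscsi` input is assumed to end each line with the `/dev/...` node, as in the `awk -F "/"` call above. On systems with util-linux, `lsblk -no PKNAME /dev/sda1` reports a partition's parent disk directly, which is another option.

```bash
# parent_disk PARTITION -> whole-disk name: sda1 -> sda, nvme0n1p12 -> nvme0n1
parent_disk() {
    local part=${1##*/}                  # accept /dev/sda1 or sda1
    case $part in
        nvme*) printf '%s\n' "$part" | sed -E 's/p[0-9]+$//' ;;
        *)     printf '%s\n' "$part" | sed -E 's/[0-9]+$//' ;;
    esac
}

# data_disks BOOTDISK  (lsscsi output on stdin)
# Print every sdX device from the last column except BOOTDISK.
data_disks() {
    awk -v boot="$1" '$NF ~ /^\/dev\/sd/ {
        d = $NF
        sub(/^\/dev\//, "", d)
        if (d != boot) print d
    }'
}
```

Used together: `lsscsi | data_disks "$(parent_disk /dev/sda1)"`. Matching the whole device name avoids `grep -vw` edge cases, for example an empty `$bootdisk`.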

4. At this point the script is essentially complete. It places each disk's logs and CSVs in a directory named after that disk:

```text
ls sd*/test_*/
sdb/test_data/:
sdb_mix.csv sdb_re.csv

sdb/test_log/:
sdb_mix_data.log sdb_rw.log

sdd/test_data/:
sdd_mix.csv sdd_re.csv

sdd/test_log/:
sdd_mix_data.log sdd_rw.log

sde/test_data/:
sde_mix.csv sde_re.csv

sde/test_log/:
sde_mix_data.log sde_rw.log

sdf/test_data/:
sdf_mix.csv sdf_re.csv

sdf/test_log/:
sdf_mix_data.log sdf_rw.log

sdg/test_data/:
sdg_mix.csv sdg_re.csv

sdg/test_log/:
sdg_mix_data.log sdg_rw.log

sdh/test_data/:
sdh_mix.csv sdh_re.csv

sdh/test_log/:
sdh_mix_data.log sdh_rw.log

sdi/test_data/:
sdi_mix.csv sdi_re.csv

sdi/test_log/:
sdi_mix_data.log sdi_rw.log

sdj/test_data/:
sdj_mix.csv sdj_re.csv

sdj/test_log/:
sdj_mix_data.log sdj_rw.log
```
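This layout comes from the "one background job per disk, then `wait`" pattern. The following is a minimal sketch of that pattern that you can run without fio. `fake_test` is a hypothetical stand-in for `hddtest` that only writes a log and a CSV. Unlike a bare `wait`, waiting on each PID lets the caller know whether any disk's job failed.

```bash
# fake_test DEV: hypothetical stand-in for hddtest that only writes files
fake_test() {
    local hdd=$1
    echo "log for $hdd" > "${hdd}_rw.log"
    echo "csv for $hdd" > "${hdd}_re.csv"
    mkdir -p "$hdd/test_log" "$hdd/test_data"   # -p: safe on re-runs
    mv "${hdd}"_*.log "$hdd/test_log/"
    mv "${hdd}"_*.csv "$hdd/test_data/"
}

# run_all_disks DEV...: one background job per disk, then wait for all of them
run_all_disks() {
    local hdd pids=()
    for hdd in "$@"; do
        fake_test "$hdd" &
        pids+=("$!")
    done
    local rc=0 pid
    for pid in "${pids[@]}"; do
        wait "$pid" || rc=1                     # remember any failed disk job
    done
    return "$rc"
}
```

Each background job writes only files prefixed with its own disk name, so the jobs can share the working directory without interfering with each other.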

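5. The disks need to be compared with each other, so the per-disk CSV results are collected into one table, which makes them easier to read.

The maximum difference between disks is defined as (largest value - smallest value) / largest value, reported as a percentage.

awk does the arithmetic. For example, the maximum bandwidth difference for test case `num` is computed as follows. Bandwidth is column 6 of `result_${num}.csv`, a file that holds one row per disk and no header row.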

```bash
# every line of result_${num}.csv is a disk, so no line is skipped
maxbandwith=`cat result_${num}.csv |tr -s "," " "|awk '{print $6}'|awk 'BEGIN{ max = 0} {if ($1 > max) max = $1} END{printf "%.10f\n",max}'`
minbandwith=`cat result_${num}.csv |tr -s "," " "|awk '{print $6}'|awk 'BEGIN{ min ='$maxbandwith'} {if ($1 < min) min = $1} END{printf "%.10f\n",min}'`
contbandwith=`echo "$maxbandwith $minbandwith"|awk '{printf"%0.10f\n" ,$1-$2 }'`
bandwith=`awk 'BEGIN{printf "%.2f%%\n",('$contbandwith'/'$maxbandwith')*100}'`
```
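The four commands above can be collapsed into a single awk pass over one column. The following is a minimal sketch. `spread` is a hypothetical helper name. It assumes a comma-separated file with one row per disk and no header, like `result_${num}.csv`, and the column numbers match the ones used above. The first row seeds both max and min, and a column whose maximum is 0 prints `0.00%` instead of dividing by zero.

```bash
# spread CSV COLUMN -> (max - min) / max * 100 for that column, e.g. "12.34%"
spread() {
    awk -F ',' -v c="$2" '
        { x = $c + 0 }                      # " 104380 " -> 104380
        NR == 1 || x > max { max = x }
        NR == 1 || x < min { min = x }
        END {
            if (max == 0) print "0.00%"
            else printf "%.2f%%\n", (max - min) / max * 100
        }' "$1"
}
```

For example, `spread result_${num}.csv 6` gives the bandwidth spread and `spread result_${num}.csv 7` the IOPS spread. A loop over columns 6 to 13 then builds the whole `result` row.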

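6. Finally, process the read/write CSVs and merge them into new summary CSVs: `result.csv` for the read/write cases and `mix.csv` for the mixed cases. The complete script: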

```bash
#!/bin/bash
# Author: xiao xuetao
# date: 2022 11 18

if [ $# -ne 1 ]; then
    echo "You must input the time of each testcase (unit is second)"
    exit 1
fi
time=$1

# fiocsv BS IOTYPE JOBS QD JOBNAME LOGFILE read|write|mix
# Append one CSV row for JOBNAME, taken from its newest terse line in LOGFILE.
function fiocsv(){
    local line
    line=$(awk -F ';' -v job="$5" '$3 == job' "$6" | tail -n 1)
    # field N of that line; percentile fields look like "99.000000%=425721",
    # so keep only the part after "=" (the other fields contain no "=")
    field(){ echo "$line" | tr ';' '\n' | sed -n "${1}p" | awk -F "=" '{print $NF}'; }
    readbw=$(field 7)
    readiops=$(field 8)
    readlatmin=$(field 38)
    readlatavg=$(field 40)
    readlatmax=$(field 39)
    readclat99=$(field 30)
    readclat999=$(field 32)
    readclat9999=$(field 34)
    writebw=$(field 48)
    writeiops=$(field 49)
    writelatmin=$(field 79)
    writelatavg=$(field 81)
    writelatmax=$(field 80)
    writeclat99=$(field 71)
    writeclat999=$(field 73)
    writeclat9999=$(field 75)
    if [ "$7" = "read" ]; then
        echo "$1,$2,$3,$4,$readbw,$readiops,$readlatmin,$readlatavg,$readlatmax,$readclat99,$readclat999,$readclat9999" >> "${hdd}_re.csv"
    elif [ "$7" = "write" ]; then
        echo "$1,$2,$3,$4,$writebw,$writeiops,$writelatmin,$writelatavg,$writelatmax,$writeclat99,$writeclat999,$writeclat9999" >> "${hdd}_re.csv"
    elif [ "$7" = "mix" ]; then
        echo "$1,$2,$3,$4,read,$readbw,$readiops,$readlatmin,$readlatavg,$readlatmax,$readclat99,$readclat999,$readclat9999,write,$writebw,$writeiops,$writelatmin,$writelatavg,$writelatmax,$writeclat99,$writeclat999,$writeclat9999" >> "${hdd}_mix.csv"
    fi
}

# hddtest DEV: run the whole test matrix on /dev/DEV and collect its logs and CSVs
function hddtest(){
    local hdd=$1    # fiocsv, called from here, also sees this value (bash dynamic scoping)
    echo "Blocksize,IO type,Jobs,QueueDepth,Bandwidth(kB/s),IOPS,lat_min(us),lat_avg(us),lat_max(us),Qos(us)99%,Qos(us)99_9%,Qos(us)99_99%" > "${hdd}_re.csv"
    echo "Blocksize,IO type,Jobs,QueueDepth,model,Bandwidth(kB/s),IOPS,lat_min(us),lat_avg(us),lat_max(us),Qos(us)99%,Qos(us)99_9%,Qos(us)99_99%,model,Bandwidth(kB/s),IOPS,lat_min(us),lat_avg(us),lat_max(us),Qos(us)99%,Qos(us)99_9%,Qos(us)99_99%" > "${hdd}_mix.csv"
    date
    echo " sequential random write read test start"
    for ioengine in libaio sync
    do
        for rw in write read randwrite randread
        do
            for bs in 128k
            do
                for iodepth in 16
                do
                    for jobs in 1
                    do
                        date
                        echo "$hdd $rw $bs iodepth=$iodepth numjobs=$jobs test start"
                        job_name="${ioengine}_${bs}B_${rw}_${jobs}job_${iodepth}QD"
                        fio --name=$job_name --filename=/dev/$hdd --ioengine=${ioengine} --direct=1 --thread=1 --numjobs=${jobs} --iodepth=${iodepth} --rw=${rw} --bs=${bs} --runtime=$time --time_based=1 --size=100% --group_reporting --minimal >>${hdd}_rw.log
                        if [ "$rw" = "write" ] || [ "$rw" = "randwrite" ]; then
                            fiocsv $bs ${ioengine}_$rw $jobs $iodepth $job_name ${hdd}_rw.log "write" $ioengine
                        else
                            fiocsv $bs ${ioengine}_$rw $jobs $iodepth $job_name ${hdd}_rw.log "read" $ioengine
                        fi
                    done
                done
            done
        done
    done

    echo " sequential random mix test"
    date
    for ioengine in libaio sync
    do
        for rw in rw randrw
        do
            for mixread in 80
            do
                for blk_size in 1024k
                do
                    for jobs in 1
                    do
                        for queue_depth in 16
                        do
                            job_name="${ioengine}_${rw}_${blk_size}B_mix_read${mixread}_${jobs}job_QD${queue_depth}"
                            echo "$hdd $job_name test start"
                            fio --name=${job_name} --filename=/dev/$hdd --ioengine=${ioengine} --direct=1 --thread=1 --numjobs=${jobs} --iodepth=${queue_depth} --rw=${rw} --bs=${blk_size} --rwmixread=$mixread --runtime=$time --time_based=1 --size=100% --group_reporting --minimal >> "$hdd"_mix_data.log
                            fiocsv $blk_size ${ioengine}_${rw}_mixread=${mixread} $jobs $queue_depth $job_name ${hdd}_mix_data.log "mix"
                        done
                    done
                done
            done
        done
    done

    mkdir -p "$hdd/test_data" "$hdd/test_log"
    mv "$hdd"_*.log "$hdd/test_log"
    mv "$hdd"_*.csv "$hdd/test_data"
}

#echo "fix result of write read randread and randwrite "
# resultcsv: one block per test case (one row per disk) plus a "result" row
# holding (max - min) / max for each metric
function resultcsv(){
    echo "Device,Blocksize,IO type,Jobs,QueueDepth,Bandwidth(kB/s),IOPS,lat_min(us),lat_avg(us),lat_max(us),Qos(us)99%,Qos(us)99_9%,Qos(us)99_99%">result.csv
    # number of test cases = data rows (minus header) in the first disk's CSV
    for hdd in `lsscsi |grep -i sd |grep -vw "$bootdisk"|awk -F "/" '{print $NF}'|sed -n 1p`;do
        echo `cat $hdd/test_data/${hdd}_re.csv|sed 1,1d|wc -l` >num.log
    done
    num_block=`cat num.log`
    for num in $(seq $num_block);do
        for hdd in `lsscsi |grep -i sd |grep -vw "$bootdisk"|awk -F "/" '{print $NF}'`;
        do
            echo "$hdd, `cat $hdd/test_data/${hdd}_re.csv|sed 1,1d|sed -n ${num}p` ">>result_${num}.csv
        done
    done
    # result_${num}.csv has no header row: every line is a disk and takes part in max/min
    for num in $(seq $num_block);do
        maxbandwith=`cat result_${num}.csv |tr -s "," " "|awk '{print $6}'|awk 'BEGIN{ max = 0} {if ($1 > max) max = $1} END{printf "%.10f\n",max}'`
        minbandwith=`cat result_${num}.csv |tr -s "," " "|awk '{print $6}'|awk 'BEGIN{ min ='$maxbandwith'} {if ($1 < min) min = $1} END{printf "%.10f\n",min}'`
        contbandwith=`echo "$maxbandwith $minbandwith"|awk '{printf"%0.10f\n" ,$1-$2 }'`
        bandwith=`awk 'BEGIN{printf "%.2f%%\n",('$contbandwith'/'$maxbandwith')*100}'`
        maxiops=`cat result_${num}.csv |tr -s "," " "|awk '{print $7}'|awk 'BEGIN{ max = 0} {if ($1 > max) max = $1} END{printf "%.10f\n",max}'`
        miniops=`cat result_${num}.csv |tr -s "," " "|awk '{print $7}'|awk 'BEGIN{ min ='$maxiops'} {if ($1 < min) min = $1} END{printf "%.10f\n",min}'`
        contiops=`echo "$maxiops $miniops"|awk '{printf"%0.10f\n" ,$1-$2 }'`
        iops=`awk 'BEGIN{printf "%.2f%%\n",('$contiops'/'$maxiops')*100}'`
        maxlatmin=`cat result_${num}.csv |tr -s "," " "|awk '{print $8}'|awk 'BEGIN{ max = 0} {if ($1 > max) max = $1} END{printf "%.10f\n",max}'`
        minlatmin=`cat result_${num}.csv |tr -s "," " "|awk '{print $8}'|awk 'BEGIN{ min ='$maxlatmin'} {if ($1 < min) min = $1} END{printf "%.10f\n",min}'`
        contlatmin=`echo "$maxlatmin $minlatmin"|awk '{printf"%0.10f\n" ,$1-$2 }'`
        latmin=`awk 'BEGIN{printf "%.2f%%\n",('$contlatmin'/'$maxlatmin')*100}'`
        maxavg=`cat result_${num}.csv |tr -s "," " "|awk '{print $9}'|awk 'BEGIN{ max = 0} {if ($1 > max) max = $1} END{printf "%.10f\n",max}'`
        minavg=`cat result_${num}.csv |tr -s "," " "|awk '{print $9}'|awk 'BEGIN{ min ='$maxavg'} {if ($1 < min) min = $1} END{printf "%.10f\n",min}'`
        contavg=`echo "$maxavg $minavg"|awk '{printf"%0.10f\n" ,$1-$2 }'`
        avg=`awk 'BEGIN{printf "%.2f%%\n",('$contavg'/'$maxavg')*100}'`
        maxlast=`cat result_${num}.csv |tr -s "," " "|awk '{print $10}'|awk 'BEGIN{ max = 0} {if ($1 > max) max = $1} END{printf "%.10f\n",max}'`
        minlast=`cat result_${num}.csv |tr -s "," " "|awk '{print $10}'|awk 'BEGIN{ min ='$maxlast'} {if ($1 < min) min = $1} END{printf "%.10f\n",min}'`
        contlast=`echo "$maxlast $minlast"|awk '{printf"%0.10f\n" ,$1-$2 }'`
        last=`awk 'BEGIN{printf "%.2f%%\n",('$contlast'/'$maxlast')*100}'`
        max99lat=`cat result_${num}.csv |tr -s "," " "|awk '{print $11}'|awk 'BEGIN{ max = 0} {if ($1 > max) max = $1} END{printf "%.10f\n",max}'`
        min99lat=`cat result_${num}.csv |tr -s "," " "|awk '{print $11}'|awk 'BEGIN{ min ='$max99lat'} {if ($1 < min) min = $1} END{printf "%.10f\n",min}'`
        cont99lat=`echo "$max99lat $min99lat"|awk '{printf"%0.10f\n" ,$1-$2 }'`
        lat99=`awk 'BEGIN{printf "%.2f%%\n",('$cont99lat'/'$max99lat')*100}'`
        max999lat=`cat result_${num}.csv |tr -s "," " "|awk '{print $12}'|awk 'BEGIN{ max = 0} {if ($1 > max) max = $1} END{printf "%.10f\n",max}'`
        min999lat=`cat result_${num}.csv |tr -s "," " "|awk '{print $12}'|awk 'BEGIN{ min ='$max999lat'} {if ($1 < min) min = $1} END{printf "%.10f\n",min}'`
        cont999lat=`echo "$max999lat $min999lat"|awk '{printf"%0.10f\n" ,$1-$2 }'`
        lat999=`awk 'BEGIN{printf "%.2f%%\n",('$cont999lat'/'$max999lat')*100}'`
        max9999lat=`cat result_${num}.csv |tr -s "," " "|awk '{print $13}'|awk 'BEGIN{ max = 0} {if ($1 > max) max = $1} END{printf "%.10f\n",max}'`
        min9999lat=`cat result_${num}.csv |tr -s "," " "|awk '{print $13}'|awk 'BEGIN{ min ='$max9999lat'} {if ($1 < min) min = $1} END{printf "%.10f\n",min}'`
        cont9999lat=`echo "$max9999lat $min9999lat"|awk '{printf"%0.10f\n" ,$1-$2 }'`
        lat9999=`awk 'BEGIN{printf "%.2f%%\n",('$cont9999lat'/'$max9999lat')*100}'`
        echo "result, ==,==,==,==, $bandwith,$iops, $latmin, $avg, $last, $lat99, $lat999, $lat9999" >>result_${num}.csv
    done
}

#echo "fix result of rwmix randrwmix"
# mixcsv: same as resultcsv for the mixed cases (read columns 7-14, write columns 16-23)
function mixcsv(){
    echo "Device,Blocksize,IO type,Jobs,QueueDepth,model,Bandwidth(kB/s),IOPS,lat_min(us),lat_avg(us),lat_max(us),Qos(us)99%,Qos(us)99_9%,Qos(us)99_99%,model,Bandwidth(kB/s),IOPS,lat_min(us),lat_avg(us),lat_max(us),Qos(us)99%,Qos(us)99_9%,Qos(us)99_99%">mix.csv
    for hdd in `lsscsi |grep -i sd |grep -vw "$bootdisk"|awk -F "/" '{print $NF}'|sed -n 1p`;do
        echo `cat $hdd/test_data/${hdd}_mix.csv|sed 1,1d|wc -l` >mix.log
    done
    num_mix=`cat mix.log`
    for num in $(seq $num_mix);do
        for hdd in `lsscsi |grep -i sd |grep -vw "$bootdisk"|awk -F "/" '{print $NF}'`;
        do
            echo "$hdd, `cat $hdd/test_data/${hdd}_mix.csv|sed 1,1d|sed -n ${num}p` ">>mix_${num}.csv
        done
    done
    # mix_${num}.csv has no header row either
    for num in $(seq $num_mix);do
        readmaxbandwith=`cat mix_${num}.csv |tr -s "," " "|awk '{print $7}'|awk 'BEGIN{ max = 0} {if ($1 > max) max = $1} END{printf "%.10f\n",max}'`
        readminbandwith=`cat mix_${num}.csv |tr -s "," " "|awk '{print $7}'|awk 'BEGIN{ min ='$readmaxbandwith'} {if ($1 < min) min = $1} END{printf "%.10f\n",min}'`
        readcontbandwith=`echo "$readmaxbandwith $readminbandwith"|awk '{printf"%0.10f\n" ,$1-$2 }'`
        readbandwith=`awk 'BEGIN{printf "%.2f%%\n",('$readcontbandwith'/'$readmaxbandwith')*100}'`
        readmaxiops=`cat mix_${num}.csv |tr -s "," " "|awk '{print $8}'|awk 'BEGIN{ max = 0} {if ($1 > max) max = $1} END{printf "%.10f\n",max}'`
        readminiops=`cat mix_${num}.csv |tr -s "," " "|awk '{print $8}'|awk 'BEGIN{ min ='$readmaxiops'} {if ($1 < min) min = $1} END{printf "%.10f\n",min}'`
        readcontiops=`echo "$readmaxiops $readminiops"|awk '{printf"%0.10f\n" ,$1-$2 }'`
        readiops=`awk 'BEGIN{printf "%.2f%%\n",('$readcontiops'/'$readmaxiops')*100}'`
        readmaxlatmin=`cat mix_${num}.csv |tr -s "," " "|awk '{print $9}'|awk 'BEGIN{ max = 0} {if ($1 > max) max = $1} END{printf "%.10f\n",max}'`
        readminlatmin=`cat mix_${num}.csv |tr -s "," " "|awk '{print $9}'|awk 'BEGIN{ min ='$readmaxlatmin'} {if ($1 < min) min = $1} END{printf "%.10f\n",min}'`
        readcontlatmin=`echo "$readmaxlatmin $readminlatmin"|awk '{printf"%0.10f\n" ,$1-$2 }'`
        readlatmin=`awk 'BEGIN{printf "%.2f%%\n",('$readcontlatmin'/'$readmaxlatmin')*100}'`
        readmaxavg=`cat mix_${num}.csv |tr -s "," " "|awk '{print $10}'|awk 'BEGIN{ max = 0} {if ($1 > max) max = $1} END{printf "%.10f\n",max}'`
        readminavg=`cat mix_${num}.csv |tr -s "," " "|awk '{print $10}'|awk 'BEGIN{ min ='$readmaxavg'} {if ($1 < min) min = $1} END{printf "%.10f\n",min}'`
        readcontavg=`echo "$readmaxavg $readminavg"|awk '{printf"%0.10f\n" ,$1-$2 }'`
        readavg=`awk 'BEGIN{printf "%.2f%%\n",('$readcontavg'/'$readmaxavg')*100}'`
        readmaxlast=`cat mix_${num}.csv |tr -s "," " "|awk '{print $11}'|awk 'BEGIN{ max = 0} {if ($1 > max) max = $1} END{printf "%.10f\n",max}'`
        readminlast=`cat mix_${num}.csv |tr -s "," " "|awk '{print $11}'|awk 'BEGIN{ min ='$readmaxlast'} {if ($1 < min) min = $1} END{printf "%.10f\n",min}'`
        readcontlast=`echo "$readmaxlast $readminlast"|awk '{printf"%0.10f\n" ,$1-$2 }'`
        readlast=`awk 'BEGIN{printf "%.2f%%\n",('$readcontlast'/'$readmaxlast')*100}'`
        readmax99lat=`cat mix_${num}.csv |tr -s "," " "|awk '{print $12}'|awk 'BEGIN{ max = 0} {if ($1 > max) max = $1} END{printf "%.10f\n",max}'`
        readmin99lat=`cat mix_${num}.csv |tr -s "," " "|awk '{print $12}'|awk 'BEGIN{ min ='$readmax99lat'} {if ($1 < min) min = $1} END{printf "%.10f\n",min}'`
        readcont99lat=`echo "$readmax99lat $readmin99lat"|awk '{printf"%0.10f\n" ,$1-$2 }'`
        readlat99=`awk 'BEGIN{printf "%.2f%%\n",('$readcont99lat'/'$readmax99lat')*100}'`
        readmax999lat=`cat mix_${num}.csv |tr -s "," " "|awk '{print $13}'|awk 'BEGIN{ max = 0} {if ($1 > max) max = $1} END{printf "%.10f\n",max}'`
        readmin999lat=`cat mix_${num}.csv |tr -s "," " "|awk '{print $13}'|awk 'BEGIN{ min ='$readmax999lat'} {if ($1 < min) min = $1} END{printf "%.10f\n",min}'`
        readcont999lat=`echo "$readmax999lat $readmin999lat"|awk '{printf"%0.10f\n" ,$1-$2 }'`
        readlat999=`awk 'BEGIN{printf "%.2f%%\n",('$readcont999lat'/'$readmax999lat')*100}'`
        readmax9999lat=`cat mix_${num}.csv |tr -s "," " "|awk '{print $14}'|awk 'BEGIN{ max = 0} {if ($1 > max) max = $1} END{printf "%.10f\n",max}'`
        readmin9999lat=`cat mix_${num}.csv |tr -s "," " "|awk '{print $14}'|awk 'BEGIN{ min ='$readmax9999lat'} {if ($1 < min) min = $1} END{printf "%.10f\n",min}'`
        readcont9999lat=`echo "$readmax9999lat $readmin9999lat"|awk '{printf"%0.10f\n" ,$1-$2 }'`
        readlat9999=`awk 'BEGIN{printf "%.2f%%\n",('$readcont9999lat'/'$readmax9999lat')*100}'`
        writemaxbandwith=`cat mix_${num}.csv |tr -s "," " "|awk '{print $16}'|awk 'BEGIN{ max = 0} {if ($1 > max) max = $1} END{printf "%.10f\n",max}'`
        writeminbandwith=`cat mix_${num}.csv |tr -s "," " "|awk '{print $16}'|awk 'BEGIN{ min ='$writemaxbandwith'} {if ($1 < min) min = $1} END{printf "%.10f\n",min}'`
        writecontbandwith=`echo "$writemaxbandwith $writeminbandwith"|awk '{printf"%0.10f\n" ,$1-$2 }'`
        writebandwith=`awk 'BEGIN{printf "%.2f%%\n",('$writecontbandwith'/'$writemaxbandwith')*100}'`
        writemaxiops=`cat mix_${num}.csv |tr -s "," " "|awk '{print $17}'|awk 'BEGIN{ max = 0} {if ($1 > max) max = $1} END{printf "%.10f\n",max}'`
        writeminiops=`cat mix_${num}.csv |tr -s "," " "|awk '{print $17}'|awk 'BEGIN{ min ='$writemaxiops'} {if ($1 < min) min = $1} END{printf "%.10f\n",min}'`
        writecontiops=`echo "$writemaxiops $writeminiops"|awk '{printf"%0.10f\n" ,$1-$2 }'`
        writeiops=`awk 'BEGIN{printf "%.2f%%\n",('$writecontiops'/'$writemaxiops')*100}'`
        writemaxlatmin=`cat mix_${num}.csv |tr -s "," " "|awk '{print $18}'|awk 'BEGIN{ max = 0} {if ($1 > max) max = $1} END{printf "%.10f\n",max}'`
        writeminlatmin=`cat mix_${num}.csv |tr -s "," " "|awk '{print $18}'|awk 'BEGIN{ min ='$writemaxlatmin'} {if ($1 < min) min = $1} END{printf "%.10f\n",min}'`
        writecontlatmin=`echo "$writemaxlatmin $writeminlatmin"|awk '{printf"%0.10f\n" ,$1-$2 }'`
        writelatmin=`awk 'BEGIN{printf "%.2f%%\n",('$writecontlatmin'/'$writemaxlatmin')*100}'`
        writemaxavg=`cat mix_${num}.csv |tr -s "," " "|awk '{print $19}'|awk 'BEGIN{ max = 0} {if ($1 > max) max = $1} END{printf "%.10f\n",max}'`
        writeminavg=`cat mix_${num}.csv |tr -s "," " "|awk '{print $19}'|awk 'BEGIN{ min ='$writemaxavg'} {if ($1 < min) min = $1} END{printf "%.10f\n",min}'`
        writecontavg=`echo "$writemaxavg $writeminavg"|awk '{printf"%0.10f\n" ,$1-$2 }'`
        writeavg=`awk 'BEGIN{printf "%.2f%%\n",('$writecontavg'/'$writemaxavg')*100}'`
        writemaxlast=`cat mix_${num}.csv |tr -s "," " "|awk '{print $20}'|awk 'BEGIN{ max = 0} {if ($1 > max) max = $1} END{printf "%.10f\n",max}'`
        writeminlast=`cat mix_${num}.csv |tr -s "," " "|awk '{print $20}'|awk 'BEGIN{ min ='$writemaxlast'} {if ($1 < min) min = $1} END{printf "%.10f\n",min}'`
        writecontlast=`echo "$writemaxlast $writeminlast"|awk '{printf"%0.10f\n" ,$1-$2 }'`
        writelast=`awk 'BEGIN{printf "%.2f%%\n",('$writecontlast'/'$writemaxlast')*100}'`
        writemax99lat=`cat mix_${num}.csv |tr -s "," " "|awk '{print $21}'|awk 'BEGIN{ max = 0} {if ($1 > max) max = $1} END{printf "%.10f\n",max}'`
        writemin99lat=`cat mix_${num}.csv |tr -s "," " "|awk '{print $21}'|awk 'BEGIN{ min ='$writemax99lat'} {if ($1 < min) min = $1} END{printf "%.10f\n",min}'`
        writecont99lat=`echo "$writemax99lat $writemin99lat"|awk '{printf"%0.10f\n" ,$1-$2 }'`
        writelat99=`awk 'BEGIN{printf "%.2f%%\n",('$writecont99lat'/'$writemax99lat')*100}'`
        writemax999lat=`cat mix_${num}.csv |tr -s "," " "|awk '{print $22}'|awk 'BEGIN{ max = 0} {if ($1 > max) max = $1} END{printf "%.10f\n",max}'`
        writemin999lat=`cat mix_${num}.csv |tr -s "," " "|awk '{print $22}'|awk 'BEGIN{ min ='$writemax999lat'} {if ($1 < min) min = $1} END{printf "%.10f\n",min}'`
        writecont999lat=`echo "$writemax999lat $writemin999lat"|awk '{printf"%0.10f\n" ,$1-$2 }'`
        writelat999=`awk 'BEGIN{printf "%.2f%%\n",('$writecont999lat'/'$writemax999lat')*100}'`
        writemax9999lat=`cat mix_${num}.csv |tr -s "," " "|awk '{print $23}'|awk 'BEGIN{ max = 0} {if ($1 > max) max = $1} END{printf "%.10f\n",max}'`
        writemin9999lat=`cat mix_${num}.csv |tr -s "," " "|awk '{print $23}'|awk 'BEGIN{ min ='$writemax9999lat'} {if ($1 < min) min = $1} END{printf "%.10f\n",min}'`
        writecont9999lat=`echo "$writemax9999lat $writemin9999lat"|awk '{printf"%0.10f\n" ,$1-$2 }'`
        writelat9999=`awk 'BEGIN{printf "%.2f%%\n",('$writecont9999lat'/'$writemax9999lat')*100}'`
        echo "result, ==,==,==,==,read, $readbandwith,$readiops, $readlatmin, $readavg, $readlast, $readlat99, $readlat999, $readlat9999, write,$writebandwith,$writeiops, $writelatmin, $writeavg, $writelast, $writelat99, $writelat999, $writelat9999 " >>mix_${num}.csv
    done
    # append every per-test-case block to the final tables
    for num in $(seq $num_block);do
        cat result_${num}.csv >>result.csv
    done
    for num in $(seq $num_mix);do
        cat mix_${num}.csv >>mix.csv
    done
}

echo "start all hdds fio test"
bootdisk=`df -h | grep -i boot|awk '{print $1}' | grep -iE "/dev/sd" | sed 's/[0-9]//g' |sort -u|awk -F "/" '{print $NF}'`
if test -z "$bootdisk"
then
    bootdisk=`df -h |grep -i boot| awk '{print $1}' | grep -iE "/dev/nvme" | sed 's/p[0-9]*$//' |sort -u|awk -F "/" '{print $NF}'`
    echo "os disk is $bootdisk"
else
    echo "os disk is $bootdisk"
fi

for hdd in `lsscsi |grep -i sd |grep -vw "$bootdisk"|awk -F "/" '{print $NF}'`
do
    hddtest "$hdd" &
done
wait

echo "all tests done, building the final CSVs"
resultcsv
mixcsv
rm -f result_[0-9]*.csv
rm -f mix_[0-9]*.csv
mkdir -p result
mv *.csv result
echo "test has been finished"
```
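`resultcsv` and `mixcsv` take the number of test cases from the first disk only (`sed -n 1p`). If one disk's run was cut short, its rows in the merged table would be blank. The following is a minimal sketch of a pre-merge check, assuming the `DISK/test_data/DISK_re.csv` and `DISK_mix.csv` layout shown in step 4. `check_rows` is a hypothetical helper. It could be called after `wait`, for example as `check_rows re sdb sdd sde`.

```bash
# check_rows KIND DISK...   (KIND is "re" or "mix")
# Confirm every DISK/test_data/DISK_KIND.csv has the same number of data rows.
check_rows() {
    local kind=$1 hdd f n expected="" rc=0
    shift
    for hdd in "$@"; do
        f="$hdd/test_data/${hdd}_${kind}.csv"
        if [ ! -f "$f" ]; then
            echo "$hdd: missing $f" >&2
            rc=1
            continue
        fi
        n=$(( $(wc -l < "$f") - 1 ))          # data rows = lines minus header
        if [ -z "$expected" ]; then
            expected=$n                       # first disk sets the reference
        elif [ "$n" -ne "$expected" ]; then
            echo "$hdd: $n rows, expected $expected" >&2
            rc=1
        fi
    done
    return "$rc"
}
```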

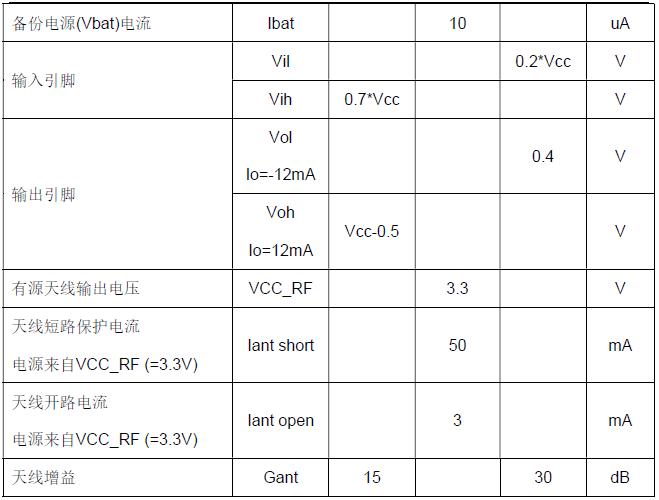

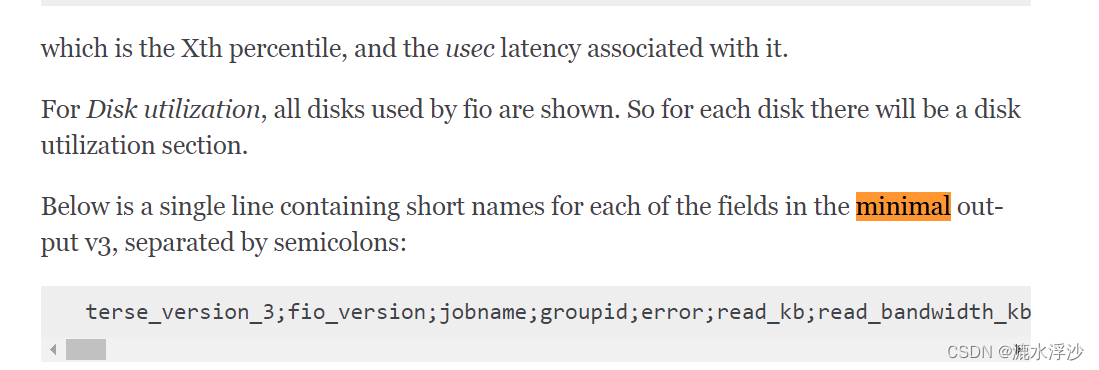

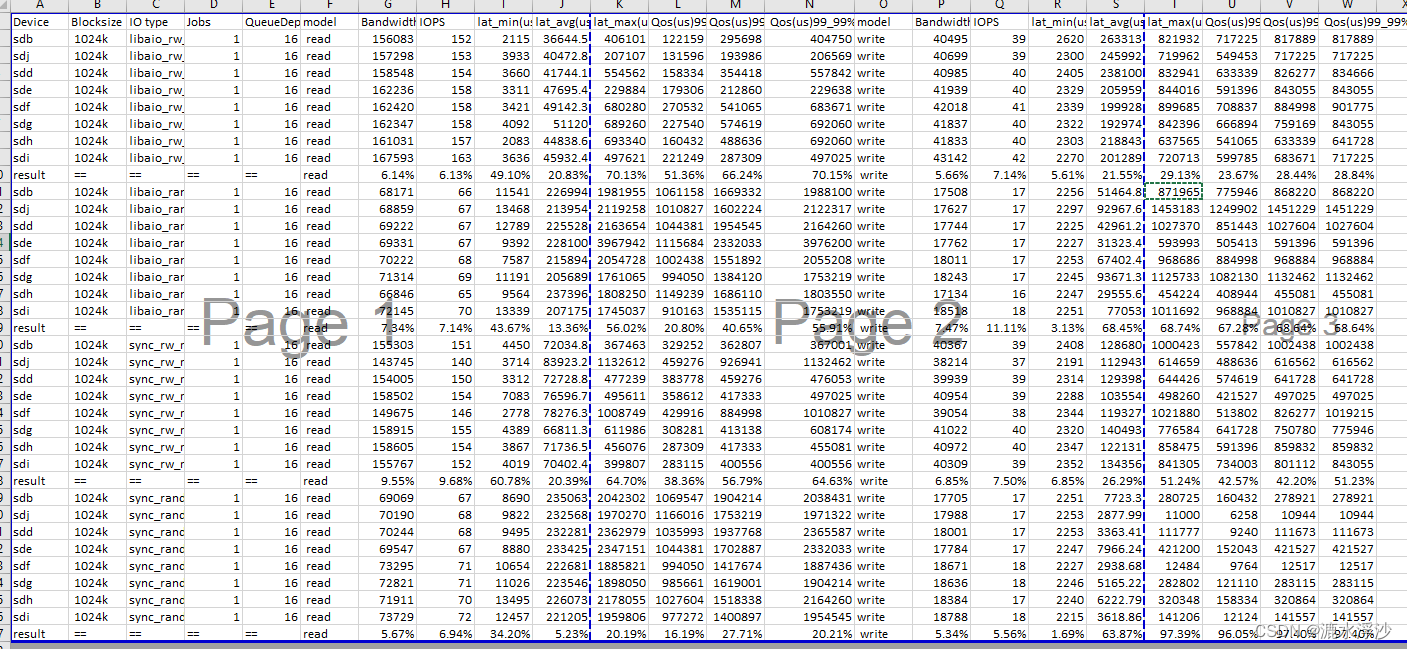

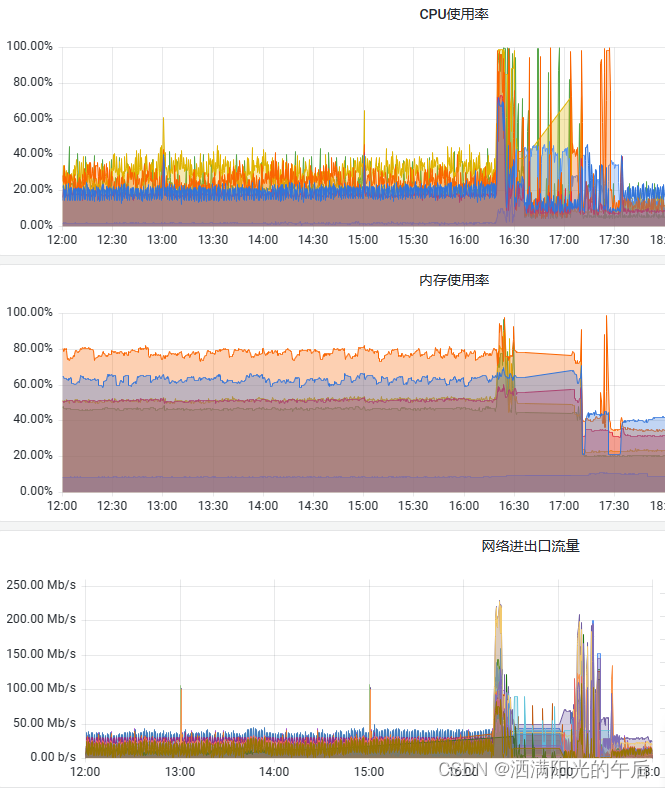

7. Final results:

OK, that's it.
