主页: https://google.github.io/mediapipe/solutions/holistic.html

MediaPipe Holistic pipelines 集成了姿势、面部和手部组件的独立模型,每个组件都针对其特定领域进行了优化,每个组件的推断输入图不同。

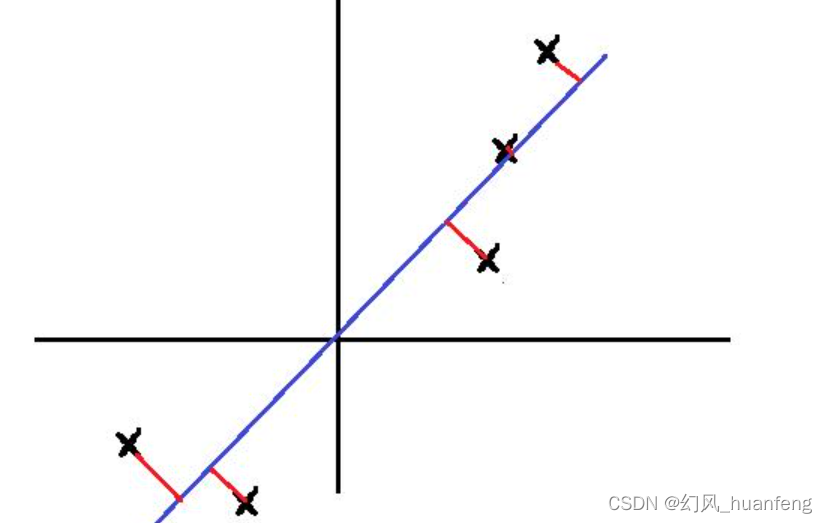

MediaPipe Holistic 首先通过 BlazePose 的姿势检测器和后续的关键点模型来估计人的姿势。然后,利用推断出的姿势关键点,为每只手和脸部推导出三个感兴趣区域(ROI)裁剪,并采用 re-crop 模型来改进 ROI

然后,pipelines 将全分辨率输入帧上裁剪这些 ROI,并应用特定任务的模型来估计它们对应的关键点。最后,将所有关键点与姿势模型的关键点合并,得出全部 540 多个关键点。

实现过程 🚀

编译demo

-

bazel build -c opt --define MEDIAPIPE_DISABLE_GPU=1 --action_env PYTHON_BIN_PATH="C:xxx//python.exe" mediapipe/examples/desktop/holistic_tracking:holistic_tracking_cpu --verbose_failures bazel-bin\mediapipe\examples\desktop\holistic_tracking\holistic_tracking_cpu --calculator_graph_config_file=mediapipe/graphs/holistic_tracking/holistic_tracking_cpu.pbtxt -

如果运行成功,则默认会调用摄像头

编写测试demo

-

环境配置参考:

- https://blog.csdn.net/sunnyblogs/article/details/118891249

- https://blog.csdn.net/haiyangyunbao813/article/details/119192951

-

修改

mediapipe\examples\desktop\holistic_tracking中BUILD -

增加个测试程序节点

cc_binary( name = "holistic_tracking_sample", srcs = ["main.cpp","holistic_detect.h","holistic_detect.cpp"], # linkshared=True, deps = [ "//mediapipe/graphs/holistic_tracking:holistic_tracking_cpu_graph_deps", ], ) -

holistic_detect.h

#ifndef _HOLISTIC_DETECT_H_ #define _HOLISTIC_DETECT_H_ #include <cstdlib> #include "absl/flags/flag.h" #include "absl/flags/parse.h" #include "mediapipe/framework/calculator_framework.h" #include "mediapipe/framework/formats/image_frame.h" #include "mediapipe/framework/formats/image_frame_opencv.h" #include "mediapipe/framework/port/file_helpers.h" #include "mediapipe/framework/port/opencv_highgui_inc.h" #include "mediapipe/framework/port/opencv_imgproc_inc.h" #include "mediapipe/framework/port/opencv_video_inc.h" #include "mediapipe/framework/port/parse_text_proto.h" #include "mediapipe/framework/port/status.h" #include "mediapipe/framework/formats/detection.pb.h" #include "mediapipe/framework/formats/landmark.pb.h" #include "mediapipe/framework/formats/rect.pb.h" #include "mediapipe/framework/formats/classification.pb.h" #include "mediapipe/framework/port/ret_check.h" namespace mp { using holistic_callback_t = std::function<void()>; class HolisticDetect { public: int initModel(const char* model_path) noexcept; int detectVideoCamera(holistic_callback_t call); int detectVideo(const char* video_path, int show_image, holistic_callback_t call); int detectVideo(); int release(); private: absl::Status initGraph(const char* model_path); absl::Status runMPPGraphVideo(const char* video_path, int show_image, holistic_callback_t call); absl::Status releaseGraph(); void showFaceLandmarks(cv::Mat& image); void showPoseLandmarks(cv::Mat& image); void showLHandLandmarks(cv::Mat& image); void showRHandLandmarks(cv::Mat& image); const char* kInputStream = "input_video"; const char* kOutputStream = "output_video"; const char* kWindowName = "MediaPipe"; const char* kOutputLandmarks = "face_landmarks"; const char* kOutputPoseLandmarks = "pose_landmarks"; const char* kOutputLHandLandmarks = "left_hand_landmarks"; const char* kOutputRHandLandmarks = "right_hand_landmarks"; bool init_ = false; mediapipe::CalculatorGraph graph_; const float kMinFloat = std::numeric_limits<float>::lowest(); const float kMaxFloat = std::numeric_limits<float>::max(); std::unique_ptr<mediapipe::OutputStreamPoller> pPoller_; std::unique_ptr<mediapipe::OutputStreamPoller> pPollerFaceLandmarks_; std::unique_ptr<mediapipe::OutputStreamPoller> pPollerPoseLandmarks_; std::unique_ptr<mediapipe::OutputStreamPoller> pPollerLHandLandmarks_; std::unique_ptr<mediapipe::OutputStreamPoller> pPollerRHandLandmarks_; }; } #endif -

holistic_detect.cpp

#include "holistic_detect.h" using namespace mp; int HolisticDetect::initModel(const char* model_path) noexcept { absl::Status run_status = initGraph(model_path); if (!run_status.ok()) return -1; init_ = true; return 1; } int HolisticDetect::detectVideoCamera(holistic_callback_t call) { if (!init_) return -1; absl::Status run_status = runMPPGraphVideo("", true, call); return run_status.ok() ? 1 : -1; } int HolisticDetect::detectVideo(const char* video_path, int show_image, holistic_callback_t call) { if (!init_) return -1; absl::Status run_status = runMPPGraphVideo(video_path, show_image, call); return run_status.ok() ? 1 : -1; } int HolisticDetect::detectVideo() { if (!init_) return -1; absl::Status run_status = runMPPGraphVideo("", 1, nullptr); return run_status.ok() ? 1 : -1; } int HolisticDetect::release() { absl::Status run_status = releaseGraph(); return run_status.ok() ? 1 : -1; } absl::Status HolisticDetect::initGraph(const char* model_path) { std::string calculator_graph_config_contents; MP_RETURN_IF_ERROR(mediapipe::file::GetContents(model_path, &calculator_graph_config_contents)); mediapipe::CalculatorGraphConfig config = mediapipe::ParseTextProtoOrDie<mediapipe::CalculatorGraphConfig>( calculator_graph_config_contents); MP_RETURN_IF_ERROR(graph_.Initialize(config)); auto sop = graph_.AddOutputStreamPoller(kOutputStream); assert(sop.ok()); pPoller_ = std::make_unique<mediapipe::OutputStreamPoller>(std::move(sop.value())); // 脸部特征 mediapipe::StatusOrPoller faceLandmark = graph_.AddOutputStreamPoller(kOutputLandmarks); assert(faceLandmark.ok()); pPollerFaceLandmarks_ = std::make_unique<mediapipe::OutputStreamPoller>(std::move(faceLandmark.value())); // 姿态特征 mediapipe::StatusOrPoller poseLandmark = graph_.AddOutputStreamPoller(kOutputPoseLandmarks); assert(poseLandmark.ok()); pPollerPoseLandmarks_ = std::make_unique<mediapipe::OutputStreamPoller>(std::move(poseLandmark.value())); // 左手特征点 mediapipe::StatusOrPoller leftHandLandmark = graph_.AddOutputStreamPoller(kOutputLHandLandmarks); assert(leftHandLandmark.ok()); pPollerLHandLandmarks_ = std::make_unique<mediapipe::OutputStreamPoller>(std::move(leftHandLandmark.value())); // 右手特征点 mediapipe::StatusOrPoller rightHandLandmark = graph_.AddOutputStreamPoller(kOutputRHandLandmarks); assert(rightHandLandmark.ok()); pPollerRHandLandmarks_ = std::make_unique<mediapipe::OutputStreamPoller>(std::move(rightHandLandmark.value())); MP_RETURN_IF_ERROR(graph_.StartRun({})); std::cout << "======= graph_ StartRun success ============" << std::endl; return absl::OkStatus(); } void HolisticDetect::showFaceLandmarks(cv::Mat& image) { mediapipe::Packet packet_landmarks; if (pPollerFaceLandmarks_->QueueSize() == 0 || !pPollerFaceLandmarks_->Next(&packet_landmarks)) return; // 468 face landmark auto& output_landmarks = packet_landmarks.Get<mediapipe::NormalizedLandmarkList>(); for (int i = 0; i < output_landmarks.landmark_size(); ++i) { const mediapipe::NormalizedLandmark landmark = output_landmarks.landmark(i); float x = landmark.x() * image.cols; float y = landmark.y() * image.rows; //cv::circle(image, cv::Point(x, y), 2, cv::Scalar(0, 255, 0)); // todo // ... } } void HolisticDetect::showPoseLandmarks(cv::Mat& image) { mediapipe::Packet packet_landmarks; if (pPollerPoseLandmarks_->QueueSize() == 0 || !pPollerPoseLandmarks_->Next(&packet_landmarks)) return; // 33 pose landmark auto& output_landmarks = packet_landmarks.Get<mediapipe::NormalizedLandmarkList>(); for (int i = 0; i < output_landmarks.landmark_size(); ++i) { const mediapipe::NormalizedLandmark landmark = output_landmarks.landmark(i); float x = landmark.x() * image.cols; float y = landmark.y() * image.rows; //cv::circle(image, cv::Point(x, y), 2, cv::Scalar(0, 255, 0)); // todo // ... } } void HolisticDetect::showLHandLandmarks(cv::Mat& image) { mediapipe::Packet packet_landmarks; if (pPollerLHandLandmarks_->QueueSize() == 0 || !pPollerLHandLandmarks_->Next(&packet_landmarks)) return; // 21 left hand landmark auto& output_landmarks = packet_landmarks.Get<mediapipe::NormalizedLandmarkList>(); for (int i = 0; i < output_landmarks.landmark_size(); ++i) { const mediapipe::NormalizedLandmark landmark = output_landmarks.landmark(i); float x = landmark.x() * image.cols; float y = landmark.y() * image.rows; //cv::circle(image, cv::Point(x, y), 2, cv::Scalar(0, 255, 0)); // todo // ... } } void HolisticDetect::showRHandLandmarks(cv::Mat& image) { mediapipe::Packet packet_landmarks; if (pPollerRHandLandmarks_->QueueSize() == 0 || !pPollerRHandLandmarks_->Next(&packet_landmarks)) return; // 21 right hand landmark auto& output_landmarks = packet_landmarks.Get<mediapipe::NormalizedLandmarkList>(); for (int i = 0; i < output_landmarks.landmark_size(); ++i) { const mediapipe::NormalizedLandmark landmark = output_landmarks.landmark(i); float x = landmark.x() * image.cols; float y = landmark.y() * image.rows; //cv::circle(image, cv::Point(x, y), 2, cv::Scalar(0, 255, 0)); // todo // ... } } absl::Status HolisticDetect::runMPPGraphVideo(const char* video_path, int show_image, holistic_callback_t call) { cv::VideoCapture capture(video_path); RET_CHECK(capture.isOpened()); #if (CV_MAJOR_VERSION >= 3) && (CV_MINOR_VERSION >= 2) capture.set(cv::CAP_PROP_FRAME_WIDTH, 640); capture.set(cv::CAP_PROP_FRAME_HEIGHT, 480); capture.set(cv::CAP_PROP_FPS, 30); #endif int tmp = 0; bool grab_frames = true; while (grab_frames) { // Capture opencv camera or video frame. cv::Mat camera_frame_raw; capture >> camera_frame_raw; if (camera_frame_raw.empty()) break; cv::Mat camera_frame; cv::cvtColor(camera_frame_raw, camera_frame, cv::COLOR_BGR2RGB); cv::flip(camera_frame, camera_frame, /*flipcode=HORIZONTAL*/ 1); // Wrap Mat into an ImageFrame. auto input_frame = absl::make_unique<mediapipe::ImageFrame>( mediapipe::ImageFormat::SRGB, camera_frame.cols, camera_frame.rows, mediapipe::ImageFrame::kDefaultAlignmentBoundary); cv::Mat input_frame_mat = mediapipe::formats::MatView(input_frame.get()); camera_frame.copyTo(input_frame_mat); // Send image packet into the graph. size_t frame_timestamp_us = (double)cv::getTickCount() / (double)cv::getTickFrequency() * 1e6; MP_RETURN_IF_ERROR(graph_.AddPacketToInputStream( kInputStream, mediapipe::Adopt(input_frame.release()) .At(mediapipe::Timestamp(frame_timestamp_us)))); // Get the graph result packet, or stop if that fails. mediapipe::Packet packet; if (!pPoller_->Next(&packet)) break; showFaceLandmarks(camera_frame); showPoseLandmarks(camera_frame); showLHandLandmarks(camera_frame); showRHandLandmarks(camera_frame); if (show_image) { auto& output_frame = packet.Get<mediapipe::ImageFrame>(); // Convert back to opencv for display or saving. cv::Mat output_frame_mat = mediapipe::formats::MatView(&output_frame); cv::cvtColor(output_frame_mat, output_frame_mat, cv::COLOR_RGB2BGR); cv::imshow(kWindowName, output_frame_mat); cv::waitKey(1); /* cv::Mat output_frame_mat; cv::cvtColor(camera_frame, output_frame_mat, cv::COLOR_RGB2BGR); cv::imwrite(cv::format("out_image/%d.jpg", tmp++), output_frame_mat);*/ } } if (show_image) cv::destroyWindow(kWindowName); return absl::OkStatus(); } absl::Status HolisticDetect::releaseGraph() { MP_RETURN_IF_ERROR(graph_.CloseInputStream(kInputStream)); MP_RETURN_IF_ERROR(graph_.CloseInputStream(kOutputLandmarks)); return graph_.WaitUntilDone(); } -

main.cpp

#include "holistic_detect.h" using namespace mp; HolisticDetect holisticDetect_; void call() { } int main(int argc, char* argv[]) { std::cout << "======= holistic ============" << std::endl; const char* model = argv[1]; const char* video_path = argv[2]; int isShow = 1; int res = holisticDetect_.initModel(model); if (res < 0) { std::cout << "======= initModel error ============" << std::endl; return -1; } res = holisticDetect_.detectVideo(video_path, isShow , call); if (res < 0) return -1; holisticDetect_.release(); return 0; } -

编译

bazel build -c opt --define MEDIAPIPE_DISABLE_GPU=1 --action_env PYTHON_BIN_PATH="C://xx//python.exe" mediapipe/examples/desktop/holistic_tracking:holistic_tracking_sample --verbose_failures -

运行

bazel-bin\mediapipe\examples\desktop\holistic_tracking\holistic_tracking_sample "mediapipe/graphs/holistic_tracking/holistic_tracking_cpu.pbtxt" "video/test.mp4" -

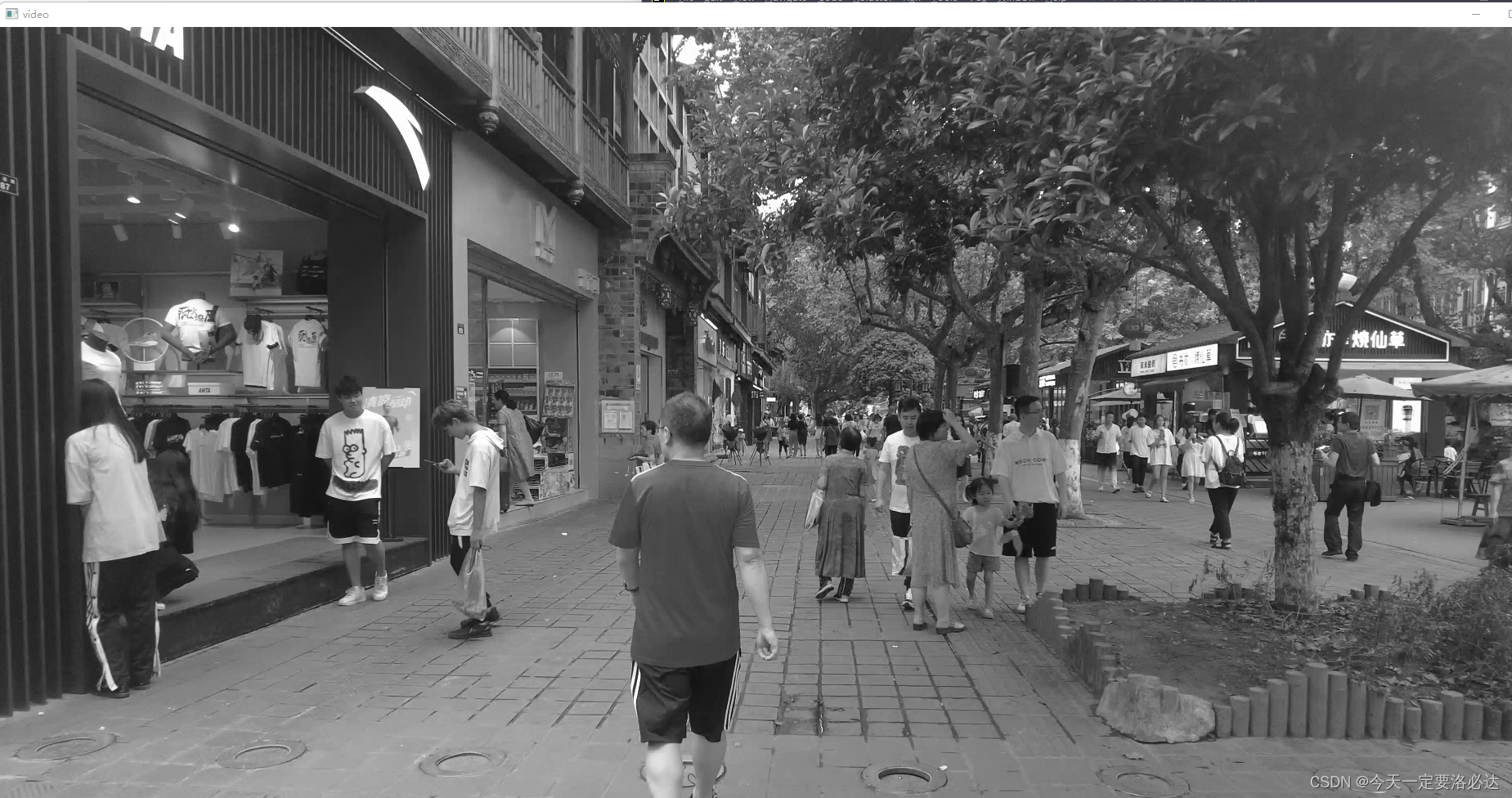

运行成功, 则可获取人脸面部

468个特征点 ,左手21个特征点 , 右手21个特征点 ,姿态33个特征点。

其他模块 ☀️

- IRIS: https://blog.csdn.net/haiyangyunbao813/article/details/122225445?spm=1001.2014.3001.5502

- Pose: https://blog.csdn.net/haiyangyunbao813/article/details/119192951?spm=1001.2014.3001.5502

- Hand: https://blog.csdn.net/haiyangyunbao813/article/details/122464972?spm=1001.2014.3001.5502

- 其他待续…

青春不过几届世界杯

输赢并不是足球的全部 , 真正的热爱或许是笑着庆祝, 哭着鼓掌 。加油马儿,愿 2026 再战一届。 🌟🌟🌟

![[LeetCode周赛复盘] 第 93 场双周赛20221015-补](https://img-blog.csdnimg.cn/faee3da6e7d948ae9e8c181475e39eef.png)

![[附源码]计算机毕业设计个性化名片网站Springboot程序](https://img-blog.csdnimg.cn/d1a1c10188a34f7fb44f51e7f915f8e4.png)