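Writing this part of the code yourself is genuinely easy. For a backbone structure that ships with the framework, you can find a matching PyTorch implementation elsewhere, or write one yourself.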

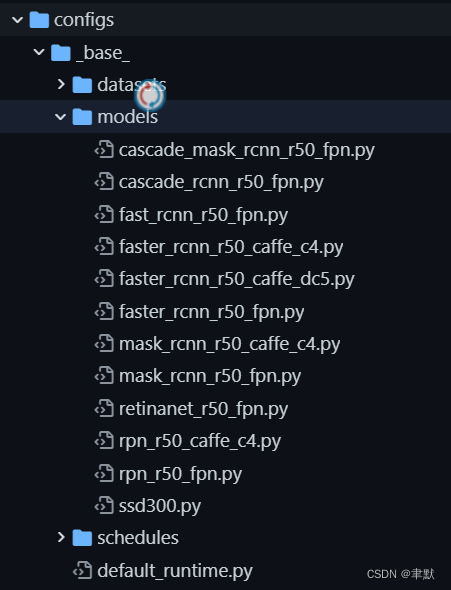

The configuration lives under configs/_base_/models, and the implementation lives under mmdet/models/backbones.

1. configs

Let's look at the YOLOX backbone: https://github.com/open-mmlab/mmdetection/blob/master/configs/yolox/yolox_s_8x8_300e_coco.py

    backbone=dict(type='CSPDarknet', deepen_factor=0.33, widen_factor=0.5),

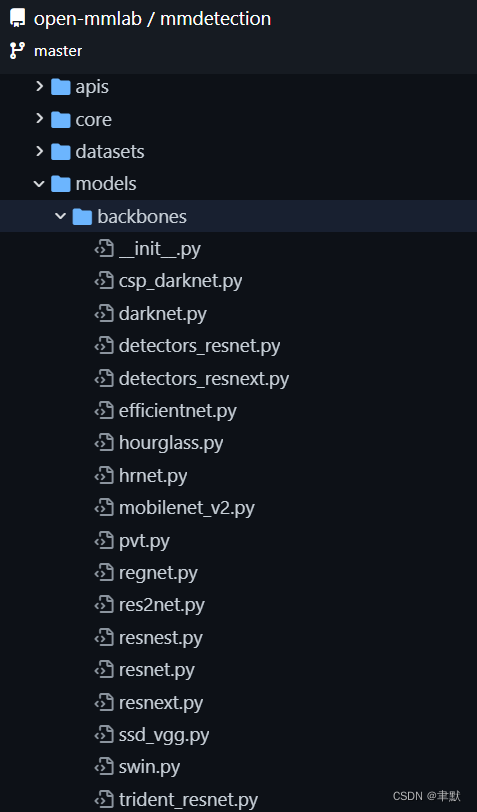
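To see what those two factors do in practice, here is a minimal sketch that applies them to the P5 stage table the same way the CSPDarknet constructor quoted in this article does (int() for channels, max(round(...), 1) for block counts). The helper name `scale_stages` is hypothetical and is not part of mmdetection.

```python
# Stage table copied from CSPDarknet.arch_settings['P5'] as quoted in this article:
# (in_channels, out_channels, num_blocks, add_identity, use_spp)
P5 = [
    (64, 128, 3, True, False),
    (128, 256, 9, True, False),
    (256, 512, 9, True, False),
    (512, 1024, 3, False, True),
]


def scale_stages(arch_setting, deepen_factor, widen_factor):
    """Hypothetical helper: scale channels and block counts per stage."""
    scaled = []
    for in_ch, out_ch, num_blocks, _add_identity, _use_spp in arch_setting:
        scaled.append((
            int(in_ch * widen_factor),                  # scaled input channels
            int(out_ch * widen_factor),                 # scaled output channels
            max(round(num_blocks * deepen_factor), 1),  # at least one block
        ))
    return scaled


# The values used by the yolox_s config line above.
yolox_s_stages = scale_stages(P5, deepen_factor=0.33, widen_factor=0.5)
for stage in yolox_s_stages:
    print(stage)
```

With these factors every stage keeps half of its channels, and the 3/9/9/3 block counts shrink to 1/3/3/1.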

2. Implementation

For the implementation, look up the CSPDarknet class:

https://github.com/open-mmlab/mmdetection/blob/master/mmdet/models/backbones/csp_darknet.py#L124

    @BACKBONES.register_module()
    class CSPDarknet(BaseModule):
        """CSP-Darknet backbone used in YOLOv5 and YOLOX.

        Args:
            arch (str): Architecture of CSP-Darknet, from {P5, P6}.
                Default: P5.
            deepen_factor (float): Depth multiplier, multiply number of
                blocks in CSP layer by this amount. Default: 1.0.
            widen_factor (float): Width multiplier, multiply number of
                channels in each layer by this amount. Default: 1.0.
            out_indices (Sequence[int]): Output from which stages.
                Default: (2, 3, 4).
            frozen_stages (int): Stages to be frozen (stop grad and set eval
                mode). -1 means not freezing any parameters. Default: -1.
            use_depthwise (bool): Whether to use depthwise separable convolution.
                Default: False.
            arch_ovewrite(list): Overwrite default arch settings. Default: None.
            spp_kernal_sizes: (tuple[int]): Sequential of kernel sizes of SPP
                layers. Default: (5, 9, 13).
            conv_cfg (dict): Config dict for convolution layer. Default: None.
            norm_cfg (dict): Dictionary to construct and config norm layer.
                Default: dict(type='BN', requires_grad=True).
            act_cfg (dict): Config dict for activation layer.
                Default: dict(type='LeakyReLU', negative_slope=0.1).
            norm_eval (bool): Whether to set norm layers to eval mode, namely,
                freeze running stats (mean and var). Note: Effect on Batch Norm
                and its variants only.
            init_cfg (dict or list[dict], optional): Initialization config dict.
                Default: None.
        Example:
            >>> from mmdet.models import CSPDarknet
            >>> import torch
            >>> self = CSPDarknet(depth=53)
            >>> self.eval()
            >>> inputs = torch.rand(1, 3, 416, 416)
            >>> level_outputs = self.forward(inputs)
            >>> for level_out in level_outputs:
            ...     print(tuple(level_out.shape))
            ...
            (1, 256, 52, 52)
            (1, 512, 26, 26)
            (1, 1024, 13, 13)
        """
        # From left to right:
        # in_channels, out_channels, num_blocks, add_identity, use_spp
        arch_settings = {
            'P5': [[64, 128, 3, True, False], [128, 256, 9, True, False],
                   [256, 512, 9, True, False], [512, 1024, 3, False, True]],
            'P6': [[64, 128, 3, True, False], [128, 256, 9, True, False],
                   [256, 512, 9, True, False], [512, 768, 3, True, False],
                   [768, 1024, 3, False, True]]
        }

        def __init__(self,
                     arch='P5',
                     deepen_factor=1.0,
                     widen_factor=1.0,
                     out_indices=(2, 3, 4),
                     frozen_stages=-1,
                     use_depthwise=False,
                     arch_ovewrite=None,
                     spp_kernal_sizes=(5, 9, 13),
                     conv_cfg=None,
                     norm_cfg=dict(type='BN', momentum=0.03, eps=0.001),
                     act_cfg=dict(type='Swish'),
                     norm_eval=False,
                     init_cfg=dict(
                         type='Kaiming',
                         layer='Conv2d',
                         a=math.sqrt(5),
                         distribution='uniform',
                         mode='fan_in',
                         nonlinearity='leaky_relu')):
            super().__init__(init_cfg)
            arch_setting = self.arch_settings[arch]
            if arch_ovewrite:
                arch_setting = arch_ovewrite
            assert set(out_indices).issubset(
                i for i in range(len(arch_setting) + 1))
            if frozen_stages not in range(-1, len(arch_setting) + 1):
                raise ValueError('frozen_stages must be in range(-1, '
                                 'len(arch_setting) + 1). But received '
                                 f'{frozen_stages}')

            self.out_indices = out_indices
            self.frozen_stages = frozen_stages
            self.use_depthwise = use_depthwise
            self.norm_eval = norm_eval
            conv = DepthwiseSeparableConvModule if use_depthwise else ConvModule

            self.stem = Focus(
                3,
                int(arch_setting[0][0] * widen_factor),
                kernel_size=3,
                conv_cfg=conv_cfg,
                norm_cfg=norm_cfg,
                act_cfg=act_cfg)
            self.layers = ['stem']

            for i, (in_channels, out_channels, num_blocks, add_identity,
                    use_spp) in enumerate(arch_setting):
                in_channels = int(in_channels * widen_factor)
                out_channels = int(out_channels * widen_factor)
                num_blocks = max(round(num_blocks * deepen_factor), 1)
                stage = []
                conv_layer = conv(
                    in_channels,
                    out_channels,
                    3,
                    stride=2,
                    padding=1,
                    conv_cfg=conv_cfg,
                    norm_cfg=norm_cfg,
                    act_cfg=act_cfg)
                stage.append(conv_layer)
                if use_spp:
                    spp = SPPBottleneck(
                        out_channels,
                        out_channels,
                        kernel_sizes=spp_kernal_sizes,
                        conv_cfg=conv_cfg,
                        norm_cfg=norm_cfg,
                        act_cfg=act_cfg)
                    stage.append(spp)
                csp_layer = CSPLayer(
                    out_channels,
                    out_channels,
                    num_blocks=num_blocks,
                    add_identity=add_identity,
                    use_depthwise=use_depthwise,
                    conv_cfg=conv_cfg,
                    norm_cfg=norm_cfg,
                    act_cfg=act_cfg)
                stage.append(csp_layer)
                self.add_module(f'stage{i + 1}', nn.Sequential(*stage))
                self.layers.append(f'stage{i + 1}')

        def _freeze_stages(self):
            if self.frozen_stages >= 0:
                for i in range(self.frozen_stages + 1):
                    m = getattr(self, self.layers[i])
                    m.eval()
                    for param in m.parameters():
                        param.requires_grad = False

        def train(self, mode=True):
            super(CSPDarknet, self).train(mode)
            self._freeze_stages()
            if mode and self.norm_eval:
                for m in self.modules():
                    if isinstance(m, _BatchNorm):
                        m.eval()

        def forward(self, x):
            outs = []
            for i, layer_name in enumerate(self.layers):
                layer = getattr(self, layer_name)
                x = layer(x)
                if i in self.out_indices:
                    outs.append(x)
            return tuple(outs)
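The forward pass above walks through the stem and the stages in order and keeps only the feature maps whose index is in out_indices. As a rough sketch of what comes out, the hypothetical helper below predicts the (C, H, W) of each returned map for the P5 architecture without running any network. It assumes that the stem and every stage each halve the spatial resolution and that the input height and width are multiples of 32, so every division is exact.

```python
def csp_darknet_output_shapes(height, width, widen_factor=1.0,
                              out_indices=(2, 3, 4)):
    """Hypothetical helper: predict (C, H, W) of each returned feature map."""
    # P5 out_channels per stage, taken from arch_settings in the quoted class.
    stage_out_channels = [128, 256, 512, 1024]
    # Layer 0 is the stem; it outputs the first stage's in_channels (64).
    channels = [int(64 * widen_factor)]
    channels += [int(c * widen_factor) for c in stage_out_channels]

    shapes = []
    for index in range(len(channels)):
        # Assumption: each layer (stem or stage) halves H and W exactly.
        height, width = height // 2, width // 2
        if index in out_indices:
            shapes.append((channels[index], height, width))
    return shapes


# Same input size as the docstring example (default widen_factor).
print(csp_darknet_output_shapes(416, 416))
# A 640x640 input with the yolox_s width multiplier.
print(csp_darknet_output_shapes(640, 640, widen_factor=0.5))
```

For a 416x416 input with default factors this reproduces the three shapes listed in the class docstring.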

3. How is it invoked?

3.1 Registration

Registration builds a dictionary that maps class names to classes.

@BACKBONES.register_module()
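The idea behind that decorator can be sketched in a few lines. The `Registry` class below is a toy stand-in written for illustration; it is not the mmcv implementation, and `ToyBackbone` is a made-up class.

```python
class Registry:
    """Toy registry for illustration only (not the mmcv class)."""

    def __init__(self, name):
        self.name = name
        self.module_dict = {}  # maps class name -> class object

    def register_module(self):
        # Returns a decorator so it can be used as @REGISTRY.register_module()
        def decorator(cls):
            self.module_dict[cls.__name__] = cls
            return cls  # hand the class back unchanged
        return decorator


BACKBONES = Registry('backbone')


@BACKBONES.register_module()
class ToyBackbone:
    def __init__(self, widen_factor=1.0):
        self.widen_factor = widen_factor


# The class is now reachable by its name.
print(BACKBONES.module_dict)
```

Because the decorator runs at import time, simply importing the module that defines a backbone is enough to make its name available for lookup.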

3.2 Invocation

Invocation means instantiating a class by looking up its name in that dictionary.
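A minimal sketch of that lookup-and-instantiate step, assuming the registry is a plain dict: the `type` key selects the class and the remaining keys become constructor arguments. `toy_build` and `ToyCSPDarknet` are hypothetical names used only for this illustration; the real builder in the framework handles more cases.

```python
def toy_build(cfg, registry):
    """Toy builder: look up cfg['type'] and pass the rest as kwargs."""
    args = dict(cfg)             # copy so the caller's config is not mutated
    cls_name = args.pop('type')  # e.g. 'CSPDarknet'
    if cls_name not in registry:
        raise KeyError(f'{cls_name} is not registered')
    return registry[cls_name](**args)


class ToyCSPDarknet:
    """Stand-in class that only records its arguments."""

    def __init__(self, deepen_factor=1.0, widen_factor=1.0):
        self.deepen_factor = deepen_factor
        self.widen_factor = widen_factor


registry = {'CSPDarknet': ToyCSPDarknet}
backbone_cfg = dict(type='CSPDarknet', deepen_factor=0.33, widen_factor=0.5)
backbone = toy_build(backbone_cfg, registry)
print(type(backbone).__name__, backbone.deepen_factor, backbone.widen_factor)
```

This is why a config file can switch backbones by changing a string: nothing in the detector code refers to the backbone class directly.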

Because the backbone is wrapped inside the model, look at the YOLOX class.

https://github.com/open-mmlab/mmdetection/blob/master/mmdet/models/detectors/yolox.py#L15

    @DETECTORS.register_module()
    class YOLOX(SingleStageDetector):
        r"""Implementation of `YOLOX: Exceeding YOLO Series in 2021
        <https://arxiv.org/abs/2107.08430>`_

        Note: Considering the trade-off between training speed and accuracy,
        multi-scale training is temporarily kept. More elegant implementation
        will be adopted in the future.

        Args:
            backbone (nn.Module): The backbone module.
            neck (nn.Module): The neck module.
            bbox_head (nn.Module): The bbox head module.
            train_cfg (obj:`ConfigDict`, optional): The training config
                of YOLOX. Default: None.
            test_cfg (obj:`ConfigDict`, optional): The testing config
                of YOLOX. Default: None.
            pretrained (str, optional): model pretrained path.
                Default: None.
            input_size (tuple): The model default input image size. The shape
                order should be (height, width). Default: (640, 640).
            size_multiplier (int): Image size multiplication factor.
                Default: 32.
            random_size_range (tuple): The multi-scale random range during
                multi-scale training. The real training image size will
                be multiplied by size_multiplier. Default: (15, 25).
            random_size_interval (int): The iter interval of change
                image size. Default: 10.
            init_cfg (dict, optional): Initialization config dict.
                Default: None.
        """

        def __init__(self,
                     backbone,
                     neck,
                     bbox_head,
                     train_cfg=None,
                     test_cfg=None,
                     pretrained=None,
                     input_size=(640, 640),
                     size_multiplier=32,
                     random_size_range=(15, 25),
                     random_size_interval=10,
                     init_cfg=None):
            super(YOLOX, self).__init__(backbone, neck, bbox_head, train_cfg,
                                        test_cfg, pretrained, init_cfg)
            log_img_scale(input_size, skip_square=True)
            self.rank, self.world_size = get_dist_info()
            self._default_input_size = input_size
            self._input_size = input_size
            self._random_size_range = random_size_range
            self._random_size_interval = random_size_interval
            self._size_multiplier = size_multiplier
            self._progress_in_iter = 0

        def forward_train(self,
                          img,
                          img_metas,
                          gt_bboxes,
                          gt_labels,
                          gt_bboxes_ignore=None):
            """
            Args:
                img (Tensor): Input images of shape (N, C, H, W).
                    Typically these should be mean centered and std scaled.
                img_metas (list[dict]): A List of image info dict where each dict
                    has: 'img_shape', 'scale_factor', 'flip', and may also contain
                    'filename', 'ori_shape', 'pad_shape', and 'img_norm_cfg'.
                    For details on the values of these keys see
                    :class:`mmdet.datasets.pipelines.Collect`.
                gt_bboxes (list[Tensor]): Each item are the truth boxes for each
                    image in [tl_x, tl_y, br_x, br_y] format.
                gt_labels (list[Tensor]): Class indices corresponding to each box
                gt_bboxes_ignore (None | list[Tensor]): Specify which bounding
                    boxes can be ignored when computing the loss.
            Returns:
                dict[str, Tensor]: A dictionary of loss components.
            """
            # Multi-scale training
            img, gt_bboxes = self._preprocess(img, gt_bboxes)

            losses = super(YOLOX, self).forward_train(img, img_metas, gt_bboxes,
                                                      gt_labels, gt_bboxes_ignore)

            # random resizing
            if (self._progress_in_iter + 1) % self._random_size_interval == 0:
                self._input_size = self._random_resize(device=img.device)
            self._progress_in_iter += 1

            return losses

        def _preprocess(self, img, gt_bboxes):
            scale_y = self._input_size[0] / self._default_input_size[0]
            scale_x = self._input_size[1] / self._default_input_size[1]
            if scale_x != 1 or scale_y != 1:
                img = F.interpolate(
                    img,
                    size=self._input_size,
                    mode='bilinear',
                    align_corners=False)
                for gt_bbox in gt_bboxes:
                    gt_bbox[..., 0::2] = gt_bbox[..., 0::2] * scale_x
                    gt_bbox[..., 1::2] = gt_bbox[..., 1::2] * scale_y
            return img, gt_bboxes

        def _random_resize(self, device):
            tensor = torch.LongTensor(2).to(device)

            if self.rank == 0:
                size = random.randint(*self._random_size_range)
                aspect_ratio = float(
                    self._default_input_size[1]) / self._default_input_size[0]
                size = (self._size_multiplier * size,
                        self._size_multiplier * int(aspect_ratio * size))
                tensor[0] = size[0]
                tensor[1] = size[1]

            if self.world_size > 1:
                dist.barrier()
                dist.broadcast(tensor, 0)

            input_size = (tensor[0].item(), tensor[1].item())

            return input_size
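Most of the YOLOX class is multi-scale training logic rather than backbone handling. To make that arithmetic concrete, here is a minimal single-process sketch of the size selection in _random_resize and the box scaling in _preprocess, using plain tuples instead of tensors. The function names `pick_input_size` and `scale_box` are hypothetical, and the distributed broadcast step is left out.

```python
import random


def pick_input_size(default_input_size=(640, 640), size_multiplier=32,
                    random_size_range=(15, 25), rng=random):
    """Hypothetical helper mirroring the size arithmetic of _random_resize."""
    size = rng.randint(*random_size_range)  # inclusive on both ends
    aspect_ratio = float(default_input_size[1]) / default_input_size[0]
    # (height, width), both multiples of size_multiplier
    return (size_multiplier * size,
            size_multiplier * int(aspect_ratio * size))


def scale_box(box, input_size, default_input_size=(640, 640)):
    """Hypothetical helper: rescale one [x1, y1, x2, y2] box like _preprocess."""
    scale_y = input_size[0] / default_input_size[0]
    scale_x = input_size[1] / default_input_size[1]
    x1, y1, x2, y2 = box
    return (x1 * scale_x, y1 * scale_y, x2 * scale_x, y2 * scale_y)


rng = random.Random(0)  # seeded generator so repeated runs pick the same sizes
new_size = pick_input_size(rng=rng)
print(new_size, scale_box((100, 200, 300, 400), new_size))
```

With the default arguments the chosen side length always lies between 32 * 15 = 480 and 32 * 25 = 800 pixels.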

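The backbone is not easy to spot in this class, so turn to its base class, SingleStageDetector. There the backbone config is passed to build_backbone, which fetches CSPDarknet from the registry dictionary:

    self.backbone = build_backbone(backbone)

https://github.com/open-mmlab/mmdetection/blob/31c84958f54287a8be2b99cbf87a6dcf12e57753/mmdet/models/detectors/single_stage.py#L12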

    class SingleStageDetector(BaseDetector):
        """Base class for single-stage detectors.

        Single-stage detectors directly and densely predict bounding boxes on the
        output features of the backbone+neck.
        """

        def __init__(self,
                     backbone,
                     neck=None,
                     bbox_head=None,
                     train_cfg=None,
                     test_cfg=None,
                     pretrained=None,
                     init_cfg=None):
            super(SingleStageDetector, self).__init__(init_cfg)
            if pretrained:
                warnings.warn('DeprecationWarning: pretrained is deprecated, '
                              'please use "init_cfg" instead')
                backbone.pretrained = pretrained
            self.backbone = build_backbone(backbone)
            if neck is not None:
                self.neck = build_neck(neck)
            bbox_head.update(train_cfg=train_cfg)
            bbox_head.update(test_cfg=test_cfg)
            self.bbox_head = build_head(bbox_head)
            self.train_cfg = train_cfg
            self.test_cfg = test_cfg

        def extract_feat(self, img):
            """Directly extract features from the backbone+neck."""
            x = self.backbone(img)
            if self.with_neck:
                x = self.neck(x)
            return x

        def forward_dummy(self, img):
            """Used for computing network flops.

            See `mmdetection/tools/analysis_tools/get_flops.py`
            """
            x = self.extract_feat(img)
            outs = self.bbox_head(x)
            return outs

        def forward_train(self,
                          img,
                          img_metas,
                          gt_bboxes,
                          gt_labels,
                          gt_bboxes_ignore=None):
            """
            Args:
                img (Tensor): Input images of shape (N, C, H, W).
                    Typically these should be mean centered and std scaled.
                img_metas (list[dict]): A List of image info dict where each dict
                    has: 'img_shape', 'scale_factor', 'flip', and may also contain
                    'filename', 'ori_shape', 'pad_shape', and 'img_norm_cfg'.
                    For details on the values of these keys see
                    :class:`mmdet.datasets.pipelines.Collect`.
                gt_bboxes (list[Tensor]): Each item are the truth boxes for each
                    image in [tl_x, tl_y, br_x, br_y] format.
                gt_labels (list[Tensor]): Class indices corresponding to each box
                gt_bboxes_ignore (None | list[Tensor]): Specify which bounding
                    boxes can be ignored when computing the loss.

            Returns:
                dict[str, Tensor]: A dictionary of loss components.
            """
            super(SingleStageDetector, self).forward_train(img, img_metas)
            x = self.extract_feat(img)
            losses = self.bbox_head.forward_train(x, img_metas, gt_bboxes,
                                                  gt_labels, gt_bboxes_ignore)
            return losses

        def simple_test(self, img, img_metas, rescale=False):
            """Test function without test-time augmentation.

            Args:
                img (torch.Tensor): Images with shape (N, C, H, W).
                img_metas (list[dict]): List of image information.
                rescale (bool, optional): Whether to rescale the results.
                    Defaults to False.

            Returns:
                list[list[np.ndarray]]: BBox results of each image and classes.
                    The outer list corresponds to each image. The inner list
                    corresponds to each class.
            """
            feat = self.extract_feat(img)
            results_list = self.bbox_head.simple_test(
                feat, img_metas, rescale=rescale)
            bbox_results = [
                bbox2result(det_bboxes, det_labels, self.bbox_head.num_classes)
                for det_bboxes, det_labels in results_list
            ]
            return bbox_results

        def aug_test(self, imgs, img_metas, rescale=False):
            """Test function with test time augmentation.

            Args:
                imgs (list[Tensor]): the outer list indicates test-time
                    augmentations and inner Tensor should have a shape NxCxHxW,
                    which contains all images in the batch.
                img_metas (list[list[dict]]): the outer list indicates test-time
                    augs (multiscale, flip, etc.) and the inner list indicates
                    images in a batch. each dict has image information.
                rescale (bool, optional): Whether to rescale the results.
                    Defaults to False.

            Returns:
                list[list[np.ndarray]]: BBox results of each image and classes.
                    The outer list corresponds to each image. The inner list
                    corresponds to each class.
            """
            assert hasattr(self.bbox_head, 'aug_test'), \
                f'{self.bbox_head.__class__.__name__}' \
                ' does not support test-time augmentation'

            feats = self.extract_feats(imgs)
            results_list = self.bbox_head.aug_test(
                feats, img_metas, rescale=rescale)
            bbox_results = [
                bbox2result(det_bboxes, det_labels, self.bbox_head.num_classes)
                for det_bboxes, det_labels in results_list
            ]
            return bbox_results

        def onnx_export(self, img, img_metas, with_nms=True):
            """Test function without test time augmentation.

            Args:
                img (torch.Tensor): input images.
                img_metas (list[dict]): List of image information.

            Returns:
                tuple[Tensor, Tensor]: dets of shape [N, num_det, 5]
                    and class labels of shape [N, num_det].
            """
            x = self.extract_feat(img)
            outs = self.bbox_head(x)
            # get origin input shape to support onnx dynamic shape

            # get shape as tensor
            img_shape = torch._shape_as_tensor(img)[2:]
            img_metas[0]['img_shape_for_onnx'] = img_shape
            # get pad input shape to support onnx dynamic shape for exporting
            # `CornerNet` and `CentripetalNet`, which 'pad_shape' is used
            # for inference
            img_metas[0]['pad_shape_for_onnx'] = img_shape

            if len(outs) == 2:
                # add dummy score_factor
                outs = (*outs, None)
            # TODO Can we change to `get_bboxes` when `onnx_export` fail
            det_bboxes, det_labels = self.bbox_head.onnx_export(
                *outs, img_metas, with_nms=with_nms)

            return det_bboxes, det_labels
