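By default, an EKS cluster garbage-collects container images once disk usage on a node reaches the configured threshold, and the pause image may be removed along with the rest.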

https://aws.amazon.com/cn/premiumsupport/knowledge-center/eks-worker-nodes-image-cache/

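The pause container creates the initial namespaces for a pod, and the containers in the pod share its network namespace. If the pause image is deleted and cannot be pulled again, pods fail to start.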

almighty-pause-container

The image whose network/ipc namespaces containers in each pod will use.

For reasons that were unclear at first, however, kubelet could not pull the image from the ECR repository again and got a 401 error:

pulling from host 918309763551.dkr.ecr.cn-north-1.amazonaws.com.cn failed with status code [manifests 3.5]: 401 Unauthorized

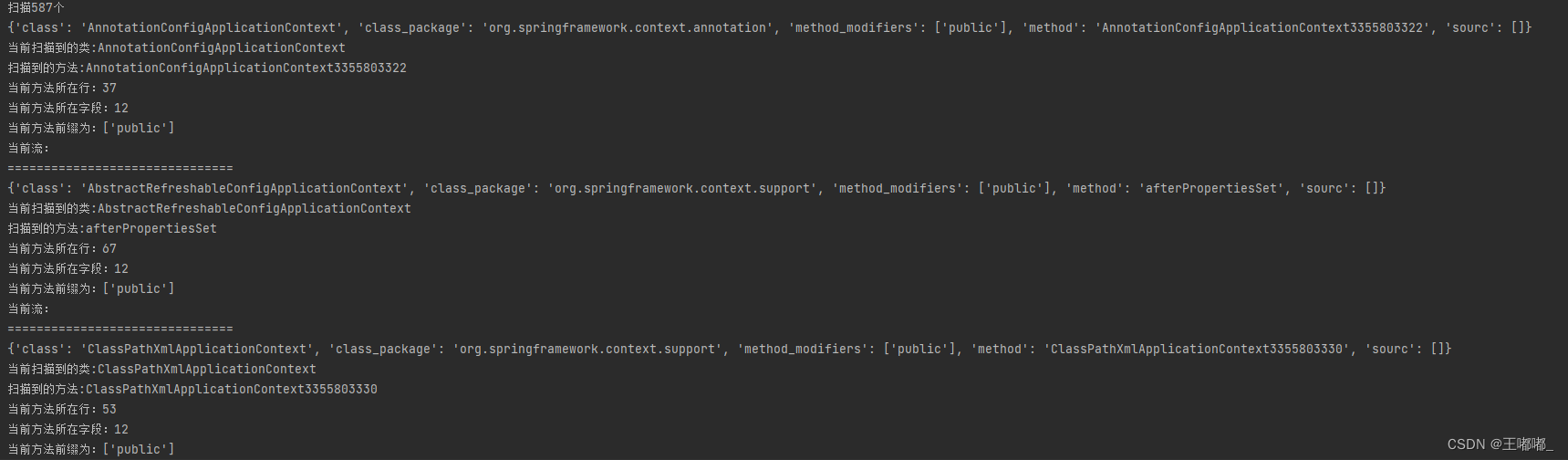

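kubelet startup flags related to authentication

An earlier post covered the Kubernetes in-tree credential provider. Judging from the upstream position, credential providers will be designed as external plugins going forward.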

https://github.com/kubernetes/cloud-provider-aws

https://github.com/kubernetes/cloud-provider-aws/blob/v1.26.0/cmd/ecr-credential-provider/main.go

The in-tree cloud provider code has mostly stopped accepting new features, so future development for the AWS cloud provider should continue here. The in-tree plugins will be removed in a future release of Kubernetes.

The 1.24 cluster uses containerd as the runtime, so the container runtime flag is set to remote:

I0224 02:39:02.722293 3523 flags.go:64] FLAG: --container-runtime="remote"

I0224 02:39:02.722297 3523 flags.go:64] FLAG: --container-runtime-endpoint="unix:///run/containerd/containerd.sock"

The garbage-collection flags, which can cause images to be removed:

I0224 02:39:02.722460 3523 flags.go:64] FLAG: --image-gc-high-threshold="85"

I0224 02:39:02.722463 3523 flags.go:64] FLAG: --image-gc-low-threshold="80"
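
The two thresholds work as a pair: image garbage collection starts once disk usage reaches the high threshold and frees unused images until usage falls back to the low threshold. The following is a minimal sketch of that decision only, not kubelet's implementation; `gc_action` is a hypothetical helper and the defaults are the values from the flags above.

```shell
# Hypothetical helper (not part of kubelet): given disk usage in percent,
# report whether image garbage collection would start.
# $1 = current usage, $2 = high threshold (default 85), $3 = low threshold (default 80)
gc_action() {
  usage=$1
  high=${2:-85}
  low=${3:-80}
  if [ "$usage" -ge "$high" ]; then
    # GC would delete unused images until usage drops below $low.
    echo "collect"
  else
    echo "skip"
  fi
}

gc_action 90   # usage above the high threshold
gc_action 82   # between the thresholds: nothing happens yet
```

On a node, a rough usage figure could be fed in with something like `df --output=pcent /var/lib/containerd | tail -1 | tr -dc '0-9'`, assuming GNU df and that containerd stores images under that path.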

Log in to the node and list the pause images:

# ctr -n=k8s.io i ls -q | grep pause

918309763551.dkr.ecr.cn-north-1.amazonaws.com.cn/eks/pause:3.5

961992271922.dkr.ecr.cn-north-1.amazonaws.com.cn/eks/pause:3.5

961992271922.dkr.ecr.cn-northwest-1.amazonaws.com.cn/eks/pause:3.5

The kubelet log confirms that this pause image is used as the sandbox image:

Feb 24 02:39:02 ip-192-168-6-203.cn-north-1.compute.internal kubelet[3523]: I0224 02:39:02.722647 3523 flags.go:64] FLAG: --pod-infra-container-image="918309763551.dkr.ecr.cn-north-1.amazonaws.com.cn/eks/pause:3.5"

The containerd configuration file specifies the same pause image:

cat /etc/containerd/config.toml

[plugins."io.containerd.grpc.v1.cri"]

sandbox_image = "918309763551.dkr.ecr.cn-north-1.amazonaws.com.cn/eks/pause:3.5"

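Now look at the relevant logic in the EKS bootstrap script. The pause image is written into an environment variable, which kubelet reads at startup: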

PAUSE_CONTAINER_VERSION="${PAUSE_CONTAINER_VERSION:-3.5}"

AWS_DEFAULT_REGION=$(imds 'latest/dynamic/instance-identity/document' | jq .region -r)

AWS_SERVICES_DOMAIN=$(imds 'latest/meta-data/services/domain')

...

ECR_URI=$(/etc/eks/get-ecr-uri.sh "${AWS_DEFAULT_REGION}" "${AWS_SERVICES_DOMAIN}" "${PAUSE_CONTAINER_ACCOUNT:-}")

PAUSE_CONTAINER_IMAGE=${PAUSE_CONTAINER_IMAGE:-$ECR_URI/eks/pause}

PAUSE_CONTAINER="$PAUSE_CONTAINER_IMAGE:$PAUSE_CONTAINER_VERSION"

...

cat << EOF > /etc/systemd/system/kubelet.service.d/10-kubelet-args.conf

[Service]

Environment='KUBELET_ARGS=--node-ip=$INTERNAL_IP --pod-infra-container-image=$PAUSE_CONTAINER --v=2'

EOF
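
The `${VAR:-default}` expansions above mean that each part of the pause image reference can be overridden through the environment and otherwise falls back to a default. A minimal sketch of that resolution follows; `resolve_pause_image` is a hypothetical helper, and its first argument stands in for the output of `/etc/eks/get-ecr-uri.sh`, which is not called here.

```shell
# Hypothetical helper mirroring the fallback logic in the bootstrap excerpt.
# $1 stands in for the ECR URI that get-ecr-uri.sh would return.
resolve_pause_image() {
  ecr_uri=$1
  # Use the environment override if set and non-empty, else the ECR default.
  image=${PAUSE_CONTAINER_IMAGE:-$ecr_uri/eks/pause}
  version=${PAUSE_CONTAINER_VERSION:-3.5}
  echo "$image:$version"
}

# No overrides: the private ECR image is used.
unset PAUSE_CONTAINER_IMAGE PAUSE_CONTAINER_VERSION
resolve_pause_image 918309763551.dkr.ecr.cn-north-1.amazonaws.com.cn

# With an override, the ECR URI is ignored entirely.
(PAUSE_CONTAINER_IMAGE=public.ecr.aws/eks-distro/kubernetes/pause
 resolve_pause_image 918309763551.dkr.ecr.cn-north-1.amazonaws.com.cn)
```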

Testing by manually deleting the pause image

Delete the image manually:

ctr -n=k8s.io i rm 918309763551.dkr.ecr.cn-north-1.amazonaws.com.cn/eks/pause:3.5

Create a pod. It gets stuck in ContainerCreating, and kubelet reports the following error:

Warning FailedCreatePodSandBox 4s kubelet Failed to create pod sandbox: rpc error: code = Unknown desc = failed to get sandbox image "918309763551.dkr.ecr.cn-north-1.amazonaws.com.cn/eks/pause:3.5": failed to pull image "918309763551.dkr.ecr.cn-north-1.amazonaws.com.cn/eks/pause:3.5": failed to pull and unpack image "918309763551.dkr.ecr.cn-north-1.amazonaws.com.cn/eks/pause:3.5": failed to resolve reference "918309763551.dkr.ecr.cn-north-1.amazonaws.com.cn/eks/pause:3.5": pulling from host 918309763551.dkr.ecr.cn-north-1.amazonaws.com.cn failed with status code [manifests 3.5]: 401 Unauthorized

Pulling the image manually fails with the same error the pod reported, an authentication failure:

$ ctr -n=k8s.io i pull 918309763551.dkr.ecr.cn-north-1.amazonaws.com.cn/eks/pause:3.5

ctr: failed to resolve reference "918309763551.dkr.ecr.cn-north-1.amazonaws.com.cn/eks/pause:3.5": pulling from host 918309763551.dkr.ecr.cn-north-1.amazonaws.com.cn failed with status code [manifests 3.5]: 401 Unauthorized

Authenticate manually, then pull:

aws ecr get-login-password --region cn-north-1 | docker login --username AWS --password-stdin 918309763551.dkr.ecr.cn-north-1.amazonaws.com.cn

nerdctl -n=k8s.io pull 918309763551.dkr.ecr.cn-north-1.amazonaws.com.cn/eks/pause:3.5

or

AUTH_STRING=QVdTOmV5xxxxxxxx

crictl pull --auth $AUTH_STRING 918309763551.dkr.ecr.cn-north-1.amazonaws.com.cn/eks/pause:3.5
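
The masked `AUTH_STRING` above has the shape of a base64-encoded `USERNAME:PASSWORD` pair, which is what `crictl pull --auth` expects; `QVdTOmV5` decodes to `AWS:ey`. A minimal sketch of building such a string, where `make_auth_string` is a hypothetical helper name:

```shell
# Hypothetical helper: build the base64 "AWS:<password>" string for crictl --auth.
# $1 = the ECR password (for example, the output of aws ecr get-login-password)
make_auth_string() {
  # printf avoids a trailing newline in the encoded value;
  # tr strips the line wraps that base64 adds to long output.
  printf '%s' "AWS:$1" | base64 | tr -d '\n'
}

make_auth_string "ey"   # prints QVdTOmV5, the visible prefix of the masked value
```

On a node this could be used as `AUTH_STRING=$(make_auth_string "$(aws ecr get-login-password --region cn-north-1)")`, assuming the AWS CLI is installed and the instance role is allowed to read from the repository.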

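After the image is pulled, the container starts successfully and kubelet no longer logs the error.

As an aside, when containerd is the Kubernetes container runtime, container logs are written to disk under kubelet's control. They are stored under /var/log/pods/<CONTAINER>, and symlinks pointing to the log files are created under /var/log/containers. The default log settings are likewise set through kubelet flags: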

I0224 02:39:02.722286 3523 flags.go:64] FLAG: --container-log-max-files="5"

I0224 02:39:02.722290 3523 flags.go:64] FLAG: --container-log-max-size="10Mi"

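Authentication logic for the pause image

Pulling the image after manual authentication fixed the immediate problem, but if the pause image is deleted again, kubelet hits the 401 error again. This shows that kubelet itself never performed the authentication.

Logically, authentication for the pause image should happen while kubelet starts up, and presumably only once (it is enough for the pause image to be downloaded). So we can try re-running bootstrap.sh or restarting kubelet to force the authentication and the image pull: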

# delete the pause image

ctr -n=k8s.io i rm 918309763551.dkr.ecr.cn-north-1.amazonaws.com.cn/eks/pause:3.5

# restart kubelet

systemctl restart kubelet

# re-run bootstrap

/etc/eks/bootstrap.sh cluster-name

A freshly pulled pause image clearly appears in the list, which supports the hypothesis:

# nerdctl -n=k8s.io images | grep pause

918309763551.dkr.ecr.cn-north-1.amazonaws.com.cn/eks/pause 3.5 529cf6b1b6e5 3 seconds ago linux/amd64 668.0 KiB 291.7 KiB

961992271922.dkr.ecr.cn-north-1.amazonaws.com.cn/eks/pause 3.5 529cf6b1b6e5 2 weeks ago linux/amd64 668.0 KiB 291.7 KiB

961992271922.dkr.ecr.cn-northwest-1.amazonaws.com.cn/eks/pause 3.5 529cf6b1b6e5 2 weeks ago linux/amd64 668.0 KiB 291.7 KiB

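The puzzle is that images from other repositories are authenticated automatically later on. For example, the following pod uses an image from a different account. So why is the pause image repository the only one that cannot be authenticated automatically? Presumably the pause image is downloaded through a different code path from pod images.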

kind: Pod

apiVersion: v1

metadata:

  name: test-temp

spec:

  containers:

  - name: test

    image: xxxxxxxx.dkr.ecr.cn-north-1.amazonaws.com.cn/nginx:latest

Manually change the pause image source in the bootstrap script to public.ecr.aws/eks-distro/kubernetes/pause:3.5 and re-run bootstrap. The 401 no longer occurs, because this is a public repository.

# /etc/systemd/system/kubelet.service.d/10-kubelet-args.conf

[Service]

Environment='KUBELET_ARGS=--node-ip=192.168.6.203 --pod-infra-container-image=public.ecr.aws/eks-distro/kubernetes/pause:3.5 --v=2'

Bootstrap prints the following output:

Using kubelet version 1.24.10

true

Using containerd as the container runtime

true

[Service]

Slice=runtime.slice

‘/etc/eks/containerd/containerd-config.toml’ -> ‘/etc/containerd/config.toml’

‘/etc/eks/containerd/sandbox-image.service’ -> ‘/etc/systemd/system/sandbox-image.service’

‘/etc/eks/containerd/kubelet-containerd.service’ -> ‘/etc/systemd/system/kubelet.service’

nvidia-smi not found

A dedicated service manages the sandbox image: it starts after containerd and pulls the sandbox image. So we can also run its script by hand.

cat /etc/eks/containerd/sandbox-image.service

[Unit]

Description=pull sandbox image defined in containerd config.toml

# pulls sandbox image using ctr tool

After=containerd.service

Requires=containerd.service

[Service]

Type=oneshot

ExecStart=/etc/eks/containerd/pull-sandbox-image.sh

[Install]

WantedBy=multi-user.target

The script does just one thing: it pulls the sandbox image, unless the image is already present.

cat /etc/eks/containerd/pull-sandbox-image.sh

#!/usr/bin/env bash

set -euo pipefail

source <(grep "sandbox_image" /etc/containerd/config.toml | tr -d ' ')

### Short-circuit fetching sandbox image if its already present

if [[ "$(sudo ctr --namespace k8s.io image ls | grep $sandbox_image)" != "" ]]; then

exit 0

fi

/etc/eks/containerd/pull-image.sh "${sandbox_image}"
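
The `source <(grep ... | tr -d ' ')` line is the least obvious part of the script: it turns the TOML line `sandbox_image = "..."` into the shell assignment `sandbox_image="..."` and evaluates it. The sketch below reproduces the idea against a temporary file standing in for `/etc/containerd/config.toml`. It uses `eval` instead of `source <(...)` so that it also runs in shells without process substitution, which is a substitution made for this example and not what the EKS script does.

```shell
# Temporary stand-in for /etc/containerd/config.toml (assumption for this sketch).
cfg=$(mktemp)
cat > "$cfg" <<'EOF'
[plugins."io.containerd.grpc.v1.cri"]
sandbox_image = "918309763551.dkr.ecr.cn-north-1.amazonaws.com.cn/eks/pause:3.5"
EOF

# grep keeps only the sandbox_image line; tr removes the spaces around "=",
# leaving a valid shell assignment that eval then executes.
eval "$(grep "sandbox_image" "$cfg" | tr -d ' ')"
rm -f "$cfg"

echo "$sandbox_image"
```

The trick relies on the config line containing nothing that the shell would interpret beyond a quoted string, which holds for a plain image reference.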

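How the image is actually pulled:

- Parse the details (the region) from the image reference passed as the argument

- Pull the image, retrying several times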

# cat /etc/eks/containerd/pull-image.sh

#!/usr/bin/env bash

img=$1

region=$(echo "${img}" | cut -f4 -d ".")

MAX_RETRIES=3

...

ecr_password=$(retry aws ecr get-login-password --region $region)

if [[ -z ${ecr_password} ]]; then

echo >&2 "Unable to retrieve the ECR password."

exit 1

fi

retry sudo ctr --namespace k8s.io content fetch "${img}" --user AWS:${ecr_password}
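
The region is taken from the fourth dot-separated field of the image reference, which works because private ECR hosts follow the pattern `<account>.dkr.ecr.<region>.<domain>`. A minimal sketch of that parsing step; `region_of` is a hypothetical helper name wrapping the same `cut` call:

```shell
# Hypothetical helper wrapping the cut call from pull-image.sh.
region_of() {
  echo "$1" | cut -f4 -d "."
}

region_of "918309763551.dkr.ecr.cn-north-1.amazonaws.com.cn/eks/pause:3.5"      # cn-north-1
region_of "961992271922.dkr.ecr.cn-northwest-1.amazonaws.com.cn/eks/pause:3.5"  # cn-northwest-1

# A reference that does not follow the private ECR pattern gives a field
# that is not a region at all:
region_of "public.ecr.aws/eks-distro/kubernetes/pause:3.5"                      # 5
```

Whether the elided parts of the script handle references outside the private ECR pattern is not visible in the excerpt above.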

So the answer lies in the following two "black magic" commands, which do the authentication:

ecr_password=$(aws ecr get-login-password --region cn-north-1)

ctr --namespace k8s.io content fetch "918309763551.dkr.ecr.cn-north-1.amazonaws.com.cn/eks/pause:3.5" --user AWS:${ecr_password}

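Authentication mechanism for pod images

At this point it looks as if the credential provider discussed earlier is what supplies credentials when pod images are pulled.

Remove those two flags from kubelet and bootstrap again: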

# vi /etc/eks/containerd/kubelet-containerd.service

ExecStart=/usr/bin/kubelet --cloud-provider aws \

# removed: --image-credential-provider-config /etc/eks/ecr-credential-provider/ecr-credential-provider-config \

# removed: --image-credential-provider-bin-dir /etc/eks/ecr-credential-provider \

--config /etc/kubernetes/kubelet/kubelet-config.json \

--kubeconfig /var/lib/kubelet/kubeconfig \

--container-runtime remote \

--container-runtime-endpoint unix:///run/containerd/containerd.sock \

$KUBELET_ARGS $KUBELET_EXTRA_ARGS

# /etc/eks/bootstrap.sh test124

# systemctl daemon-reload

# systemctl restart kubelet

kubelet now runs without the credential provider flags:

# systemctl status kubelet -l

● kubelet.service - Kubernetes Kubelet

Loaded: loaded (/etc/systemd/system/kubelet.service; enabled; vendor preset: disabled)

Drop-In: /etc/systemd/system/kubelet.service.d

└─10-kubelet-args.conf, 30-kubelet-extra-args.conf

Active: active (running) since Sat 2023-03-04 11:52:29 UTC; 23min ago

Docs: https://github.com/kubernetes/kubernetes

Main PID: 11547 (kubelet)

CGroup: /runtime.slice/kubelet.service

└─11547 /usr/bin/kubelet --cloud-provider aws --config /etc/kubernetes/kubelet/kubelet-config.json --kubeconfig /var/lib/kubelet/kubeconfig --container-runtime remote --container-runtime-endpoint unix:///run/containerd/containerd.sock --node-ip=192.168.9.137 --pod-infra-container-image=918309763551.dkr.ecr.cn-north-1.amazonaws.com.cn/eks/pause:3.5 --v=2 --node-labels=eks.amazonaws.com/sourceLaunchTemplateVersion=1,alpha.eksctl.io/cluster-name=test124,alpha.eksctl.io/nodegroup-name=test124-ng,eks.amazonaws.com/nodegroup-image=ami-0efac015e224c828a,eks.amazonaws.com/capacityType=ON_DEMAND,eks.amazonaws.com/nodegroup=test124-ng6,eks.amazonaws.com/sourceLaunchTemplateId=lt-0adf6b991b5366a98 --max-pods=110

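In theory ECR images can no longer be pulled, because nothing is left to authenticate. In practice nothing changes, even after restarting containerd and kubelet.

Next, try removing ecr-credential-provider directly as part of bootstrap: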

MIME-Version: 1.0

Content-Type: multipart/mixed; boundary="//"

--//

Content-Type: text/x-shellscript; charset="us-ascii"

#!/bin/bash

set -ex

/etc/eks/bootstrap.sh test124 --kubelet-extra-args '--node-labels=eks.amazonaws.com/sourceLaunchTemplateVersion=1,alpha.eksctl.io/cluster-name=test124,alpha.eksctl.io/nodegroup-name=test124-ng6,eks.amazonaws.com/nodegroup-image=ami-0efac015e224c828a,eks.amazonaws.com/capacityType=ON_DEMAND,eks.amazonaws.com/nodegroup=test124-ng6,eks.amazonaws.com/sourceLaunchTemplateId=lt-0adf6b991b5366a98 --max-pods=110' --b64-cluster-ca $B64_CLUSTER_CA --apiserver-endpoint $API_SERVER_URL --dns-cluster-ip $K8S_CLUSTER_DNS_IP --use-max-pods false

--//--

This produces the following error:

E0304 13:45:18.793749 4797 kuberuntime_manager.go:260] "Failed to register CRI auth plugins" err="plugin binary directory /etc/eks/ecr-credential-provider did not exist"

systemd[1]: kubelet.service: main process exited, code=exited, status=1/FAILURE

// kuberuntime_manager.go

if imageCredentialProviderConfigFile != "" || imageCredentialProviderBinDir != "" {

if err := plugin.RegisterCredentialProviderPlugins(imageCredentialProviderConfigFile, imageCredentialProviderBinDir); err != nil {

klog.ErrorS(err, "Failed to register CRI auth plugins")

os.Exit(1)

}

}
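
The Go excerpt explains the crash: as soon as either flag is set, kubelet tries to register the plugins and exits if that fails. A simplified shell mirror of that guard can serve as a pre-flight check before restarting kubelet. It is a sketch only: `check_credential_provider` is a hypothetical helper, and it checks nothing beyond the directory's existence, whereas the real registration also parses the config file.

```shell
# Hypothetical pre-flight check, loosely mirroring the guard in kuberuntime_manager.go.
# $1 = value of --image-credential-provider-config (may be empty)
# $2 = value of --image-credential-provider-bin-dir (may be empty)
check_credential_provider() {
  config_file=$1
  bin_dir=$2
  # Registration is only attempted when at least one flag is set.
  if [ -n "$config_file" ] || [ -n "$bin_dir" ]; then
    if [ ! -d "$bin_dir" ]; then
      echo "plugin binary directory $bin_dir did not exist" >&2
      return 1   # kubelet would exit with status 1 here
    fi
  fi
  return 0
}

# Both flags absent: nothing to register, so nothing can fail.
check_credential_provider "" "" && echo "ok"
```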

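Log in to the node, edit kubelet.service by hand, then start kubelet so that the node joins the cluster.

Pods created on this node can still pull images from the ECR repository. So far it is not clear what these two kubelet flags actually do. That is a question for another day.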
