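Contents

Machine information

Kernel upgrade

System configuration

Deploy the container runtime containerd

Install the crictl client

Prerequisites for enabling IPVS

Install kubeadm, kubelet and kubectl

Initialize the cluster (master)

Install the Calico CNI

Join nodes to the cluster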

Machine information

| Hostname | Cluster role | IP | Kernel | OS version | Spec |
|---|---|---|---|---|---|
| l-shahe-k8s-master1.ops.prod | master | 10.120.128.1 | 5.4.231-1.el7.elrepo.x86_64 | CentOS Linux release 7.9.2009 (Core) | 32 cores, 128 GB RAM |
| 10.120.129.1 | node | 10.120.129.1 | 5.4.231-1.el7.elrepo.x86_64 | CentOS Linux release 7.9.2009 (Core) | 32 cores, 128 GB RAM |
| 10.120.129.2 | node | 10.120.129.2 | 5.4.231-1.el7.elrepo.x86_64 | CentOS Linux release 7.9.2009 (Core) | 32 cores, 128 GB RAM |

Kernel upgrade

Reference:

Kubernetes 1.26.1 external etcd deployment, a step-by-step guide (Cloud孙文波's blog, CSDN): https://blog.csdn.net/weixin_43798031/article/details/129215326
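Before moving on, you may want to confirm that each machine already runs the upgraded kernel. Below is a minimal sketch. It assumes 5.4 as the minimum, which matches the 5.4.231 elrepo kernel in the machine table. The helper names `version_ge` and `check_kernel` are illustrative and are not part of any tool.

```bash
#!/usr/bin/env bash
# Sketch: check that the running kernel is at least the version used in this guide.
# Assumption: 5.4 is the minimum, matching the 5.4.231 elrepo kernel in the machine table.

# Succeeds if version $1 >= version $2 (GNU sort -V orders dotted version strings).
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Usage: check_kernel [current] [minimum]; defaults to `uname -r` and 5.4.
check_kernel() {
  local current=${1:-$(uname -r)} min=${2:-5.4}
  if version_ge "$current" "$min"; then
    echo "kernel $current OK (>= $min)"
  else
    echo "kernel $current is older than $min; upgrade first" >&2
    return 1
  fi
}
```

Run `check_kernel` with no arguments on each machine. On a stock CentOS 7.9 kernel (3.10.x) it reports that an upgrade is needed.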

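System configuration

See the etcd deployment article linked above.

Deploy the container runtime containerd

See the etcd deployment article linked above.

Install the crictl client

See the etcd deployment article linked above.

Prerequisites for enabling IPVS

See the etcd deployment article linked above.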

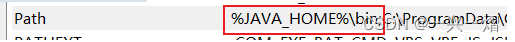
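The IPVS prerequisites are covered in the etcd article. For reference, here is a minimal sketch of the usual step: load the IPVS kernel modules now, and list them in a modules-load.d file so they also load at boot.

The module list is an assumption that fits a 5.4 kernel, where `nf_conntrack_ipv4` has been merged into `nf_conntrack`. The file path and the function name are illustrative.

```bash
#!/usr/bin/env bash
# Sketch: load the kernel modules kube-proxy uses in IPVS mode and persist them.
# Assumptions: kernel >= 4.19 (nf_conntrack instead of nf_conntrack_ipv4) and
# systemd-modules-load reading /etc/modules-load.d/*.conf at boot.
IPVS_MODULES=(ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack)

# Usage: setup_ipvs_modules [conf-file]; run as root.
setup_ipvs_modules() {
  local conf=${1:-/etc/modules-load.d/ipvs.conf} m
  printf '%s\n' "${IPVS_MODULES[@]}" > "$conf"   # one module name per line, loaded at boot
  for m in "${IPVS_MODULES[@]}"; do
    modprobe -- "$m" || { echo "failed to load $m" >&2; return 1; }
  done
}
```

After running it, `lsmod | grep -e ip_vs -e nf_conntrack` should list the modules. The `ipset` and `ipvsadm` packages are commonly installed alongside, for inspecting IPVS rules.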

Install kubeadm, kubelet and kubectl

See the etcd deployment article linked above.
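After installing the packages, you may want to confirm that kubeadm and kubelet both match the target version v1.26.1 before initializing. The sketch below relies on `kubeadm version -o short` and `kubelet --version`. The function name is illustrative.

```bash
#!/usr/bin/env bash
# Sketch: verify that kubeadm and kubelet are the version this guide targets.

# Usage: check_k8s_versions [want]; defaults to v1.26.1.
check_k8s_versions() {
  local want=${1:-v1.26.1} got
  got=$(kubeadm version -o short) || return 1                # prints e.g. v1.26.1
  if [ "$got" != "$want" ]; then
    echo "kubeadm is $got, want $want" >&2
    return 1
  fi
  got=$(kubelet --version | awk '{print $2}') || return 1    # "Kubernetes v1.26.1" -> v1.26.1
  if [ "$got" != "$want" ]; then
    echo "kubelet is $got, want $want" >&2
    return 1
  fi
  echo "kubeadm and kubelet are both $want"
}
```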

Clean up the existing cluster environment (be careful on production clusters):

```shell
swapoff -a
kubeadm reset
systemctl daemon-reload && systemctl restart kubelet
iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X
# restore the prepared certificates, since kubeadm reset clears /etc/kubernetes/pki
cp -r /opt/k8s-install/pki/* /etc/kubernetes/pki/.
systemctl stop kubelet
rm -rf /etc/cni/net.d/*
# Wipe the etcd data: `del "" --prefix` deletes every key. Be very careful!
etcdctl --cacert=/etc/kubernetes/pki/etcd/ca.crt \
  --cert=/etc/kubernetes/pki/etcd/server.crt \
  --key=/etc/kubernetes/pki/etcd/server.key \
  --endpoints=https://10.120.174.14:2379,https://10.120.175.5:2379,https://10.120.175.36:2379 \
  del "" --prefix
```

Initialize the cluster (master)
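Because the cluster uses an external etcd, it can help to confirm that all three etcd members answer before running `kubeadm init`. The sketch below reuses the endpoints and certificate paths from the cleanup command above. The variable and function names are illustrative.

```bash
#!/usr/bin/env bash
# Sketch: check every external etcd member before running kubeadm init.
# Endpoints and certificate paths are the ones used in this guide; adjust as needed.
ETCD_ENDPOINTS="https://10.120.174.14:2379,https://10.120.175.5:2379,https://10.120.175.36:2379"
ETCD_PKI=/etc/kubernetes/pki/etcd

check_etcd_health() {
  # `endpoint health` probes each endpoint listed in --endpoints
  etcdctl --cacert="${ETCD_PKI}/ca.crt" \
    --cert="${ETCD_PKI}/server.crt" \
    --key="${ETCD_PKI}/server.key" \
    --endpoints="${ETCD_ENDPOINTS}" \
    endpoint health
}
```

To also see which member is the leader, swap `endpoint health` for `endpoint status --write-out=table`.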

`kubeadm config print init-defaults --component-configs KubeletConfiguration` prints the default configuration that cluster initialization uses.
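The printed defaults make a good starting point for kubeadm.yaml. The kubeadm.yaml here also contains a KubeProxyConfiguration, so a sketch of this step requests both component configs.

Once the file is edited, you can rehearse the init with `--dry-run`. The function names are illustrative.

```bash
#!/usr/bin/env bash
# Sketch: generate a starting kubeadm.yaml and rehearse the init before running it for real.

# Write the defaults, including the kubelet and kube-proxy component configs,
# to the file given as $1 (e.g. kubeadm.yaml).
print_kubeadm_defaults() {
  kubeadm config print init-defaults \
    --component-configs KubeletConfiguration,KubeProxyConfiguration > "$1"
}

# --dry-run makes kubeadm print what it would do instead of applying changes.
dry_run_init() {
  kubeadm init --config "$1" --dry-run
}
```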

kubeadm.yaml 文件内

apiVersion: kubeadm.k8s.io/v1beta3

bootstrapTokens:

- groups:

- system:bootstrappers:kubeadm:default-node-token

token: abcdef.0123456789abcdef

ttl: 24h0m0s

usages:

- signing

- authentication

kind: InitConfiguration

localAPIEndpoint:

advertiseAddress: 10.120.128.1 #master主 机 IP

bindPort: 6443

nodeRegistration:

criSocket: unix:///var/run/containerd/containerd.sock

imagePullPolicy: IfNotPresent

name: l-shahe-k8s-master1 #master主 机 名

taints: null

---

apiServer:

timeoutForControlPlane: 4m0s

apiVersion: kubeadm.k8s.io/v1beta3

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controlPlaneEndpoint: 10.120.102.9:6443 #LVS IP

controllerManager: {}

dns:

type: CoreDNS

etcd:

external:

endpoints:

- https://10.120.174.14:2379 #外 接 ETCD

- https://10.120.175.5:2379

- https://10.120.175.36:2379

caFile: /etc/kubernetes/pki/etcd/ca.crt #ETCD证 书

certFile: /etc/kubernetes/pki/apiserver-etcd-client.crt

keyFile: /etc/kubernetes/pki/apiserver-etcd-client.key

imageRepository: registry.aliyuncs.com/google_containers #阿 里 云 镜 像

kind: ClusterConfiguration

kubernetesVersion: 1.26.1

networking:

dnsDomain: cluster.local

serviceSubnet: 10.96.0.0/16 #services网 段

podSubnet: 172.21.10.0/16 #POD网 段 , 需 要 规 划 网 络

scheduler: {}

---

apiVersion: kubelet.config.k8s.io/v1beta1

kind: KubeletConfiguration

cgroupDriver: systemd

failSwapOn: false

---

apiVersion: kubeproxy.config.k8s.io/v1alpha1

kind: KubeProxyConfiguration

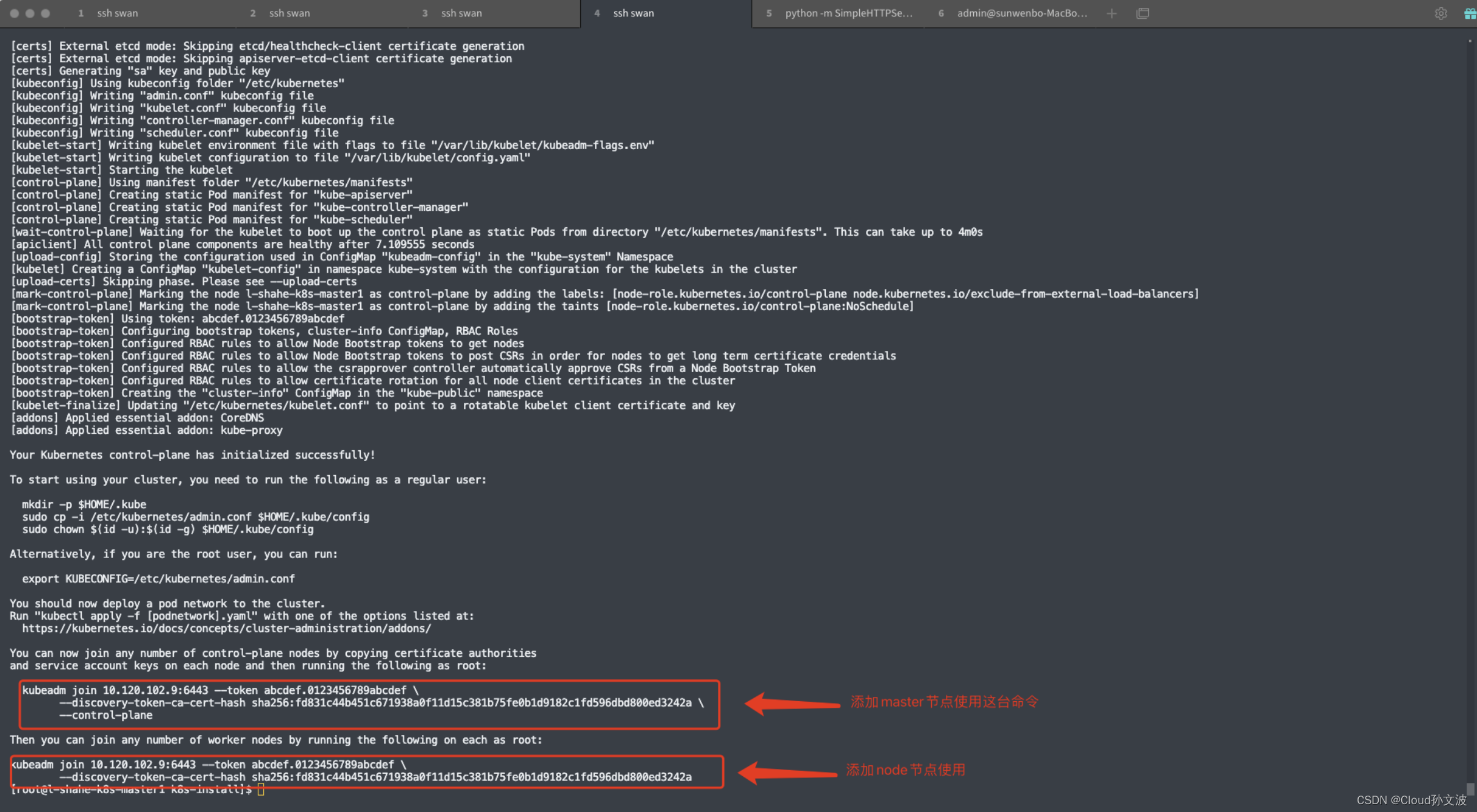

mode: ipvs 执行如下命令,完成初始化

kubeadm init --config kubeadm.yaml --upload-certs

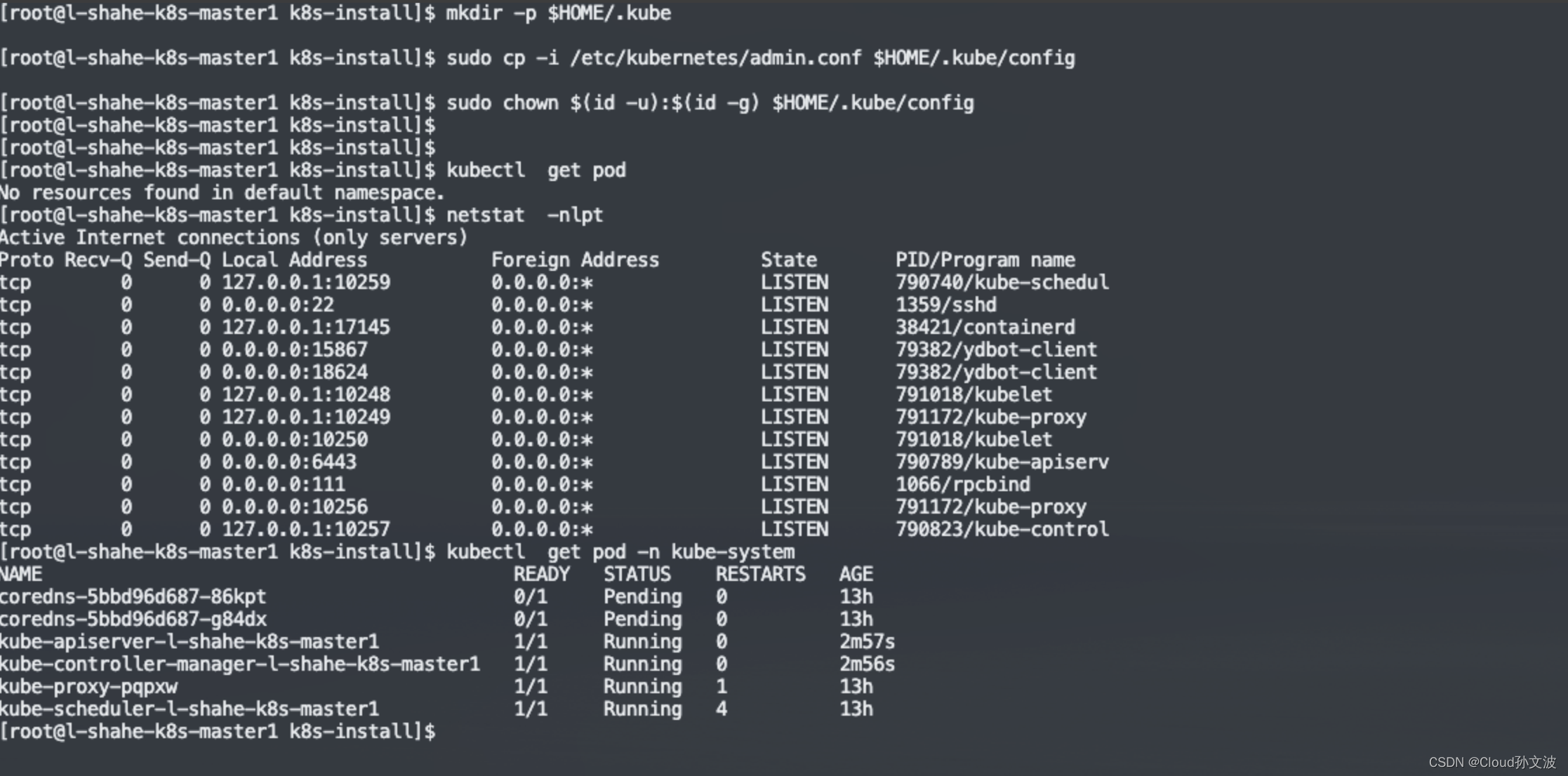
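The join command printed by `kubeadm init` embeds the bootstrap token, and that token's `ttl` in kubeadm.yaml is 24h. After it expires, running `kubeadm token create --print-join-command` on the master prints a fresh join command.

The sketch below shows the pieces separately. The CA public-key hash uses the openssl pipeline from the kubeadm documentation. The token check follows the bootstrap token format `[a-z0-9]{6}.[a-z0-9]{16}`. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: rebuild the pieces of a kubeadm join command on the master.

# SHA-256 of the cluster CA's public key, as expected by --discovery-token-ca-cert-hash.
ca_cert_hash() {
  local ca=${1:-/etc/kubernetes/pki/ca.crt}
  openssl x509 -pubkey -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# Bootstrap tokens look like <6 chars>.<16 chars>, lowercase letters and digits only.
valid_token() {
  [[ $1 =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]]
}

# Usage: join_cmd <apiserver-endpoint> <token> <ca-hash>
join_cmd() {
  valid_token "$2" || { echo "malformed token: $2" >&2; return 1; }
  echo "kubeadm join $1 --token $2 --discovery-token-ca-cert-hash sha256:$3"
}
```

For example, `join_cmd 10.120.102.9:6443 "$(kubeadm token create)" "$(ca_cert_hash)"` assembles a join command equivalent to the one used at the end of this guide.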

创建kubectl 配置文件

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

kubectl label node l-shahe-k8s-master1 node-role.kubernetes.io/control-plane-

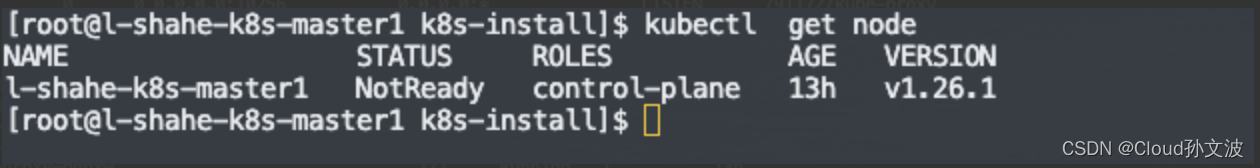

kubectl label node l-shahe-k8s-master1 node-role.kubernetes.io/master=备注:coredns Pending、节点NotReady 是因为没有安装CNI插件,下面步骤进行安装calico CNI讲述
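Before installing Calico, it is worth checking the address plan, because two pairs of ranges differ in how they are written:

- kubeadm.yaml uses podSubnet 172.21.10.0/16, while the Calico ipPool uses 172.21.0.0/16.
- kubeadm.yaml sets serviceSubnet 10.96.0.0/16, while the BGP configuration advertises serviceClusterIPs 10.96.0.0/12.

The helpers below are a hypothetical sketch for normalizing and comparing such CIDRs. They cover IPv4 only, use pure bash arithmetic, and do not validate their input.

```bash
#!/usr/bin/env bash
# Sketch: IPv4 CIDR helpers in pure bash for sanity-checking the address plan
# (kubeadm podSubnet/serviceSubnet vs. Calico ipPool and BGP serviceClusterIPs).

ip_to_int() {                 # "a.b.c.d" -> 32-bit integer
  local IFS=.
  local -a o=($1)
  echo $(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
}

int_to_ip() {                 # 32-bit integer -> "a.b.c.d"
  local n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

prefix_mask() {               # prefix length -> netmask as an integer
  echo $(( (0xFFFFFFFF << (32 - $1)) & 0xFFFFFFFF ))
}

cidr_network() {              # "172.21.10.0/16" -> "172.21.0.0/16"
  local ip=${1%/*} len=${1#*/} mask
  mask=$(prefix_mask "$len")
  echo "$(int_to_ip $(( $(ip_to_int "$ip") & mask )))/$len"
}

cidr_contains() {             # succeeds if CIDR $2 lies entirely inside CIDR $1
  local olen=${1#*/} ilen=${2#*/} mask
  (( ilen >= olen )) || return 1
  mask=$(prefix_mask "$olen")
  (( ($(ip_to_int "${1%/*}") & mask) == ($(ip_to_int "${2%/*}") & mask) ))
}

ippool_blocks() {             # "<pool-cidr> <blockSize>" -> addresses per block, number of blocks
  local len=${1#*/} bs=$2
  echo "$(( 1 << (32 - bs) )) addresses per block, $(( 1 << (bs - len) )) blocks"
}
```

With the values in this guide:

- `cidr_network 172.21.10.0/16` prints `172.21.0.0/16`, the same network as the Calico ipPool.
- `cidr_contains 10.96.0.0/12 10.96.0.0/16` succeeds, so the kubeadm serviceSubnet lies inside the advertised serviceClusterIPs range.
- `ippool_blocks 172.21.0.0/16 27` prints `32 addresses per block, 2048 blocks`.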

Install the Calico CNI

The installation package is release-v3.25.0.tgz from GitHub (here it is fetched from an internal file server).

Download and extract:

```shell
mkdir -p /opt/k8s-install/calico/ && cd /opt/k8s-install/calico/
wget 10.60.127.202:19999/k8s-1.26.1-image/release-v3.25.0.tgz
tar xf release-v3.25.0.tgz && cd /opt/k8s-install/calico/release-v3.25.0/images && source /root/.bash_profile
```

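Import the images into containerd's k8s.io namespace:

```shell
for i in ./*; do ctr -n k8s.io images import "$i"; done
```

Install the Calico operator and the Calico resources:

```shell
kubectl create -f tigera-operator.yaml   # no changes to the upstream manifest are needed
kubectl create -f custom-resources.yaml
kubectl create -f bgp-config.yaml
kubectl create -f bgp-peer.yaml
```

custom-resources.yaml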

```yaml
# This section includes base Calico installation configuration.
# For more information, see: https://projectcalico.docs.tigera.io/master/reference/installation/api#operator.tigera.io/v1.Installation
apiVersion: operator.tigera.io/v1
kind: Installation
metadata:
  name: default
spec:
  # Configures Calico networking.
  calicoNetwork:
    # Note: The ipPools section cannot be modified post-install.
    ipPools:
    - blockSize: 27   # size of the IP block pre-allocated to a node (/27 = 32 addresses)
      cidr: 172.21.0.0/16
      encapsulation: VXLANCrossSubnet
      natOutgoing: Enabled
      nodeSelector: all()
---
# This section configures the Calico API server.
# For more information, see: https://projectcalico.docs.tigera.io/master/reference/installation/api#operator.tigera.io/v1.APIServer
apiVersion: operator.tigera.io/v1
kind: APIServer
metadata:
  name: default
spec: {}
```

bgp-config.yaml

```yaml
apiVersion: projectcalico.org/v3
kind: BGPConfiguration
metadata:
  name: default
spec:
  logSeverityScreen: Info
  nodeToNodeMeshEnabled: false   # disable the global node-to-node mesh
  asNumber: 63400
  serviceClusterIPs:
  - cidr: 10.96.0.0/12           # kubeadm.yaml sets serviceSubnet 10.96.0.0/16, which lies inside this range
  listenPort: 178
  bindMode: NodeIP
  #communities:
  #  - name: bgp-large-community
  #    value: 63400:300:100
  #prefixAdvertisements:
  #  - cidr: 172.218.4.0/26
  #    communities:
  #      - bgp-large-community
  #      - 63400:120
```

bgp-peer.yaml

```yaml
apiVersion: projectcalico.org/v3
kind: BGPPeer
metadata:
  name: 10-120-128
spec:
  peerIP: '10.120.128.254'
  keepOriginalNextHop: true
  asNumber: 64531
  nodeSelector: rack == '10.120.128'
---
apiVersion: projectcalico.org/v3
kind: BGPPeer
metadata:
  name: 10-120-129
spec:
  peerIP: '10.120.129.254'
  keepOriginalNextHop: true
  asNumber: 64532
  nodeSelector: rack == '10.120.129'
```

Label the nodes with their rack. The value must match the `nodeSelector` of the corresponding BGPPeer:

```shell
kubectl label node l-shahe-k8s-master1 rack='10.120.128'
kubectl label node 10.120.129.1 rack='10.120.129'
kubectl label node 10.120.129.2 rack='10.120.129'
```

Important: set each node's AS number (run on the master). Here each node uses the same AS number as its rack's BGPPeer:

```shell
calicoctl patch node l-shahe-k8s-master1 -p '{"spec": {"bgp": {"asNumber": "64531"}}}'
calicoctl patch node 10.120.129.1 -p '{"spec": {"bgp": {"asNumber": "64532"}}}'
calicoctl patch node 10.120.129.2 -p '{"spec": {"bgp": {"asNumber": "64532"}}}'
```

Check the BGP session status:

```
[root@l-shahe-k8s-master1 calico]$ calicoctl node status
Calico process is running.

IPv4 BGP status
+----------------+---------------+-------+----------+-------------+
| PEER ADDRESS   | PEER TYPE     | STATE | SINCE    | INFO        |
+----------------+---------------+-------+----------+-------------+
| 10.120.128.254 | node specific | up    | 10:34:04 | Established |
+----------------+---------------+-------+----------+-------------+

IPv6 BGP status
No IPv6 peers found.
```

```
[root@10 ~]# calicoctl node status
Calico process is running.

IPv4 BGP status
+----------------+---------------+-------+----------+-------------+
| PEER ADDRESS   | PEER TYPE     | STATE | SINCE    | INFO        |
+----------------+---------------+-------+----------+-------------+
| 10.120.129.254 | node specific | up    | 10:48:16 | Established |
+----------------+---------------+-------+----------+-------------+

IPv6 BGP status
No IPv6 peers found.
```

Modify the containerd configuration file (by default `/etc/containerd/config.toml`):

```toml
# Excerpt: sandbox_image belongs to the [plugins."io.containerd.grpc.v1.cri"] section
sandbox_image = "harbor-sh.yidian-inc.com/kubernetes-1.26.1/pause:3.6"

[plugins."io.containerd.grpc.v1.cri".registry]
  [plugins."io.containerd.grpc.v1.cri".registry.mirrors]
    [plugins."io.containerd.grpc.v1.cri".registry.mirrors."harbor-sh.myharbor"]
      endpoint = ["https://harbor-sh.myharbor.com"]
  [plugins."io.containerd.grpc.v1.cri".registry.configs]
    [plugins."io.containerd.grpc.v1.cri".registry.configs."harbor-sh.myharbor".tls]
      insecure_skip_verify = false
      ca_file = "/etc/containerd/cert/harbor-sh-ca.crt"
    [plugins."io.containerd.grpc.v1.cri".registry.configs."harbor-sh.myharbor".auth]
      username = "admin"
      password = "OpsSre"

[plugins."io.containerd.grpc.v1.cri".x509_key_pair_streaming]
  tls_cert_file = ""
  tls_key_file = ""
```

Join nodes to the cluster

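On each node, first complete these steps:

- kernel upgrade
- system configuration
- containerd deployment
- crictl installation
- installation of kubeadm, kubelet and kubectl

kubelet must be started with `--container-runtime-endpoint=unix:///var/run/containerd/containerd.sock`.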
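Before pointing kubelet at that socket, a quick preflight can confirm three things:

- containerd is running.
- containerd answers on the CRI socket.
- The `sandbox_image` setting from the containerd configuration step has taken effect.

A sketch follows. It assumes crictl is installed as described earlier, and it uses `containerd config dump` to print the effective configuration. The function name is illustrative.

```bash
#!/usr/bin/env bash
# Sketch: preflight checks on a node before kubelet and kubeadm join rely on containerd.
CRI_SOCK=unix:///var/run/containerd/containerd.sock
# Assumption: the pause image set in containerd's config.toml in this guide.
PAUSE_IMAGE="harbor-sh.yidian-inc.com/kubernetes-1.26.1/pause:3.6"

preflight_node() {
  if ! systemctl is-active --quiet containerd; then
    echo "containerd is not active" >&2
    return 1
  fi
  if ! crictl --runtime-endpoint "$CRI_SOCK" info >/dev/null; then
    echo "no CRI response on $CRI_SOCK" >&2
    return 1
  fi
  # `containerd config dump` prints the effective (merged) configuration
  if ! containerd config dump | grep -qF "sandbox_image = \"$PAUSE_IMAGE\""; then
    echo "sandbox_image is not $PAUSE_IMAGE" >&2
    return 1
  fi
  echo "containerd is ready on $CRI_SOCK"
}
```

As an alternative to editing the unit file, `kubeadm join` also accepts `--cri-socket unix:///var/run/containerd/containerd.sock` to select the runtime explicitly.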

```shell
vim /usr/lib/systemd/system/kubelet.service
```

```ini
[Unit]
Description=kubelet: The Kubernetes Node Agent
Documentation=https://kubernetes.io/docs/
Wants=network-online.target
After=network-online.target

[Service]
# --container-runtime=remote is deprecated in 1.26; the flag is removed in 1.27
ExecStart=/usr/bin/kubelet --address=127.0.0.1 --pod-manifest-path=/etc/kubernetes/manifests --container-runtime=remote --container-runtime-endpoint=unix:///var/run/containerd/containerd.sock
Restart=always
StartLimitInterval=0
RestartSec=10

[Install]
WantedBy=multi-user.target
```

Restart kubelet:

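```shell
# restart containerd first: `systemctl status` exits non-zero when kubelet is not active,
# which would stop the && chain before any later command
systemctl daemon-reload && systemctl restart containerd && systemctl restart kubelet && systemctl status kubelet
```

Join the node to the cluster: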

```shell
kubeadm join 10.120.102.9:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:fd831c44b451c671938a0f11d15c381b75fe0b1d9182c1fd596dbd800ed3242a
```

Because Calico runs in ToR (top-of-rack) BGP mode, the Calico AS number must be patched for every newly joined node.
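This step repeats for every new node, so it can be scripted. The sketch below is hypothetical and rests on two assumptions:

- A node's rack label is the first three octets of its IP.
- Each rack's AS number equals the `asNumber` of that rack's BGPPeer in bgp-peer.yaml (64531 for 10.120.128, 64532 for 10.120.129).

It prints the `kubectl label` and `calicoctl patch` commands instead of running them. It also includes a checker that reads `calicoctl node status` output and succeeds only if every IPv4 peer is Established.

```bash
#!/usr/bin/env bash
# Sketch: wire a newly joined node into the ToR BGP setup and verify its sessions.
# Assumption: rack = first three octets of the node IP; AS numbers from bgp-peer.yaml.

rack_of() {               # "10.120.129.1" -> "10.120.129"
  echo "${1%.*}"
}

rack_as() {               # rack -> AS number of that rack's BGPPeer
  case $1 in
    10.120.128) echo 64531 ;;
    10.120.129) echo 64532 ;;
    *) return 1 ;;
  esac
}

# Usage: node_bgp_cmds <node-name> <node-ip>; prints commands, does not run them.
node_bgp_cmds() {
  local node=$1 rack as
  rack=$(rack_of "$2")
  if ! as=$(rack_as "$rack"); then
    echo "no AS number known for rack $rack" >&2
    return 1
  fi
  echo "kubectl label node $node rack='$rack' --overwrite"
  echo "calicoctl patch node $node -p '{\"spec\": {\"bgp\": {\"asNumber\": \"$as\"}}}'"
}

# Reads `calicoctl node status` on stdin; succeeds only if there is at least one
# IPv4 peer and every IPv4 peer row reports Established.
bgp_all_established() {
  awk -F'|' '
    /^IPv6 BGP status/ { v4 = 0 }
    /^IPv4 BGP status/ { v4 = 1; next }
    v4 && NF >= 6 && $2 !~ /PEER ADDRESS/ {
      peers++
      info = $6; gsub(/ /, "", info)
      if (info != "Established") bad++
    }
    END { exit (peers > 0 && bad == 0) ? 0 : 1 }'
}
```

Suppose a hypothetical new node 10.120.129.3 joins. On the master, `node_bgp_cmds 10.120.129.3 10.120.129.3 | sh` applies its label and AS number. On the node itself, `calicoctl node status | bgp_all_established && echo "BGP OK"` confirms the session with 10.120.129.254 came up.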