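Table of contents

- 1. Overview of the principle

- 2. Experiments

- 2.1 Verifying that the results match the OpenCV library function

- 2.2 Comparison with the bilateral filter

- 2.3 Guided filter application: feathering

- 2.3 Guided filter application: image enhancement

- 2.4 Grayscale guide versus per-channel guide

- 2.5 Effect of different parameter settings

- 3. References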

Guided Filter

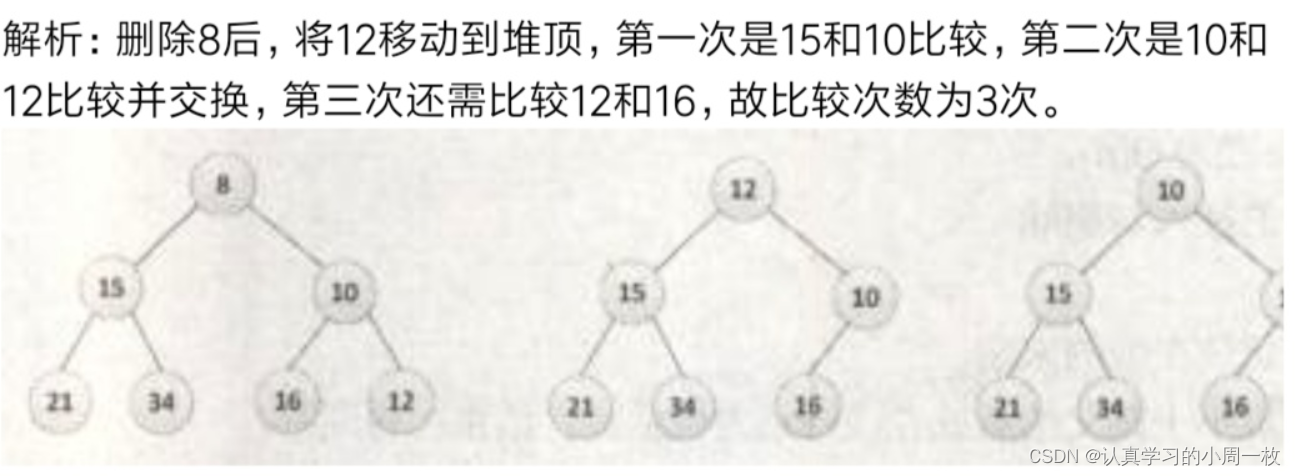

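1. Overview of the principle

The guided filter is commonly counted among the three major edge-preserving smoothing algorithms (the bilateral filter, compared in section 2.2, is another).

For an introduction to the principle, see the article 图像处理基础(一)引导滤波 (Image Processing Basics (1): Guided Filter).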
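As a compact reminder of what the code in section 2.1 computes, the single-channel guided filter can be summarized as follows. The notation is an assumption added for orientation (guide $I$, input $p$, output $q$, local window $\omega_k$ with $|\omega|$ pixels) and is not quoted from the linked article.

```latex
\begin{aligned}
q_i &= a_k I_i + b_k, \qquad \forall i \in \omega_k \\
a_k &= \frac{\frac{1}{|\omega|}\sum_{i \in \omega_k} I_i p_i - \mu_k \bar{p}_k}{\sigma_k^2 + \epsilon} \\
b_k &= \bar{p}_k - a_k \mu_k \\
q_i &= \bar{a}_i I_i + \bar{b}_i
\end{aligned}
```

Here $\mu_k$ and $\sigma_k^2$ are the mean and variance of $I$ in $\omega_k$, $\bar{p}_k$ is the mean of $p$ in $\omega_k$, and $\bar{a}_i$, $\bar{b}_i$ are the averages of $a_k$, $b_k$ over all windows that contain pixel $i$. In guided_filter these correspond to cov_Ip / (var_I + eps), mean_p - a * mean_I, and mean_a * I + mean_b.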

2. Experiments

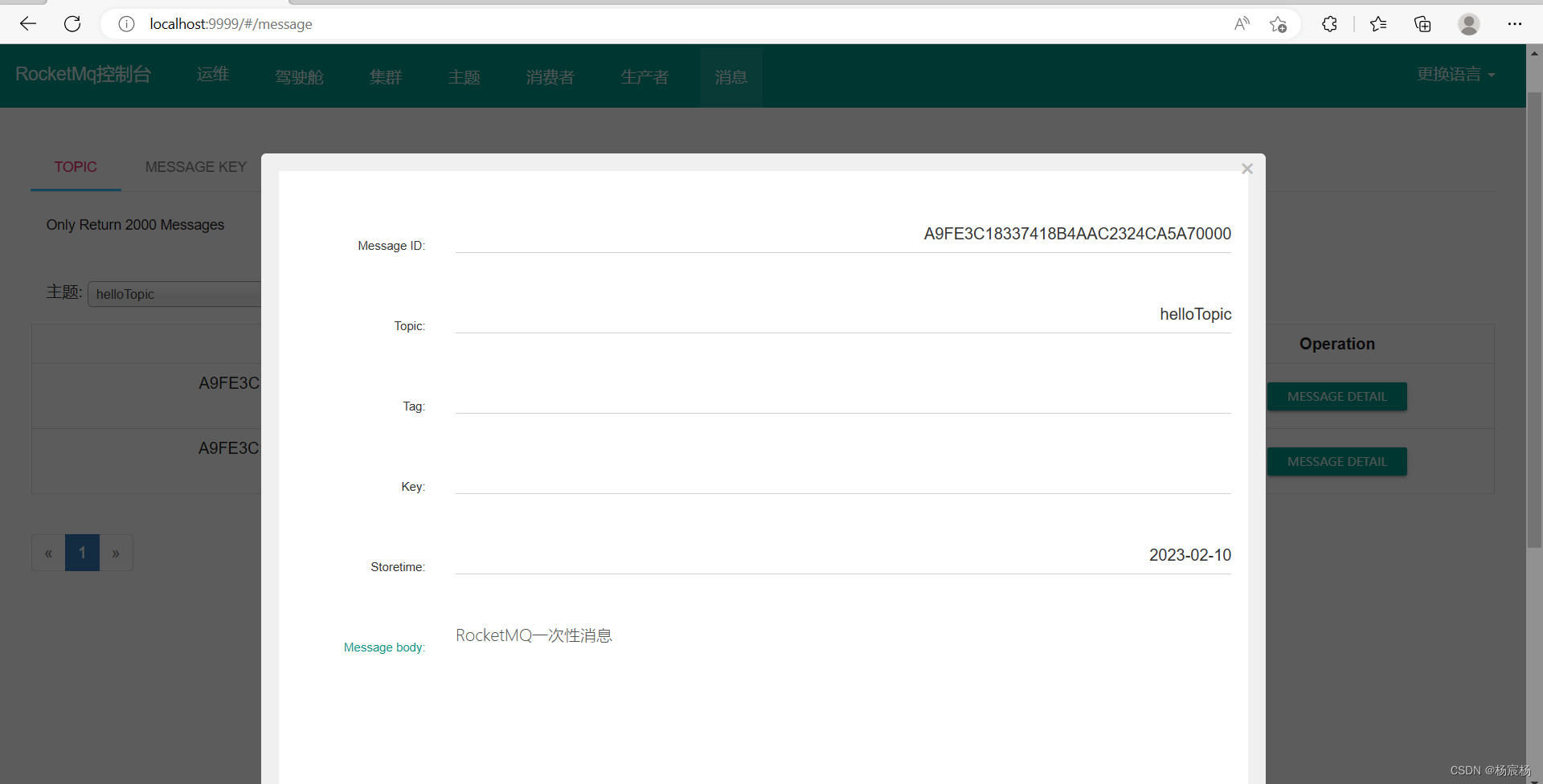

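2.1 Verifying that the results match the OpenCV library function

- When the guide image is single-channel, use guided_filter(I, p, win_size, eps).

- When the guide image has three channels, use multi_dim_guide_filter(I, p, r, eps).

- If I and p are normalized to the range 0-1, set eps to a number smaller than 1, for example 0.2. If they are not normalized, multiply eps by 255 * 255.

- I and p should be floating point.

- The input parameter r of cv2.ximgproc.guidedFilter is window_size // 2.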
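The two conventions in the list above (eps scaling and radius versus window size) can be checked with a few lines of plain Python. This is only a sketch: scale_eps, window_to_radius and linear_coeff are hypothetical helper names, and the patch values are made up.

```python
def scale_eps(eps_unit, max_value=255.0):
    """Convert an eps chosen for [0, 1] data to the equivalent for [0, max_value] data."""
    return eps_unit * max_value * max_value


def window_to_radius(win_size):
    """Radius to pass to cv2.ximgproc.guidedFilter for a given box window size."""
    return win_size // 2


def linear_coeff(I, p, eps):
    """Coefficient a = cov(I, p) / (var(I) + eps) for one local patch."""
    n = len(I)
    mean_I = sum(I) / n
    mean_p = sum(p) / n
    var_I = sum(x * x for x in I) / n - mean_I * mean_I
    cov_Ip = sum(x * y for x, y in zip(I, p)) / n - mean_I * mean_p
    return cov_Ip / (var_I + eps)


patch_unit = [0.1, 0.2, 0.4, 0.8]             # one patch with values in [0, 1]
patch_255 = [v * 255.0 for v in patch_unit]   # the same patch in [0, 255]

a_unit = linear_coeff(patch_unit, patch_unit, 0.002)
a_255 = linear_coeff(patch_255, patch_255, scale_eps(0.002))
print(a_unit, a_255)         # the two coefficients agree up to rounding
print(window_to_radius(7))   # 3
```

Variance and covariance both grow by a factor of 255 * 255 when the data is rescaled, so eps has to grow by the same factor to keep the coefficient unchanged.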

Test image

Code for guided_filter and multi_dim_guide_filter:

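import cv2
import numpy as np
from matplotlib import pyplot as plt


def guided_filter(I, p, win_size, eps):
    '''
    Guided filter with a single-channel guide.

    - guidance image: I (should be a gray-scale/single-channel image)
    - filtering input image: p (should be a gray-scale/single-channel image)
    - box-filter window size: win_size (this is the kernel size, not the radius)
    - regularization parameter: eps

    I and p must be floating point. If multi-channel arrays are passed,
    cv2.blur and the arithmetic below act on each channel independently.
    '''
    # Local means of I, p, I*I and I*p over each window.
    mean_I = cv2.blur(I, (win_size, win_size))
    mean_p = cv2.blur(p, (win_size, win_size))
    mean_II = cv2.blur(I * I, (win_size, win_size))
    mean_Ip = cv2.blur(I * p, (win_size, win_size))

    # Local variance of I and covariance of (I, p).
    var_I = mean_II - mean_I * mean_I
    cov_Ip = mean_Ip - mean_I * mean_p
    # print(np.allclose(var_I, cov_Ip))

    # Coefficients of the local linear model q = a * I + b.
    a = cov_Ip / (var_I + eps)
    b = mean_p - a * mean_I

    # Average the coefficients over all windows covering each pixel.
    mean_a = cv2.blur(a, (win_size, win_size))
    mean_b = cv2.blur(b, (win_size, win_size))

    q = mean_a * I + mean_b
    # print(mean_II.dtype, cov_Ip.dtype, b.dtype, mean_a.dtype, I.dtype, q.dtype)
    return q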

def multi_dim_guide_filter(I, p, r, eps):
    """
    I: three-channel guide image.
    p: single-channel or multi-channel input image.
    r: side length of the box-filter window (passed to cv2.blur).
    eps: regularization parameter.
    """
    out = np.zeros_like(p)
    if len(p.shape) == 2:
        out = multi_dim_guide_filter_single(I, p, r, eps)
    else:
        for c in range(p.shape[2]):
            out[..., c] = multi_dim_guide_filter_single(I, p[..., c], r, eps)
    return out


def multi_dim_guide_filter_single(I, p, r, eps):
    """
    I: guide image with multiple channels, shape (H, W, C).
    p: single-channel input, shape (H, W).
    r: side length of the mean-filter kernel.
    eps: regularization parameter.
    """
    # if len(p.shape) == 2:
    #     p = p[..., None]
    ksize = (r, r)

    # The suffixes r, g, b simply label channels 0, 1, 2 of the guide.
    mean_I_r = cv2.blur(I[..., 0], ksize)
    mean_I_g = cv2.blur(I[..., 1], ksize)
    mean_I_b = cv2.blur(I[..., 2], ksize)

    # Variance of I in each local patch: the matrix Sigma in Eqn (14).
    # Note the variance in each local patch is a 3x3 symmetric matrix:
    #           rr, rg, rb
    #   Sigma = rg, gg, gb
    #           rb, gb, bb
    # eps is added on the diagonal, so these are the entries of Sigma + eps * Identity.
    var_I_rr = cv2.blur(I[..., 0] * I[..., 0], ksize) - mean_I_r * mean_I_r + eps
    var_I_rg = cv2.blur(I[..., 0] * I[..., 1], ksize) - mean_I_r * mean_I_g
    var_I_rb = cv2.blur(I[..., 0] * I[..., 2], ksize) - mean_I_r * mean_I_b
    var_I_gg = cv2.blur(I[..., 1] * I[..., 1], ksize) - mean_I_g * mean_I_g + eps
    var_I_gb = cv2.blur(I[..., 1] * I[..., 2], ksize) - mean_I_g * mean_I_b
    var_I_bb = cv2.blur(I[..., 2] * I[..., 2], ksize) - mean_I_b * mean_I_b + eps

    # Inverse of (Sigma + eps * Identity), computed per pixel from cofactors.
    invrr = var_I_gg * var_I_bb - var_I_gb * var_I_gb
    invrg = var_I_gb * var_I_rb - var_I_rg * var_I_bb
    invrb = var_I_rg * var_I_gb - var_I_gg * var_I_rb
    invgg = var_I_rr * var_I_bb - var_I_rb * var_I_rb
    invgb = var_I_rb * var_I_rg - var_I_rr * var_I_gb
    invbb = var_I_rr * var_I_gg - var_I_rg * var_I_rg

    # Determinant by expansion along the first row.
    covDet = invrr * var_I_rr + invrg * var_I_rg + invrb * var_I_rb

    invrr /= covDet
    invrg /= covDet
    invrb /= covDet
    invgg /= covDet
    invgb /= covDet
    invbb /= covDet

    # Process p.
    mean_p = cv2.blur(p, ksize)
    mean_Ip_r = cv2.blur(I[..., 0] * p, ksize)
    mean_Ip_g = cv2.blur(I[..., 1] * p, ksize)
    mean_Ip_b = cv2.blur(I[..., 2] * p, ksize)

    # Covariance of (I, p) in each local patch.
    cov_Ip_r = mean_Ip_r - mean_I_r * mean_p
    cov_Ip_g = mean_Ip_g - mean_I_g * mean_p
    cov_Ip_b = mean_Ip_b - mean_I_b * mean_p

    # a = inverse(Sigma + eps * Identity) @ cov_Ip, one coefficient per guide channel.
    a_r = invrr * cov_Ip_r + invrg * cov_Ip_g + invrb * cov_Ip_b
    a_g = invrg * cov_Ip_r + invgg * cov_Ip_g + invgb * cov_Ip_b
    a_b = invrb * cov_Ip_r + invgb * cov_Ip_g + invbb * cov_Ip_b

    b = mean_p - a_r * mean_I_r - a_g * mean_I_g - a_b * mean_I_b

    return (cv2.blur(a_r, ksize) * I[..., 0]
            + cv2.blur(a_g, ksize) * I[..., 1]
            + cv2.blur(a_b, ksize) * I[..., 2]
            + cv2.blur(b, ksize))
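To see what the single-channel formulas do without loading an image, they can be replayed on a 1-D signal. The sketch below is an illustration only, not the author's code: box_mean_1d and guided_filter_1d are hypothetical names, NumPy replaces cv2.blur, and the window size is assumed to be odd.

```python
import numpy as np


def box_mean_1d(x, win_size):
    """Mean over a sliding window of odd length win_size, with reflected borders."""
    r = win_size // 2
    padded = np.pad(x, r, mode="reflect")
    kernel = np.ones(win_size) / win_size
    return np.convolve(padded, kernel, mode="valid")


def guided_filter_1d(I, p, win_size, eps):
    """1-D analogue of guided_filter: same formulas, box_mean_1d instead of cv2.blur."""
    mean_I = box_mean_1d(I, win_size)
    mean_p = box_mean_1d(p, win_size)
    var_I = box_mean_1d(I * I, win_size) - mean_I * mean_I
    cov_Ip = box_mean_1d(I * p, win_size) - mean_I * mean_p
    a = cov_Ip / (var_I + eps)
    b = mean_p - a * mean_I
    return box_mean_1d(a, win_size) * I + box_mean_1d(b, win_size)


step = np.concatenate([np.zeros(20), np.ones(20)])  # ideal step edge
q_sharp = guided_filter_1d(step, step, 7, 1e-4)     # small eps: the edge is kept
q_blur = guided_filter_1d(step, step, 7, 10.0)      # large eps: close to a plain blur

print(q_sharp[18:22].round(3))
print(q_blur[18:22].round(3))
```

With a small eps the coefficient a stays near 1 wherever the window contains the edge, so the step survives; with a large eps a drops toward 0 and the output approaches a twice-averaged signal.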

Experiment code:

def compare_1_3channel(im, win_size, eps):
    """
    Filter all channels in one call and channel by channel; the results are identical.
    """
    out1 = guided_filter(im, im, win_size, eps)
    out2 = np.zeros_like(out1)
    out2[..., 0] = guided_filter(im[..., 0], im[..., 0], win_size, eps)
    out2[..., 1] = guided_filter(im[..., 1], im[..., 1], win_size, eps)
    out2[..., 2] = guided_filter(im[..., 2], im[..., 2], win_size, eps)
    return out1, out2


if __name__ == "__main__":
    file = r'D:\code\denoise\denoise_video\guide_filter_image\dd.png'
    kernel_size = 7
    r = kernel_size // 2
    eps = 0.002

    img = cv2.imread(file, 1)
    out1, out2 = compare_1_3channel(img, kernel_size, (eps * 255 * 255))
    # This result is wrong: uint8 * uint8 is still stored as uint8 and overflows.
    cv2.imwrite(file[:-4] + 'out1.png', out1)

    # out2.png, out3.png and out4.png are essentially identical.
    img = img.astype(np.float32)  # must be converted to float
    out1, out2 = compare_1_3channel(img, kernel_size, (eps * 255 * 255))
    cv2.imwrite(file[:-4] + 'out2.png', out2)

    # OpenCV takes the radius r, not the window size.
    out1[..., 0] = cv2.ximgproc.guidedFilter(img[..., 0], img[..., 0], r, (eps * 255 * 255))
    out1[..., 1] = cv2.ximgproc.guidedFilter(img[..., 1][..., None], img[..., 1][..., None], r, (eps * 255 * 255))
    out1[..., 2] = cv2.ximgproc.guidedFilter(img[..., 2][..., None], img[..., 2][..., None], r, (eps * 255 * 255))
    print('tt : ', out1.min(), out1.max())
    out4 = np.clip(out1 * 1, 0, 255).astype(np.uint8)
    cv2.imwrite(file[:-4] + 'out4.png', out4)

    img = img / 255
    img = img.astype(np.float32)
    out1, out2 = compare_1_3channel(img, kernel_size, (eps))  # note how eps differs between the 0-1 and 0-255 ranges
    out3 = np.clip(out1 * 255, 0, 255).astype(np.uint8)
    cv2.imwrite(file[:-4] + 'out3.png', out3)

    # out5.png and out6.png are identical; the grayscale image is the guide.
    # Note the difference between the radius and kernel_size.
    guide = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    out1 = cv2.ximgproc.guidedFilter(guide, img, r, (eps))
    out5 = np.clip(out1 * 255, 0, 255).astype(np.uint8)
    cv2.imwrite(file[:-4] + 'out5.png', out5)

    out2[..., 0] = guided_filter(guide, img[..., 0], kernel_size, eps)
    out2[..., 1] = guided_filter(guide, img[..., 1], kernel_size, eps)
    out2[..., 2] = guided_filter(guide, img[..., 2], kernel_size, eps)
    out6 = np.clip(out2 * 255, 0, 255).astype(np.uint8)
    cv2.imwrite(file[:-4] + 'out6.png', out6)

    # out1 and out2 were overwritten above, so the first two panels show
    # their latest values (float images in the 0-1 range).
    plt.figure(figsize=(9, 14))
    plt.subplot(231), plt.axis('off'), plt.title("guidedFilter error")
    plt.imshow(cv2.cvtColor(out1, cv2.COLOR_BGR2RGB))
    plt.subplot(232), plt.axis('off'), plt.title("cv2.guidedFilter")
    plt.imshow(cv2.cvtColor(out2, cv2.COLOR_BGR2RGB))
    plt.subplot(233), plt.axis('off'), plt.title("cv2.guidedFilter")
    plt.imshow(cv2.cvtColor(out3, cv2.COLOR_BGR2RGB))
    plt.subplot(234), plt.axis('off'), plt.title("cv2.guidedFilter")
    plt.imshow(cv2.cvtColor(out4, cv2.COLOR_BGR2RGB))
    plt.subplot(235), plt.axis('off'), plt.title("cv2.guidedFilter")
    plt.imshow(cv2.cvtColor(out5, cv2.COLOR_BGR2RGB))
    plt.subplot(236), plt.axis('off'), plt.title("cv2.guidedFilter")
    plt.imshow(cv2.cvtColor(out6, cv2.COLOR_BGR2RGB))
    plt.tight_layout()
    plt.show()
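The uint8 pitfall behind the wrong out1.png can be reproduced in isolation. The pixel values below are made up for illustration.

```python
import numpy as np

pixels_u8 = np.array([10, 100, 200], dtype=np.uint8)

# uint8 * uint8 stays uint8, so products wrap around modulo 256.
squared_u8 = pixels_u8 * pixels_u8

# Converting to float first keeps the true values.
pixels_f32 = pixels_u8.astype(np.float32)
squared_f32 = pixels_f32 * pixels_f32

print(squared_u8.dtype, squared_u8)    # wrapped values: 100, 16, 64
print(squared_f32.dtype, squared_f32)  # true values: 100, 10000, 40000
```

Because guided_filter computes I * I and I * p before any division, the wrap-around corrupts the variance and covariance, which is why the input has to be cast to float32 first.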

Output

2.2 Comparison with the bilateral filter

Subjectively, the guided filter gives the better result.

The complete code is as follows:

if __name__ == '__main__':
    file = r'D:\code\denoise\denoise_video\guide_filter_image\dd.png'
    kernel_size = 7
    r = kernel_size // 2
    eps1 = 0.004 / 2
    eps2 = 0.002 / 4

    img = cv2.imread(file, 1)
    img = img.astype(np.float32)  # must be converted to float

    # Guided filter, each channel guided by itself.
    out1 = guided_filter(img, img, kernel_size, eps1 * 255 * 255)
    # Bilateral filter; the same value is used for sigmaColor and sigmaSpace.
    out2 = cv2.bilateralFilter(img, kernel_size, eps2 * 255 * 255, eps2 * 255 * 255)

    out1 = np.clip(out1, 0, 255).astype(np.uint8)
    out2 = np.clip(out2, 0, 255).astype(np.uint8)
    cv2.imwrite(file[:-4] + 'guide.png', out1)
    cv2.imwrite(file[:-4] + 'bi.png', out2)

    cv2.imshow("guide", out1)
    cv2.imshow("bi", out2)
    cv2.waitKey(0)

2.3 Guided filter application: feathering

Test images

Experiment code:

# Guided filter application: feathering
def run_feathering():
    file_I = r'D:\code\denoise\denoise_video\guide_filter_image\apply\c.png'
    file_mask = r'D:\code\denoise\denoise_video\guide_filter_image\apply\d.png'

    I = cv2.imread(file_I, 1)
    I_gray = cv2.cvtColor(I, cv2.COLOR_BGR2GRAY)
    mask = cv2.imread(file_mask, 0)

    kernel_size = 20
    r = kernel_size // 2
    eps1 = 0.000008 / 2

    I = I.astype(np.float32)
    I_gray = I_gray.astype(np.float32)
    mask = mask.astype(np.float32)  # must be converted to float

    # Color guide, OpenCV implementation.
    out1 = cv2.ximgproc.guidedFilter(I, mask, r, (eps1 * 255 * 255))
    out1 = np.clip(out1, 0, 255).astype(np.uint8)
    cv2.imwrite(file_mask[:-4] + 'guide.png', out1)

    # Grayscale guide, OpenCV implementation.
    out2 = cv2.ximgproc.guidedFilter(I_gray, mask, r, (eps1 * 255 * 255))
    out2 = np.clip(out2, 0, 255).astype(np.uint8)
    cv2.imwrite(file_mask[:-4] + 'guide2.png', out2)

    # Grayscale guide, guided_filter from section 2.1.
    out3 = guided_filter(I_gray, mask, kernel_size, eps1 * 255 * 255)
    out3 = np.clip(out3, 0, 255).astype(np.uint8)
    cv2.imwrite(file_mask[:-4] + 'guide3.png', out3)

    print(I.shape, mask.shape)

    # Color guide, multi_dim_guide_filter from section 2.1.
    out4 = multi_dim_guide_filter(I, mask, kernel_size, eps1 * 255 * 255)
    out4 = np.clip(out4, 0, 255).astype(np.uint8)
    cv2.imwrite(file_mask[:-4] + 'guide4.png', out4)

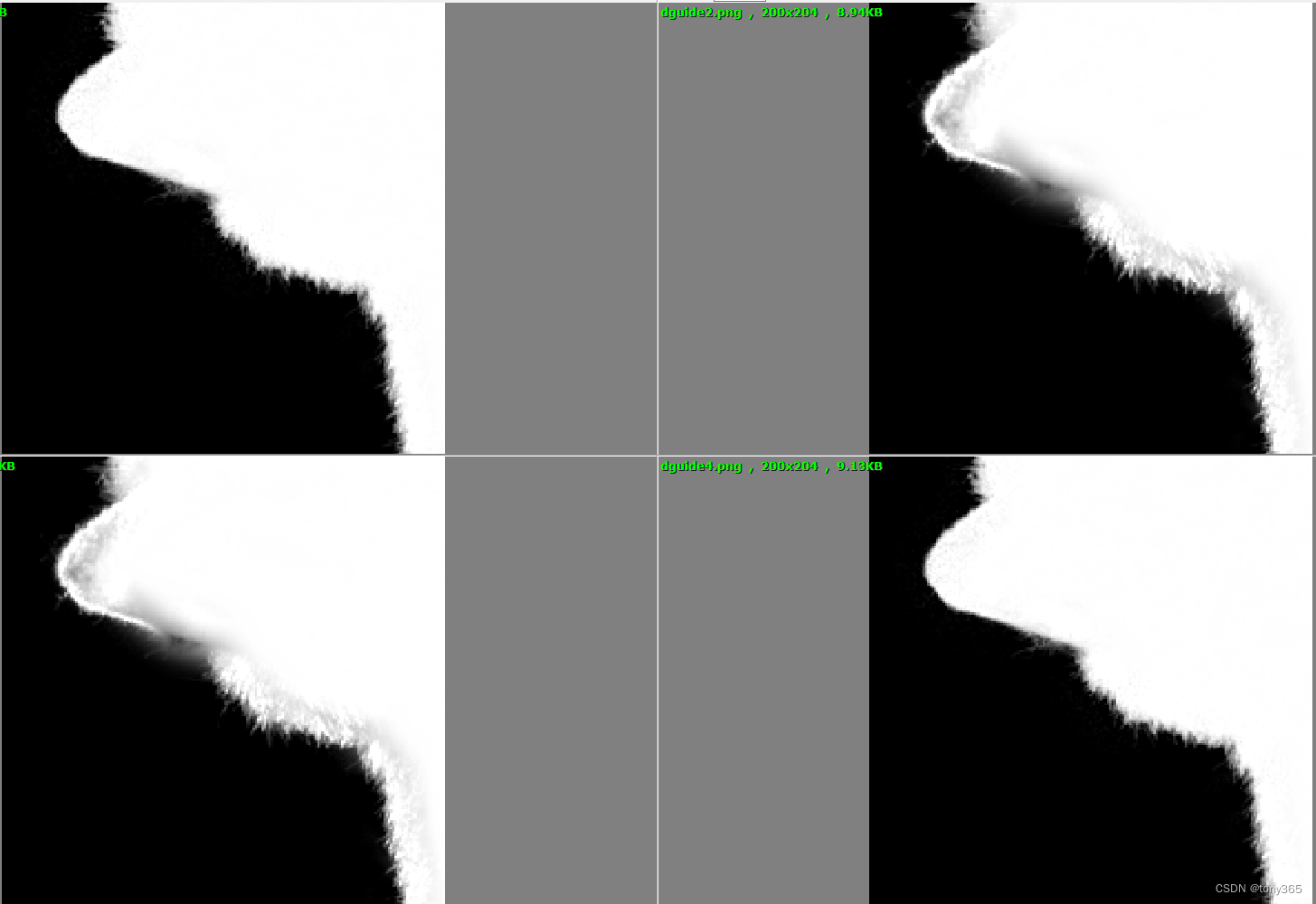

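out1: color guide image, OpenCV library

out2: grayscale guide image, OpenCV library

out3: grayscale guide image, guided_filter

out4: color guide image, multi_dim_guide_filter

Result: out1 and out4 are nearly identical and look good. out2 and out3 are identical to each other, but the result has problems.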
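One plausible reason for the weaker grayscale-guided result (an assumption, not verified on the images used here) is that two regions can differ clearly in color yet map to almost the same gray level, so the grayscale guide carries no edge at that boundary. The sketch below uses the gray conversion weights documented for OpenCV (0.299 R + 0.587 G + 0.114 B); the pixel values and helper names are made up for illustration.

```python
def bgr_to_gray(b, g, r):
    """Gray level from the documented conversion weights."""
    return 0.114 * b + 0.587 * g + 0.299 * r


def variance(values):
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


# Hypothetical patch straddling an edge between a red and a green region, as (B, G, R).
red = (0.0, 0.0, 100.0)
green = (0.0, 100.0 * 0.299 / 0.587, 0.0)   # chosen so both colors share one gray level
patch = [red, red, green, green]

gray_var = variance([bgr_to_gray(*px) for px in patch])
red_channel_var = variance([px[2] for px in patch])

print(gray_var)          # close to 0: the gray guide sees a flat patch
print(red_channel_var)   # 2500.0: the color guide still sees the edge
```

When the guide variance in a window is close to zero, the coefficient a = cov / (var + eps) is close to zero as well, and the output there is just a local average of the mask, so the edge is blurred rather than followed.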

2.3 Guided filter application: image enhancement

Images

The guided filter result is slightly better.

Experiment code:

if __name__ == '__main__':
    file = r'D:\code\denoise\denoise_video\guide_filter_image\apply\e.png'
    I = cv2.imread(file, 1)
    I = I.astype(np.float32)
    p = I

    kernel_size = 20
    r = kernel_size // 2
    eps1 = 0.008 / 2
    eps2 = 0.002 / 6

    out0 = cv2.bilateralFilter(p, kernel_size, eps2 * 255 * 255, eps2 * 255 * 255)  # bilateral filter
    out1 = multi_dim_guide_filter(I, p, kernel_size, (eps1 * 255 * 255))  # multi-channel guide
    out2 = guided_filter(I, p, kernel_size, (eps1 * 255 * 255))  # each channel guided by itself
    out3 = cv2.ximgproc.guidedFilter(I, p, r, (eps1 * 255 * 255))  # multi-channel guide

    # Enhancement: keep the smoothed base layer and double the detail layer (I - base).
    out4 = (I - out0) * 2 + out0
    out5 = (I - out1) * 2 + out1
    out6 = (I - out2) * 2 + out2
    out7 = (I - out3) * 2 + out3

    out0 = np.clip(out0, 0, 255).astype(np.uint8)
    out1 = np.clip(out1, 0, 255).astype(np.uint8)
    out2 = np.clip(out2, 0, 255).astype(np.uint8)
    out3 = np.clip(out3, 0, 255).astype(np.uint8)  # out3 should match out1
    out4 = np.clip(out4, 0, 255).astype(np.uint8)
    out5 = np.clip(out5, 0, 255).astype(np.uint8)
    out6 = np.clip(out6, 0, 255).astype(np.uint8)
    out7 = np.clip(out7, 0, 255).astype(np.uint8)

    cv2.imwrite(file[:-4] + '0.png', out0)
    cv2.imwrite(file[:-4] + '1.png', out1)
    cv2.imwrite(file[:-4] + '2.png', out2)
    cv2.imwrite(file[:-4] + '3.png', out3)
    cv2.imwrite(file[:-4] + '4.png', out4)
    cv2.imwrite(file[:-4] + '5.png', out5)
    cv2.imwrite(file[:-4] + '6.png', out6)
    cv2.imwrite(file[:-4] + '7.png', out7)
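The enhancement step in the code above, (I - out) * 2 + out, treats the filtered image as a base layer and amplifies the residual detail. A minimal sketch of that arithmetic, with a hypothetical helper name boost_detail and made-up pixel values:

```python
import numpy as np


def boost_detail(image, base, gain=2.0):
    """Return base + gain * (image - base), clipped to the 8-bit range."""
    detail = image - base
    return np.clip(base + gain * detail, 0, 255)


image = np.array([100.0, 120.0, 90.0, 250.0], dtype=np.float32)
base = np.array([100.0, 110.0, 100.0, 200.0], dtype=np.float32)  # stand-in for a smoothed image

enhanced = boost_detail(image, base)
print(enhanced)   # values: 100, 130, 80, 255 (the last one is clipped from 300)
```

Where the smoothing filter blurs across an edge, the residual is large on both sides and the boosted image shows halos, which is why an edge-preserving base layer matters for this application.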

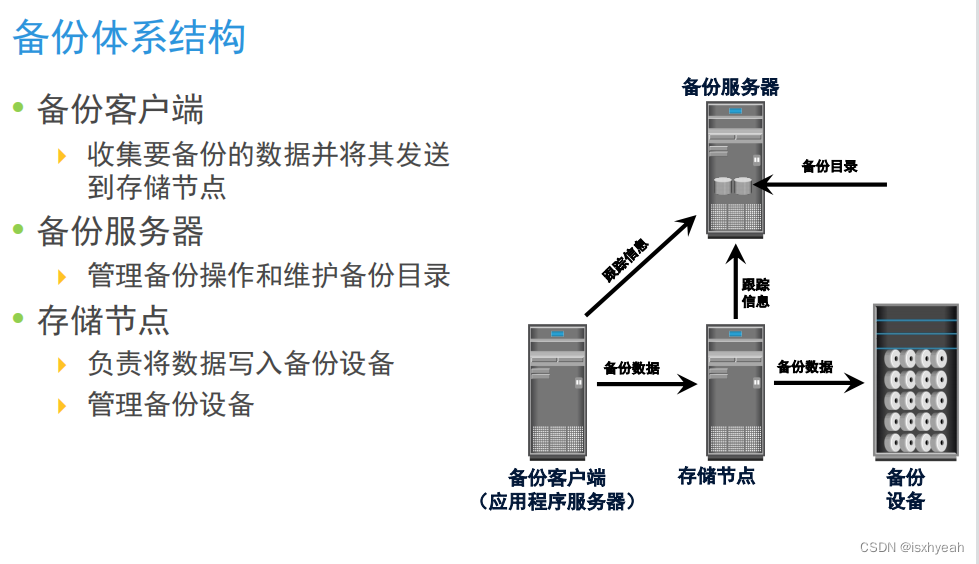

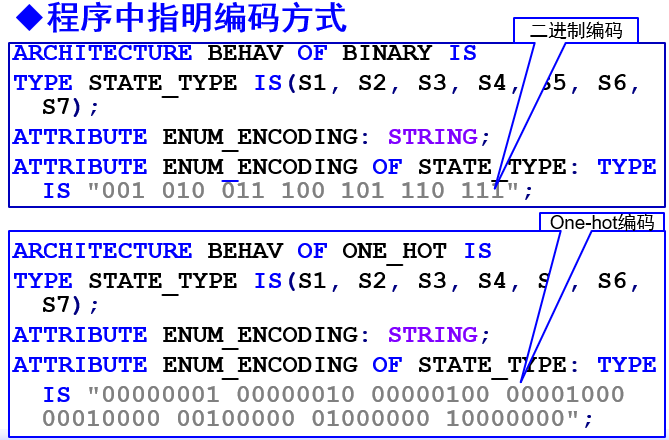

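2.4 Grayscale guide versus per-channel guide

I have long wondered about the following options:

- Guide each of the r, g and b channels with itself

- Guide every channel with the grayscale image, which filters more strongly than the first option

- Guide with the color image

Which one works best? In practice, guiding with the color image is relatively more complex and also more expensive to compute.

def compare_1gray_3channel(im, win_size, eps):
    """
    out1: each channel guided by itself.
    out2: every channel guided by the grayscale image.
    """
    out1 = guided_filter(im, im, win_size, eps)

    # Images loaded with cv2.imread are in BGR order.
    im_gray = cv2.cvtColor(im, cv2.COLOR_BGR2GRAY)
    out2 = np.zeros_like(out1)
    out2[..., 0] = guided_filter(im_gray, im[..., 0], win_size, eps)
    out2[..., 1] = guided_filter(im_gray, im[..., 1], win_size, eps)
    out2[..., 2] = guided_filter(im_gray, im[..., 2], win_size, eps)
    return out1, out2


def run_compare_gray_guide():
    file = r'D:\code\denoise\denoise_video\guide_filter_image\compare\dd.png'
    kernel_size = 17
    r = kernel_size // 2
    eps1 = 0.02 / 2

    img = cv2.imread(file, 1)
    img = img.astype(np.float32)  # must be converted to float

    # guided_filter takes the window size; cv2.ximgproc.guidedFilter takes the radius.
    out1, out2 = compare_1gray_3channel(img, kernel_size, (eps1 * 255 * 255))
    out1 = np.clip(out1, 0, 255).astype(np.uint8)
    out2 = np.clip(out2, 0, 255).astype(np.uint8)
    cv2.imwrite(file[:-4] + '1.png', out1)
    cv2.imwrite(file[:-4] + '2.png', out2)

    out3 = cv2.ximgproc.guidedFilter(img, img, r, (eps1 * 255 * 255))  # multi-channel guide
    out3 = np.clip(out3, 0, 255).astype(np.uint8)
    cv2.imwrite(file[:-4] + '3.png', out3)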

2.5 Effect of different parameter settings

def parameter_tuning():
    file = r'D:\code\denoise\denoise_video\guide_filter_image\paramter_tuning\dd.png'

    img = cv2.imread(file, 1)
    img = img.astype(np.float32)  # must be converted to float

    index = 0
    # Plain Python ints are used for the window size passed to cv2.blur.
    for win_size in range(3, 21, 4):
        # Very small eps: almost no smoothing.
        for eps in np.arange(0.000001, 0.00001, 0.000001):
            eps1 = eps * 255 * 255
            _, out2 = compare_1gray_3channel(img, win_size, eps1)
            out2 = np.clip(out2, 0, 255).astype(np.uint8)
            cv2.imwrite(file[:-4] + '{}.png'.format(index), out2)
            index += 1

        # Large eps: strong smoothing.
        for eps in np.arange(0.2, 1, 0.1):
            eps1 = eps * 255 * 255
            _, out2 = compare_1gray_3channel(img, win_size, eps1)
            out2 = np.clip(out2, 0, 255).astype(np.uint8)
            cv2.imwrite(file[:-4] + '{}.png'.format(index), out2)
            index += 1
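Because parameter_tuning names its outputs only by a running index, it can be hard to tell afterwards which parameters produced which file. The sketch below lists the combinations in loop order and builds descriptive file names. It assumes both eps loops are nested inside the window-size loop; build_param_grid and the file name pattern are hypothetical.

```python
from itertools import chain


def build_param_grid():
    """List (index, win_size, eps) in the order the nested loops would visit them."""
    small_eps = [k * 1e-6 for k in range(1, 10)]   # 1e-6 ... 9e-6
    large_eps = [k / 10 for k in range(2, 10)]     # 0.2 ... 0.9
    grid = []
    index = 0
    for win_size in range(3, 21, 4):               # 3, 7, 11, 15, 19
        for eps in chain(small_eps, large_eps):
            grid.append((index, win_size, eps))
            index += 1
    return grid


grid = build_param_grid()
for index, win_size, eps in grid[:3]:
    # A descriptive suffix makes each output traceable to its parameters.
    print("{}_w{}_eps{:g}.png".format(index, win_size, eps))
```

Building the eps values from integer steps avoids any dependence on floating-point rounding in the number of steps.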

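3. References

[1] https://zhuanlan.zhihu.com/p/438206777 Detailed explanation, with a related C++ code repository

[2] https://blog.csdn.net/huixingshao/article/details/42834939 Fast implementation of an advanced image dehazing algorithm; the guided filter is used for dehazing and is explained very clearly

[3] http://giantpandacv.com/academic/传统图像/一些有趣的图像算法/OpenCV图像处理专栏六 来自何凯明博士的暗通道去雾算法(CVPR 2009最佳论文)/ Dehazing code

[4] https://github.com/atilimcetin/guided-filter Guided filter C++ code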
