Building a Highly Available K8s Cluster

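- 1 Solution overview

- 2 Building the cluster

- 2.1 Requirements

- 2.2 Prepare the environment

- 2.3 Deploy keepalived on the master nodes

- 2.4 Deploy haproxy on the master nodes

- 2.5 Install docker/kubeadm/kubelet on all nodes

- 2.6 Deploy k8smaster01

- 2.7 Install the cluster network

- 2.8 Join k8smaster02 to the cluster

- 2.9 Join k8snode01 to the cluster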

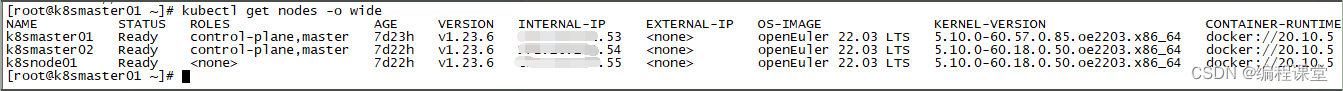

- 3 Testing the cluster

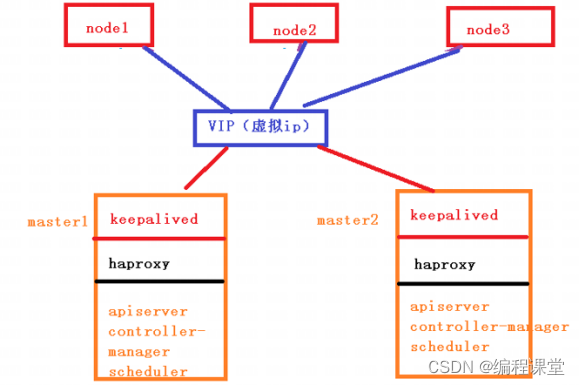

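1 Solution overview

The high-availability setup relies mainly on keepalived and haproxy.

keepalived

Keepalived achieves high availability mainly through virtual router redundancy. It is an LVS high-availability solution built on VRRP (Virtual Router Redundancy Protocol) and can be used to eliminate single points of failure. An LVS service runs Keepalived on two servers, one as the primary (MASTER) and one as the backup (BACKUP), which together present a single virtual IP to the outside. The primary periodically sends specific messages to the backup; when the backup stops receiving them, that is, when the primary has gone down, the backup takes over the virtual IP and continues serving, which preserves high availability.

haproxy

haproxy is similar to nginx: it is load-balancing and reverse-proxy software. nginx uses a master-workers process model in which each worker process is single-threaded, so it can make full use of multi-core CPUs. haproxy is multi-threaded and achieves high performance with a single process, although it also supports multiple processes.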

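2 Building the cluster

2.1 Requirements

The machines used for the Kubernetes cluster must meet the following conditions:

(1) One or more machines running CentOS 7.x, x86_64.

(2) Hardware: at least 2 GB of RAM, at least 2 CPUs, and at least 30 GB of disk.

(3) Internet access, because images have to be pulled. If a server cannot reach the internet, download the images in advance and import them on the node.

(4) Swap must be disabled.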
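Requirement (4) has no matching command in the preparation steps that follow, so here is a minimal sketch of one way to handle it. `swapoff -a` turns swap off on the running system; the helper below comments out swap entries in an fstab-style file so that swap stays off after a reboot. The function name `disable_swap_entries` is illustrative and not part of the original procedure; try it on a copy of the file first.

```shell
# Comment out every active swap entry in an fstab-style file.
# The path is a parameter (default /etc/fstab) so it can be tested on a copy.
disable_swap_entries() {
  local fstab="${1:-/etc/fstab}"
  # Leave existing comment lines alone; prefix any line that has a
  # whitespace-delimited "swap" field with '#'.
  sed -E -i '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab"
}

# Typical use on each node (run as root):
#   swapoff -a
#   disable_swap_entries /etc/fstab
```

Running `free -m` afterwards should show 0 for the swap total.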

2.2 Prepare the environment

| Role | IP |
|---|---|
| k8smaster01 | 192.168.10.53 |
| k8smaster02 | 192.168.10.54 |
| k8snode01 | 192.168.10.55 |
| k8s-vip | 192.168.10.61 |

Next, run the following on all three nodes.

# Disable the firewall

systemctl stop firewalld

systemctl disable firewalld

# Disable SELinux

sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config # permanent, takes effect after a reboot

setenforce 0 # temporary, for the running system

# Set the hostname according to the plan above

hostnamectl set-hostname <hostname>

# Add the cluster entries to /etc/hosts

cat >> /etc/hosts << EOF

192.168.10.53 master01.k8s.io k8smaster01

192.168.10.54 master02.k8s.io k8smaster02

192.168.10.55 node01.k8s.io k8snode01

192.168.10.61 master.k8s.io k8s-vip

EOF

# Pass bridged IPv4 traffic to the iptables chains

cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl --system # apply the settings

# Synchronize the time

yum install ntpdate -y

ntpdate time.windows.com
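The two `net.bridge.*` keys written to /etc/sysctl.d/k8s.conf above only exist once the `br_netfilter` kernel module is loaded, so `sysctl --system` may report them as unknown on a fresh system. The sketch below loads the module and checks that the file sets both keys to 1. The helper name `bridge_sysctl_ok` is an assumption made for illustration.

```shell
# Return 0 when a sysctl-style file sets both bridge keys to 1.
bridge_sysctl_ok() {
  local conf="${1:-/etc/sysctl.d/k8s.conf}" key
  for key in net.bridge.bridge-nf-call-ip6tables net.bridge.bridge-nf-call-iptables; do
    # Allow optional whitespace around '='.
    grep -Eq "^[[:space:]]*${key}[[:space:]]*=[[:space:]]*1[[:space:]]*$" "$conf" || return 1
  done
}

# Typical use on each node (run as root):
#   modprobe br_netfilter                               # load the module now
#   echo br_netfilter > /etc/modules-load.d/k8s.conf    # load it at boot
#   bridge_sysctl_ok && sysctl --system
```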

2.3 Deploy keepalived on the master nodes

Install keepalived and the related packages

yum install -y conntrack-tools libseccomp libtool-ltdl

yum install -y keepalived

Configure the k8smaster01 node

cat > /etc/keepalived/keepalived.conf <<EOF

! Configuration File for keepalived

global_defs {

router_id k8s

}

vrrp_script check_haproxy {

script "killall -0 haproxy"

interval 3

weight -2

fall 10

rise 2

}

vrrp_instance VI_1 {

state MASTER

interface ens33

virtual_router_id 51

priority 250

advert_int 1

authentication {

auth_type PASS

auth_pass ceb1b3ec013d66163d6ab

}

virtual_ipaddress {

192.168.10.61 # replace with your own virtual IP address

}

track_script {

check_haproxy

}

}

EOF

Configure the k8smaster02 node

cat > /etc/keepalived/keepalived.conf <<EOF

! Configuration File for keepalived

global_defs {

router_id k8s

}

vrrp_script check_haproxy {

script "killall -0 haproxy"

interval 3

weight -2

fall 10

rise 2

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 51

priority 200

advert_int 1

authentication {

auth_type PASS

auth_pass ceb1b3ec013d66163d6ab

}

virtual_ipaddress {

192.168.10.61 # replace with your own virtual IP address

}

track_script {

check_haproxy

}

}

EOF

In both configuration files above, replace the IP under virtual_ipaddress with the virtual IP you prepared in advance.
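To see how the `track_script` weight interacts with the two `priority` values, the following sketch models the election in a simplified way: a negative weight is added to a node's priority while its check is failing, and the node with the higher resulting priority holds the virtual IP. The function name `effective_priority` is hypothetical, and the model ignores positive weights, preemption settings, and tie-breaking.

```shell
# Effective VRRP priority of a node: the configured priority, plus the
# (negative) track_script weight while the health check is failing.
effective_priority() {
  local base="$1" weight="$2" check="$3"   # check is "ok" or "fail"
  if [ "$check" = "fail" ] && [ "$weight" -lt 0 ]; then
    echo $((base + weight))
  else
    echo "$base"
  fi
}

# Values taken from the two configurations above.
m1=$(effective_priority 250 -2 fail)   # k8smaster01 with haproxy down
m2=$(effective_priority 200 -2 ok)     # k8smaster02 healthy
if [ "$m1" -gt "$m2" ]; then
  echo "k8smaster01 keeps the VIP ($m1 > $m2)"
else
  echo "k8smaster02 takes the VIP ($m2 >= $m1)"
fi
```

Under this model, a failed haproxy check alone leaves k8smaster01 at 248, still above 200, so the virtual IP would move only when keepalived or the node itself stops. If failover on an haproxy failure is wanted, the weight's magnitude would need to exceed the priority gap (for example -60, an illustrative value).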

Start and check keepalived

# Start keepalived

systemctl start keepalived.service

# Enable it at boot

systemctl enable keepalived.service

# Check the service status

systemctl status keepalived.service
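Besides the service status, it can help to confirm which master currently holds the virtual IP. The sketch below reads the output of `ip -o -4 addr show` and succeeds when the given address is configured on the node. The helper name `holds_vip` is an assumption; the interface and address are the ones used in this guide.

```shell
# Succeed when the `ip -o -4 addr show` output read from stdin lists the
# given virtual IP as a configured address.
holds_vip() {
  local vip="$1"
  # The trailing '/' keeps 192.168.10.6 from matching 192.168.10.61.
  grep -Fq "inet ${vip}/"
}

# Typical use on each master:
#   ip -o -4 addr show dev ens33 | holds_vip 192.168.10.61 \
#     && echo "this node holds the VIP"
```

With both masters healthy, only k8smaster01 (the higher priority) is expected to report the address.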

2.4 Deploy haproxy on the master nodes

Install

yum install -y haproxy

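Configure

Both master nodes use the same configuration. It declares the two master nodes as backend servers and binds haproxy to port 16443, so port 16443 is the entry point of the cluster.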

cat > /etc/haproxy/haproxy.cfg << EOF

#---------------------------------------------------------------------

# Global settings

#---------------------------------------------------------------------

global

# to have these messages end up in /var/log/haproxy.log you will

# need to:

# 1) configure syslog to accept network log events. This is done

# by adding the '-r' option to the SYSLOGD_OPTIONS in

# /etc/sysconfig/syslog

# 2) configure local2 events to go to the /var/log/haproxy.log

# file. A line like the following can be added to

# /etc/sysconfig/syslog

#

# local2.* /var/log/haproxy.log

#

log 127.0.0.1 local2

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 4000

user haproxy

group haproxy

daemon

# turn on stats unix socket

stats socket /var/lib/haproxy/stats

#---------------------------------------------------------------------

# common defaults that all the 'listen' and 'backend' sections will

# use if not designated in their block

#---------------------------------------------------------------------

defaults

mode http

log global

option httplog

option dontlognull

option http-server-close

option forwardfor except 127.0.0.0/8

option redispatch

retries 3

timeout http-request 10s

timeout queue 1m

timeout connect 10s

timeout client 1m

timeout server 1m

timeout http-keep-alive 10s

timeout check 10s

maxconn 3000

#---------------------------------------------------------------------

# kubernetes apiserver frontend which proxys to the backends

#---------------------------------------------------------------------

frontend kubernetes-apiserver

mode tcp

bind *:16443

option tcplog

default_backend kubernetes-apiserver

#---------------------------------------------------------------------

# round robin balancing between the various backends

#---------------------------------------------------------------------

backend kubernetes-apiserver

mode tcp

balance roundrobin

server master01.k8s.io 192.168.10.53:6443 check

server master02.k8s.io 192.168.10.54:6443 check

#---------------------------------------------------------------------

# collection haproxy statistics message

#---------------------------------------------------------------------

listen stats

bind *:1080

stats auth admin:awesomePassword

stats refresh 5s

stats realm HAProxy\ Statistics

stats uri /admin?stats

EOF

In the configuration file above, replace the following lines with your own IPs:

server master01.k8s.io 192.168.10.53:6443 check

server master02.k8s.io 192.168.10.54:6443 check
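After editing the addresses, a quick look at what the backend section actually contains can catch typos before haproxy is started. The sketch below prints the name and address of every `server` line in one backend. The function name `list_backend_servers` is illustrative, and the parser only understands the simple layout used in this configuration.

```shell
# Print "name address" for every server line of the given backend section.
list_backend_servers() {
  local cfg="$1" backend="$2"
  awk -v b="$backend" '
    $1 == "backend" { in_b = ($2 == b); next }                 # entering a backend section
    $1 ~ /^(global|defaults|frontend|listen)$/ { in_b = 0 }    # any other section ends it
    in_b && $1 == "server" { print $2, $3 }
  ' "$cfg"
}

# Typical use on each master:
#   list_backend_servers /etc/haproxy/haproxy.cfg kubernetes-apiserver
#   haproxy -c -f /etc/haproxy/haproxy.cfg    # syntax check only, does not start haproxy
```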

Start and check haproxy

Start it on both masters

# Enable it at boot

systemctl enable haproxy

# Start haproxy

systemctl start haproxy

# Check the service status

systemctl status haproxy

Check the listening ports

netstat -lntup|grep haproxy

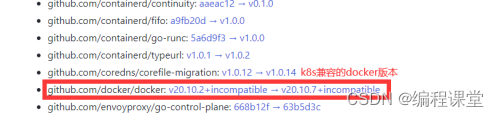

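2.5 Install docker/kubeadm/kubelet on all nodes

This guide uses Docker as the container runtime, so install Docker first. Kubernetes 1.23, the version installed below, still talks to Docker through the built-in dockershim, which was removed in 1.24.

Install docker

When installing Docker, make sure the Docker version is compatible with the K8s version; see the K8s documentation for details.

Before installing Docker, check whether it is already installed on the machine. If it is, uninstall it with the commands below.

# Check whether docker is already installed

yum list installed | grep docker

# If it is, remove it to avoid version conflicts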

yum remove docker-ce

# Delete images, containers, and other data

rm -rf /var/lib/docker

Install and start Docker

# List the versions of docker-ce, docker-ce-cli, and containerd.io available in the yum repository

yum list docker-ce --showduplicates | sort -r

yum list docker-ce-cli --showduplicates | sort -r

yum list containerd.io --showduplicates | sort -r

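# Install docker-ce, docker-ce-cli, and containerd.io from the yum repository

# (el7 builds for CentOS 7; this assumes the docker-ce yum repository is already configured)

yum install -y docker-ce-20.10.5-3.el7 docker-ce-cli-20.10.5-3.el7 containerd.io-1.4.3-3.1.el7

# Start the docker service and enable it at boot

systemctl start docker

systemctl enable docker.service

# Check the docker service status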

systemctl status docker

Change the docker registry mirror

cat > /etc/docker/daemon.json << EOF

{

"registry-mirrors": ["https://b9pmyelo.mirror.aliyuncs.com"]

}

EOF

systemctl restart docker # restart so that the new daemon.json takes effect
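One thing worth checking at this point is the cgroup driver. kubeadm 1.22 and later configure the kubelet with the `systemd` cgroup driver by default, while Docker defaults to `cgroupfs`; a mismatch can keep the kubelet from starting during `kubeadm init`. The sketch below writes a daemon.json that keeps the registry mirror from above and also sets Docker's driver to `systemd`. The function name `write_docker_daemon_json` and its parameters are assumptions made for illustration.

```shell
# Write a Docker daemon.json with a registry mirror and the systemd
# cgroup driver. Both the path and the mirror URL can be overridden.
write_docker_daemon_json() {
  local path="${1:-/etc/docker/daemon.json}"
  local mirror="${2:-https://b9pmyelo.mirror.aliyuncs.com}"
  mkdir -p "$(dirname "$path")"
  cat > "$path" << EOF
{
  "registry-mirrors": ["${mirror}"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}

# Typical use on each node (run as root):
#   write_docker_daemon_json
#   systemctl restart docker
#   docker info | grep -i 'cgroup driver'
```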

Add the Aliyun YUM repository

cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

Install kubeadm, kubelet, and kubectl

yum install -y kubelet-1.23.6 kubeadm-1.23.6 kubectl-1.23.6

systemctl enable kubelet

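2.6 Deploy k8smaster01

Perform these steps on the k8smaster01 node.

Create the kubeadm configuration file

# Create the directory that holds the kubeadm configuration file

mkdir /usr/local/kubernetes/manifests -p

# Change to the manifests directory

cd /usr/local/kubernetes/manifests/

# Create the kubeadm configuration file

touch kubeadm-config.yaml

# Edit the kubeadm configuration file and enter the content below

vi kubeadm-config.yaml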

apiServer:

  certSANs:

  - k8smaster01

  - k8smaster02

  - master.k8s.io

  - 192.168.10.61

  - 192.168.10.53

  - 192.168.10.54

  - 127.0.0.1

  extraArgs:

    authorization-mode: Node,RBAC

  timeoutForControlPlane: 4m0s

apiVersion: kubeadm.k8s.io/v1beta3

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controlPlaneEndpoint: "master.k8s.io:16443"

controllerManager: {}

dns: {}

etcd:

  local:

    dataDir: /var/lib/etcd

imageRepository: registry.aliyuncs.com/google_containers

kind: ClusterConfiguration

kubernetesVersion: v1.23.6

networking:

  dnsDomain: cluster.local

  podSubnet: 10.244.0.0/16

  serviceSubnet: 10.1.0.0/16

scheduler: {}

Note

certSANs must list the hostnames and IPs of both masters as well as the virtual hostname and virtual IP.

kubernetesVersion sets the K8s version.

certSANs:

- k8smaster01

- k8smaster02

- master.k8s.io

- 192.168.10.61

- 192.168.10.53

- 192.168.10.54

- 127.0.0.1

kubernetesVersion: v1.23.6
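Because a name missing from certSANs only shows up later as a TLS error, a quick check before `kubeadm init` can save a rebuild. The sketch below tests whether a given name or IP appears in the certSANs list of the configuration file. The helper name `has_cert_san` is hypothetical, and the parser assumes the simple list layout shown above.

```shell
# Succeed when the certSANs list in a kubeadm config file contains the
# given hostname or IP.
has_cert_san() {
  local cfg="$1" san="$2"
  awk -v s="$san" '
    /^[[:space:]]*certSANs:/ { in_sans = 1; next }
    in_sans && /^[[:space:]]*-[[:space:]]/ { if ($2 == s) found = 1; next }
    in_sans && NF { in_sans = 0 }    # first non-list, non-blank line ends the list
    END { exit !found }
  ' "$cfg"
}

# Typical use on k8smaster01:
#   for name in k8smaster01 k8smaster02 master.k8s.io 192.168.10.61; do
#     has_cert_san kubeadm-config.yaml "$name" || echo "missing SAN: $name"
#   done
```

After initialization, `openssl x509 -in /etc/kubernetes/pki/apiserver.crt -noout -text` shows the Subject Alternative Name entries that were actually issued.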

Run on the k8smaster01 node:

kubeadm init --config kubeadm-config.yaml

The command prints output like the following:

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join master.k8s.io:16443 --token uujjn1.s5tgblpki38bn4n9 \

--discovery-token-ca-cert-hash sha256:9a48f162e823c3d86fg6764cacda1787d382628940fd5718202ccba8cd23a0e2 \

--control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join master.k8s.io:16443 --token uujjn1.s5tgblpki38bn4n9 \

--discovery-token-ca-cert-hash sha256:9a48f162e823c3d86fg6764cacda1787d382628940fd5718202ccba8cd23a0e2

[root@k8smaster01 manifests]#

Set up the kubeconfig as instructed in the output so that kubectl can be used

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

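Check the node status

k8smaster01 has been initialized, but its status is NotReady because no network plugin is configured yet. kubectl get pods -n kube-system also shows the CoreDNS pods with READY 0/1.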

[root@k8smaster01 manifests]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8smaster01 NotReady control-plane,master 144m v1.23.6

[root@k8smaster01 manifests]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-59d64cd4d4-62bhq 0/1 Pending 0 144m

coredns-59d64cd4d4-95dl5 0/1 Pending 0 144m

etcd-k8smaster01 1/1 Running 0 144m

kube-apiserver-k8smaster01 1/1 Running 0 144m

kube-controller-manager-k8smaster01 1/1 Running 0 144m

kube-proxy-df8c8 1/1 Running 0 144m

kube-scheduler-k8smaster01 1/1 Running 0 145m

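2.7 Install the cluster network

The cluster network only needs to be installed from k8smaster01.

# Create the tmpconfig directory

mkdir /usr/local/tmpconfig

# Change to the tmpconfig directory

cd /usr/local/tmpconfig

# Download kube-flannel.yml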

wget -c https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Apply and check

kubectl apply -f kube-flannel.yml

kubectl get pods -n kube-system

After installing the network plugin, wait a moment and run kubectl get nodes again; k8smaster01 should now be Ready. Run kubectl get pods -n kube-system again and every pod should show READY 1/1.
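The "wait a moment and check again" step can be scripted. The sketch below polls `kubectl get nodes` until no node is left in a state other than Ready. The function names `not_ready_nodes` and `wait_for_nodes` and the default retry values are assumptions made for illustration.

```shell
# Print the names of nodes whose STATUS column is not exactly "Ready".
# Input: the output of `kubectl get nodes --no-headers` on stdin.
# Note: a cordoned node ("Ready,SchedulingDisabled") is also reported.
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Poll until every node is Ready or the attempts run out.
wait_for_nodes() {
  local attempts="${1:-30}" delay="${2:-10}" pending
  while [ "$attempts" -gt 0 ]; do
    pending=$(kubectl get nodes --no-headers | not_ready_nodes)
    [ -z "$pending" ] && return 0
    echo "waiting for: $pending"
    attempts=$((attempts - 1))
    sleep "$delay"
  done
  return 1
}

# Typical use on k8smaster01:
#   wait_for_nodes 30 10 && echo "all nodes Ready"
```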

2.8 Join k8smaster02 to the cluster

Copy the keys and related files

Copy the keys and related files from k8smaster01 to k8smaster02.

ssh root@192.168.10.54 mkdir -p /etc/kubernetes/pki/etcd

scp /etc/kubernetes/admin.conf root@192.168.10.54:/etc/kubernetes

scp /etc/kubernetes/pki/{ca.*,sa.*,front-proxy-ca.*} root@192.168.10.54:/etc/kubernetes/pki

scp /etc/kubernetes/pki/etcd/ca.* root@192.168.10.54:/etc/kubernetes/pki/etcd

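Join k8smaster02 to the cluster

On k8smaster02, run the control-plane join command printed by kubeadm init on k8smaster01. The --control-plane flag joins the node as a control-plane (master) node. Use the token and hash from your own init output; the values shown in this guide are examples only.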

kubeadm join master.k8s.io:16443 --token uujjn1.s5tgblpki38bn4n9 \

--discovery-token-ca-cert-hash sha256:9a48f162e823c3d86ca6764cacda9087d382628940fd5718202ccba8cd23a0e2 \

--control-plane
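Because the join command is usually copied by hand, a malformed `--discovery-token-ca-cert-hash` value is an easy mistake to make. The sketch below only checks the format, `sha256:` followed by 64 lowercase hexadecimal characters; it cannot tell whether the hash belongs to your cluster. The helper name `valid_discovery_hash` is an assumption.

```shell
# Succeed when the argument looks like a kubeadm discovery hash:
# "sha256:" followed by exactly 64 lowercase hex characters.
valid_discovery_hash() {
  printf '%s\n' "$1" | grep -Eq '^sha256:[0-9a-f]{64}$'
}

# Typical use before running kubeadm join:
#   valid_discovery_hash "sha256:<hash from your init output>" \
#     || echo "hash is malformed, copy it again"
```

Bootstrap tokens expire after 24 hours by default. If the token from `kubeadm init` is no longer valid, running `kubeadm token create --print-join-command` on k8smaster01 prints a fresh worker join command with a new token and the matching hash.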

The command prints the following when it finishes:

This node has joined the cluster and a new control plane instance was created:

* Certificate signing request was sent to apiserver and approval was received.

* The Kubelet was informed of the new secure connection details.

* Control plane (master) label and taint were applied to the new node.

* The Kubernetes control plane instances scaled up.

* A new etcd member was added to the local/stacked etcd cluster.

To start administering your cluster from this node, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run the configuration commands from the output above

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Check the status

[root@k8smaster02 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8smaster01 Ready control-plane,master 17h v1.23.6

k8smaster02 NotReady control-plane,master 6m21s v1.23.6

[root@k8smaster02 ~]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-59d64cd4d4-62bhq 1/1 Running 0 17h

kube-system coredns-59d64cd4d4-95dl5 1/1 Running 0 17h

kube-system etcd-k8smaster01 1/1 Running 0 17h

kube-system etcd-k8smaster02 1/1 Running 0 6m22s

kube-system kube-apiserver-k8smaster01 1/1 Running 0 17h

kube-system kube-apiserver-k8smaster02 1/1 Running 0 6m25s

kube-system kube-controller-manager-k8smaster01 1/1 Running 1 17h

kube-system kube-controller-manager-k8smaster02 1/1 Running 0 6m26s

kube-system kube-flannel-ds-p2std 1/1 Running 0 15h

kube-system kube-flannel-ds-vc2w2 0/1 Init:ImagePullBackOff 0 6m27s

kube-system kube-proxy-df8c8 1/1 Running 0 17h

kube-system kube-proxy-nx8dg 1/1 Running 0 6m27s

kube-system kube-scheduler-k8smaster01 1/1 Running 1 17h

kube-system kube-scheduler-k8smaster02 1/1 Running 0 6m26s

2.9 Join k8snode01 to the cluster

On k8snode01, run the worker join command printed earlier by kubeadm init on k8smaster01:

kubeadm join master.k8s.io:16443 --token uujjn1.s5tgblpki38bn4n9 \

--discovery-token-ca-cert-hash sha256:9a48f162e856c3d86ca6764cacda1787d382628940fd5718202ccba8cd23a0e2

The command prints the following when it finishes:

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

Check from the k8smaster01 node

[root@k8smaster01 manifests]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8smaster01 Ready control-plane,master 17h v1.23.6

k8smaster02 NotReady control-plane,master 11m v1.23.6

k8snode01 NotReady <none> 2m26s v1.23.6

Re-apply the network plugin

[root@k8smaster01 flannel]# pwd

/usr/local/tmpconfig

[root@k8smaster01 tmpconfig]# kubectl apply -f kube-flannel.yml

Check the cluster status again

[root@k8smaster01 flannel]# kubectl cluster-info

Kubernetes control plane is running at https://master.k8s.io:16443

CoreDNS is running at https://master.k8s.io:16443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

[root@k8smaster01 flannel]# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8smaster01 Ready control-plane,master 18h v1.23.6 192.168.10.53 <none> CentOS Linux 7 (Core) 3.10.0-1160.49.1.el7.x86_64 docker://18.6.1

k8smaster02 Ready control-plane,master 38m v1.23.6 192.168.10.54 <none> CentOS Linux 7 (Core) 3.10.0-1160.49.1.el7.x86_64 docker://18.6.1

k8snode01 Ready <none> 28m v1.23.6 192.168.10.55 <none> CentOS Linux 7 (Core) 3.10.0-1160.49.1.el7.x86_64 docker://18.6.1

At this point the highly available K8s cluster is up.

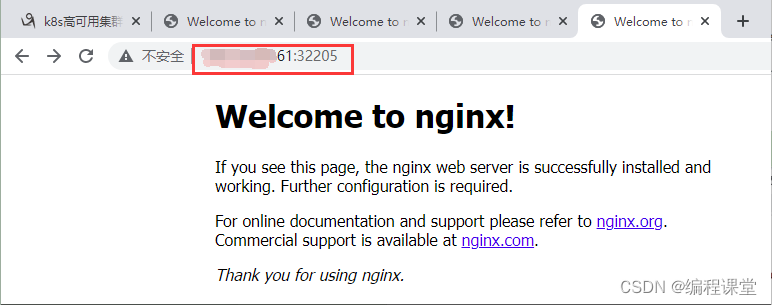

3 Testing the cluster

[root@k8smaster01 ~]# kubectl create deployment nginx --image=nginx

deployment.apps/nginx created

[root@k8smaster01 ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-85b98978db-wwn2m 0/1 ContainerCreating 0 11s <none> k8snode01 <none> <none>

[root@k8smaster01 ~]# kubectl expose deployment nginx --port=80 --type=NodePort

service/nginx exposed

[root@k8smaster01 ~]#

[root@k8smaster01 ~]#

[root@k8smaster01 ~]# kubectl get pod,svc

NAME READY STATUS RESTARTS AGE

pod/nginx-85b98978db-wwn2m 1/1 Running 0 39s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.1.0.1 <none> 443/TCP 7d23h

service/nginx NodePort 10.1.33.179 <none> 80:32205/TCP 14s

[root@k8smaster01 ~]#

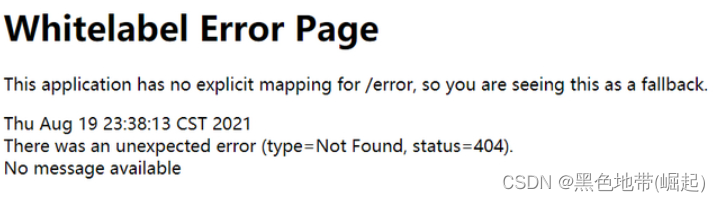

From outside the cluster, port 32205 on any node serves nginx. All four IPs are reachable: 192.168.10.53, 192.168.10.54, 192.168.10.55, and 192.168.10.61.
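The same check can be done from a shell instead of a browser. The sketch below extracts the node port from the PORT(S) column of `kubectl get svc` and builds one URL per address; the function names `node_port` and `nodeport_urls` are illustrative, and the addresses are the ones used in this guide.

```shell
# Extract the node port from a PORT(S) value such as "80:32205/TCP".
node_port() {
  local ports="$1"
  ports="${ports#*:}"     # drop the service port and the colon
  echo "${ports%%/*}"     # drop the protocol suffix
}

# Print one URL per address for the given node port.
nodeport_urls() {
  local port="$1" ip
  shift
  for ip in "$@"; do
    echo "http://${ip}:${port}/"
  done
}

# Typical use from a machine that can reach the nodes:
#   port=$(node_port "80:32205/TCP")
#   for url in $(nodeport_urls "$port" 192.168.10.53 192.168.10.54 192.168.10.55 192.168.10.61); do
#     curl -s -o /dev/null -w "%{http_code} $url\n" "$url"
#   done
```

Each address is expected to answer with HTTP status 200 once the nginx pod is Running.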