实验有两个任务,都是为了减少锁的竞争从而提高运行效率。

- Memory allocator

一开始我们是有个双向链表用来存储空闲的内存块,如果很多个进程要竞争这一个链表,就会把效率降低很多。所以我们把链表拆成每个CPU一个,在申请内存的时候就直接在本CPU的链表上找就行了。至于没找到的话,就直接从别的链表里边steal。具体实现如下:

多个内存链表数据结构:

struct {

struct spinlock lock;

struct run *freelist;

} kmem[NCPU];在初始化时初始化锁:

void

kinit()

{

for (int i = 0; i < NCPU; i++)

{

initlock(&kmem[i].lock, "kmem");

}

freerange(end, (void*)PHYSTOP);

}在释放内存时修改单个链表的,获取CPUid直到更新对应CPU链表要关中断,否则切了CPU回来可能把内存放错位置:

void

kfree(void *pa)

{

struct run *r;

if(((uint64)pa % PGSIZE) != 0 || (char*)pa < end || (uint64)pa >= PHYSTOP)

panic("kfree");

// Fill with junk to catch dangling refs.

memset(pa, 1, PGSIZE);

r = (struct run*)pa;

push_off();

int id=cpuid();

if (id<0 || id>=NCPU)

{

panic("kfree:wrong cpuid");

}

acquire(&kmem[id].lock);

r->next = kmem[id].freelist;

kmem[id].freelist = r;

release(&kmem[id].lock);

pop_off();

}分配内存时先在本CPU链表中寻找,如果没有空闲的,从其他链表中偷:

void *

kalloc(void)

{

struct run *r;

push_off();

int id=cpuid();

acquire(&kmem[id].lock);

r = kmem[id].freelist;

if(r){

kmem[id].freelist = r->next;

}else{

for (int i = 0; i < NCPU; i++)

{

if (i==id)

continue;

acquire(&kmem[i].lock);

r = kmem[i].freelist;

if (r){ //链表中还存在下一个节点 需要更新节点

kmem[i].freelist=r->next;

release(&kmem[i].lock);

break;

}

release(&kmem[i].lock);

}

}

release(&kmem[id].lock);

pop_off();

if(r)

memset((char*)r, 5, PGSIZE); // fill with junk

return (void*)r;

}- Buffer cache

这里和上面有点像,问题是一堆用来和外设交换数据的buffer,也有一堆进程想要用,那么想用的时候就得竞争,于是将这些buffer按照块号哈希成多个队列,之后要读了,或者要释放了,就利用块号到对应的哈希表中去找。

首先是初始化部分,照葫芦画瓢:

struct

{

struct spinlock lock;

struct buf buf[NBUF];

// Linked list of all buffers, through prev/next.

// Sorted by how recently the buffer was used.

// head.next is most recent, head.prev is least.

struct buf head[NBUCKETS]; // 哈希桶头

struct spinlock hash_lock[NBUCKETS]; // 哈希桶锁

} bcache;

void binit(void)

{

struct buf *b;

initlock(&bcache.lock, "bcache");

// 哈希桶和哈希桶锁初始化

for (int i = 0; i < NBUCKETS; i++)

{

// snprintf(name, 20, "bcache.bucket.%d", i);

// printf("name:%s\n",);

initlock(&bcache.hash_lock[i], "bcache.bucket");

bcache.head[i].prev = &bcache.head[i];

bcache.head[i].next = &bcache.head[i];

}

int hash_num;

for (int i = 0; i < NBUF; i++)

{

b = &bcache.buf[i];

// printf("blockno:%d\n",b->blockno);

hash_num = HASHNUM(b->blockno);

b->next = bcache.head[hash_num].next;

b->prev = &bcache.head[hash_num];

initsleeplock(&b->lock, "buffer");

bcache.head[hash_num].next->prev = b;

bcache.head[hash_num].next = b;

}

}接着更改bget,这里很坑的一点就是在usertests里边有个manywrites要用到balloc和bfree,试想一下,现在很多进程想读一个块进来,每个都发现不存在,所以都会去分配一块内存块给他,如果我们分配了多个buffer也就是存在多个副本,那么在bfree的时候就会panic(多次释放一块磁盘)。这里我们需要一种机制来保证只分配一个块。那就是,保证检查引用计数和分配一个块的操作捆绑在第一次引用计数更新之后。这里用了LRU的链表,如下实现方法是采用“将刚用完的插入链表头,取的时候从链表尾部向前取”的方式实现:

static struct buf *

bget(uint dev, uint blockno)

{

struct buf *b;

int hash_num = HASHNUM(blockno);

// acquire(&bcache.lock);

// todo 获取哈希桶锁

acquire(&bcache.hash_lock[hash_num]);

// Is the block already cached?

// 如果块已经映射在缓冲中

// 增加块引用数 维护LRU队列 释放bcache锁 获取块的睡眠锁

for (b = bcache.head[hash_num].next; b != &bcache.head[hash_num]; b = b->next)

{

if (b->dev == dev && b->blockno == blockno)

{

b->refcnt++;

// todo 释放哈希锁

release(&bcache.hash_lock[hash_num]);

// release(&bcache.lock);

acquiresleep(&b->lock);

return b;

}

}

release(&bcache.hash_lock[hash_num]);

// Not cached.

// Recycle the least recently used (LRU) unused buffer.

// 遍历所有哈希桶找到一个引用为空的块

acquire(&bcache.lock);

acquire(&bcache.hash_lock[hash_num]);

for (b = bcache.head[hash_num].next; b != &bcache.head[hash_num]; b = b->next)

{

if (b->dev == dev && b->blockno == blockno)

{

b->refcnt++;

// todo 释放哈希锁

release(&bcache.lock);

release(&bcache.hash_lock[hash_num]);

// release(&bcache.lock);

acquiresleep(&b->lock);

return b;

}

}

release(&bcache.hash_lock[hash_num]);

for (int i = 0; i < NBUCKETS; i++)

{

acquire(&bcache.hash_lock[i]);

for (b = bcache.head[i].prev; b != &bcache.head[i]; b = b->prev)

{

if (b->refcnt==0)

{

b->dev=dev;

b->blockno=blockno;

b->valid=0;

b->refcnt=1;

b->prev->next=b->next;

b->next->prev=b->prev;

b->next=bcache.head[hash_num].next;

b->prev=&bcache.head[hash_num];

bcache.head[hash_num].next->prev=b;

bcache.head[hash_num].next=b;

release(&bcache.hash_lock[i]);

release(&bcache.lock);

// release(&bcache.hash_lock[hash_num]);

// release(&bcache.lock);

acquiresleep(&b->lock);

return b;

}

}

release(&bcache.hash_lock[i]);

}

panic("bget: no buffers");

}时间戳方式在buf里边加个ticks,在初始化的时候分配0,之后在brelse更新引用数为0的块的时间戳,在bget里边,从所有链表选出时间最小的引用计数为0的块进行分配即可。

brelse实现如下:

void brelse(struct buf *b)

{

if (!holdingsleep(&b->lock))

panic("brelse");

releasesleep(&b->lock);

// acquire(&bcache.lock);

int hash_num = HASHNUM(b->blockno);

// acquire(&bcache.hash_lock[hash_num]);

if (b->refcnt > 0)

{

b->refcnt--;

}

if (b->refcnt == 0)

{

// no one is waiting for it.

b->next->prev = b->prev;

b->prev->next = b->next;

b->next = bcache.head[hash_num].next;

b->prev = &bcache.head[hash_num];

bcache.head[hash_num].next->prev = b;

bcache.head[hash_num].next = b;

}

}最后的一些小细节:

void bpin(struct buf *b)

{

int hash_num=HASHNUM(b->blockno);

acquire(&bcache.hash_lock[hash_num]);

b->refcnt++;

release(&bcache.hash_lock[hash_num]);

// acquire(&bcache.lock);

// b->refcnt++;

// release(&bcache.lock);

}

void bunpin(struct buf *b)

{

int hash_num=HASHNUM(b->blockno);

acquire(&bcache.hash_lock[hash_num]);

b->refcnt--;

release(&bcache.hash_lock[hash_num]);

// acquire(&bcache.lock);

// b->refcnt++;

// release(&bcache.lock);

}为了通过那个usertests的bigwrite,还得把param.h里边的FSSIZE参数多加个0

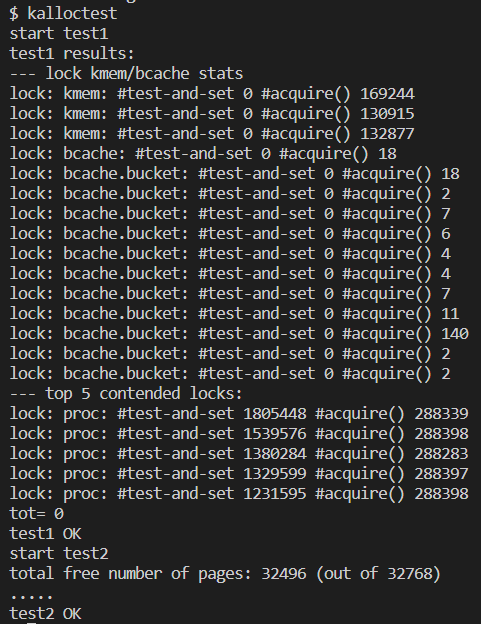

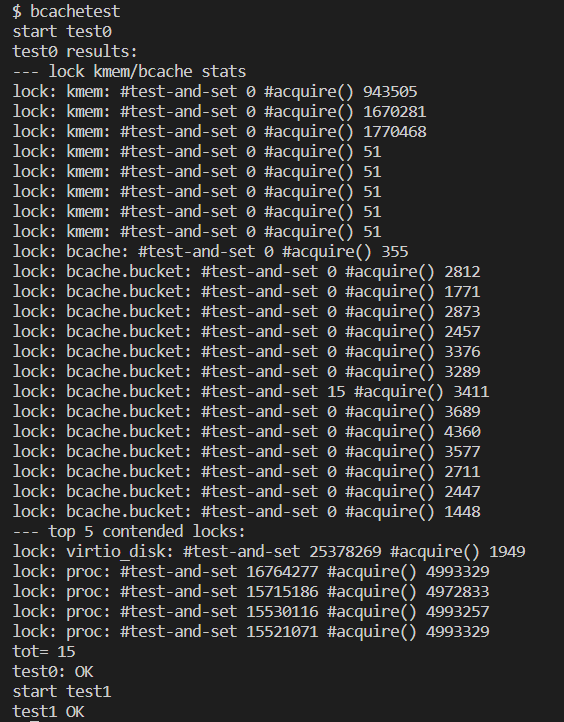

最终通过:

usertests也ALL PASS

![流批一体计算引擎-7-[Flink]的DataStream连接器](https://img-blog.csdnimg.cn/0f8a107145bd450683169a016f6d0830.png)