Table of Contents

- 6. Multiple Dimension Input

- 6.1 Revision

- 6.2 Diabetes Dataset

- 6.3 Logistic Regression Model

- 6.4 Mini-Batch (N samples)

- 6.5 Neural Network

- 6.6 Diabetes Prediction

- 6.6.1 Prepare Dataset

- 6.6.2 Define Model

- 6.6.3 Construct Loss and Optimizer

- 6.6.4 Training Cycle

- 6.6.5 Activation Function

- 6.6.6 Complete Code

6. Multiple Dimension Input

Bilibili video tutorial: PyTorch深度学习实践 (PyTorch Deep Learning Practice) - 逻辑斯蒂回归 (Logistic Regression)

6.1 Revision

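Let's first review regression and classification.

The difference lies mainly in the output value:

Regression: y ∈ ℝ

Classification: y belongs to a discrete set of class labels, for example {0, 1} in binary classification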

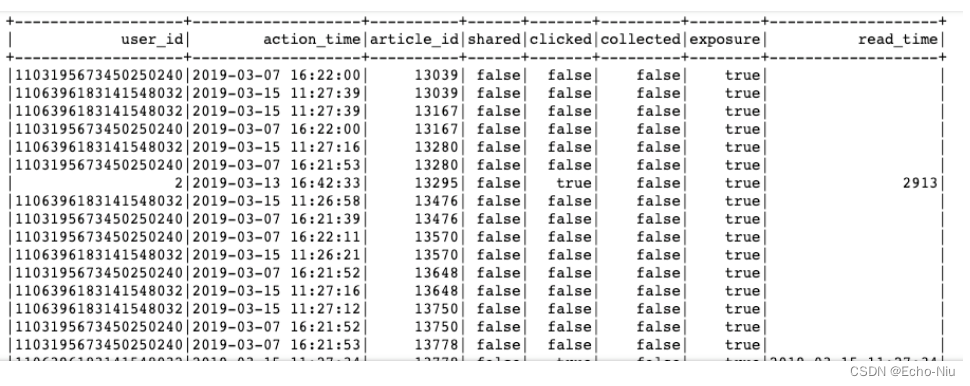

6.2 Diabetes Dataset

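If sklearn is installed (see the post on installing sklearn for Python), a diabetes dataset ships with it. You can inspect the files in the package's data directory, for example D:\Software\Anaconda\Lib\site-packages\sklearn\datasets\data, as shown below: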

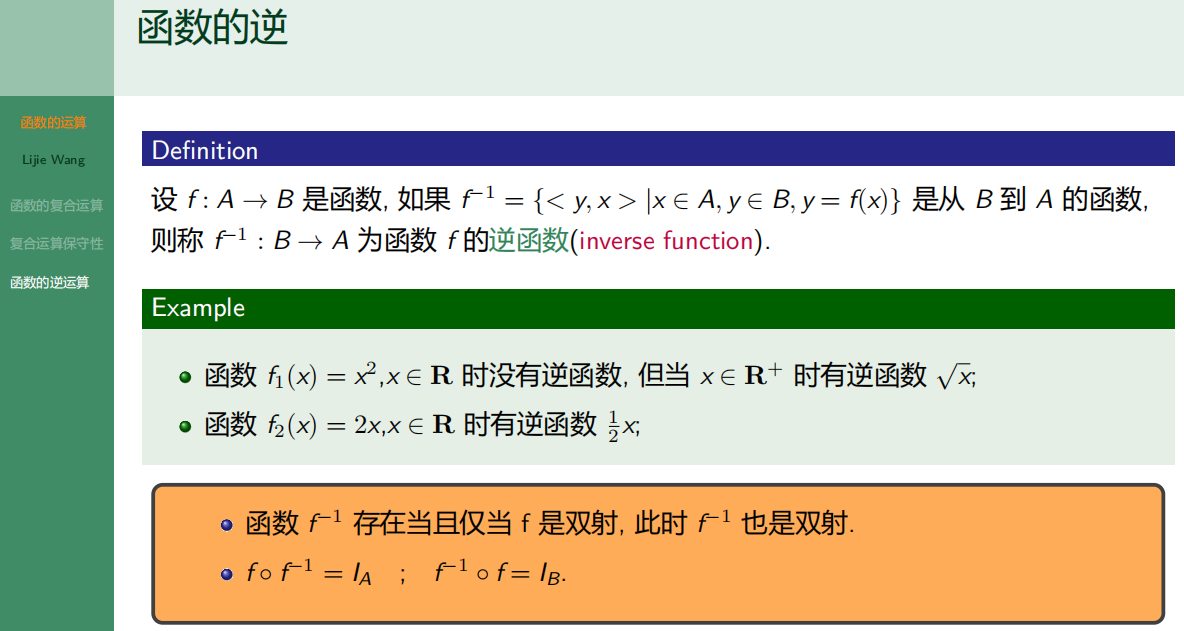

6.3 Logistic Regression Model

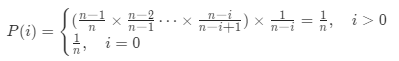

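Here x is no longer a single scalar but an 8-dimensional vector, so the linear part of the model should be viewed as the product of the two matrices shown below: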

Note: $\sigma(z) = \frac{1}{1 + e^{-z}}$

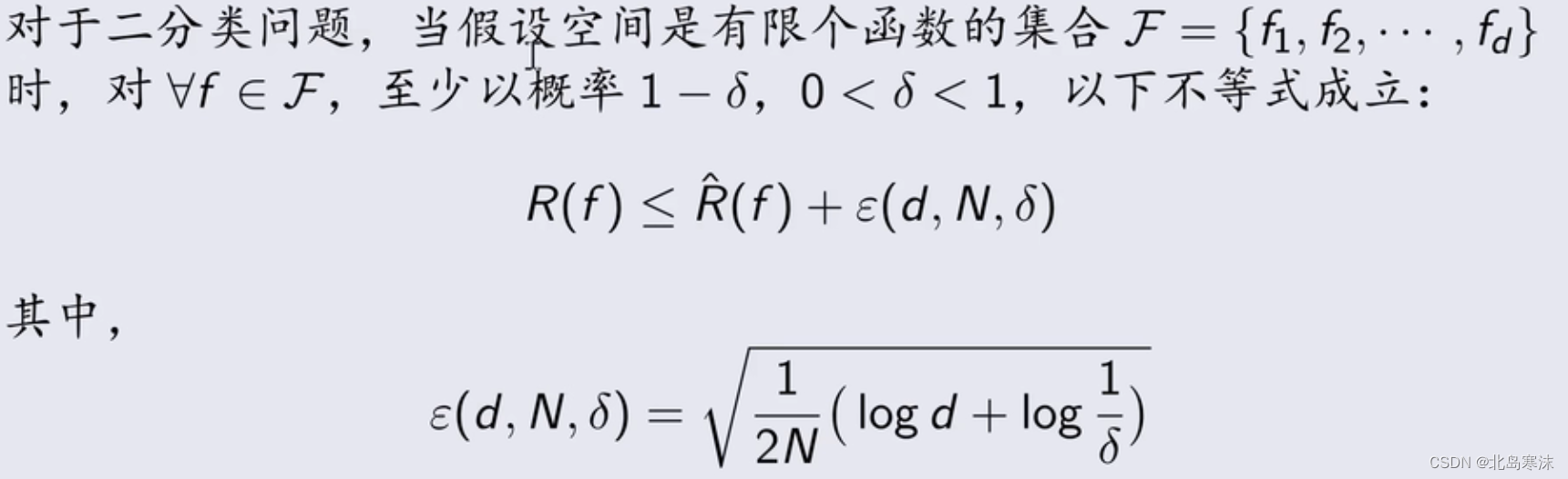
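As a rough numerical check of the matrix view above, the following NumPy sketch computes the pre-activation for one 8-feature sample twice: once as an explicit sum over the features and once as a single matrix product. The weights, bias, and input values are random placeholders chosen for illustration, not values from the course.

```python
import numpy as np

def sigmoid(z):
    """Logistic function: 1 / (1 + exp(-z))."""
    return 1.0 / (1.0 + np.exp(-z))

rng = np.random.default_rng(0)
x = rng.normal(size=(1, 8))   # one sample with 8 features (row vector)
w = rng.normal(size=(8, 1))   # one weight per feature (column vector); placeholder values
b = 0.5                       # scalar bias; placeholder value

# Explicit sum over the 8 features
z_sum = sum(x[0, n] * w[n, 0] for n in range(8)) + b

# Same quantity as a (1 x 8) @ (8 x 1) matrix product
z_mat = (x @ w + b)[0, 0]

y_hat_sum = sigmoid(z_sum)
y_hat_mat = sigmoid(z_mat)
print(y_hat_sum, y_hat_mat)
```

Both routes give the same prediction; the matrix form is the one that extends naturally to many samples at once.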

6.4 Mini-Batch(N samples)

import torch

class Liang(torch.nn.Module):
    def __init__(self):
        super(Liang, self).__init__()
        # 8 input features -> 1 output value
        self.linear = torch.nn.Linear(8, 1)
        self.sigmoid = torch.nn.Sigmoid()

    def forward(self, x):
        # x has shape (N, 8); the result has shape (N, 1)
        x = self.sigmoid(self.linear(x))
        return x

model = Liang()

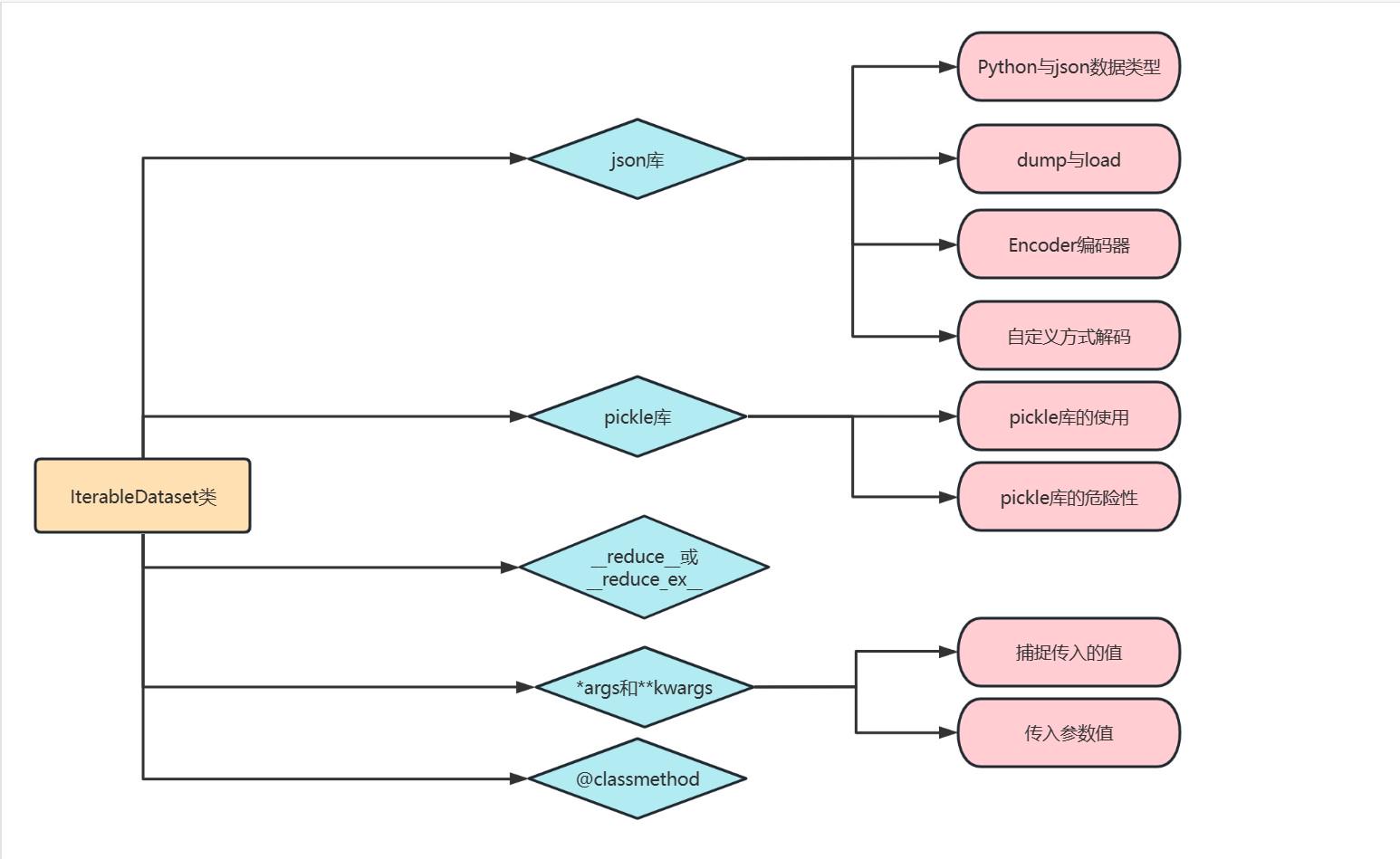
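To make the mini-batch idea concrete, the sketch below pushes N samples through a single `torch.nn.Linear(8, 1)` layer at once. The batch size N = 5 and the random inputs are assumptions for illustration only. The point is the shapes: an (N, 8) input yields an (N, 1) output, and the sigmoid is applied element-wise.

```python
import torch

torch.manual_seed(0)
N = 5                                  # hypothetical batch size
x_batch = torch.randn(N, 8)            # N samples, 8 features each (random placeholder data)

linear = torch.nn.Linear(8, 1)         # same layer shape as in the model above
sigmoid = torch.nn.Sigmoid()

z = linear(x_batch)                    # computes x_batch @ W^T + b for all rows at once
y_hat = sigmoid(z)                     # element-wise, so the shape is unchanged

# The same result written out by hand with the layer's own parameters
z_manual = x_batch @ linear.weight.T + linear.bias

print(z.shape, y_hat.shape)
```

Note that `torch.nn.Linear` stores its weight with shape (out_features, in_features), which is why the manual version uses the transpose.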

6.5 Neural Network

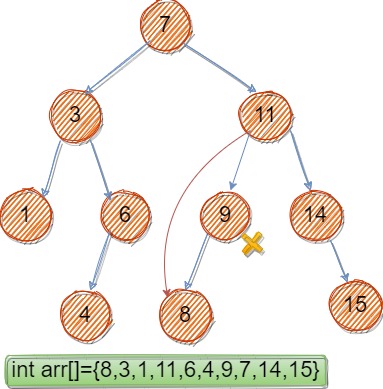

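With an 8-dimensional input and a 2-dimensional output:

self.linear = torch.nn.Linear(8, 2)

With an 8-dimensional input and a 6-dimensional output:

self.linear = torch.nn.Linear(8, 6)

A layer can reduce the dimension or increase it, and layers can be chained so that the dimension goes down and then up, or up and then down: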

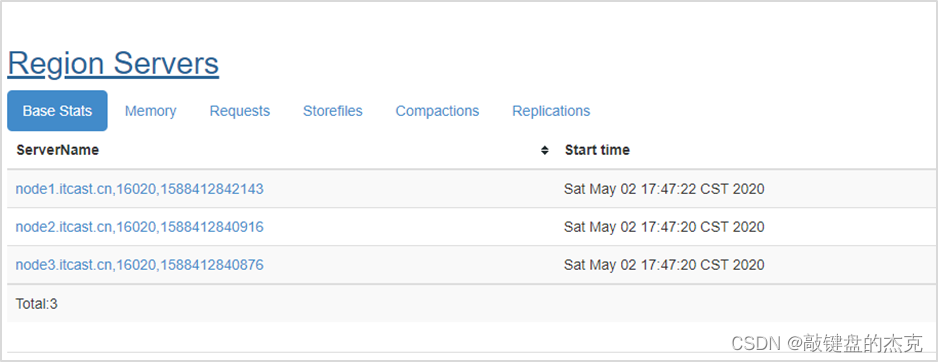
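A small sketch of how chained linear layers change the feature dimension. The sizes 8 -> 6 -> 4 -> 1 follow the complete code at the end of this post; the batch of 3 random samples and the use of a sigmoid after every layer are assumptions made for illustration.

```python
import torch

torch.manual_seed(0)
x = torch.randn(3, 8)                      # 3 placeholder samples with 8 features

layers = [
    torch.nn.Linear(8, 6),                 # 8-D -> 6-D
    torch.nn.Linear(6, 4),                 # 6-D -> 4-D
    torch.nn.Linear(4, 1),                 # 4-D -> 1-D
]
sigmoid = torch.nn.Sigmoid()

shapes = [tuple(x.shape)]
h = x
for layer in layers:
    h = sigmoid(layer(h))                  # linear map followed by a nonlinearity
    shapes.append(tuple(h.shape))

print(shapes)  # [(3, 8), (3, 6), (3, 4), (3, 1)]
```

The only constraint when chaining is that each layer's input size equals the previous layer's output size.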

6.6 Diabetes Prediction

X1~X8: the patient's measured indicators

Y: whether the condition will have worsened one year later (the value to predict)

6.6.1 Prepare Dataset

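import numpy as np
import torch

# Load the comma-separated file as float32
xy = np.loadtxt('../data/diabetes.csv.gz', delimiter=',', dtype=np.float32)
x_data = torch.from_numpy(xy[:, :-1])  # all rows, every column except the last
y_data = torch.from_numpy(xy[:, [-1]])  # all rows, last column only; [-1] keeps it a matrix rather than a vector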
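The difference between `xy[:, -1]` and `xy[:, [-1]]` is easy to miss, so here is a minimal NumPy sketch on a made-up 3 x 4 array (not the real dataset). Indexing with a list keeps the column axis, which gives labels of shape (N, 1) that line up with the model output.

```python
import numpy as np

# Made-up stand-in for the loaded file: 3 rows, 3 feature columns + 1 label column
xy = np.array([[0.1, 0.2, 0.3, 1.0],
               [0.4, 0.5, 0.6, 0.0],
               [0.7, 0.8, 0.9, 1.0]], dtype=np.float32)

features = xy[:, :-1]     # all rows, every column except the last
labels_vec = xy[:, -1]    # integer index drops the axis: 1-D vector
labels_mat = xy[:, [-1]]  # list index keeps the axis: 2-D column

print(features.shape)     # (3, 3)
print(labels_vec.shape)   # (3,)
print(labels_mat.shape)   # (3, 1)
```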

6.6.2 Define Model
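The model for this task stacks three linear layers, as in the complete code at the end of this post. As an alternative sketch (not the author's class-based definition), the same 8 -> 6 -> 4 -> 1 stack could be written with `torch.nn.Sequential`. The name `model_seq` and the random input are placeholders.

```python
import torch

torch.manual_seed(0)

# Sequential version of the 8 -> 6 -> 4 -> 1 stack; each Linear is followed by an activation
model_seq = torch.nn.Sequential(
    torch.nn.Linear(8, 6),
    torch.nn.Tanh(),
    torch.nn.Linear(6, 4),
    torch.nn.Tanh(),
    torch.nn.Linear(4, 1),
    torch.nn.Sigmoid(),      # final sigmoid keeps outputs in (0, 1) for BCELoss
)

x = torch.randn(10, 8)       # 10 placeholder samples with 8 features
y_hat = model_seq(x)

# Weights plus biases: (8*6 + 6) + (6*4 + 4) + (4*1 + 1)
n_params = sum(p.numel() for p in model_seq.parameters())
print(y_hat.shape, n_params)
```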

6.6.3 Construct Loss and Optimizer

criterion = torch.nn.BCELoss(reduction='mean')

optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
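`torch.nn.BCELoss` with `reduction='mean'` averages the binary cross-entropy over all elements. The sketch below checks this against the formula written out by hand; the probabilities and labels are made-up values, not model output.

```python
import torch

y_pred = torch.tensor([[0.9], [0.2], [0.6]])   # made-up predicted probabilities
y_true = torch.tensor([[1.0], [0.0], [1.0]])   # made-up binary labels

criterion = torch.nn.BCELoss(reduction='mean')
loss_builtin = criterion(y_pred, y_true)

# -(y * log(p) + (1 - y) * log(1 - p)), averaged over the samples
loss_manual = -(y_true * torch.log(y_pred)
                + (1 - y_true) * torch.log(1 - y_pred)).mean()

print(loss_builtin.item(), loss_manual.item())
```

Because the loss takes logarithms of the predictions, the model's last layer has to produce values strictly between 0 and 1, which is what the final sigmoid provides.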

6.6.4 Training Cycle

for epoch in range(100):
    # Forward
    y_pred = model(x_data)  # This program does not use mini-batches for training; DataLoader is covered later.
    loss = criterion(y_pred, y_data)
    print(epoch, loss.item())

    # Backward
    optimizer.zero_grad()
    loss.backward()

    # Update
    optimizer.step()

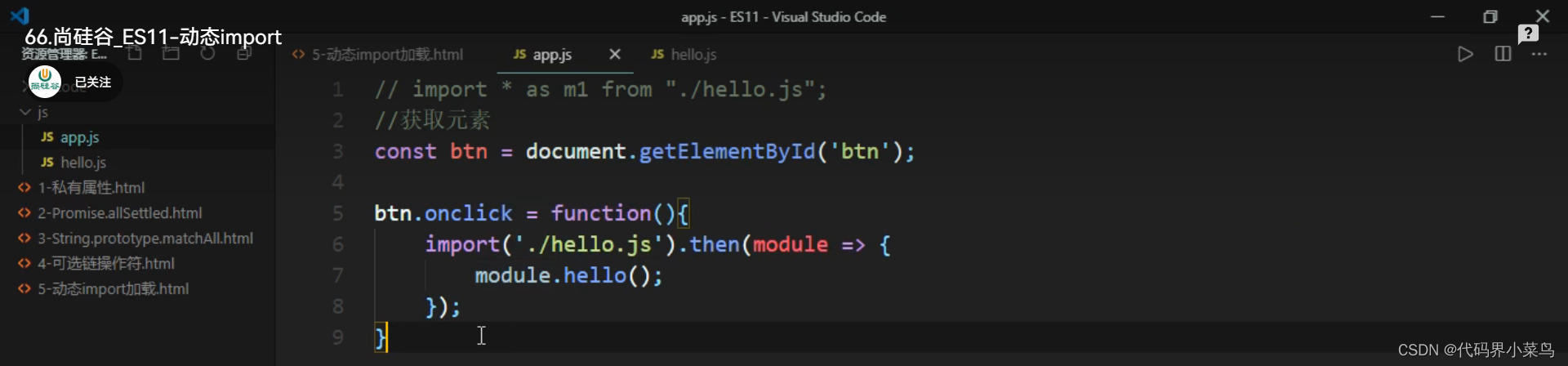
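The loop above only prints the loss. As one possible follow-up that is not part of the original post, predicted probabilities can be thresholded at 0.5 to get a rough training accuracy. The helper name `binary_accuracy` and the sample tensors below are hypothetical.

```python
import torch

def binary_accuracy(y_prob, y_true, threshold=0.5):
    """Fraction of samples whose thresholded probability matches the label."""
    y_label = (y_prob >= threshold).float()      # probabilities -> 0/1 predictions
    return (y_label == y_true).float().mean().item()

# Made-up probabilities and labels, shaped (N, 1) like model(x_data) and y_data
y_prob = torch.tensor([[0.8], [0.3], [0.6], [0.1]])
y_true = torch.tensor([[1.0], [0.0], [0.0], [0.0]])

acc = binary_accuracy(y_prob, y_true)
print(acc)  # 0.75
```

With a trained model this could be called as `binary_accuracy(model(x_data), y_data)`, preferably inside a `torch.no_grad()` block since no gradients are needed for evaluation.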

6.6.5 Activation Function

Visualising activation functions in neural networks: https://dashee87.github.io/deep%20learning/visualising-activation-functions-in-neural-networks/

PyTorch documentation: https://pytorch.org/docs/stable/nn.html#non-linear-activations-weighted-sum-nonlinearity
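For a quick feel of how these functions differ, the sketch below applies three common activations to the same handful of inputs. The input values are arbitrary, and the choice of sigmoid, tanh, and ReLU is just a sample of what the PyTorch documentation lists.

```python
import torch

z = torch.tensor([-2.0, 0.0, 2.0])   # a few sample pre-activation values

sig = torch.sigmoid(z)    # squashes into (0, 1)
tanh = torch.tanh(z)      # squashes into (-1, 1)
relu = torch.relu(z)      # zero for negative inputs, identity otherwise

print(sig)
print(tanh)
print(relu)
```

In the complete code, trying a different hidden-layer activation means swapping `torch.nn.Tanh()` for another module such as `torch.nn.ReLU()`, while the last layer keeps the sigmoid so that `BCELoss` receives values in (0, 1).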

6.6.6 Complete Code

import torch
import numpy as np
import matplotlib.pyplot as plt

# Prepare dataset
xy = np.loadtxt('../data/diabetes.csv.gz', delimiter=',', dtype=np.float32)
x_data = torch.from_numpy(xy[:, :-1])  # all rows, every column except the last
y_data = torch.from_numpy(xy[:, [-1]])  # all rows, last column only; [-1] keeps it a matrix rather than a vector

# Define model: 8 -> 6 -> 4 -> 1
class Liang(torch.nn.Module):
    def __init__(self):
        super(Liang, self).__init__()
        self.linear1 = torch.nn.Linear(8, 6)
        self.linear2 = torch.nn.Linear(6, 4)
        self.linear3 = torch.nn.Linear(4, 1)
        self.sigmoid = torch.nn.Sigmoid()  # output activation, keeps predictions in (0, 1)
        self.tanh = torch.nn.Tanh()  # hidden-layer activation

    def forward(self, x):
        x = self.tanh(self.linear1(x))
        x = self.tanh(self.linear2(x))
        x = self.sigmoid(self.linear3(x))
        return x

model = Liang()

# Construct loss and optimizer
criterion = torch.nn.BCELoss(reduction='mean')
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

epoch_list = []
loss_list = []

# Training cycle
for epoch in range(100):
    # Forward
    y_pred = model(x_data)
    loss = criterion(y_pred, y_data)
    print(epoch, loss.item())

    epoch_list.append(epoch)
    loss_list.append(loss.item())

    # Backward
    optimizer.zero_grad()
    loss.backward()

    # Update
    optimizer.step()

# Plot the loss curve
plt.plot(epoch_list, loss_list)
plt.xlabel('Epoch')
plt.ylabel('Loss')
plt.title('Tanh')
plt.show()