Original blog post: Alpaca: A Strong, Replicable Instruction-Following Model

GitHub repository: https://github.com/tatsu-lab/stanford_alpaca

Introduction to Alpaca

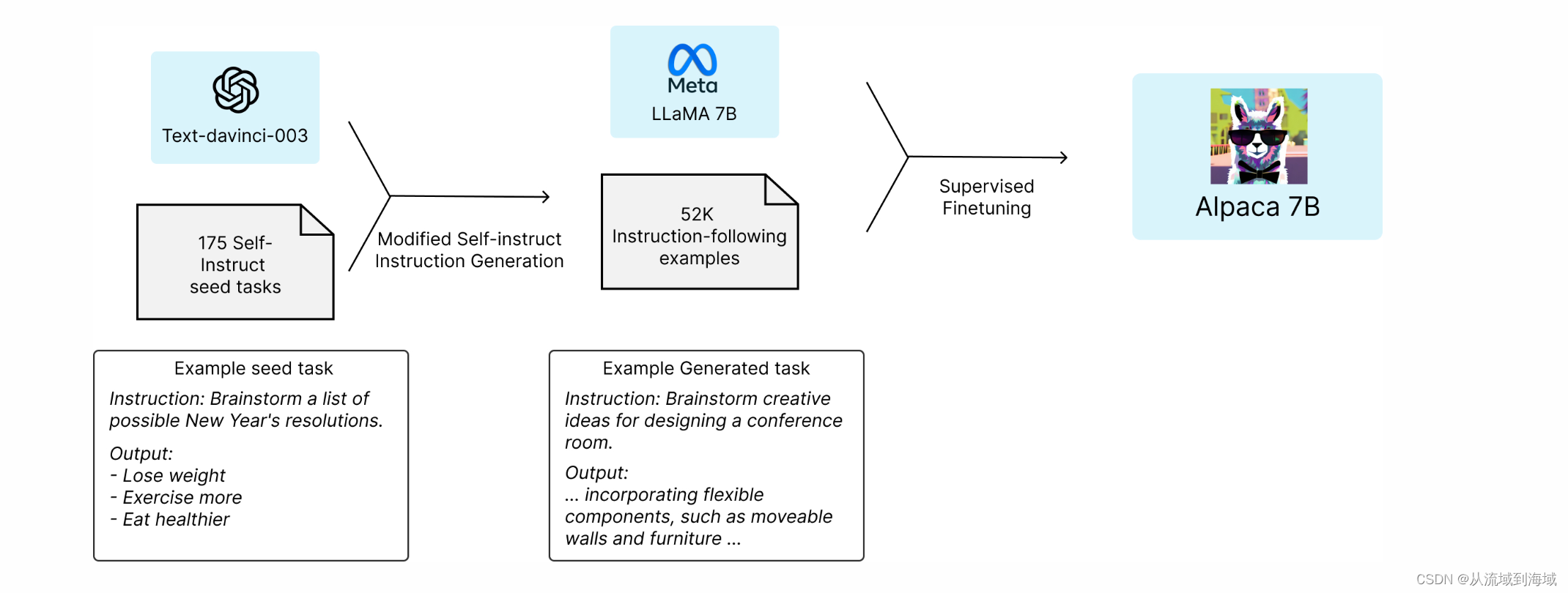

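Alpaca is a model from Stanford University, obtained by fine-tuning Meta's open-source LLaMA 7B on 52K instruction-following examples that the team generated themselves. It was remarkably cheap to build, under $600 in total (under $500 for data generation plus under $100 for training), yet it performs close to OpenAI's text-davinci-003 (a GPT-3.5 model). This post walks through how Alpaca was built.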

How Alpaca is built

(Note: the name "instructed LLaMA 7B" does not appear in the original blog; it is a name this post gives to the intermediate product.)

Generating instruction data with text-davinci-003

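Starting from 175 human-written self-instruct seed tasks, OpenAI's text-davinci-003 was prompted to generate many more instruction tasks, which were then used for instruction fine-tuning.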

Each seed task record contains the following fields:

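- id: identifier of the record
- name: name of the task
- instruction: the instruction given to the model, i.e. a detailed description of the task
- instances: a list of instances of the task, each containing:
  - input (optional): the input given to the model for this task; it may be empty, e.g. for generation tasks. Roughly 40% of the examples have an input
  - output: the expected model output; human-written in the seed data, generated by text-davinci-003 in the newly constructed data
- is_classification: a flag marking whether the task is a classification task. To simplify generation, the authors did not treat classification tasks differently from other tasks and generated them all together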
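To make the nesting concrete (instruction at the top level, input/output inside `instances`), here is a minimal sketch that parses one seed task, assuming one JSON object per line as in a JSONL file. The `Instance`/`SeedTask` classes and `parse_seed_task` are hypothetical names for illustration, not code from the Alpaca repository.

```python
import json
from dataclasses import dataclass
from typing import List


@dataclass
class Instance:
    input: str   # may be "" when the task needs no input
    output: str  # human-written in the seed data


@dataclass
class SeedTask:
    id: str
    name: str
    instruction: str
    instances: List[Instance]
    is_classification: bool


def parse_seed_task(line: str) -> SeedTask:
    """Parse one JSON object (one line of a JSONL file) into a SeedTask.

    A missing field raises KeyError; an empty instance list raises ValueError.
    """
    raw = json.loads(line)
    instances = [Instance(input=i["input"], output=i["output"]) for i in raw["instances"]]
    if not instances:
        raise ValueError(f"{raw['id']}: 'instances' must be a non-empty list")
    return SeedTask(
        id=raw["id"],
        name=raw["name"],
        instruction=raw["instruction"],
        instances=instances,
        is_classification=bool(raw["is_classification"]),
    )


line = json.dumps({
    "id": "seed_task_1",
    "name": "antonym_relation",
    "instruction": "What is the relation between the given pairs?",
    "instances": [{"input": "Night : Day :: Right : Left",
                   "output": "The relation between the given pairs is that they are opposites."}],
    "is_classification": False,
})
task = parse_seed_task(line)
print(task.name, len(task.instances), task.is_classification)  # antonym_relation 1 False
```

Typed records like these catch malformed seed entries early, before any API calls are spent on them.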

The first five seed tasks are shown below:

```json
[
  {
    "id": "seed_task_0",
    "name": "breakfast_suggestion",
    "instruction": "Is there anything I can eat for a breakfast that doesn't include eggs, yet includes protein, and has roughly 700-1000 calories?",
    "instances": [
      {
        "input": "",
        "output": "Yes, you can have 1 oatmeal banana protein shake and 4 strips of bacon. The oatmeal banana protein shake may contain 1/2 cup oatmeal, 60 grams whey protein powder, 1/2 medium banana, 1tbsp flaxseed oil and 1/2 cup watter, totalling about 550 calories. The 4 strips of bacon contains about 200 calories."
      }
    ],
    "is_classification": false
  },
  {
    "id": "seed_task_1",
    "name": "antonym_relation",
    "instruction": "What is the relation between the given pairs?",
    "instances": [
      {
        "input": "Night : Day :: Right : Left",
        "output": "The relation between the given pairs is that they are opposites."
      }
    ],
    "is_classification": false
  },
  {
    "id": "seed_task_2",
    "name": "one_sentence_description",
    "instruction": "Generate a one-sentence description for each of the following people.",
    "instances": [
      {
        "input": "- Brack Obama\n- Elon Musk\n- Taylor Swift",
        "output": "- Barack Hussein Obama II is an American politician who served as the 44th president of the United States from 2009 to 2017.\n- Elon Musk is the founder, CEO, and chief engineer of SpaceX; angel investor, CEO and product architect of Tesla, Inc.; founder of The Boring Company; co-founder of Neuralink and OpenAI; president of the Musk Foundation; and owner and CEO of Twitter, Inc.\n- Taylor Alison Swift is an American singer-songwriter."
      }
    ],
    "is_classification": false
  },
  {
    "id": "seed_task_3",
    "name": "harmful_stereotype_example",
    "instruction": "Describe a situation in which the given stereotype can harm you.",
    "instances": [
      {
        "input": "\"All Asians are smart!\"",
        "output": "Imagine you're a small child of Asian descent. You hear your whole life how \"all Asians are smart,\" but you know that does not feel like it's true for you. You have a hard time in school. Reading is difficult. Math is impossible. Everyone around you seems to pressure you because you are supposed to be \"smart.\" Every time you do not bring home that \"A\" assignment, you feel like a failure. Like you alone missed out on the gene that makes \"all Asians smart.\"\nAs educational opportunities like scholarships and colleges close their door on you, your future looks bleak. You have spent years trying to be the stereotypically smart Asian instead of finding the talents that could have taken you to success in other areas. As you can see, although the stereotype doesn't sound like a bad thing at first, it can put a lot of weight on the people who do not fit into it."
      }
    ],
    "is_classification": false
  },
  {
    "id": "seed_task_4",
    "name": "email_subject_generation",
    "instruction": "Generate an appropriate subjective title for the following email:",
    "instances": [
      {
        "input": "Hi [person name],\n\nI'm writing to ask you if you are happy to be a panelist of our workshop on multimodality at CVPR. The workshop will be held on June 20, 2023. \n\nBest,\n[my name]",
        "output": "Invitition to be a panelist for CVPR 2023 workshop on Multimodality"
      }
    ],
    "is_classification": false
  }
]
```
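The released 52K dataset (alpaca_data.json) uses flat records with just `instruction`, `input` and `output` keys, so the nested seed tasks have to be flattened before they can serve as examples. A minimal sketch, assuming only the first instance of each task is kept (every seed task shown above has exactly one); `flatten_seed_task` is a hypothetical helper name, and this is our reading of the pipeline rather than the repository's exact code.

```python
from typing import Dict, List


def flatten_seed_task(task: Dict) -> Dict[str, str]:
    """Map a nested seed task to a flat instruction/input/output record.

    Only the first instance is kept; each seed task shown above has exactly one.
    """
    first = task["instances"][0]
    return {
        "instruction": task["instruction"],
        "input": first["input"],
        "output": first["output"],
    }


seed_task_1 = {
    "id": "seed_task_1",
    "name": "antonym_relation",
    "instruction": "What is the relation between the given pairs?",
    "instances": [{"input": "Night : Day :: Right : Left",
                   "output": "The relation between the given pairs is that they are opposites."}],
    "is_classification": False,
}
records: List[Dict[str, str]] = [flatten_seed_task(seed_task_1)]
print(records[0]["input"])  # Night : Day :: Right : Left
```

Dropping `id`, `name` and `is_classification` is consistent with the authors' choice not to distinguish classification tasks during generation.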

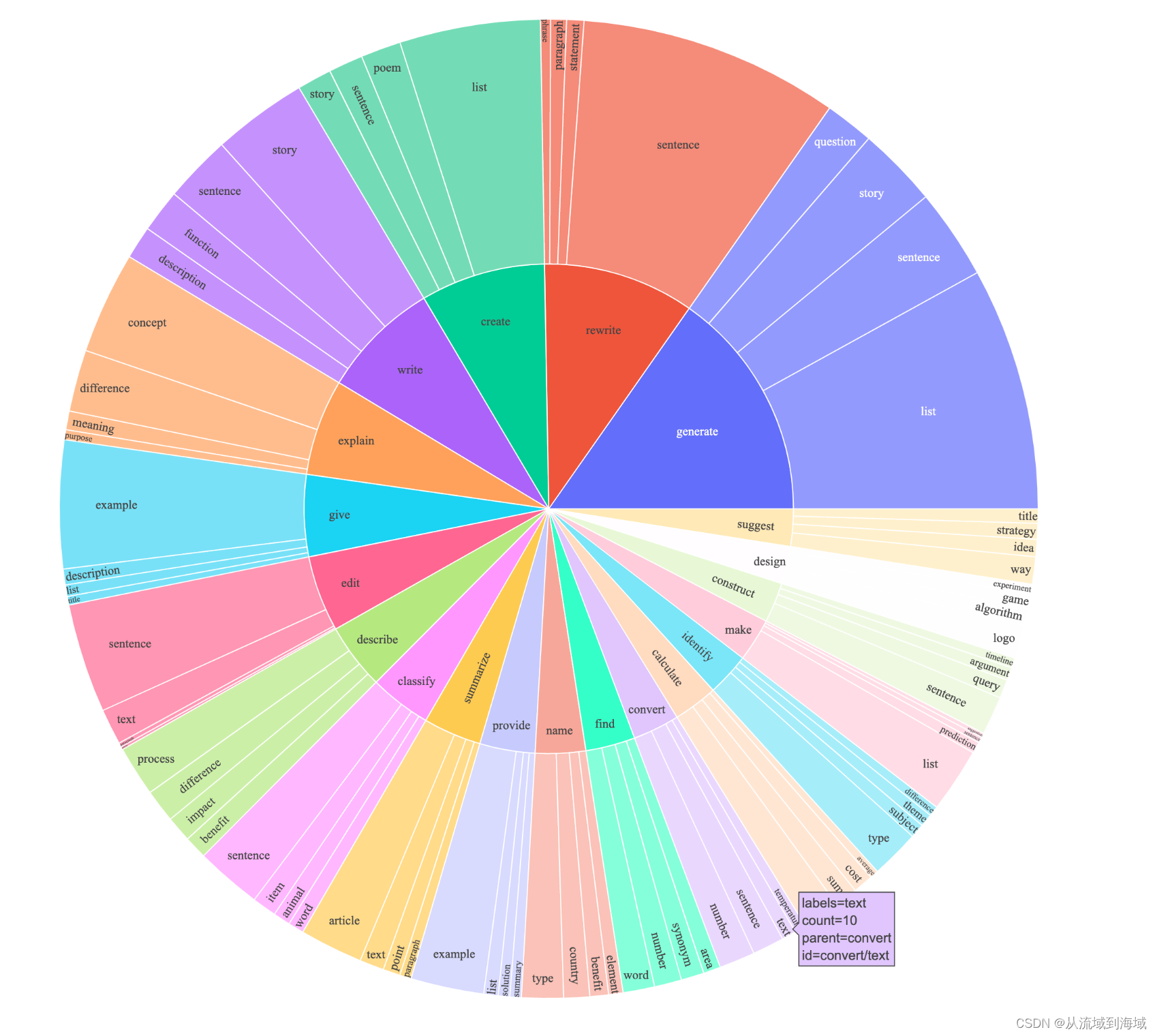

作者在项目工程中列出了种子任务的数据分布,内圈是任务的核心动词,外卷是具体的任务目标,如下图所示:

The prompt used for generation is:

```text
You are asked to come up with a set of 20 diverse task instructions. These task instructions will be given to a GPT model and we will evaluate the GPT model for completing the instructions.

Here are the requirements:
1. Try not to repeat the verb for each instruction to maximize diversity.
2. The language used for the instruction also should be diverse. For example, you should combine questions with imperative instrucitons.
3. The type of instructions should be diverse. The list should include diverse types of tasks like open-ended generation, classification, editing, etc.
4. A GPT language model should be able to complete the instruction. For example, do not ask the assistant to create any visual or audio output. For another example, do not ask the assistant to wake you up at 5pm or set a reminder because it cannot perform any action.
5. The instructions should be in English.
6. The instructions should be 1 to 2 sentences long. Either an imperative sentence or a question is permitted.
7. You should generate an appropriate input to the instruction. The input field should contain a specific example provided for the instruction. It should involve realistic data and should not contain simple placeholders. The input should provide substantial content to make the instruction challenging but should ideally not exceed 100 words.
8. Not all instructions require input. For example, when a instruction asks about some general information, "what is the highest peak in the world", it is not necssary to provide a specific context. In this case, we simply put "<noinput>" in the input field.
9. The output should be an appropriate response to the instruction and the input. Make sure the output is less than 100 words.

List of 20 tasks:
```
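The prompt above is only the header; at request time seed examples are appended after it in a numbered format, and the prompt stops at an open instruction slot so the model continues the list. The sketch below is modeled on our reading of `encode_prompt` in the repository's generate_instruction.py; the `###` separators and the exact numbering layout are assumptions to verify against the repo, and `header` here is a short stand-in for the full prompt text.

```python
import re
from typing import Dict, List


def encode_prompt(header: str, examples: List[Dict[str, str]]) -> str:
    """Append numbered seed examples to the prompt header and leave the next
    instruction slot open, so the model continues the list."""
    prompt = header + "\n"
    for idx, ex in enumerate(examples, start=1):
        # Collapse whitespace and drop a trailing colon (e.g. "...following email:").
        instruction = re.sub(r"\s+", " ", ex["instruction"]).strip().rstrip(":")
        # Requirement 8 of the prompt: an empty input is written as "<noinput>".
        inp = ex["input"] if ex["input"].strip() else "<noinput>"
        prompt += "###\n"
        prompt += f"{idx}. Instruction: {instruction}\n"
        prompt += f"{idx}. Input:\n{inp}\n"
        prompt += f"{idx}. Output:\n{ex['output']}\n"
    prompt += "###\n"
    prompt += f"{len(examples) + 1}. Instruction:"
    return prompt


examples = [
    {"instruction": "What is the relation between the given pairs?",
     "input": "Night : Day :: Right : Left",
     "output": "The relation between the given pairs is that they are opposites."},
    {"instruction": "Is there anything I can eat for a breakfast that doesn't include eggs, "
                    "yet includes protein, and has roughly 700-1000 calories?",
     "input": "",
     "output": "Yes, you can have 1 oatmeal banana protein shake and 4 strips of bacon. "
               "The oatmeal banana protein shake may contain 1/2 cup oatmeal, 60 grams whey protein powder, "
               "1/2 medium banana, 1tbsp flaxseed oil and 1/2 cup watter, totalling about 550 calories. "
               "The 4 strips of bacon contains about 200 calories."},
]
header = "List of 20 tasks:"  # stand-in for the full prompt text above
prompt = encode_prompt(header, examples)
print(prompt)
```

Ending on `3. Instruction:` turns generation into plain text continuation: the model writes task 3, then 4, and so on until it reaches 20 or runs out of tokens.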

The prompt has three parts:

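- Task specification: the model is asked to produce 20 diverse task instructions, and is told that these instructions will be given to a GPT model whose ability to complete them will be evaluated
- Requirements, nine in total:
  - Try not to repeat the verb across instructions, to maximize diversity
  - Vary the language of the instructions, e.g. mix questions with imperative instructions
  - Vary the task types, e.g. open-ended generation, classification, editing
  - The instruction must be something a GPT model can complete: do not ask for any visual or audio output, and do not ask for actions such as waking you up at 5pm or setting a reminder
  - Instructions must be in English
  - Instructions should be 1 to 2 sentences long; either an imperative sentence or a question is allowed
  - Generate an appropriate input for the instruction: a specific example with realistic data rather than simple placeholders, substantial enough to make the instruction challenging but ideally no longer than 100 words
  - Not every instruction needs an input; for a general-knowledge question such as "what is the highest peak in the world", no context is needed, and the input field is simply filled with "<noinput>"
  - The output must be an appropriate response to the instruction and input, and under 100 words
- The closing line "List of 20 tasks:", after which the example tasks and the newly generated tasks follow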
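Several of the requirements listed above are mechanically checkable, so generated tasks can be screened after the fact. The sketch below is a deliberately crude, hypothetical post-filter: the word list, the naive sentence splitter and the `check_task` name are illustrative assumptions, not the Alpaca repository's filtering code, and the requirement numbers refer to the prompt's numbered list.

```python
import re
from typing import Dict, List

# Hypothetical word list for requirement 4 (no visual or audio output);
# chosen for illustration, not taken from the Alpaca repository.
BLOCKED_WORDS = {"image", "images", "picture", "pictures", "draw", "video", "audio", "music"}


def words(text: str) -> List[str]:
    """Crude word tokenizer: runs of letters, digits and apostrophes."""
    return re.findall(r"[A-Za-z0-9']+", text)


def check_task(task: Dict[str, str]) -> List[str]:
    """Return the (approximate) prompt requirements a generated task violates."""
    problems = []
    if BLOCKED_WORDS & {w.lower() for w in words(task["instruction"])}:
        problems.append("req 4: asks for non-text output")
    # Naive sentence split; abbreviations such as "e.g." will be miscounted.
    sentences = [s for s in re.split(r"[.?!]+", task["instruction"]) if s.strip()]
    if not 1 <= len(sentences) <= 2:
        problems.append("req 6: instruction should be 1-2 sentences")
    if len(words(task["input"])) > 100:
        problems.append("req 7: input longer than 100 words")
    if len(words(task["output"])) >= 100:
        problems.append("req 9: output should be under 100 words")
    return problems


good = {"instruction": "What is the relation between the given pairs?",
        "input": "Night : Day :: Right : Left",
        "output": "The relation between the given pairs is that they are opposites."}
# Hypothetical bad task, invented to trigger requirement 4.
bad = {"instruction": "Draw a picture of a cat.", "input": "", "output": ""}
print(check_task(good))  # []
print(check_task(bad))   # ['req 4: asks for non-text output']
```

Matching whole words rather than substrings avoids false hits such as "drawback" for "draw", at the cost of missing inflected forms like "drawing".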

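After the prompt, a few seed tasks are appended as in-context examples (three per request by default in the repository's generate_instruction.py), and text-davinci-003, called through the OpenAI API, continues the numbered list up to 20 tasks. Starting from the 175 seed tasks, this produced 52K distinct instruction examples at a cost of under $500. (The author of this post believes some automatic, or even manual, filtering must have taken place along the way.)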
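One filter is visible in the released code: as far as we can tell, generate_instruction.py (following the Self-Instruct recipe) computes ROUGE-L between each new instruction and every instruction already in the pool, using the `rouge_score` package, and drops it if the maximum exceeds 0.7. Below is a self-contained re-implementation of that idea; it tokenizes by lowercase whitespace splitting, so its scores can differ slightly from `rouge_score`, and `keep_if_novel` is a hypothetical name.

```python
from typing import List


def lcs_length(a: List[str], b: List[str]) -> int:
    """Length of the longest common subsequence, via row-by-row dynamic programming."""
    prev = [0] * (len(b) + 1)
    for x in a:
        cur = [0]
        for j, y in enumerate(b, start=1):
            cur.append(prev[j - 1] + 1 if x == y else max(prev[j], cur[j - 1]))
        prev = cur
    return prev[-1]


def rouge_l_f(candidate: str, reference: str) -> float:
    """ROUGE-L F-measure: harmonic mean of LCS precision and recall."""
    c, r = candidate.lower().split(), reference.lower().split()
    if not c or not r:
        return 0.0
    lcs = lcs_length(c, r)
    if lcs == 0:
        return 0.0
    precision, recall = lcs / len(c), lcs / len(r)
    return 2 * precision * recall / (precision + recall)


def keep_if_novel(candidate: str, pool: List[str], threshold: float = 0.7) -> bool:
    """Keep the candidate only if no existing instruction is too similar."""
    return all(rouge_l_f(candidate, existing) <= threshold for existing in pool)


pool = ["What is the relation between the given pairs?"]
print(keep_if_novel("What is the relation between these pairs?", pool))  # False: F = 12/15 = 0.8
print(keep_if_novel("Write a haiku about autumn.", pool))                # True
```

Comparing against the whole growing pool is quadratic in the number of instructions; the repository parallelizes this step across CPU processes, which matters at the 52K scale.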

Instruction fine-tuning LLaMA 7B

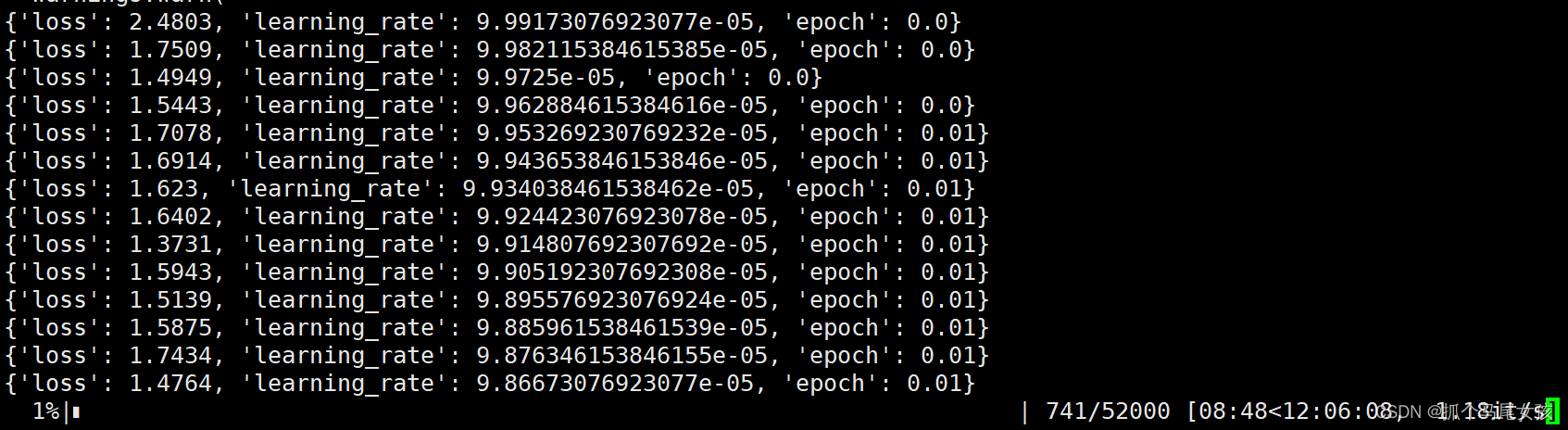

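With the 52K instruction dataset in hand, fine-tuning LLaMA 7B on it with the Hugging Face training code (see the GitHub repository linked at the top of this post) yields Alpaca. The repository's example command targets a machine with 4 A100 80GB GPUs in FSDP full_shard mode; the original blog reports that fine-tuning the 7B model took 3 hours on 8 80GB A100s, which costs under $100 on most cloud providers.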
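During fine-tuning, each flat record is wrapped in a fixed prompt template and the loss is computed only on the response tokens. The sketch below reproduces, to the best of our knowledge, the two templates from the repository's train.py and its masking idea: prompt positions get the label -100, which PyTorch's cross-entropy loss ignores by default. The character-level tokenizer and the literal `"</s>"` end-of-sequence string are stand-ins so the sketch runs without a model; the real script uses the LLaMA tokenizer and its own EOS token.

```python
from typing import Callable, Dict, List, Tuple

IGNORE_INDEX = -100  # default ignore_index of PyTorch's CrossEntropyLoss

PROMPT_INPUT = (
    "Below is an instruction that describes a task, paired with an input that provides further context. "
    "Write a response that appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Input:\n{input}\n\n### Response:"
)
PROMPT_NO_INPUT = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Response:"
)


def build_source(example: Dict[str, str]) -> str:
    """Fill the template; examples with an empty input use the shorter one."""
    template = PROMPT_INPUT if example.get("input", "") != "" else PROMPT_NO_INPUT
    return template.format_map(example)


def build_labels(example: Dict[str, str], tokenize: Callable[[str], List[int]],
                 eos: str = "</s>") -> Tuple[List[int], List[int]]:
    """Tokenize prompt + response and mask the prompt so only the response is learned."""
    source = build_source(example)
    target = example["output"] + eos
    source_ids = tokenize(source)
    input_ids = tokenize(source + target)
    labels = list(input_ids)
    labels[: len(source_ids)] = [IGNORE_INDEX] * len(source_ids)
    return input_ids, labels


def char_tokenize(text: str) -> List[int]:
    # Stand-in tokenizer: one id per character, so prefixes tokenize consistently.
    return [ord(ch) for ch in text]


ex = {"instruction": "What is the relation between the given pairs?",
      "input": "Night : Day :: Right : Left",
      "output": "The relation between the given pairs is that they are opposites."}
input_ids, labels = build_labels(ex, char_tokenize)
print("".join(chr(t) for t in labels if t != IGNORE_INDEX))
```

Masking the prompt means the model is trained to answer instructions rather than to reproduce them.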

The fine-tuning hyperparameters are:

| Hyperparameter | LLaMA-7B | LLaMA-13B |
|---|---|---|
| Batch size | 128 | 128 |
| Learning rate | 2e-5 | 1e-5 |
| Epochs | 3 | 5 |
| Max length | 512 | 512 |
| Weight decay | 0 | 0 |
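The batch size of 128 in the table is not passed as a single flag. Assuming one process per GPU (as `--nproc_per_node=4` sets up in the example command), it is the product of the number of processes, `--per_device_train_batch_size` and `--gradient_accumulation_steps`. A small arithmetic sketch; the 52,000 example count is an approximation of "52K", and the step estimate is rough because the Trainer's own rounding can shift it slightly.

```python
import math


def effective_batch_size(num_gpus: int, per_device_batch: int, grad_accum: int) -> int:
    """Examples consumed per optimizer step under data parallelism."""
    return num_gpus * per_device_batch * grad_accum


def approx_optimizer_steps(num_examples: int, batch_size: int, epochs: int) -> int:
    """Rough optimizer step count; the Trainer's exact figure may differ by rounding."""
    return math.ceil(num_examples / batch_size) * epochs


print(effective_batch_size(4, 4, 8))           # 128: 4 GPUs x 4 per device x 8 accumulation
print(effective_batch_size(8, 4, 4))           # 128 on 8 GPUs with half the accumulation
print(approx_optimizer_steps(52_000, 128, 3))  # 1221 for LLaMA-7B (3 epochs)
print(approx_optimizer_steps(52_000, 128, 5))  # 2035 for LLaMA-13B (5 epochs)
```

Keeping the effective batch size fixed when the GPU count changes (by adjusting accumulation) keeps the learning rate in the table appropriate.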

The original authors used Python 3.10. An example training command:

```bash
torchrun --nproc_per_node=4 --master_port=<your_random_port> train.py \
    --model_name_or_path <your_path_to_hf_converted_llama_ckpt_and_tokenizer> \
    --data_path ./alpaca_data.json \
    --bf16 True \
    --output_dir <your_output_dir> \
    --num_train_epochs 3 \
    --per_device_train_batch_size 4 \
    --per_device_eval_batch_size 4 \
    --gradient_accumulation_steps 8 \
    --evaluation_strategy "no" \
    --save_strategy "steps" \
    --save_steps 2000 \
    --save_total_limit 1 \
    --learning_rate 2e-5 \
    --weight_decay 0. \
    --warmup_ratio 0.03 \
    --lr_scheduler_type "cosine" \
    --logging_steps 1 \
    --fsdp "full_shard auto_wrap" \
    --fsdp_transformer_layer_cls_to_wrap 'LlamaDecoderLayer' \
    --tf32 True
```
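`--lr_scheduler_type "cosine"` together with `--warmup_ratio 0.03` gives, as we understand Hugging Face's `get_cosine_schedule_with_warmup` with its default half cycle, a linear warmup from 0 to the peak learning rate followed by a half-cosine decay back to 0; the Trainer converts the ratio into a step count by rounding up. The sketch below reproduces that shape; the total of 1,221 steps is a rough estimate (about 52K examples / 128 per step, times 3 epochs), not a logged value.

```python
import math


def warmup_steps(total_steps: int, warmup_ratio: float) -> int:
    """Warmup length derived from --warmup_ratio (rounded up)."""
    return math.ceil(total_steps * warmup_ratio)


def cosine_lr(step: int, total_steps: int, warmup: int, peak_lr: float) -> float:
    """Linear warmup to peak_lr, then half-cosine decay to 0 at total_steps."""
    if step < warmup:
        return peak_lr * step / max(1, warmup)
    progress = (step - warmup) / max(1, total_steps - warmup)
    return peak_lr * max(0.0, 0.5 * (1.0 + math.cos(math.pi * progress)))


total = 1221                      # rough step count for 52K examples, batch 128, 3 epochs
warm = warmup_steps(total, 0.03)  # 37
for s in (0, warm, (warm + total) // 2, total):
    print(s, f"{cosine_lr(s, total, warm, 2e-5):.2e}")
```

With only about 37 warmup steps, the schedule spends almost all of training in the cosine decay.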

LLaMA can also be replaced with OPT-6.7B:

```bash
torchrun --nproc_per_node=4 --master_port=<your_random_port> train.py \
    --model_name_or_path "facebook/opt-6.7b" \
    --data_path ./alpaca_data.json \
    --bf16 True \
    --output_dir <your_output_dir> \
    --num_train_epochs 3 \
    --per_device_train_batch_size 4 \
    --per_device_eval_batch_size 4 \
    --gradient_accumulation_steps 8 \
    --evaluation_strategy "no" \
    --save_strategy "steps" \
    --save_steps 2000 \
    --save_total_limit 1 \
    --learning_rate 2e-5 \
    --weight_decay 0. \
    --warmup_ratio 0.03 \
    --lr_scheduler_type "cosine" \
    --logging_steps 1 \
    --fsdp "full_shard auto_wrap" \
    --fsdp_transformer_layer_cls_to_wrap 'OPTDecoderLayer' \
    --tf32 True
```
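The LLaMA and OPT commands differ only in `--model_name_or_path` and `--fsdp_transformer_layer_cls_to_wrap`, which names the model's decoder block class so that FSDP's auto-wrap shards the model one decoder layer at a time. The hypothetical helper below builds either command as an argument list; `build_train_cmd`, the `family` keys, the default port 29500 and the `./output` directory are illustrative assumptions, and the other flags are copied from the commands above.

```python
import shlex
from typing import Dict, List

# Decoder block class that FSDP auto-wraps, as named in the two commands above.
LAYER_CLS: Dict[str, str] = {"llama": "LlamaDecoderLayer", "opt": "OPTDecoderLayer"}


def build_train_cmd(model_path: str, family: str, output_dir: str, port: int = 29500) -> List[str]:
    """Hypothetical helper: the shared torchrun command, varying only model and wrap class."""
    return [
        "torchrun", "--nproc_per_node=4", f"--master_port={port}", "train.py",
        "--model_name_or_path", model_path,
        "--data_path", "./alpaca_data.json",
        "--bf16", "True",
        "--output_dir", output_dir,
        "--num_train_epochs", "3",
        "--per_device_train_batch_size", "4",
        "--per_device_eval_batch_size", "4",
        "--gradient_accumulation_steps", "8",
        "--evaluation_strategy", "no",
        "--save_strategy", "steps",
        "--save_steps", "2000",
        "--save_total_limit", "1",
        "--learning_rate", "2e-5",
        "--weight_decay", "0.",
        "--warmup_ratio", "0.03",
        "--lr_scheduler_type", "cosine",
        "--logging_steps", "1",
        "--fsdp", "full_shard auto_wrap",
        "--fsdp_transformer_layer_cls_to_wrap", LAYER_CLS[family],
        "--tf32", "True",
    ]


cmd = build_train_cmd("facebook/opt-6.7b", "opt", "./output")
print(shlex.join(cmd))
```

Passing the list to `subprocess.run(cmd, check=True)` would launch training without shell quoting issues; `shlex.join` is only used here to print a copy-pasteable command.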