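1. Introduction to the Kubernetes High-Availability Project

A single master node is a single point of failure and is not suitable for production. A highly available Kubernetes cluster is primarily about keeping the API Server on the master nodes available. The API Server is the only entry point for creating, reading, updating, and deleting Kubernetes resource objects, and it acts as the data bus and data hub of the whole system. Placing a load balancer in front of several master nodes keeps the container platform available when one master fails.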

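2. Project Architecture Design

2.1 Host Information

Prepare six virtual machines: three master nodes and three worker nodes. Keep the number of master nodes an odd number of at least 3.

Hardware: at least 2 CPU cores, 2 GB of memory, and 20 GB of disk

Network: all machines can reach each other and can access the internet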

| Operating system | IP address | Role | Hostname |
| --- | --- | --- | --- |
| CentOS7-x86-64 | 192.168.50.53 | master | k8s-master1 |
| CentOS7-x86-64 | 192.168.50.51 | master | k8s-master2 |
| CentOS7-x86-64 | 192.168.50.50 | master | k8s-master3 |
| CentOS7-x86-64 | 192.168.50.54 | node | k8s-node1 |
| CentOS7-x86-64 | 192.168.50.66 | node | k8s-node2 |
| CentOS7-x86-64 | 192.168.50.61 | node | k8s-node3 |
| N/A | 192.168.50.123 | vip | master.k8s.io |

Project architecture diagram

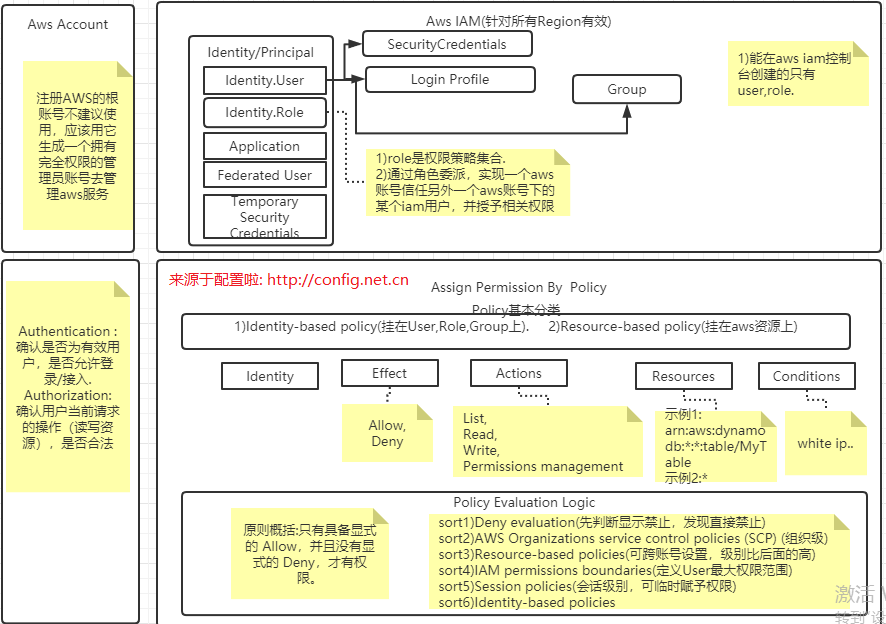

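This is a Kubernetes cluster with multiple load-balanced master nodes. The official documentation describes two topologies: stacked control plane nodes and external etcd nodes. This guide builds the first one.

(Stacked control plane nodes)

Implementation approach

Each master node runs four services: etcd, apiserver, controller-manager, and scheduler. Kubernetes already handles high availability for three of them. controller-manager and scheduler run on every master but use leader election, so only one instance of each is active at a time. The etcd members form a cluster that replicates data and stays available while a majority of members is healthy. What remains is to make the apiserver highly available.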

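keepalived is a high-availability and hot-standby solution that protects against service interruption caused by a single server failing. It works in master/backup mode and needs at least two servers. For example, keepalived can group three servers behind a single virtual IP (VIP); under normal conditions the VIP is bound to the network interface of only one of them. If that server fails, keepalived moves the VIP to one of the remaining two servers so the address stays reachable.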

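haproxy is free, fast, and reliable proxy software that provides high availability and load balancing for TCP (layer 4) and HTTP (layer 7) applications and supports virtual hosts. Here haproxy load-balances the backend apiserver instances so that the apiserver service is highly available.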

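This guide combines keepalived and haproxy: keepalived provides a stable external entry point, and haproxy balances load across the API servers behind it. Because haproxy runs on the master nodes, it stops when its master fails, so haproxy is deployed on every master node to keep the proxy itself highly available. Because the stacked etcd members decide by majority vote, an odd number of master nodes is preferred: it avoids tied votes, and an even count adds no extra fault tolerance.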
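The preference for an odd number of masters follows from majority voting. The sketch below is only an illustration of that arithmetic (the function names `quorum` and `fault_tolerance` are made up for this example): a cluster of n voting members needs floor(n/2) + 1 of them to agree.

```shell
#!/bin/sh
# Quorum size for a cluster of n voting members: floor(n/2) + 1.
quorum() {
    echo $(( $1 / 2 + 1 ))
}

# Number of members that can fail while a quorum can still be formed.
fault_tolerance() {
    echo $(( $1 - ($1 / 2 + 1) ))
}

for n in 1 2 3 4 5; do
    echo "members=$n quorum=$(quorum "$n") tolerated_failures=$(fault_tolerance "$n")"
done
```

Three and four members both tolerate a single failure, which is why adding a fourth master buys nothing over three.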

Implementation

System initialization (all hosts)

Disable the firewall and set the hostname

[root@ ~]# hostnamectl set-hostname k8s-master1

[root@ ~]# bash

[root@~]# systemctl stop firewalld

[root@ ~]# systemctl disable firewalld

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

Disable SELinux

[root@~]# sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

[root@~]# setenforce 0

Disable swap

[root@~]# swapoff -a

[root@~]# sed -ri 's/.*swap.*/#&/' /etc/fstab
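The sed command above prefixes every line that mentions swap, including lines that are already commented. As a hedged alternative, the sketch below previews the change without touching /etc/fstab and skips lines that are already comments. The function name `comment_swap` and the sample entries are assumptions made for this illustration.

```shell
#!/bin/sh
# Print fstab-style input with active swap entries commented out.
# Lines that already start with '#' are left untouched.
# Reads the files given as arguments, or stdin when none are given.
comment_swap() {
    sed -E '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$@"
}

# Preview on sample data instead of the real /etc/fstab.
printf '%s\n' \
    '/dev/mapper/centos-root / xfs defaults 0 0' \
    '/dev/mapper/centos-swap swap swap defaults 0 0' |
    comment_swap
```

On a real host the preview would be `comment_swap /etc/fstab`, compared against the original before applying the edit in place.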

Hostname mapping

[root@k8s-master1 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.50.53 master1.k8s.io k8s-master1

192.168.50.51 master2.k8s.io k8s-master2

192.168.50.50 master3.k8s.io k8s-master3

192.168.50.54 node1.k8s.io k8s-node1

192.168.50.66 node2.k8s.io k8s-node2

192.168.50.61 node3.k8s.io k8s-node3

192.168.50.123 master.k8s.io k8s-vip
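Since the same mappings have to be present on every host, it can help to add them in a way that is safe to repeat. The following is a minimal sketch, not part of the original procedure: `add_host_entry` is a hypothetical helper that appends an entry only when the IP address has no line yet. The demo writes to a temporary file rather than /etc/hosts.

```shell
#!/bin/sh
# Append "ip names..." to a hosts file only if that IP has no entry yet.
# $1 = hosts file, $2 = IP address, remaining arguments = host names
add_host_entry() {
    file=$1
    ip=$2
    shift 2
    # Exact match on the first field, so 192.168.50.5 does not match 192.168.50.53.
    if awk -v ip="$ip" '$1 == ip { found = 1 } END { exit !found }' "$file" 2>/dev/null; then
        return 0
    fi
    echo "$ip $*" >> "$file"
}

hosts_file=$(mktemp)
add_host_entry "$hosts_file" 192.168.50.53 master1.k8s.io k8s-master1
add_host_entry "$hosts_file" 192.168.50.123 master.k8s.io k8s-vip
add_host_entry "$hosts_file" 192.168.50.123 master.k8s.io k8s-vip   # repeated call changes nothing
cat "$hosts_file"
rm -f "$hosts_file"
```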

Pass bridged IPv4 traffic to the iptables chains

[root@~]# cat << EOF >> /etc/sysctl.conf

> net.bridge.bridge-nf-call-ip6tables = 1

> net.bridge.bridge-nf-call-iptables = 1

> EOF

[root@~]# modprobe br_netfilter

[root@ ~]# sysctl -p

Time synchronization

[root@k8s-master1 ~]# yum -y install ntpdate

已加载插件:fastestmirror

Determining fastest mirrors

epel/x86_64/metalink | 7.3 kB 00:00:00

[root@k8s-master1 ~]# ntpdate time.windows.com

15 Aug 13:50:29 ntpdate[61505]: adjust time server 52.231.114.183 offset -0.002091 sec

Configure and deploy keepalived

Install keepalived (all master hosts)

[root@k8s-master1 ~]# yum -y install keepalived

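Configuration on the three k8s-master nodes. The same file is written on all three masters here. In a typical keepalived setup only one node is declared MASTER and the others use state BACKUP with a lower priority, and the interface name (ens33) must match the host's actual network interface.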

[root@ ~]# cat > /etc/keepalived/keepalived.conf <<EOF

> ! Configuration File for keepalived

> global_defs {

> router_id k8s

> }

> vrrp_script check_haproxy {

> script "killall -0 haproxy"

> interval 3

> weight -2

> fall 10

> rise 2

> }

> vrrp_instance VI_1 {

> state MASTER

> interface ens33

> virtual_router_id 51

> priority 100

> advert_int 1

> authentication {

> auth_type PASS

> auth_pass 1111

> }

> virtual_ipaddress {

> 192.168.50.123

> }

> track_script {

> check_haproxy

> }

> }

> EOF
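The `vrrp_script` block above ties the node's VRRP priority to the health of haproxy. As a rough model of keepalived's documented behaviour, a negative `weight` is added to the priority once the script has failed `fall` times in a row, and a positive `weight` is added while the script succeeds. The function name `effective_priority` below is invented for this sketch.

```shell
#!/bin/sh
# Effective VRRP priority for one tracked script.
# $1 = base priority, $2 = script weight, $3 = script state ("ok" or "fail")
effective_priority() {
    base=$1
    weight=$2
    state=$3
    if [ "$weight" -lt 0 ] && [ "$state" = "fail" ]; then
        echo $(( base + weight ))   # negative weight lowers priority on failure
    elif [ "$weight" -gt 0 ] && [ "$state" = "ok" ]; then
        echo $(( base + weight ))   # positive weight raises priority on success
    else
        echo "$base"
    fi
}

echo "haproxy running: $(effective_priority 100 -2 ok)"
echo "haproxy stopped: $(effective_priority 100 -2 fail)"
```

With the values in the configuration above, a node whose haproxy has stopped drops from 100 to 98, and only after 10 failed checks at 3-second intervals. The VIP moves only if another node then advertises a priority above 98, which is worth keeping in mind if different priorities are assigned per node.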

Start and verify

Run on all master nodes

[root@k8s-master1 ~]# systemctl start keepalived

[root@k8s-master1 ~]# systemctl enable keepalived

Created symlink from /etc/systemd/system/multi-user.target.wants/keepalived.service to /usr/lib/systemd/system/keepalived.service.

Check the service status

[root@k8s-master1 ~]# systemctl status keepalived

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; enabled; vendor preset: disabled)

Active: active (running) since 二 2023-08-15 13:54:16 CST; 52s ago

Main PID: 61546 (keepalived)

CGroup: /system.slice/keepalived.service

├─61546 /usr/sbin/keepalived -D

├─61547 /usr/sbin/keepalived -D

└─61548 /usr/sbin/keepalived -D

8月 15 13:54:22 k8s-master1 Keepalived_vrrp[61548]: Sending gratuitous ARP on ens33 for 192.168....23

8月 15 13:54:22 k8s-master1 Keepalived_vrrp[61548]: Sending gratuitous ARP on ens33 for 192.168....23

8月 15 13:54:22 k8s-master1 Keepalived_vrrp[61548]: Sending gratuitous ARP on ens33 for 192.168....23

8月 15 13:54:22 k8s-master1 Keepalived_vrrp[61548]: Sending gratuitous ARP on ens33 for 192.168....23

8月 15 13:54:23 k8s-master1 Keepalived_vrrp[61548]: Sending gratuitous ARP on ens33 for 192.168....23

8月 15 13:54:23 k8s-master1 Keepalived_vrrp[61548]: VRRP_Instance(VI_1) Sending/queueing gratuit...23

8月 15 13:54:23 k8s-master1 Keepalived_vrrp[61548]: Sending gratuitous ARP on ens33 for 192.168....23

8月 15 13:54:23 k8s-master1 Keepalived_vrrp[61548]: Sending gratuitous ARP on ens33 for 192.168....23

8月 15 13:54:23 k8s-master1 Keepalived_vrrp[61548]: Sending gratuitous ARP on ens33 for 192.168....23

8月 15 13:54:23 k8s-master1 Keepalived_vrrp[61548]: Sending gratuitous ARP on ens33 for 192.168....23

Hint: Some lines were ellipsized, use -l to show in full.

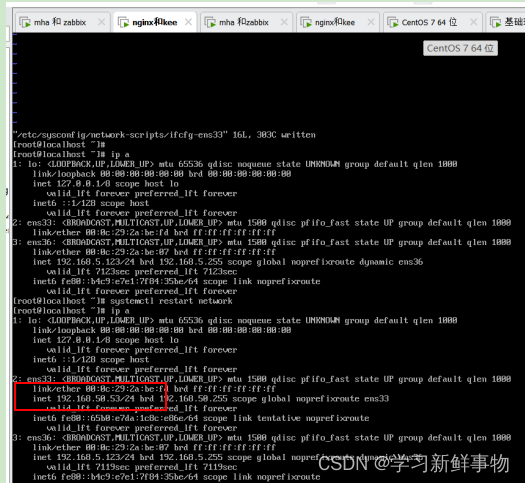

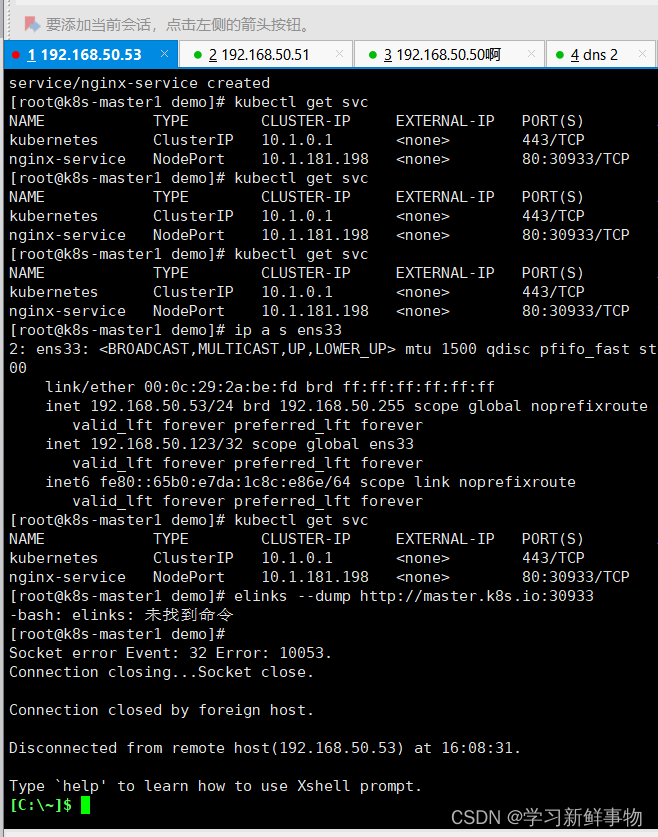

After startup, check the network information on master1

[root@k8s-master1 ~]# ip a s ens33

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:2a:be:fd brd ff:ff:ff:ff:ff:ff

inet 192.168.50.53/24 brd 192.168.50.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.50.123/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::65b0:e7da:1c8c:e86e/64 scope link noprefixroute

valid_lft forever preferred_lft forever
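Checking by eye whether the VIP is bound gets tedious when it has to be repeated on three masters. The sketch below filters `ip addr` output for the address; `has_vip` is a hypothetical helper name, and the sample text stands in for real command output so that the example runs anywhere.

```shell
#!/bin/sh
# Succeeds when the given IPv4 address appears as an inet entry
# in `ip addr` output supplied on stdin.
has_vip() {
    grep -qF "inet $1/"
}

# Sample output in place of: ip addr show ens33
sample='    inet 192.168.50.53/24 brd 192.168.50.255 scope global noprefixroute ens33
    inet 192.168.50.123/32 scope global ens33'

if printf '%s\n' "$sample" | has_vip 192.168.50.123; then
    echo "VIP is bound on this host"
else
    echo "VIP is not bound on this host"
fi
```

On a master node the check would be `ip addr show ens33 | has_vip 192.168.50.123`.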

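Configure and deploy haproxy

Install haproxy on all master hosts

[root@k8s-master1 ~]# yum -y install haproxy

The configuration is identical on every master node. It declares each master's API server as a backend and binds haproxy to port 16443, so port 16443 is the load-balanced entry point to the cluster. Note that the kubeadm configuration used later in this guide sets controlPlaneEndpoint to master.k8s.io:6443, which reaches the API server on the VIP holder directly; pointing it at master.k8s.io:16443 instead would send that traffic through haproxy.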

[root@k8s-master1 ~]# cat > /etc/haproxy/haproxy.cfg << EOF

> #-------------------------------

> # Global settings

> #-------------------------------

> global

> log 127.0.0.1 local2

> chroot /var/lib/haproxy

> pidfile /var/run/haproxy.pid

> maxconn 4000

> user haproxy

> group haproxy

> daemon

> stats socket /var/lib/haproxy/stats

> #--------------------------------

> # common defaults that all the 'listen' and 'backend' sections will

> # use if not designated in their block

> #--------------------------------

> defaults

> mode http

> log global

> option httplog

> option dontlognull

> option http-server-close

> option forwardfor except 127.0.0.0/8

> option redispatch

> retries 3

> timeout http-request 10s

> timeout queue 1m

> timeout connect 10s

> timeout client 1m

> timeout server 1m

> timeout http-keep-alive 10s

> timeout check 10s

> maxconn 3000

> #--------------------------------

> # kubernetes apiserver frontend which proxys to the backends

> #--------------------------------

> frontend kubernetes-apiserver

> mode tcp

> bind *:16443

> option tcplog

> default_backend kubernetes-apiserver

> #---------------------------------

> #round robin balancing between the various backends

> #---------------------------------

> backend kubernetes-apiserver

> mode tcp

> balance roundrobin

> server master1.k8s.io 192.168.50.53:6443 check

> server master2.k8s.io 192.168.50.51:6443 check

> server master3.k8s.io 192.168.50.50:6443 check

> #---------------------------------

> # collection haproxy statistics message

> #---------------------------------

> listen stats

> bind *:1080

> stats auth admin:awesomePassword

> stats refresh 5s

> stats realm HAProxy\ Statistics

> stats uri /admin?stats

> EOF
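The backend section is the only part of this file that depends on the master addresses. As an illustration only, the `server` lines can be generated from a list of name=address pairs; `backend_servers` is a made-up helper, and the pairs below are the ones from the host table.

```shell
#!/bin/sh
# Print one haproxy "server" line per API server.
# $1 = API server port, remaining arguments = name=ip pairs
backend_servers() {
    port=$1
    shift
    for pair in "$@"; do
        name=${pair%%=*}   # text before the first '='
        ip=${pair#*=}      # text after the first '='
        echo "    server $name $ip:$port check"
    done
}

backend_servers 6443 \
    master1.k8s.io=192.168.50.53 \
    master2.k8s.io=192.168.50.51 \
    master3.k8s.io=192.168.50.50
```

Before starting the service, `haproxy -c -f /etc/haproxy/haproxy.cfg` checks the file for syntax errors without starting the proxy.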

Start and verify

Run on all master nodes

[root@k8s-master1 ~]# systemctl start haproxy

[root@k8s-master1 ~]# systemctl enable haproxy

Created symlink from /etc/systemd/system/multi-user.target.wants/haproxy.service to /usr/lib/systemd/system/haproxy.service.

Check the service status

[root@k8s-master1 ~]# systemctl status haproxy

● haproxy.service - HAProxy Load Balancer

Loaded: loaded (/usr/lib/systemd/system/haproxy.service; enabled; vendor preset: disabled)

Active: active (running) since 二 2023-08-15 13:58:13 CST; 39s ago

Main PID: 61623 (haproxy-systemd)

CGroup: /system.slice/haproxy.service

├─61623 /usr/sbin/haproxy-systemd-wrapper -f /etc/haproxy/haproxy.cfg -p /run/haproxy.pi...

├─61624 /usr/sbin/haproxy -f /etc/haproxy/haproxy.cfg -p /run/haproxy.pid -Ds

└─61625 /usr/sbin/haproxy -f /etc/haproxy/haproxy.cfg -p /run/haproxy.pid -Ds

8月 15 13:58:13 k8s-master1 systemd[1]: Started HAProxy Load Balancer.

8月 15 13:58:13 k8s-master1 haproxy-systemd-wrapper[61623]: haproxy-systemd-wrapper: executing /...Ds

8月 15 13:58:13 k8s-master1 haproxy-systemd-wrapper[61623]: [WARNING] 226/135813 (61624) : confi...e.

8月 15 13:58:13 k8s-master1 haproxy-systemd-wrapper[61623]: [WARNING] 226/135813 (61624) : confi...e.

Hint: Some lines were ellipsized, use -l to show in full.

Check the listening ports

[root@k8s-master1 ~]# netstat -lntup | grep haproxy

tcp 0 0 0.0.0.0:1080 0.0.0.0:* LISTEN 61625/haproxy

tcp 0 0 0.0.0.0:16443 0.0.0.0:* LISTEN 61625/haproxy

udp 0 0 0.0.0.0:51633 0.0.0.0:* 61624/haproxy

Configure and deploy Docker

Deploy Docker on every host, because in this setup Kubernetes uses Docker as its container runtime.

[root@k8s-master1 ~]# wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

--2023-08-15 13:59:44-- http://mirrors.aliyun.com/repo/Centos-7.repo

正在解析主机 mirrors.aliyun.com (mirrors.aliyun.com)... 42.202.208.242, 140.249.32.202, 140.249.32.203, ...

正在连接 mirrors.aliyun.com (mirrors.aliyun.com)|42.202.208.242|:80... 已连接。

已发出 HTTP 请求,正在等待回应... 200 OK

长度:2523 (2.5K) [application/octet-stream]

正在保存至: “/etc/yum.repos.d/CentOS-Base.repo”

100%[============================================================>] 2,523 --.-K/s 用时 0s

2023-08-15 13:59:45 (451 MB/s) - 已保存 “/etc/yum.repos.d/CentOS-Base.repo” [2523/2523])

[root@ ~]# yum -y install yum-utils device-mapper-persistent-data lvm2

When installing Docker with YUM, the Alibaba Cloud YUM mirror is recommended.

[root@~]# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@ ~]# yum clean all && yum makecache fast

[root@~]# yum -y install docker-ce

[root@ ~]# systemctl start docker

[root@ ~]# systemctl enable docker

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

Registry mirror (configure on all hosts)

[root@k8s-master1 ~]# cat << END > /etc/docker/daemon.json

> {

> "registry-mirrors":[ "https://nyakyfun.mirror.aliyuncs.com" ]

> }

> END

[root@k8s-master1 ~]# systemctl daemon-reload

[root@k8s-master1 ~]# systemctl restart docker

Deploy kubelet, kubeadm, and kubectl

When installing Kubernetes with YUM, the Alibaba Cloud YUM mirror is recommended.

Configure on all hosts

[root@k8s-master1 ~]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo

> [kubernetes]

> name=Kubernetes

> baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

> enabled=1

> gpgcheck=1

> repo_gpgcheck=1

> gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

> https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

> EOF

Install kubelet, kubeadm, and kubectl

Run on all hosts

[root@k8s-master1 ~]# yum -y install kubelet-1.20.0 kubeadm-1.20.0 kubectl-1.20.0

[root@k8s-master1 ~]# systemctl enable kubelet

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

Deploy the Kubernetes master

Run this on the master that currently holds the VIP, which here is k8s-master1.

Create the kubeadm-config.yaml file

[root@k8s-master1 ~]# cat > kubeadm-config.yaml << EOF

> apiServer:
>   certSANs:
>   - k8s-master1
>   - k8s-master2
>   - k8s-master3
>   - master.k8s.io
>   - 192.168.50.53
>   - 192.168.50.51
>   - 192.168.50.50
>   - 192.168.50.123
>   - 127.0.0.1
>   extraArgs:
>     authorization-mode: Node,RBAC
>   timeoutForControlPlane: 4m0s
> apiVersion: kubeadm.k8s.io/v1beta1
> certificatesDir: /etc/kubernetes/pki
> clusterName: kubernetes
> controlPlaneEndpoint: "master.k8s.io:6443"
> controllerManager: {}
> dns:
>   type: CoreDNS
> etcd:
>   local:
>     dataDir: /var/lib/etcd
> imageRepository: registry.aliyuncs.com/google_containers
> kind: ClusterConfiguration
> kubernetesVersion: v1.20.0
> networking:
>   dnsDomain: cluster.local
>   podSubnet: 10.244.0.0/16
>   serviceSubnet: 10.1.0.0/16
> scheduler: {}

> EOF

List the required images

[root@k8s-master1 ~]# kubeadm config images list --config kubeadm-config.yaml

W0815 14:35:35.677463 62285 common.go:77] your configuration file uses a deprecated API spec: "kubeadm.k8s.io/v1beta1". Please use 'kubeadm config migrate --old-config old.yaml --new-config new.yaml', which will write the new, similar spec using a newer API version.

registry.aliyuncs.com/google_containers/kube-apiserver:v1.20.0

registry.aliyuncs.com/google_containers/kube-controller-manager:v1.20.0

registry.aliyuncs.com/google_containers/kube-scheduler:v1.20.0

registry.aliyuncs.com/google_containers/kube-proxy:v1.20.0

registry.aliyuncs.com/google_containers/pause:3.2

registry.aliyuncs.com/google_containers/etcd:3.4.13-0

registry.aliyuncs.com/google_containers/coredns:1.7.0

Upload and load the images required by k8s (all master hosts)

Create a directory named master (mkdir master) and place the image archives in it

[root@k8s-master1 ~]# ll

-rw-------. 1 root root 1417 6月 19 21:55 anaconda-ks.cfg

-rw-r--r--. 1 root root 41715200 9月 6 2022 coredns.tar

-rw-r--r--. 1 root root 290009600 9月 6 2022 etcd.tar

-rw-r--r--. 1 root root 716 8月 15 14:34 kubeadm-config.yaml

-rw-r--r--. 1 root root 172517376 9月 6 2022 kube-apiserver.tar

-rw-r--r--. 1 root root 162437120 9月 6 2022 kube-controller-manager.tar

[root@k8s-master1 master]# ls | while read line

> do

> docker load < "$line"

> done

unexpected EOF

archive/tar: invalid tar header

225df95e717c: Loading layer 336.4kB/336.4kB

7c9b0f448297: Loading layer 41.37MB/41.37MB

Loaded image ID: sha256:70f311871ae12c14bd0e02028f249f933f925e4370744e4e35f706da773a8f61

fe9a8b4f1dcc: Loading layer 43.87MB/43.87MB

ce04b89b7def: Loading layer 224.9MB/224.9MB

1b2bc745b46f: Loading layer 21.22MB/21.22MB

Loaded image ID: sha256:303ce5db0e90dab1c5728ec70d21091201a23cdf8aeca70ab54943bbaaf0833f

archive/tar: invalid tar header

fc4976bd934b: Loading layer 53.88MB/53.88MB

f103db1d7ea4: Loading layer 118.6MB/118.6MB

Loaded image ID: sha256:0cae8d5cc64c7d8fbdf73ee2be36c77fdabd9e0c7d30da0c12aedf402730bbb2

01b437934b9d: Loading layer 108.5MB/108.5MB

Loaded image ID: sha256:5eb3b7486872441e0943f6e14e9dd5cc1c70bc3047efacbc43d1aa9b7d5b3056

682fbb19de80: Loading layer 21.06MB/21.06MB

2dc2f2423ad1: Loading layer 5.168MB/5.168MB

ad9fb2411669: Loading layer 4.608kB/4.608kB

597151d24476: Loading layer 8.192kB/8.192kB

0d8d54147a3a: Loading layer 8.704kB/8.704kB

6bc5ae70fa9e: Loading layer 37.81MB/37.81MB

Loaded image ID: sha256:7d54289267dc5a115f940e8b1ea5c20483a5da5ae5bb3ad80107409ed1400f19

ac06623e44c6: Loading layer 42.1MB/42.1MB

Loaded image ID: sha256:78c190f736b115876724580513fdf37fa4c3984559dc9e90372b11c21b9cad28

e17133b79956: Loading layer 744.4kB/744.4kB

Loaded image ID: sha256:da86e6ba6ca197bf6bc5e9d900febd906b133eaa4750e6bed647b0fbe50ed43e

Initialize k8s with kubeadm

[root@k8s-master1 ~]# kubeadm init --config kubeadm-config.yaml

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join master.k8s.io:6443 --token gmpskr.9sdadby8vakx1wfl \

--discovery-token-ca-cert-hash sha256:391a6edaefa12d19d18f6bda19cd0979f65140a5ac2b496e4d098ac86c03d6d2 \

--control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join master.k8s.io:6443 --token gmpskr.9sdadby8vakx1wfl \

--discovery-token-ca-cert-hash sha256:391a6edaefa12d19d18f6bda19cd0979f65140a5ac2b496e4d098ac86c03d6d2
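The value passed to `--discovery-token-ca-cert-hash` is a SHA-256 digest, so it is always `sha256:` followed by exactly 64 lowercase hexadecimal digits. A quick format check before pasting a join command can catch a hash that lost a character during copy and paste. The helper name `valid_ca_cert_hash` is an assumption made for this sketch.

```shell
#!/bin/sh
# Succeeds when the argument has the shape of a kubeadm CA certificate hash:
# the literal prefix "sha256:" followed by 64 lowercase hex digits.
valid_ca_cert_hash() {
    printf '%s\n' "$1" | grep -Eq '^sha256:[0-9a-f]{64}$'
}

hash="sha256:391a6edaefa12d19d18f6bda19cd0979f65140a5ac2b496e4d098ac86c03d6d2"
if valid_ca_cert_hash "$hash"; then
    echo "hash format looks valid"
else
    echo "hash is malformed; copy it again from the kubeadm init output"
fi
```

This only checks the format. Whether the hash matches the cluster CA is verified by kubeadm itself during the join.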

Follow the instructions printed by the initialization

[root@k8s-master1 master]# mkdir -p $HOME/.kube

[root@k8s-master1 master]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s-master1 master]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

Check the cluster status

[root@k8s-master1 master]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

scheduler Unhealthy Get "http://127.0.0.1:10251/healthz": dial tcp 127.0.0.1:10251: connect: connection refused

controller-manager Unhealthy Get "http://127.0.0.1:10252/healthz": dial tcp 127.0.0.1:10252: connect: connection refused

etcd-0 Healthy {"health":"true"}

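Note: these errors appear because kube-controller-manager.yaml and kube-scheduler.yaml under /etc/kubernetes/manifests/ pass --port=0, which disables the insecure health ports (10252 and 10251) that kubectl get cs probes. Commenting out that argument in both files resolves it; the kubelet restarts the static Pods automatically once the files are saved.

Edit kube-controller-manager.yaml and kube-scheduler.yaml (the leading number below is the line number shown in vim)

[root@k8s-master1 master]# vim /etc/kubernetes/manifests/kube-controller-manager.yaml

26 # - --port=0

[root@k8s-master1 master]# vim /etc/kubernetes/manifests/kube-scheduler.yaml

19 # - --port=0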
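The same edit has to be repeated on every master, so a non-interactive version can be handy. The following is a sketch rather than the procedure used in this guide: `disable_port_zero` is a hypothetical helper that comments out the `- --port=0` argument while keeping its indentation, and the demo runs against a temporary sample file instead of a real manifest.

```shell
#!/bin/sh
# Comment out the "- --port=0" argument in a static Pod manifest.
# Indentation is preserved, and a line that is already commented is not changed.
disable_port_zero() {
    manifest=$1
    edited=$(mktemp)
    sed -E 's/^([[:space:]]*)(- --port=0)[[:space:]]*$/\1# \2/' "$manifest" > "$edited" &&
        cat "$edited" > "$manifest"
    rm -f "$edited"
}

# Demo on a sample excerpt.
sample=$(mktemp)
printf '%s\n' '    - kube-scheduler' '    - --leader-elect=true' '    - --port=0' > "$sample"
disable_port_zero "$sample"
cat "$sample"
rm -f "$sample"
```

On a master node the calls would target /etc/kubernetes/manifests/kube-controller-manager.yaml and /etc/kubernetes/manifests/kube-scheduler.yaml.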

Check the cluster status again

[root@k8s-master1 master]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}

View Pod information

[root@k8s-master1 master]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-7f89b7bc75-97brm 0/1 Pending 0 9m56s

coredns-7f89b7bc75-pbb96 0/1 Pending 0 9m56s

etcd-k8s-master1 1/1 Running 0 10m

kube-apiserver-k8s-master1 1/1 Running 0 10m

kube-controller-manager-k8s-master1 1/1 Running 0 6m55s

kube-proxy-kwgjw 1/1 Running 0 9m57s

kube-scheduler-k8s-master1 1/1 Running 0 6m32s

View node information

[root@k8s-master1 master]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master1 NotReady control-plane,master 10m v1.20.0

Add the master nodes

Create the directory on k8s-master2 and k8s-master3

[root@k8s-master3 master]# mkdir -p /etc/kubernetes/pki/etcd

[root@k8s-master2 ~]# mkdir -p /etc/kubernetes/pki/etcd

Run on k8s-master1

Copy the keys and related files from k8s-master1 to k8s-master2 and k8s-master3

[root@k8s-master1 master]# scp /etc/kubernetes/admin.conf root@192.168.50.51:/etc/kubernetes

root@192.168.50.51's password:

admin.conf 100% 5565 6.1MB/s 00:00

[root@k8s-master1 master]# scp /etc/kubernetes/admin.conf root@192.168.50.50:/etc/kubernetes

root@192.168.50.50's password:

admin.conf 100% 5565 7.3MB/s 00:00

[root@k8s-master1 master]# scp /etc/kubernetes/pki/{ca.*,sa.*,front-proxy-ca.*} root@192.168.50.51:/etc/kubernetes/pki

root@192.168.50.51's password:

ca.crt 100% 1066 1.8MB/s 00:00

ca.key 100% 1679 1.8MB/s 00:00

sa.key 100% 1675 2.7MB/s 00:00

sa.pub 100% 451 876.9KB/s 00:00

front-proxy-ca.crt 100% 1078 1.8MB/s 00:00

front-proxy-ca.key 100% 1675 2.3MB/s 00:00

[root@k8s-master1 master]# scp /etc/kubernetes/pki/{ca.*,sa.*,front-proxy-ca.*} root@192.168.50.50:/etc/kubernetes/pki

root@192.168.50.50's password:

ca.crt 100% 1066 1.8MB/s 00:00

ca.key 100% 1679 2.8MB/s 00:00

sa.key 100% 1675 2.8MB/s 00:00

sa.pub 100% 451 917.6KB/s 00:00

front-proxy-ca.crt 100% 1078 1.9MB/s 00:00

front-proxy-ca.key 100% 1675 3.4MB/s 00:00

[root@k8s-master1 master]# scp /etc/kubernetes/pki/etcd/ca.* root@192.168.50.51:/etc/kubernetes/pki/etcd

root@192.168.50.51's password:

ca.crt 100% 1058 1.7MB/s 00:00

ca.key 100% 1679 1.8MB/s 00:00

[root@k8s-master1 master]# scp /etc/kubernetes/pki/etcd/ca.* root@192.168.50.50:/etc/kubernetes/pki/etcd

root@192.168.50.50's password:

ca.crt 100% 1058 1.9MB/s 00:00

ca.key 100% 1679 2.5MB/s 00:00
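The six copy commands above differ only in the target address. As a dry-run sketch, the loop below prints the commands for each additional master without executing them, so the list can be reviewed first. The function name `print_cert_copy_commands` is invented for this example.

```shell
#!/bin/sh
# Print (without running) the scp commands that copy the shared
# certificates and admin.conf to each additional master.
print_cert_copy_commands() {
    for host in "$@"; do
        echo "scp /etc/kubernetes/admin.conf root@$host:/etc/kubernetes"
        echo "scp /etc/kubernetes/pki/{ca.*,sa.*,front-proxy-ca.*} root@$host:/etc/kubernetes/pki"
        echo "scp /etc/kubernetes/pki/etcd/ca.* root@$host:/etc/kubernetes/pki/etcd"
    done
}

print_cert_copy_commands 192.168.50.51 192.168.50.50
```

The printed lines rely on brace expansion, so they are meant to be run in bash, as in the transcript above.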

Using the output of the initialization above, join the other master nodes to the cluster

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join master.k8s.io:6443 --token gmpskr.9sdadby8vakx1wfl \

--discovery-token-ca-cert-hash sha256:391a6edaefa12d19d18f6bda19cd0979f65140a5ac2b496e4d098ac86c03d6d2 \

--control-plane

(the command above joins a node as a master)

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join master.k8s.io:6443 --token gmpskr.9sdadby8vakx1wfl \

--discovery-token-ca-cert-hash sha256:391a6edaefa12d19d18f6bda19cd0979f65140a5ac2b496e4d098ac86c03d6d2

(the command above joins a node as a worker)

To start administering your cluster from this node, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.

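Both k8s-master2 and k8s-master3 need to join. The preflight errors in the captured output below are what kubeadm reports when the join is run again on a node that already holds cluster files, for example one that has already joined; a first run on a clean node does not show them.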

[root@k8s-master3 master]# kubeadm join master.k8s.io:6443 --token gmpskr.9sdadby8vakx1wfl \

> --discovery-token-ca-cert-hash sha256:391a6edaefa12d19d18f6bda19cd0979f65140a5ac2b496e4d098ac86c03d6d2 \

> --control-plane

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 24.0.5. Latest validated version: 19.03

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR DirAvailable--etc-kubernetes-manifests]: /etc/kubernetes/manifests is not empty

[ERROR FileAvailable--etc-kubernetes-kubelet.conf]: /etc/kubernetes/kubelet.conf already exists

[ERROR Port-10250]: Port 10250 is in use

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

To see the stack trace of this error execute with --v=5 or higher

[root@k8s-master3 master]# mkdir -p $HOME/.kube

[root@k8s-master3 master]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s-master3 master]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

[root@k8s-master3 master]# ip a s ens33

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:13:d2:b5 brd ff:ff:ff:ff:ff:ff

inet 192.168.50.50/24 brd 192.168.50.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::3826:6417:7cc3:48a4/64 scope link noprefixroute

valid_lft forever preferred_lft forever

Once k8s-master2 and k8s-master3 have joined, run the following on every master, including master1

[root@]# docker load < flannel_v0.12.0-amd64.tar

Loaded image: quay.io/coreos/flannel:v0.12.0-amd64

[root@]# tar xf cni-plugins-linux-amd64-v0.8.6.tgz

[root@]# cp flannel /opt/cni/bin/

Check from the master

[root@k8s-master1 master]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master1 Ready control-plane,master 36m v1.20.0

k8s-master2 Ready control-plane,master 8m50s v1.20.0

k8s-master3 Ready control-plane,master 5m48s v1.20.0

[root@k8s-master1 master]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-7f89b7bc75-97brm 1/1 Running 0 36m

kube-system coredns-7f89b7bc75-pbb96 1/1 Running 0 36m

kube-system etcd-k8s-master1 1/1 Running 0 36m

kube-system etcd-k8s-master2 1/1 Running 0 9m32s

kube-system etcd-k8s-master3 1/1 Running 0 6m30s

kube-system kube-apiserver-k8s-master1 1/1 Running 0 36m

kube-system kube-apiserver-k8s-master2 1/1 Running 0 9m33s

kube-system kube-apiserver-k8s-master3 1/1 Running 0 6m31s

kube-system kube-controller-manager-k8s-master1 1/1 Running 1 33m

kube-system kube-controller-manager-k8s-master2 1/1 Running 0 9m33s

kube-system kube-controller-manager-k8s-master3 1/1 Running 0 6m31s

kube-system kube-flannel-ds-amd64-9tzgx 1/1 Running 0 6m32s

kube-system kube-flannel-ds-amd64-ktmmg 1/1 Running 0 9m34s

kube-system kube-flannel-ds-amd64-pmm5b 1/1 Running 0 22m

kube-system kube-proxy-cjqsg 1/1 Running 0 9m34s

kube-system kube-proxy-kwgjw 1/1 Running 0 36m

kube-system kube-proxy-mzbtz 1/1 Running 0 6m32s

kube-system kube-scheduler-k8s-master1 1/1 Running 1 33m

kube-system kube-scheduler-k8s-master2 1/1 Running 0 9m32s

kube-system kube-scheduler-k8s-master3 1/1 Running 0 6m31s

Join the Kubernetes worker nodes

On each worker node, run the worker join command that was printed after k8s-master1 was initialized:

[root@k8s-node1 master]# kubeadm join master.k8s.io:6443 --token gmpskr.9sdadby8vakx1wfl \

> --discovery-token-ca-cert-hash sha256:391a6edaefa12d19d18f6bda19cd0979f65140a5ac2b496e4d098ac86c03d6d2

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 24.0.5. Latest validated version: 19.03

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

Run on all worker nodes

[root@]# docker load <flannel_v0.12.0-amd64.tar

Loaded image: quay.io/coreos/flannel:v0.12.0-amd64

[root@]# tar xf cni-plugins-linux-amd64-v0.8.6.tgz

[root@]# cp flannel /opt/cni/bin/

[root@]# kubectl get nodes

Check from a master node

NAME STATUS ROLES AGE VERSION

k8s-master1 Ready control-plane,master 41m v1.20.0

k8s-master2 Ready control-plane,master 13m v1.20.0

k8s-master3 Ready control-plane,master 10m v1.20.0

k8s-node1 Ready <none> 3m29s v1.20.0

k8s-node2 Ready <none> 3m23s v1.20.0

k8s-node3 Ready <none> 3m20s v1.20.0

Test the Kubernetes cluster

Pull the test image on all worker nodes

[root@]# docker pull nginx

Using default tag: latest

latest: Pulling from library/nginx

a2abf6c4d29d: Pull complete

a9edb18cadd1: Pull complete

589b7251471a: Pull complete

186b1aaa4aa6: Pull complete

b4df32aa5a72: Pull complete

a0bcbecc962e: Pull complete

Digest: sha256:0d17b565c37bcbd895e9d92315a05c1c3c9a29f762b011a10c54a66cd53c9b31

Status: Downloaded newer image for nginx:latest

docker.io/library/nginx:latest

Run on the master

Create a Pod in the Kubernetes cluster to verify that it runs correctly

[root@k8s-master1 ~]# mkdir demo

[root@k8s-master1 ~]# cd demo/

[root@k8s-master1 demo]# vim nginx-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.19.6
        ports:
        - containerPort: 80

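After writing the Deployment manifest, run kubectl create with it to create the resources. kubectl get pods then shows that the Pods have been created automatically.

This can take a little while; watch the AGE column in the output below.

[root@k8s-master1 demo]# kubectl create -f nginx-deployment.yaml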

deployment.apps/nginx-deployment created

[root@k8s-master1 demo]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-deployment-76ccf9dd9d-qnlg4 0/1 ContainerCreating 0 9s

nginx-deployment-76ccf9dd9d-r76x2 0/1 ContainerCreating 0 9s

nginx-deployment-76ccf9dd9d-tzfwf 0/1 ContainerCreating 0 9s

[root@k8s-master1 demo]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-deployment-76ccf9dd9d-qnlg4 1/1 Running 0 48s

nginx-deployment-76ccf9dd9d-r76x2 0/1 ContainerCreating 0 48s

nginx-deployment-76ccf9dd9d-tzfwf 1/1 Running 0 48s

[root@k8s-master1 demo]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-deployment-76ccf9dd9d-qnlg4 1/1 Running 0 60s

nginx-deployment-76ccf9dd9d-r76x2 1/1 Running 0 60s

nginx-deployment-76ccf9dd9d-tzfwf 1/1 Running 0 60s

[root@k8s-master1 demo]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-deployment-76ccf9dd9d-qnlg4 1/1 Running 0 101s 10.244.5.2 k8s-node3 <none> <none>

nginx-deployment-76ccf9dd9d-r76x2 1/1 Running 0 101s 10.244.5.3 k8s-node3 <none> <none>

nginx-deployment-76ccf9dd9d-tzfwf 1/1 Running 0 101s 10.244.3.2 k8s-node1 <none> <none>

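Create the Service manifest

The nginx-service manifest defines a Service named nginx-service with the label selector app: nginx. type: NodePort makes the Service reachable from outside the cluster on a port opened on every node. The ports list defines the Service port (80) and the container port it forwards to (targetPort 80). Because no nodePort is specified, Kubernetes assigns one from the NodePort range (30000-32767 by default), and that assigned port is the one external clients use.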

[root@k8s-master1 demo]# vim nginx-service.yaml

kind: Service
apiVersion: v1
metadata:
  name: nginx-service
spec:
  selector:
    app: nginx
  type: NodePort
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

[root@k8s-master1 demo]# kubectl create -f nginx-service.yaml

service/nginx-service created

[root@k8s-master1 demo]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.1.0.1 <none> 443/TCP 52m

nginx-service NodePort 10.1.181.198 <none> 80:30933/TCP 3m21s
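In the PORT(S) column, the value before the colon is the Service port and the value after it is the node port that external clients connect to. The small sketch below pulls the node port out of such a value; `node_port` is a hypothetical helper name.

```shell
#!/bin/sh
# Extract the node port from a PORT(S) value such as "80:30933/TCP".
node_port() {
    value=${1#*:}        # drop the Service port and the colon
    echo "${value%%/*}"  # drop the protocol suffix
}

node_port "80:30933/TCP"
```

With access to the cluster, `kubectl get svc nginx-service -o jsonpath='{.spec.ports[0].nodePort}'` returns the same number directly.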

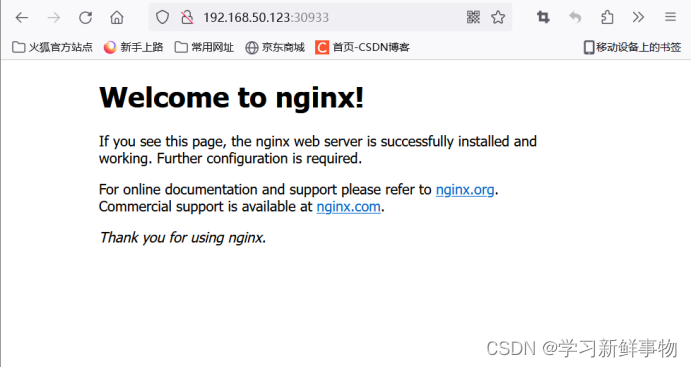

Access nginx in a browser at http://master.k8s.io:30933, using either the domain name or the VIP address

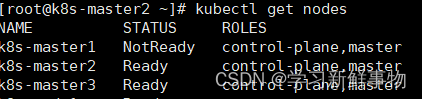

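Suspend the k8s-master1 node and refresh the page. nginx is still reachable, which shows that the high-availability cluster works.

Checking the interfaces shows that the VIP has moved to k8s-master2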

[root@k8s-master2 ~]# ip a s ens33

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:44:9f:54 brd ff:ff:ff:ff:ff:ff

inet 192.168.50.51/24 brd 192.168.50.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.50.123/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::4129:5248:8bd3:5e0a/64 scope link noprefixroute

valid_lft forever preferred_lft forever

This also confirms that master1 is down.

Restart master1

After the restart, neither node holds the VIP

[root@k8s-master1 ~]# ip a s ens33

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:2a:be:fd brd ff:ff:ff:ff:ff:ff

inet 192.168.50.53/24 brd 192.168.50.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::65b0:e7da:1c8c:e86e/64 scope link noprefixroute

valid_lft forever preferred_lft forever

[root@k8s-master2 ~]# ip a s ens33

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:44:9f:54 brd ff:ff:ff:ff:ff:ff

inet 192.168.50.51/24 brd 192.168.50.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::4129:5248:8bd3:5e0a/64 scope link noprefixroute

valid_lft forever preferred_lft forever

The keepalived service needs to be restarted; after a short wait the VIP returns

[root@k8s-master1 ~]# systemctl restart keepalived

[root@k8s-master1 ~]# ip a s ens33

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:2a:be:fd brd ff:ff:ff:ff:ff:ff

inet 192.168.50.53/24 brd 192.168.50.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::65b0:e7da:1c8c:e86e/64 scope link noprefixroute

valid_lft forever preferred_lft forever

[root@k8s-master1 ~]# systemctl restart keepalived

[root@k8s-master1 ~]# ip a s ens33

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:2a:be:fd brd ff:ff:ff:ff:ff:ff

inet 192.168.50.53/24 brd 192.168.50.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::65b0:e7da:1c8c:e86e/64 scope link noprefixroute

valid_lft forever preferred_lft forever

[root@k8s-master1 ~]# ip a s ens33

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:2a:be:fd brd ff:ff:ff:ff:ff:ff

inet 192.168.50.53/24 brd 192.168.50.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.50.123/32 scope global ens33

valid_lft forever preferred_lft forever