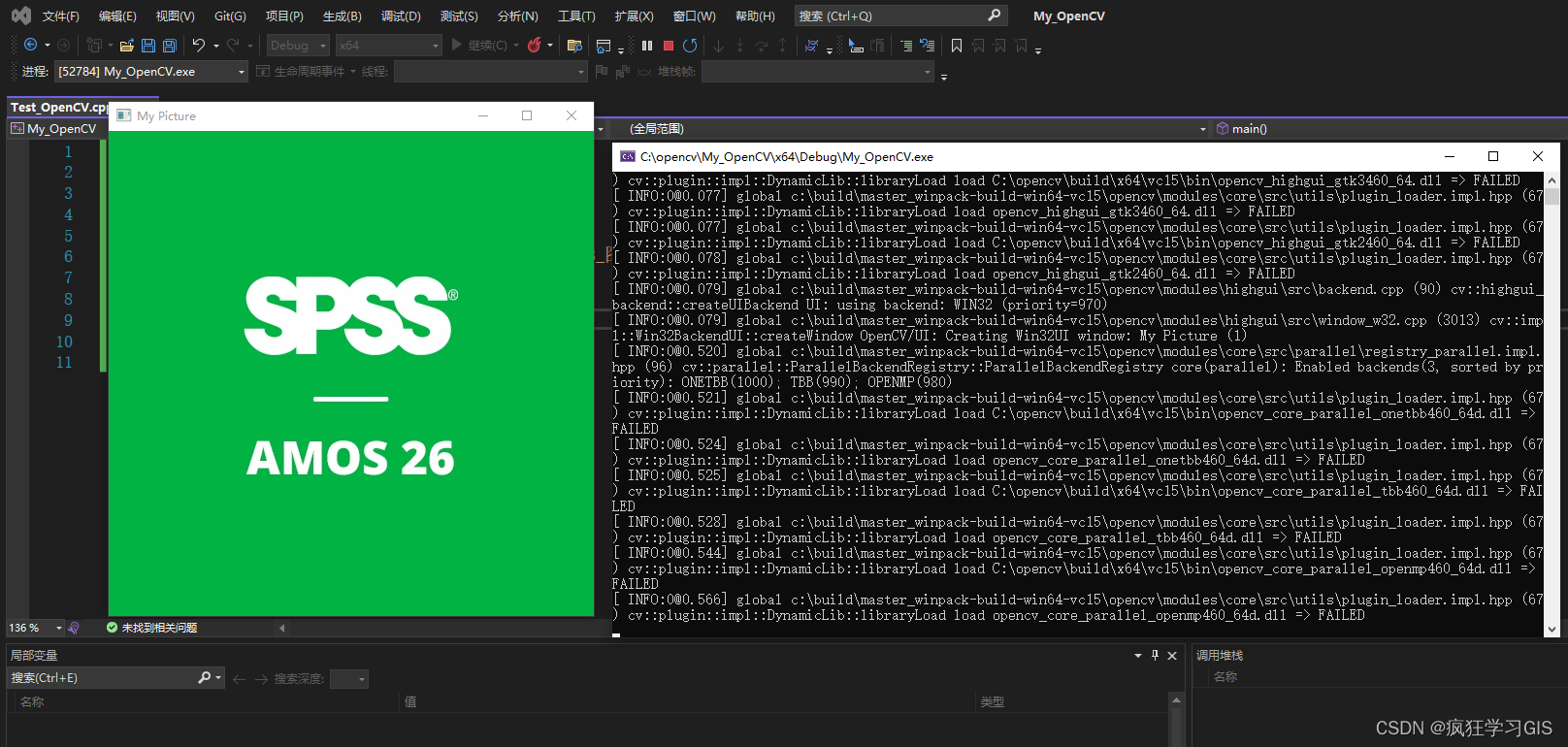

这周用opencv简单实现了一下基于yolov5检测器的车辆计数功能,方法是撞线计数,代码很简单一共就两百多行,测试视频是在b站随便下载的。注:该代码只能演示视频demo效果,一些功能未完善,离实际工程应用还有距离。

实现流程:

(1)训练yolov5模型,这里就没有自己训练了,直接使用官方的开源模型yolov5s.pt;

(2)运行yolov5工程下面的export.py,将pt模型转成onnx模型;

(3)编写yolov5部署的C++工程,包括前处理、推理和后处理部分;

(4)读取视频第一帧,用yolov5检测第一帧图像的车辆目标,计算这些检测框的中心点,

(5)读取视频的后续帧,用yolov5检测每帧图像上的车辆目标,计算新目标和上一帧图像中检测框中心点的距离矩阵;

(6)通过距离约束搜索距离矩阵,确定新旧目标检测框之间的对应关系;

(7)计算对应新旧目标检测框中心点之间的连线,判断和事先设置的虚拟撞线是否相交,若相交则计数加1;

(8)重复(5)-(7)。

实际实现的时候采取的是隔帧判断而不是使用相邻帧,v1的代码实现如下:

#include <iostream>

#include <fstream>

#include <opencv2/opencv.hpp>

// 常量

const float INPUT_WIDTH = 640.0;

const float INPUT_HEIGHT = 640.0;

const float SCORE_THRESHOLD = 0.5;

const float NMS_THRESHOLD = 0.45;

const float CONFIDENCE_THRESHOLD = 0.45;

const std::vector<std::string> class_name = {

"person", "bicycle", "car", "motorcycle", "airplane", "bus", "train", "truck", "boat", "traffic light",

"fire hydrant", "stop sign", "parking meter", "bench", "bird", "cat", "dog", "horse", "sheep", "cow",

"elephant", "bear", "zebra", "giraffe", "backpack", "umbrella", "handbag", "tie", "suitcase", "frisbee",

"skis", "snowboard", "sports ball", "kite", "baseball bat", "baseball glove", "skateboard", "surfboard",

"tennis racket", "bottle", "wine glass", "cup", "fork", "knife", "spoon", "bowl", "banana", "apple",

"sandwich", "orange", "broccoli", "carrot", "hot dog", "pizza", "donut", "cake", "chair", "couch",

"potted plant", "bed", "dining table", "toilet", "tv", "laptop", "mouse", "remote", "keyboard", "cell phone",

"microwave", "oven", "toaster", "sink", "refrigerator", "book", "clock", "vase", "scissors", "teddy bear",

"hair drier", "toothbrush" };

// 画框函数

void draw_label(cv::Mat& input_image, std::string label, int left, int top)

{

int baseLine;

cv::Size label_size = cv::getTextSize(label, 0.7, 0.7, 1, &baseLine);

top = std::max(top, label_size.height);

cv::Point tlc = cv::Point(left, top);

cv::Point brc = cv::Point(left , top + label_size.height + baseLine);

cv::putText(input_image, label, cv::Point(left, top + label_size.height), cv::FONT_HERSHEY_SIMPLEX, 0.7, cv::Scalar(0, 255, 255), 1);

}

// 预处理

std::vector<cv::Mat> preprocess(cv::Mat& input_image, cv::dnn::Net& net)

{

cv::Mat blob;

cv::dnn::blobFromImage(input_image, blob, 1. / 255., cv::Size(INPUT_WIDTH, INPUT_HEIGHT), cv::Scalar(), true, false);

net.setInput(blob);

std::vector<cv::Mat> preprcess_image;

net.forward(preprcess_image, net.getUnconnectedOutLayersNames());

return preprcess_image;

}

// 后处理

std::vector<cv::Rect> postprocess(std::vector<cv::Mat>& preprcess_image, cv::Mat& output_image)

{

std::vector<int> class_ids;

std::vector<float> confidences;

std::vector<cv::Rect> boxes;

std::vector<cv::Rect> boxes_nms;

float x_factor = output_image.cols / INPUT_WIDTH;

float y_factor = output_image.rows / INPUT_HEIGHT;

float* data = (float*)preprcess_image[0].data;

const int dimensions = 85;

const int rows = 25200;

for (int i = 0; i < rows; ++i)

{

float confidence = data[4];

if (confidence >= CONFIDENCE_THRESHOLD)

{

float* classes_scores = data + 5;

cv::Mat scores(1, class_name.size(), CV_32FC1, classes_scores);

cv::Point class_id;

double max_class_score;

cv::minMaxLoc(scores, 0, &max_class_score, 0, &class_id);

if (max_class_score > SCORE_THRESHOLD)

{

confidences.push_back(confidence);

class_ids.push_back(class_id.x);

float cx = data[0];

float cy = data[1];

float w = data[2];

float h = data[3];

int left = int((cx - 0.5 * w) * x_factor);

int top = int((cy - 0.5 * h) * y_factor);

int width = int(w * x_factor);

int height = int(h * y_factor);

boxes.push_back(cv::Rect(left, top, width, height));

}

}

data += 85;

}

std::vector<int> indices;

cv::dnn::NMSBoxes(boxes, confidences, SCORE_THRESHOLD, NMS_THRESHOLD, indices);

for (size_t i = 0; i < indices.size(); i++)

{

int idx = indices[i];

cv::Rect box = boxes[idx];

boxes_nms.push_back(box);

int left = box.x;

int top = box.y;

int width = box.width;

int height = box.height;

cv::rectangle(output_image, cv::Point(left, top), cv::Point(left + width, top + height), cv::Scalar(255, 0, 0), 1);

std::string label = cv::format("%.2f", confidences[idx]);

label = class_name[class_ids[idx]] + ":" + label;

draw_label(output_image, label, left, top);

}

return boxes_nms;

}

std::vector<cv::Point> get_center(std::vector<cv::Rect> detections)

{

std::vector<cv::Point> detections_center(detections.size());

for (size_t i = 0; i < detections.size(); i++)

{

detections_center[i] = cv::Point(detections[i].x + detections[i].width / 2, detections[i].y + detections[i].height / 2);

}

return detections_center;

}

float get_distance(cv::Point p1, cv::Point p2)

{

return sqrt(pow(p1.x - p2.x, 2) + pow(p1.y - p2.y, 2));

}

bool is_cross(cv::Point p1, cv::Point p2)

{

if (p1.x == p2.x) return false;

int y = 500; //line1: y = 500

float k = (p1.y - p2.y) / (p1.x - p2.x); //

float b = p1.y - k * p1.x; //line2: y = kx + b

float x = (y - b) / k;

return (x > std::min(p1.x, p2.x) && x < std::max(p1.x, p2.x));

}

int main(int argc, char** argv)

{

cv::VideoCapture capture("test.mp4");

cv::Mat frame;

cv::dnn::Net net = cv::dnn::readNet("yolov5s-f32.onnx");

int frame_num = 0;

int count = 0;

std::vector<cv::Point> detections_center_old;

std::vector<cv::Point> detections_center_new;

while(cv::waitKey(1) < 0)

{

capture >> frame;

if (frame.empty())

break;

std::cout << "******************************************************************* frame_num: " << frame_num << std::endl;

cv::Mat image = frame.clone();

std::vector<cv::Mat> preprcess_image = preprocess(image, net);

std::vector<cv::Rect> detections = postprocess(preprcess_image, image);

if (frame_num == 0)

{

detections_center_old = get_center(detections);

std::cout << "detections_center:" << std::endl;

for (size_t i = 0; i < detections_center_old.size(); i++)

{

std::cout << detections_center_old[i] << std::endl;

}

}

else if (frame_num % 2 == 0)

{

detections_center_new = get_center(detections);

std::cout << "detections_center:" << std::endl;

for (size_t i = 0; i < detections_center_new.size(); i++)

{

std::cout << detections_center_new[i] << std::endl;

}

std::vector<std::vector<float>> distance_matrix(detections_center_new.size(), std::vector<float>(detections_center_old.size()));

std::cout << "distance_matrix:" << std::endl;

for (size_t i = 0; i < detections_center_new.size(); i++)

{

for (size_t j = 0; j < detections_center_old.size(); j++)

{

distance_matrix[i][j] = get_distance(detections_center_new[i], detections_center_old[j]); //

std::cout << distance_matrix[i][j] << " ";

}

std::cout << std::endl;

}

std::cout << "min_index:" << std::endl;

std::vector<float> min_indices(detections_center_new.size());

for (size_t i = 0; i < detections_center_new.size(); i++)

{

std::vector<float> distance_vector = distance_matrix[i];

int min_index = std::min_element(distance_vector.begin(), distance_vector.end()) - distance_vector.begin();

min_indices[i] = min_index;

std::cout << min_index << " ";

}

std::cout << std::endl;

for (size_t i = 0; i < detections_center_new.size(); i++)

{

cv::Point p1 = detections_center_new[i];

cv::Point p2 = detections_center_old[min_indices[i]];

std::cout << p1 << " " << p2 << std::endl;

if (is_cross(p1, p2))

{

std::cout << "is_cross" << p1 << " " << p2 << std::endl;

count++;

}

}

detections_center_old = detections_center_new;

}

frame_num++;

cv::putText(image, "car num: " + std::to_string(count), cv::Point(20, 50), cv::FONT_HERSHEY_SIMPLEX, 0.7, cv::Scalar(0, 255, 255), 1);

cv::line(image, cv::Point(0, 500), cv::Point(1280, 500) , cv::Scalar(0, 0, 255));

cv::imshow("output", image);

cv::imwrite(std::to_string(frame_num) + ".jpg", image);

}

capture.release();

return 0;

}

在调试中,发现v1的实现存在如下问题:出现新目标的时候,计算新旧检测框的对应关系出现匹配错误,导致计数偏多。因此在v2中设置匹配的距离阈值,并简化了判断检测框中心点连线和撞线是否相交的方法。

v2的代码实现如下:

#include <iostream>

#include <fstream>

#include <opencv2/opencv.hpp>

#define DEBUG

// 常量

const float INPUT_WIDTH = 640.0;

const float INPUT_HEIGHT = 640.0;

const float SCORE_THRESHOLD = 0.5;

const float NMS_THRESHOLD = 0.25;

const float CONFIDENCE_THRESHOLD = 0.5;

const std::vector<std::string> class_name = {

"person", "bicycle", "car", "motorcycle", "airplane", "bus", "train", "truck", "boat", "traffic light",

"fire hydrant", "stop sign", "parking meter", "bench", "bird", "cat", "dog", "horse", "sheep", "cow",

"elephant", "bear", "zebra", "giraffe", "backpack", "umbrella", "handbag", "tie", "suitcase", "frisbee",

"skis", "snowboard", "sports ball", "kite", "baseball bat", "baseball glove", "skateboard", "surfboard",

"tennis racket", "bottle", "wine glass", "cup", "fork", "knife", "spoon", "bowl", "banana", "apple",

"sandwich", "orange", "broccoli", "carrot", "hot dog", "pizza", "donut", "cake", "chair", "couch",

"potted plant", "bed", "dining table", "toilet", "tv", "laptop", "mouse", "remote", "keyboard", "cell phone",

"microwave", "oven", "toaster", "sink", "refrigerator", "book", "clock", "vase", "scissors", "teddy bear",

"hair drier", "toothbrush" };

const int IMAGE_WIDTH = 1280;

const int IMAGE_HEIGHT = 720;

const int LINE_HEIGHT = IMAGE_HEIGHT / 2;

//画出检测框和标签

void draw_label(cv::Mat& input_image, std::string label, int left, int top)

{

int baseLine;

cv::Size label_size = cv::getTextSize(label, 0.7, 0.7, 1, &baseLine);

top = std::max(top, label_size.height);

cv::Point tlc = cv::Point(left, top);

cv::Point brc = cv::Point(left , top + label_size.height + baseLine);

cv::putText(input_image, label, cv::Point(left, top + label_size.height), cv::FONT_HERSHEY_SIMPLEX, 0.7, cv::Scalar(0, 255, 255), 1);

}

//预处理

std::vector<cv::Mat> preprocess(cv::Mat& input_image, cv::dnn::Net& net)

{

cv::Mat blob;

cv::dnn::blobFromImage(input_image, blob, 1. / 255., cv::Size(INPUT_WIDTH, INPUT_HEIGHT), cv::Scalar(), true, false);

net.setInput(blob);

std::vector<cv::Mat> preprcess_image;

net.forward(preprcess_image, net.getUnconnectedOutLayersNames());

return preprcess_image;

}

//后处理

std::vector<cv::Rect> postprocess(std::vector<cv::Mat>& preprcess_image, cv::Mat& output_image)

{

std::vector<int> class_ids;

std::vector<float> confidences;

std::vector<cv::Rect> boxes;

std::vector<cv::Rect> boxes_nms;

float x_factor = output_image.cols / INPUT_WIDTH;

float y_factor = output_image.rows / INPUT_HEIGHT;

float* data = (float*)preprcess_image[0].data;

const int dimensions = 85;

const int rows = 25200;

for (int i = 0; i < rows; ++i)

{

float confidence = data[4];

if (confidence >= CONFIDENCE_THRESHOLD)

{

float* classes_scores = data + 5;

cv::Mat scores(1, class_name.size(), CV_32FC1, classes_scores);

cv::Point class_id;

double max_class_score;

cv::minMaxLoc(scores, 0, &max_class_score, 0, &class_id);

if (max_class_score > SCORE_THRESHOLD)

{

confidences.push_back(confidence);

class_ids.push_back(class_id.x);

float cx = data[0];

float cy = data[1];

float w = data[2];

float h = data[3];

int left = int((cx - 0.5 * w) * x_factor);

int top = int((cy - 0.5 * h) * y_factor);

int width = int(w * x_factor);

int height = int(h * y_factor);

boxes.push_back(cv::Rect(left, top, width, height));

}

}

data += 85;

}

std::vector<int> indices;

cv::dnn::NMSBoxes(boxes, confidences, SCORE_THRESHOLD, NMS_THRESHOLD, indices);

for (size_t i = 0; i < indices.size(); i++)

{

int idx = indices[i];

cv::Rect box = boxes[idx];

boxes_nms.push_back(box);

int left = box.x;

int top = box.y;

int width = box.width;

int height = box.height;

cv::rectangle(output_image, cv::Point(left, top), cv::Point(left + width, top + height), cv::Scalar(255, 0, 0), 1);

std::string label = cv::format("%.2f", confidences[idx]);

//label = class_name[class_ids[idx]] + ":" + label;

label = "car";

draw_label(output_image, label, left, top);

}

return boxes_nms;

}

//计算检测框的中心

std::vector<cv::Point> get_center(std::vector<cv::Rect> detections)

{

std::vector<cv::Point> detections_center(detections.size());

for (size_t i = 0; i < detections.size(); i++)

{

detections_center[i] = cv::Point(detections[i].x + detections[i].width / 2, detections[i].y + detections[i].height / 2);

}

return detections_center;

}

//计算两点间距离

float get_distance(cv::Point p1, cv::Point p2)

{

return sqrt(pow(p1.x - p2.x, 2) + pow(p1.y - p2.y, 2));

}

//判断连接相邻两帧对应检测框中心的线段是否与红线相交

bool is_cross(cv::Point p1, cv::Point p2)

{

return (p1.y <= LINE_HEIGHT && p2.y > LINE_HEIGHT) || (p1.y > LINE_HEIGHT && p2.y <= LINE_HEIGHT);

}

int main(int argc, char** argv)

{

cv::VideoCapture capture("test.mp4");

cv::Mat frame;

cv::dnn::Net net = cv::dnn::readNet("yolov5s-f32.onnx");

int frame_num = 0;

int count = 0;

std::vector<cv::Point> detections_center_old;

std::vector<cv::Point> detections_center_new;

while(cv::waitKey(1) < 0)

{

capture >> frame;

if (frame.empty())

break;

std::cout << "******************************************************************* frame_num: " << frame_num << std::endl;

cv::Mat image = frame.clone();

std::vector<cv::Mat> preprcess_image = preprocess(image, net);

std::vector<cv::Rect> detections = postprocess(preprcess_image, image);

if (frame_num == 0)

{

detections_center_old = get_center(detections);

#ifdef DEBUG

std::cout << "detections_center:" << std::endl;

for (size_t i = 0; i < detections_center_old.size(); i++)

{

std::cout << detections_center_old[i] << std::endl;

}

#endif // DEBUG

}

else if (frame_num % 2 == 0)

{

detections_center_new = get_center(detections);

#ifdef DEBUG

std::cout << "detections_center:" << std::endl;

for (size_t i = 0; i < detections_center_new.size(); i++)

{

std::cout << detections_center_new[i] << std::endl;

}

#endif // DEBUG

std::vector<std::vector<float>> distance_matrix(detections_center_new.size(), std::vector<float>(detections_center_old.size())); //距离矩阵

for (size_t i = 0; i < detections_center_new.size(); i++)

{

for (size_t j = 0; j < detections_center_old.size(); j++)

{

distance_matrix[i][j] = get_distance(detections_center_new[i], detections_center_old[j]);

}

}

#ifdef DEBUG

std::cout << "min_index:" << std::endl;

#endif // DEBUG

std::vector<float> min_indices(detections_center_new.size());

for (size_t i = 0; i < detections_center_new.size(); i++)

{

std::vector<float> distance_vector = distance_matrix[i];

float min_val = *std::min_element(distance_vector.begin(), distance_vector.end());

int min_index = -1;

if (min_val < LINE_HEIGHT / 5)

min_index = std::min_element(distance_vector.begin(), distance_vector.end()) - distance_vector.begin();

min_indices[i] = min_index;

#ifdef DEBUG

std::cout << min_index << " ";

#endif // DEBUG

}

std::cout << std::endl;

for (size_t i = 0; i < detections_center_new.size(); i++)

{

if (min_indices[i] < 0)

continue;

cv::Point p1 = detections_center_new[i];

cv::Point p2 = detections_center_old[min_indices[i]];

#ifdef DEBUG

std::cout << p1 << " " << p2 << std::endl;

#endif // DEBUG

if (is_cross(p1, p2))

{

#ifdef DEBUG

std::cout << "is_cross" << p1 << " " << p2 << std::endl;

#endif // DEBUG

count++;

}

}

detections_center_old = detections_center_new;

}

cv::putText(image, "car num: " + std::to_string(count), cv::Point(20, 50), cv::FONT_HERSHEY_SIMPLEX, 0.7, cv::Scalar(0, 0, 255), 1);

cv::line(image, cv::Point(0, LINE_HEIGHT), cv::Point(IMAGE_WIDTH, LINE_HEIGHT), cv::Scalar(0, 0, 255));

cv::imshow("output", image);

#ifdef DEBUG

if (frame_num % 2 == 0)

cv::imwrite(std::to_string(frame_num) + ".jpg", image);

#endif // DEBUG

frame_num++;

}

capture.release();

return 0;

}

检测效果实现如下,效果还是可以的。完整视频中有一次计数异常,是因为检测器不准导致车辆检测框位置漂移,可以后续优化。注:由于官方提供的coco80类的开源权重文件用于车辆检测效果不是很好,LZ把检测出的类别直接固定为car,实际应自己重新训练一个车辆检测的模型。

代码、测试视频和转好的权重文件放在下载链接:点击跳转

![[1180]clickhouse查看数据库和表的容量大小](https://img-blog.csdnimg.cn/img_convert/56b53a32fe40a2a8bdc8f293ee985b83.png)

![[附源码]Python计算机毕业设计SSM基于健身房管理系统(程序+LW)](https://img-blog.csdnimg.cn/1135b2990be1437db0cbb7f10e7ee178.png)