文章目录

- 1.查看数据库容量、行数、压缩率

- 2.查看数据表容量、行数、压缩率

- 3.查看数据表分区信息

- 4.查看数据表字段的信息

- 5. 查看表的各个指标

- 6.跟踪分区

- 7.检查数据大小

在mysql中information_schema这个数据库中保存了mysql服务器所有数据库的信息,

而在clickhouse,我们可以通过system.parts查看clickhouse数据库和表的容量大小、行数、压缩率以及分区信息。

在此通过测试数据库来说明。

1.查看数据库容量、行数、压缩率

SELECT

sum(rows) AS `总行数`,

formatReadableSize(sum(data_uncompressed_bytes)) AS `原始大小`,

formatReadableSize(sum(data_compressed_bytes)) AS `压缩大小`,

round((sum(data_compressed_bytes) / sum(data_uncompressed_bytes)) * 100, 0) AS `压缩率`

FROM system.parts

┌────总行数─┬─原始大小──┬─压缩大小─┬─压缩率─┐

│ 326819026 │ 77.15 GiB │ 5.75 GiB │ 7 │

└───────────┴───────────┴──────────┴────────┘

1 rows in set. Elapsed: 0.047 sec. Processed 1.04 thousand rows, 520.93 KB (21.95 thousand rows/s.,

11.02 MB/s.)

2.查看数据表容量、行数、压缩率

--在此查询一张临时表的信息

SELECT

table AS `表名`,

sum(rows) AS `总行数`,

formatReadableSize(sum(data_uncompressed_bytes)) AS `原始大小`,

formatReadableSize(sum(data_compressed_bytes)) AS `压缩大小`,

round((sum(data_compressed_bytes) / sum(data_uncompressed_bytes)) * 100, 0) AS `压缩率`

FROM system.parts

WHERE table IN ('temp_1')

GROUP BY table

┌─表名───┬──总行数─┬─原始大小───┬─压缩大小──┬─压缩率─┐

│ temp_1 │ 3127523 │ 838.21 MiB │ 60.04 MiB │ 7 │

└────────┴─────────┴────────────┴───────────┴────────┘

1 rows in set. Elapsed: 0.008 sec.

3.查看数据表分区信息

--查看测试表在19年12月的分区信息

SELECT

partition AS `分区`,

sum(rows) AS `总行数`,

formatReadableSize(sum(data_uncompressed_bytes)) AS `原始大小`,

formatReadableSize(sum(data_compressed_bytes)) AS `压缩大小`,

round((sum(data_compressed_bytes) / sum(data_uncompressed_bytes)) * 100, 0) AS `压缩率`

FROM system.parts

WHERE (database IN ('default')) AND (table IN ('temp_1')) AND (partition LIKE '2019-12-%')

GROUP BY partition

ORDER BY partition ASC

┌─分区───────┬─总行数─┬─原始大小──┬─压缩大小───┬─压缩率─┐

│ 2019-12-01 │ 24 │ 6.17 KiB │ 2.51 KiB │ 41 │

│ 2019-12-02 │ 9215 │ 2.45 MiB │ 209.74 KiB │ 8 │

│ 2019-12-03 │ 17265 │ 4.46 MiB │ 453.78 KiB │ 10 │

│ 2019-12-04 │ 27741 │ 7.34 MiB │ 677.25 KiB │ 9 │

│ 2019-12-05 │ 31500 │ 8.98 MiB │ 469.30 KiB │ 5 │

│ 2019-12-06 │ 157 │ 37.50 KiB │ 4.95 KiB │ 13 │

│ 2019-12-07 │ 110 │ 32.75 KiB │ 3.86 KiB │ 12 │

└────────────┴────────┴───────────┴────────────┴────────┘

7 rows in set. Elapsed: 0.005 sec.

4.查看数据表字段的信息

SELECT

column AS `字段名`,

any(type) AS `类型`,

formatReadableSize(sum(column_data_uncompressed_bytes)) AS `原始大小`,

formatReadableSize(sum(column_data_compressed_bytes)) AS `压缩大小`,

sum(rows) AS `行数`

FROM system.parts_columns

WHERE (database = 'default') AND (table = 'temp_1')

GROUP BY column

ORDER BY column ASC

┌─字段名───────────┬─类型─────┬─原始大小───┬─压缩大小───┬────行数─┐

│ a │ String │ 23.83 MiB │ 134.13 KiB │ 3127523 │

│ b │ String │ 19.02 MiB │ 127.72 KiB │ 3127523 │

│ c │ String │ 5.97 MiB │ 49.09 KiB │ 3127523 │

│ d │ String │ 3.95 MiB │ 532.86 KiB │ 3127523 │

│ e │ String │ 5.17 MiB │ 49.47 KiB │ 3127523 │

│ totalDate │ DateTime │ 11.93 MiB │ 1.26 MiB │ 3127523 │

└──────────────────┴──────────┴────────────┴────────────┴─────────┘

————————————————

5. 查看表的各个指标

select database,

table,

sum(bytes) as size,

sum(rows) as rows,

min(min_date) as min_date,

max(max_date) as max_date,

sum(bytes_on_disk) as bytes_on_disk,

sum(data_uncompressed_bytes) as data_uncompressed_bytes,

sum(data_compressed_bytes) as data_compressed_bytes,

(data_compressed_bytes / data_uncompressed_bytes) * 100 as compress_rate,

max_date - min_date as days,

size / (max_date - min_date) as avgDaySize

from system.parts

where active

and database = 'database'

and table = 'tablename'

group by database, table

结果为:这种结果显示的大小size是字节,我们如何转换为常见的MB和GB呢?

select

database,

table,

formatReadableSize(size) as size,

formatReadableSize(bytes_on_disk) as bytes_on_disk,

formatReadableSize(data_uncompressed_bytes) as data_uncompressed_bytes,

formatReadableSize(data_compressed_bytes) as data_compressed_bytes,

compress_rate,

rows,

days,

formatReadableSize(avgDaySize) as avgDaySize

from

(

select

database,

table,

sum(bytes) as size,

sum(rows) as rows,

min(min_date) as min_date,

max(max_date) as max_date,

sum(bytes_on_disk) as bytes_on_disk,

sum(data_uncompressed_bytes) as data_uncompressed_bytes,

sum(data_compressed_bytes) as data_compressed_bytes,

(data_compressed_bytes / data_uncompressed_bytes) * 100 as compress_rate,

max_date - min_date as days,

size / (max_date - min_date) as avgDaySize

from system.parts

where active

and database = 'database'

and table = 'tablename'

group by

database,

table

)

结果:这就转换为常见的单位了。

上面过程可以看到,最终都用表进行了聚合,为什么会这样呢?

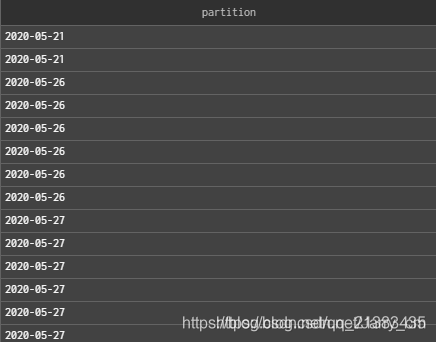

以一个简单的例子来看,我们最常见的是查看表分区,下面来看下不进行聚合的结果:

select partition

from system.parts

where active

and database = 'database'

and table = 'tablename'

结果为:这是因为在CH中,和我们hive表不一样,hive表一个分区只会有一条记录,但CH不是,每个分区分为了不同的marks

因此,我们要实现和hive一样查分区的功能时,要对表进行聚合查看。

6.跟踪分区

SELECT database,

table,

count() AS parts,

uniq(partition) AS partitions,

sum(marks) AS marks,

sum(rows) AS rows,

formatReadableSize(sum(data_compressed_bytes)) AS compressed,

formatReadableSize(sum(data_uncompressed_bytes)) AS uncompressed,

round((sum(data_compressed_bytes) / sum(data_uncompressed_bytes)) * 100.,2) AS percentage

FROM system.parts

WHERE active

and database = 'database'

and table = 'tablename'

GROUP BY database, table

7.检查数据大小

SELECT table,

formatReadableSize(sum(data_compressed_bytes)) AS tc,

formatReadableSize(sum(data_uncompressed_bytes)) AS tu,

round((sum(data_compressed_bytes) / sum(data_uncompressed_bytes)) * 100,2) AS ratio

FROM system.columns

WHERE database = 'database'

and table = 'table'

GROUP BY table

ORDER BY sum(data_compressed_bytes) ASC

参考:https://blog.csdn.net/weixin_39025362/article/details/109051723

https://blog.csdn.net/qq_21383435/article/details/115679147

![[附源码]Python计算机毕业设计SSM基于健身房管理系统(程序+LW)](https://img-blog.csdnimg.cn/1135b2990be1437db0cbb7f10e7ee178.png)

![[附源码]JAVA毕业设计小说阅读网站(系统+LW)](https://img-blog.csdnimg.cn/051397adba5d4a5a9be3ce0a8d91ec47.png)