- Cluster role assignment

| Hostname | IP | Role |
| --- | --- | --- |
| hadoop01 | 192.168.126.132 | JobManager, TaskManager |
| hadoop02 | 192.168.126.133 | TaskManager |
| hadoop03 | 192.168.126.134 | TaskManager |

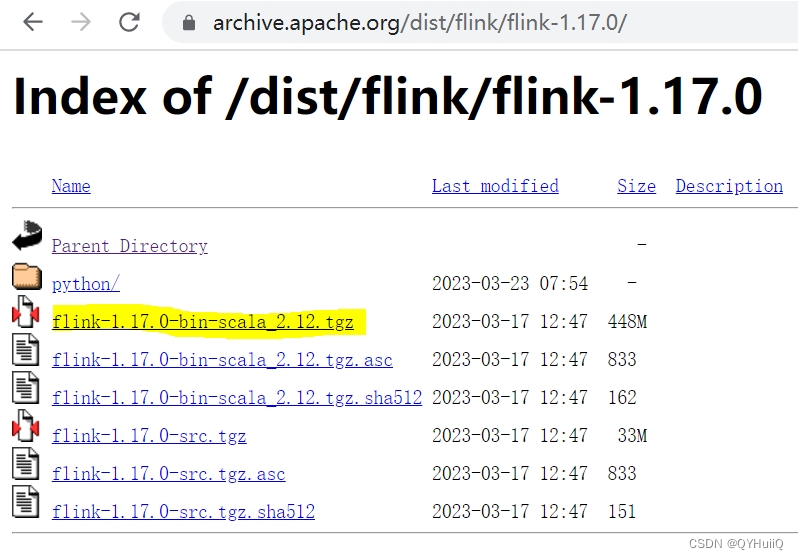
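The configuration below refers to the nodes by hostname, so every node needs to resolve hadoop01, hadoop02 and hadoop03. One way to do this is through /etc/hosts. DNS would work equally well. If you use /etc/hosts, the entries follow from the table above. The helper name `cluster_hosts_entries` is hypothetical.

```shell
# Hypothetical helper: print /etc/hosts entries for the three nodes
# listed in the role table (IP first, then hostname).
cluster_hosts_entries() {
  cat <<'EOF'
192.168.126.132 hadoop01
192.168.126.133 hadoop02
192.168.126.134 hadoop03
EOF
}
```

On each node, running `cluster_hosts_entries >> /etc/hosts` once as root would add the mappings. Run it only once per node, because repeated runs append duplicate lines.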

- Download the Flink installation package

https://archive.apache.org/dist/flink/flink-1.17.0/

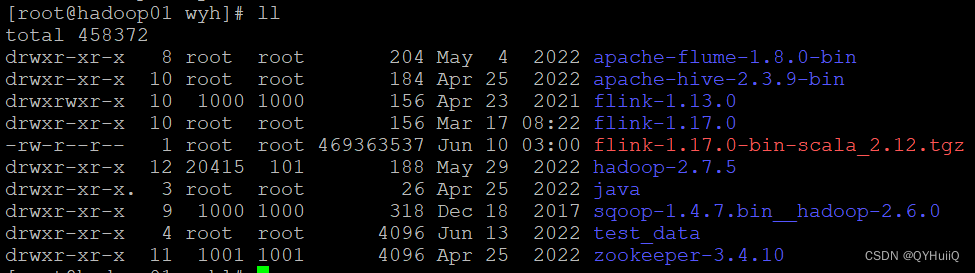

Upload the package to hadoop01 and extract it.
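As an alternative to uploading, you can download and extract the package directly on hadoop01. The sketch below makes two assumptions. The first is that the binary tarball in the archive directory is named `flink-<version>-bin-scala_<scala>.tgz`, with Scala 2.12 for 1.17.0. The second is that the install directory is /usr/local/wyh, taken from the shell prompts later in this post. Both function names are hypothetical.

```shell
# Assumption: archive tarballs are named flink-<version>-bin-scala_<scala>.tgz
flink_tarball_url() {
  local version="$1" scala="${2:-2.12}"
  echo "https://archive.apache.org/dist/flink/flink-${version}/flink-${version}-bin-scala_${scala}.tgz"
}

# Download the tarball into the current directory, then extract it into $2.
download_and_extract_flink() {
  local version="$1" dest="$2" url
  url=$(flink_tarball_url "$version")
  wget -q "$url" -O "${url##*/}" || return 1
  tar -zxf "${url##*/}" -C "$dest"
}
```

For example, `download_and_extract_flink 1.17.0 /usr/local/wyh` should leave the files in /usr/local/wyh/flink-1.17.0, which is the path used in the rest of this post.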

Edit conf/flink-conf.yaml (starting with Flink 1.16, the following settings must be changed):

```yaml
jobmanager.rpc.address: hadoop01
jobmanager.bind-host: 0.0.0.0
taskmanager.bind-host: 0.0.0.0
taskmanager.host: hadoop01
# For the Web UI; keep it consistent with the JobManager
rest.address: hadoop01
rest.bind-address: 0.0.0.0
```

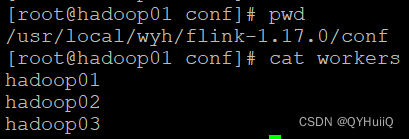
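Editing these keys by hand works fine. If you want to apply them reproducibly, a small bash helper can do it. This is a minimal sketch and `set_flink_conf` is a hypothetical name. It assumes each key sits on one flat `key: value` line, GNU sed (`-i` without a suffix), and values that contain no `|` or `&`.

```shell
# Set KEY to VALUE in a flink-conf.yaml-style file: rewrite an existing
# uncommented "key: ..." line, or append "key: value" if the key is absent.
set_flink_conf() {
  local file="$1" key="$2" value="$3" key_re
  # Escape dots so e.g. "jobmanager.rpc.address" is matched literally
  key_re=$(printf '%s' "$key" | sed 's/\./\\./g')
  if grep -q "^${key_re}:" "$file"; then
    sed -i "s|^${key_re}:.*|${key}: ${value}|" "$file"
  else
    printf '%s: %s\n' "$key" "$value" >> "$file"
  fi
}
```

On hadoop01 you would call it once per key, for example `set_flink_conf conf/flink-conf.yaml jobmanager.bind-host 0.0.0.0`. Commented-out lines such as `#rest.address: ...` are left untouched.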

Edit conf/workers (add all three TaskManager nodes).

Edit conf/masters (add the JobManager node).
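For this cluster, the contents of both files can be sketched as follows. The sketch assumes Flink's usual formats: one host per line in `workers`, and `host:webui-port` in `masters`, with 8081 being the Web UI port used at the end of this post. The function name is hypothetical.

```shell
# Hypothetical helper: write the workers and masters files into CONF_DIR.
write_cluster_files() {
  local conf_dir="$1"
  # One TaskManager host per line; hadoop01 also runs a TaskManager
  printf '%s\n' hadoop01 hadoop02 hadoop03 > "$conf_dir/workers"
  # JobManager host followed by its Web UI port
  printf '%s\n' hadoop01:8081 > "$conf_dir/masters"
}
```

On hadoop01 this would be run as `write_cluster_files /usr/local/wyh/flink-1.17.0/conf`.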


Then copy the Flink directory from hadoop01 to hadoop02 and hadoop03:

```shell
[root@hadoop01 wyh]# pwd
/usr/local/wyh
[root@hadoop01 wyh]# scp -r flink-1.17.0/ hadoop02:$PWD
[root@hadoop01 wyh]# scp -r flink-1.17.0/ hadoop03:$PWD
```
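The copy above can be combined into one loop with the per-host `taskmanager.host` change described next. This is a minimal sketch and `distribute_flink` is a hypothetical helper. It assumes passwordless SSH from hadoop01 to the workers and GNU sed on the workers. Setting `DRY_RUN=1` only prints the commands, which is a safe way to check them first.

```shell
# Hypothetical helper, run on hadoop01: copy SRC_DIR to each given host,
# then set taskmanager.host to that host's name in its flink-conf.yaml.
# Pass SRC_DIR without a trailing slash. DRY_RUN=1 prints instead of running.
distribute_flink() {
  local src_dir="$1"; shift
  local run="" parent host
  [ "${DRY_RUN:-0}" = 1 ] && run="echo"
  parent=$(dirname "$src_dir")
  for host in "$@"; do
    $run scp -r "$src_dir" "$host:$parent/" || return 1
    $run ssh "$host" "sed -i 's/^taskmanager\.host:.*/taskmanager.host: $host/' $src_dir/conf/flink-conf.yaml" || return 1
  done
}
```

Usage would be `distribute_flink /usr/local/wyh/flink-1.17.0 hadoop02 hadoop03`, or `DRY_RUN=1 distribute_flink ...` to preview.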

On hadoop02 and hadoop03, change `taskmanager.host` in conf/flink-conf.yaml to each node's own hostname:

```yaml
# hadoop02
taskmanager.host: hadoop02
```

```yaml
# hadoop03
taskmanager.host: hadoop03
```

Start the cluster:

```shell
[root@hadoop01 bin]# pwd
/usr/local/wyh/flink-1.17.0/bin
[root@hadoop01 bin]# ./start-cluster.sh
```

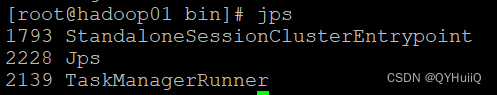

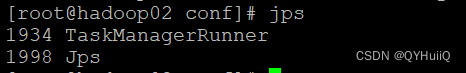

Check the processes:

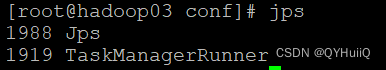

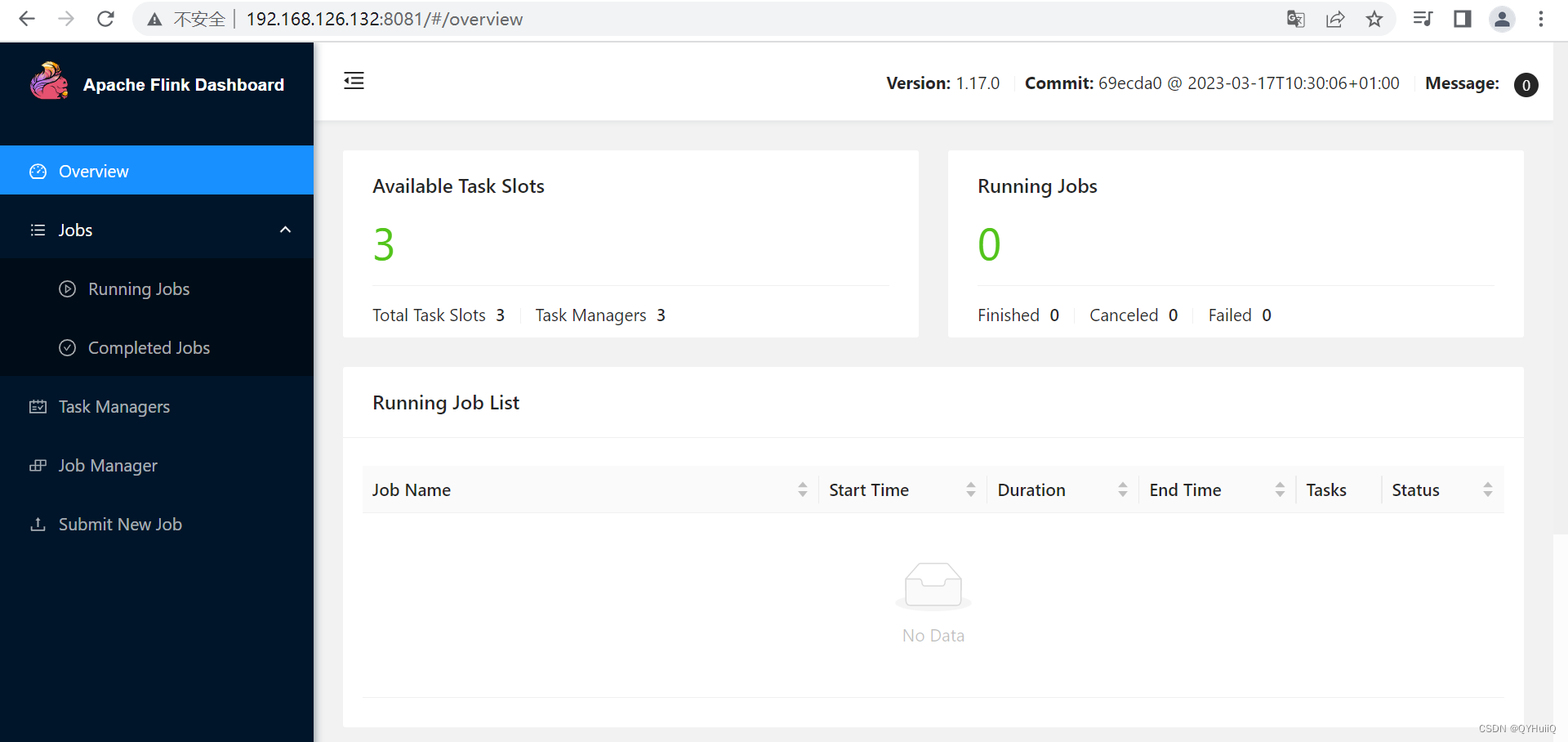

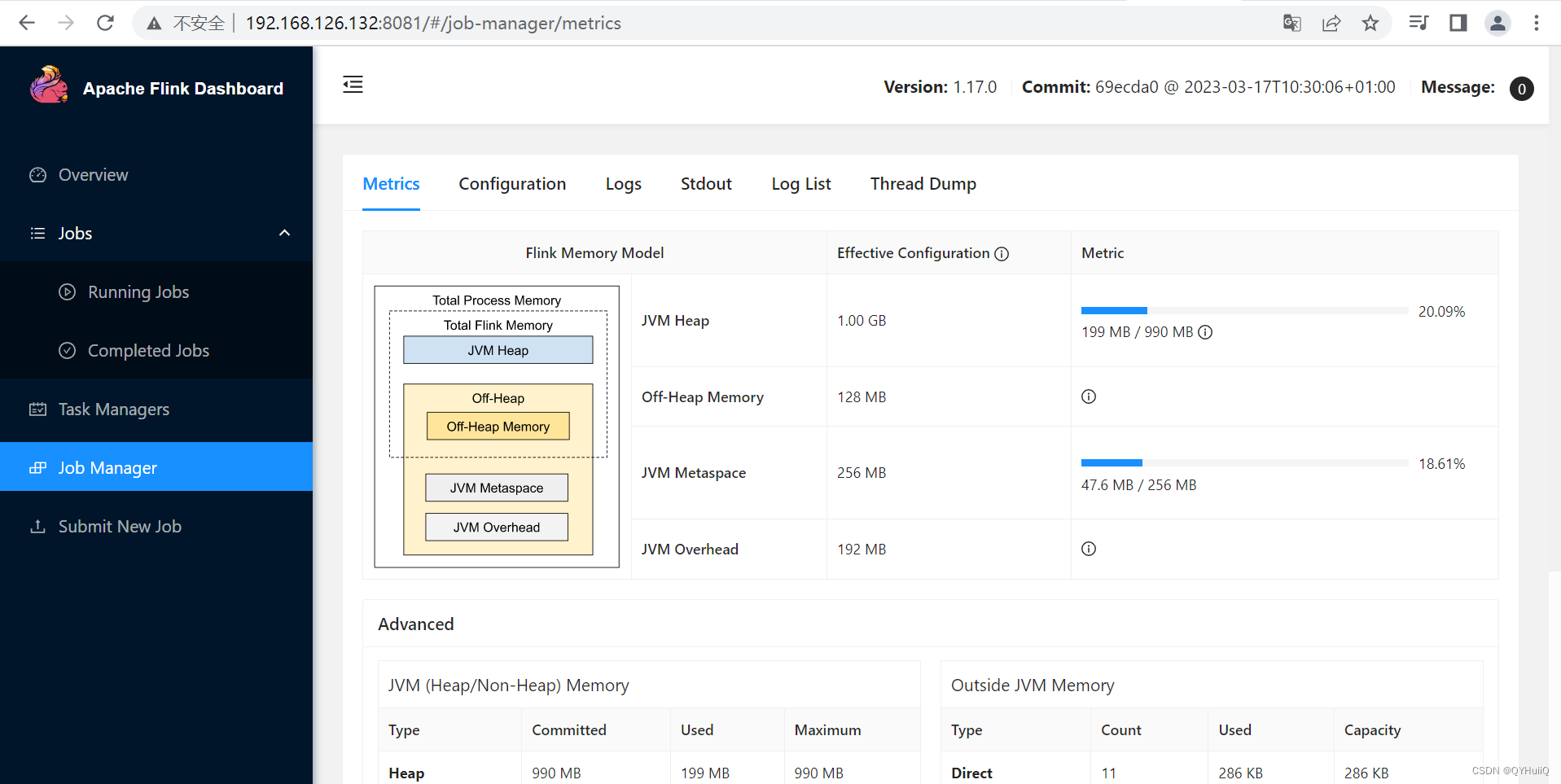
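Instead of reading the `jps` output by eye, you can use a small check. In a standalone session cluster, the JobManager shows up in `jps` as `StandaloneSessionClusterEntrypoint` and each TaskManager as `TaskManagerRunner`. The bash function below is a hypothetical helper. It reads `jps` output on stdin, and its role argument (`master` or `worker`) follows the role table above.

```shell
# Hypothetical helper: verify Flink processes in `jps` output read from stdin.
# ROLE "master" expects the JobManager and a TaskManager; "worker" a TaskManager.
check_flink_procs() {
  local role="$1" out
  out=$(cat)
  if ! grep -qw TaskManagerRunner <<<"$out"; then
    echo "missing TaskManagerRunner" >&2; return 1
  fi
  if [ "$role" = master ] && ! grep -qw StandaloneSessionClusterEntrypoint <<<"$out"; then
    echo "missing StandaloneSessionClusterEntrypoint" >&2; return 1
  fi
  echo ok
}
```

For example, `ssh hadoop02 jps | check_flink_procs worker` should print `ok`. Depending on the remote shell's PATH, `jps` may need its full path under the JDK's bin directory.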

Check the Web UI:

http://192.168.126.132:8081/
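The same port also serves Flink's REST API, so you can confirm the number of registered TaskManagers from the command line. The sketch below assumes the `/overview` endpoint, whose JSON includes a `taskmanagers` field. `taskmanager_count` is a hypothetical helper.

```shell
# Hypothetical helper: print the "taskmanagers" value from /overview JSON on stdin.
taskmanager_count() {
  grep -o '"taskmanagers" *: *[0-9]*' | grep -o '[0-9]*$'
}
```

With all three workers registered, `curl -s http://192.168.126.132:8081/overview | taskmanager_count` should print 3.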

The Flink 1.17.0 cluster setup is now complete.