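[Python Text Processing] Parsing GPX Activity-Track Files and Computing Movement Speed from Latitude/Longitude Coordinates with a Mathematical Model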

Parsing

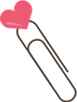

GPX file format

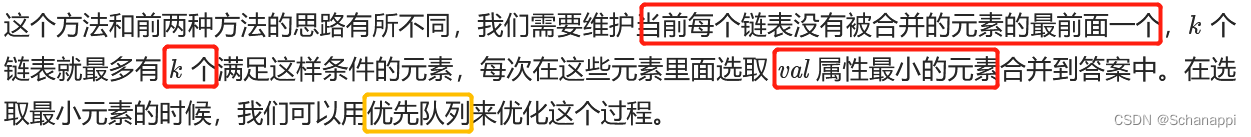

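A GPX file is simply an XML file that combines coordinates, elevation, time, heart rate, and similar data.

As shown in the figure:

Elevation: ele

Time: time

Heart rate: heartrate

Power: power

Cadence: cadence

Distance: distance

The distance field is generally not used, but instantaneous speed can be derived from it, provided the sampling interval is uniform and no coarser than 1 s. If the recorded distance differs too much from the distance implied by the coordinates, however, it is hard to decide which one to trust. Software such as Strava uses this field to compute instantaneous speed and distance, but not to compute segment speed (segment time).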
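As a rough illustration of deriving speed from the distance field, the sketch below differences a cumulative distance series. The function name `speeds_from_distance` and the fixed 1 s interval are assumptions made for this example, not part of the original code; the sample values are the first few `distance` readings from the parser output shown later in this article.

```python
def speeds_from_distance(distances_m, interval_s=1.0):
    """Return per-sample speeds in m/s from a cumulative distance series.

    Assumes a uniform sampling interval; the first sample has no
    predecessor, so its speed is None.
    """
    speeds = [None]
    for prev, cur in zip(distances_m, distances_m[1:]):
        if prev is None or cur is None:
            speeds.append(None)  # missing reading: no speed for this sample
        else:
            speeds.append((cur - prev) / interval_s)
    return speeds


sample = [0.0, 0.0, 4.0, 9.0, 12.0, 15.0, 19.0]
print(speeds_from_distance(sample))
```

Because the recorded distance is rounded to whole meters here, speeds obtained this way are coarse; that is one reason to prefer the coordinate-based approach described below.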

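The data for a single moment is found in a trkpt element.

A point begins with the opening trkpt tag and ends with the closing /trkpt tag.

For example: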

```xml
<trkpt lat="30.3940883" lon="112.2400167">
  <ele>34</ele>
  <time>2023-05-12T12:26:13Z</time>
  <extensions>
    <heartrate>167</heartrate>
    <distance>26698</distance>
  </extensions>
</trkpt>
```
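For comparison with line-by-line string splitting, a single track point like the one above can also be read with the standard library's `xml.etree.ElementTree`. This is only a sketch: `parse_trkpt` is a hypothetical helper, and it assumes a namespace-free fragment exactly like the example. A complete GPX file normally declares an XML namespace, in which case the tag names would need the namespace prefix.

```python
import xml.etree.ElementTree as ET

SAMPLE = """<trkpt lat="30.3940883" lon="112.2400167">
<ele>34</ele>
<time>2023-05-12T12:26:13Z</time>
<extensions>
<heartrate>167</heartrate>
<distance>26698</distance>
</extensions>
</trkpt>"""


def parse_trkpt(xml_text):
    """Parse one namespace-free <trkpt> element into a flat dict."""
    pt = ET.fromstring(xml_text)
    record = {"lat": float(pt.get("lat")), "lon": float(pt.get("lon"))}
    ele = pt.findtext("ele")
    record["ele"] = float(ele) if ele is not None else None
    record["time"] = pt.findtext("time")  # kept as the raw UTC string
    extensions = pt.find("extensions")
    if extensions is not None:
        for child in extensions:
            # heartrate, distance, cadence, power ... whatever is present
            record[child.tag] = float(child.text)
    return record


print(parse_trkpt(SAMPLE))
```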

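Code implementation

First, use the time library to read the timestamp of each point and convert it to the "%Y-%m-%d %H:%M:%S" format.

Next, extract the elevation, latitude, and longitude.

Finally, extract the keyword fields.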

```python
import time

# Extension fields to extract from each track point
keywords_list = ["heartrate", "cadence", "power", "distance"]


def read_gpx(gpx):
    """Parse a GPX file, given as a list of text lines, into a dict of lists."""
    first_time = 0
    first_str_time = ""
    trkpt_flag = 0  # 1 while inside a <trkpt> ... </trkpt> block
    j = 0           # index of the current track point
    # Index 0 is a header slot; it only receives the first timestamp in the file
    num_list = [0]
    time_list = [None]
    ele_list = [None]
    lat_list = [None]
    lon_list = [None]
    key_list = []
    gpx_dict = {}
    key_dict = {}
    for i in keywords_list:
        key_list.append([None])

    # Find the first <time> tag anywhere in the file
    for i in range(len(gpx)):
        try:
            ti = str((gpx[i].split("<time>")[1]).split("</time>")[0])
            now_time = time.mktime(time.strptime(ti, "%Y-%m-%dT%H:%M:%SZ"))
            first_time = now_time
            first_str_time = time.strftime("%Y-%m-%d %H:%M:%S", time.localtime(first_time))
            break
        except (IndexError, ValueError):
            pass
    time_list[0] = first_str_time

    for i in range(len(gpx)):
        if gpx[i].count('<trkpt'):
            # A new track point starts: append the placeholder j to every list.
            # A field that never appears in this point keeps the placeholder.
            trkpt_flag = 1
            j = j + 1
            num_list.append(j)
            time_list.append(j)
            ele_list.append(j)
            lat_list.append(j)
            lon_list.append(j)
            for k in range(len(keywords_list)):
                key_list[k].append(j)
        if trkpt_flag == 1:
            try:
                ti = str((gpx[i].split("<time>")[1]).split("</time>")[0])
                now_time = time.mktime(time.strptime(ti, "%Y-%m-%dT%H:%M:%SZ"))
                now_str_time = time.strftime("%Y-%m-%d %H:%M:%S", time.localtime(now_time))
                time_list[j] = now_str_time
            except (IndexError, ValueError):
                pass
            if gpx[i].count('<trkpt'):
                # Assumes lat is the first quoted attribute and lon the second
                lat_list[j] = float(gpx[i].split('"')[1])
                lon_list[j] = float(gpx[i].split('"')[3])
            try:
                ele_list[j] = float(str((gpx[i].split("<ele>")[1]).split("</ele>")[0]))
            except (IndexError, ValueError):
                pass
            for k in range(len(keywords_list)):
                try:
                    dat = str((gpx[i].split("<" + keywords_list[k] + ">")[1]).split("</" + keywords_list[k] + ">")[0])
                    key_list[k][j] = float(dat)
                except (IndexError, ValueError):
                    pass
            if gpx[i].count('</trkpt>'):
                trkpt_flag = 0
        if gpx[i].count('</trkseg>'):
            break  # only the first track segment is read

    gpx_dict["num"] = num_list
    gpx_dict["time"] = time_list
    gpx_dict["lat"] = lat_list
    gpx_dict["lon"] = lon_list
    gpx_dict["ele"] = ele_list
    for k in range(len(keywords_list)):
        key_dict[keywords_list[k]] = key_list[k]
    gpx_dict["key"] = key_dict
    return gpx_dict
```
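The timestamp step on its own can be sketched with `datetime` instead of `time.mktime`. Note that `time.mktime` interprets its argument as local time, while the trailing Z in a GPX timestamp means UTC; the sketch below keeps the value explicitly in UTC. The helper names `parse_gpx_time` and `format_gpx_time` are hypothetical, and the two timestamps used for the difference are illustrative values in the GPX format.

```python
from datetime import datetime, timezone

GPX_TIME_FORMAT = "%Y-%m-%dT%H:%M:%SZ"


def parse_gpx_time(text):
    """Parse a GPX UTC timestamp such as 2023-05-12T12:26:13Z into an aware datetime."""
    return datetime.strptime(text, GPX_TIME_FORMAT).replace(tzinfo=timezone.utc)


def format_gpx_time(text):
    """Re-format a GPX timestamp as "%Y-%m-%d %H:%M:%S" (still UTC)."""
    return parse_gpx_time(text).strftime("%Y-%m-%d %H:%M:%S")


start = parse_gpx_time("2023-06-01T09:58:41Z")
end = parse_gpx_time("2023-06-01T09:58:42Z")
print(format_gpx_time("2023-05-12T12:26:13Z"))
print((end - start).total_seconds())  # elapsed seconds between two points
```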

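read_gpx returns everything as a dictionary of lists. Fields that never appear in the file (here cadence and power) keep their placeholder index values.

Output: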

{'num': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89, 90, 91, 92, 93, 94, 95, 96, 97, 98, 99, 100, 101, 102, 103, 104, 105, 106, 107, 108, 109, 110, 111, 112, 113, 114, 115, 116, 117, 118, 119, 120, 121, 122, 123, 124, 125, 126, 127, 128, 129, 130, 131, 132, 133, 134, 135, 136, 137, 138, 139, 140, 141, 142, 143, 144, 145, 146, 147, 148, 149, 150, 151, 152, 153, 154, 155, 156, 157, 158, 159, 160, 161, 162, 163, 164, 165, 166, 167, 168, 169, 170, 171, 172, 173, 174, 175, 176, 177, 178, 179, 180, 181, 182, 183, 184], 'time': ['2023-06-01 09:58:22', '2023-06-01 09:58:41', '2023-06-01 09:58:42', '2023-06-01 09:58:43', '2023-06-01 09:58:44', '2023-06-01 09:58:45', '2023-06-01 09:58:46', '2023-06-01 09:58:47', '2023-06-01 09:58:48', '2023-06-01 09:58:49', '2023-06-01 09:58:50', '2023-06-01 09:58:51', '2023-06-01 09:58:52', '2023-06-01 09:58:53', '2023-06-01 09:58:54', '2023-06-01 09:58:55', '2023-06-01 09:58:56', '2023-06-01 09:58:57', '2023-06-01 09:58:58', '2023-06-01 09:58:59', '2023-06-01 09:59:00', '2023-06-01 09:59:01', '2023-06-01 09:59:02', '2023-06-01 09:59:03', '2023-06-01 09:59:04', '2023-06-01 09:59:05', '2023-06-01 09:59:06', '2023-06-01 09:59:07', '2023-06-01 09:59:08', '2023-06-01 09:59:09', '2023-06-01 09:59:10', '2023-06-01 09:59:11', '2023-06-01 09:59:12', '2023-06-01 09:59:13', '2023-06-01 09:59:14', '2023-06-01 09:59:15', '2023-06-01 09:59:16', '2023-06-01 09:59:17', '2023-06-01 09:59:18', '2023-06-01 09:59:19', '2023-06-01 09:59:20', '2023-06-01 09:59:21', '2023-06-01 09:59:22', '2023-06-01 09:59:23', '2023-06-01 09:59:24', '2023-06-01 09:59:25', '2023-06-01 09:59:26', '2023-06-01 09:59:27', '2023-06-01 09:59:28', '2023-06-01 09:59:29', '2023-06-01 
09:59:30', '2023-06-01 09:59:31', '2023-06-01 09:59:32', '2023-06-01 09:59:33', '2023-06-01 09:59:34', '2023-06-01 09:59:35', '2023-06-01 09:59:36', '2023-06-01 09:59:37', '2023-06-01 09:59:38', '2023-06-01 09:59:39', '2023-06-01 09:59:40', '2023-06-01 09:59:41', '2023-06-01 09:59:42', '2023-06-01 09:59:43', '2023-06-01 09:59:44', '2023-06-01 09:59:45', '2023-06-01 09:59:46', '2023-06-01 09:59:47', '2023-06-01 09:59:48', '2023-06-01 09:59:49', '2023-06-01 09:59:50', '2023-06-01 09:59:51', '2023-06-01 09:59:52', '2023-06-01 09:59:53', '2023-06-01 09:59:54', '2023-06-01 09:59:55', '2023-06-01 09:59:56', '2023-06-01 09:59:57', '2023-06-01 09:59:58', '2023-06-01 09:59:59', '2023-06-01 10:00:00', '2023-06-01 10:00:01', '2023-06-01 10:00:02', '2023-06-01 10:00:03', '2023-06-01 10:00:04', '2023-06-01 10:00:05', '2023-06-01 10:00:06', '2023-06-01 10:00:07', '2023-06-01 10:00:08', '2023-06-01 10:00:09', '2023-06-01 10:00:10', '2023-06-01 10:00:11', '2023-06-01 10:00:12', '2023-06-01 10:00:13', '2023-06-01 10:00:14', '2023-06-01 10:00:15', '2023-06-01 10:00:16', '2023-06-01 10:00:17', '2023-06-01 10:00:18', '2023-06-01 10:00:19', '2023-06-01 10:00:20', '2023-06-01 10:00:21', '2023-06-01 10:00:22', '2023-06-01 10:00:23', '2023-06-01 10:00:24', '2023-06-01 10:00:25', '2023-06-01 10:00:26', '2023-06-01 10:00:27', '2023-06-01 10:00:28', '2023-06-01 10:00:29', '2023-06-01 10:00:30', '2023-06-01 10:00:31', '2023-06-01 10:00:32', '2023-06-01 10:00:33', '2023-06-01 10:00:34', '2023-06-01 10:00:35', '2023-06-01 10:00:36', '2023-06-01 10:00:37', '2023-06-01 10:00:38', '2023-06-01 10:00:39', '2023-06-01 10:00:40', '2023-06-01 10:00:41', '2023-06-01 10:00:42', '2023-06-01 10:00:43', '2023-06-01 10:00:44', '2023-06-01 10:00:45', '2023-06-01 10:00:46', '2023-06-01 10:00:47', '2023-06-01 10:00:48', '2023-06-01 10:00:49', '2023-06-01 10:00:50', '2023-06-01 10:00:51', '2023-06-01 10:00:52', '2023-06-01 10:00:53', '2023-06-01 10:00:54', '2023-06-01 10:00:55', '2023-06-01 10:00:56', 
'2023-06-01 10:00:57', '2023-06-01 10:00:58', '2023-06-01 10:00:59', '2023-06-01 10:01:00', '2023-06-01 10:01:01', '2023-06-01 10:01:02', '2023-06-01 10:01:03', '2023-06-01 10:01:04', '2023-06-01 10:01:05', '2023-06-01 10:01:06', '2023-06-01 10:01:07', '2023-06-01 10:01:08', '2023-06-01 10:01:09', '2023-06-01 10:01:10', '2023-06-01 10:01:11', '2023-06-01 10:01:12', '2023-06-01 10:01:13', '2023-06-01 10:01:14', '2023-06-01 10:01:15', '2023-06-01 10:01:16', '2023-06-01 10:01:17', '2023-06-01 10:01:18', '2023-06-01 10:01:19', '2023-06-01 10:01:20', '2023-06-01 10:01:21', '2023-06-01 10:01:22', '2023-06-01 10:01:23', '2023-06-01 10:01:24', '2023-06-01 10:01:25', '2023-06-01 10:01:26', '2023-06-01 10:01:27', '2023-06-01 10:01:28', '2023-06-01 10:01:29', '2023-06-01 10:01:30', '2023-06-01 10:01:31', '2023-06-01 10:01:32', '2023-06-01 10:01:33', '2023-06-01 10:01:34', '2023-06-01 10:01:54', '2023-06-01 10:01:55', '2023-06-01 10:01:56', '2023-06-01 10:01:57', '2023-06-01 10:02:13', '2023-06-01 10:02:14', '2023-06-01 10:02:15', '2023-06-01 10:02:16', '2023-06-01 10:02:17', '2023-06-01 10:02:18'], 'lat': [None, 30.452805, 30.4528125, 30.4528189, 30.4528247, 30.4528287, 30.4528306, 30.4528274, 30.4528185, 30.4528061, 30.4527819, 30.4527605, 30.4527407, 30.4527255, 30.4527165, 30.4527105, 30.4527043, 30.4527002, 30.4526961, 30.4526878, 30.4526799, 30.4526724, 30.4526653, 30.4526585, 30.4526511, 30.4526432, 30.4526382, 30.4526364, 30.4526347, 30.4526313, 30.4526268, 30.4526225, 30.4526161, 30.4526085, 30.4526009, 30.4525931, 30.452585, 30.4525761, 30.4525672, 30.4525573, 30.4525471, 30.4525388, 30.4525315, 30.4525241, 30.4525176, 30.4525106, 30.4525042, 30.4524992, 30.4524957, 30.4524939, 30.4524964, 30.4525027, 30.4525095, 30.4525144, 30.4525146, 30.4525092, 30.4525001, 30.452491, 30.4524821, 30.4524735, 30.4524656, 30.4524585, 30.4524522, 30.4524467, 30.4524397, 30.4524306, 30.4524212, 30.4524128, 30.4524072, 30.4524037, 30.4523976, 30.452387, 30.4523743, 30.4523626, 
30.4523544, 30.4523475, 30.4523411, 30.4523334, 30.4523246, 30.4523151, 30.4523058, 30.4522994, 30.4522931, 30.452284, 30.4522709, 30.4522573, 30.4522449, 30.4522331, 30.4522227, 30.4522135, 30.4522036, 30.4521941, 30.4521846, 30.4521749, 30.452165, 30.4521557, 30.4521475, 30.4521407, 30.452135, 30.4521298, 30.4521242, 30.4521187, 30.4521134, 30.4521077, 30.452099, 30.4520891, 30.4520801, 30.4520722, 30.4520656, 30.4520581, 30.4520494, 30.4520406, 30.4520326, 30.4520258, 30.4520207, 30.4520166, 30.4520156, 30.4520157, 30.4520148, 30.4520109, 30.4520021, 30.4519891, 30.451975, 30.4519647, 30.4519547, 30.4519429, 30.4519314, 30.4519224, 30.4519173, 30.4519121, 30.451905, 30.4518969, 30.4518886, 30.4518795, 30.45187, 30.4518607, 30.4518506, 30.451841, 30.451832, 30.4518227, 30.4518112, 30.4517992, 30.451789, 30.4517806, 30.4517714, 30.4517642, 30.4517577, 30.4517519, 30.4517441, 30.4517381, 30.4517355, 30.4517277, 30.4517154, 30.451696, 30.4516821, 30.4516804, 30.4516841, 30.4516871, 30.4516861, 30.4516817, 30.4516731, 30.4516592, 30.4516392, 30.4516241, 30.4515869, 30.4515576, 30.4515302, 30.4515145, 30.4514761, 30.4514449, 30.4514142, 30.4513832, 30.451353, 30.4513262, 30.4513047, 30.4512268, 30.4512039, 30.4511808, 30.4511591, 30.4510384, 30.4510348, 30.4510312, 30.4510243, 30.4510148, 30.4509683], 'lon': [None, 114.42341, 114.4234075, 114.4234007, 114.4233901, 114.4233757, 114.4233531, 114.4233333, 114.4233105, 114.4232842, 114.4232355, 114.4231986, 114.4231621, 114.4231234, 114.42308, 114.4230341, 114.4229862, 114.4229577, 114.4229293, 114.4228785, 114.4228315, 114.4227793, 114.4227282, 114.4226775, 114.4226272, 114.422577, 114.4225274, 114.4224779, 114.4224276, 114.4223772, 114.4223269, 114.4222768, 114.4222261, 114.4221743, 114.422121, 114.4220673, 114.4220143, 114.4219619, 114.4219094, 114.4218565, 114.4218039, 114.4217506, 114.421697, 114.4216435, 114.4215901, 114.4215373, 114.4214851, 114.4214326, 114.4213798, 114.4213273, 114.421277, 114.4212281, 
114.4211797, 114.4211314, 114.4210826, 114.4210331, 114.4209821, 114.4209311, 114.4208804, 114.4208289, 114.420777, 114.4207252, 114.4206734, 114.4206211, 114.4205688, 114.4205168, 114.420465, 114.4204133, 114.4203623, 114.4203093, 114.4202557, 114.4202033, 114.4201521, 114.4201022, 114.4200552, 114.4200083, 114.419961, 114.4199125, 114.4198619, 114.4198099, 114.4197569, 114.4197042, 114.4196513, 114.4195989, 114.4195456, 114.4194927, 114.4194397, 114.4193878, 114.4193369, 114.419286, 114.4192341, 114.4191815, 114.419129, 114.4190763, 114.4190232, 114.4189688, 114.418915, 114.418861, 114.4188058, 114.4187511, 114.418697, 114.4186434, 114.4185905, 114.4185378, 114.4184854, 114.4184328, 114.4183798, 114.4183267, 114.4182727, 114.4182176, 114.4181623, 114.4181082, 114.4180579, 114.4180111, 114.4179664, 114.4179228, 114.4178808, 114.41784, 114.4177991, 114.4177536, 114.4177059, 114.4176574, 114.4176081, 114.4175565, 114.4175062, 114.4174569, 114.4174089, 114.4173598, 114.4173088, 114.4172565, 114.4172029, 114.4171484, 114.4170935, 114.4170372, 114.4169823, 114.4169284, 114.4168755, 114.4168227, 114.4167703, 114.416719, 114.4166684, 114.4166182, 114.4165688, 114.41652, 114.4164722, 114.4164238, 114.4163759, 114.4163279, 114.4162817, 114.4162345, 114.4161873, 114.416144, 114.4161079, 114.4160834, 114.4160621, 114.4160486, 114.4160213, 114.4159636, 114.4159197, 114.4158811, 114.415848, 114.4158204, 114.4157996, 114.4157916, 114.4157787, 114.4157734, 114.4157721, 114.4157722, 114.4157734, 114.4157742, 114.4157747, 114.4157775, 114.4157841, 114.4157923, 114.415801, 114.415851, 114.4158589, 114.4158646, 114.4158705, 114.4158796, 114.4158809, 114.4158822, 114.4158852, 114.4158888, 114.4159033], 'ele': [None, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 
20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 21.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 19.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0], 'key': {'heartrate': [None, 99.0, 101.0, 101.0, 100.0, 100.0, 100.0, 100.0, 100.0, 100.0, 101.0, 101.0, 101.0, 101.0, 102.0, 102.0, 102.0, 102.0, 101.0, 101.0, 101.0, 101.0, 100.0, 99.0, 99.0, 100.0, 100.0, 100.0, 100.0, 100.0, 102.0, 102.0, 104.0, 104.0, 103.0, 103.0, 104.0, 104.0, 105.0, 107.0, 108.0, 108.0, 108.0, 108.0, 108.0, 108.0, 109.0, 109.0, 109.0, 109.0, 109.0, 109.0, 109.0, 109.0, 109.0, 109.0, 109.0, 109.0, 109.0, 108.0, 108.0, 108.0, 109.0, 109.0, 110.0, 110.0, 110.0, 110.0, 110.0, 110.0, 110.0, 110.0, 110.0, 110.0, 110.0, 109.0, 110.0, 110.0, 110.0, 110.0, 109.0, 109.0, 110.0, 109.0, 110.0, 109.0, 109.0, 109.0, 109.0, 110.0, 109.0, 109.0, 109.0, 109.0, 110.0, 110.0, 110.0, 110.0, 111.0, 111.0, 112.0, 114.0, 114.0, 114.0, 113.0, 114.0, 114.0, 113.0, 113.0, 113.0, 114.0, 114.0, 114.0, 114.0, 114.0, 114.0, 114.0, 113.0, 113.0, 113.0, 114.0, 114.0, 114.0, 114.0, 114.0, 114.0, 114.0, 114.0, 114.0, 114.0, 114.0, 114.0, 113.0, 113.0, 114.0, 114.0, 114.0, 113.0, 113.0, 113.0, 113.0, 113.0, 113.0, 113.0, 113.0, 113.0, 113.0, 113.0, 113.0, 113.0, 113.0, 115.0, 115.0, 115.0, 118.0, 118.0, 118.0, 118.0, 118.0, 118.0, 118.0, 117.0, 118.0, 
118.0, 118.0, 118.0, 118.0, 117.0, 118.0, 118.0, 118.0, 118.0, 117.0, 117.0, 117.0, 112.0, 112.0, 112.0, 112.0, 113.0, 113.0, 113.0, 112.0, 112.0, 112.0], 'cadence': [None, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89, 90, 91, 92, 93, 94, 95, 96, 97, 98, 99, 100, 101, 102, 103, 104, 105, 106, 107, 108, 109, 110, 111, 112, 113, 114, 115, 116, 117, 118, 119, 120, 121, 122, 123, 124, 125, 126, 127, 128, 129, 130, 131, 132, 133, 134, 135, 136, 137, 138, 139, 140, 141, 142, 143, 144, 145, 146, 147, 148, 149, 150, 151, 152, 153, 154, 155, 156, 157, 158, 159, 160, 161, 162, 163, 164, 165, 166, 167, 168, 169, 170, 171, 172, 173, 174, 175, 176, 177, 178, 179, 180, 181, 182, 183, 184], 'power': [None, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89, 90, 91, 92, 93, 94, 95, 96, 97, 98, 99, 100, 101, 102, 103, 104, 105, 106, 107, 108, 109, 110, 111, 112, 113, 114, 115, 116, 117, 118, 119, 120, 121, 122, 123, 124, 125, 126, 127, 128, 129, 130, 131, 132, 133, 134, 135, 136, 137, 138, 139, 140, 141, 142, 143, 144, 145, 146, 147, 148, 149, 150, 151, 152, 153, 154, 155, 156, 157, 158, 159, 160, 161, 162, 163, 164, 165, 166, 167, 168, 169, 170, 171, 172, 173, 174, 175, 176, 177, 178, 179, 180, 181, 182, 183, 184], 'distance': [None, 0.0, 0.0, 0.0, 0.0, 4.0, 9.0, 12.0, 15.0, 19.0, 23.0, 27.0, 31.0, 36.0, 40.0, 44.0, 49.0, 54.0, 60.0, 65.0, 70.0, 75.0, 80.0, 84.0, 89.0, 94.0, 99.0, 104.0, 109.0, 
114.0, 118.0, 124.0, 129.0, 134.0, 139.0, 144.0, 149.0, 154.0, 160.0, 165.0, 170.0, 175.0, 180.0, 186.0, 191.0, 196.0, 201.0, 206.0, 211.0, 216.0, 220.0, 224.0, 229.0, 234.0, 239.0, 244.0, 249.0, 254.0, 259.0, 264.0, 269.0, 274.0, 279.0, 284.0, 290.0, 295.0, 300.0, 305.0, 310.0, 315.0, 320.0, 325.0, 330.0, 334.0, 339.0, 344.0, 348.0, 354.0, 359.0, 364.0, 369.0, 374.0, 379.0, 384.0, 390.0, 395.0, 400.0, 405.0, 410.0, 416.0, 421.0, 426.0, 431.0, 436.0, 442.0, 447.0, 452.0, 458.0, 463.0, 468.0, 473.0, 478.0, 483.0, 488.0, 494.0, 499.0, 504.0, 510.0, 515.0, 520.0, 525.0, 530.0, 534.0, 538.0, 542.0, 546.0, 550.0, 554.0, 559.0, 564.0, 569.0, 574.0, 579.0, 584.0, 589.0, 594.0, 598.0, 603.0, 608.0, 614.0, 619.0, 624.0, 630.0, 636.0, 641.0, 646.0, 651.0, 656.0, 661.0, 666.0, 671.0, 676.0, 680.0, 685.0, 690.0, 694.0, 699.0, 704.0, 708.0, 712.0, 716.0, 719.0, 722.0, 724.0, 726.0, 731.0, 736.0, 740.0, 743.0, 746.0, 748.0, 752.0, 755.0, 758.0, 761.0, 764.0, 767.0, 770.0, 774.0, 778.0, 781.0, 784.0, 787.0, 788.0, 790.0, 803.0, 806.0, 808.0, 809.0, 820.0, 820.0, 820.0, 820.0, 821.0, 821.0]}}

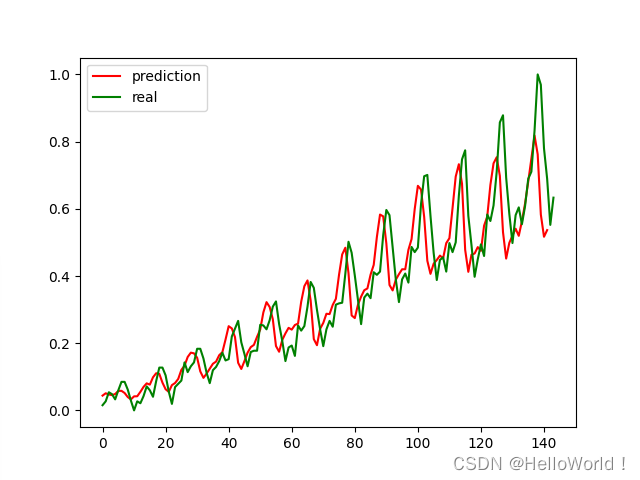

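Speed calculation

Speed = displacement / time

So the first step is to compute the distance (arc length) between two latitude/longitude points.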

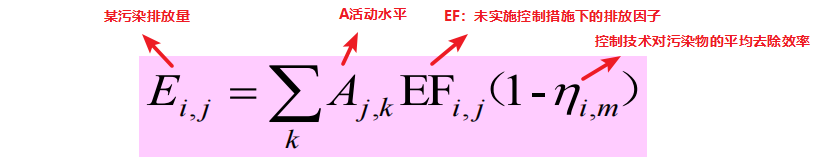

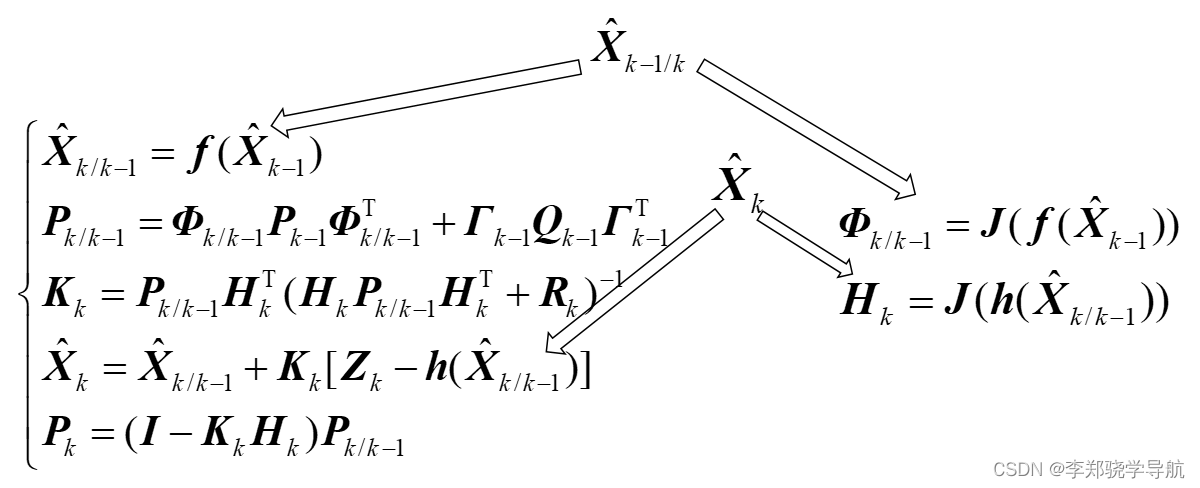

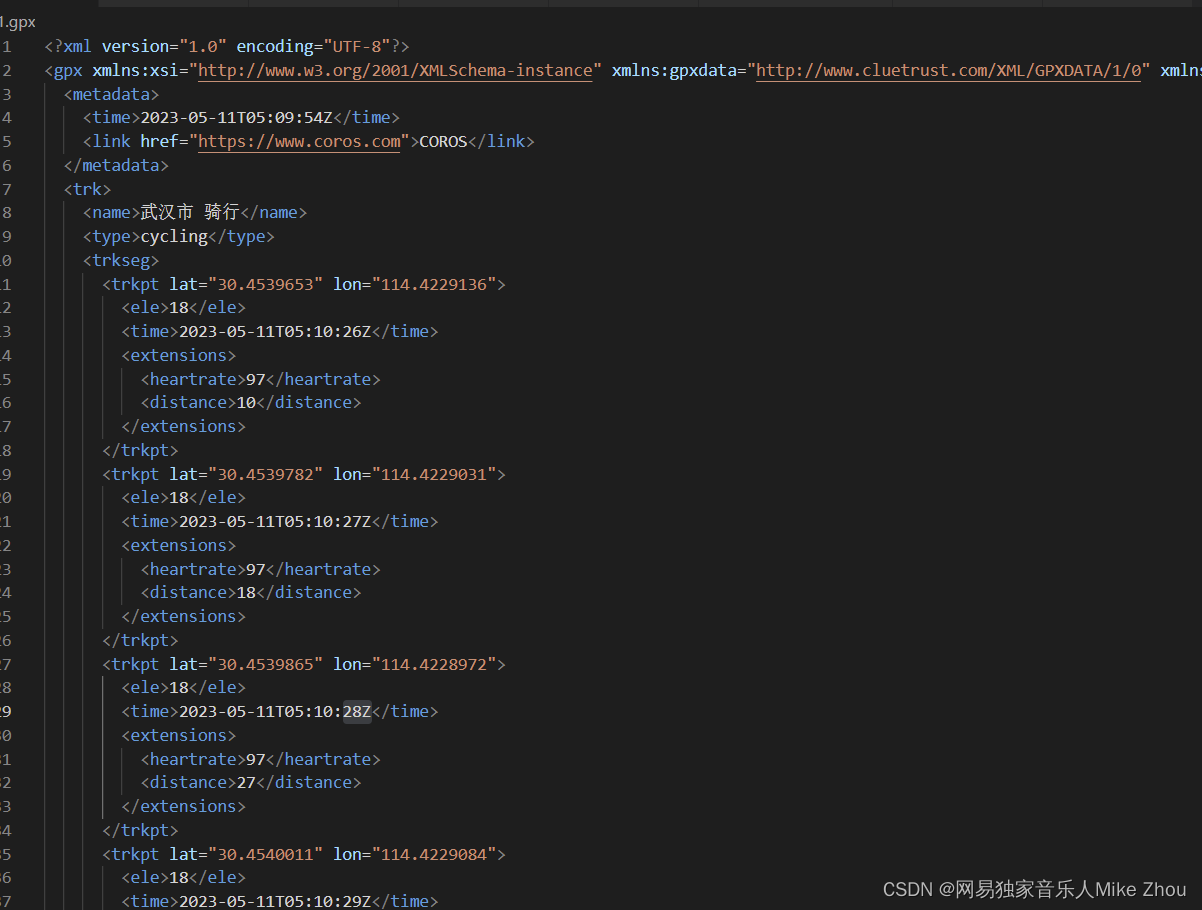

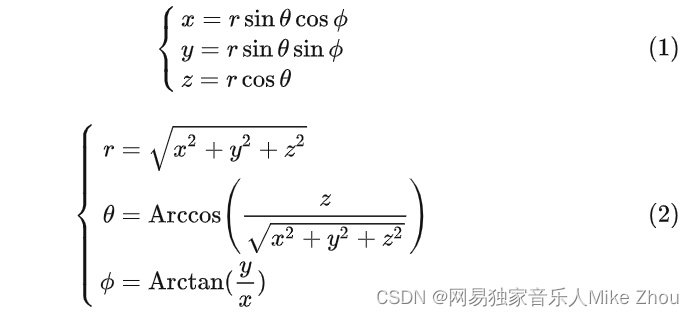

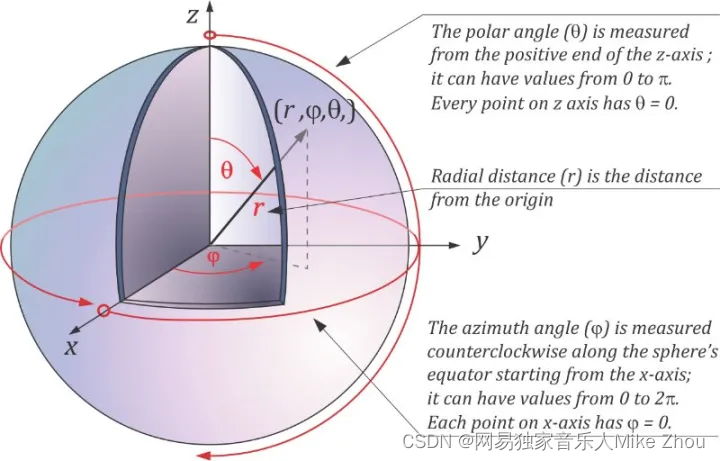

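Mathematical model

First, the mean radius of the Earth is taken to be 6371393 m.

Approximating the Earth as a sphere, latitude and longitude form a spherical coordinate system.

Converting between spherical and Cartesian coordinates uses the following two systems of equations:

Here φ is the longitude and 90° − θ is the latitude (this is a pitfall to watch out for):

The standard spherical coordinates are:

As you can see, θ is 90° minus the latitude.

So after substituting latitude and longitude, we get:

(In the code, theta is the longitude and phi is the latitude.)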
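For reference, the conversion can be written out as follows. This is a reconstruction that agrees with the code in this section, not a copy of the original figures. In the standard convention θ is the polar angle measured from the z-axis and φ is the azimuth; substituting θ = 90° − lat and φ = lon gives the second set of equations.

```latex
\begin{aligned}
\text{standard:}\quad & x = r\sin\theta\cos\varphi, \quad y = r\sin\theta\sin\varphi, \quad z = r\cos\theta \\
\text{with } \theta = 90^\circ - \mathrm{lat},\ \varphi = \mathrm{lon}:\quad
& x = r\cos(\mathrm{lat})\cos(\mathrm{lon}), \quad y = r\cos(\mathrm{lat})\sin(\mathrm{lon}), \quad z = r\sin(\mathrm{lat})
\end{aligned}
```

Note that the code names its variables differently from the standard convention: `theta` holds the longitude and `phi` holds the latitude.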

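```python
# theta is the longitude and phi is the latitude, both in radians
df['r'] = 6371393 + df['ele']  # Earth radius plus elevation, in meters
df['theta'] = np.deg2rad(df['lon'])
df['phi'] = np.deg2rad(df['lat'])
# Cartesian coordinates of each point
df['x'] = df['r'] * np.cos(df['theta']) * np.cos(df['phi'])
df['y'] = df['r'] * np.sin(df['theta']) * np.cos(df['phi'])
df['z'] = df['r'] * np.sin(df['phi'])
# Cartesian coordinates of the previous point
df['x2'] = df['x'].shift()
df['y2'] = df['y'].shift()
df['z2'] = df['z'].shift()
# Straight-line (chord) distance between consecutive points
df['distance'] = np.sqrt((df['x2'] - df['x'])**2 + (df['y2'] - df['y'])**2 + (df['z2'] - df['z'])**2)
# Central angle from the dot product, then the arc length
df['central_angle'] = np.arccos((df['x'] * df['x2'] + df['y'] * df['y2'] + df['z'] * df['z2']) / df['r']**2)
df['arclength'] = df['central_angle'] * df['r']
# Seconds between consecutive samples (the index must be a DatetimeIndex here)
df['elapsed_time'] = (df.index.to_series().diff() / pd.Timedelta(seconds=1))
df['speed'] = df['arclength'] / df['elapsed_time'] * 3.6  # km/h
df = df[columns + ['speed']]
```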

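Once trigonometry gives the arc length between two coordinate points, the speed over that time interval follows directly.

But as we learned in physics:

To compute the speed at a point more precisely, divide the sum of two consecutive arc lengths by the sum of the two corresponding elapsed times.

The Cartesian detour is not necessary, though:

The arc length is easier to obtain directly in spherical coordinates, and converting to Cartesian coordinates makes it easy to get an angle wrong. (The first time, I fell into the 90° − θ trap, and the argument I passed to the inverse trigonometric function for the arc length ended up greater than 1.)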
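A quick numerical check can catch this kind of convention mix-up. The sketch below is illustrative only; both function names are hypothetical. It confirms that the standard polar-angle form and the latitude form give the same Cartesian point when θ = 90° − lat, and that passing the latitude directly as the polar angle does not.

```python
import numpy as np


def to_cartesian_polar(r, theta, phi):
    """Standard convention: theta is the polar angle from the z-axis, phi the azimuth."""
    return np.array([r * np.sin(theta) * np.cos(phi),
                     r * np.sin(theta) * np.sin(phi),
                     r * np.cos(theta)])


def to_cartesian_latlon(r, lat, lon):
    """Geographic convention: lat measured from the equator, lon as the azimuth."""
    return np.array([r * np.cos(lat) * np.cos(lon),
                     r * np.cos(lat) * np.sin(lon),
                     r * np.sin(lat)])


R = 6371393.0
lat, lon = np.deg2rad(30.452805), np.deg2rad(114.42341)

correct = to_cartesian_polar(R, np.pi / 2 - lat, lon)
geographic = to_cartesian_latlon(R, lat, lon)
wrong = to_cartesian_polar(R, lat, lon)  # latitude mistakenly used as the polar angle

print(np.allclose(correct, geographic))  # the two conventions agree
print(np.allclose(wrong, geographic))    # the mix-up gives a different point
```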

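Computing the distance between two latitude/longitude coordinates means computing the distance between two points on a sphere, because latitude and longitude are coordinates expressed as angles. Latitude spans 180 degrees from pole to pole, and longitude spans 360 degrees.

Assuming the Earth is a perfect sphere, the distance equals the arc length on a circular cross-section through both points. To get it, first find the angle that the two points subtend at the center of the sphere; the arc length, and therefore the distance, then follows from the radius.

Let point A have latitude lat1 and longitude lng1, let point B have latitude lat2 and longitude lng2, and let the Earth's radius be R.

Before computing the angle, convert both points to Cartesian coordinates with the Earth's center as the origin:

$$A = (R\cos\mathrm{lat}_1\cos\mathrm{lng}_1,\ R\cos\mathrm{lat}_1\sin\mathrm{lng}_1,\ R\sin\mathrm{lat}_1)$$

$$B = (R\cos\mathrm{lat}_2\cos\mathrm{lng}_2,\ R\cos\mathrm{lat}_2\sin\mathrm{lng}_2,\ R\sin\mathrm{lat}_2)$$

Then compute the angle using the formula for the angle between two vectors, which gives its cosine:

$$\mathrm{COS} = \frac{\vec{A}\cdot\vec{B}}{|\vec{A}|\,|\vec{B}|}$$

Let A be (x, y, z) and B be (a, b, c):

$$\mathrm{COS} = \frac{xa + yb + zc}{\sqrt{x^2+y^2+z^2}\,\sqrt{a^2+b^2+c^2}}$$

Substituting the latitude/longitude coordinates:

$$\mathrm{COS} = \cos\mathrm{lat}_2\cos\mathrm{lat}_1\cos(\mathrm{lng}_1-\mathrm{lng}_2) + \sin\mathrm{lat}_2\sin\mathrm{lat}_1$$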
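The substitution step can be spelled out. Expanding the dot product of the two Cartesian vectors and applying the identity cos(a − b) = cos a cos b + sin a sin b gives the following, where both vectors have length R:

```latex
\begin{aligned}
\vec{A}\cdot\vec{B}
 &= R^2\left[\cos\mathrm{lat}_1\cos\mathrm{lat}_2\,(\cos\mathrm{lng}_1\cos\mathrm{lng}_2 + \sin\mathrm{lng}_1\sin\mathrm{lng}_2) + \sin\mathrm{lat}_1\sin\mathrm{lat}_2\right] \\
 &= R^2\left[\cos\mathrm{lat}_1\cos\mathrm{lat}_2\cos(\mathrm{lng}_1-\mathrm{lng}_2) + \sin\mathrm{lat}_1\sin\mathrm{lat}_2\right], \\
|\vec{A}|\,|\vec{B}| &= R^2 .
\end{aligned}
```

Dividing the first result by the second cancels R² and leaves the expression for COS. Since cosine is an even function, cos(lng1 − lng2) and cos(lng2 − lng1) are interchangeable.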

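The cosine gives the angle, and the angle gives the arc length, which is the distance (arccos is the inverse cosine function, and its result is in radians):

$$d = 2\pi R \cdot \frac{\arccos(\mathrm{COS})}{2\pi}$$

$$d = R\arccos(\mathrm{COS})$$

Finally:

$$d = R\arccos\left(\cos\mathrm{lat}_2\cos\mathrm{lat}_1\cos(\mathrm{lng}_2-\mathrm{lng}_1) + \sin\mathrm{lat}_2\sin\mathrm{lat}_1\right)$$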

Code:

```python
df['arclength'] = df['r'] * np.arccos(np.cos(df['phi']) * np.cos(df['phi2']) * np.cos(df['theta2'] - df['theta']) + np.sin(df['phi']) * np.sin(df['phi2']))
```
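One caveat with the arccos form: for points recorded one second apart, the cosine is extremely close to 1, where float64 has little resolution and rounding can even push the value slightly above 1. That is a plausible reason the sample output later in this article contains identical consecutive arclength values. The haversine formula is a well-known alternative that behaves better for short distances. The sketch below is not the article's method; `haversine_m` is a hypothetical helper, and its default radius simply reuses the value from this article.

```python
import numpy as np


def haversine_m(lat1, lon1, lat2, lon2, radius_m=6371393.0):
    """Great-circle distance in meters between two points given in degrees."""
    lat1, lon1, lat2, lon2 = map(np.deg2rad, (lat1, lon1, lat2, lon2))
    dlat = lat2 - lat1
    dlon = lon2 - lon1
    h = np.sin(dlat / 2) ** 2 + np.cos(lat1) * np.cos(lat2) * np.sin(dlon / 2) ** 2
    # Clip guards against rounding pushing h marginally outside [0, 1]
    return 2 * radius_m * np.arcsin(np.sqrt(np.clip(h, 0.0, 1.0)))


# First two track points from the sample output in this article
d = haversine_m(30.452805, 114.42341, 30.4528125, 114.4234075)
print(d)
```

The function also accepts NumPy arrays or pandas Series, so it could be applied to the `lat` and `lon` columns and their shifted copies in the same way as the one-line expression above.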

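Combined with the speed formula from physics, the speed calculation becomes the following, where elapsed_time_list is the list of elapsed times:

```python
try:
    # Two consecutive arc lengths divided by the two elapsed times
    speed_list.append((df['arclength'][i] + df['arclength'][i+1]) / (elapsed_time_list[i] + elapsed_time_list[i+1]))  # m/s
except Exception:
    # Last point (no i+1): fall back to a single segment
    speed_list.append(df['arclength'][i] / elapsed_time_list[i])  # m/s
```

For even higher accuracy, extend the window by two more time intervals and take a weighted average of the resulting speeds; the weights have to be chosen empirically.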
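The two-segment rule can also be written in vectorized form. The sketch below is a minimal illustration, assuming every elapsed time is positive and there are no missing values; `two_segment_speed` is a hypothetical name. The sample numbers are the first three arc lengths from the output shown later in this article.

```python
import numpy as np


def two_segment_speed(arclength_m, elapsed_s):
    """Speed in m/s: (arc[i] + arc[i+1]) / (dt[i] + dt[i+1]).

    The last point has no successor, so it falls back to arc / dt.
    """
    arc = np.asarray(arclength_m, dtype=float)
    dt = np.asarray(elapsed_s, dtype=float)
    speed = np.empty_like(arc)
    speed[:-1] = (arc[:-1] + arc[1:]) / (dt[:-1] + dt[1:])
    speed[-1] = arc[-1] / dt[-1]
    return speed


arc = [0.870153, 0.968216, 1.200925]  # meters
dt = [1.0, 1.0, 1.0]                  # seconds
kmh = two_segment_speed(arc, dt) * 3.6
print(kmh)
```

The first two values reproduce the speeds listed for rows 2 and 3 of the sample output; the third differs because this short excerpt treats it as the last point.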

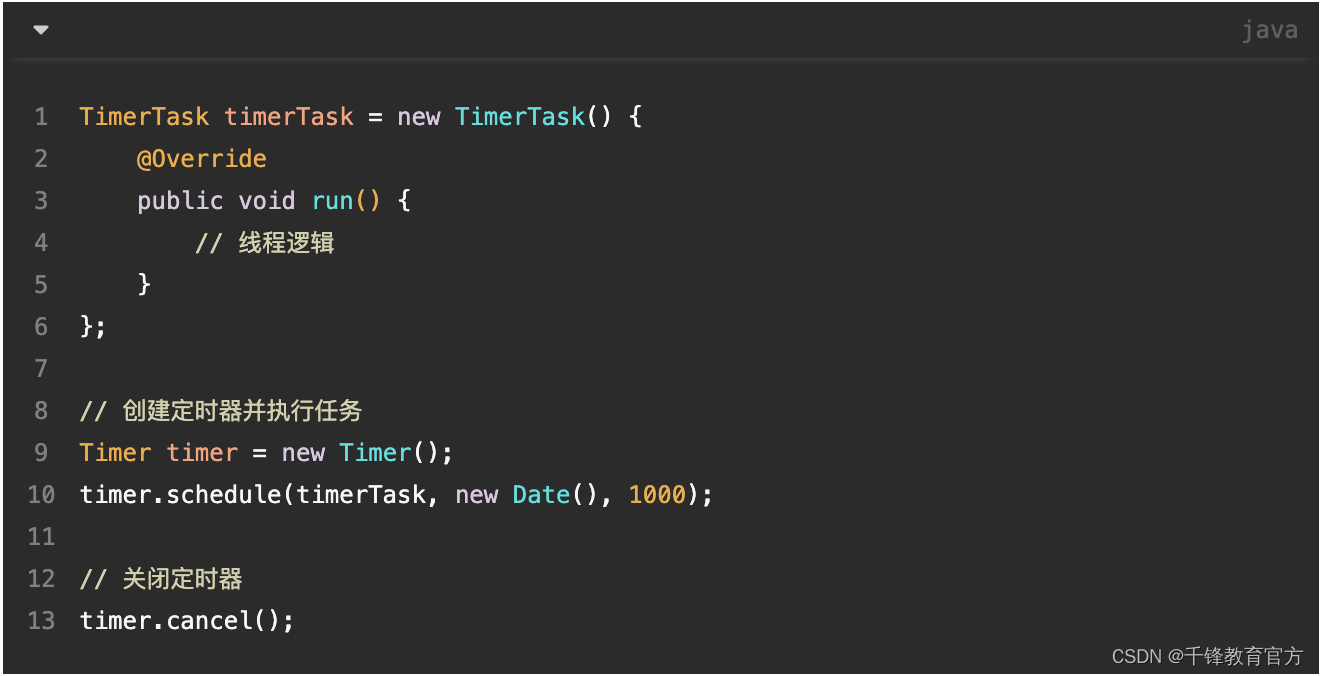

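Code implementation

numpy and pandas are used here for the numerical work.

A state machine is also introduced to handle identical consecutive timestamps (a GPX file is usually updated once per second, so this rarely matters).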

```python
def calculate_speed(time_list, lat_list, lon_list, ele_list):
    """Return a DataFrame with the arc length (m) and speed (km/h) of each point."""
    # All four lists must have the same length
    if len(time_list) == len(lat_list) and len(time_list) == len(lon_list) and len(time_list) == len(ele_list):
        pass
    else:
        return
    df = pd.DataFrame({'time': time_list, 'lat': lat_list, 'lon': lon_list, 'ele': ele_list})
    columns = df.columns.tolist()
    df['r'] = 6371393 + df['ele']  # Earth radius plus elevation, in meters
    df['theta'] = np.deg2rad(df['lon'])  # longitude in radians
    df['phi'] = np.deg2rad(df['lat'])    # latitude in radians
    df['theta2'] = df['theta'].shift()   # previous point
    df['phi2'] = df['phi'].shift()
    # Great-circle arc length from the previous point to the current one
    df['arclength'] = df['r'] * np.arccos(np.cos(df['phi']) * np.cos(df['phi2']) * np.cos(df['theta2'] - df['theta']) + np.sin(df['phi']) * np.sin(df['phi2']))
    elapsed_time_list = []
    stamp_list = []
    speed_list = []
    stamp_same_list = []  # indices where a run of repeated timestamps starts
    same_flag = 0
    enable_flag = 0
    exit_same_list = []   # indices where a run of repeated timestamps ends
    # First pass: elapsed seconds between consecutive points
    for i in range(len(time_list)):
        try:
            stamp_list.append(time.mktime(time.strptime(time_list[i], "%Y-%m-%d %H:%M:%S")))
            enable_flag = 1
            if i > 0 and enable_flag == 1:
                if stamp_list[i] > stamp_list[i-1]:
                    elapsed_time_list.append(stamp_list[i] - stamp_list[i-1])
                    if same_flag == 1:
                        same_flag = 0
                        exit_same_list.append(i)
                else:
                    if same_flag == 0:
                        stamp_same_list.append(i)
                        same_flag = 1
                    elapsed_time_list.append(0.0)
            else:
                elapsed_time_list.append(None)
        except Exception:
            # Unparseable timestamp: treat it like a repeated timestamp
            if enable_flag == 0:
                elapsed_time_list.append(None)
            else:
                if same_flag == 0:
                    stamp_same_list.append(i)
                    same_flag = 1
                elapsed_time_list.append(0.0)
    # A run that is still open at the end of the track gets the end marker 0
    if len(stamp_same_list) > len(exit_same_list):
        exit_same_list.append(0)
    j = 0
    displacement = 0.0
    displacement_time = 0.0
    end_displacement = 0.0
    same_flag = 0
    end_elapsed_time = 0.0
    stamp_same_in_1st_flag = 0
    if len(stamp_same_list) == 0:
        # No repeated timestamps: two-segment speed for every point
        for i in range(len(elapsed_time_list)):
            try:
                try:
                    speed_list.append((df['arclength'][i] + df['arclength'][i+1]) / (elapsed_time_list[i] + elapsed_time_list[i+1]))  # m/s
                except Exception:
                    speed_list.append(df['arclength'][i] / elapsed_time_list[i])  # m/s
            except Exception:
                speed_list.append(None)
    else:
        # Repeated timestamps: average the speed over each run
        for i in range(len(elapsed_time_list)):
            try:
                if i > 0:
                    try:
                        if i == stamp_same_list[j] - 1:
                            same_flag = 1
                        if stamp_same_list[0] == 1 and stamp_same_in_1st_flag == 0:
                            stamp_same_in_1st_flag = 1
                            same_flag = 1
                    except Exception:
                        pass
                    if same_flag == 0:
                        try:
                            speed_list.append((df['arclength'][i] + df['arclength'][i+1]) / (elapsed_time_list[i] + elapsed_time_list[i+1]))  # m/s
                        except Exception:
                            speed_list.append(df['arclength'][i] / elapsed_time_list[i])  # m/s
                    else:
                        # Inside a run: accumulate displacement and time
                        try:
                            displacement = displacement + df['arclength'][i]
                        except Exception:
                            displacement = displacement + 0.0
                        try:
                            displacement_time = displacement_time + elapsed_time_list[i]
                        except Exception:
                            displacement_time = displacement_time + 0.0
                        if j == len(stamp_same_list) - 1 and exit_same_list[j] == 0:
                            # The last run never ends: use the running totals
                            if end_elapsed_time == 0.0:
                                end_elapsed_time = elapsed_time_list[i]
                            end_displacement = end_displacement + df['arclength'][i]
                            if end_elapsed_time > 0.0:
                                speed_list.append(end_displacement / end_elapsed_time)
                            else:
                                speed_list.append(end_displacement / 1.0)
                        else:
                            speed_list.append(None)
                            if i == exit_same_list[j]:
                                # The run ends here: back-fill its average speed
                                avg_speed = displacement / displacement_time
                                for k in range(stamp_same_list[j] - 1, i + 1):
                                    speed_list[k] = avg_speed
                                j = j + 1
                                try:
                                    if elapsed_time_list[j] - end_elapsed_time[j-1] <= 1:
                                        pass
                                    else:
                                        same_flag = 0
                                        displacement = 0.0
                                        displacement_time = 0.0
                                except Exception:
                                    same_flag = 0
                                    displacement = 0.0
                                    displacement_time = 0.0
                else:
                    speed_list.append(None)
            except Exception:
                speed_list.append(None)
    df['elapsed_time'] = elapsed_time_list
    df['speed'] = speed_list  # m/s
    df['speed'] = 3.6 * df['speed']  # km/h
    df = df[columns + ['arclength'] + ['speed']]
    return df
```
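As a point of comparison for the duplicate-timestamp handling, the same situation can be approached by merging rows that share a timestamp before dividing by time. This is a different technique from the state machine above, shown only as a sketch: `merge_duplicate_timestamps` is a hypothetical helper, and the sample values are made up for illustration.

```python
import pandas as pd


def merge_duplicate_timestamps(times, arclengths):
    """Sum the arc lengths of rows sharing a timestamp, then compute speed in m/s."""
    df = pd.DataFrame({
        "time": pd.to_datetime(times, format="%Y-%m-%d %H:%M:%S"),
        "arclength": arclengths,
    })
    # One row per distinct timestamp, in order of first appearance.
    # sum() skips NaN, so a leading NaN arc length becomes 0.0.
    merged = df.groupby("time", sort=False, as_index=False)["arclength"].sum()
    merged["elapsed"] = merged["time"].diff().dt.total_seconds()
    merged["speed"] = merged["arclength"] / merged["elapsed"]  # NaN for the first row
    return merged


times = ["2023-06-01 09:58:41", "2023-06-01 09:58:42",
         "2023-06-01 09:58:42", "2023-06-01 09:58:43"]
arcs = [float("nan"), 2.0, 1.0, 4.0]  # illustrative arc lengths in meters
merged = merge_duplicate_timestamps(times, arcs)
print(merged)
```

Unlike the function above, this returns one row per distinct timestamp rather than one per recorded point, so it trades per-point output for simpler logic.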

Output:

```
                    time        lat  ...  arclength      speed
0    2023-06-01 09:58:22        NaN  ...        NaN        NaN
1    2023-06-01 09:58:41  30.452805  ...        NaN        NaN
2    2023-06-01 09:58:42  30.452813  ...   0.870153   3.309064
3    2023-06-01 09:58:43  30.452819  ...   0.968216   3.904454
4    2023-06-01 09:58:44  30.452825  ...   1.200925   4.775849
5    2023-06-01 09:58:45  30.452829  ...   1.452325   6.533599
6    2023-06-01 09:58:46  30.452831  ...   2.177452   7.392403
7    2023-06-01 09:58:47  30.452827  ...   1.929438   7.792940
8    2023-06-01 09:58:48  30.452818  ...   2.399973   9.492160
9    2023-06-01 09:58:49  30.452806  ...   2.873450  14.872643
10   2023-06-01 09:58:50  30.452782  ...   5.389130  17.373583
11   2023-06-01 09:58:51  30.452761  ...   4.262861  15.114427
12   2023-06-01 09:58:52  30.452741  ...   4.134043  14.777814
13   2023-06-01 09:58:53  30.452725  ...   4.075853  15.040074
14   2023-06-01 09:58:54  30.452717  ...   4.279743  15.715562
15   2023-06-01 09:58:55  30.452710  ...   4.451124  16.370151
16   2023-06-01 09:58:56  30.452704  ...   4.643404  13.346376
17   2023-06-01 09:58:57  30.452700  ...   2.771250   9.955965
18   2023-06-01 09:58:58  30.452696  ...   2.759842  13.890313
19   2023-06-01 09:58:59  30.452688  ...   4.956999  17.185842
20   2023-06-01 09:59:00  30.452680  ...   4.590692  17.396119
21   2023-06-01 09:59:01  30.452672  ...   5.073819  18.063651
22   2023-06-01 09:59:02  30.452665  ...   4.961543  17.782722
23   2023-06-01 09:59:03  30.452658  ...   4.917747  17.655921
24   2023-06-01 09:59:04  30.452651  ...   4.891098  17.607951
25   2023-06-01 09:59:05  30.452643  ...   4.891098  17.420183
26   2023-06-01 09:59:06  30.452638  ...   4.786782  17.164355
27   2023-06-01 09:59:07  30.452636  ...   4.748971  17.231882
28   2023-06-01 09:59:08  30.452635  ...   4.824296  17.407732
29   2023-06-01 09:59:09  30.452631  ...   4.846666  17.447997
..                   ...        ...  ...        ...        ...
155  2023-06-01 10:01:15  30.451680  ...   1.308677   7.122322
156  2023-06-01 10:01:16  30.451684  ...   2.648168  14.741737
157  2023-06-01 10:01:17  30.451687  ...   5.541685  17.552431
158  2023-06-01 10:01:18  30.451686  ...   4.209665  14.294663
159  2023-06-01 10:01:19  30.451682  ...   3.731814  12.683910
160  2023-06-01 10:01:20  30.451673  ...   3.314802  11.483126
161  2023-06-01 10:01:21  30.451659  ...   3.064712  10.890838
162  2023-06-01 10:01:22  30.451639  ...   2.985753   8.692529
163  2023-06-01 10:01:23  30.451624  ...   1.843429  11.085891
164  2023-06-01 10:01:24  30.451587  ...   4.315399  13.704922
165  2023-06-01 10:01:25  30.451558  ...   3.298446  11.427151
166  2023-06-01 10:01:26  30.451530  ...   3.049971   8.631806
167  2023-06-01 10:01:27  30.451514  ...   1.745477  10.830216
168  2023-06-01 10:01:28  30.451476  ...   4.271310  13.934782
169  2023-06-01 10:01:29  30.451445  ...   3.470236  12.391505
170  2023-06-01 10:01:30  30.451414  ...   3.413934  12.368084
171  2023-06-01 10:01:31  30.451383  ...   3.457224  12.377582
172  2023-06-01 10:01:32  30.451353  ...   3.419210  11.702734
173  2023-06-01 10:01:33  30.451326  ...   3.082309  10.101783
174  2023-06-01 10:01:34  30.451305  ...   2.529793   2.130881
175  2023-06-01 10:01:54  30.451227  ...   9.900348   2.152631
176  2023-06-01 10:01:55  30.451204  ...   2.656664   9.511795
177  2023-06-01 10:01:56  30.451181  ...   2.627666   9.192733
178  2023-06-01 10:01:57  30.451159  ...   2.479408   3.373405
179  2023-06-01 10:02:13  30.451038  ...  13.450560   2.933653
180  2023-06-01 10:02:14  30.451035  ...   0.402802   1.450089
181  2023-06-01 10:02:15  30.451031  ...   0.402802   2.195135
182  2023-06-01 10:02:16  30.451024  ...   0.816717   3.477648
183  2023-06-01 10:02:17  30.451015  ...   1.115310  11.646074
184  2023-06-01 10:02:18  30.450968  ...   5.354732  19.277034

[185 rows x 6 columns]
```

Complete code

```python
# -*- coding: utf-8 -*-
"""
Created on Tue Jun 6 13:58:42 2023

@author: ZHOU
"""

import numpy as np
import pandas as pd
import time

# Extension fields to extract from each track point
keywords_list = ["heartrate", "cadence", "power", "distance"]


def read_gpx(gpx):
    """Parse a GPX file, given as a list of text lines, into a dict of lists."""
    first_time = 0
    first_str_time = ""
    trkpt_flag = 0  # 1 while inside a <trkpt> ... </trkpt> block
    j = 0           # index of the current track point
    # Index 0 is a header slot; it only receives the first timestamp in the file
    num_list = [0]
    time_list = [None]
    ele_list = [None]
    lat_list = [None]
    lon_list = [None]
    key_list = []
    gpx_dict = {}
    key_dict = {}
    for i in keywords_list:
        key_list.append([None])

    # Find the first <time> tag anywhere in the file
    for i in range(len(gpx)):
        try:
            ti = str((gpx[i].split("<time>")[1]).split("</time>")[0])
            now_time = time.mktime(time.strptime(ti, "%Y-%m-%dT%H:%M:%SZ"))
            first_time = now_time
            first_str_time = time.strftime("%Y-%m-%d %H:%M:%S", time.localtime(first_time))
            break
        except (IndexError, ValueError):
            pass
    time_list[0] = first_str_time

    for i in range(len(gpx)):
        if gpx[i].count('<trkpt'):
            # A new track point starts: append the placeholder j to every list.
            # A field that never appears in this point keeps the placeholder.
            trkpt_flag = 1
            j = j + 1
            num_list.append(j)
            time_list.append(j)
            ele_list.append(j)
            lat_list.append(j)
            lon_list.append(j)
            for k in range(len(keywords_list)):
                key_list[k].append(j)
        if trkpt_flag == 1:
            try:
                ti = str((gpx[i].split("<time>")[1]).split("</time>")[0])
                now_time = time.mktime(time.strptime(ti, "%Y-%m-%dT%H:%M:%SZ"))
                now_str_time = time.strftime("%Y-%m-%d %H:%M:%S", time.localtime(now_time))
                time_list[j] = now_str_time
            except (IndexError, ValueError):
                pass
            if gpx[i].count('<trkpt'):
                # Assumes lat is the first quoted attribute and lon the second
                lat_list[j] = float(gpx[i].split('"')[1])
                lon_list[j] = float(gpx[i].split('"')[3])
            try:
                ele_list[j] = float(str((gpx[i].split("<ele>")[1]).split("</ele>")[0]))
            except (IndexError, ValueError):
                pass
            for k in range(len(keywords_list)):
                try:
                    dat = str((gpx[i].split("<" + keywords_list[k] + ">")[1]).split("</" + keywords_list[k] + ">")[0])
                    key_list[k][j] = float(dat)
                except (IndexError, ValueError):
                    pass
            if gpx[i].count('</trkpt>'):
                trkpt_flag = 0
        if gpx[i].count('</trkseg>'):
            break  # only the first track segment is read

    gpx_dict["num"] = num_list
    gpx_dict["time"] = time_list
    gpx_dict["lat"] = lat_list
    gpx_dict["lon"] = lon_list
    gpx_dict["ele"] = ele_list
    for k in range(len(keywords_list)):
        key_dict[keywords_list[k]] = key_list[k]
    gpx_dict["key"] = key_dict
    return gpx_dict


def calculate_speed(time_list, lat_list, lon_list, ele_list):
    """Return a DataFrame with the arc length (m) and speed (km/h) of each point."""
    # All four lists must have the same length
    if len(time_list) == len(lat_list) and len(time_list) == len(lon_list) and len(time_list) == len(ele_list):
        pass
    else:
        return
    df = pd.DataFrame({'time': time_list, 'lat': lat_list, 'lon': lon_list, 'ele': ele_list})
    columns = df.columns.tolist()
    df['r'] = 6371393 + df['ele']  # Earth radius plus elevation, in meters
    df['theta'] = np.deg2rad(df['lon'])  # longitude in radians
    df['phi'] = np.deg2rad(df['lat'])    # latitude in radians
    df['theta2'] = df['theta'].shift()   # previous point
    df['phi2'] = df['phi'].shift()
    # Great-circle arc length from the previous point to the current one
    df['arclength'] = df['r'] * np.arccos(np.cos(df['phi']) * np.cos(df['phi2']) * np.cos(df['theta2'] - df['theta']) + np.sin(df['phi']) * np.sin(df['phi2']))
    elapsed_time_list = []
    stamp_list = []
    speed_list = []
    stamp_same_list = []  # indices where a run of repeated timestamps starts
    same_flag = 0
    enable_flag = 0
    exit_same_list = []   # indices where a run of repeated timestamps ends
    # First pass: elapsed seconds between consecutive points
    for i in range(len(time_list)):
        try:
            stamp_list.append(time.mktime(time.strptime(time_list[i], "%Y-%m-%d %H:%M:%S")))
            enable_flag = 1
            if i > 0 and enable_flag == 1:
                if stamp_list[i] > stamp_list[i-1]:
                    elapsed_time_list.append(stamp_list[i] - stamp_list[i-1])
                    if same_flag == 1:
                        same_flag = 0
                        exit_same_list.append(i)
                else:
                    if same_flag == 0:
                        stamp_same_list.append(i)
                        same_flag = 1
                    elapsed_time_list.append(0.0)
            else:
                elapsed_time_list.append(None)
        except Exception:
            # Unparseable timestamp: treat it like a repeated timestamp
            if enable_flag == 0:
                elapsed_time_list.append(None)
            else:
                if same_flag == 0:
                    stamp_same_list.append(i)
                    same_flag = 1
                elapsed_time_list.append(0.0)
    # A run that is still open at the end of the track gets the end marker 0
    if len(stamp_same_list) > len(exit_same_list):
        exit_same_list.append(0)
    j = 0
    displacement = 0.0
    displacement_time = 0.0
    end_displacement = 0.0
    same_flag = 0
    end_elapsed_time = 0.0
    stamp_same_in_1st_flag = 0
    if len(stamp_same_list) == 0:
        # No repeated timestamps: two-segment speed for every point
        for i in range(len(elapsed_time_list)):
            try:
                try:
                    speed_list.append((df['arclength'][i] + df['arclength'][i+1]) / (elapsed_time_list[i] + elapsed_time_list[i+1]))  # m/s
                except Exception:
                    speed_list.append(df['arclength'][i] / elapsed_time_list[i])  # m/s
            except Exception:
                speed_list.append(None)
    else:
        # Repeated timestamps: average the speed over each run
        for i in range(len(elapsed_time_list)):
            try:
                if i > 0:
                    try:
                        if i == stamp_same_list[j] - 1:
                            same_flag = 1
                        if stamp_same_list[0] == 1 and stamp_same_in_1st_flag == 0:
                            stamp_same_in_1st_flag = 1
                            same_flag = 1
                    except Exception:
                        pass
                    if same_flag == 0:
                        try:
                            speed_list.append((df['arclength'][i] + df['arclength'][i+1]) / (elapsed_time_list[i] + elapsed_time_list[i+1]))  # m/s
                        except Exception:
                            speed_list.append(df['arclength'][i] / elapsed_time_list[i])  # m/s
                    else:
                        # Inside a run: accumulate displacement and time
                        try:
                            displacement = displacement + df['arclength'][i]
                        except Exception:
                            displacement = displacement + 0.0
                        try:
                            displacement_time = displacement_time + elapsed_time_list[i]
                        except Exception:
                            displacement_time = displacement_time + 0.0
                        if j == len(stamp_same_list) - 1 and exit_same_list[j] == 0:
                            # The last run never ends: use the running totals
                            if end_elapsed_time == 0.0:
                                end_elapsed_time = elapsed_time_list[i]
                            end_displacement = end_displacement + df['arclength'][i]
                            if end_elapsed_time > 0.0:
                                speed_list.append(end_displacement / end_elapsed_time)
                            else:
                                speed_list.append(end_displacement / 1.0)
                        else:
                            speed_list.append(None)
                            if i == exit_same_list[j]:
                                # The run ends here: back-fill its average speed
                                avg_speed = displacement / displacement_time
                                for k in range(stamp_same_list[j] - 1, i + 1):
                                    speed_list[k] = avg_speed
                                j = j + 1
                                try:
                                    if elapsed_time_list[j] - end_elapsed_time[j-1] <= 1:
                                        pass
                                    else:
                                        same_flag = 0
                                        displacement = 0.0
                                        displacement_time = 0.0
                                except Exception:
                                    same_flag = 0
                                    displacement = 0.0
                                    displacement_time = 0.0
                else:
                    speed_list.append(None)
            except Exception:
                speed_list.append(None)
    df['elapsed_time'] = elapsed_time_list
    df['speed'] = speed_list  # m/s
    df['speed'] = 3.6 * df['speed']  # km/h
    df = df[columns + ['arclength'] + ['speed']]
    return df


if __name__ == '__main__':
    # Quick check with a small hand-written track (result is not printed)
    calculate_speed(
        ['2017-07-08 22:05:45', '2017-07-08 22:05:46', '2017-07-08 22:05:47',
         '2017-07-08 22:05:48', '2017-07-08 22:05:49', '2017-07-08 22:05:50'],
        [-33.81612, -33.81615, -33.816117, -33.816082, -33.816043, -33.816001],
        [150.871031, 150.871035, 150.87104399999998, 150.87105400000002, 150.871069, 150.871080],
        [74.0, 73.0, 74.0, 75.0, 73.0, 75.0])

    # Parse a GPX file and compute the speed of every track point
    path = "./2.gpx"
    with open(path, 'r', encoding="utf-8") as f:
        gpx = f.readlines()
    gpx = read_gpx(gpx)
    print(gpx)
    df = calculate_speed(gpx["time"], gpx["lat"], gpx["lon"], gpx["ele"])
    print(df)
```