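Contents

- Preface

- 1. YOLOv8 environment setup

- 2. Testing

- Training, evaluating, and exporting a model

- Testing detection results

- Testing human pose estimation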

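Preface

New YOLO versions keep appearing in quick succession, and YOLOv5 has already been followed by YOLOv8. To keep up with new techniques and get a feel for current algorithms, let's test how YOLOv8 performs on different tasks, such as pose detection.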

1. YOLOv8 environment setup

1.1 Source code: git

1.2 Install the required packages:

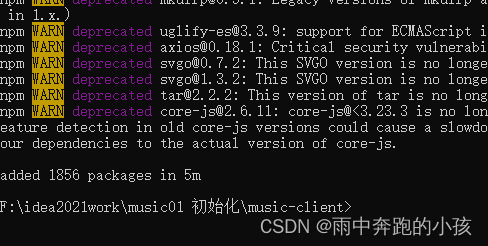

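Installation note: YOLOv5 is compatible with Python 3.7 and the corresponding NumPy version, whereas YOLOv8 requires Python 3.8 or later.

Otherwise you will run into this error:

- TypeError: concatenate() got an unexpected keyword argument 'dtype' #2029

- Fix: create a new conda environment with python=3.8 and run pip install ultralytics directly.

- After following the steps above, I hit another bug:

F.conv2d(input, weight, bias, self.stride,

RuntimeError: GET was unable to find an engine to execute this computation

- For a fix, see: Issue

In addition, the firewall in mainland China may block the download of some packages, especially the large PyTorch-related files. In that case, consider installing the packages from a domestic mirror.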
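The version requirement above can be checked before installing anything. The following is a minimal sketch, not part of the original setup: the names `MIN_NUMPY`, `version_tuple`, and `environment_problems` are made up for illustration, and the NumPy threshold of 1.20 is my assumption about when `concatenate()` gained its `dtype` argument, so treat it as a hint rather than a definitive rule.

```python
import re
import sys

MIN_PYTHON = (3, 8)   # from the installation note above
MIN_NUMPY = (1, 20)   # assumption: first NumPy release whose concatenate() accepts dtype


def version_tuple(text):
    """Turn a version string such as '1.24.3' into (1, 24)."""
    match = re.match(r"(\d+)\.(\d+)", text)
    if match is None:
        raise ValueError(f"unrecognised version string: {text!r}")
    return int(match.group(1)), int(match.group(2))


def environment_problems(python_version, numpy_version=None):
    """Return a list of human-readable problems; an empty list means none were found."""
    problems = []
    if tuple(python_version[:2]) < MIN_PYTHON:
        problems.append("Python is older than 3.8")
    if numpy_version is not None and version_tuple(numpy_version) < MIN_NUMPY:
        problems.append("NumPy is older than 1.20")
    return problems


if __name__ == "__main__":
    try:
        import numpy
        installed_numpy = numpy.__version__
    except ImportError:
        installed_numpy = None  # NumPy not installed yet; only Python is checked
    found = environment_problems(sys.version_info, installed_numpy)
    print(found if found else "environment looks OK")
```

If the script reports a problem, creating a fresh conda environment as described above is likely simpler than upgrading packages in place.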

2. Testing

Training, evaluating, and exporting a model

from ultralytics import YOLO

# Create a new YOLO model from scratch
model = YOLO('yolov8n.yaml')

# Load a pretrained YOLO model (recommended for training)
model = YOLO('yolov8n.pt')

# Train the model using the 'coco128.yaml' dataset for 3 epochs
results = model.train(data='coco128.yaml', epochs=3)

# Evaluate the model's performance on the validation set
results = model.val()

# Perform object detection on an image using the model
results = model('https://ultralytics.com/images/bus.jpg')

# Export the model to ONNX format
success = model.export(format='onnx')

Training, evaluation, and export to an ONNX model file all complete successfully.

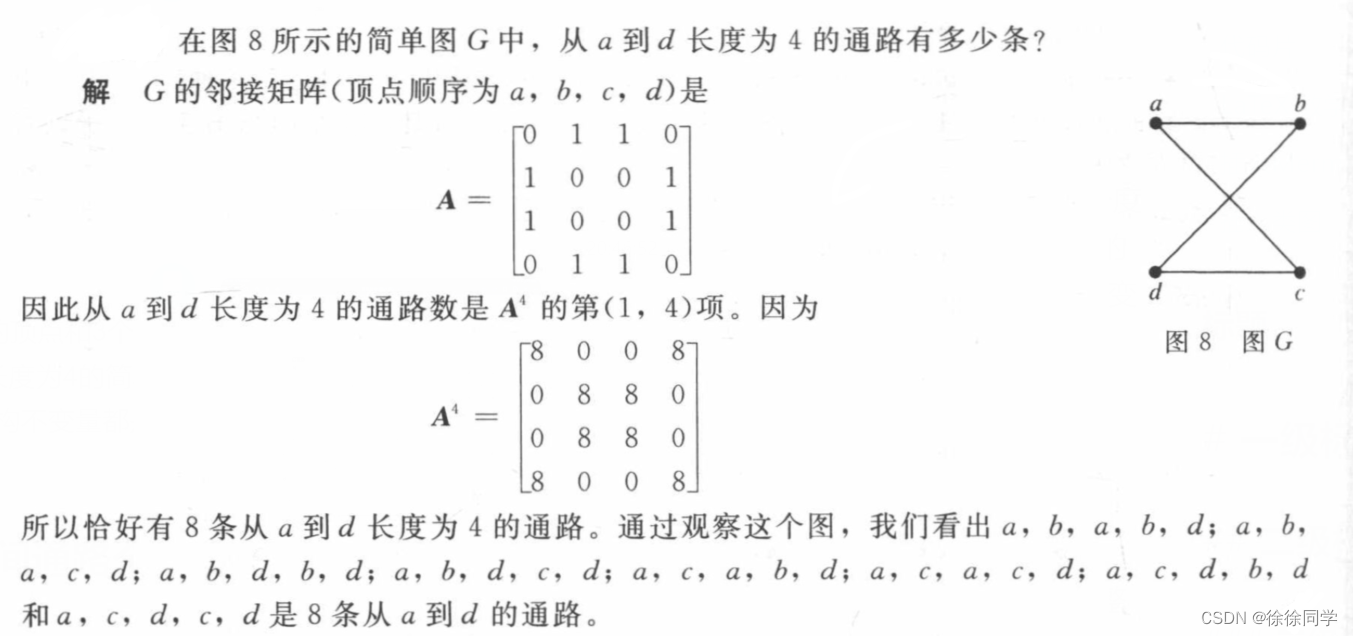

Testing detection results

Test the model trained above on a photo:

from ultralytics import YOLO
from PIL import Image
import cv2

model = YOLO("model.pt")

# accepts all formats - image/dir/Path/URL/video/PIL/ndarray. 0 for webcam
results = model.predict(source="0")
results = model.predict(source="folder", show=True)  # Display preds. Accepts all YOLO predict arguments

# from PIL
im1 = Image.open("bus.jpg")
results = model.predict(source=im1, save=True)  # save plotted images

# from ndarray
im2 = cv2.imread("bus.jpg")
results = model.predict(source=im2, save=True, save_txt=True)  # save predictions as labels

# from list of PIL/ndarray
results = model.predict(source=[im1, im2])

The results look like this:

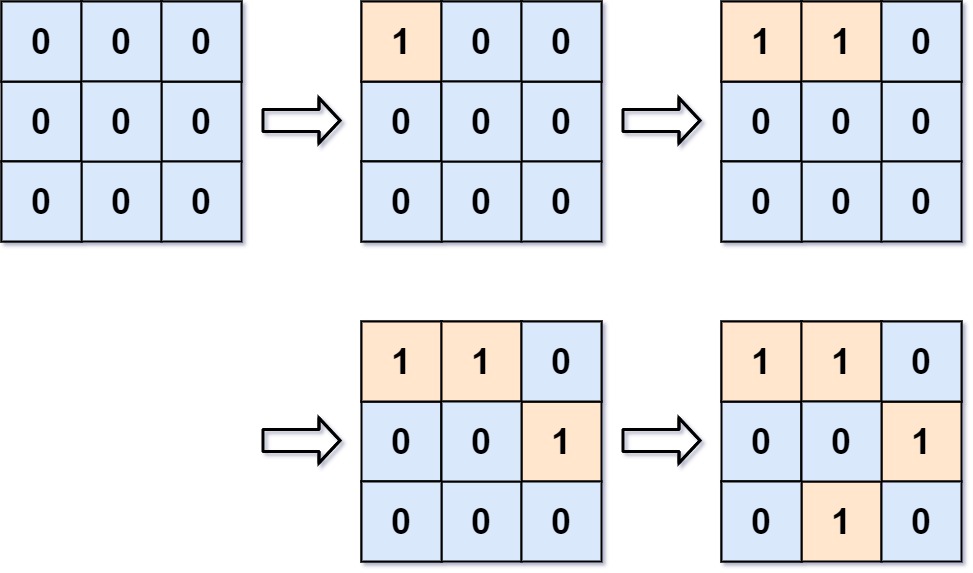
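Besides the plotted image, the predictions can also be inspected as numbers. The sketch below counts detections per class. `summarize_detections`, `summarize_result`, and `min_conf` are hypothetical names introduced here, and the adapter assumes each result exposes `boxes.cls`, `boxes.conf`, and `names`, which is how I understand the ultralytics results object; check this against your installed version. The example values are stand-ins, not real model output.

```python
from collections import Counter


def summarize_detections(class_ids, confidences, names, min_conf=0.25):
    """Count detections per class name, keeping those at or above min_conf."""
    counts = Counter()
    for class_id, conf in zip(class_ids, confidences):
        if conf >= min_conf:
            counts[names[int(class_id)]] += 1
    return dict(counts)


def summarize_result(result, min_conf=0.25):
    """Adapter for one prediction result.

    Assumes result.boxes.cls and result.boxes.conf are tensors and
    result.names maps class ids to class names.
    """
    boxes = result.boxes
    return summarize_detections(
        boxes.cls.tolist(), boxes.conf.tolist(), result.names, min_conf
    )


# Stand-in values for illustration only (not real model output).
example = summarize_detections(
    class_ids=[0, 0, 5, 0],
    confidences=[0.91, 0.88, 0.80, 0.10],
    names={0: "person", 5: "bus"},
)
print(example)  # {'person': 2, 'bus': 1}
```

With a real model, something like `summarize_result(results[0])` after a `model.predict(...)` call should give the same kind of dictionary.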

Testing human pose estimation

Open the webcam and detect the pose of the people in view:

from ultralytics import YOLO
import cv2

# Load a model
model = YOLO('yolov8n-pose.pt')  # load an official model
# model = YOLO('path/to/best.pt')  # load a custom trained model

video_path = 0  # 0 selects the default webcam
cap = cv2.VideoCapture(video_path)

while cap.isOpened():
    success, frame = cap.read()
    if not success:  # stop when no frame could be read
        break
    results = model(frame, imgsz=256)
    annotated_frame = results[0].plot()  # draw boxes and keypoints on the frame
    # tojson() returns the detections as a JSON string; it does not write a file
    print(results[0].tojson())
    cv2.imshow("YOLOv8 pose inference", annotated_frame)
    if cv2.waitKey(1) & 0xFF == ord("q"):  # press q to quit
        break

cap.release()
cv2.destroyAllWindows()

This is the result of a live test with the local webcam; it runs well in real time.
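The JSON printed for each frame can be post-processed without touching the model. The sketch below keeps only the keypoints that are likely visible. It assumes each detection in the JSON carries a `keypoints` object with `x`, `y`, and `visible` lists, which matches the output I have seen from `tojson()` but may differ between ultralytics versions. `visible_keypoints`, `min_visibility`, and the sample data are hypothetical and only there for illustration.

```python
import json


def visible_keypoints(json_text, min_visibility=0.5):
    """Return, per detection, the (index, x, y) keypoints with visibility >= min_visibility."""
    people = []
    for detection in json.loads(json_text):
        kpts = detection.get("keypoints")
        if not kpts:
            continue  # detection without keypoints, e.g. from a non-pose model
        points = [
            (index, x, y)
            for index, (x, y, v) in enumerate(
                zip(kpts["x"], kpts["y"], kpts["visible"])
            )
            if v >= min_visibility
        ]
        people.append(points)
    return people


# Hand-written sample in the assumed format (not real model output).
sample = json.dumps([
    {
        "name": "person",
        "class": 0,
        "confidence": 0.9,
        "keypoints": {
            "x": [100.0, 120.0, 0.0],
            "y": [50.0, 55.0, 0.0],
            "visible": [0.98, 0.75, 0.02],
        },
    }
])
print(visible_keypoints(sample))  # [[(0, 100.0, 50.0), (1, 120.0, 55.0)]]
```

Inside the webcam loop, the same function could be applied to the string returned by `results[0].tojson()` instead of the hand-written sample.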