LVS and nginx tests

Experiment: DR mode

| Server | IP | Notes |
|---|---|---|
| mysql | 192.168.137.178 | Test server (client) |
| lvs | **VIP** 192.168.137.99, DIP 192.168.137.100 | LVS server |
| nginx1 | RIP 192.168.137.101 | |
| nginx2 | RIP 192.168.137.102 | |

LVS layer-4 proxy

test ---- lvs VIP ---- nginx1/nginx2

Configuration on the LVS server

    [root@lvs openresty-1.17.8.2]# ipvsadm -ln
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  192.168.137.99:80 rr
      -> 192.168.137.101:80           Route   1      0          4994
      -> 192.168.137.102:80           Route   1      0          5030
    [root@lvs openresty-1.17.8.2]# ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: enp0s3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
        link/ether 08:00:27:9a:2c:4d brd ff:ff:ff:ff:ff:ff
        inet 192.168.137.100/24 brd 192.168.137.255 scope global noprefixroute enp0s3
           valid_lft forever preferred_lft forever
        inet 192.168.137.99/24 brd 192.168.137.255 scope global secondary noprefixroute enp0s3:0
           valid_lft forever preferred_lft forever
        inet6 fe80::9740:e62:24b0:b04d/64 scope link noprefixroute
           valid_lft forever preferred_lft forever
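The virtual service listed by `ipvsadm -ln` could be created from rules like the ones below. This is a minimal sketch, not the commands actually used in this test: `lvs_dr_rules` is a hypothetical helper that prints rules in the format `ipvsadm -S -n` emits, where `-s rr` selects round-robin and `-g` selects direct routing (shown as `Route` in the listing).

```shell
# lvs_dr_rules VIP PORT RIP...
# Hypothetical helper: prints ipvsadm rules for one round-robin DR service.
lvs_dr_rules() {
  vip=$1; port=$2; shift 2
  # -A adds the virtual service, -t marks it as TCP, -s rr picks round-robin
  echo "-A -t $vip:$port -s rr"
  for rip in "$@"; do
    # -a adds a real server, -g means direct routing (DR), -w sets the weight
    echo "-a -t $vip:$port -r $rip:$port -g -w 1"
  done
}

lvs_dr_rules 192.168.137.99 80 192.168.137.101 192.168.137.102
```

To load the rules, the output can be piped into `ipvsadm -R` as root. The VIP itself still has to be bound on the director, as the `ip a` output shows.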

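Test

Run the test from the test server. The requests were distributed evenly across the two RIPs.

    for i in {1..3000}; do curl http://192.168.137.99; done

Checking the logs on the nginx servers shows that the remote IP recorded there is the client's IP.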
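One way to check both observations from the backend side is to tally the first field of each access-log line. This is a minimal sketch, assuming the backends log in nginx's default `combined` format (which starts with `$remote_addr`). `count_remote_addrs` is a hypothetical helper, and the two log lines in the demo are made up for illustration.

```shell
# count_remote_addrs: reads access-log lines on stdin and prints
# "<address> <request count>" for each distinct first field, sorted by address.
count_remote_addrs() {
  awk 'NF { hits[$1]++ } END { for (addr in hits) print addr, hits[addr] }' | sort
}

# Self-contained demo with two made-up log lines:
printf '%s\n' \
  '192.168.137.178 - - [01/Jan/2021:00:00:00 +0800] "GET / HTTP/1.1" 200 612 "-" "curl/7.29.0"' \
  '192.168.137.178 - - [01/Jan/2021:00:00:01 +0800] "GET / HTTP/1.1" 200 612 "-" "curl/7.29.0"' |
  count_remote_addrs
```

On a real backend the helper would read the access log instead, for example `count_remote_addrs < access.log` (the path depends on the installation). Comparing the totals on nginx1 and nginx2 shows how the requests were split, and the address column shows what each backend saw as the remote IP.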

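Conclusions

1. With the LVS rr scheduler and without the -p (persistence) option, requests are distributed evenly across the two servers.
2. In LVS DR mode, the remote_ip seen by the backend nginx is not the IP of the LVS server. It is the IP of the client itself.
3. The backend replies to the client directly. The reply does not pass through the LVS server, and there is no connection between the backend and the LVS server.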

nginx layer-7 proxy

test ---- lvs nginx:8080 ---- nginx1/nginx2

nginx configuration on the LVS server

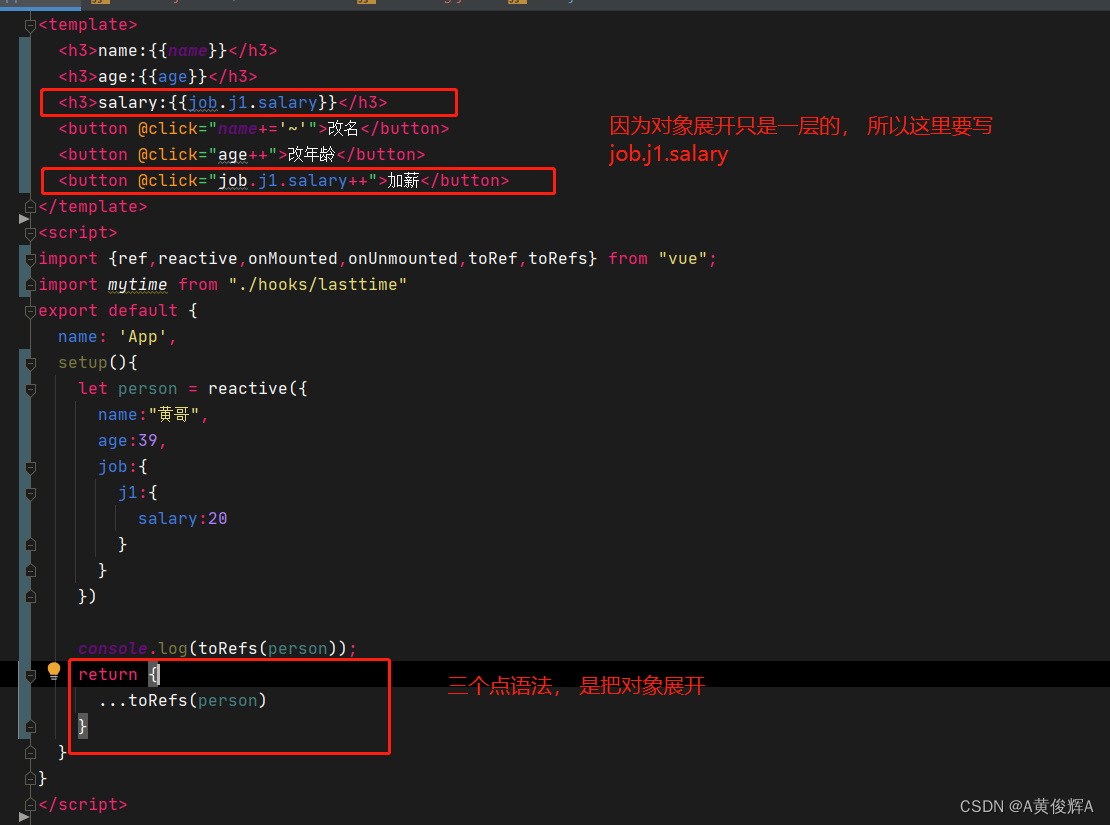

    http {
        include mime.types;
        default_type application/octet-stream;
        sendfile on;
        keepalive_timeout 65;
        upstream nginx {
            server 192.168.137.101 weight=100 max_fails=2 fail_timeout=30s;
            server 192.168.137.102 weight=100 max_fails=2 fail_timeout=30s;
        }
        server {
            listen 8080;
            server_name localhost;
            ## Have the proxy set request headers so the original client IP is passed on
            proxy_set_header Host $http_host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header REMOTE-HOST $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            location / {
                proxy_pass http://nginx;
            }
            error_page 500 502 503 504 /50x.html;
            location = /50x.html {
                root html;
            }
        }
    }

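Backend nginx configuration

    # nginx1: realip module configured, so the real client IP is logged
    server {
        listen 80;
        server_name localhost;
        set_real_ip_from 192.168.137.100;  # requests from this address come from the proxy; it is not the user's real IP
        real_ip_header X-Forwarded-For;    # the user's real IP is carried in the X-Forwarded-For request header
        real_ip_recursive on;
        location / {
            root html;
            index index.html index.htm;
        }
    }

    # nginx2: realip module not configured (for comparison), so remote_addr stays the proxy's address
    server {
        listen 80;
        server_name localhost;
        location / {
            root html;
            index index.html index.htm;
        }
    }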

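Test

Run the test from the test server:

    for i in {1..3000}; do curl http://192.168.137.99:8080/; done
    for i in {1..3000}; do curl http://192.168.137.100:8080/; done

Observe remote_ip and http_x_forwarded_for in the logs.

Observe the connections established on the two backend nginx servers.

Observe the connections on the test server.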
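For the two connection checks, a small filter over `ss -tn` output can summarize which peers each machine is talking to. This is a sketch assuming IPv4 addresses and the usual `ss -tn` column order (State, Recv-Q, Send-Q, Local Address:Port, Peer Address:Port). `count_peers` is a hypothetical helper.

```shell
# count_peers: reads `ss -tn` output on stdin and prints
# "<peer address> <state> <connection count>", sorted.
count_peers() {
  awk 'NF >= 5 && $1 != "State" {
         split($5, peer, ":")        # peer[1] is the IPv4 address without the port
         n[peer[1] " " $1]++
       }
       END { for (key in n) print key, n[key] }' | sort
}

# Typical use on a backend or on the test server:
#   ss -tn | count_peers
```

Because each `curl` call opens a short-lived connection, many entries are likely to be in `TIME-WAIT` rather than `ESTAB`, which is why the state is kept in the output.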

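Conclusions

1. Requests through the nginx layer-7 proxy were distributed evenly across the two machines.
2. The user talks to the nginx proxy, and the backend actually communicates with the proxy. Traffic comes in through the proxy and goes back out through the proxy, unlike LVS DR mode.
3. The nginx layer-7 proxy can pass the client IP through with X-Forwarded-For (XFF). The proxy appends the address it received the request from to that header, and the backend logs it as http_x_forwarded_for. The realip module can pick the client IP out of the header so that it is logged as remote_ip.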
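The selection that `set_real_ip_from` and `real_ip_recursive on` perform on nginx1 can be sketched as follows. This is a simplified illustration rather than nginx's implementation: `resolve_client_ip` and `is_trusted` are hypothetical helpers, and trusted addresses are matched exactly, whereas nginx also accepts CIDR ranges.

```shell
# is_trusted ADDR TRUSTED...: succeeds if ADDR equals one of the trusted addresses.
is_trusted() {
  addr=$1; shift
  for t in "$@"; do
    [ "$addr" = "$t" ] && return 0
  done
  return 1
}

# resolve_client_ip REMOTE_ADDR XFF TRUSTED...
# Prints the address a backend would log with real_ip_recursive on.
resolve_client_ip() {
  remote_addr=$1; xff=$2; shift 2
  # The header is only honoured when the direct peer is a trusted proxy.
  is_trusted "$remote_addr" "$@" || { echo "$remote_addr"; return; }
  result=$remote_addr
  # Split the header on commas and reverse it, so the walk goes right to left.
  reversed=$(printf '%s\n' "$xff" | tr -d ' ' | tr ',' '\n' |
    awk 'NF { hop[++n] = $0 } END { for (i = n; i >= 1; i--) print hop[i] }')
  for hop in $reversed; do
    result=$hop
    # Stop at the first address that is not a trusted proxy.
    is_trusted "$hop" "$@" || break
  done
  echo "$result"
}

# The setup in this test: the proxy 192.168.137.100 forwards for client 192.168.137.178.
resolve_client_ip 192.168.137.100 '192.168.137.178' 192.168.137.100
```

On nginx2, which has no `set_real_ip_from`, the first check fails for every request, so the logged address stays the proxy's.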

nginx layer-4 proxy

test ---- lvs nginx:8081 ---- nginx1/nginx2

nginx configuration on the LVS server

    stream {
        upstream web {
            server 192.168.137.101:80 weight=100 max_fails=2 fail_timeout=30s;
            server 192.168.137.102:80 weight=100 max_fails=2 fail_timeout=30s;
        }
        server {
            listen 8081;
            proxy_pass web;
        }
    }

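Test

Observe the backend nginx logs.

Observe the socket connections on the backend servers.

Conclusions

1. With this configuration, the nginx layer-4 proxy does not pass the client IP through: the backend sees the proxy's address. Carrying the client IP would need something extra, such as the PROXY protocol (`proxy_protocol on;` in the stream server, with the backend configured to accept it), which was not tested here.
2. As with layer 7, traffic goes back out through the proxy, similar to LVS NAT mode.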

keepalived layer 4

test ---- nginx1 (keepalived VIP, DR) ---- nginx1/nginx2

This time keepalived is deployed on the nginx1 and nginx2 servers, with nginx1 as the keepalived master.

keepalived master configuration

    global_defs {
        router_id nginx1
    }
    vrrp_instance VI_1 {
        state MASTER
        interface enp0s3
        virtual_router_id 51
        priority 100
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.137.103
        }
    }
    virtual_server 192.168.137.103 80 {
        delay_loop 3
        lb_algo rr
        lb_kind DR
        ###persistence_timeout 0
        protocol TCP
        real_server 192.168.137.101 80 {
            weight 1
            TCP_CHECK {
                connect_timeout 3
                retry 3
                delay_before_retry 3
            }
        }
        real_server 192.168.137.102 80 {
            weight 1
            TCP_CHECK {
                connect_timeout 3
                retry 3
                delay_before_retry 3
            }
        }
    }

keepalived backup configuration

    ## keepalived configuration
    global_defs {
        router_id nginx2
    }
    vrrp_instance VI_1 {
        state BACKUP
        interface enp0s3
        virtual_router_id 51
        priority 50
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.137.103
        }
    }
    virtual_server 192.168.137.103 80 {
        delay_loop 3
        lb_algo rr
        lb_kind DR
        ###persistence_timeout 0
        protocol TCP
        # The forwarding entry to nginx1 must be commented out here, otherwise forwarding loops forever
        # real_server 192.168.137.101 80 {
        #     weight 1
        #     TCP_CHECK {
        #         connect_timeout 3
        #         retry 3
        #         delay_before_retry 3
        #     }
        # }
        real_server 192.168.137.102 80 {
            weight 1
            TCP_CHECK {
                connect_timeout 3
                retry 3
                delay_before_retry 3
            }
        }
    }

    # Route and IP configuration
    ip addr add 192.168.137.103/32 dev lo label lo:2
    route add -host 192.168.137.103 dev lo:2

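Conclusions

1. The backend real servers need the VIP suppressed (so that they do not answer ARP for it) and a host route to it configured.
2. The forwarding rules on the backup must not forward to the master, otherwise forwarding loops forever. The rules on the backup therefore point only at the backup itself.
3. DR mode replies to the client directly. The reply does not pass back through the proxy server.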
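For conclusion 1, DR real servers are commonly given the `arp_ignore` and `arp_announce` kernel parameters alongside the loopback VIP and host route. The source does not list the values it used, so the ones below (1 and 2) are the conventional choice and an assumption, and `dr_realserver_sysctl` is a hypothetical helper that only prints the settings.

```shell
# dr_realserver_sysctl [IFACE]
# Prints sysctl settings that keep a DR real server from answering ARP for a
# VIP bound to IFACE (default: lo). The values are the conventional ones, assumed here.
dr_realserver_sysctl() {
  iface=${1:-lo}
  for scope in all "$iface"; do
    # arp_ignore=1: reply only if the target IP is configured on the receiving interface
    echo "net.ipv4.conf.$scope.arp_ignore = 1"
    # arp_announce=2: always use the best local source address in ARP requests
    echo "net.ipv4.conf.$scope.arp_announce = 2"
  done
}

dr_realserver_sysctl lo
```

The output could be written to a file under `/etc/sysctl.d/` and loaded with `sysctl --system` as root on each real server.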