1. Preparation

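Prepare five CentOS 7 virtual machines, each with 2 CPU cores, 2 GB of RAM and a 20 GB disk. At least 2 cores are mandatory, otherwise kubeadm's preflight check fails. The machines are allocated as shown in the table below. 10.10.10.200 is not a separate machine but the virtual IP (VIP) that Keepalived moves between the masters.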

| IP | Hostname | Role |
|---|---|---|
| 10.10.10.11 | k8s-master11 | master |
| 10.10.10.12 | k8s-master12 | master |
| 10.10.10.13 | k8s-master13 | master |
| 10.10.10.21 | k8s-node01 | node |
| 10.10.10.22 | k8s-node02 | node |
| 10.10.10.200 | k8s-master-lb | load balancer (VIP) |

1.1 Basic configuration

Configure /etc/hosts on all five machines:

vim /etc/hosts

10.10.10.11 jx-nginx-11 k8s-master11

10.10.10.12 jx-nginx-12 k8s-master12

10.10.10.13 jx-nginx-13 k8s-master13

10.10.10.21 jx-nginx-21 k8s-node01

10.10.10.22 jx-nginx-22 k8s-node02

10.10.10.200 k8s-master-lb
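Editing /etc/hosts by hand on five machines invites typos and duplicate lines. Below is a minimal sketch that assumes bash. The helper `add_host_entry` is hypothetical and not part of any package. It appends a line only when the IP is not already the first field of an existing line, so it can be re-run safely on every node.

```shell
#!/usr/bin/env bash
# add_host_entry FILE IP NAME...   (hypothetical helper)
# Appends "IP NAME..." to FILE unless some line already has IP as its first field,
# so running it twice, or on an already configured node, changes nothing.
add_host_entry() {
    local file=$1 ip=$2
    shift 2
    # awk exits 0 when a line's first field equals the IP, 1 otherwise
    if awk -v ip="$ip" '$1 == ip { found = 1 } END { exit !found }' "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$*" >> "$file"
}

# Usage on each node (as root):
# add_host_entry /etc/hosts 10.10.10.11 jx-nginx-11 k8s-master11
# add_host_entry /etc/hosts 10.10.10.200 k8s-master-lb
```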

1.2 Yum repository configuration

curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

sed -i -e '/mirrors.cloud.aliyuncs.com/d' -e '/mirrors.aliyuncs.com/d' /etc/yum.repos.d/CentOS-Base.repo

1.3 Installing required tools

yum install wget jq psmisc vim net-tools telnet yum-utils device-mapper-persistent-data lvm2 git -y

1.4 Disable firewalld, dnsmasq, NetworkManager and SELinux on all nodes

systemctl disable --now firewalld

systemctl disable --now dnsmasq

systemctl disable --now NetworkManager

setenforce 0

sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/sysconfig/selinux

sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/selinux/config

1.5 Disable swap on all nodes

swapoff -a && sysctl -w vm.swappiness=0

sed -ri '/^[^#]*swap/s@^@#@' /etc/fstab
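The two commands above can be verified with a minimal sketch. The helper names are hypothetical. `swap_active` reports whether the kernel still lists a swap device in /proc/swaps, whose first line is always a header. `fstab_has_swap` reports whether /etc/fstab still holds an uncommented swap entry, which would turn swap back on after a reboot.

```shell
#!/usr/bin/env bash
# swap_active [SWAPS_FILE]: exit 0 if any swap device is in use.
# /proc/swaps always has one header line, so more than one line means swap is on.
swap_active() {
    local swaps=${1:-/proc/swaps}
    [ "$(wc -l < "$swaps")" -gt 1 ]
}

# fstab_has_swap [FSTAB]: exit 0 if an uncommented line has "swap" as its
# filesystem type (third field), i.e. the sed above did not comment it out.
fstab_has_swap() {
    local fstab=${1:-/etc/fstab}
    awk '$1 !~ /^#/ && $3 == "swap" { found = 1 } END { exit !found }' "$fstab"
}

# Usage:
# swap_active && echo "swap is still on"
# fstab_has_swap && echo "fstab would re-enable swap on reboot"
```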

1.6 Install ntpdate

rpm -ivh http://mirrors.wlnmp.com/centos/wlnmp-release-centos.noarch.rpm

yum install ntpdate -y

Synchronize the time on all nodes as follows:

ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

echo 'Asia/Shanghai' >/etc/timezone

ntpdate time2.aliyun.com

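## Add a cron job that resynchronizes every 5 minutes (cron's PATH may not include /usr/sbin, so use the full path)

crontab -e

*/5 * * * * /usr/sbin/ntpdate time2.aliyun.com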

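Configure resource limits on all nodes:

ulimit -SHn 65535

vim /etc/security/limits.conf

# Append the following at the end of the file (a soft limit must not exceed its hard limit)
* soft nofile 65536
* hard nofile 131072
* soft nproc 655350
* hard nproc 655350
* soft memlock unlimited
* hard memlock unlimited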
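A soft limit can never exceed the matching hard limit; setrlimit rejects such a pair. A mismatched pair in limits.conf is therefore a configuration error. The minimal sketch below uses a hypothetical helper, `check_limits`. It flags every domain/item pair whose numeric soft value is larger than its hard value and skips `unlimited` and other non-numeric values.

```shell
#!/usr/bin/env bash
# check_limits [FILE]: print "domain item soft>hard" for each violating pair;
# return 1 if any violation was found, 0 otherwise.   (hypothetical helper)
check_limits() {
    local file=${1:-/etc/security/limits.conf}
    awk '
        $1 ~ /^#/ || NF < 4 { next }      # skip comments and incomplete lines
        $4 !~ /^[0-9]+$/ { next }         # skip unlimited and other non-numeric values
        $2 == "soft" { soft[$1 " " $3] = $4 }
        $2 == "hard" { hard[$1 " " $3] = $4 }
        END {
            bad = 0
            for (k in soft)
                if ((k in hard) && soft[k] + 0 > hard[k] + 0) {
                    print k, soft[k] ">" hard[k]
                    bad = 1
                }
            exit bad
        }' "$file"
}

# Usage: check_limits || echo "fix the pairs listed above"
```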

Set up passwordless SSH login from Master11 to the other nodes:

ssh-keygen -t rsa

for i in k8s-master11 k8s-master12 k8s-master13 k8s-node01 k8s-node02;do ssh-copy-id -i ~/.ssh/id_rsa.pub $i;done

Upgrade the kernel to 4.19 on all machines:

cd /root

wget http://193.49.22.109/elrepo/kernel/el7/x86_64/RPMS/kernel-ml-devel-4.19.12-1.el7.elrepo.x86_64.rpm

wget http://193.49.22.109/elrepo/kernel/el7/x86_64/RPMS/kernel-ml-4.19.12-1.el7.elrepo.x86_64.rpm

cd /root && yum localinstall -y kernel-ml*

grub2-set-default 0 && grub2-mkconfig -o /etc/grub2.cfg

grubby --args="user_namespace.enable=1" --update-kernel="$(grubby --default-kernel)"

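## Reboot to boot into the new kernel (kernel packages are excluded from the update so that a newer stock kernel is not installed and made the default boot entry)

yum update -y --exclude=kernel* && reboot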
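After the reboot, confirm that each machine actually booted the 4.19 kernel and not the stock 3.10 one. The minimal sketch below uses a hypothetical helper, `kernel_at_least`. It compares the version part of a kernel release string against a minimum using GNU `sort -V`, which orders version strings component by component.

```shell
#!/usr/bin/env bash
# kernel_at_least MIN [RELEASE]: exit 0 if RELEASE (default: uname -r) >= MIN.
kernel_at_least() {
    local min=$1 rel=${2:-$(uname -r)}
    rel=${rel%%-*}      # "4.19.12-1.el7.elrepo.x86_64" -> "4.19.12"
    # If MIN sorts first (or is equal), RELEASE is at least MIN
    [ "$(printf '%s\n' "$min" "$rel" | sort -V | head -n1)" = "$min" ]
}

# Usage: kernel_at_least 4.19 || echo "still on the old kernel, check grub2-set-default"
```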

Install ipvsadm and related tools on all nodes:

yum install ipvsadm ipset sysstat conntrack libseccomp -y

vim /etc/modules-load.d/ipvs.conf

## Add the following content (on kernel 4.19 and later, nf_conntrack_ipv4 has been merged into nf_conntrack)

ip_vs
ip_vs_lc
ip_vs_wlc
ip_vs_rr
ip_vs_wrr
ip_vs_lblc
ip_vs_lblcr
ip_vs_dh
ip_vs_sh
ip_vs_fo
ip_vs_nq
ip_vs_sed
ip_vs_ftp
nf_conntrack
ip_tables
ip_set
xt_set
ipt_set
ipt_rpfilter
ipt_REJECT
ipip

## Load the modules now and at every boot

systemctl enable --now systemd-modules-load.service
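To check that systemd-modules-load really loaded everything listed in ipvs.conf, here is a minimal sketch with a hypothetical helper, `missing_modules`. It prints each listed module that is absent from /proc/modules. Modules built directly into the kernel never appear in /proc/modules, so try a manual `modprobe` on any reported entry before treating it as missing.

```shell
#!/usr/bin/env bash
# missing_modules [CONF] [PROC_MODULES]: print every module named in CONF
# (one per line; blank lines and # comments ignored) that PROC_MODULES does not list.
missing_modules() {
    local conf=${1:-/etc/modules-load.d/ipvs.conf} loaded=${2:-/proc/modules} mod
    while read -r mod _ || [ -n "$mod" ]; do
        case $mod in ''|'#'*) continue ;; esac
        # The first field of each /proc/modules line is the module name
        awk -v m="$mod" '$1 == m { found = 1 } END { exit !found }' "$loaded" || echo "$mod"
    done < "$conf"
}

# Usage: missing_modules    # no output means every listed module is loaded
```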

Enable the kernel parameters a Kubernetes cluster requires. Configure them on all nodes:

cat <<EOF > /etc/sysctl.d/k8s.conf
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
fs.may_detach_mounts = 1
vm.overcommit_memory=1
vm.panic_on_oom=0
fs.inotify.max_user_watches=89100
fs.file-max=52706963
fs.nr_open=52706963
net.netfilter.nf_conntrack_max=2310720
net.ipv4.tcp_keepalive_time = 600
net.ipv4.tcp_keepalive_probes = 3
net.ipv4.tcp_keepalive_intvl = 15
net.ipv4.tcp_max_tw_buckets = 36000
net.ipv4.tcp_tw_reuse = 1
net.ipv4.tcp_max_orphans = 327680
net.ipv4.tcp_orphan_retries = 3
net.ipv4.tcp_syncookies = 1
net.ipv4.tcp_max_syn_backlog = 16384
net.ipv4.ip_conntrack_max = 65536
net.ipv4.tcp_timestamps = 0
net.core.somaxconn = 16384
EOF

sysctl --system
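`sysctl --system` reports an error for each key the running kernel does not provide, and those errors are easy to miss in its long output. The minimal sketch below uses a hypothetical helper, `check_sysctl`. It reads /proc/sys directly and reports every key from k8s.conf that is missing or whose live value differs from the file.

```shell
#!/usr/bin/env bash
# norm STRING: squeeze whitespace runs into one space and strip leading/trailing spaces,
# so "1024<TAB>65000" read from /proc/sys compares equal to "1024 65000" from the file.
norm() {
    printf '%s\n' "$*" | tr -s '[:space:]' ' ' | sed 's/^ //; s/ $//'
}

# check_sysctl [CONF] [PROC_ROOT]: compare each "key = value" line of CONF with the live
# value under PROC_ROOT (default /proc/sys); print one line per problem, return 1 if any.
check_sysctl() {
    local conf=${1:-/etc/sysctl.d/k8s.conf} root=${2:-/proc/sys}
    local line key want path have rc=0
    while IFS= read -r line || [ -n "$line" ]; do
        case $line in ''|'#'*|';'*) continue ;; esac
        key=$(norm "${line%%=*}")
        want=$(norm "${line#*=}")
        path="$root/${key//.//}"        # net.ipv4.ip_forward -> net/ipv4/ip_forward
        if [ ! -r "$path" ]; then
            echo "$key: not provided by this kernel"
            rc=1
            continue
        fi
        have=$(norm "$(cat "$path")")
        if [ "$have" != "$want" ]; then
            echo "$key: want $want, have $have"
            rc=1
        fi
    done < "$conf"
    return "$rc"
}

# Usage: check_sysctl    # no output means every value in k8s.conf is live
```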

Installing components

Install Docker on all nodes:

yum install docker-ce-19.03.* -y

## Enable at boot and start now

systemctl daemon-reload && systemctl enable --now docker

Install kubeadm, kubelet and kubectl on all nodes:

yum install kubeadm-1.20* kubelet-1.20* kubectl-1.20* -y

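By default the pause image is pulled from gcr.io, which may be unreachable from mainland China. Configure kubelet to use the pause image from Alibaba Cloud's registry instead:

cat >/etc/sysconfig/kubelet<<EOF
KUBELET_EXTRA_ARGS="--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.2"
EOF

systemctl daemon-reload

systemctl enable --now kubelet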

Installing the high-availability components

## Install HAProxy and Keepalived on all master nodes via yum:

yum install keepalived haproxy -y

vim /etc/haproxy/haproxy.cfg

## Delete all existing content, add the following, and change the IPs to your own

global
    maxconn 2000
    ulimit-n 16384
    log 127.0.0.1 local0 err
    stats timeout 30s

defaults
    log global
    mode http
    option httplog
    timeout connect 5000
    timeout client 50000
    timeout server 50000
    timeout http-request 15s
    timeout http-keep-alive 15s

frontend monitor-in
    bind *:33305
    mode http
    option httplog
    monitor-uri /monitor

frontend k8s-master
    bind 0.0.0.0:16443
    bind 127.0.0.1:16443
    mode tcp
    option tcplog
    tcp-request inspect-delay 5s
    default_backend k8s-master

backend k8s-master
    mode tcp
    option tcplog
    option tcp-check
    balance roundrobin
    default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100
    server k8s-master01 10.10.10.11:6443 check
    server k8s-master02 10.10.10.12:6443 check
    server k8s-master03 10.10.10.13:6443 check

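Configure Keepalived on all master nodes in /etc/keepalived/keepalived.conf. The configuration differs from node to node, so pay attention to each node's own IP (mcast_src_ip) and its network interface (the interface parameter).

Configuration for Master11: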

! Configuration File for keepalived
global_defs {
    router_id k8s-master11
    script_user root
    enable_script_security
}
vrrp_script chk_apiserver {
    script "/etc/keepalived/check_apiserver.sh"
    interval 5
    weight -5
    fall 2
    rise 1
}
vrrp_instance VI_1 {
    state MASTER
    interface eth0
    mcast_src_ip 10.10.10.11
    virtual_router_id 51
    priority 101
    advert_int 2
    authentication {
        auth_type PASS
        auth_pass K8SHA_KA_AUTH
    }
    virtual_ipaddress {
        10.10.10.200
    }
#    track_script {
#       chk_apiserver
#    }
}

Configuration for Master12:

! Configuration File for keepalived
global_defs {
    router_id k8s-master12
    script_user root
    enable_script_security
}
vrrp_script chk_apiserver {
    script "/etc/keepalived/check_apiserver.sh"
    interval 5
    weight -5
    fall 2
    rise 1
}
vrrp_instance VI_1 {
    state BACKUP
    interface eth0
    mcast_src_ip 10.10.10.12
    virtual_router_id 51
    priority 100
    advert_int 2
    authentication {
        auth_type PASS
        auth_pass K8SHA_KA_AUTH
    }
    virtual_ipaddress {
        10.10.10.200
    }
#    track_script {
#       chk_apiserver
#    }
}

Configuration for Master13:

! Configuration File for keepalived
global_defs {
    router_id k8s-master13
    script_user root
    enable_script_security
}
vrrp_script chk_apiserver {
    script "/etc/keepalived/check_apiserver.sh"
    interval 5
    weight -5
    fall 2
    rise 1
}
vrrp_instance VI_1 {
    state BACKUP
    interface eth0
    mcast_src_ip 10.10.10.13
    virtual_router_id 51
    priority 100
    advert_int 2
    authentication {
        auth_type PASS
        auth_pass K8SHA_KA_AUTH
    }
    virtual_ipaddress {
        10.10.10.200
    }
#    track_script {
#       chk_apiserver
#    }
}
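The three keepalived.conf files differ only in router_id, state, priority and mcast_src_ip. A minimal sketch can therefore print each node's file from its parameters, which avoids copy-paste mistakes such as a wrong source IP. It uses a hypothetical helper, `gen_keepalived_conf`, and assumes the VIP 10.10.10.200, interface eth0 and the password used above.

```shell
#!/usr/bin/env bash
# gen_keepalived_conf NAME IP STATE PRIORITY [VIP] [IFACE]   (hypothetical helper)
# Prints a keepalived.conf in the same shape as the per-node configs above.
gen_keepalived_conf() {
    local name=$1 ip=$2 state=$3 prio=$4 vip=${5:-10.10.10.200} iface=${6:-eth0}
    cat <<EOF
! Configuration File for keepalived
global_defs {
    router_id $name
    script_user root
    enable_script_security
}
vrrp_script chk_apiserver {
    script "/etc/keepalived/check_apiserver.sh"
    interval 5
    weight -5
    fall 2
    rise 1
}
vrrp_instance VI_1 {
    state $state
    interface $iface
    mcast_src_ip $ip
    virtual_router_id 51
    priority $prio
    advert_int 2
    authentication {
        auth_type PASS
        auth_pass K8SHA_KA_AUTH
    }
    virtual_ipaddress {
        $vip
    }
#    track_script {
#       chk_apiserver
#    }
}
EOF
}

# Usage, e.g. on Master12:
# gen_keepalived_conf k8s-master12 10.10.10.12 BACKUP 100 > /etc/keepalived/keepalived.conf
```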

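Create the Keepalived health-check script on all master nodes. If HAProxy is not running, the script stops Keepalived so the VIP moves to another master. It is only invoked if the track_script block in keepalived.conf is uncommented:

vim /etc/keepalived/check_apiserver.sh

## Add the following content

#!/bin/bash
err=0
for k in $(seq 1 3)
do
    check_code=$(pgrep haproxy)
    if [[ $check_code == "" ]]; then
        err=$(expr $err + 1)
        sleep 1
        continue
    else
        err=0
        break
    fi
done

if [[ $err != "0" ]]; then
    echo "systemctl stop keepalived"
    /usr/bin/systemctl stop keepalived
    exit 1
else
    exit 0
fi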

## Make the script executable

chmod +x /etc/keepalived/check_apiserver.sh

## Start HAProxy and Keepalived

systemctl daemon-reload

systemctl enable --now haproxy

systemctl enable --now keepalived

### Test the VIP

ping 10.10.10.200
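A successful `ping` only proves that some node answers on the VIP. A failover test needs to know which master currently holds it, checked before and after stopping Keepalived on that node. The minimal sketch below uses a hypothetical helper, `holds_ip`. It reads the one-line-per-address output of `ip -o -4 addr show`.

```shell
#!/usr/bin/env bash
# holds_ip IP: read `ip -o -4 addr show` output on stdin; exit 0 if IP is assigned.
# Each line looks like: "2: eth0    inet 10.10.10.11/24 brd ... scope global eth0 ..."
holds_ip() {
    awk -v want="$1" '$3 == "inet" { split($4, a, "/"); if (a[1] == want) found = 1 }
                      END { exit !found }'
}

# Usage on each master:
# ip -o -4 addr show | holds_ip 10.10.10.200 && echo "$(hostname) holds the VIP"
```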

Cluster initialization

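Create the following kubeadm configuration file on all three master nodes. Master11 uses it for kubeadm init; the other masters only use it to pre-pull images: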

vim /root/kubeadm-config.yaml

## Add the following content (YAML is indentation-sensitive, so keep the indentation exactly as shown)

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: 7t2weq.bjbawausm0jaxury
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 10.10.10.11
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: k8s-master11
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  certSANs:
  - 10.10.10.200
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: 10.10.10.200:16443
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.20.15
networking:
  dnsDomain: cluster.local
  podSubnet: 172.168.0.0/16
  serviceSubnet: 10.96.0.0/12
scheduler: {}

Pre-pull the images on all master nodes to shorten initialization:

kubeadm config images pull --config /root/kubeadm-config.yaml

Initialize Master11. Initialization generates the certificates and configuration files under /etc/kubernetes; the other master nodes then simply join Master11:

kubeadm init --config /root/kubeadm-config.yaml --upload-certs

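After a successful initialization, save the join commands printed in the output (token, CA certificate hash and certificate key):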

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join 10.10.10.200:16443 --token 7t2weq.bjbawausm0jaxury \

--discovery-token-ca-cert-hash sha256:2eb41cbafe1464ba85f617cfa60424540c0b2d5ff121e30946b10ec9e518a5a8 \

--control-plane --certificate-key 505ca58be261429e1495681c3aaafc0396a06e522d131afa83390bd5bfc1629b

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.10.10.200:16443 --token 7t2weq.bjbawausm0jaxury \

--discovery-token-ca-cert-hash sha256:2eb41cbafe1464ba85f617cfa60424540c0b2d5ff121e30946b10ec9e518a5a8

On Master11, set the environment variable used to access the Kubernetes cluster:

cat <<EOF >> /root/.bashrc

export KUBECONFIG=/etc/kubernetes/admin.conf

EOF

source /root/.bashrc

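Check the node status. The node stays NotReady until a network plugin (Calico, installed below) is deployed: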

kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master11 NotReady control-plane,master 5m23s v1.20.15

Join the other master nodes to the cluster (run on Master12 and Master13):

kubeadm join 10.10.10.200:16443 --token 7t2weq.bjbawausm0jaxury \
    --discovery-token-ca-cert-hash sha256:2eb41cbafe1464ba85f617cfa60424540c0b2d5ff121e30946b10ec9e518a5a8 \
    --control-plane --certificate-key 505ca58be261429e1495681c3aaafc0396a06e522d131afa83390bd5bfc1629b

Join the worker nodes to the cluster (run on node01 and node02):

kubeadm join 10.10.10.200:16443 --token 7t2weq.bjbawausm0jaxury \
    --discovery-token-ca-cert-hash sha256:2eb41cbafe1464ba85f617cfa60424540c0b2d5ff121e30946b10ec9e518a5a8
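The bootstrap token expires after 24 hours (the ttl in kubeadm-config.yaml), and the uploaded certificates are deleted after two hours. Joining a node later therefore needs fresh values:

- `kubeadm token create --print-join-command` prints a new worker join command.
- `kubeadm init phase upload-certs --upload-certs` prints a new certificate key.
- The CA hash can be recomputed with the openssl pipeline from the kubeadm documentation.

Below is a minimal sketch wrapping that pipeline in a hypothetical function, `ca_cert_hash`. It assumes the default RSA CA key that kubeadm generates.

```shell
#!/usr/bin/env bash
# ca_cert_hash [CA_CERT]: print the value for --discovery-token-ca-cert-hash
# (SHA-256 of the CA's DER-encoded public key), without the "sha256:" prefix.
ca_cert_hash() {
    local crt=${1:-/etc/kubernetes/pki/ca.crt}
    openssl x509 -pubkey -noout -in "$crt" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'
}

# Usage on Master11:
# kubeadm token create --print-join-command
# echo "sha256:$(ca_cert_hash)"
```

For a control-plane node, append `--control-plane --certificate-key <new key>` to the printed worker command.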

Check the cluster status:

[root@jx-nginx-11 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

jx-apache-21 Ready <none> 95m v1.20.15

jx-apache-22 Ready <none> 95m v1.20.15

jx-nginx-12 Ready control-plane,master 98m v1.20.15

jx-nginx-13 Ready control-plane,master 97m v1.20.15

k8s-master11 Ready control-plane,master 101m v1.20.15

Calico installation (run the following steps on Master11 only)

cd /root/ ; git clone https://github.com/dotbalo/k8s-ha-install.git

cd /root/k8s-ha-install && git checkout manual-installation-v1.20.x && cd calico/

sed -i 's#etcd_endpoints: "http://<ETCD_IP>:<ETCD_PORT>"#etcd_endpoints: "https://10.10.10.11:2379,https://10.10.10.12:2379,https://10.10.10.13:2379"#g' calico-etcd.yaml

ETCD_CA=`cat /etc/kubernetes/pki/etcd/ca.crt | base64 | tr -d '\n'`

ETCD_CERT=`cat /etc/kubernetes/pki/etcd/server.crt | base64 | tr -d '\n'`

ETCD_KEY=`cat /etc/kubernetes/pki/etcd/server.key | base64 | tr -d '\n'`

sed -i "s@# etcd-key: null@etcd-key: ${ETCD_KEY}@g; s@# etcd-cert: null@etcd-cert: ${ETCD_CERT}@g; s@# etcd-ca: null@etcd-ca: ${ETCD_CA}@g" calico-etcd.yaml

sed -i 's#etcd_ca: ""#etcd_ca: "/calico-secrets/etcd-ca"#g; s#etcd_cert: ""#etcd_cert: "/calico-secrets/etcd-cert"#g; s#etcd_key: "" #etcd_key: "/calico-secrets/etcd-key" #g' calico-etcd.yaml

POD_SUBNET=`cat /etc/kubernetes/manifests/kube-controller-manager.yaml | grep cluster-cidr= | awk -F= '{print $NF}'`

sed -i 's@# - name: CALICO_IPV4POOL_CIDR@- name: CALICO_IPV4POOL_CIDR@g; s@# value: "192.168.0.0/16"@ value: '"${POD_SUBNET}"'@g' calico-etcd.yaml
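The sed commands above fail silently. If a pattern does not match, for example because the whitespace in calico-etcd.yaml differs, the placeholder simply stays in the file. Below is a minimal sketch with two hypothetical helpers:

- `etcd_endpoints` builds the endpoint list from the master IPs. With kubeadm's stacked etcd, every control-plane node serves etcd on port 2379.
- `calico_unpatched` lists any placeholder lines still left in the manifest, so you can check before running kubectl apply.

```shell
#!/usr/bin/env bash
# etcd_endpoints IP...: print "https://IP1:2379,https://IP2:2379,..."
etcd_endpoints() {
    local out="" ip
    for ip in "$@"; do
        out+="${out:+,}https://$ip:2379"   # add a comma before every entry but the first
    done
    printf '%s\n' "$out"
}

# calico_unpatched [FILE]: print (with line numbers) lines that still contain an
# unreplaced placeholder; exit 0 if any were found, 1 if the file looks patched.
calico_unpatched() {
    grep -nE '<ETCD_IP>|<ETCD_PORT>|# etcd-(key|cert|ca): null|etcd_(ca|cert|key): ""' \
        "${1:-calico-etcd.yaml}"
}

# Usage:
# etcd_endpoints 10.10.10.11 10.10.10.12 10.10.10.13
# calico_unpatched calico-etcd.yaml && echo "fix the lines above before applying"
```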

Create Calico:

kubectl apply -f calico-etcd.yaml

Metrics Server deployment

Copy the front-proxy CA certificate from Master11 to the worker nodes:

scp /etc/kubernetes/pki/front-proxy-ca.crt k8s-node01:/etc/kubernetes/pki/front-proxy-ca.crt

scp /etc/kubernetes/pki/front-proxy-ca.crt k8s-node02:/etc/kubernetes/pki/front-proxy-ca.crt

Install Metrics Server and check that node metrics are available:

cd /root/k8s-ha-install/metrics-server-0.4.x-kubeadm/

kubectl create -f comp.yaml

kubectl top node

[root@jx-nginx-11 ~]# kubectl top node

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

jx-apache-21 161m 8% 792Mi 41%

jx-apache-22 149m 7% 756Mi 39%

jx-nginx-12 385m 19% 1074Mi 56%

jx-nginx-13 400m 20% 1179Mi 62%

k8s-master11 407m 20% 1129Mi 59%

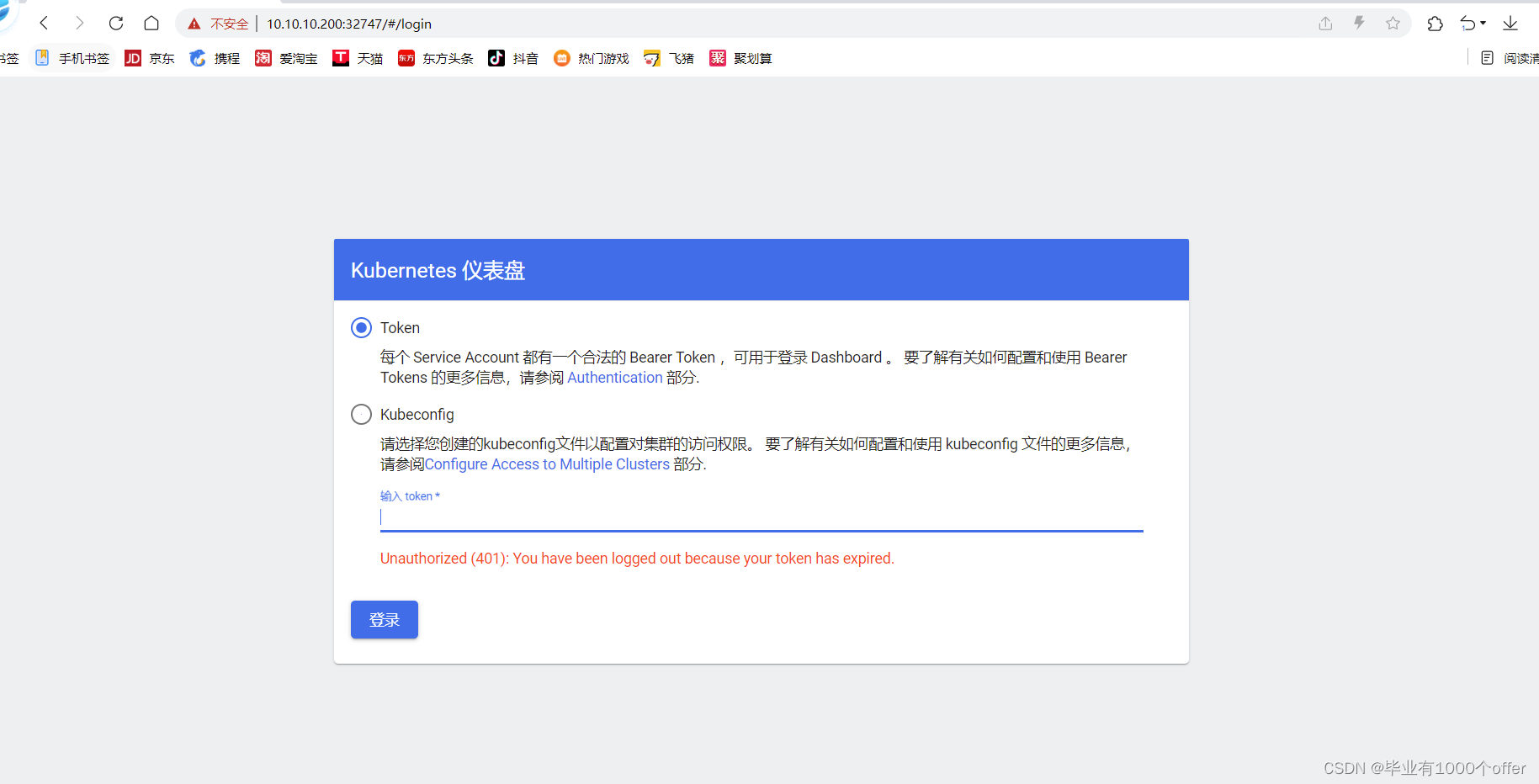

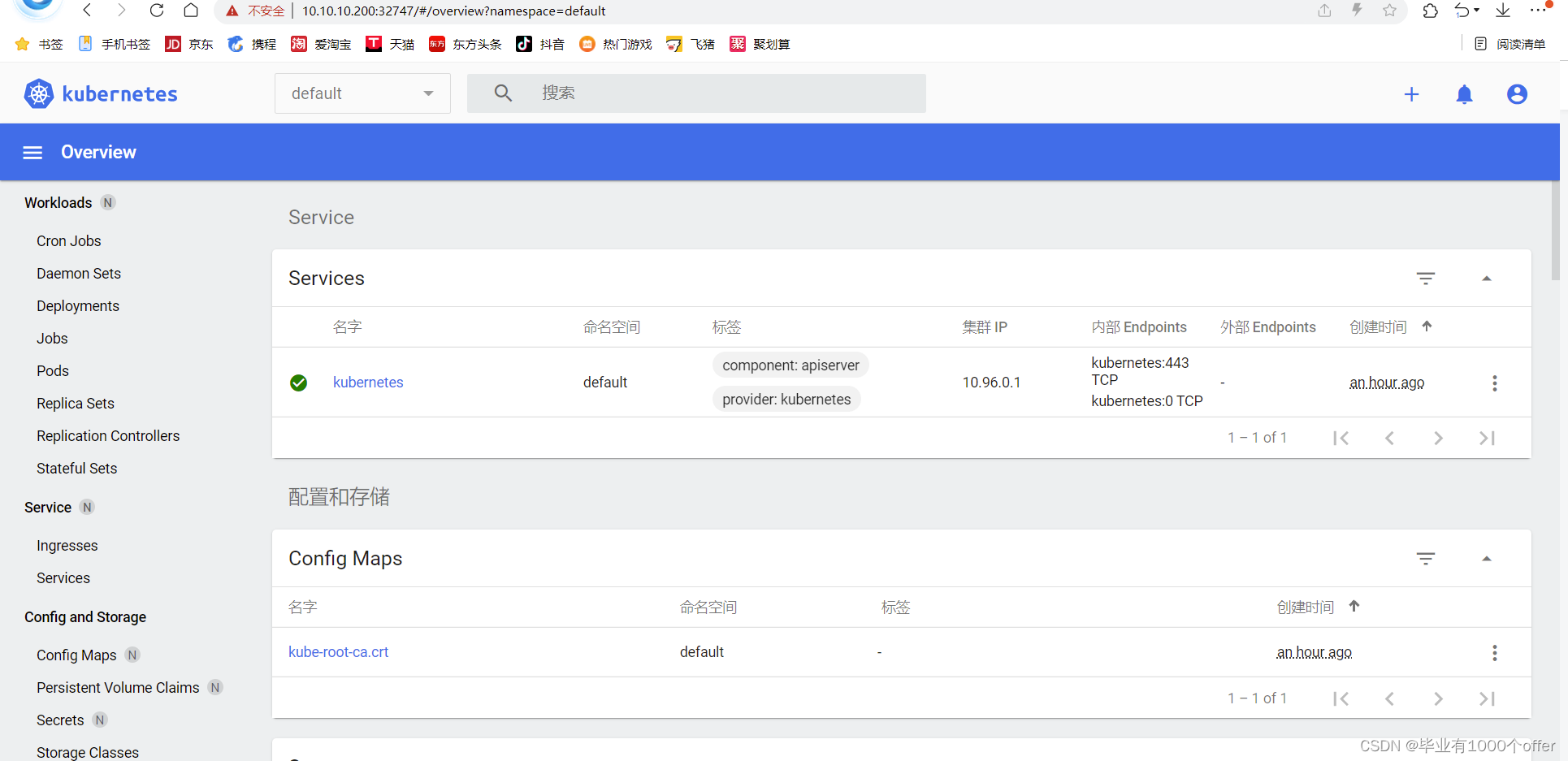

Dashboard deployment

cd /root/k8s-ha-install/dashboard/

kubectl create -f .

Change the type of the dashboard Service to NodePort (in the editor, change type: ClusterIP to type: NodePort):

kubectl edit svc kubernetes-dashboard -n kubernetes-dashboard
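`kubectl edit` opens an interactive editor. For scripting, `kubectl patch` makes the same change non-interactively, and a jsonpath query returns the assigned NodePort. Below is a minimal sketch. The helper `dashboard_url` is hypothetical, and the `KUBECTL` variable exists only so the function can be exercised without a cluster.

```shell
#!/usr/bin/env bash
# Non-interactive equivalent of the kubectl edit step (run once on Master11):
# kubectl -n kubernetes-dashboard patch svc kubernetes-dashboard -p '{"spec":{"type":"NodePort"}}'

# dashboard_url HOST: print the HTTPS URL of the dashboard's NodePort on HOST.
dashboard_url() {
    local kubectl=${KUBECTL:-kubectl} port
    port=$("$kubectl" -n kubernetes-dashboard get svc kubernetes-dashboard \
        -o jsonpath='{.spec.ports[0].nodePort}') || return 1
    [ -n "$port" ] || return 1      # empty while the Service is still ClusterIP
    printf 'https://%s:%s\n' "$1" "$port"
}

# Usage: dashboard_url 10.10.10.200
```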

Check the assigned port:

kubectl get svc kubernetes-dashboard -n kubernetes-dashboard

[root@jx-nginx-11 ~]# kubectl get svc kubernetes-dashboard -n kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes-dashboard NodePort 10.111.102.154 <none> 443:32747/TCP 94m

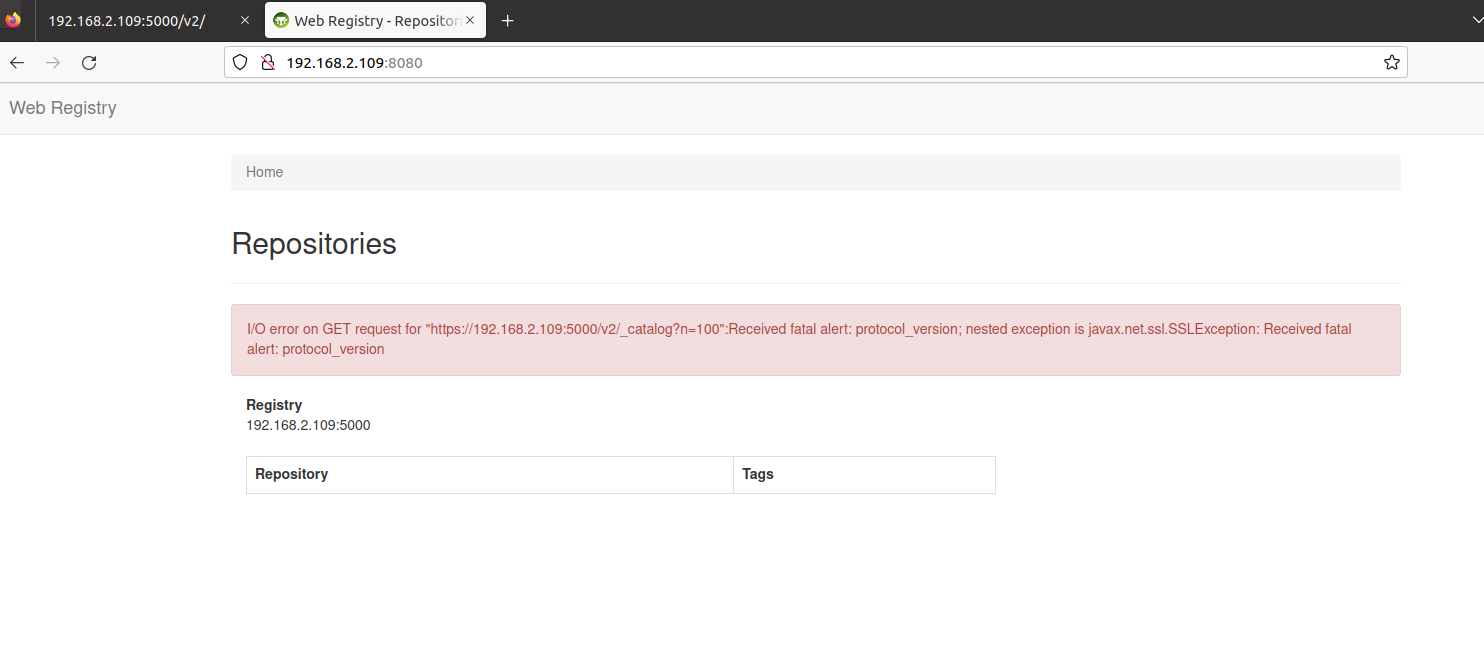

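Open https://10.10.10.200:32747 in a browser (32747 is the NodePort shown above). Chrome currently may refuse to open the page because of the dashboard's self-signed certificate. Use Firefox or Edge instead, or start Chrome with the flags --test-type --ignore-certificate-errors.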

Retrieve the login token:

kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')

[root@jx-nginx-11 ~]# kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')

Name: admin-user-token-qqnd4

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: admin-user

kubernetes.io/service-account.uid: 4b41595e-437d-4ca6-ab80-9af359bbb6b5

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1066 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6InVtMjlQLUJJOUlkWDRrR0QxU3dOWkFjeTVyWjRDQTRlOGR5UkFncndITlkifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLXFxbmQ0Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI0YjQxNTk1ZS00MzdkLTRjYTYtYWI4MC05YWYzNTliYmI2YjUiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06YWRtaW4tdXNlciJ9.kjHq8lYDADfOkhYN5eUVGAsbVoxte2BZJXq9f-TZp9dqB75g-W9K_hWbdcSW5vBdNuEMJZFrC5VSXwjTt-tpMODMUcic9GzdL-Hc4ZEuyVRTmpAX0YwEZ9l39CW8fahsWHMz4-AuJG3jRzBED3R5pXcM_f6kPth_hsWthXAQeFEqXZZSBQDz1xIN2_Tz3-v3eHXPDBUBYnNOhyQyYQ416MRDC1Lo3vCmk07aCKQ9pczsc4plnV62qxt8fGwFaxSwCTek1Cg1ms7_d6ctBgpL5REQTqooAsKQjMCgpmNYHun4nI8SPTnj0mtS7aFOvtqRMl43mHWktrvS1iGg0wQOow
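The describe output mixes the token with other fields. On Kubernetes 1.20 the token Secret of a ServiceAccount is still created automatically, so the token alone can be read by decoding the Secret's `data.token` field. Below is a minimal sketch. The helper `admin_token` is hypothetical, and `KUBECTL` again only allows testing without a cluster.

```shell
#!/usr/bin/env bash
# admin_token: print the decoded token of the first admin-user token Secret in kube-system.
admin_token() {
    local kubectl=${KUBECTL:-kubectl} name
    # "get -o name" prints lines like "secret/admin-user-token-qqnd4"
    name=$("$kubectl" -n kube-system get secret -o name | grep '^secret/admin-user-token' | head -n1)
    [ -n "$name" ] || return 1
    # Secret data is base64-encoded; base64 -d turns it back into the bearer token
    "$kubectl" -n kube-system get "$name" -o jsonpath='{.data.token}' | base64 -d
    echo
}

# Usage: admin_token
```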

Log in to the dashboard with this token.
