1. Kubernetes architecture

master: 11.0.1.3

node: 11.0.1.4, 11.0.1.5 (also the NFS server)

nfs: 11.0.1.5

2. Install NFS

Install nfs-utils and rpcbind

Install the nfs-utils package on both the NFS server and the NFS clients:

yum install nfs-utils rpcbind -y

Create the shared directory on the NFS server:

mkdir -p /nfsdata

chmod 777 /nfsdata

Edit /etc/exports and add the following line:

vi /etc/exports

/nfsdata *(rw,sync,no_root_squash)

Start the services:

systemctl enable rpcbind.service --now

systemctl enable nfs.service --now

Always start the services in the order rpcbind -> nfs; otherwise errors may occur.
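Before moving on, it can help to confirm that the export is actually visible. The following is only a sketch: `export_listed` is a hypothetical helper (not part of nfs-utils), and it assumes the usual `showmount -e` output, a header line followed by one line per exported path.

```shell
# Hypothetical helper: succeed if the given path appears in
# `showmount -e` output read from stdin (the first line is the header).
export_listed() {
  awk -v path="$1" 'NR > 1 && $1 == path { found = 1 } END { exit !found }'
}

# On any machine with nfs-utils installed (server IP from the architecture above):
#   showmount -e 11.0.1.5 | export_listed /nfsdata && echo "export visible"
```

If the check fails, re-reading /etc/exports with `exportfs -r` on the NFS server and checking `exportfs -v` is a reasonable first step.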

3. Deploy the dynamic PV provisioner

First clone the nacos-k8s repository from GitHub:

git clone https://github.com/nacos-group/nacos-k8s.git

The deploy directory is laid out as follows:

[root@master1 ~]# tree nacos-k8s/deploy
nacos-k8s/deploy
├── ceph
│   ├── pvc.yaml
│   └── sc.yaml
├── ingress
│   ├── ingress-nginx.yaml
│   └── service-nodeport.yaml
├── mysql
│   ├── mysql-ceph.yaml
│   ├── mysql-local.yaml
│   ├── mysql-nfs.yaml
│   └── mysql.yaml
├── nacos
│   ├── nacos-ingress.yaml
│   ├── nacos-no-pvc-ingress.yaml
│   ├── nacos-pvc-ceph.yaml
│   ├── nacos-pvc-nfs.yaml
│   ├── nacos-quick-start.yaml
│   └── nacos-tmp.yaml
└── nfs
    ├── class.yaml
    ├── deployment.yaml
    └── rbac.yaml

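A StorageClass provisions volumes automatically, so before using one we must first install the provisioner for the storage backend. The provisioner needs sufficient permissions on the Kubernetes cluster (much like the dashboard, which needs access to various APIs in order to manage resources).

Create the RBAC objects: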

[root@master1 ~]# cat nacos-k8s/deploy/nfs/rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  namespace: devops

---

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]

---

kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: devops
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io

---

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]

---

kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: devops
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io
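Once rbac.yaml has been applied, the permissions can be spot-checked by impersonating the ServiceAccount with `kubectl auth can-i`. This is a minimal sketch, not part of the nacos-k8s repository: `sa_user` and `provisioner_can` are hypothetical helper names, and the check assumes your own kubectl user is allowed to impersonate ServiceAccounts (cluster-admin normally is).

```shell
# Build the user name under which a ServiceAccount authenticates to the API server.
sa_user() {
  # $1 = namespace, $2 = ServiceAccount name
  printf 'system:serviceaccount:%s:%s\n' "$1" "$2"
}

# Hypothetical wrapper: ask the API server whether the provisioner's
# ServiceAccount may perform a verb ($1) on a resource ($2).
provisioner_can() {
  kubectl auth can-i "$1" "$2" --as="$(sa_user devops nfs-client-provisioner)"
}

# Each of these should answer "yes" if the ClusterRole above is bound correctly
# (kubectl may add a warning for cluster-scoped resources):
#   provisioner_can create persistentvolumes
#   provisioner_can update persistentvolumeclaims
#   provisioner_can list storageclasses
```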

Create the StorageClass:

[root@master1 ~]# cat nacos-k8s/deploy/nfs/class.yaml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
provisioner: fuseim.pri/ifs
parameters:
  archiveOnDelete: "false"

Create the provisioner itself, the NFS client provisioner (nacos-k8s/deploy/nfs/deployment.yaml):

kind: Deployment
apiVersion: apps/v1
metadata:
  name: nfs-client-provisioner
  namespace: devops
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccount: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: quay.io/external_storage/nfs-client-provisioner:latest
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: fuseim.pri/ifs
            - name: NFS_SERVER
              # IP address of your NFS server
              value: 11.0.1.5
            - name: NFS_PATH
              # the shared directory exported by the NFS server above
              value: /nfsdata
      volumes:
        - name: nfs-client-root
          nfs:
            # IP address of your NFS server
            server: 11.0.1.5
            # the shared directory exported by the NFS server above
            path: /nfsdata
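The three manifests above still have to be applied. The sketch below is one way to do it, under some assumptions: the `devops` namespace already exists (for example via `kubectl create namespace devops`), the commands run from the directory containing the nacos-k8s clone, and `run` / `deploy_nfs_provisioner` are hypothetical helper names introduced here for illustration.

```shell
# Print commands instead of running them when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

deploy_nfs_provisioner() {
  dir=nacos-k8s/deploy/nfs
  # Apply in dependency order: permissions, StorageClass, then the provisioner.
  for f in rbac.yaml class.yaml deployment.yaml; do
    run kubectl apply -f "$dir/$f" || return 1
  done
  # Confirm the StorageClass exists and wait for the provisioner pod to be ready.
  run kubectl get storageclass managed-nfs-storage &&
    run kubectl -n devops rollout status deployment/nfs-client-provisioner
}
```

Calling `DRY_RUN=1 deploy_nfs_provisioner` only prints the kubectl commands, which is a cheap way to review them before running `deploy_nfs_provisioner` for real.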

4. Deploy MySQL

The MySQL manifest is as follows:

[root@master1 ~]# cat nacos-k8s/deploy/mysql/mysql-nfs.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mysql
  namespace: devops
  annotations:
    volume.beta.kubernetes.io/storage-provisioner: managed-nfs-storage
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
  storageClassName: managed-nfs-storage

---

apiVersion: v1
kind: ReplicationController
metadata:
  name: mysql
  namespace: devops
  labels:
    name: mysql
spec:
  replicas: 1
  selector:
    name: mysql
  template:
    metadata:
      labels:
        name: mysql
    spec:
      containers:
        - name: mysql
          image: nacos/nacos-mysql:5.7
          ports:
            - containerPort: 3306
          env:
            - name: MYSQL_ROOT_PASSWORD
              value: "root"
            - name: MYSQL_DATABASE
              value: "nacos_devtest"
            - name: MYSQL_USER
              value: "nacos"
            - name: MYSQL_PASSWORD
              value: "nacos"
          volumeMounts:
            - name: mysql-data
              mountPath: /var/lib/mysql
      volumes:
        - name: mysql-data
          persistentVolumeClaim:
            claimName: mysql

---

apiVersion: v1
kind: Service
metadata:
  name: mysql
  namespace: devops
  labels:
    name: mysql
spec:
  type: NodePort
  ports:
    - port: 3306
      targetPort: 3306
      nodePort: 30001
  selector:
    name: mysql

Check the result after applying the manifest:

[root@master1 ~]# kubectl get pod,svc -n devops
NAME                                          READY   STATUS    RESTARTS   AGE
pod/mysql-bthlg                               1/1     Running   0          79m
pod/nfs-client-provisioner-5698b6944c-r4fqt   1/1     Running   0          31m

NAME            TYPE       CLUSTER-IP       EXTERNAL-IP   PORT(S)          AGE
service/mysql   NodePort   10.103.249.249   <none>        3306:30001/TCP   79m
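A Running pod does not by itself show that the volume was provisioned or that the Nacos database is usable. The sketch below checks both; `first_pod` and `check_mysql` are hypothetical helpers, the label selector and credentials are the ones from the manifest above, and it assumes the `mysql` client is available inside the container.

```shell
# Hypothetical helper: take `kubectl get pod -o name` output on stdin
# and print the first pod name without the "pod/" prefix.
first_pod() {
  sed -n '1s#^pod/##p'
}

check_mysql() {
  # The PVC should be Bound to a volume created by the NFS provisioner.
  kubectl -n devops get pvc mysql &&
    pod=$(kubectl -n devops get pod -l name=mysql -o name | first_pod) &&
    # List the tables in the database that Nacos will use.
    kubectl -n devops exec "$pod" -- mysql -unacos -pnacos -e 'SHOW TABLES;' nacos_devtest
}
```

If the image initialised the Nacos schema, the table list should include entries such as `config_info`; an empty list suggests the schema still needs to be imported.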

5. Deploy the Nacos cluster

[root@master1 ~]# cat nacos-k8s/deploy/nacos/nacos-nfs.yaml

# Please read the wiki article
# https://github.com/nacos-group/nacos-k8s/wiki/%E4%BD%BF%E7%94%A8peerfinder%E6%89%A9%E5%AE%B9%E6%8F%92%E4%BB%B6

---

apiVersion: v1
kind: Service
metadata:
  name: nacos-headless
  namespace: devops
  labels:
    app: nacos
spec:
  publishNotReadyAddresses: true
  ports:
    - port: 8848
      name: server
      targetPort: 8848
    - port: 9848
      name: client-rpc
      targetPort: 9848
    - port: 9849
      name: raft-rpc
      targetPort: 9849
    ## election port kept for compatibility with 1.4.x
    - port: 7848
      name: old-raft-rpc
      targetPort: 7848
  clusterIP: None
  selector:
    app: nacos

---

apiVersion: v1
kind: ConfigMap
metadata:
  name: nacos-cm
  namespace: devops
data:
  mysql.host: "mysql"
  mysql.db.name: "nacos_devtest"
  mysql.port: "3306"
  mysql.user: "nacos"
  mysql.password: "nacos"

---

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: nacos
  namespace: devops
spec:
  podManagementPolicy: Parallel
  serviceName: nacos-headless
  replicas: 3
  template:
    metadata:
      labels:
        app: nacos
      annotations:
        pod.alpha.kubernetes.io/initialized: "true"
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            - labelSelector:
                matchExpressions:
                  - key: "app"
                    operator: In
                    values:
                      - nacos
              topologyKey: "kubernetes.io/hostname"
      serviceAccountName: nfs-client-provisioner
      initContainers:
        - name: peer-finder-plugin-install
          image: nacos/nacos-peer-finder-plugin:1.1
          imagePullPolicy: IfNotPresent
          volumeMounts:
            - mountPath: /home/nacos/plugins/peer-finder
              name: data
              subPath: peer-finder
      containers:
        - name: nacos
          imagePullPolicy: IfNotPresent
          image: nacos/nacos-server:v2.2.0
          resources:
            requests:
              memory: "2Gi"
              cpu: "500m"
          ports:
            - containerPort: 8848
              name: client-port
            - containerPort: 9848
              name: client-rpc
            - containerPort: 9849
              name: raft-rpc
            - containerPort: 7848
              name: old-raft-rpc
          env:
            - name: NACOS_REPLICAS
              value: "3"
            - name: SERVICE_NAME
              value: "nacos-headless"
            - name: DOMAIN_NAME
              value: "cluster.local"
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  apiVersion: v1
                  fieldPath: metadata.namespace
            - name: MYSQL_SERVICE_HOST
              valueFrom:
                configMapKeyRef:
                  name: nacos-cm
                  key: mysql.host
            - name: MYSQL_SERVICE_DB_NAME
              valueFrom:
                configMapKeyRef:
                  name: nacos-cm
                  key: mysql.db.name
            - name: MYSQL_SERVICE_PORT
              valueFrom:
                configMapKeyRef:
                  name: nacos-cm
                  key: mysql.port
            - name: MYSQL_SERVICE_USER
              valueFrom:
                configMapKeyRef:
                  name: nacos-cm
                  key: mysql.user
            - name: MYSQL_SERVICE_PASSWORD
              valueFrom:
                configMapKeyRef:
                  name: nacos-cm
                  key: mysql.password
            - name: SPRING_DATASOURCE_PLATFORM
              value: "mysql"
            - name: NACOS_SERVER_PORT
              value: "8848"
            - name: NACOS_APPLICATION_PORT
              value: "8848"
            - name: PREFER_HOST_MODE
              value: "hostname"
          volumeMounts:
            - name: data
              mountPath: /home/nacos/plugins/peer-finder
              subPath: peer-finder
            - name: data
              mountPath: /home/nacos/data
              subPath: data
            - name: data
              mountPath: /home/nacos/logs
              subPath: logs
  volumeClaimTemplates:
    - metadata:
        name: data
        annotations:
          volume.beta.kubernetes.io/storage-class: "managed-nfs-storage"
      spec:
        accessModes: [ "ReadWriteMany" ]
        resources:
          requests:
            storage: 1Gi
  selector:
    matchLabels:
      app: nacos

After applying the manifest, check the result:

[root@master1 ~]# kubectl get pod,svc -n devops
NAME                                          READY   STATUS    RESTARTS   AGE
pod/mysql-bthlg                               1/1     Running   0          79m
pod/nacos-0                                   1/1     Running   0          30m
pod/nacos-1                                   1/1     Running   0          30m
pod/nacos-2                                   1/1     Running   0          30m
pod/nfs-client-provisioner-5698b6944c-r4fqt   1/1     Running   0          31m

NAME                     TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                               AGE
service/mysql            NodePort    10.103.249.249   <none>        3306:30001/TCP                        79m
service/nacos-headless   ClusterIP   None             <none>        8848/TCP,9848/TCP,9849/TCP,7848/TCP   30m

Check the Nacos logs:

[root@master1 ~]# kubectl logs -f nacos-0 -n devops

+ export CUSTOM_SEARCH_NAMES=application

+ CUSTOM_SEARCH_NAMES=application

+ export CUSTOM_SEARCH_LOCATIONS=file:/home/nacos/conf/

+ CUSTOM_SEARCH_LOCATIONS=file:/home/nacos/conf/

+ export MEMBER_LIST=

+ MEMBER_LIST=

+ PLUGINS_DIR=/home/nacos/plugins/peer-finder

+ [[ cluster == \s\t\a\n\d\a\l\o\n\e ]]

+ [[ '' == \e\m\b\e\d\d\e\d ]]

+ JAVA_OPT=' -server -Xms1g -Xmx1g -Xmn512m -XX:MetaspaceSize=128m -XX:MaxMetaspaceSize=320m'

+ [[ n == \y ]]

+ JAVA_OPT=' -server -Xms1g -Xmx1g -Xmn512m -XX:MetaspaceSize=128m -XX:MaxMetaspaceSize=320m -XX:-OmitStackTraceInFastThrow -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/home/nacos/logs/java_heapdump.hprof'

+ JAVA_OPT=' -server -Xms1g -Xmx1g -Xmn512m -XX:MetaspaceSize=128m -XX:MaxMetaspaceSize=320m -XX:-OmitStackTraceInFastThrow -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/home/nacos/logs/java_heapdump.hprof -XX:-UseLargePages'

+ print_servers

+ [[ ! -d /home/nacos/plugins/peer-finder ]]

+ bash /home/nacos/plugins/peer-finder/plugin.sh

+ sleep 30

2023/04/12 15:21:58 Peer list updated

was []

now [nacos-0.nacos-headless.devops.svc.cluster.local nacos-1.nacos-headless.devops.svc.cluster.local nacos-2.nacos-headless.devops.svc.cluster.local]

2023/04/12 15:21:58 execing: /home/nacos/plugins/peer-finder/on-start.sh with stdin: nacos-0.nacos-headless.devops.svc.cluster.local

nacos-1.nacos-headless.devops.svc.cluster.local

nacos-2.nacos-headless.devops.svc.cluster.local

2023/04/12 15:21:59

+ [[ all == \c\o\n\f\i\g ]]

+ [[ all == \n\a\m\i\n\g ]]

+ [[ ! -z '' ]]

+ [[ ! -z '' ]]

+ [[ ! -z '' ]]

+ [[ ! -z '' ]]

+ [[ ! -z '' ]]

+ [[ hostname == \h\o\s\t\n\a\m\e ]]

+ JAVA_OPT=' -server -Xms1g -Xmx1g -Xmn512m -XX:MetaspaceSize=128m -XX:MaxMetaspaceSize=320m -XX:-OmitStackTraceInFastThrow -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/home/nacos/logs/java_heapdump.hprof -XX:-UseLargePages -Dnacos.preferHostnameOverIp=true'

+ JAVA_OPT=' -server -Xms1g -Xmx1g -Xmn512m -XX:MetaspaceSize=128m -XX:MaxMetaspaceSize=320m -XX:-OmitStackTraceInFastThrow -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/home/nacos/logs/java_heapdump.hprof -XX:-UseLargePages -Dnacos.preferHostnameOverIp=true -Dnacos.member.list='

++ sed -E -n 's/.* version "([0-9]*).*$/\1/p'

++ /usr/lib/jvm/java-1.8.0-openjdk/bin/java -version

+ JAVA_MAJOR_VERSION=1

+ [[ 1 -ge 9 ]]

+ JAVA_OPT=' -server -Xms1g -Xmx1g -Xmn512m -XX:MetaspaceSize=128m -XX:MaxMetaspaceSize=320m -XX:-OmitStackTraceInFastThrow -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/home/nacos/logs/java_heapdump.hprof -XX:-UseLargePages -Dnacos.preferHostnameOverIp=true -Dnacos.member.list= -Djava.ext.dirs=/usr/lib/jvm/java-1.8.0-openjdk/jre/lib/ext:/usr/lib/jvm/java-1.8.0-openjdk/lib/ext:/home/nacos/plugins/health:/home/nacos/plugins/cmdb:/home/nacos/plugins/mysql'

+ JAVA_OPT=' -server -Xms1g -Xmx1g -Xmn512m -XX:MetaspaceSize=128m -XX:MaxMetaspaceSize=320m -XX:-OmitStackTraceInFastThrow -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/home/nacos/logs/java_heapdump.hprof -XX:-UseLargePages -Dnacos.preferHostnameOverIp=true -Dnacos.member.list= -Djava.ext.dirs=/usr/lib/jvm/java-1.8.0-openjdk/jre/lib/ext:/usr/lib/jvm/java-1.8.0-openjdk/lib/ext:/home/nacos/plugins/health:/home/nacos/plugins/cmdb:/home/nacos/plugins/mysql -Xloggc:/home/nacos/logs/nacos_gc.log -verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps -XX:+PrintGCTimeStamps -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=10 -XX:GCLogFileSize=100M'

+ JAVA_OPT=' -server -Xms1g -Xmx1g -Xmn512m -XX:MetaspaceSize=128m -XX:MaxMetaspaceSize=320m -XX:-OmitStackTraceInFastThrow -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/home/nacos/logs/java_heapdump.hprof -XX:-UseLargePages -Dnacos.preferHostnameOverIp=true -Dnacos.member.list= -Djava.ext.dirs=/usr/lib/jvm/java-1.8.0-openjdk/jre/lib/ext:/usr/lib/jvm/java-1.8.0-openjdk/lib/ext:/home/nacos/plugins/health:/home/nacos/plugins/cmdb:/home/nacos/plugins/mysql -Xloggc:/home/nacos/logs/nacos_gc.log -verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps -XX:+PrintGCTimeStamps -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=10 -XX:GCLogFileSize=100M -Dnacos.home=/home/nacos'

+ JAVA_OPT=' -server -Xms1g -Xmx1g -Xmn512m -XX:MetaspaceSize=128m -XX:MaxMetaspaceSize=320m -XX:-OmitStackTraceInFastThrow -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/home/nacos/logs/java_heapdump.hprof -XX:-UseLargePages -Dnacos.preferHostnameOverIp=true -Dnacos.member.list= -Djava.ext.dirs=/usr/lib/jvm/java-1.8.0-openjdk/jre/lib/ext:/usr/lib/jvm/java-1.8.0-openjdk/lib/ext:/home/nacos/plugins/health:/home/nacos/plugins/cmdb:/home/nacos/plugins/mysql -Xloggc:/home/nacos/logs/nacos_gc.log -verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps -XX:+PrintGCTimeStamps -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=10 -XX:GCLogFileSize=100M -Dnacos.home=/home/nacos -jar /home/nacos/target/nacos-server.jar'

+ JAVA_OPT=' -server -Xms1g -Xmx1g -Xmn512m -XX:MetaspaceSize=128m -XX:MaxMetaspaceSize=320m -XX:-OmitStackTraceInFastThrow -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/home/nacos/logs/java_heapdump.hprof -XX:-UseLargePages -Dnacos.preferHostnameOverIp=true -Dnacos.member.list= -Djava.ext.dirs=/usr/lib/jvm/java-1.8.0-openjdk/jre/lib/ext:/usr/lib/jvm/java-1.8.0-openjdk/lib/ext:/home/nacos/plugins/health:/home/nacos/plugins/cmdb:/home/nacos/plugins/mysql -Xloggc:/home/nacos/logs/nacos_gc.log -verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps -XX:+PrintGCTimeStamps -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=10 -XX:GCLogFileSize=100M -Dnacos.home=/home/nacos -jar /home/nacos/target/nacos-server.jar '

+ JAVA_OPT=' -server -Xms1g -Xmx1g -Xmn512m -XX:MetaspaceSize=128m -XX:MaxMetaspaceSize=320m -XX:-OmitStackTraceInFastThrow -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/home/nacos/logs/java_heapdump.hprof -XX:-UseLargePages -Dnacos.preferHostnameOverIp=true -Dnacos.member.list= -Djava.ext.dirs=/usr/lib/jvm/java-1.8.0-openjdk/jre/lib/ext:/usr/lib/jvm/java-1.8.0-openjdk/lib/ext:/home/nacos/plugins/health:/home/nacos/plugins/cmdb:/home/nacos/plugins/mysql -Xloggc:/home/nacos/logs/nacos_gc.log -verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps -XX:+PrintGCTimeStamps -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=10 -XX:GCLogFileSize=100M -Dnacos.home=/home/nacos -jar /home/nacos/target/nacos-server.jar --spring.config.additional-location=file:/home/nacos/conf/'

+ JAVA_OPT=' -server -Xms1g -Xmx1g -Xmn512m -XX:MetaspaceSize=128m -XX:MaxMetaspaceSize=320m -XX:-OmitStackTraceInFastThrow -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/home/nacos/logs/java_heapdump.hprof -XX:-UseLargePages -Dnacos.preferHostnameOverIp=true -Dnacos.member.list= -Djava.ext.dirs=/usr/lib/jvm/java-1.8.0-openjdk/jre/lib/ext:/usr/lib/jvm/java-1.8.0-openjdk/lib/ext:/home/nacos/plugins/health:/home/nacos/plugins/cmdb:/home/nacos/plugins/mysql -Xloggc:/home/nacos/logs/nacos_gc.log -verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps -XX:+PrintGCTimeStamps -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=10 -XX:GCLogFileSize=100M -Dnacos.home=/home/nacos -jar /home/nacos/target/nacos-server.jar --spring.config.additional-location=file:/home/nacos/conf/ --spring.config.name=application'

+ JAVA_OPT=' -server -Xms1g -Xmx1g -Xmn512m -XX:MetaspaceSize=128m -XX:MaxMetaspaceSize=320m -XX:-OmitStackTraceInFastThrow -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/home/nacos/logs/java_heapdump.hprof -XX:-UseLargePages -Dnacos.preferHostnameOverIp=true -Dnacos.member.list= -Djava.ext.dirs=/usr/lib/jvm/java-1.8.0-openjdk/jre/lib/ext:/usr/lib/jvm/java-1.8.0-openjdk/lib/ext:/home/nacos/plugins/health:/home/nacos/plugins/cmdb:/home/nacos/plugins/mysql -Xloggc:/home/nacos/logs/nacos_gc.log -verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps -XX:+PrintGCTimeStamps -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=10 -XX:GCLogFileSize=100M -Dnacos.home=/home/nacos -jar /home/nacos/target/nacos-server.jar --spring.config.additional-location=file:/home/nacos/conf/ --spring.config.name=application --logging.config=/home/nacos/conf/nacos-logback.xml'

+ JAVA_OPT=' -server -Xms1g -Xmx1g -Xmn512m -XX:MetaspaceSize=128m -XX:MaxMetaspaceSize=320m -XX:-OmitStackTraceInFastThrow -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/home/nacos/logs/java_heapdump.hprof -XX:-UseLargePages -Dnacos.preferHostnameOverIp=true -Dnacos.member.list= -Djava.ext.dirs=/usr/lib/jvm/java-1.8.0-openjdk/jre/lib/ext:/usr/lib/jvm/java-1.8.0-openjdk/lib/ext:/home/nacos/plugins/health:/home/nacos/plugins/cmdb:/home/nacos/plugins/mysql -Xloggc:/home/nacos/logs/nacos_gc.log -verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps -XX:+PrintGCTimeStamps -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=10 -XX:GCLogFileSize=100M -Dnacos.home=/home/nacos -jar /home/nacos/target/nacos-server.jar --spring.config.additional-location=file:/home/nacos/conf/ --spring.config.name=application --logging.config=/home/nacos/conf/nacos-logback.xml --server.max-http-header-size=524288'

+ echo 'Nacos is starting, you can docker logs your container'

+ exec /usr/lib/jvm/java-1.8.0-openjdk/bin/java -server -Xms1g -Xmx1g -Xmn512m -XX:MetaspaceSize=128m -XX:MaxMetaspaceSize=320m -XX:-OmitStackTraceInFastThrow -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/home/nacos/logs/java_heapdump.hprof -XX:-UseLargePages -Dnacos.preferHostnameOverIp=true -Dnacos.member.list= -Djava.ext.dirs=/usr/lib/jvm/java-1.8.0-openjdk/jre/lib/ext:/usr/lib/jvm/java-1.8.0-openjdk/lib/ext:/home/nacos/plugins/health:/home/nacos/plugins/cmdb:/home/nacos/plugins/mysql -Xloggc:/home/nacos/logs/nacos_gc.log -verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps -XX:+PrintGCTimeStamps -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=10 -XX:GCLogFileSize=100M -Dnacos.home=/home/nacos -jar /home/nacos/target/nacos-server.jar --spring.config.additional-location=file:/home/nacos/conf/ --spring.config.name=application --logging.config=/home/nacos/conf/nacos-logback.xml --server.max-http-header-size=524288

Nacos is starting, you can docker logs your container

,--.

,--.'|

,--,: : | Nacos 2.2.0

,`--.'`| ' : ,---. Running in cluster mode, All function modules

| : : | | ' ,'\ .--.--. Port: 8848

: | \ | : ,--.--. ,---. / / | / / ' Pid: 1

| : ' '; | / \ / \. ; ,. :| : /`./ Console: http://nacos-0.nacos-headless.devops.svc.cluster.local:8848/nacos/index.html

' ' ;. ;.--. .-. | / / '' | |: :| : ;_

| | | \ | \__\/: . .. ' / ' | .; : \ \ `. https://nacos.io

' : | ; .' ," .--.; |' ; :__| : | `----. \

| | '`--' / / ,. |' | '.'|\ \ / / /`--' /

' : | ; : .' \ : : `----' '--'. /

; |.' | , .-./\ \ / `--'---'

'---' `--`---' `----'

2023-04-12 15:22:47,327 INFO The server IP list of Nacos is [nacos-0.nacos-headless.devops.svc.cluster.local:8848, nacos-1.nacos-headless.devops.svc.cluster.local:8848, nacos-2.nacos-headless.devops.svc.cluster.local:8848]

2023-04-12 15:22:48,966 INFO Nacos is starting...

2023-04-12 15:22:51,275 INFO Nacos is starting...

2023-04-12 15:22:52,431 INFO Nacos is starting...

2023-04-12 15:22:53,841 INFO Nacos is starting...

2023-04-12 15:22:55,126 INFO Nacos is starting...

2023-04-12 15:22:56,432 INFO Nacos is starting...

2023-04-12 15:22:57,614 INFO Nacos is starting...

2023-04-12 15:22:59,760 INFO Nacos is starting...

2023-04-12 15:23:02,355 INFO Nacos is starting...

2023-04-12 15:23:03,414 INFO Nacos is starting...

2023-04-12 15:23:04,512 INFO Nacos is starting...

2023-04-12 15:23:05,565 INFO Nacos is starting...

2023-04-12 15:23:06,566 INFO Nacos is starting...

2023-04-12 15:23:07,567 INFO Nacos is starting...

2023-04-12 15:23:09,218 INFO Nacos is starting...

2023-04-12 15:23:10,395 INFO Nacos is starting...

2023-04-12 15:23:11,413 INFO Nacos is starting...

2023-04-12 15:23:12,416 INFO Nacos is starting...

2023-04-12 15:23:13,417 INFO Nacos is starting...

2023-04-12 15:23:14,431 INFO Nacos is starting...

2023-04-12 15:23:16,033 INFO Nacos is starting...

2023-04-12 15:23:18,371 INFO Nacos is starting...

2023-04-12 15:23:19,642 INFO Nacos is starting...

2023-04-12 15:23:20,762 INFO Nacos is starting...

2023-04-12 15:23:21,870 INFO Nacos is starting...

2023-04-12 15:23:22,883 INFO Nacos is starting...

2023-04-12 15:23:23,917 INFO Nacos is starting...

2023-04-12 15:23:24,983 INFO Nacos is starting...

2023-04-12 15:23:25,171 INFO Nacos started successfully in cluster mode. use external storage

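The logs show that nacos-0, nacos-1 and nacos-2 are all running normally in cluster mode. However, the Nacos Service is a headless ClusterIP Service (clusterIP: None), so it cannot yet be reached from a browser; we need ingress-nginx to expose it.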
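The peer list in the log follows the usual naming rule for StatefulSet pods behind a headless Service: `<statefulset>-<ordinal>.<service>.<namespace>.svc.<cluster domain>`. As a small illustration (the `peer_names` helper is hypothetical, and the values are the ones used in this walkthrough), the expected member list can be generated like this:

```shell
# Print the stable DNS name of every pod of a StatefulSet behind a headless Service.
peer_names() {
  # $1 = StatefulSet name, $2 = headless Service name, $3 = namespace,
  # $4 = number of replicas, $5 = cluster domain
  i=0
  while [ "$i" -lt "$4" ]; do
    echo "$1-$i.$2.$3.svc.$5"
    i=$((i + 1))
  done
}

peer_names nacos nacos-headless devops 3 cluster.local
```

The three names printed should match the entries in the "Peer list updated" log line; a mismatch usually points at a wrong SERVICE_NAME, DOMAIN_NAME or namespace in the StatefulSet environment.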

6. Create the Ingress

Download and install ingress-nginx; this example uses version 0.30.0.

[root@master1 ~]# mkdir -p nacos-k8s/deploy/ingress

cd nacos-k8s/deploy/ingress

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/nginx-0.30.0/deploy/static/mandatory.yaml

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/nginx-0.30.0/deploy/static/provider/baremetal/service-nodeport.yaml

kubectl apply -f ./

If the two files above are hard to download, you can copy them from below.

Contents of mandatory.yaml:

apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

---

kind: ConfigMap
apiVersion: v1
metadata:
  name: nginx-configuration
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

---

kind: ConfigMap
apiVersion: v1
metadata:
  name: tcp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

---

kind: ConfigMap
apiVersion: v1
metadata:
  name: udp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

---

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nginx-ingress-serviceaccount
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: nginx-ingress-clusterrole
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - "extensions"
      - "networking.k8s.io"
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - "extensions"
      - "networking.k8s.io"
    resources:
      - ingresses/status
    verbs:
      - update

---

apiVersion: rbac.authorization.k8s.io/v1beta1
kind: Role
metadata:
  name: nginx-ingress-role
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - pods
      - secrets
      - namespaces
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - configmaps
    resourceNames:
      # Defaults to "<election-id>-<ingress-class>"
      # Here: "<ingress-controller-leader>-<nginx>"
      # This has to be adapted if you change either parameter
      # when launching the nginx-ingress-controller.
      - "ingress-controller-leader-nginx"
    verbs:
      - get
      - update
  - apiGroups:
      - ""
    resources:
      - configmaps
    verbs:
      - create
  - apiGroups:
      - ""
    resources:
      - endpoints
    verbs:
      - get

---

apiVersion: rbac.authorization.k8s.io/v1beta1
kind: RoleBinding
metadata:
  name: nginx-ingress-role-nisa-binding
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: nginx-ingress-role
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: nginx-ingress-clusterrole-nisa-binding
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: nginx-ingress-clusterrole
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-ingress-controller
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/part-of: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
      annotations:
        prometheus.io/port: "10254"
        prometheus.io/scrape: "true"
    spec:
      # wait up to five minutes for the drain of connections
      terminationGracePeriodSeconds: 300
      serviceAccountName: nginx-ingress-serviceaccount
      nodeSelector:
        kubernetes.io/os: linux
      containers:
        - name: nginx-ingress-controller
          image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.30.0
          args:
            - /nginx-ingress-controller
            - --configmap=$(POD_NAMESPACE)/nginx-configuration
            - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
            - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
            - --publish-service=$(POD_NAMESPACE)/ingress-nginx
            - --annotations-prefix=nginx.ingress.kubernetes.io
          securityContext:
            allowPrivilegeEscalation: true
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
            # www-data -> 101
            runAsUser: 101
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          ports:
            - name: http
              containerPort: 80
              protocol: TCP
            - name: https
              containerPort: 443
              protocol: TCP
          livenessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 10
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 10
          lifecycle:
            preStop:
              exec:
                command:
                  - /wait-shutdown

---

apiVersion: v1
kind: LimitRange
metadata:
  name: ingress-nginx
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  limits:
    - min:
        memory: 90Mi
        cpu: 100m
      type: Container

Contents of service-nodeport.yaml:

apiVersion: v1
kind: Service
metadata:
  name: ingress-nginx
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  type: NodePort
  ports:
    - name: http
      port: 80
      targetPort: 80
      protocol: TCP
    - name: https
      port: 443
      targetPort: 443
      protocol: TCP
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

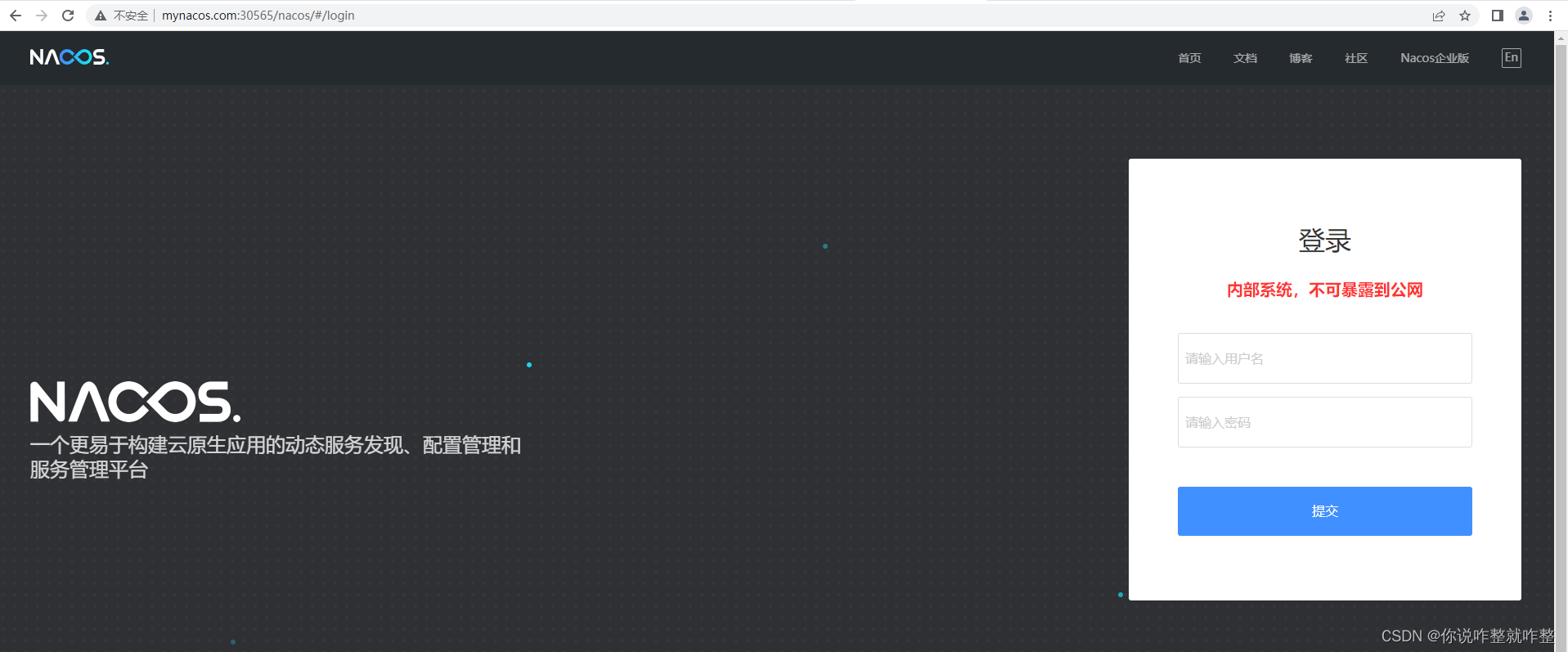

Check the ingress-nginx Service that was created; the HTTP NodePort is 30565:

[root@master1 ~]# kubectl get svc -n ingress-nginx
NAME            TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)                      AGE
ingress-nginx   NodePort   10.110.95.210   <none>        80:30565/TCP,443:30228/TCP   16m
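The NodePort is assigned by the cluster, so it will probably differ from 30565 in your environment. As a small sketch, the hypothetical `node_port_for` helper below extracts the NodePort for a given Service port from the PORT(S) column shown above.

```shell
# Hypothetical helper: read the PORT(S) column of `kubectl get svc` from stdin
# and print the NodePort mapped to the given Service port.
node_port_for() {
  # Split "80:30565/TCP,443:30228/TCP" into one mapping per line, then
  # split each mapping on ":" and "/" and match the Service port.
  tr ',' '\n' | awk -F'[:/]' -v port="$1" '$1 == port { print $2 }'
}

echo '80:30565/TCP,443:30228/TCP' | node_port_for 80

# The same value can also be read directly from the API, for example:
#   kubectl -n ingress-nginx get svc ingress-nginx \
#     -o jsonpath='{.spec.ports[?(@.name=="http")].nodePort}'
```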

Create the Ingress for Nacos:

[root@master1 nacos]# cat nacos-ingress.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: nacos-ingress
  namespace: devops
spec:
  rules:
    - host: www.mynacos.com
      http:
        paths:
          - path: /nacos
            backend:
              serviceName: nacos-headless
              servicePort: 8848

After creating it, wait a moment until the Ingress has been assigned an address, then test access:

[root@master1 ~]# kubectl get ingress -n devops
NAME            CLASS    HOSTS             ADDRESS   PORTS   AGE
nacos-ingress   <none>   www.mynacos.com             80      10s

[root@master1 ~]# kubectl get ingress -n devops
NAME            CLASS    HOSTS             ADDRESS         PORTS   AGE
nacos-ingress   <none>   www.mynacos.com   10.110.95.210   80      35m

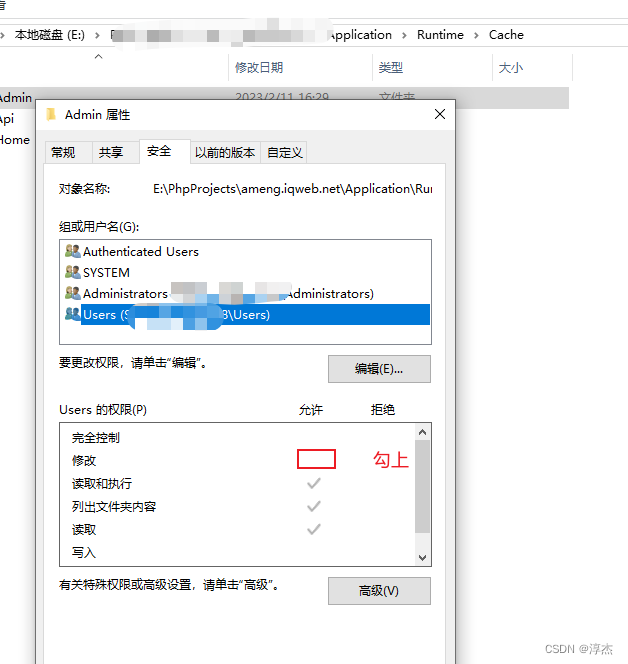

Add the following entry to the hosts file on Windows:

11.0.1.3 www.mynacos.com
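The route can also be checked from a shell without touching any hosts file, by sending the virtual host name in the Host header. This is only a sketch: `ingress_url` and `ingress_status` are hypothetical helpers, and the IP, port and host name are the values from this walkthrough.

```shell
# Build the URL of a path served through the ingress NodePort.
ingress_url() {
  # $1 = node IP, $2 = NodePort, $3 = path
  printf 'http://%s:%s%s\n' "$1" "$2" "$3"
}

# Hypothetical helper: print only the HTTP status code returned by the ingress
# for the given virtual host ($3) and path ($4).
ingress_status() {
  curl -s -o /dev/null -w '%{http_code}\n' -H "Host: $3" "$(ingress_url "$1" "$2" "$4")"
}

# Example with the values used here:
#   ingress_status 11.0.1.3 30565 www.mynacos.com /nacos/
```

A 2xx or 3xx status suggests that the Ingress rule matches and a Nacos pod answered; a 404 from nginx usually means the Host header or path does not match the rule.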

Open www.mynacos.com:30565/nacos in a browser:

Log in; the username and password are both nacos:

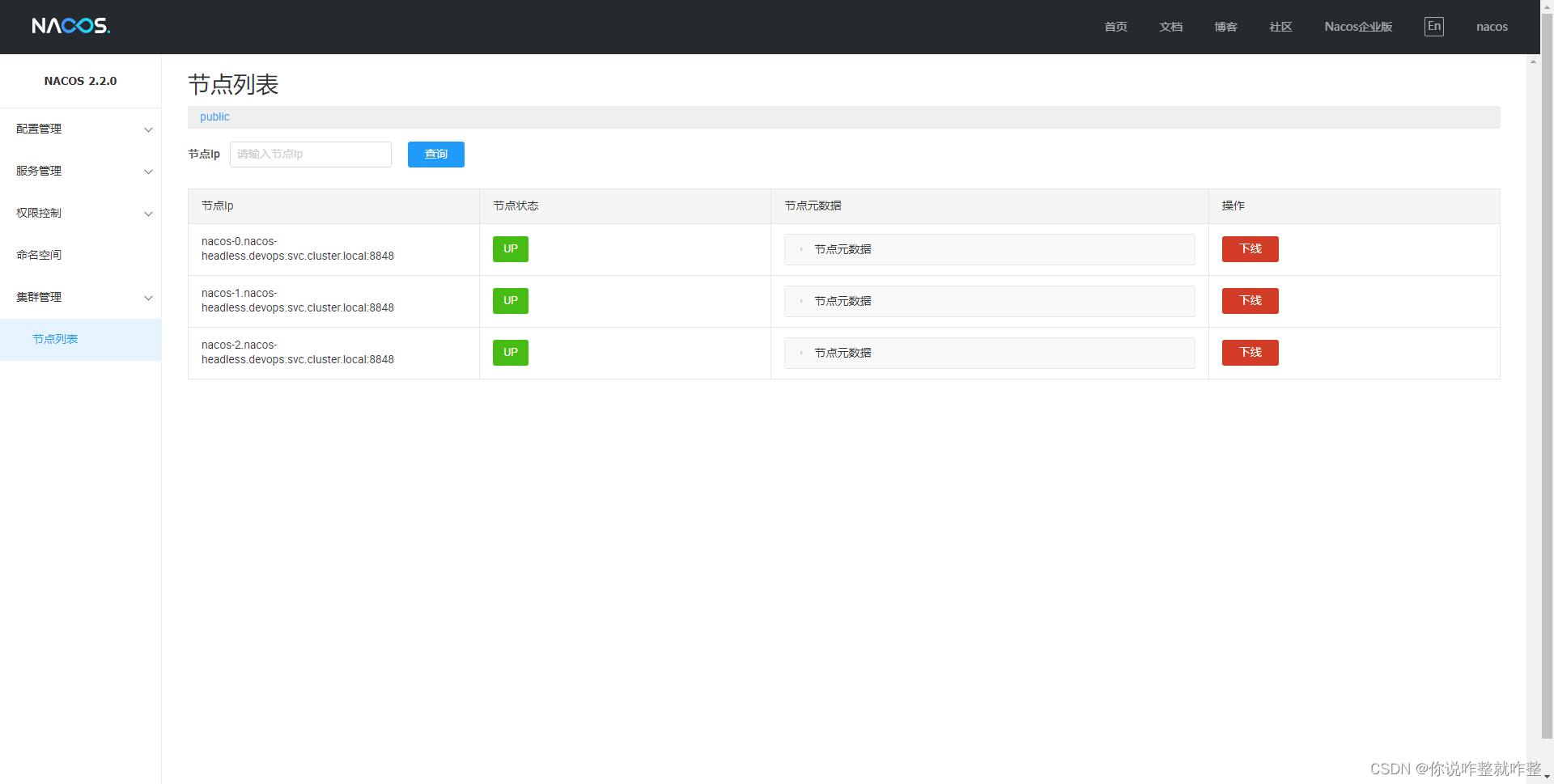

The cluster now shows three nodes, all of which are the ones we created.
