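If a Hive job fails, its temporary directories are not cleaned up automatically, so you have to write a script to clean them up yourself.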

In practice, I found that Hive uses two temporary directories:

`/tmp/hive/{user}/*`

`/warehouse/tablespace/*/hive/**/.hive-staging_hive*`

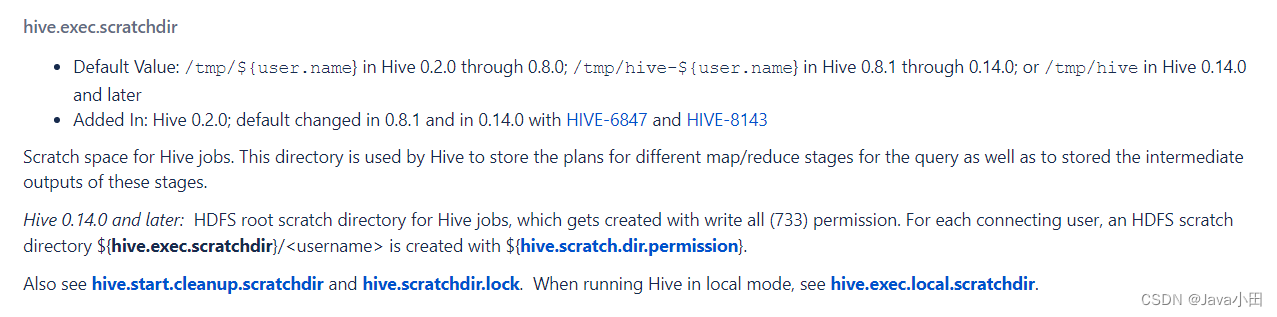

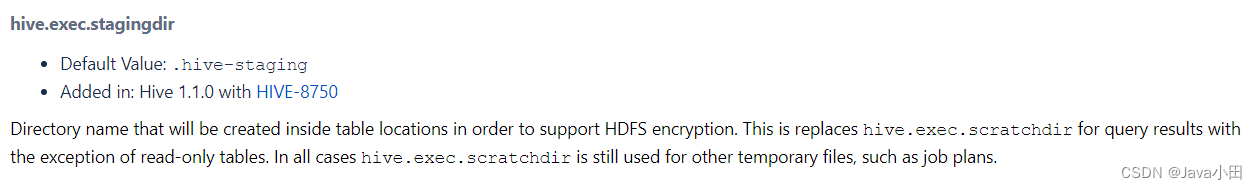

They are controlled by `hive.exec.scratchdir` and `hive.exec.stagingdir`, respectively.
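To check which paths apply on a given cluster, you can read the current values with `set <property>;`. The sketch below wraps this in a hypothetical helper, `show_tmp_dir_config`. It assumes Beeline is installed and that you pass in a HiveServer2 JDBC URL. The URL in the example comment is a placeholder. Each property should come back as a `name=value` line.

```bash
#!/bin/bash
# Hypothetical helper: print the effective values of the two properties that
# decide where Hive creates its temporary directories.
# Assumption: Beeline is on the PATH and $1 is a reachable HiveServer2 JDBC URL.
show_tmp_dir_config() {
  local jdbc_url=$1
  beeline -u "$jdbc_url" --silent=true --showHeader=false --outputformat=tsv2 \
    -e "set hive.exec.scratchdir; set hive.exec.stagingdir;"
}

# Example (placeholder URL, not from the original setup):
# show_tmp_dir_config "jdbc:hive2://hiveserver-host:10000/default"
```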

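Note that staging directories can appear at more than one directory level, for example `xxx.db/.hive-staging_xxx` and `xxx.db/${table}/.hive-staging_xxx`. To keep things simple, the script only cleans these two levels. If you find staging directories nested deeper, add one more call for that level.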
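Instead of adding one hand-written call per level, you could generate the glob patterns in a loop. This is a minimal sketch. `staging_globs` is a hypothetical helper, and it assumes the same warehouse root (`/warehouse/tablespace/*/hive`) that the script uses. For a depth of 2 it yields exactly the two staging patterns that the script hard-codes.

```bash
#!/bin/bash
# Hypothetical helper: print one .hive-staging_* glob per directory depth
# below the warehouse root, for depths 1..max_depth.
# Assumption: the warehouse root is /warehouse/tablespace/*/hive, as in the script.
staging_globs() {
  local max_depth=$1
  local root="/warehouse/tablespace/*/hive"
  local prefix=""
  local depth
  for (( depth = 1; depth <= max_depth; depth++ )); do
    prefix="$prefix/*"                       # one more wildcard level each time
    echo "$root$prefix/.hive-staging_*"      # quoted, so the shell does not expand it
  done
}

# Example: replace the hard-coded staging calls in the cleanup script with
# staging_globs 3 | while read -r g; do cleanTmpFile "$g"; done
```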

Create the cleanup script `clean_hive_tmpfile.sh`:

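```bash
#!/bin/bash
# Delete Hive temporary directories on HDFS that are older than the given number of days.

usage="Usage: clean_hive_tmpfile.sh <days>"

# Require a non-negative integer number of days.
case "$1" in
  ''|*[!0-9]*)
    echo "$usage"
    exit 1
    ;;
esac

now=$(date +%s)
days=$1

# $1 is an HDFS path glob. It is single-quoted inside "su -c" so that HDFS,
# not the local shell, expands the wildcards.
cleanTmpFile() {
  echo "clean path: $1"
  # -d lists the matched directories themselves instead of their contents;
  # grep keeps only directory entries (permission string starting with "d").
  su hdfs -c "hdfs dfs -ls -d '$1'" | grep "^d" | while read -r f; do
    # hdfs dfs -ls columns: permissions, replicas, owner, group, size, date, time, path
    dir_date=$(echo "$f" | awk '{print $6}')
    difference=$(( (now - $(date -d "$dir_date" +%s)) / (24 * 60 * 60) ))
    if [ "$difference" -gt "$days" ]; then
      echo "$f"
      name=$(echo "$f" | awk '{print $8}')
      echo "delete: $name"
      su hdfs -c "hadoop fs -rm -r -skipTrash '$name'"
    fi
  done
}

# Quote the globs so the local shell does not expand them against the local filesystem.
cleanTmpFile "/tmp/hive/*/*"
cleanTmpFile "/warehouse/tablespace/*/hive/*/.hive-staging_*"
cleanTmpFile "/warehouse/tablespace/*/hive/*/*/.hive-staging_*"
```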
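The script deletes with `-skipTrash`, so you may want to check what it would remove before you schedule it. A minimal dry-run sketch follows. `list_expired` is a hypothetical helper that reuses the script's age calculation but only prints the matching paths. Like the script, it assumes the default `hdfs dfs -ls` column layout (date in column 6, path in column 8) and GNU `date -d`. The optional second argument fixes "now" as a Unix timestamp, which makes the filter easy to test.

```bash
#!/bin/bash
# Hypothetical dry-run filter: read `hdfs dfs -ls -d` output on stdin and print
# the path of every directory whose date is more than $1 days before $2
# (a Unix timestamp, default: current time). Nothing is deleted.
list_expired() {
  local days=$1
  local now=${2:-$(date +%s)}
  local line dir_date age
  grep "^d" | while read -r line; do
    dir_date=$(echo "$line" | awk '{print $6}')
    age=$(( (now - $(date -d "$dir_date" +%s)) / 86400 ))
    if [ "$age" -gt "$days" ]; then
      echo "$line" | awk '{print $8}'
    fi
  done
}

# Example: see which scratch directories a 30-day run would remove
# su hdfs -c "hdfs dfs -ls -d '/tmp/hive/*/*'" | list_expired 30
```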

Configure crontab to run the script every day at 2:00 a.m. and delete directories more than 30 days old:

```
0 2 * * * bash /data/script/clean_hive_tmpfile.sh 30 >> /tmp/clean_hive_tmpfile.log 2>&1
```
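If you rerun the setup, you might not want the entry to appear twice. One option is to pipe the current crontab through a small filter and write it back. `upsert_cron_entry` is a hypothetical helper. It drops any existing line that mentions `clean_hive_tmpfile.sh` and appends the new entry. `crontab -l` and `crontab -` are the standard ways to read and replace the current user's crontab.

```bash
#!/bin/bash
# Hypothetical helper: read a crontab on stdin and print it with any previous
# clean_hive_tmpfile.sh line removed and the given entry appended once.
upsert_cron_entry() {
  local entry=$1
  grep -vF "clean_hive_tmpfile.sh" || true   # grep exits 1 if every line was dropped
  echo "$entry"
}

# Example (run as the user that owns the cron job):
# entry='0 2 * * * bash /data/script/clean_hive_tmpfile.sh 30 >> /tmp/clean_hive_tmpfile.log 2>&1'
# (crontab -l 2>/dev/null || true) | upsert_cron_entry "$entry" | crontab -
```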

References:

https://cwiki.apache.org/confluence/display/Hive/Configuration+Properties#ConfigurationProperties-hive.start.cleanup.scratchdir

https://www.cnblogs.com/ucarinc/p/11831280.html

https://blog.csdn.net/zhoudetiankong/article/details/51800887

http://t.zoukankan.com/telegram-p-10748530.html