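Shortly after open-sourcing its Pixtral 12B vision-language model, Mistral AI released Mistral-Small-Instruct-2409 (22B), its latest enterprise-grade small model and an upgrade of Mistral Small v24.02. The model is available under the Mistral Research License and gives customers a cost-efficient, fast, and reliable option for tasks such as translation, summarization, and sentiment analysis that do not need a full general-purpose model.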

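The prototype of Mistral Small was built on Mixtral-8x7B-v0.1 (46.7B), a sparse mixture-of-experts model that uses roughly 12.9B active parameters per token. It reasons better and is more capable, can generate and reason about code, and is multilingual, supporting English, French, German, Italian, and Spanish.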
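
The gap between total and active parameters in a sparse mixture-of-experts model can be reproduced with a back-of-the-envelope count. The sketch below assumes the publicly listed Mixtral-8x7B configuration (hidden size 4096, feed-forward size 14336, 32 layers, 8 experts with 2 routed per token, 32 query heads, 8 key/value heads, vocabulary 32000) and ignores the small normalization weights, so treat it as an estimate rather than an official breakdown.

```python
# Rough parameter count for a Mixtral-8x7B-style sparse MoE.
# Every configuration value below is an assumption taken from the public config.
HIDDEN = 4096
FFN = 14336
LAYERS = 32
EXPERTS = 8
EXPERTS_PER_TOKEN = 2
N_HEADS = 32
N_KV_HEADS = 8
VOCAB = 32000

head_dim = HIDDEN // N_HEADS      # per-head width
kv_dim = head_dim * N_KV_HEADS    # grouped-query attention shrinks K and V


def count_params(experts_used: int) -> int:
    """Count weights when `experts_used` experts per layer are included."""
    expert = 3 * HIDDEN * FFN                               # gate, up, down projections
    attention = 2 * HIDDEN * HIDDEN + 2 * HIDDEN * kv_dim   # Q and O, plus K and V
    router = HIDDEN * EXPERTS                               # routing layer over all experts
    embeddings = 2 * VOCAB * HIDDEN                         # input embeddings + output head
    return LAYERS * (experts_used * expert + attention + router) + embeddings


total = count_params(EXPERTS)             # all experts are stored
active = count_params(EXPERTS_PER_TOKEN)  # only the routed experts run per token

print(f"total:  {total / 1e9:.1f}B")
print(f"active: {active / 1e9:.1f}B")
```

Under these assumptions the script reports about 46.7B total and 12.9B active parameters: all eight experts must be held in memory, but each token only pays the compute cost of two.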

This is exciting: Mistral models consistently perform very well. We now have strong coverage across many size niches:

- 8b: Llama 3.1 8b

- 12b: Nemo 12b

- 22b: Mistral Small

- 27b: Gemma-2 27b

- 35b: Command-R 35b 08-2024

- 40-60b: GAP (I believe there are two new MoEs in this range, but I later found that llama.cpp does not support them)

- 70b: Llama 3.1 70b

- 103b: Command-R+ 103b

- 123b: Mistral Large 2

- 141b: WizardLM-2 8x22b

- 230b: Deepseek V2/2.5

- 405b: Llama 3.1 405b

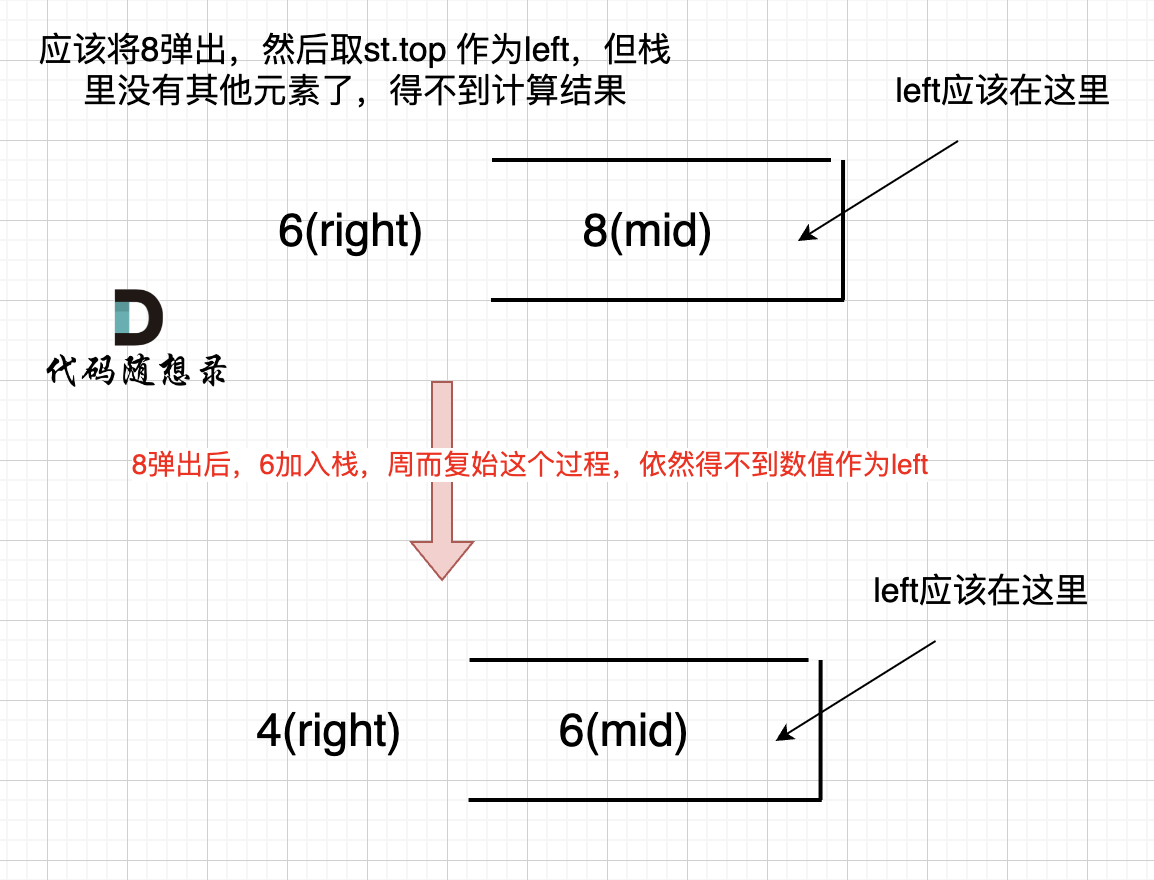

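With 22 billion parameters, Mistral Small v24.09 gives customers a convenient midpoint between Mistral NeMo 12B and Mistral Large 2, and a cost-efficient solution that can be deployed across a range of platforms and environments. As the accompanying figure shows, the new small model improves significantly on the previous model in human alignment, reasoning, and code.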

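Mistral-Small-Instruct-2409 is an instruction-fine-tuned version with the following characteristics:

- 22B parameters

- Vocabulary size of 32768

- Supports function calling

- 128k sequence length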

Usage

vLLM (recommended)

Install vLLM >= v0.6.1.post1:

    pip install --upgrade vllm

Install mistral_common >= 1.4.1:

    pip install --upgrade mistral_common
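
Before loading the model it can help to confirm which versions actually ended up in the environment. The following is a minimal sketch using only the standard library; the helper name `installed_version` is made up for illustration, and the script only prints the versions next to the minimums quoted above rather than comparing them.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package: str):
    """Return the installed version string of `package`, or None if it is absent."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


# Minimum versions as stated in the installation notes above.
REQUIRED = (("vllm", "0.6.1.post1"), ("mistral_common", "1.4.1"))

for name, minimum in REQUIRED:
    found = installed_version(name)
    print(f"{name}: installed={found or 'not installed'}, required>={minimum}")
```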

Local

    from vllm import LLM
    from vllm.sampling_params import SamplingParams

    model_name = "mistralai/Mistral-Small-Instruct-2409"

    sampling_params = SamplingParams(max_tokens=8192)

    # Note: running Mistral-Small on a single GPU requires at least 44 GB of GPU RAM.
    # To split the GPU requirement across multiple devices, add e.g. `tensor_parallel_size=2`.
    llm = LLM(model=model_name, tokenizer_mode="mistral", config_format="mistral", load_format="mistral")

    prompt = "How often does the letter r occur in Mistral?"

    messages = [
        {
            "role": "user",
            "content": prompt
        },
    ]

    outputs = llm.chat(messages, sampling_params=sampling_params)

    print(outputs[0].outputs[0].text)

Server

    vllm serve mistralai/Mistral-Small-Instruct-2409 --tokenizer_mode mistral --config_format mistral --load_format mistral

Note: running Mistral-Small on a single GPU requires at least 44 GB of GPU memory.

To split the GPU requirement across multiple devices, add e.g. `--tensor-parallel-size 2`.
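
The 44 GB figure matches a simple estimate of the weights alone: a nominal 22 billion parameters at 2 bytes each in bfloat16. The sketch below works through that arithmetic; the helper name is hypothetical, the result is in decimal GB, and the KV cache and activations need additional memory on top.

```python
def weight_memory_gb(n_params: float, bytes_per_param: int = 2, n_gpus: int = 1) -> float:
    """Approximate per-GPU memory for the model weights alone, in decimal GB."""
    return n_params * bytes_per_param / n_gpus / 1e9


N_PARAMS = 22e9  # nominal parameter count quoted for Mistral Small

single = weight_memory_gb(N_PARAMS)            # one GPU, bfloat16 weights
split = weight_memory_gb(N_PARAMS, n_gpus=2)   # weights sharded over two GPUs

print(f"single GPU: {single:.0f} GB")
print(f"two GPUs:   {split:.0f} GB each")
```

With these assumptions the estimate is 44 GB on one device and 22 GB per device when the weights are split evenly over two.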

Client

    curl --location 'http://<your-node-url>:8000/v1/chat/completions' \
    --header 'Content-Type: application/json' \
    --header 'Authorization: Bearer token' \
    --data '{
        "model": "mistralai/Mistral-Small-Instruct-2409",
        "messages": [
          {
            "role": "user",
            "content": "How often does the letter r occur in Mistral?"
          }
        ]
    }'
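
The same request can be sent from Python. This is a minimal sketch using only the standard library; the function names and the `localhost` address in the usage comment are placeholders, and the response parsing assumes the server returns the usual OpenAI-style `choices[0].message.content` layout.

```python
import json
import urllib.request

MODEL = "mistralai/Mistral-Small-Instruct-2409"


def build_chat_request(base_url: str, prompt: str, token: str = "token") -> urllib.request.Request:
    """Build the POST request that mirrors the curl command."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",
        },
        method="POST",
    )


def ask(base_url: str, prompt: str) -> str:
    """Send the prompt and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(base_url, prompt)) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


# Usage (requires a running server, so it is left commented out):
# print(ask("http://localhost:8000", "How often does the letter r occur in Mistral?"))
```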

Mistral-inference

Install mistral_inference >= 1.4.1:

    pip install mistral_inference --upgrade

Download

    from huggingface_hub import snapshot_download
    from pathlib import Path

    mistral_models_path = Path.home().joinpath('mistral_models', '22B-Instruct-Small')
    mistral_models_path.mkdir(parents=True, exist_ok=True)

    snapshot_download(repo_id="mistralai/Mistral-Small-Instruct-2409", allow_patterns=["params.json", "consolidated.safetensors", "tokenizer.model.v3"], local_dir=mistral_models_path)

Chat

    mistral-chat $HOME/mistral_models/22B-Instruct-Small --instruct --max_tokens 256

Instruction following

    from mistral_inference.transformer import Transformer
    from mistral_inference.generate import generate

    from mistral_common.tokens.tokenizers.mistral import MistralTokenizer
    from mistral_common.protocol.instruct.messages import UserMessage
    from mistral_common.protocol.instruct.request import ChatCompletionRequest

    # mistral_models_path is the directory created in the download step
    tokenizer = MistralTokenizer.from_file(f"{mistral_models_path}/tokenizer.model.v3")
    model = Transformer.from_folder(mistral_models_path)

    completion_request = ChatCompletionRequest(messages=[UserMessage(content="How often does the letter r occur in Mistral?")])

    tokens = tokenizer.encode_chat_completion(completion_request).tokens

    out_tokens, _ = generate([tokens], model, max_tokens=64, temperature=0.0, eos_id=tokenizer.instruct_tokenizer.tokenizer.eos_id)
    result = tokenizer.instruct_tokenizer.tokenizer.decode(out_tokens[0])

    print(result)

Function calling

    from mistral_common.protocol.instruct.tool_calls import Function, Tool
    from mistral_inference.transformer import Transformer
    from mistral_inference.generate import generate

    from mistral_common.tokens.tokenizers.mistral import MistralTokenizer
    from mistral_common.protocol.instruct.messages import UserMessage
    from mistral_common.protocol.instruct.request import ChatCompletionRequest

    tokenizer = MistralTokenizer.from_file(f"{mistral_models_path}/tokenizer.model.v3")
    model = Transformer.from_folder(mistral_models_path)

    completion_request = ChatCompletionRequest(
        tools=[
            Tool(
                function=Function(
                    name="get_current_weather",
                    description="Get the current weather",
                    parameters={
                        "type": "object",
                        "properties": {
                            "location": {
                                "type": "string",
                                "description": "The city and state, e.g. San Francisco, CA",
                            },
                            "format": {
                                "type": "string",
                                "enum": ["celsius", "fahrenheit"],
                                "description": "The temperature unit to use. Infer this from the users location.",
                            },
                        },
                        "required": ["location", "format"],
                    },
                )
            )
        ],
        messages=[
            UserMessage(content="What's the weather like today in Paris?"),
        ],
    )

    tokens = tokenizer.encode_chat_completion(completion_request).tokens

    out_tokens, _ = generate([tokens], model, max_tokens=64, temperature=0.0, eos_id=tokenizer.instruct_tokenizer.tokenizer.eos_id)
    result = tokenizer.instruct_tokenizer.tokenizer.decode(out_tokens[0])

    print(result)
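
The model only proposes a tool call; running it is up to the caller. The sketch below shows one way to dispatch it, assuming the decoded text is a JSON list of objects with `name` and `arguments` keys, optionally preceded by a `[TOOL_CALLS]` marker. The exact output format is an assumption, and `get_current_weather` here is a hypothetical stub, not a real weather lookup.

```python
import json


def get_current_weather(location: str, format: str) -> str:
    """Hypothetical stand-in for a real weather lookup."""
    return f"(stub) weather for {location} in {format}"


# Map the tool names declared in the request to local callables.
TOOLS = {"get_current_weather": get_current_weather}


def run_tool_calls(decoded: str) -> list:
    """Parse a decoded tool-call string and invoke the matching local functions."""
    text = decoded.strip()
    marker = "[TOOL_CALLS]"  # assumed optional prefix
    if text.startswith(marker):
        text = text[len(marker):].strip()
    results = []
    for call in json.loads(text):
        func = TOOLS[call["name"]]  # raises KeyError for an unknown tool name
        results.append(func(**call["arguments"]))
    return results


# Illustrative string in the assumed format, not captured model output.
sample = '[{"name": "get_current_weather", "arguments": {"location": "Paris, France", "format": "celsius"}}]'
print(run_tool_calls(sample))
```

In a real application the returned values would be sent back to the model as tool results so that it can produce the final answer.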

Hugging Face Transformers

    from transformers import LlamaTokenizerFast, MistralForCausalLM
    import torch

    device = "cuda"
    tokenizer = LlamaTokenizerFast.from_pretrained('mistralai/Mistral-Small-Instruct-2409')
    tokenizer.pad_token = tokenizer.eos_token

    model = MistralForCausalLM.from_pretrained('mistralai/Mistral-Small-Instruct-2409', torch_dtype=torch.bfloat16)
    model = model.to(device)

    prompt = "How often does the letter r occur in Mistral?"

    messages = [
        {"role": "user", "content": prompt},
    ]

    model_input = tokenizer.apply_chat_template(messages, tokenize=True, add_generation_prompt=True, return_tensors="pt").to(device)
    gen = model.generate(model_input, max_new_tokens=150)
    dec = tokenizer.batch_decode(gen)
    print(dec)

Output

    <s>
    [INST]
    How often does the letter r occur in Mistral?
    [/INST]
    To determine how often the letter "r" occurs in the word "Mistral,"
    we can simply count the instances of "r" in the word.
    The word "Mistral" is broken down as follows:
    - M
    - i
    - s
    - t
    - r
    - a
    - l
    Counting the "r"s, we find that there is only one "r" in "Mistral."
    Therefore, the letter "r" occurs once in the word "Mistral."
    </s>
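
Because the decoded text includes the prompt and the special tokens, it is often convenient to keep only the model's reply. A minimal sketch, assuming the `[INST] ... [/INST]` and `</s>` markers appear as in the output shown here; the helper name is made up for illustration.

```python
def extract_reply(decoded: str) -> str:
    """Return the text after the last [/INST] marker, without the closing </s>."""
    reply = decoded.split("[/INST]")[-1]
    return reply.replace("</s>", "").strip()


# Shortened example string in the same shape as the decoded output.
sample = (
    "<s>[INST] How often does the letter r occur in Mistral? [/INST] "
    'The letter "r" occurs once in the word "Mistral."</s>'
)
print(extract_reply(sample))
```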

It looks like Mistral is trying to use CoT (chain-of-thought) to fix the strawberry problem 🙂
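
For reference, the correct counts are trivial to check deterministically, which is what makes this a handy sanity test for a model's answer. The helper below is only an illustration.

```python
def count_letter(word: str, letter: str) -> int:
    """Count case-insensitive occurrences of `letter` in `word`."""
    return word.lower().count(letter.lower())


print(count_letter("Mistral", "r"))     # 1
print(count_letter("strawberry", "r"))  # 3
```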

References

https://mistral.ai/news/september-24-release/

https://artificialanalysis.ai/models/mistral-small

https://huggingface.co/mistralai/Mistral-Small-Instruct-2409