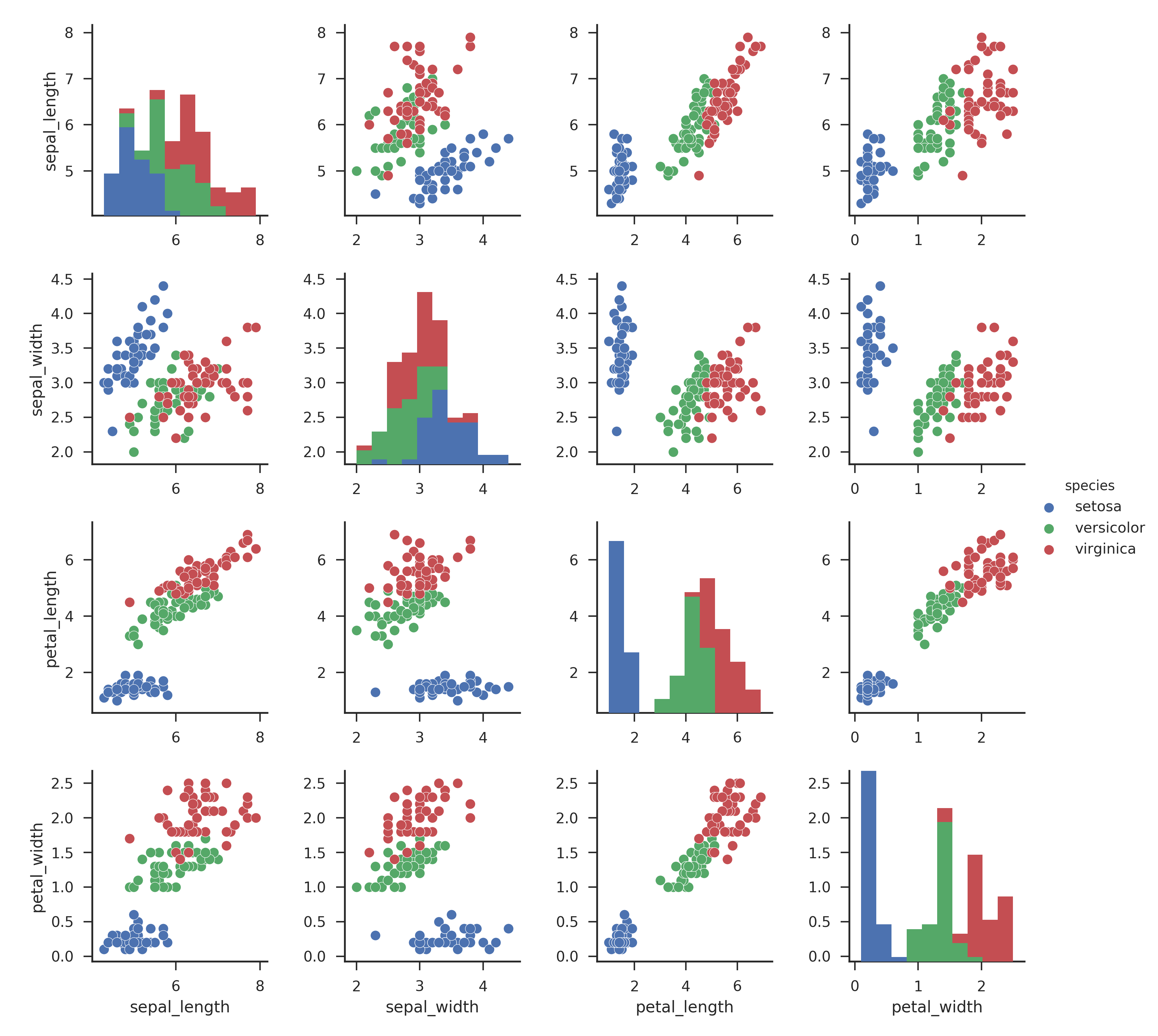

Bias and Variance

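- Problem
- Underfitting
- Improving underfitting
- Factors affecting bias and variance
- Fit on the training set
- Training and validation cost
- Choosing the optimal λ
- Full code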

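The data is split into three parts:

- Training set: used to train the model
- Validation set: used for model selection and final tuning of the model (e.g. choosing λ)
- Test set: used to measure how well the trained model generalizes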
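ex5data1.mat already ships the three sets separately (`X`, `Xval`, `Xtest`), so this exercise never has to split anything itself. If your own data comes as a single array, the sketch below shows one way to produce the three sets. The helper name `train_val_test_split` and the 60/20/20 ratios are assumptions (a common choice), not part of the exercise.

```python
import numpy as np

def train_val_test_split(x, y, train_frac=0.6, val_frac=0.2, seed=0):
    """Hypothetical helper: shuffle the rows, then cut them into train / validation / test.

    Whatever remains after the training and validation shares becomes the test set.
    """
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(x))            # a random order of the row indices
    n_train = round(train_frac * len(x))
    n_val = round(val_frac * len(x))
    train_idx = idx[:n_train]
    val_idx = idx[n_train:n_train + n_val]
    test_idx = idx[n_train + n_val:]
    return ((x[train_idx], y[train_idx]),
            (x[val_idx], y[val_idx]),
            (x[test_idx], y[test_idx]))

# Usage on 50 synthetic examples with a single feature
x = np.linspace(-1, 1, 50).reshape(-1, 1)
y = 3 * x + 1
(x_tr, y_tr), (x_va, y_va), (x_te, y_te) = train_val_test_split(x, y)
print(len(x_tr), len(x_va), len(x_te))  # 30 10 10
```

Shuffling before cutting matters when the rows are stored in some order (for example sorted by x); otherwise the three sets would cover different input ranges.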

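```python
import numpy as np
import scipy.io as sio

data = sio.loadmat("ex5data1.mat")  # adjust to the location of ex5data1.mat

# Training set
x_train, y_train = data['X'], data['y']
# Validation set
x_val, y_val = data['Xval'], data['yval']
print(x_val.shape, y_val.shape)
# Test set
x_test, y_test = data['Xtest'], data['ytest']
print(x_test.shape, y_test.shape)

# Prepend a column of ones (the bias term) to every feature matrix
x_train = np.insert(x_train, 0, 1, axis=1)
x_val = np.insert(x_val, 0, 1, axis=1)
x_test = np.insert(x_test, 0, 1, axis=1)
```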

Problem
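For reference, these are the standard regularized linear-regression cost and gradient that `reg_cost` and `reg_gradient` below compute, with $m$ examples, $x_0^{(i)} = 1$ as the bias feature, and the bias weight $\theta_0$ left out of the penalty:

$$
J(\theta)=\frac{1}{2m}\left[\sum_{i=1}^{m}\left(\theta^{T}x^{(i)}-y^{(i)}\right)^{2}+\lambda\sum_{j=1}^{n}\theta_j^{2}\right]
$$

$$
\frac{\partial J}{\partial \theta_0}=\frac{1}{m}\sum_{i=1}^{m}\left(\theta^{T}x^{(i)}-y^{(i)}\right)x_0^{(i)},
\qquad
\frac{\partial J}{\partial \theta_j}=\frac{1}{m}\sum_{i=1}^{m}\left(\theta^{T}x^{(i)}-y^{(i)}\right)x_j^{(i)}+\frac{\lambda}{m}\theta_j \quad (j\ge 1)
$$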

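```python
import numpy as np
from scipy.optimize import minimize

def reg_cost(theta, x, y, lamda):
    """Regularized linear-regression cost; x already contains the bias column."""
    cost = np.sum(np.power(x @ theta - y.flatten(), 2))
    reg = theta[1:] @ theta[1:] * lamda          # theta[0] (bias) is not regularized
    return (cost + reg) / (2 * len(x))

def reg_gradient(theta, x, y, lamda):
    """Gradient of reg_cost with respect to theta."""
    grad = (x @ theta - y.flatten()) @ x
    reg = lamda * theta
    reg[0] = 0                                   # no penalty on the bias term
    return (grad + reg) / len(x)

def train_mode(x, y, lamda):
    """Fit theta by minimizing reg_cost with SciPy's TNC optimizer, starting from all ones."""
    theta = np.ones(x.shape[1])
    res = minimize(
        fun=reg_cost,
        x0=theta,
        args=(x, y, lamda),
        method='TNC',
        jac=reg_gradient,
    )
    return res.x
```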
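Because `train_mode` hands `reg_gradient` to the optimizer as `jac`, a wrong gradient would silently spoil every later result. A quick safeguard is to compare it with a central finite-difference approximation of `reg_cost`. The sketch below does that on small random data; the helper `numerical_gradient` and the synthetic data are assumptions for illustration, while `reg_cost` and `reg_gradient` are copied from above.

```python
import numpy as np

def reg_cost(theta, x, y, lamda):
    cost = np.sum(np.power(x @ theta - y.flatten(), 2))
    reg = theta[1:] @ theta[1:] * lamda
    return (cost + reg) / (2 * len(x))

def reg_gradient(theta, x, y, lamda):
    grad = (x @ theta - y.flatten()) @ x
    reg = lamda * theta
    reg[0] = 0
    return (grad + reg) / len(x)

def numerical_gradient(f, theta, eps=1e-6):
    """Hypothetical helper: central differences (f(theta + eps*e_j) - f(theta - eps*e_j)) / (2*eps)."""
    grad = np.zeros_like(theta)
    for j in range(len(theta)):
        step = np.zeros_like(theta)
        step[j] = eps
        grad[j] = (f(theta + step) - f(theta - step)) / (2 * eps)
    return grad

rng = np.random.default_rng(0)
x = np.insert(rng.normal(size=(12, 1)), 0, 1, axis=1)   # 12 synthetic examples plus bias column
y = rng.normal(size=(12, 1))
theta = np.ones(x.shape[1])
lamda = 1.0

analytic = reg_gradient(theta, x, y, lamda)
numeric = numerical_gradient(lambda t: reg_cost(t, x, y, lamda), theta)
print(np.max(np.abs(analytic - numeric)))  # expected to be tiny, i.e. only rounding error
```

Since the cost is quadratic in θ, central differences are exact apart from floating-point rounding, so any visible mismatch points to a bug in the gradient.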

Underfitting

"""

训练样本从1开始递增进行训练

比较训练集和验证集上的损失函数的变化情况

"""

def plot_learning_curve(x_train,y_train,x_val,y_val,lamda):

x= range(1,len(x_train)+1)

training_cost =[]

cv_cost =[]

for i in x:

res = train_mode(x_train[:i,:],y_train[:i,:],lamda)

training_cost_i = reg_cost(res,x_train[:i,:],y_train[:i,:],lamda)

cv_cost_i = reg_cost(res,x_val,y_val,lamda)

training_cost.append(training_cost_i)

cv_cost.append(cv_cost_i)

plt.plot(x,training_cost,label = 'training cost')

plt.plot(x,cv_cost,label = 'cv cost')

plt.legend()

plt.xlabel("number of training examples")

plt.ylabel("error")

plt.show()
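`plot_learning_curve` needs ex5data1.mat and retrains with `minimize` at every step. To see the typical high-bias shape without the data file, the sketch below computes the same two curves on synthetic quadratic data fitted with a straight line. It is not the post's method: the helpers `fit_ridge`, `error`, `learning_curve` and `make_data` are hypothetical, and `fit_ridge` replaces `minimize` with a closed-form least-squares solve of the same regularized objective.

```python
import numpy as np

def fit_ridge(x, y, lamda):
    """Minimize ||x @ theta - y||^2 + lamda * ||theta[1:]||^2 in closed form.

    Written as an augmented least-squares problem so it also works when there are
    fewer examples than parameters (lstsq then returns the minimum-norm solution).
    """
    n = x.shape[1]
    penalty_rows = np.sqrt(lamda) * np.eye(n)[1:]   # one row per theta_1..theta_{n-1}; bias excluded
    a = np.vstack([x, penalty_rows])
    b = np.concatenate([y.flatten(), np.zeros(n - 1)])
    return np.linalg.lstsq(a, b, rcond=None)[0]

def error(theta, x, y):
    """Unregularized cost (1 / 2m) * sum of squared residuals."""
    r = x @ theta - y.flatten()
    return r @ r / (2 * len(x))

def learning_curve(x_train, y_train, x_val, y_val, lamda):
    train_err, val_err = [], []
    for i in range(1, len(x_train) + 1):
        theta = fit_ridge(x_train[:i], y_train[:i], lamda)
        train_err.append(error(theta, x_train[:i], y_train[:i]))  # the i examples used for fitting
        val_err.append(error(theta, x_val, y_val))                # the whole validation set
    return np.array(train_err), np.array(val_err)

rng = np.random.default_rng(0)

def make_data(n):
    """Synthetic quadratic data with a bias column, so a straight line underfits it."""
    raw = rng.uniform(-3, 3, size=(n, 1))
    y = raw ** 2 + rng.normal(scale=0.3, size=(n, 1))
    return np.insert(raw, 0, 1, axis=1), y

x_tr, y_tr = make_data(12)
x_va, y_va = make_data(21)
train_err, val_err = learning_curve(x_tr, y_tr, x_va, y_va, lamda=0)
print(np.round(train_err, 3))
print(np.round(val_err, 3))
```

With only two parameters (bias and slope), one or two examples are fitted exactly, so the training error starts at zero; as more examples arrive it rises, while the validation error stays high. Both curves ending up high and close together is the usual sign of high bias.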

Improving underfitting

Factors affecting bias and variance

"""

任务:构造多项式特征,进行多项式回归

"""

def poly_feature(x, power):

for i in range(2, power + 1):

x= np.insert(x, x.shape[1], np.power(x[:, 1], i), axis = 1)

return x

"""

归一化

"""

def get_means_stds(x):

means = np.mean(x, axis=0)

stds = np.std(x, axis=0)

return means, stds

def feature_normalize(x,means,stds):

x [:,1:]=(x[:,1:] - means[1:,])/stds[1:]

return x

power=6

x_train_poly=poly_feature(x_train,power)

x_val_poly=poly_feature(x_val,power)

x_test_poly=poly_feature(x_test,power)

train_means,train_stds=get_means_stds(x_train_poly)

x_train_norm=feature_normalize(x_train_poly,train_means,train_stds)

x_val_norm=feature_normalize(x_val_poly,train_means,train_stds)

x_test_norm=feature_normalize(x_test_poly,train_means,train_stds)

theta_fit=train_mode(x_train_norm,y_train,lamda =0)
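Normalization is not optional here: with inputs in the tens, the x^6 column is many orders of magnitude larger than the bias column, which makes the problem badly conditioned for the optimizer. The sketch below compares the condition number of a degree-6 feature matrix before and after standardization. The inputs are synthetic values chosen only to cover a range similar to the plotting grid used in this post; `poly_feature` is copied from above and `feature_normalize` uses the copying version.

```python
import numpy as np

def poly_feature(x, power):
    # column 0 = bias, column 1 = raw feature; append x^2 ... x^power
    for i in range(2, power + 1):
        x = np.insert(x, x.shape[1], np.power(x[:, 1], i), axis=1)
    return x

def feature_normalize(x, means, stds):
    x = x.copy()                                  # return a new array, leave the input alone
    x[:, 1:] = (x[:, 1:] - means[1:]) / stds[1:]  # the bias column (index 0) is not scaled
    return x

# Synthetic inputs between -50 and 50
raw = np.linspace(-50, 50, 12).reshape(-1, 1)
x_poly = poly_feature(np.insert(raw, 0, 1, axis=1), 6)

means, stds = x_poly.mean(axis=0), x_poly.std(axis=0)
x_norm = feature_normalize(x_poly, means, stds)

print(f"cond before: {np.linalg.cond(x_poly):.2e}")
print(f"cond after:  {np.linalg.cond(x_norm):.2e}")
print(x_norm[:, 1:].mean(axis=0).round(6))  # about 0 for every scaled column
print(x_norm[:, 1:].std(axis=0).round(6))   # 1 for every scaled column
```

The same `means` and `stds` must be reused for any other data (validation, test, or the plotting grid); recomputing them per set would put the sets on different scales.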

Fit on the training set

"""

训练集

绘制数据集和拟合函数

"""

def plot_poly_fit():

plot_data()

x = np.linspace(-60,60,100)

xx= x.reshape(100,1)

xx= np.insert(xx,0,1,axis=1)

xx= poly_feature(xx,power)

xx= feature_normalize(xx,train_means,train_stds)

plt.plot(x,xx@theta_fit,'r--')

Training and validation cost

```python
plot_learning_curve(x_train_norm, y_train, x_val_norm, y_val, lamda=0)
```

With λ = 0 no regularization is applied, and the learning curve shows high variance: the model overfits.

Adding regularization (λ = 1):

```python
plot_learning_curve(x_train_norm, y_train, x_val_norm, y_val, lamda=1)
```

Now the training error increases while the validation error decreases.

However, λ must not be too large. With λ = 100 the penalty dominates and the model underfits:

```python
plot_learning_curve(x_train_norm, y_train, x_val_norm, y_val, lamda=100)
```
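Why a large λ hurts can be seen directly in the weights: as λ grows, the penalized weights θ_1..θ_n shrink and the training error can only go up, until the model is close to the constant θ_0. The sketch below shows this on synthetic degree-6 data. It is an illustration under assumptions: the data is made up, and the hypothetical `fit_ridge` solves the same regularized objective in closed form instead of calling `minimize`.

```python
import numpy as np

def fit_ridge(x, y, lamda):
    """Closed-form minimizer of ||x @ theta - y||^2 + lamda * ||theta[1:]||^2 (bias not penalized)."""
    n = x.shape[1]
    a = np.vstack([x, np.sqrt(lamda) * np.eye(n)[1:]])
    b = np.concatenate([y.flatten(), np.zeros(n - 1)])
    return np.linalg.lstsq(a, b, rcond=None)[0]

rng = np.random.default_rng(1)
raw = rng.uniform(-1, 1, size=(12, 1))
x = np.hstack([raw ** p for p in range(7)])          # bias column + raw^1 ... raw^6
y = np.sin(3 * raw) + rng.normal(scale=0.1, size=(12, 1))

lamdas = [0, 0.01, 1, 100, 10000]
norms, train_err = [], []
for lamda in lamdas:
    theta = fit_ridge(x, y, lamda)
    r = x @ theta - y.flatten()
    norms.append(np.linalg.norm(theta[1:]))          # size of the penalized weights
    train_err.append(r @ r / (2 * len(x)))           # unregularized training error
    print(f"lamda={lamda:>6}: |theta[1:]|={norms[-1]:.4f}  training error={train_err[-1]:.4f}")
```

The monotonic trend is not specific to this data: for any λ₁ < λ₂, comparing the two optimal solutions shows the penalty term cannot grow and the data-fit term cannot shrink when λ increases.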

Choosing the optimal λ

```python
lamdas = [0, 0.001, 0.003, 0.01, 0.03, 0.1, 0.3, 1, 2, 3, 10]
training_cost = []
cv_cost = []
for lamda in lamdas:
    res = train_mode(x_train_norm, y_train, lamda)
    # Evaluate without the regularization term
    tc = reg_cost(res, x_train_norm, y_train, lamda=0)
    cv = reg_cost(res, x_val_norm, y_val, lamda=0)
    training_cost.append(tc)
    cv_cost.append(cv)

plt.plot(lamdas, training_cost, label="training cost")
plt.plot(lamdas, cv_cost, label="cv cost")
plt.xlabel("lambda")
plt.ylabel("error")
plt.legend()
plt.show()

best_lamda = lamdas[np.argmin(cv_cost)]  # the lambda with the lowest validation cost
print(best_lamda)

# Retrain with the chosen lambda and report the cost on the untouched test set
res = train_mode(x_train_norm, y_train, lamda=best_lamda)
test_cost = reg_cost(res, x_test_norm, y_test, lamda=0)
print(test_cost)
```
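The selection loop above can be tried end to end without the .mat file. The sketch below repeats the same protocol (fit on the training set for each λ, pick the λ with the lowest unregularized validation cost, report the test cost once) on synthetic data. The data generator `make_data` and the closed-form `fit_ridge` are hypothetical stand-ins for ex5data1.mat and `train_mode`.

```python
import numpy as np

def fit_ridge(x, y, lamda):
    """Closed-form minimizer of ||x @ theta - y||^2 + lamda * ||theta[1:]||^2 (bias not penalized)."""
    n = x.shape[1]
    a = np.vstack([x, np.sqrt(lamda) * np.eye(n)[1:]])
    b = np.concatenate([y.flatten(), np.zeros(n - 1)])
    return np.linalg.lstsq(a, b, rcond=None)[0]

def error(theta, x, y):
    """Unregularized cost, the analogue of reg_cost(..., lamda=0)."""
    r = x @ theta - y.flatten()
    return r @ r / (2 * len(x))

rng = np.random.default_rng(2)

def make_data(n, power=6):
    """Synthetic data: noisy sin(3x) with polynomial features up to x^power (column 0 is the bias)."""
    raw = rng.uniform(-1, 1, size=(n, 1))
    x = np.hstack([raw ** p for p in range(power + 1)])
    return x, np.sin(3 * raw) + rng.normal(scale=0.2, size=(n, 1))

x_tr, y_tr = make_data(15)
x_va, y_va = make_data(30)
x_te, y_te = make_data(30)

lamdas = [0, 0.001, 0.003, 0.01, 0.03, 0.1, 0.3, 1, 2, 3, 10]
cv_cost = [error(fit_ridge(x_tr, y_tr, lam), x_va, y_va) for lam in lamdas]
best_lamda = lamdas[int(np.argmin(cv_cost))]                      # chosen on the validation set only
test_cost = error(fit_ridge(x_tr, y_tr, best_lamda), x_te, y_te)  # test set used once, at the end
print(best_lamda, test_cost)
```

Keeping the test set out of the selection step is what makes `test_cost` an honest estimate of generalization; choosing λ by test cost would make that number optimistic.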

Full code

```python
import numpy as np
import matplotlib.pyplot as plt
import scipy.io as sio
from scipy.optimize import minimize


def plot_data():
    """Scatter plot of the training data."""
    fig, ax = plt.subplots()
    ax.scatter(x_train[:, 1], y_train)
    ax.set(xlabel="change in water level (x)",
           ylabel="water flowing out of the dam (y)")


def reg_cost(theta, x, y, lamda):
    """Regularized linear-regression cost; theta[0] (bias) is not regularized."""
    cost = np.sum(np.power(x @ theta - y.flatten(), 2))
    reg = theta[1:] @ theta[1:] * lamda
    return (cost + reg) / (2 * len(x))


def reg_gradient(theta, x, y, lamda):
    """Gradient of reg_cost."""
    grad = (x @ theta - y.flatten()) @ x
    reg = lamda * theta
    reg[0] = 0
    return (grad + reg) / len(x)


def train_mode(x, y, lamda):
    """Minimize reg_cost with the TNC optimizer, starting from theta = ones."""
    theta = np.ones(x.shape[1])
    res = minimize(
        fun=reg_cost,
        x0=theta,
        args=(x, y, lamda),
        method='TNC',
        jac=reg_gradient,
    )
    return res.x


def plot_learning_curve(x_train, y_train, x_val, y_val, lamda):
    """
    Train on the first 1, 2, ..., m training examples and compare how the cost
    on the training subset and on the validation set changes.
    """
    x = range(1, len(x_train) + 1)
    training_cost = []
    cv_cost = []
    for i in x:
        res = train_mode(x_train[:i, :], y_train[:i, :], lamda)
        # errors are measured without the regularization term
        training_cost.append(reg_cost(res, x_train[:i, :], y_train[:i, :], 0))
        cv_cost.append(reg_cost(res, x_val, y_val, 0))
    plt.plot(x, training_cost, label='training cost')
    plt.plot(x, cv_cost, label='cv cost')
    plt.legend()
    plt.xlabel("number of training examples")
    plt.ylabel("error")
    plt.show()


# Task: build polynomial features and fit a polynomial regression model
def poly_feature(x, power):
    """Append x^2, ..., x^power as new columns; column 1 holds the raw feature."""
    for i in range(2, power + 1):
        x = np.insert(x, x.shape[1], np.power(x[:, 1], i), axis=1)
    return x


# Normalization
def get_means_stds(x):
    means = np.mean(x, axis=0)
    stds = np.std(x, axis=0)
    return means, stds


def feature_normalize(x, means, stds):
    """Standardize every column except the bias column; returns a new array."""
    x = x.copy()
    x[:, 1:] = (x[:, 1:] - means[1:]) / stds[1:]
    return x


def plot_poly_fit():
    """Training set: plot the data together with the fitted polynomial."""
    plot_data()
    x = np.linspace(-60, 60, 100)
    xx = x.reshape(100, 1)
    xx = np.insert(xx, 0, 1, axis=1)
    xx = poly_feature(xx, power)
    xx = feature_normalize(xx, train_means, train_stds)
    plt.plot(x, xx @ theta_fit, 'r--')


# adjust this path to your copy of ex5data1.mat
data = sio.loadmat("E:/学习/研究生阶段/python-learning/吴恩达机器学习课后作业/code/ex5-bias vs variance/ex5data1.mat")

# Training set
x_train, y_train = data['X'], data['y']
# Validation set
x_val, y_val = data['Xval'], data['yval']
print(x_val.shape, y_val.shape)
# Test set
x_test, y_test = data['Xtest'], data['ytest']
print(x_test.shape, y_test.shape)

# Add the bias column (all ones) to every feature matrix
x_train = np.insert(x_train, 0, 1, axis=1)
x_val = np.insert(x_val, 0, 1, axis=1)
x_test = np.insert(x_test, 0, 1, axis=1)

# Exploratory steps (uncomment to run)
# plot_data()
theta = np.ones(x_train.shape[1])
lamda = 1
# print(reg_cost(theta, x_train, y_train, lamda))
# print(reg_gradient(theta, x_train, y_train, lamda))
# theta_final = train_mode(x_train, y_train, lamda=0)
# plot_data()
# plt.plot(x_train[:, 1], x_train @ theta_final, c='r')
# plt.show()
# plot_learning_curve(x_train, y_train, x_val, y_val, lamda)

power = 6
x_train_poly = poly_feature(x_train, power)
x_val_poly = poly_feature(x_val, power)
x_test_poly = poly_feature(x_test, power)

train_means, train_stds = get_means_stds(x_train_poly)
x_train_norm = feature_normalize(x_train_poly, train_means, train_stds)
x_val_norm = feature_normalize(x_val_poly, train_means, train_stds)
x_test_norm = feature_normalize(x_test_poly, train_means, train_stds)

theta_fit = train_mode(x_train_norm, y_train, lamda=0)

# plot_poly_fit()
# plot_learning_curve(x_train_norm, y_train, x_val_norm, y_val, lamda=100)

lamdas = [0, 0.001, 0.003, 0.01, 0.03, 0.1, 0.3, 1, 2, 3, 10]
training_cost = []
cv_cost = []
for lamda in lamdas:
    res = train_mode(x_train_norm, y_train, lamda)
    tc = reg_cost(res, x_train_norm, y_train, lamda=0)
    cv = reg_cost(res, x_val_norm, y_val, lamda=0)
    training_cost.append(tc)
    cv_cost.append(cv)

plt.plot(lamdas, training_cost, label="training cost")
plt.plot(lamdas, cv_cost, label="cv cost")
plt.xlabel("lambda")
plt.ylabel("error")
plt.legend()
plt.show()

best_lamda = lamdas[np.argmin(cv_cost)]  # the lambda with the lowest validation cost
print(best_lamda)

res = train_mode(x_train_norm, y_train, lamda=best_lamda)
test_cost = reg_cost(res, x_test_norm, y_test, lamda=0)
print(test_cost)
```