1. Scrapy

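Scrapy is an application framework for crawling websites and extracting structured data from them. It can be used in a wide range of programs, such as data mining, information processing, or archiving historical data.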

2. Creating and running a Scrapy project

1. Create a Scrapy project: in a terminal, run scrapy startproject project_name

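2. Project layout:

- spiders
  - `__init__.py`
  - your_spider.py (your custom spider file) ---> created by you; this file implements the spider's core logic
- `__init__.py`
- items.py ---> where the data structure is defined; a class that inherits from scrapy.Item
- middlewares.py ---> middlewares, e.g. for proxies
- pipelines.py ---> the pipeline file; by default it contains a single class that post-processes the downloaded data. The default priority is 300; a smaller value means a higher priority (the pipeline runs earlier), and values are conventionally kept within 0-1000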

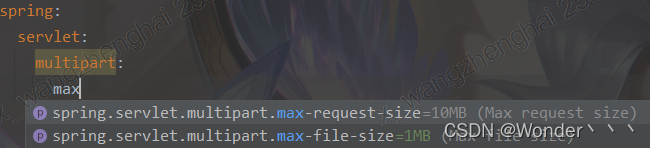

- settings.py ---> the configuration file, e.g. whether to obey robots.txt, the User-Agent definition, and so on
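As a rough illustration of the priority rule, the sketch below orders a pipeline mapping the way Scrapy runs item pipelines: an item visits the lowest value first. The dict mirrors the ITEM_PIPELINES setting in the settings.py shown later; `pipeline_order` is a hypothetical helper written for this illustration, not part of Scrapy.

```python
# Mirrors the ITEM_PIPELINES setting used in the Dangdang example later on.
ITEM_PIPELINES = {
    "sccrapy_dangdang_095.pipelines.DangDangDownloadPipeline": 301,
    "sccrapy_dangdang_095.pipelines.SccrapyDangdang095Pipeline": 300,
}


def pipeline_order(pipelines):
    """Hypothetical helper: return pipeline paths in the order an item visits them.

    Smaller priority values run earlier, so we sort ascending by value.
    """
    return [path for path, _priority in sorted(pipelines.items(), key=lambda kv: kv[1])]


if __name__ == "__main__":
    for step, path in enumerate(pipeline_order(ITEM_PIPELINES), start=1):
        print(step, path)
```

Here the JSON-writing pipeline (300) sees each item before the image-download pipeline (301).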

3. Creating a spider file:


(1) Change into the spiders folder: cd project_name/project_name/spiders

(2) scrapy genspider spider_name domain_of_the_site

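Basic components of a spider file:

- inherits from the scrapy.Spider class

- name = 'baidu' ---> the name used when running the spider

- allowed_domains ---> the domains the spider may visit; while crawling, URLs outside these domains are filtered out. List bare domain names (e.g. www.baidu.com), not full URLs

- start_urls ---> the spider's starting addresses; it can hold several URLs, but usually holds one

- response.text ---> the response body as a string

- response.body ---> the response body as raw bytes

- response.xpath() ---> returns a list of selectors (a SelectorList)

- extract() ---> extracts the data of the selector objects as a list of strings

- extract_first() ---> extracts the data of the first selector in the list (None if the list is empty)

- parse(self, response) ---> the callback that parses the response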
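To see why allowed_domains should hold bare domain names, here is a simplified sketch of the offsite check: a request's host is allowed if it equals an allowed domain or is a subdomain of one. This is an approximation written for illustration (`is_allowed` is a hypothetical name); Scrapy's own offsite middleware builds a regular expression from the domains and also handles ports, and recent versions warn about URL entries, so details may differ.

```python
from urllib.parse import urlparse


def is_allowed(url, allowed_domains):
    """Simplified offsite check: the host must equal a domain or be a subdomain of it."""
    host = (urlparse(url).hostname or "").lower()
    for domain in allowed_domains:
        domain = domain.lower()
        if host == domain or host.endswith("." + domain):
            return True
    return False


if __name__ == "__main__":
    domains = ["category.dangdang.com"]
    # Follow-up list page on the same host: kept.
    print(is_allowed("http://category.dangdang.com/pg2-cp01.01.02.00.00.00.html", domains))
    # Different subdomain: filtered out.
    print(is_allowed("http://e.dangdang.com/list-AQQG-dd_sale-0-1.html", domains))
    # A full URL in allowed_domains never equals a host, so the request is filtered.
    print(is_allowed("http://www.baidu.com/", ["http://www.baidu.com"]))
```

Requests generated from start_urls are typically created with dont_filter=True and therefore are not dropped, which may be why single-page spiders still run with a URL in allowed_domains; follow-up requests, such as the pagination in dang.py, would be filtered.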

Running a spider:

scrapy crawl spider_name  Note: run it inside the project directory, for example in the spiders folder
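The command works from the spiders folder (or anywhere else inside the project) because Scrapy locates the project by looking for scrapy.cfg in the current directory and then in each parent directory. The sketch below re-implements that upward search with pathlib for illustration only; `find_scrapy_cfg` is a hypothetical name, and Scrapy's real logic also checks environment settings.

```python
from pathlib import Path


def find_scrapy_cfg(start):
    """Walk from `start` up to the filesystem root; return the first scrapy.cfg found, or None."""
    current = Path(start).resolve()
    for directory in (current, *current.parents):
        candidate = directory / "scrapy.cfg"
        if candidate.is_file():
            return candidate
    return None


if __name__ == "__main__":
    cfg = find_scrapy_cfg(Path.cwd())
    print(cfg if cfg else "not inside a Scrapy project")
```

Since scrapy startproject puts scrapy.cfg in the outer project folder, the search succeeds from project_name/, project_name/project_name/ and project_name/project_name/spiders alike.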

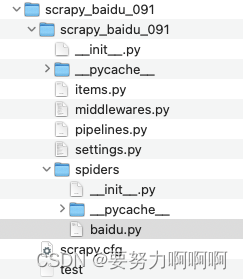

4. Simple example

4.1 File structure

4.2 Code

import scrapy


class BaiduSpider(scrapy.Spider):
    # The spider's name; this is the value used when running the spider
    name = 'baidu'

    # Allowed domains: a bare domain name, not a URL
    allowed_domains = ['www.baidu.com']

    # Start URLs: the first addresses to request.
    # genspider builds start_urls by adding http:// in front of
    # the allowed domain and / at the end
    start_urls = ['http://www.baidu.com/']

    # Called after the start_urls have been downloaded; response is the
    # returned response object, roughly equivalent to
    # response = urllib.request.urlopen()
    # response = requests.get()
    def parse(self, response):
        print('苍茫的天涯是我的爱')

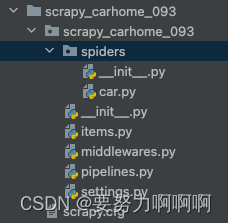

5. Simple example

5.1 File structure

import scrapy


class CarSpider(scrapy.Spider):
    name = 'car'
    # A bare domain name, not a URL
    allowed_domains = ['car.autohome.com.cn']
    # The URL ends in .html, so no trailing / is added
    start_urls = ['https://car.autohome.com.cn/price/brand-15.html']

    def parse(self, response):
        # //div[@class="main-title"]/a/text()
        # //div[@class="main-lever"]//span/span/text()
        name_list = response.xpath('//div[@class="main-title"]/a/text()')
        price_list = response.xpath('//div[@class="main-lever"]//span/span/text()')

        print(name_list)  # prints a list of Selector objects:
        # [<Selector xpath='//div[@class="main-title"]/a/text()' data='宝马1系'>,
        #  <Selector xpath='//div[@class="main-title"]/a/text()' data='宝马3系'>,
        #  <Selector xpath='//div[@class="main-title"]/a/text()' data='宝马i3'>,
        #  <Selector xpath='//div[@class="main-title"]/a/text()' data='宝马5系'>,
        #  <Selector xpath='//div[@class="main-title"]/a/text()' data='宝马5系新能源'>,
        #  <Selector xpath='//div[@class="main-title"]/a/text()' data='宝马X1'>,
        #  <Selector xpath='//div[@class="main-title"]/a/text()' data='宝马X2'>,
        #  <Selector xpath='//div[@class="main-title"]/a/text()' data='宝马iX3'>,
        #  <Selector xpath='//div[@class="main-title"]/a/text()' data='宝马X3'>,
        #  <Selector xpath='//div[@class="main-title"]/a/text()' data='宝马X5'>,
        #  <Selector xpath='//div[@class="main-title"]/a/text()' data='宝马2系'>,
        #  <Selector xpath='//div[@class="main-title"]/a/text()' data='宝马4系'>,
        #  <Selector xpath='//div[@class="main-title"]/a/text()' data='宝马i4'>,
        #  <Selector xpath='//div[@class="main-title"]/a/text()' data='宝马5系(进口)'>,
        #  <Selector xpath='//div[@class="main-title"]/a/text()' data='宝马6系GT'>]

        # Calling extract() on each selector prints the value of its data:
        # 宝马1系
        # 宝马3系
        # 宝马i3
        # 宝马5系
        # 宝马5系新能源
        # 宝马X1
        # 宝马X2
        # 宝马iX3
        # 宝马X3
        # 宝马X5
        # 宝马2系
        # 宝马4系
        # 宝马i4
        # 宝马5系(进口)
        for name in name_list:
            print(name.extract())

        print('============')
        # extract_first() returns the data of the first Selector only
        print(price_list.extract_first())

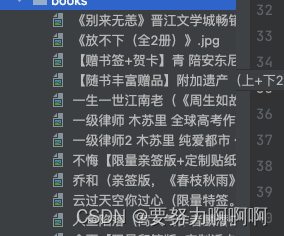

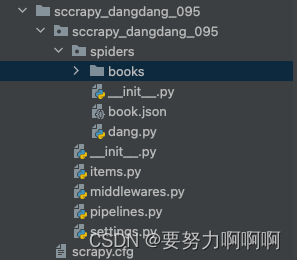
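To make the difference between extract() and extract_first() concrete without a network request, the sketch below uses the standard library's xml.etree.ElementTree on a small hand-written fragment (an assumption; it is not the real Autohome page). Collecting every match plays the role of extract(), and taking the first match or None mirrors extract_first(), which returns None when nothing matches. ElementTree supports only a subset of XPath and has no text() step, so this is an analogy, not Scrapy's selector API; the helper names are hypothetical.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified fragment shaped like the car list page.
HTML = """
<div>
  <div class="main-title"><a>宝马1系</a></div>
  <div class="main-title"><a>宝马3系</a></div>
</div>
"""


def extract_all(root, path):
    """Rough analogue of SelectorList.extract(): the text of every match, as a list."""
    return [el.text for el in root.findall(path)]


def extract_first(root, path, default=None):
    """Rough analogue of SelectorList.extract_first(): the first match, or `default`."""
    values = extract_all(root, path)
    return values[0] if values else default


root = ET.fromstring(HTML.strip())
print(extract_all(root, ".//div[@class='main-title']/a"))        # every name
print(extract_first(root, ".//div[@class='main-title']/a"))      # only the first name
print(extract_first(root, ".//div[@class='main-lever']/span"))   # no match, so None
```

The None result is what makes the `if not src:` fallback in dang.py below work.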

6. Complete example

6.1 Code structure

6.2 Code

dang.py

import scrapy

from sccrapy_dangdang_095.items import SccrapyDangdang095Item


class DangSpider(scrapy.Spider):
    name = 'dang'
    # allowed_domains = ['http://e.dangdang.com/list-AQQG-dd_sale-0-1.html']
    # start_urls = ['http://e.dangdang.com/list-AQQG-dd_sale-0-1.html']  # the URL ends in .html, so no trailing / is added
    # allowed_domains must hold bare domain names, not URLs
    allowed_domains = ['category.dangdang.com']
    start_urls = ['http://category.dangdang.com/cp01.01.02.00.00.00.html']

    base_url = 'http://category.dangdang.com/pg'
    page = 1

    def parse(self, response):
        # //div[@class="title"]/text()
        # //div[@class="price"]/span/text()
        # pipelines: download/save the data
        # items: define the data structure
        # Every Selector object can call the xpath method again
        li_list = response.xpath('//ul[@id="component_59"]/li')

        for li in li_list:
            # Each li contains a single image. Lazy-loaded images keep the real
            # address in data-original; the first image is not lazy-loaded,
            # so fall back to its src attribute
            src = li.xpath('.//img/@data-original').extract_first()
            if not src:
                src = li.xpath('.//img/@src').extract_first()
            name = li.xpath('.//img/@alt').extract_first()  # the book title
            price = li.xpath('.//p[@class="price"]/span[1]/text()').extract_first()  # the price inside the p tag

            book = SccrapyDangdang095Item(src=src, name=name, price=price)
            # Every book obtained is handed to the pipelines
            yield book

        # Request the next list page, up to page 100
        if self.page < 100:
            self.page = self.page + 1
            url = self.base_url + str(self.page) + '-cp01.01.02.00.00.00.html'
            # How to call parse again:
            # scrapy.Request is Scrapy's GET request
            # url is the address to request
            # callback is the function to run; pass it without ()
            yield scrapy.Request(url=url, callback=self.parse)

        print('============')
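The paging logic in parse builds each list-page URL from base_url, the page number and a fixed suffix, and stops after page 100. A small standalone sketch of that URL scheme, assuming the same base_url and suffix as dang.py, lets you check the generated addresses before crawling; `page_urls` is a hypothetical helper.

```python
BASE_URL = "http://category.dangdang.com/pg"
SUFFIX = "-cp01.01.02.00.00.00.html"


def page_urls(last_page=100, first_page=2):
    """Return the list-page URLs the spider requests after the start URL.

    Page 1 is start_urls itself; each parse() call then requests the next
    page, so the spider visits pages first_page..last_page.
    """
    return [BASE_URL + str(page) + SUFFIX for page in range(first_page, last_page + 1)]


if __name__ == "__main__":
    urls = page_urls()
    print(len(urls))
    print(urls[0])
    print(urls[-1])
```

Because each parse call schedules only the next page, the pages are requested one after another. A possible alternative is to yield all page requests from the first response so Scrapy can fetch them concurrently.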

items.py

# Define here the models for your scraped items
#
# See documentation in:
# https://docs.scrapy.org/en/latest/topics/items.html

import scrapy


class SccrapyDangdang095Item(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    # Put simply: the fields you want to download

    # image
    src = scrapy.Field()
    # name
    name = scrapy.Field()
    # price
    price = scrapy.Field()

pipelines.py

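# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html

import json
import os
import urllib.request

# useful for handling different item types with a single interface
from itemadapter import ItemAdapter


# To use a pipeline, it must be enabled in settings (ITEM_PIPELINES)
class SccrapyDangdang095Pipeline:
    # Runs once before the spider starts
    def open_spider(self, spider):
        self.fp = open('book.json', 'w', encoding='utf-8')

    # item is the book object yielded by the spider
    def process_item(self, item, spider):
        # Write one JSON object per line; str(item) would not produce valid JSON
        self.fp.write(json.dumps(ItemAdapter(item).asdict(), ensure_ascii=False) + '\n')
        return item

    # Runs once after the spider finishes
    def close_spider(self, spider):
        self.fp.close()


# Enabling multiple pipelines:
# (1) define the pipeline class
# (2) enable it in settings:
#     'sccrapy_dangdang_095.pipelines.DangDangDownloadPipeline': 301
class DangDangDownloadPipeline:
    def open_spider(self, spider):
        # urlretrieve does not create directories, so make sure ./books exists
        os.makedirs('./books', exist_ok=True)

    def process_item(self, item, spider):
        # The src values on this page start with //, so prepend the scheme
        url = 'http:' + item.get('src')
        filename = './books/' + item.get('name') + '.jpg'
        urllib.request.urlretrieve(url=url, filename=filename)
        return item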
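DangDangDownloadPipeline builds the image URL by prepending 'http:' and uses the book title directly as a file name. Two things can go wrong: a src that is already absolute would become 'http:http://...', and titles containing characters such as / or : produce invalid paths. The sketch below is one possible hardening, assuming the start_urls page as the base URL: urllib.parse.urljoin resolves protocol-relative (//host/...) and absolute src values alike, and a regular expression replaces characters that are unsafe in file names. The helper names and the example image URL are hypothetical.

```python
import os
import re
from urllib.parse import urljoin

PAGE_URL = "http://category.dangdang.com/cp01.01.02.00.00.00.html"

# Characters that are invalid in Windows file names, which also covers '/' on POSIX.
_UNSAFE = re.compile(r'[\\/:*?"<>|]')


def image_url(src, page_url=PAGE_URL):
    """Resolve a possibly protocol-relative src against the listing page URL."""
    return urljoin(page_url, src)


def image_path(name, folder="./books"):
    """Build a file path from a book title, replacing unsafe characters with '_'."""
    safe = _UNSAFE.sub("_", name).strip() or "untitled"
    return os.path.join(folder, safe + ".jpg")


if __name__ == "__main__":
    print(image_url("//img.example.com/cover.jpg"))
    print(image_path("Python: A/B Guide"))
```

Inside process_item, these helpers could replace the two string concatenations, e.g. url = image_url(item.get('src')) and filename = image_path(item.get('name')).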

settings.py

# Scrapy settings for sccrapy_dangdang_095 project

#

# For simplicity, this file contains only settings considered important or

# commonly used. You can find more settings consulting the documentation:

#

# https://docs.scrapy.org/en/latest/topics/settings.html

# https://docs.scrapy.org/en/latest/topics/downloader-middleware.html

# https://docs.scrapy.org/en/latest/topics/spider-middleware.html

BOT_NAME = 'sccrapy_dangdang_095'

SPIDER_MODULES = ['sccrapy_dangdang_095.spiders']

NEWSPIDER_MODULE = 'sccrapy_dangdang_095.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent

#USER_AGENT = 'sccrapy_dangdang_095 (+http://www.yourdomain.com)'

# Obey robots.txt rules

ROBOTSTXT_OBEY = True

# Configure maximum concurrent requests performed by Scrapy (default: 16)

#CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)

# See https://docs.scrapy.org/en/latest/topics/settings.html#download-delay

# See also autothrottle settings and docs

#DOWNLOAD_DELAY = 3

# The download delay setting will honor only one of:

#CONCURRENT_REQUESTS_PER_DOMAIN = 16

#CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)

#COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)

#TELNETCONSOLE_ENABLED = False

# Override the default request headers:

#DEFAULT_REQUEST_HEADERS = {

# 'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',

# 'Accept-Language': 'en',

#}

# Enable or disable spider middlewares

# See https://docs.scrapy.org/en/latest/topics/spider-middleware.html

#SPIDER_MIDDLEWARES = {

# 'sccrapy_dangdang_095.middlewares.SccrapyDangdang095SpiderMiddleware': 543,

#}

# Enable or disable downloader middlewares

# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html

#DOWNLOADER_MIDDLEWARES = {

# 'sccrapy_dangdang_095.middlewares.SccrapyDangdang095DownloaderMiddleware': 543,

#}

# Enable or disable extensions

# See https://docs.scrapy.org/en/latest/topics/extensions.html

#EXTENSIONS = {

# 'scrapy.extensions.telnet.TelnetConsole': None,

#}

# Configure item pipelines

# See https://docs.scrapy.org/en/latest/topics/item-pipeline.html

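ITEM_PIPELINES = {
    # There can be many pipelines, and they have priorities: values are conventionally
    # kept within 0-1000, and a smaller value means a higher priority (runs earlier)
    'sccrapy_dangdang_095.pipelines.SccrapyDangdang095Pipeline': 300,
    # DangDangDownloadPipeline
    'sccrapy_dangdang_095.pipelines.DangDangDownloadPipeline': 301,
}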

# Enable and configure the AutoThrottle extension (disabled by default)

# See https://docs.scrapy.org/en/latest/topics/autothrottle.html

#AUTOTHROTTLE_ENABLED = True

# The initial download delay

#AUTOTHROTTLE_START_DELAY = 5

# The maximum download delay to be set in case of high latencies

#AUTOTHROTTLE_MAX_DELAY = 60

# The average number of requests Scrapy should be sending in parallel to

# each remote server

#AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0

# Enable showing throttling stats for every response received:

#AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)

# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings

#HTTPCACHE_ENABLED = True

#HTTPCACHE_EXPIRATION_SECS = 0

#HTTPCACHE_DIR = 'httpcache'

#HTTPCACHE_IGNORE_HTTP_CODES = []

#HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'

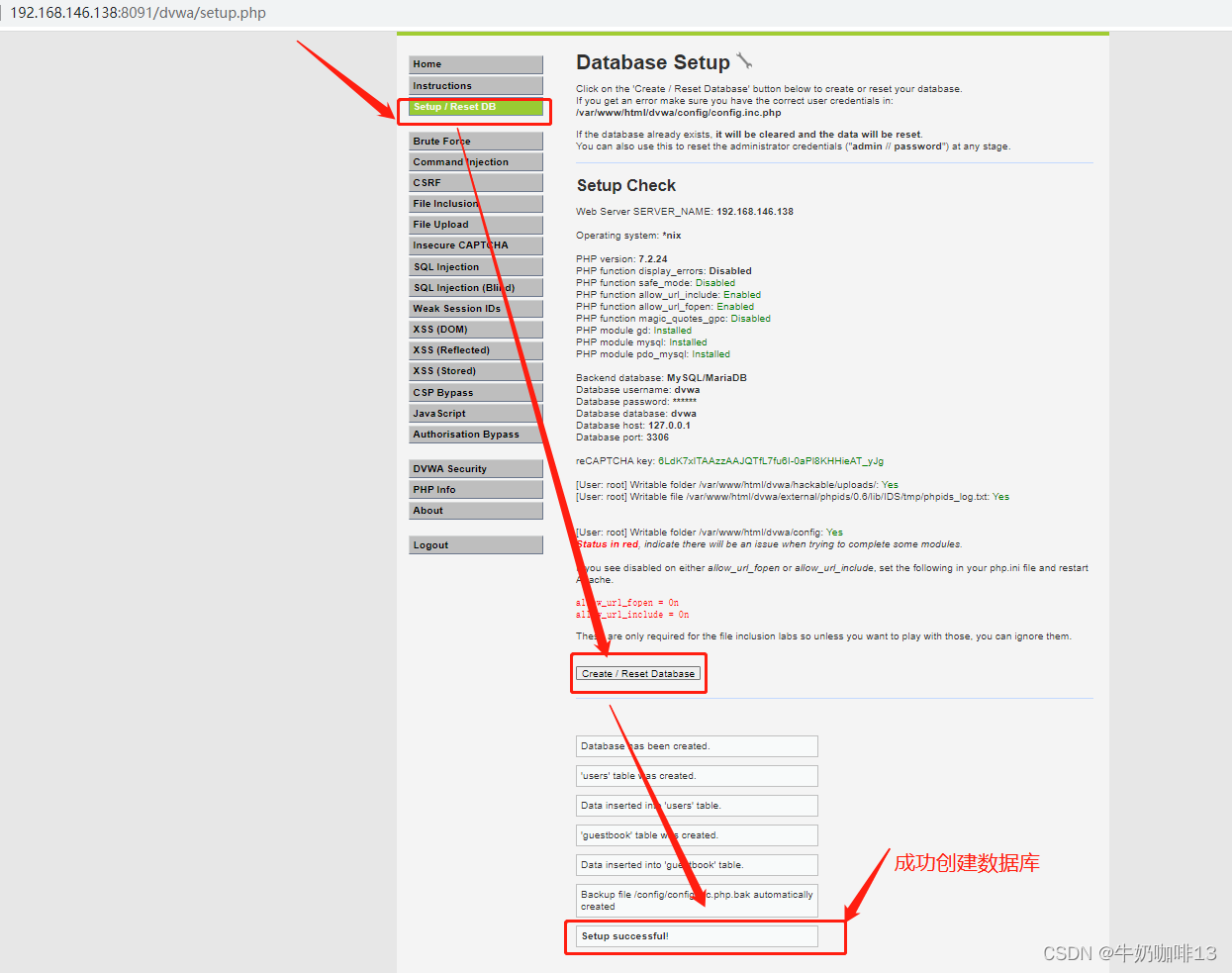

Download succeeded.