线性CNN模型的结构与训练

- 引入包

- LeNet

- 模型结构

- 模型构建

- AlexNet

- 模型结构

- 模型构建

- VGG

- 模型结构

- 模型构建

- 加载数据集

- 累加器

- 精度

- 训练

引入包

import torch

from torch import nn

from torchvision import datasets

from torchvision import transforms

from torch.utils.data import DataLoader

from tqdm import tqdm

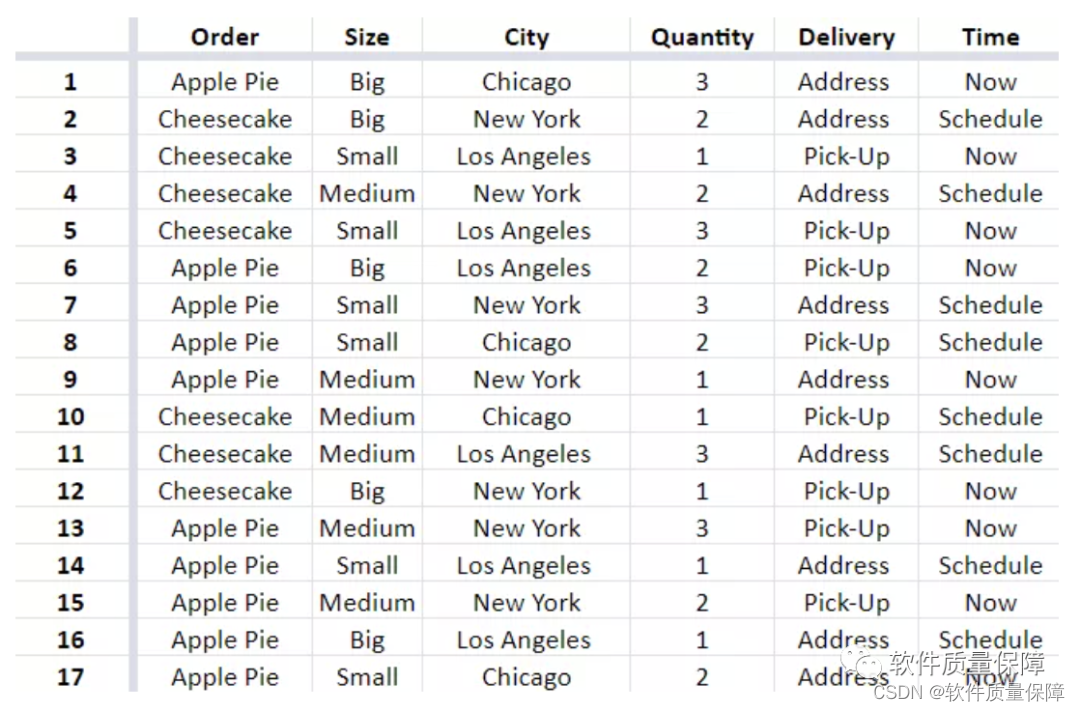

LeNet

LeNet是一个较为简单的线性卷积模型,最早是应用于手写数字识别,提到手写数字识别就不得不提到mnist数据集,而为了与后续其他较强的模型进行对比,我们采用fashion_mnist数据集为例。

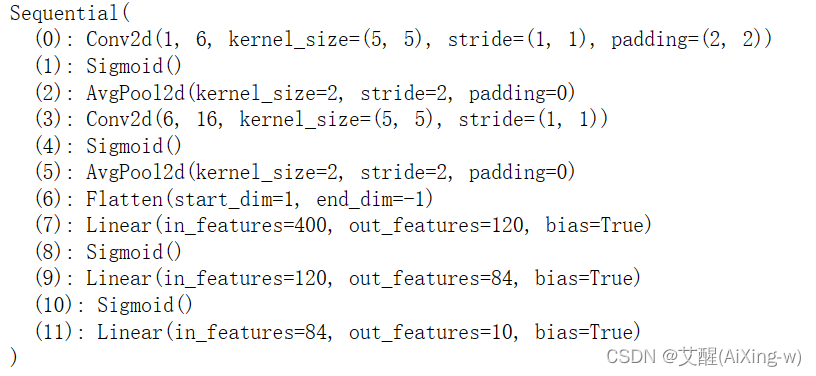

模型结构

模型结构如下

模型构建

因为LeNet是线性模型,所以我们直接使用torch.nn.Sequential构建模型

net = nn.Sequential(nn.Conv2d(1, 6, kernel_size=5, padding=2), nn.Sigmoid(), # (1, 28, 28)->(6, 28, 28)

nn.AvgPool2d(kernel_size=2, stride=2), # (6, 28, 28)->(6, 14, 14)

nn.Conv2d(6, 16, kernel_size=5), nn.Sigmoid(), # (6, 14, 14)->(16, 10, 10)

nn.AvgPool2d(kernel_size=2, stride=2), # (16, 10, 10)->(16, 5, 5)

nn.Flatten(),

nn.Linear(16 * 5 * 5, 120), nn.Sigmoid(), # (16 * 5 * 5, 120)

nn.Linear(120, 84), nn.Sigmoid(), # (120, 84)

nn.Linear(84, 10)

)

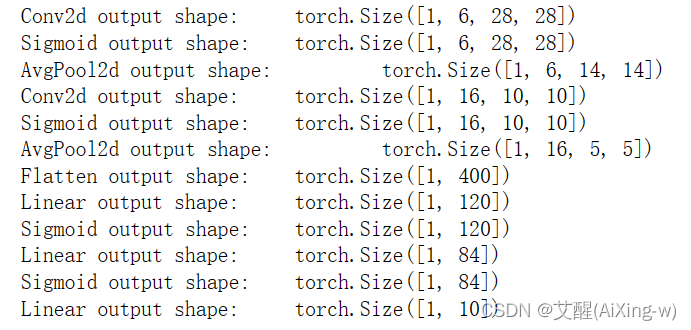

我们先构建一个伪数据输入模型,来观察它的每一层的结构

X= torch.randn((1, 1, 28, 28))

for layer in net:

X = layer(X)

print(layer.__class__.__name__,'output shape: \t',X.shape)

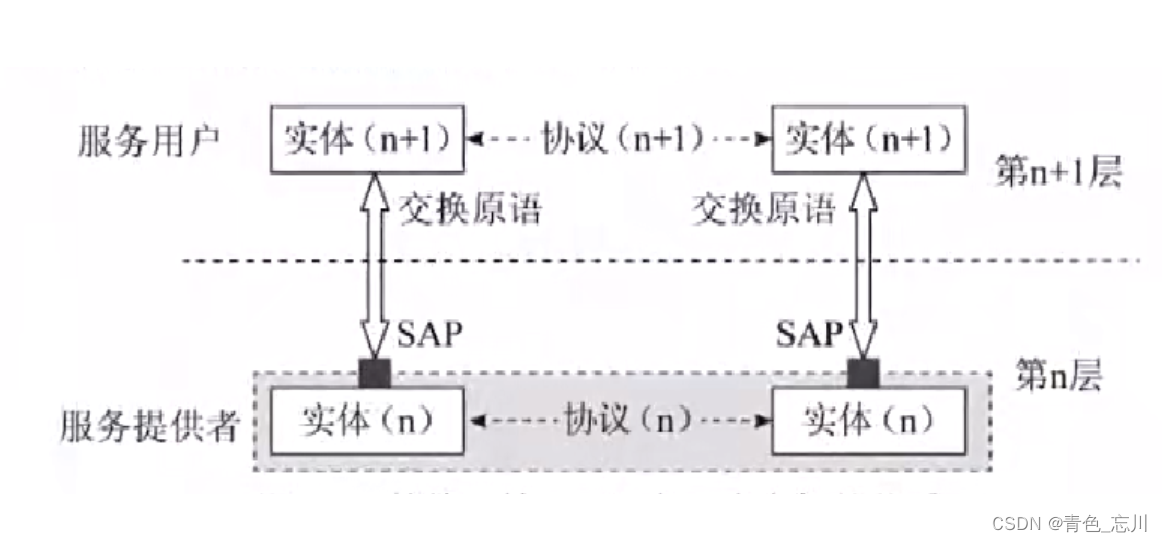

AlexNet

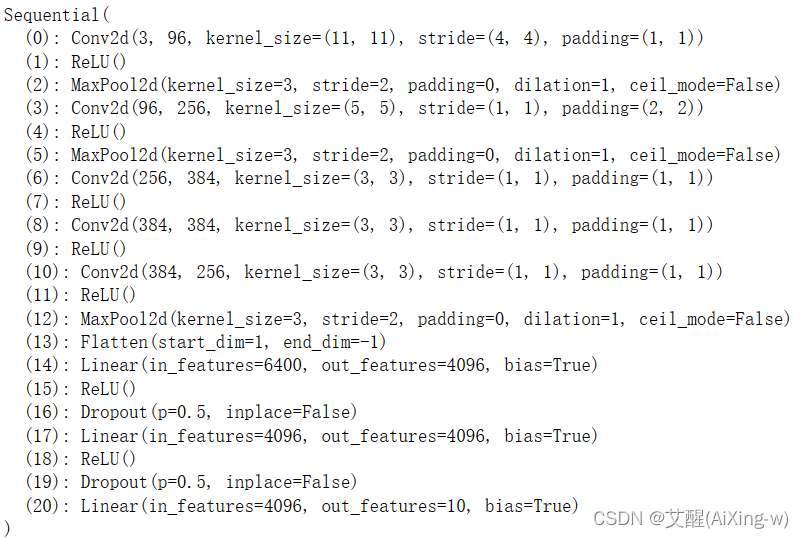

模型结构

AlexNet也是一个线性模型,相当于算是LeNet的加强版,模型结构如下

模型构建

net = nn.Sequential(nn.Conv2d(3, 96, kernel_size=11, stride=4, padding=1),nn.ReLU(), # (3, 224, 224)->(96, 54, 54)

nn.MaxPool2d(kernel_size=3, stride=2), # (96, 26, 26)

nn.Conv2d(96, 256, kernel_size=5, padding=2), nn.ReLU(), # (256, 26, 26)

nn.MaxPool2d(kernel_size=3, stride=2), # (256, 12, 12)

nn.Conv2d(256, 384, kernel_size=3, padding=1), nn.ReLU(), # (384, 12, 12)

nn.Conv2d(384, 384, kernel_size=3, padding=1), nn.ReLU(), # (384, 12, 12)

nn.Conv2d(384, 256, kernel_size=3, padding=1), nn.ReLU(), # (256, 12, 12)

nn.MaxPool2d(kernel_size=3, stride=2), # (256, 5, 5)

nn.Flatten(), # 256 * 5 * 5

nn.Linear(6400, 4096), nn.ReLU(),

nn.Dropout(0.5),

nn.Linear(4096, 4096), nn.ReLU(),

nn.Dropout(0.5),

nn.Linear(4096, 10)

)

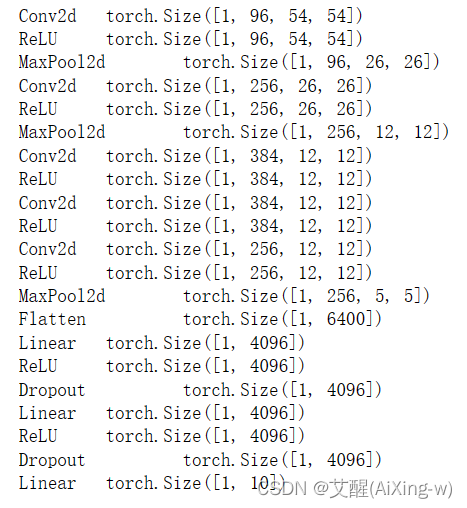

观察

X = torch.randn((1, 3, 224, 224))

for layer in net:

X = layer(X)

print(layer.__class__.__name__, '\t', X.shape)

VGG

模型结构

VGG虽然仍然是线性模型,但是他引入了块的结构,模型结构依然是线性,只不过构建的方式更为简便,即不用一层层的去构建,直接通过构建块来构建

模型构建

vgg块

def vgg_block(num_conv, in_channels, out_channels):

layers = []

for _ in range(num_conv):

layers.append(nn.Conv2d(in_channels, out_channels, kernel_size=3, padding=1))

layers.append(nn.ReLU())

in_channels = out_channels

layers.append(nn.MaxPool2d(kernel_size=2, stride=2))

return nn.Sequential(*layers)

构建vgg11模型

def vgg11(in_channel):

conv_archs = ((1, 64), (1, 128), (2, 256), (2, 512), (2, 512))

conv_blks = []

for (num_conv, out_channel) in conv_archs:

conv_blks.append(vgg_block(num_conv, in_channel, out_channel))

in_channel = out_channel

return nn.Sequential(*conv_blks, nn.Flatten(),

nn.Linear(out_channel*7*7, 4096), nn.ReLU(),

nn.Dropout(0.5),

nn.Linear(4096, 4096), nn.ReLU(),

nn.Linear(4096, 10)

)

net = vgg11(1)

加载数据集

可以直接使用torchvision.datasets中的FashionMNIST直接加载数据集,其中需要注意的是读入是图像的数据一定要使用transforms.ToTensor将数据转换成torch模型支持的数据类型,即tensor类型

def load_fashion_mnist(batch_size, resize=None):

tran = [transforms.ToTensor()]

if resize:

tran.insert(0, transforms.Resize(resize))

tran = transforms.Compose(tran)

mnist_train = datasets.FashionMNIST(root='./data', train=True, transform=tran, download=True)

mnist_test = datasets.FashionMNIST(root='./data', train=False, transform=tran, download=True)

return (DataLoader(mnist_train, batch_size, shuffle=True),

DataLoader(mnist_test, batch_size, shuffle=False)

)

batch_size = 256

train_iter, test_iter = load_fashion_mnist(batch_size)

累加器

定义一个累加器,用来累积,在后边训练的时候用于统计精度和损失,这个是参照的李沐的动手学深度学习,刚开始的时候我也是按照这个这样写的,但是后边根据个人的使用习惯已经被替换掉了,暂时这个还是跟李沐老师的符合一下。

class Accumulator:

def __init__(self, n):

self.data = [0.0] * n

def add(self, *args):

self.data = [a + float(b) for a, b in zip(self.data, args)]

def reset(self):

self.data = [0.0] * len(self.data)

def __getitem__(self, idx):

return self.data[idx]

精度

def accuracy(y_hat, y):

if len(y_hat.shape) > 1 and y_hat.shape[1] > 1:

y_hat = torch.argmax(y_hat, axis=1)

cmp = y_hat.type(y.dtype)==y

return float(cmp.type(y.dtype).sum())

其中y_hat是预测标签,y是真实标签。

这里需要强调的是,y作为真实标签,每个标签是一个数字,而y_hat既可以是一个数字,也可以是神经网络输出的不同类别的概率,为了统一形式,当输入的y_hat不为数字时,我们使用torch.argmax获取类别概率最大的索引,即最大的值。

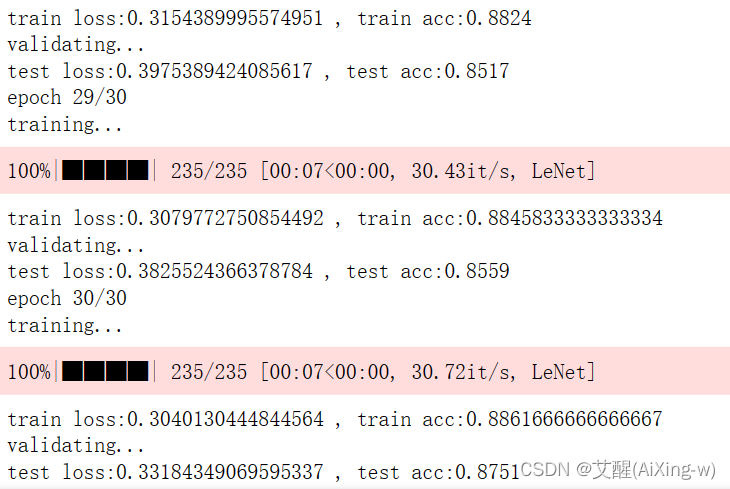

训练

def train(net, name, train_ter, test_iter, num_epochs, lr, device):

def init_weights(m):

if isinstance(m, nn.Linear) or isinstance(m, nn.Conv2d):

nn.init.xavier_uniform_(m.weight)

net.apply(init_weights)

print("device in : ", device)

net = net.to(device)

optimizer = torch.optim.SGD(net.parameters(), lr=lr)

loss = nn.CrossEntropyLoss()

for epoch in range(num_epochs):

print("epoch {}/{}".format(epoch+1, num_epochs))

metric = Accumulator(3)

net.train()

print('training...')

for X, y in tqdm(train_iter, ncols=50, postfix="{}".format(name)):

optimizer.zero_grad()

X, y = X.to(device), y.to(device)

y_hat = net(X)

l = loss(y_hat, y)

l.backward()

optimizer.step()

with torch.no_grad():

metric.add(l * X.shape[0], accuracy(y_hat, y), X.shape[0])

train_l = metric[0] / metric[2]

train_acc = metric[1] / metric[2]

tqdm.write('train loss:{} , train acc:{}'.format(train_l, train_acc))

metric.reset()

net.eval()

print('validating...')

for X, y in (test_iter):

X, y = X.to(device), y.to(device)

y_hat = net(X)

with torch.no_grad():

l = loss(y_hat, y)

metric.add(l * X.shape[0], accuracy(y_hat, y), X.shape[0])

test_l = metric[0] / metric[2]

test_acc = metric[1] / metric[2]

metric.reset()

tqdm.write('test loss:{} , test acc:{}'.format(test_l, test_acc))

train(net, 'LeNet', train_iter, test_iter, 30, 0.95, 'cuda')