Overview:

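This project records audio and reads the raw (RAW) PCM data in real time. It uploads the data to a server over WebSocket and receives speech recognition results in real time. For background, see the guide "Using AudioCapturer for Audio Recording (ArkTS)". For API details, see the API references for AudioCapturer (API version 8+) and @ohos.net.webSocket (WebSocket connection).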

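Key points:

- Using AudioCapturer to record audio and read RAW-format data in real time.

- Using WebSocket to upload audio data and receive recognition results.

- Requesting sensitive permissions dynamically at runtime. The only sensitive permission in this project is MICROPHONE.

- To learn how to set up the real-time speech recognition service, see my other article, "Recognition Accuracy This High? A Real-Time Speech Recognition Service" (《识别准确率竟如此高,实时语音识别服务》).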
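On the wire, the upload is a short message sequence. The client sends one JSON text frame with the recognition settings, then binary frames of raw PCM, then a JSON text frame `{"is_speaking": false}` when speech ends. The sketch below builds the two text frames with `JSON.stringify` instead of concatenating strings by hand. The helper names are hypothetical, and the field values are the ones the page code later in this article sends. It is written in plain TypeScript so it can run outside a device:

```typescript
// Settings frame sent right after the WebSocket connects.
// Field names and values are copied from the page code in this article.
interface StartConfig {
  mode: string;           // "2pass"
  chunk_size: number[];   // [5, 10, 5]
  chunk_interval: number; // 10
  wav_name: string;
  is_speaking: boolean;
  itn: boolean;
}

// Hypothetical helper: builds the first text frame.
function buildStartMessage(wavName: string): string {
  const config: StartConfig = {
    mode: "2pass",
    chunk_size: [5, 10, 5],
    chunk_interval: 10,
    wav_name: wavName,
    is_speaking: true,
    itn: false,
  };
  return JSON.stringify(config);
}

// Hypothetical helper: builds the frame that tells the server speech has ended.
function buildStopMessage(): string {
  return JSON.stringify({ is_speaking: false });
}

console.log(buildStartMessage("HarmonyOS"));
// {"mode":"2pass","chunk_size":[5,10,5],"chunk_interval":10,"wav_name":"HarmonyOS","is_speaking":true,"itn":false}
console.log(buildStopMessage()); // {"is_speaking":false}
```

With `JSON.stringify` a typo cannot produce malformed JSON, and the settings can be changed as data instead of string fragments.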

Environment:

- API 9

- DevEco Studio 4.0 Release

- Windows 11

- Stage模型

- ArkTS语言

Required permissions:

- ohos.permission.MICROPHONE

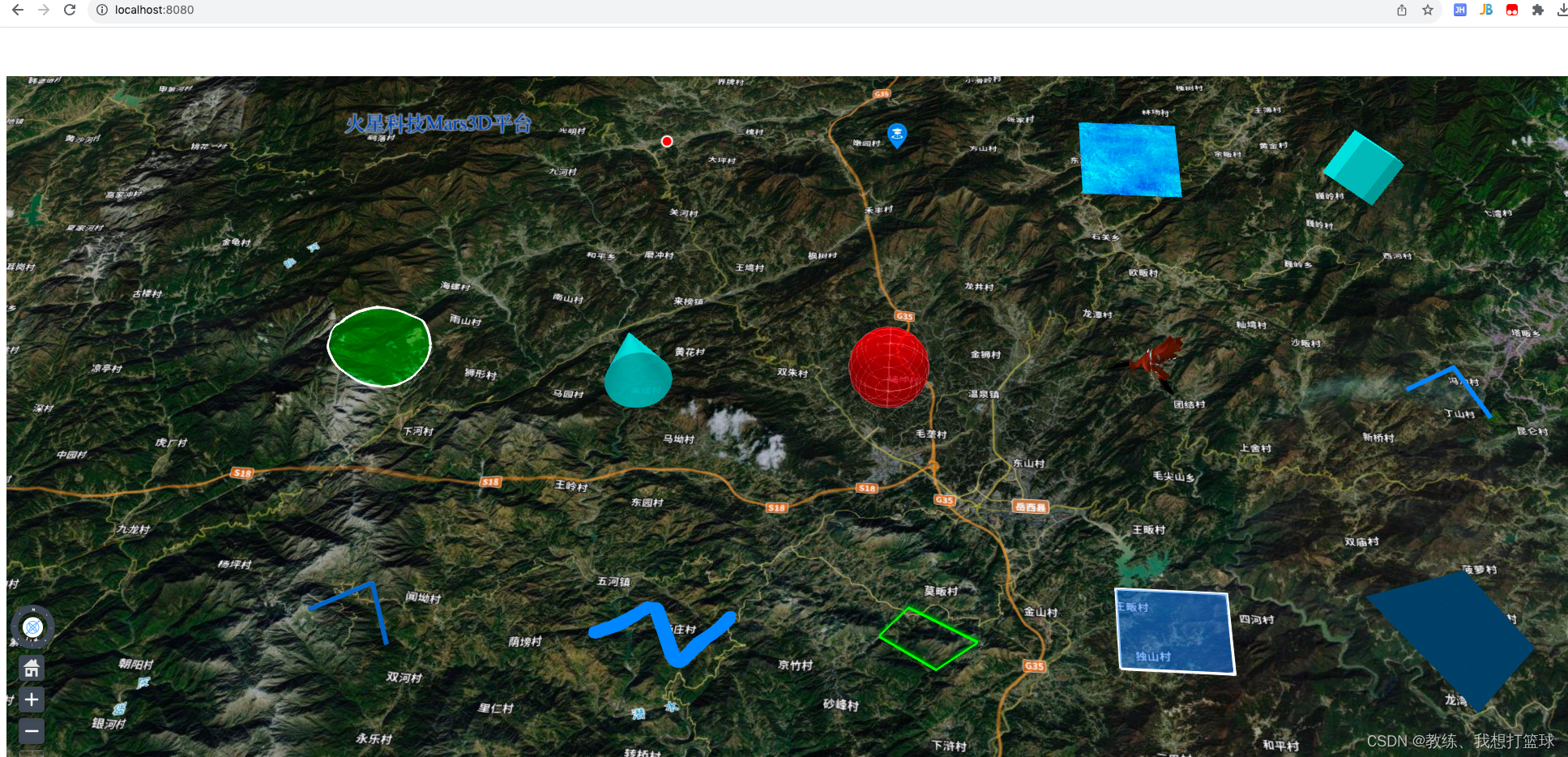

效果图:

Core code:

src/main/ets/utils/Permission.ets is the helper used for dynamic permission requests. It checks whether a permission has already been granted:

```typescript
import bundleManager from '@ohos.bundle.bundleManager';
import abilityAccessCtrl, { Permissions } from '@ohos.abilityAccessCtrl';

async function checkAccessToken(permission: Permissions): Promise<abilityAccessCtrl.GrantStatus> {
  let atManager = abilityAccessCtrl.createAtManager();
  // Default to "denied" so a failed lookup is never reported as granted
  let grantStatus: abilityAccessCtrl.GrantStatus = abilityAccessCtrl.GrantStatus.PERMISSION_DENIED;

  // Get the application's accessTokenID
  let tokenId: number = 0;
  try {
    let bundleInfo: bundleManager.BundleInfo =
      await bundleManager.getBundleInfoForSelf(bundleManager.BundleFlag.GET_BUNDLE_INFO_WITH_APPLICATION);
    let appInfo: bundleManager.ApplicationInfo = bundleInfo.appInfo;
    tokenId = appInfo.accessTokenId;
  } catch (err) {
    console.error(`getBundleInfoForSelf failed, code is ${err.code}, message is ${err.message}`);
  }

  // Check whether the application has been granted the permission
  try {
    grantStatus = await atManager.checkAccessToken(tokenId, permission);
  } catch (err) {
    console.error(`checkAccessToken failed, code is ${err.code}, message is ${err.message}`);
  }
  return grantStatus;
}

export async function checkPermissions(permission: Permissions): Promise<boolean> {
  let grantStatus: abilityAccessCtrl.GrantStatus = await checkAccessToken(permission);
  return grantStatus === abilityAccessCtrl.GrantStatus.PERMISSION_GRANTED;
}
```
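`checkPermissions` reduces a single `GrantStatus` to a boolean. The `authResults` array returned by `requestPermissionsFromUser` (used in the page code) can be reduced the same way when several permissions are requested together. Below is a minimal device-independent sketch. The local `GrantStatus` object is an assumption that mirrors the documented enum values (`PERMISSION_DENIED = -1`, `PERMISSION_GRANTED = 0`); it is not an import of the real enum:

```typescript
// Assumed mirror of abilityAccessCtrl.GrantStatus, so this runs without the HarmonyOS SDK.
const GrantStatus = {
  PERMISSION_DENIED: -1,
  PERMISSION_GRANTED: 0,
} as const;

// True only if every requested permission was granted.
// An empty result array is treated as "not granted".
function allGranted(authResults: number[]): boolean {
  return authResults.length > 0 &&
    authResults.every((status) => status === GrantStatus.PERMISSION_GRANTED);
}

console.log(allGranted([0]));     // true
console.log(allGranted([0, -1])); // false
```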

src/main/ets/utils/Recorder.ets is the recording utility class. It records audio and delivers the captured data:

```typescript
import audio from '@ohos.multimedia.audio';
import { delay } from './Utils';

export default class AudioCapturer {
  private audioCapturer: audio.AudioCapturer | undefined = undefined
  private isRecording: boolean = false
  private audioStreamInfo: audio.AudioStreamInfo = {
    samplingRate: audio.AudioSamplingRate.SAMPLE_RATE_16000, // sampling rate: 16 kHz
    channels: audio.AudioChannel.CHANNEL_1, // number of channels: mono
    sampleFormat: audio.AudioSampleFormat.SAMPLE_FORMAT_S16LE, // sample format: signed 16-bit, little-endian
    encodingType: audio.AudioEncodingType.ENCODING_TYPE_RAW // encoding type: raw PCM
  }
  private audioCapturerInfo: audio.AudioCapturerInfo = {
    // Audio source. SOURCE_TYPE_VOICE_RECOGNITION adds noise reduction; if the device
    // does not support it, use the plain microphone instead: SOURCE_TYPE_MIC
    source: audio.SourceType.SOURCE_TYPE_VOICE_RECOGNITION,
    capturerFlags: 0 // capturer flags
  }
  private audioCapturerOptions: audio.AudioCapturerOptions = {
    streamInfo: this.audioStreamInfo,
    capturerInfo: this.audioCapturerInfo
  }

  // Create the AudioCapturer instance. Creation is asynchronous, so
  // this.audioCapturer stays undefined until the callback has run.
  constructor() {
    audio.createAudioCapturer(this.audioCapturerOptions, (err, capturer) => {
      if (err) {
        console.error(`Failed to create capturer, code: ${err.code}, message: ${err.message}`)
        return
      }
      this.audioCapturer = capturer
      console.info('Capturer created')
    });
  }

  // Start one capture session. The callback receives STATE_RUNNING with each PCM chunk,
  // then STATE_STOPPED when the loop ends (or STATE_INVALID if capture cannot start).
  async start(callback: (state: number, data?: ArrayBuffer) => void) {
    const capturer = this.audioCapturer
    // Capture can start only from STATE_PREPARED, STATE_PAUSED or STATE_STOPPED
    let stateGroup = [audio.AudioState.STATE_PREPARED, audio.AudioState.STATE_PAUSED, audio.AudioState.STATE_STOPPED]
    if (capturer === undefined || stateGroup.indexOf(capturer.state) === -1) {
      console.error('Failed to start recording')
      callback(audio.AudioState.STATE_INVALID)
      return
    }
    // Start capturing
    await capturer.start()
    this.isRecording = true
    // 1920 bytes = 960 16-bit mono samples = 60 ms of audio at 16 kHz
    let bufferSize = 1920
    // let bufferSize: number = await capturer.getBufferSize();
    while (this.isRecording) {
      let buffer: ArrayBuffer | undefined = undefined
      try {
        buffer = await capturer.read(bufferSize, true) // blocking read
      } catch (e) {
        // Leave the loop instead of spinning on a capturer that can no longer be read
        console.error(`Failed to read audio data: ${JSON.stringify(e)}`)
        break
      }
      if (buffer === undefined) {
        console.error('Failed to read audio data')
      } else {
        callback(audio.AudioState.STATE_RUNNING, buffer)
      }
    }
    this.isRecording = false
    callback(audio.AudioState.STATE_STOPPED)
  }

  // Stop capturing
  async stop() {
    this.isRecording = false
    const capturer = this.audioCapturer
    // Only a capturer in STATE_RUNNING or STATE_PAUSED can be stopped
    if (capturer === undefined ||
      (capturer.state !== audio.AudioState.STATE_RUNNING && capturer.state !== audio.AudioState.STATE_PAUSED)) {
      console.warn('Capturer is not running or paused')
      return
    }
    // Give the read loop in start() time to return from its last read
    await delay(200)
    await capturer.stop()
    // Re-read the state after stop()
    if (capturer.state.valueOf() === audio.AudioState.STATE_STOPPED) {
      console.info('Recording stopped')
    } else {
      console.error('Failed to stop recording')
    }
  }

  // Destroy the instance and release its resources
  async release() {
    this.isRecording = false
    const capturer = this.audioCapturer
    // Release only if the state is neither STATE_RELEASED nor STATE_NEW
    if (capturer === undefined ||
      capturer.state === audio.AudioState.STATE_RELEASED || capturer.state === audio.AudioState.STATE_NEW) {
      return
    }
    await capturer.release()
  }
}
```
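`bufferSize = 1920` is tied to the stream settings above. At 16 kHz, mono, 16-bit samples, raw PCM is 16000 × 1 × 2 = 32000 bytes per second, so each 1920-byte read holds 60 ms of audio. The sketch below does that arithmetic. The `strideBytes` function additionally rests on an assumption that the article does not state: that the service is FunASR's real-time WebSocket server. Its message fields match that protocol, and FunASR's Python WebSocket client sends `60 * chunk_size[1] / chunk_interval` milliseconds of 16-bit audio per frame. Under that assumption, the article's `chunk_size` of `[5, 10, 5]` and `chunk_interval` of `10` give the same 1920 bytes:

```typescript
// Bytes per second of raw PCM: sample rate × channels × bytes per sample.
function bytesPerSecond(sampleRate: number, channels: number, bytesPerSample: number): number {
  return sampleRate * channels * bytesPerSample;
}

// Duration in milliseconds of one raw PCM buffer (multiply first to keep integer math exact).
function bufferDurationMs(bufferBytes: number, sampleRate: number, channels: number, bytesPerSample: number): number {
  return (bufferBytes * 1000) / bytesPerSecond(sampleRate, channels, bytesPerSample);
}

// Assumption: FunASR-style framing, 60 ms × chunkSize[1] / chunkInterval of 16-bit mono audio per send.
function strideBytes(chunkSize: number[], chunkInterval: number, sampleRate: number): number {
  return Math.floor((60 * chunkSize[1] * sampleRate * 2) / (chunkInterval * 1000));
}

console.log(bytesPerSecond(16000, 1, 2));         // 32000
console.log(bufferDurationMs(1920, 16000, 1, 2)); // 60
console.log(strideBytes([5, 10, 5], 10, 16000));  // 1920
```

If the sampling rate or `chunk_size` is changed, recomputing `bufferSize` this way keeps each WebSocket frame aligned with what the server expects.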

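A few helper functions also go in src/main/ets/utils/Utils.ets. Here it only provides a sleep function:

```typescript
// Sleep for the given number of milliseconds
export function delay(milliseconds: number): Promise<void> {
  return new Promise<void>(resolve => setTimeout(resolve, milliseconds));
}
```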
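`delay()` is what lets `stop()` in Recorder.ets wind the read loop down cleanly. `stop()` clears `isRecording`, waits, and only then calls the capturer's `stop()`, presumably so that the last blocking `read()` in `start()` has returned first. The sketch below simulates that flag-plus-grace-period pattern off-device. `FakeCapturer` and `RecordingLoop` are hypothetical stand-ins, not the HarmonyOS API, and the simulated `read()` takes 20 ms:

```typescript
// Promise-based sleep, same idea as delay() in Utils.ets.
function delay(milliseconds: number): Promise<void> {
  return new Promise<void>((resolve) => setTimeout(resolve, milliseconds));
}

// Hypothetical stand-in for audio.AudioCapturer: read() takes readMs to complete,
// and stop() records whether a read was still in flight when it was called.
class FakeCapturer {
  readMs: number;
  reading = false;
  stoppedDuringRead = false;

  constructor(readMs: number) {
    this.readMs = readMs;
  }

  async read(size: number): Promise<ArrayBuffer> {
    this.reading = true;
    await delay(this.readMs);
    this.reading = false;
    return new ArrayBuffer(size);
  }

  async stop(): Promise<void> {
    this.stoppedDuringRead = this.reading;
  }
}

// The same start()/stop() pattern as Recorder.ets, reduced to the essentials.
class RecordingLoop {
  private isRecording = false;
  private capturer: FakeCapturer;
  chunks = 0;
  finished = false;

  constructor(capturer: FakeCapturer) {
    this.capturer = capturer;
  }

  async start(): Promise<void> {
    this.isRecording = true;
    while (this.isRecording) {
      await this.capturer.read(1920);
      this.chunks++;
    }
    this.finished = true; // where Recorder.ets reports STATE_STOPPED
  }

  async stop(graceMs: number): Promise<void> {
    this.isRecording = false; // the loop exits after its current read
    await delay(graceMs);     // wait for that read to return
    await this.capturer.stop();
  }
}

async function demo(): Promise<void> {
  const capturer = new FakeCapturer(20);
  const loop = new RecordingLoop(capturer);
  const running = loop.start();
  await delay(70);
  await loop.stop(100);
  await running;
  console.log(loop.finished, capturer.stoppedDuringRead); // true false
}

demo();
```

The grace period only needs to exceed the duration of one read. A 60 ms buffer, for example, takes roughly 60 ms to fill, so the article's 200 ms leaves some margin.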

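The required permission must also be declared in src/main/module.json5. Note that it belongs inside the `module` object. The `reason` field refers to a string resource, so a `record_reason` entry must also be added to each string.json:

```json
"requestPermissions": [
  {
    "name": "ohos.permission.MICROPHONE",
    "reason": "$string:record_reason",
    "usedScene": {
      "abilities": [
        "EntryAbility"
      ],
      "when": "always"
    }
  }
]
```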

The page code is as follows:

```typescript
import abilityAccessCtrl, { Permissions } from '@ohos.abilityAccessCtrl';
import common from '@ohos.app.ability.common';
import webSocket from '@ohos.net.webSocket';
import AudioCapturer from '../utils/Recorder';
import promptAction from '@ohos.promptAction';
import { checkPermissions } from '../utils/Permission';
import audio from '@ohos.multimedia.audio';

// Permissions that must be requested at runtime
const permissions: Array<Permissions> = ['ohos.permission.MICROPHONE'];

@Entry
@Component
struct Index {
  @State recordBtnText: string = 'Hold to record'
  @State speechResult: string = ''
  private offlineResult = ''
  private onlineResult = ''
  // WebSocket address of the speech recognition service
  private asrWebSocketUrl = "ws://192.168.0.100:10095"
  // Audio recorder
  private audioCapturer?: AudioCapturer;
  // WebSocket connection (a new one is created for every recording)
  private ws?: webSocket.WebSocket;

  // When the page is shown
  async onPageShow() {
    // Check whether the permission has already been granted
    let result = await checkPermissions(permissions[0])
    if (result) {
      // Initialize the recorder
      if (this.audioCapturer == null) {
        this.audioCapturer = new AudioCapturer()
      }
    } else {
      this.reqPermissionsAndRecord(permissions)
    }
  }

  // When the page is hidden
  async onPageHide() {
    if (this.audioCapturer != null) {
      await this.audioCapturer.release()
      // Drop the released instance so that onPageShow() creates a fresh one
      this.audioCapturer = undefined
    }
  }

  build() {
    Row() {
      RelativeContainer() {
        Text(this.speechResult)
          .id("resultText")
          .width('95%')
          .maxLines(10)
          .fontSize(18)
          .margin({ top: 10 })
          .alignRules({
            top: { anchor: '__container__', align: VerticalAlign.Top },
            middle: { anchor: '__container__', align: HorizontalAlign.Center }
          })
        // Record button
        Button(this.recordBtnText)
          .width('90%')
          .id("recordBtn")
          .margin({ bottom: 10 })
          .alignRules({
            bottom: { anchor: '__container__', align: VerticalAlign.Bottom },
            middle: { anchor: '__container__', align: HorizontalAlign.Center }
          })
          .onTouch((event) => {
            switch (event.type) {
              case TouchType.Down: {
                console.info('Button pressed')
                // Check the permission before recording
                checkPermissions(permissions[0]).then((result) => {
                  if (result) {
                    // Start recording
                    this.startRecord()
                    this.recordBtnText = 'Recording...'
                  } else {
                    // Request the permission
                    this.reqPermissionsAndRecord(permissions)
                  }
                })
                break
              }
              case TouchType.Up:
                console.info('Button released')
                // Stop recording
                this.stopRecord()
                this.recordBtnText = 'Hold to record'
                break
            }
          })
      }
      .height('100%')
      .width('100%')
    }
    .height('100%')
  }

  // Start recording
  startRecord() {
    const capturer = this.audioCapturer
    if (capturer === undefined) {
      console.error('Recorder is not initialized')
      return
    }
    const ws = this.setWebSocketCallback()
    ws.connect(this.asrWebSocketUrl, (err) => {
      if (err) {
        console.error("WebSocket connection failed, error: " + JSON.stringify(err));
        return
      }
      console.info("WebSocket connected");
      let jsonData = '{"mode": "2pass", "chunk_size": [5, 10, 5], "chunk_interval": 10, ' +
        '"wav_name": "HarmonyOS", "is_speaking": true, "itn": false}'
      // The settings message must be sent before any audio data
      ws.send(jsonData)
      // Start recording
      capturer.start((state, data) => {
        if (state == audio.AudioState.STATE_STOPPED) {
          console.info('Recording finished')
          // Tell the server that speech has ended so it can finish recognition
          ws.send('{"is_speaking": false}')
        } else if (state == audio.AudioState.STATE_INVALID) {
          // Recording could not start: close the connection
          ws.close()
        } else if (state == audio.AudioState.STATE_RUNNING && data !== undefined) {
          // Send the audio data
          ws.send(data, (err) => {
            if (err) {
              console.error("Failed to send data over WebSocket, error: " + JSON.stringify(err))
            }
          });
        }
      })
    });
  }

  // Stop recording
  stopRecord() {
    if (this.audioCapturer != null) {
      this.audioCapturer.stop()
    }
  }

  // Create a WebSocket and bind its event handlers
  setWebSocketCallback(): webSocket.WebSocket {
    const ws = webSocket.createWebSocket();
    this.ws = ws
    // Receive WebSocket messages
    ws.on('message', (err, value) => {
      // Recognition results arrive as JSON text frames
      if (typeof value !== 'string') {
        return
      }
      console.info("WebSocket message received: " + value)
      // Parse the result
      let result = JSON.parse(value)
      let isFinal = result['is_final']
      let mode = result['mode']
      let text = result['text']
      if (mode == '2pass-offline') {
        // Offline-pass text is kept and replaces the streaming text collected so far
        this.offlineResult = this.offlineResult + text
        this.onlineResult = ''
      } else {
        this.onlineResult = this.onlineResult + text
      }
      this.speechResult = this.offlineResult + this.onlineResult
      // Close the WebSocket after the final result
      if (isFinal) {
        ws.close()
      }
    });
    // WebSocket close event
    ws.on('close', () => {
      console.info("WebSocket closed");
    });
    // WebSocket error event
    ws.on('error', (err) => {
      console.error("WebSocket error: " + JSON.stringify(err));
    });
    return ws
  }

  // Request permissions from the user
  reqPermissionsAndRecord(permissions: Array<Permissions>): void {
    // Call getContext(this) inside the component so that `this` is the component
    const context = getContext(this) as common.UIAbilityContext;
    let atManager = abilityAccessCtrl.createAtManager();
    // requestPermissionsFromUser checks the current grant status to decide whether to show the dialog
    atManager.requestPermissionsFromUser(context, permissions).then((data) => {
      let grantStatus: Array<number> = data.authResults;
      for (let i = 0; i < grantStatus.length; i++) {
        if (grantStatus[i] !== abilityAccessCtrl.GrantStatus.PERMISSION_GRANTED) {
          promptAction.showToast({ message: 'Authorization failed: microphone permission is required to record' })
          return;
        }
      }
      // The user granted all permissions, so the recorder can be initialized
      console.info('Authorization succeeded')
      if (this.audioCapturer == null) {
        this.audioCapturer = new AudioCapturer()
      }
    }).catch((err) => {
      console.error(`requestPermissionsFromUser failed, code is ${err.code}, message is ${err.message}`);
    })
  }
}
```
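The page's `message` handler keeps two strings. Text from `2pass-offline` messages is appended to `offlineResult` and clears `onlineResult`, while text from any other mode is appended to `onlineResult`. The sketch below pulls the same rule out into a plain TypeScript class so it can be tested off-device. The class name, the `reset()` method and the sample messages are hypothetical. The samples only mimic the fields the handler reads (`mode`, `text`, `is_final`), and the `2pass-online` mode value in them is an assumption:

```typescript
// Fields the page's message handler reads from each recognition message.
interface AsrMessage {
  mode: string;
  text: string;
  is_final?: boolean;
}

// Same accumulation rule as the page's 'message' handler.
class ResultAggregator {
  private offlineResult = "";
  private onlineResult = "";

  // Returns the text to display and whether this message was marked final.
  onMessage(raw: string): { display: string; isFinal: boolean } {
    const msg = JSON.parse(raw) as AsrMessage;
    if (msg.mode === "2pass-offline") {
      this.offlineResult += msg.text; // keep the offline-pass text
      this.onlineResult = "";         // drop the streaming text it replaces
    } else {
      this.onlineResult += msg.text;  // streaming text, shown provisionally
    }
    return { display: this.offlineResult + this.onlineResult, isFinal: msg.is_final === true };
  }

  // Hypothetical addition: clear both strings before a new recording.
  reset(): void {
    this.offlineResult = "";
    this.onlineResult = "";
  }
}

// Made-up messages, for illustration only.
const agg = new ResultAggregator();
agg.onMessage('{"mode":"2pass-online","text":"hel","is_final":false}');
agg.onMessage('{"mode":"2pass-online","text":"lo","is_final":false}');
const last = agg.onMessage('{"mode":"2pass-offline","text":"Hello.","is_final":true}');
console.log(last.display, last.isFinal); // Hello. true
```

The page itself never clears `offlineResult` or `onlineResult`, so text from successive recordings accumulates. If each recording should get its own transcript, something like `reset()` could be called at the start of `startRecord()`.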