Table of Contents

- Covariance Matrix

- Joint Probability Density

- Hessian Matrix

- marginalize

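This section examines how the information matrix, the Hessian matrix, and the covariance matrix are related, and uses that relationship to explain how marginalization works.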

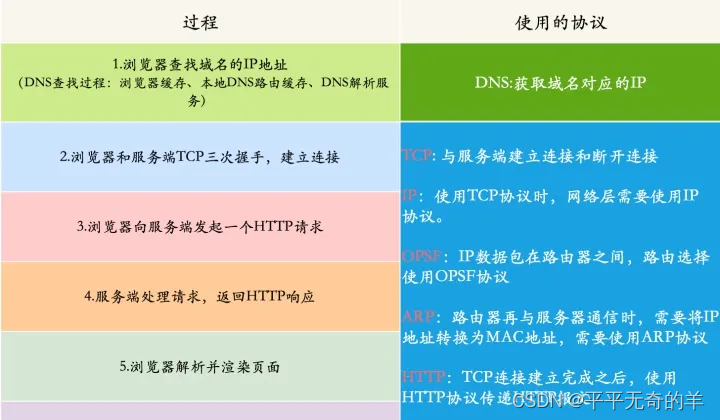

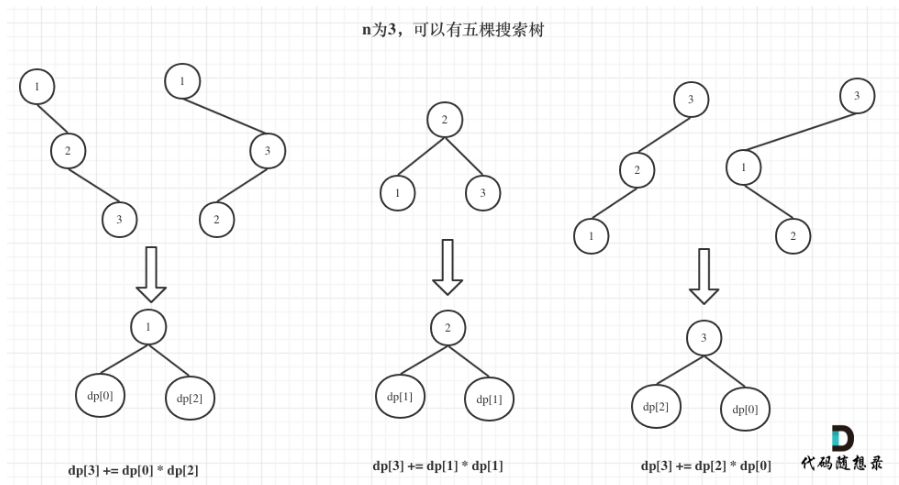

A simple example follows.

Source: David MacKay, “The humble Gaussian distribution” (2006), and Lecture 4 of the hands-on VIO course (手写VIO).

The arrows represent the constraint equations (which can also be read as observation equations):

$$
\begin{aligned}
z_1 &:\; x_2 = v_2\\
z_2 &:\; x_1 = w_1 x_2 + v_1\\
z_3 &:\; x_3 = w_3 x_2 + v_3
\end{aligned}
$$

Here the $v_i$ are mutually independent, and each follows a zero-mean Gaussian distribution with variance $\sigma_i^2$.

Covariance Matrix

Formula for the covariance:

$$
\begin{aligned}
Cov(X,Y) &= E[(X - E[X])(Y - E[Y])]\\
&= E[XY] - 2E[X]E[Y] + E[X]E[Y]\\
&= E[XY] - E[X]E[Y]
\end{aligned}
$$

or equivalently: $Cov(X,Y) = E[(X - \mu_x)(Y - \mu_y)]$
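As a quick numerical check that the definition and the expanded form agree, here is a minimal NumPy sketch on synthetic data. The data-generating choice `Y = 2X + noise` is an arbitrary assumption for illustration. `np.cov` normalizes by N - 1 by default; `bias=True` makes it normalize by N, which matches the sample-mean version of the expectations above.

```python
import numpy as np

# Synthetic data (arbitrary illustration): Y = 2X + noise, so Cov(X, Y) = 2.
rng = np.random.default_rng(0)
X = rng.normal(size=10_000)
Y = 2.0 * X + rng.normal(size=10_000)

# Definition: E[(X - E[X]) (Y - E[Y])], with expectations replaced by sample means.
cov_def = np.mean((X - X.mean()) * (Y - Y.mean()))
# Expanded form: E[XY] - E[X]E[Y].
cov_expanded = np.mean(X * Y) - X.mean() * Y.mean()
# NumPy's estimate; bias=True normalizes by N, like the sample means above.
cov_numpy = np.cov(X, Y, bias=True)[0, 1]

print(cov_def, cov_expanded, cov_numpy)  # agree up to floating-point rounding
```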

Next, compute the covariance matrix of $x_1, x_2, x_3$. Substituting $x_2 = v_2$, $x_1 = w_1 v_2 + v_1$, and $x_3 = w_3 v_2 + v_3$ shows that every $x_i$ has zero mean, so $\Sigma_{ij} = E(x_i x_j)$. Independence gives $E(v_i v_j) = 0$ for $i \ne j$:

$$
\begin{aligned}
\Sigma_{11} &= E(x_1 x_1) = E\big((w_1 v_2 + v_1)(w_1 v_2 + v_1)\big)\\
&= w_1^2 E(v_2^2) + 2 w_1 E(v_1 v_2) + E(v_1^2)\\
&= w_1^2 \sigma_2^2 + \sigma_1^2\\
\Sigma_{22} &= \sigma_2^2, \quad \Sigma_{33} = w_3^2 \sigma_2^2 + \sigma_3^2\\
\Sigma_{12} &= E(x_1 x_2) = E\big((w_1 v_2 + v_1) v_2\big) = w_1 \sigma_2^2\\
\Sigma_{13} &= E\big((w_1 v_2 + v_1)(w_3 v_2 + v_3)\big) = w_1 w_3 \sigma_2^2\\
\Sigma_{23} &= E\big(v_2 (w_3 v_2 + v_3)\big) = w_3 \sigma_2^2
\end{aligned}
$$
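These entries can be checked by Monte Carlo sampling of the generative model. Below is a minimal NumPy sketch. The parameter values `w1, w3, s1, s2, s3` are hypothetical, and `s_i` stands for the standard deviation $\sigma_i$. The sample estimates match the closed forms only up to sampling error.

```python
import numpy as np

# Hypothetical parameter values, for illustration only.
w1, w3 = 0.5, -1.5
s1, s2, s3 = 0.3, 1.0, 0.7   # standard deviations sigma_1, sigma_2, sigma_3

# Sample the generative model: x2 = v2, x1 = w1*x2 + v1, x3 = w3*x2 + v3.
rng = np.random.default_rng(42)
n = 500_000
v1 = rng.normal(0.0, s1, n)
v2 = rng.normal(0.0, s2, n)
v3 = rng.normal(0.0, s3, n)
x2 = v2
x1 = w1 * x2 + v1
x3 = w3 * x2 + v3

# All x_i have zero mean, so Sigma_ij is estimated by the sample mean of x_i * x_j.
estimates = {
    "S11": np.mean(x1 * x1), "S22": np.mean(x2 * x2), "S33": np.mean(x3 * x3),
    "S12": np.mean(x1 * x2), "S13": np.mean(x1 * x3), "S23": np.mean(x2 * x3),
}
closed_form = {
    "S11": w1**2 * s2**2 + s1**2, "S22": s2**2, "S33": w3**2 * s2**2 + s3**2,
    "S12": w1 * s2**2, "S13": w1 * w3 * s2**2, "S23": w3 * s2**2,
}
for k in closed_form:
    print(k, round(estimates[k], 3), closed_form[k])
```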

Assembling these entries (Σ is symmetric) gives the covariance matrix:

$$
\Sigma = \begin{bmatrix}
w_1^2\sigma_2^2 + \sigma_1^2 & w_1\sigma_2^2 & w_1 w_3\sigma_2^2\\
w_1\sigma_2^2 & \sigma_2^2 & w_3\sigma_2^2\\
w_1 w_3\sigma_2^2 & w_3\sigma_2^2 & w_3^2\sigma_2^2 + \sigma_3^2
\end{bmatrix}
$$
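The same matrix can also be obtained another way. Stacking the constraints gives $x = M v$ with $v \sim \mathcal{N}(0, D)$ and $D = \mathrm{diag}(\sigma_1^2, \sigma_2^2, \sigma_3^2)$, so $\Sigma = M D M^T$. The symbol $M$ and the helper names below are introduced only for illustration. The sketch compares this product with the closed form entry by entry.

```python
import numpy as np

def covariance_closed_form(w1, w3, s1, s2, s3):
    """Sigma assembled from the entries derived above (s_i are standard deviations)."""
    return np.array([
        [w1**2 * s2**2 + s1**2, w1 * s2**2, w1 * w3 * s2**2],
        [w1 * s2**2, s2**2, w3 * s2**2],
        [w1 * w3 * s2**2, w3 * s2**2, w3**2 * s2**2 + s3**2],
    ])

def covariance_linear_map(w1, w3, s1, s2, s3):
    """Same matrix via x = M v, v ~ N(0, D), hence Sigma = M D M^T."""
    # Row i of M expresses x_i in terms of (v1, v2, v3).
    M = np.array([[1.0, w1, 0.0],
                  [0.0, 1.0, 0.0],
                  [0.0, w3, 1.0]])
    D = np.diag([s1**2, s2**2, s3**2])
    return M @ D @ M.T

params = (0.5, -1.5, 0.3, 1.0, 0.7)  # hypothetical w1, w3, sigma1, sigma2, sigma3
print(np.allclose(covariance_closed_form(*params), covariance_linear_map(*params)))  # True
```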

Joint Probability Density

Substitute each constraint into the Gaussian density of its noise and multiply the three factors, which is valid because the noises are independent. This gives the joint density:

$$
\begin{aligned}
p(x_1,x_2,x_3 \mid z_1,z_2,z_3)
&= \frac{1}{C}\exp\Big(-\frac{x_2^2}{2\sigma_2^2} - \frac{(x_1 - w_1 x_2)^2}{2\sigma_1^2} - \frac{(x_3 - w_3 x_2)^2}{2\sigma_3^2}\Big)\\
&= \frac{1}{C}\exp\Big(-x_2^2\Big[\frac{1}{2\sigma_2^2} + \frac{w_1^2}{2\sigma_1^2} + \frac{w_3^2}{2\sigma_3^2}\Big] - x_1^2\frac{1}{2\sigma_1^2} + 2x_1x_2\frac{w_1}{2\sigma_1^2} - x_3^2\frac{1}{2\sigma_3^2} + 2x_3x_2\frac{w_3}{2\sigma_3^2}\Big)\\
&= \frac{1}{C}\exp\Big(-\frac{1}{2}\begin{bmatrix}x_1 & x_2 & x_3\end{bmatrix}
\begin{bmatrix}
\frac{1}{\sigma_1^2} & -\frac{w_1}{\sigma_1^2} & 0\\
-\frac{w_1}{\sigma_1^2} & \frac{w_1^2}{\sigma_1^2} + \frac{1}{\sigma_2^2} + \frac{w_3^2}{\sigma_3^2} & -\frac{w_3}{\sigma_3^2}\\
0 & -\frac{w_3}{\sigma_3^2} & \frac{1}{\sigma_3^2}
\end{bmatrix}
\begin{bmatrix}x_1\\ x_2\\ x_3\end{bmatrix}\Big)\\
&= \frac{1}{C}\exp\Big(-\frac{1}{2}\begin{bmatrix}x_1 & x_2 & x_3\end{bmatrix}\Sigma^{-1}\begin{bmatrix}x_1\\ x_2\\ x_3\end{bmatrix}\Big)
\end{aligned}
$$
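The expansion from the sum of three squared terms to a single quadratic form can be spot-checked numerically. In this minimal NumPy sketch, the parameter values and function names are hypothetical. It evaluates both forms of $-2\times$ the exponent at random points.

```python
import numpy as np

w1, w3 = 0.5, -1.5
s1, s2, s3 = 0.3, 1.0, 0.7  # hypothetical values

# Matrix read off from the third line of the derivation.
Lam = np.array([
    [1 / s1**2, -w1 / s1**2, 0.0],
    [-w1 / s1**2, w1**2 / s1**2 + 1 / s2**2 + w3**2 / s3**2, -w3 / s3**2],
    [0.0, -w3 / s3**2, 1 / s3**2],
])

def sum_of_squares(x):
    """-2 * exponent, written as the three weighted squared terms of the first line."""
    x1, x2, x3 = x
    return x2**2 / s2**2 + (x1 - w1 * x2)**2 / s1**2 + (x3 - w3 * x2)**2 / s3**2

def quadratic_form(x):
    """-2 * exponent, written as x^T Lam x."""
    return x @ Lam @ x

rng = np.random.default_rng(1)
for x in rng.normal(size=(5, 3)):
    assert np.isclose(sum_of_squares(x), quadratic_form(x))
print("expansion verified")
```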

Reading off the matrix in the exponent, we obtain the inverse of the covariance matrix, i.e. the information matrix:

$$
\Sigma^{-1} = \begin{bmatrix}
\frac{1}{\sigma_1^2} & -\frac{w_1}{\sigma_1^2} & 0\\
-\frac{w_1}{\sigma_1^2} & \frac{w_1^2}{\sigma_1^2} + \frac{1}{\sigma_2^2} + \frac{w_3^2}{\sigma_3^2} & -\frac{w_3}{\sigma_3^2}\\
0 & -\frac{w_3}{\sigma_3^2} & \frac{1}{\sigma_3^2}
\end{bmatrix}
$$
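A minimal NumPy check, under hypothetical parameter values, that this matrix really is the inverse of the covariance matrix derived earlier. As a side observation, $(\Sigma^{-1})_{13} = 0$ while $\Sigma_{13} \ne 0$. For a Gaussian, a zero in the information matrix means the two variables are conditionally independent given the others, here $x_1$ and $x_3$ given $x_2$.

```python
import numpy as np

w1, w3 = 0.5, -1.5
s1, s2, s3 = 0.3, 1.0, 0.7  # hypothetical values

Sigma = np.array([
    [w1**2 * s2**2 + s1**2, w1 * s2**2, w1 * w3 * s2**2],
    [w1 * s2**2, s2**2, w3 * s2**2],
    [w1 * w3 * s2**2, w3 * s2**2, w3**2 * s2**2 + s3**2],
])
Lam = np.array([
    [1 / s1**2, -w1 / s1**2, 0.0],
    [-w1 / s1**2, w1**2 / s1**2 + 1 / s2**2 + w3**2 / s3**2, -w3 / s3**2],
    [0.0, -w3 / s3**2, 1 / s3**2],
])

print(np.allclose(Lam @ Sigma, np.eye(3)))  # True
print(Lam[0, 2], Sigma[0, 2])               # 0.0 -0.75
```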

The maximum likelihood estimate is $\mathop{\arg\max}_{x_1,x_2,x_3} p(x_1,x_2,x_3 \mid z_1,z_2,z_3)$. Because $\log$ is monotonically increasing, this is equivalent to maximizing the log-likelihood:

$$
\log p(x_1,x_2,x_3 \mid z_1,z_2,z_3) = -\frac{1}{2}\begin{bmatrix}x_1 & x_2 & x_3\end{bmatrix}
\begin{bmatrix}
\frac{1}{\sigma_1^2} & -\frac{w_1}{\sigma_1^2} & 0\\
-\frac{w_1}{\sigma_1^2} & \frac{w_1^2}{\sigma_1^2} + \frac{1}{\sigma_2^2} + \frac{w_3^2}{\sigma_3^2} & -\frac{w_3}{\sigma_3^2}\\
0 & -\frac{w_3}{\sigma_3^2} & \frac{1}{\sigma_3^2}
\end{bmatrix}
\begin{bmatrix}x_1\\ x_2\\ x_3\end{bmatrix} - \log C
$$

Since $\log C$ does not depend on $x$, this amounts to solving:

$$
\mathop{\arg\min}_{x_1,x_2,x_3} \frac{1}{2}\begin{bmatrix}x_1 & x_2 & x_3\end{bmatrix}\Sigma^{-1}\begin{bmatrix}x_1\\ x_2\\ x_3\end{bmatrix}
$$

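In this way, the estimation problem becomes a least-squares problem. It also shows what the information matrix means mathematically: it is the inverse of the covariance matrix, and it acts as the weight matrix of the quadratic cost.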
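To check that maximizing the log-likelihood really reduces to this quadratic form, the sketch below evaluates $\log p$ as a sum of three scalar Gaussian log densities, $\log p(x_2) + \log p(x_1 \mid x_2) + \log p(x_3 \mid x_2)$. It then confirms that $\log p + \tfrac12 x^T\Sigma^{-1}x$ is the same constant at every $x$. Parameter values and helper names are hypothetical.

```python
import numpy as np

w1, w3 = 0.5, -1.5
s1, s2, s3 = 0.3, 1.0, 0.7  # hypothetical values

Lam = np.array([
    [1 / s1**2, -w1 / s1**2, 0.0],
    [-w1 / s1**2, w1**2 / s1**2 + 1 / s2**2 + w3**2 / s3**2, -w3 / s3**2],
    [0.0, -w3 / s3**2, 1 / s3**2],
])

def log_normal(x, mean, std):
    """Log density of the scalar Gaussian N(mean, std**2) at x."""
    return -0.5 * np.log(2 * np.pi * std**2) - (x - mean) ** 2 / (2 * std**2)

def log_joint(x):
    """log p(x1, x2, x3) = log p(x2) + log p(x1 | x2) + log p(x3 | x2)."""
    x1, x2, x3 = x
    return (log_normal(x2, 0.0, s2) + log_normal(x1, w1 * x2, s1)
            + log_normal(x3, w3 * x2, s3))

rng = np.random.default_rng(2)
offsets = np.array([log_joint(x) + 0.5 * x @ Lam @ x for x in rng.normal(size=(5, 3))])
# The offset plays the role of -log C: it does not depend on x, so it cannot move the argmax.
print(np.allclose(offsets, offsets[0]))  # True
```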

Hessian Matrix

Build a least-squares problem from the constraint equations. Let $r_i$ denote the residual of the constraint whose noise is $v_i$:

- $r_1 = w_1 x_2 - x_1$, from $z_2$
- $r_2 = x_2$, from $z_1$
- $r_3 = w_3 x_2 - x_3$, from $z_3$

Let $J_i = \partial r_i / \partial [x_1\ x_2\ x_3]$ be the corresponding $1\times 3$ Jacobian. The residuals are linear in $x$, so $\sum_i J_i^T J_i$ is the exact Hessian of $e$:

$$
\begin{aligned}
e &= \frac{1}{2}\sum_{i=1}^3 \|r_i\|_2^2\\
H &= \sum_{i=1}^3 J_i^T J_i\\
&= \begin{bmatrix}-1\\ w_1\\ 0\end{bmatrix}\begin{bmatrix}-1 & w_1 & 0\end{bmatrix}
+ \begin{bmatrix}0\\ 1\\ 0\end{bmatrix}\begin{bmatrix}0 & 1 & 0\end{bmatrix}
+ \begin{bmatrix}0\\ w_3\\ -1\end{bmatrix}\begin{bmatrix}0 & w_3 & -1\end{bmatrix}\\
&= \begin{bmatrix}1 & -w_1 & 0\\ -w_1 & w_1^2 + 1 + w_3^2 & -w_3\\ 0 & -w_3 & 1\end{bmatrix}
\end{aligned}
$$
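A minimal NumPy sketch of the sum of outer products above, with hypothetical values for $w_1, w_3$. It shows that $\sum_i J_i^T J_i$ equals the stacked product $J^T J$ and matches the closed form.

```python
import numpy as np

w1, w3 = 0.5, -1.5  # hypothetical values

# Rows are the Jacobians J_1, J_2, J_3 of the residuals w.r.t. (x1, x2, x3).
J = np.array([[-1.0, w1, 0.0],
              [0.0, 1.0, 0.0],
              [0.0, w3, -1.0]])

H_sum = sum(np.outer(row, row) for row in J)  # sum_i J_i^T J_i
H = J.T @ J                                   # same thing in stacked form
H_closed = np.array([[1.0, -w1, 0.0],
                     [-w1, w1**2 + 1.0 + w3**2, -w3],
                     [0.0, -w3, 1.0]])

print(np.allclose(H, H_sum), np.allclose(H, H_closed))  # True True
```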

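When the noise variances $\sigma_i^2$ are taken into account, the residual $r_i$ of the constraint carrying noise $v_i$ is weighted by $1/\sigma_i^2$. The problem then becomes:

$$
\mathop{\arg\min}_{x_1,x_2,x_3} \frac{1}{2}\sum_{i=1}^3 \|r_i\|_{\sigma_i^2}^2
= \mathop{\arg\min}_{x_1,x_2,x_3} \frac{1}{2}\sum_{i=1}^3 \frac{r_i^2}{\sigma_i^2}
$$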

Let $J$ be the $3\times 3$ matrix whose rows are $J_1, J_2, J_3$. The Hessian of this variance-weighted problem is then exactly the information matrix:

$$
\begin{aligned}
H &= J^T \begin{bmatrix}\sigma_1^{-2} & 0 & 0\\ 0 & \sigma_2^{-2} & 0\\ 0 & 0 & \sigma_3^{-2}\end{bmatrix} J
= \sum_{i=1}^3 J_i^T \sigma_i^{-2} J_i\\
&= \begin{bmatrix}
\frac{1}{\sigma_1^2} & -\frac{w_1}{\sigma_1^2} & 0\\
-\frac{w_1}{\sigma_1^2} & \frac{w_1^2}{\sigma_1^2} + \frac{1}{\sigma_2^2} + \frac{w_3^2}{\sigma_3^2} & -\frac{w_3}{\sigma_3^2}\\
0 & -\frac{w_3}{\sigma_3^2} & \frac{1}{\sigma_3^2}
\end{bmatrix} = \Sigma^{-1}
\end{aligned}
$$
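A minimal NumPy sketch, with hypothetical parameter values, confirming that weighting by the inverse noise variances turns $J^T J$ into $\Sigma^{-1}$.

```python
import numpy as np

w1, w3 = 0.5, -1.5
s1, s2, s3 = 0.3, 1.0, 0.7  # hypothetical noise standard deviations

J = np.array([[-1.0, w1, 0.0],
              [0.0, 1.0, 0.0],
              [0.0, w3, -1.0]])
# Weight each residual by the inverse of its noise variance.
W = np.diag([1.0 / s1**2, 1.0 / s2**2, 1.0 / s3**2])
H = J.T @ W @ J

# Covariance matrix from the closed form derived earlier.
Sigma = np.array([
    [w1**2 * s2**2 + s1**2, w1 * s2**2, w1 * w3 * s2**2],
    [w1 * s2**2, s2**2, w3 * s2**2],
    [w1 * w3 * s2**2, w3 * s2**2, w3**2 * s2**2 + s3**2],
])

print(np.allclose(H, np.linalg.inv(Sigma)))  # True
```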

The least-squares problem obtained from maximum likelihood is therefore equivalent to the one built from the observation constraints:

$$
\mathop{\arg\min}_{x_1,x_2,x_3} \frac{1}{2}\begin{bmatrix}x_1 & x_2 & x_3\end{bmatrix}\Sigma^{-1}\begin{bmatrix}x_1\\ x_2\\ x_3\end{bmatrix}
\quad\Longleftrightarrow\quad
\mathop{\arg\min}_{x_1,x_2,x_3} \frac{1}{2}\Big[\frac{(w_1x_2 - x_1)^2}{\sigma_1^2} + \frac{x_2^2}{\sigma_2^2} + \frac{(w_3x_2 - x_3)^2}{\sigma_3^2}\Big]
$$

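This makes the link between the Hessian and the covariance matrix concrete: the variance-weighted Hessian is the information matrix, i.e. the inverse of the covariance matrix. The marginal of a Gaussian keeps the corresponding block of the covariance matrix. So to marginalize out a variable, we can invert the information matrix to get the covariance matrix, delete that variable's rows and columns, and invert the remainder to obtain the new information matrix.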
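The three steps just described can be sketched in NumPy. The example marginalizes out $x_2$ under hypothetical parameter values.

```python
import numpy as np

w1, w3 = 0.5, -1.5
s1, s2, s3 = 0.3, 1.0, 0.7  # hypothetical values

# Information matrix of (x1, x2, x3) from the derivation above.
Lam = np.array([
    [1 / s1**2, -w1 / s1**2, 0.0],
    [-w1 / s1**2, w1**2 / s1**2 + 1 / s2**2 + w3**2 / s3**2, -w3 / s3**2],
    [0.0, -w3 / s3**2, 1 / s3**2],
])

Sigma = np.linalg.inv(Lam)              # 1. information -> covariance
keep = [0, 2]                           # marginalize out x2 (index 1), keep x1 and x3
Sigma_kept = Sigma[np.ix_(keep, keep)]  # 2. drop the row and column of x2
Lam_marg = np.linalg.inv(Sigma_kept)    # 3. covariance -> new information matrix

print(Lam_marg)
```

Although `Lam` has a zero in the $(x_1, x_3)$ position, `Lam_marg[0, 1]` is nonzero. After $x_2$ is marginalized out, $x_1$ and $x_3$ become directly coupled in the new information matrix.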

marginalize

The marginalization procedure can be formalized with the Schur complement.
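For reference, here is a sketch of the standard block identity behind this. It assumes the variables are split into a retained group $a$ and a marginalized group $b$. The block labels $\Lambda_{aa}, \Lambda_{ab}, \dots$ are introduced here for illustration; in the example that follows, the matrix $A$ corresponds to $\Sigma_{aa}$.

$$
\Lambda=\begin{bmatrix}\Lambda_{aa}&\Lambda_{ab}\\ \Lambda_{ba}&\Lambda_{bb}\end{bmatrix},\qquad
\Sigma=\Lambda^{-1}=\begin{bmatrix}\Sigma_{aa}&\Sigma_{ab}\\ \Sigma_{ba}&\Sigma_{bb}\end{bmatrix},\qquad
\Sigma_{aa}^{-1}=\Lambda_{aa}-\Lambda_{ab}\Lambda_{bb}^{-1}\Lambda_{ba}
$$

The right-hand side is the Schur complement of $\Lambda_{bb}$ in $\Lambda$. The marginalized information matrix can therefore be formed by inverting only $\Lambda_{bb}$, rather than the full $\Lambda$.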

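Take marginalizing $b$ as an example. First invert the information matrix (here $\Lambda$) to obtain the covariance matrix $\Sigma$. Next, extract the sub-block $A$ of $\Sigma$ that does not involve $b$. Finally, invert $A$; the result is the information matrix after marginalizing $b$.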
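The covariance route above and the Schur-complement formula should give the same matrix. Below is a minimal NumPy sketch comparing them on the three-variable example. The function names and parameter values are hypothetical, and $x_2$ plays the role of $b$.

```python
import numpy as np

def marginalize_schur(Lam, keep, drop):
    """Schur complement of Lam[drop, drop]: information matrix of the kept variables."""
    L_aa = Lam[np.ix_(keep, keep)]
    L_ab = Lam[np.ix_(keep, drop)]
    L_bb = Lam[np.ix_(drop, drop)]
    # Lam is symmetric, so L_ba = L_ab.T; solve() applies L_bb^{-1} without forming it.
    return L_aa - L_ab @ np.linalg.solve(L_bb, L_ab.T)

def marginalize_via_covariance(Lam, keep):
    """Route described in the text: invert, keep the sub-block A, invert again."""
    Sigma = np.linalg.inv(Lam)
    return np.linalg.inv(Sigma[np.ix_(keep, keep)])

w1, w3 = 0.5, -1.5
s1, s2, s3 = 0.3, 1.0, 0.7  # hypothetical values
Lam = np.array([
    [1 / s1**2, -w1 / s1**2, 0.0],
    [-w1 / s1**2, w1**2 / s1**2 + 1 / s2**2 + w3**2 / s3**2, -w3 / s3**2],
    [0.0, -w3 / s3**2, 1 / s3**2],
])

keep, drop = [0, 2], [1]  # x2 plays the role of b
print(np.allclose(marginalize_schur(Lam, keep, drop),
                  marginalize_via_covariance(Lam, keep)))  # True
```

In practice the Schur-complement form is usually preferred when the marginalized block $b$ is small, because only $\Lambda_{bb}$ has to be factored.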