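Contents

- 1. Categorical variables
- 1.1 One-hot encoding (dummy variables)
- Checking string-encoded categorical data
- 1.2 Numbers can encode categoricals
- 2. Binning, discretization, linear models, and trees
- 3. Interactions and polynomials
- 4. Univariate nonlinear transformations
- Summary (sections 2 to 4)
- 5. Automatic feature selection
- 5.1 Univariate statistics
- 5.2 Model-based feature selection
- 5.3 Iterative feature selection
- 6. Utilizing expert knowledge
- Task: predicting whether shared bikes will still be available
- Loading the data
- Visualizing the data
- Observing and analyzing the data
- Determining the input features and the output
- Trying a single integer feature as the data representation
- Defining a function (split the data, build the model, and visualize the result)
- Using a random forest as the first model
- Why the prediction is a flat line
- Using expert knowledge
- Predicting with the time of day as the only feature
- Adding the day of the week as a feature
- Using linear regression as the model
- Interpreting the integers as categorical variables and predicting with ridge regression
- Letting the model learn one coefficient for each combination of day of week and time of day
- Plotting the coefficients learned by the model
- Creating feature names for the hour and day-of-week features
- Naming all interaction features and keeping only those with nonzero coefficients
- Visualizing the coefficients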
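1. Categorical variables

- Dataset: adult incomes (the adult dataset)

- Task: predict a worker's income

- Features
  - age
  - type of employment (workclass)
  - education level
  - gender
  - working hours per week
  - occupation
  - and so on

- The first few entries of the dataset
  - Continuous features
    - age
    - hours-per-week
  - Categorical features: take values from a fixed list of possible values (not a range) and denote a qualitative property (not an amount)
    - workclass
    - education
    - gender
    - occupation

- Kinds of task
  - Classification
    - income <=50K
    - income >50K
  - Regression
    - predict the exact income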

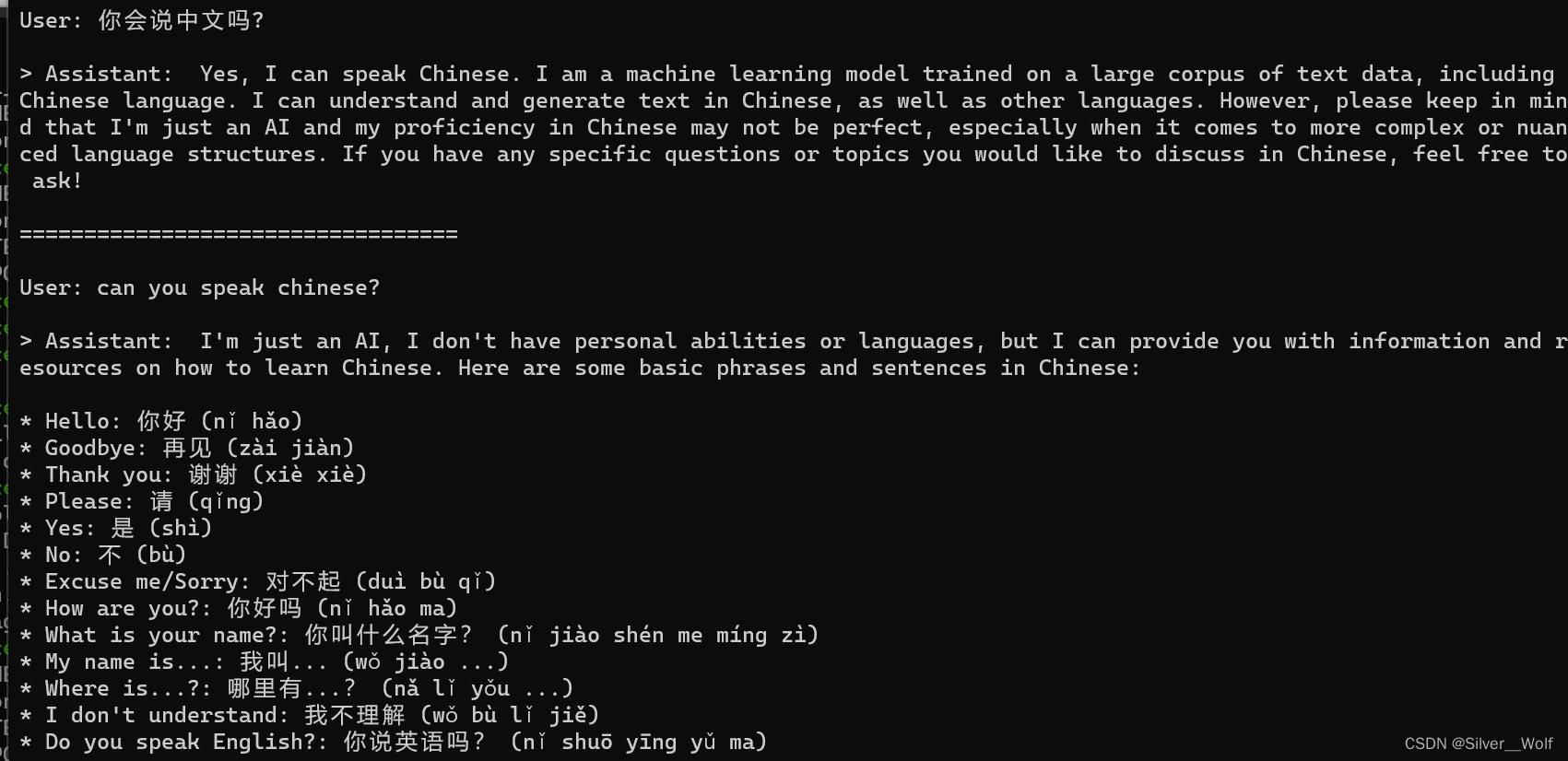

Suppose we want to learn a logistic regression classifier.

Prediction formula:

$$\hat{y} = w[0]*x[0] + w[1]*x[1] + \cdots + w[p]*x[p] + b > 0$$

- $w[i]$: learned coefficients
- $b$: learned coefficient (the intercept)
- $x[i]$: input features
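The formula only makes sense when every x[i] is a number, which is why string-valued features such as workclass have to be encoded first. As a minimal sketch of the decision rule itself, with made-up coefficients and samples (all values below are illustrative assumptions, not taken from the adult dataset):

```python
import numpy as np

# Hypothetical learned parameters for a model with three numeric features.
w = np.array([0.5, -1.0, 2.0])   # one coefficient per feature
b = -0.25                        # intercept

# Two made-up samples, one per row.
X = np.array([[1.0, 0.0, 0.5],
              [0.0, 2.0, 0.1]])

# w[0]*x[0] + ... + w[p]*x[p] + b, computed for every row at once.
decision = X @ w + b
# Predict the positive class wherever the decision value exceeds zero.
y_hat = decision > 0
print(decision, y_hat)
```

A string such as "State-gov" cannot be multiplied by a coefficient, so each category needs its own 0/1 column before this rule can be applied.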

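1.1 One-hot encoding (dummy variables)

- Idea: replace a categorical variable with one or more new features
  - each new feature takes the value 0 or 1

- Encode the workclass feature using one-hot encoding

- Two ways to convert data to a one-hot encoding of categorical variables
  - with pandas: the get_dummies function
  - with scikit-learn: the OneHotEncoder class

Loading the data with pandas: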

    import pandas as pd

    # The file has no header row with column names, so we pass header=None
    # and provide the column names explicitly in "names"
    data = pd.read_csv(
        "data/adult.data", header=None, index_col=False,
        names=['age', 'workclass', 'fnlwgt', 'education', 'education-num',
               'marital-status', 'occupation', 'relationship', 'race', 'gender',
               'capital-gain', 'capital-loss', 'hours-per-week', 'native-country',
               'income'])
    # For illustration purposes, we only select some of the columns
    data = data[['age', 'workclass', 'education', 'gender', 'hours-per-week',
                 'occupation', 'income']]
    # Show all columns
    pd.set_option('display.max_columns', None)
    # Show all rows
    pd.set_option('display.max_rows', None)
    print(data.head())
    #    age          workclass   education   gender  hours-per-week          occupation  income
    # 0   39          State-gov   Bachelors     Male              40        Adm-clerical   <=50K
    # 1   50   Self-emp-not-inc   Bachelors     Male              13     Exec-managerial   <=50K
    # 2   38            Private     HS-grad     Male              40   Handlers-cleaners   <=50K
    # 3   53            Private        11th     Male              40   Handlers-cleaners   <=50K
    # 4   28            Private   Bachelors   Female              40      Prof-specialty   <=50K

Checking string-encoded categorical data

Use the value_counts method of a pandas Series (the type of a single column in a DataFrame) to show the unique values and how often they appear:

    import pandas as pd

    data = pd.read_csv(
        "data/adult.data", header=None, index_col=False,
        names=['age', 'workclass', 'fnlwgt', 'education', 'education-num',
               'marital-status', 'occupation', 'relationship', 'race', 'gender',
               'capital-gain', 'capital-loss', 'hours-per-week', 'native-country',
               'income'])
    data = data[['age', 'workclass', 'education', 'gender', 'hours-per-week',
                 'occupation', 'income']]
    print(data.gender.value_counts())
    #  Male      21790
    #  Female    10771
    # Name: gender, dtype: int64

Using the get_dummies function, which automatically transforms all columns that have object type or are categorical:

    import pandas as pd

    data = pd.read_csv(
        "data/adult.data", header=None, index_col=False,
        names=['age', 'workclass', 'fnlwgt', 'education', 'education-num',
               'marital-status', 'occupation', 'relationship', 'race', 'gender',
               'capital-gain', 'capital-loss', 'hours-per-week', 'native-country',
               'income'])
    data = data[['age', 'workclass', 'education', 'gender', 'hours-per-week',
                 'occupation', 'income']]
    print("Original features:\n", list(data.columns), "\n")
    # Original features:
    # ['age', 'workclass', 'education', 'gender', 'hours-per-week', 'occupation', 'income']
    data_dummies = pd.get_dummies(data)
    print("Features:\n", list(data_dummies.columns))
    # Features:
    # ['age', 'hours-per-week', 'workclass_ ?', 'workclass_ Federal-gov',
    #  'workclass_ Local-gov', 'workclass_ Never-worked', 'workclass_ Private',
    #  'workclass_ Self-emp-inc', 'workclass_ Self-emp-not-inc',
    #  'workclass_ State-gov', 'workclass_ Without-pay', 'education_ 10th',
    #  'education_ 11th', 'education_ 12th', 'education_ 1st-4th',
    #  'education_ 5th-6th', 'education_ 7th-8th', 'education_ 9th',
    #  'education_ Assoc-acdm', 'education_ Assoc-voc', 'education_ Bachelors',
    #  'education_ Doctorate', 'education_ HS-grad', 'education_ Masters',
    #  'education_ Preschool', 'education_ Prof-school', 'education_ Some-college',
    #  'gender_ Female', 'gender_ Male', 'occupation_ ?', 'occupation_ Adm-clerical',
    #  'occupation_ Armed-Forces', 'occupation_ Craft-repair',
    #  'occupation_ Exec-managerial', 'occupation_ Farming-fishing',
    #  'occupation_ Handlers-cleaners', 'occupation_ Machine-op-inspct',
    #  'occupation_ Other-service', 'occupation_ Priv-house-serv',
    #  'occupation_ Prof-specialty', 'occupation_ Protective-serv',
    #  'occupation_ Sales', 'occupation_ Tech-support',
    #  'occupation_ Transport-moving', 'income_ <=50K', 'income_ >50K']

Use the values attribute to convert the data_dummies DataFrame into a NumPy array, extracting only the columns that contain features:

    import pandas as pd

    data = pd.read_csv(
        "data/adult.data", header=None, index_col=False,
        names=['age', 'workclass', 'fnlwgt', 'education', 'education-num',
               'marital-status', 'occupation', 'relationship', 'race', 'gender',
               'capital-gain', 'capital-loss', 'hours-per-week', 'native-country',
               'income'])
    data = data[['age', 'workclass', 'education', 'gender', 'hours-per-week',
                 'occupation', 'income']]
    data_dummies = pd.get_dummies(data)
    # Label-based slicing includes the end column, so this leaves out only
    # the two income_ columns, which encode the target
    features = data_dummies.loc[:, 'age':'occupation_ Transport-moving']
    # Extract NumPy arrays
    X = features.values
    y = data_dummies['income_ >50K'].values
    print("X.shape: {} y.shape: {}".format(X.shape, y.shape))
    # X.shape: (32561, 44) y.shape: (32561,)

Fit a logistic regression model and compute its accuracy on the test set:

    import pandas as pd
    from sklearn.linear_model import LogisticRegression
    from sklearn.model_selection import train_test_split

    data = pd.read_csv(
        "data/adult.data", header=None, index_col=False,
        names=['age', 'workclass', 'fnlwgt', 'education', 'education-num',
               'marital-status', 'occupation', 'relationship', 'race', 'gender',
               'capital-gain', 'capital-loss', 'hours-per-week', 'native-country',
               'income'])
    data = data[['age', 'workclass', 'education', 'gender', 'hours-per-week',
                 'occupation', 'income']]
    data_dummies = pd.get_dummies(data)
    features = data_dummies.loc[:, 'age':'occupation_ Transport-moving']
    X = features.values
    y = data_dummies['income_ >50K'].values
    X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)
    logreg = LogisticRegression()
    logreg.fit(X_train, y_train)
    print("Test score: {:.3f}".format(logreg.score(X_test, y_test)))
    # Test score: 0.807

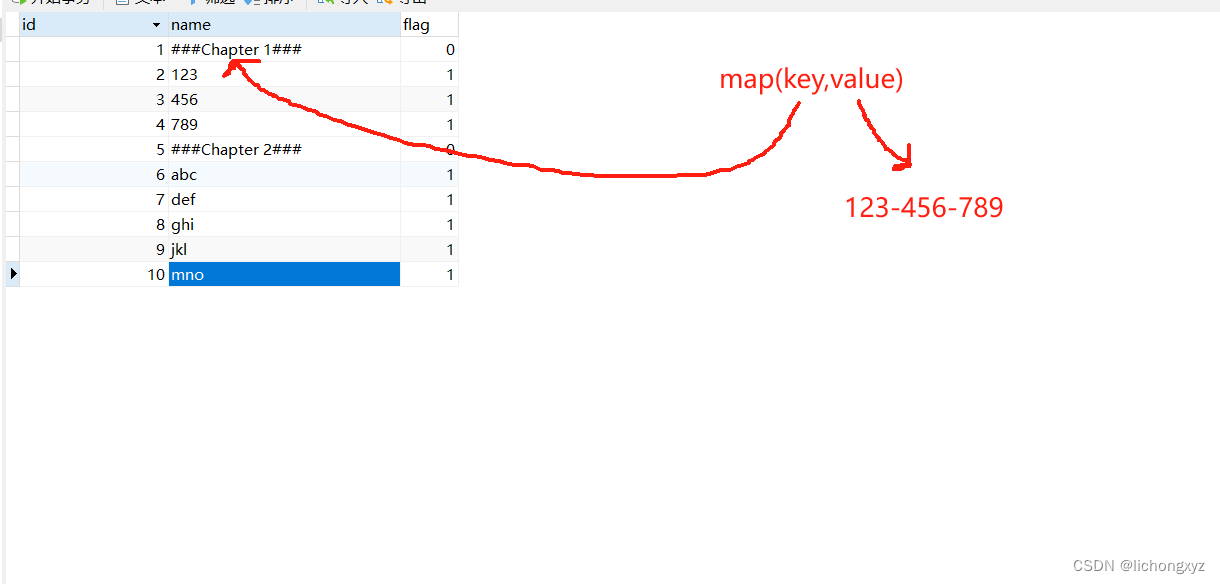

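1.2 Numbers can encode categoricals

The get_dummies function in pandas treats all numbers as continuous and will not create dummy variables for them.

- Two ways around this
  - use scikit-learn's OneHotEncoder, for which you can specify which variables are continuous and which are discrete
  - convert the numeric columns in the DataFrame to strings

Verifying that get_dummies only encodes the string feature: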

    import pandas as pd

    pd.set_option('display.max_columns', None)
    pd.set_option('display.max_rows', None)
    # Create a DataFrame with an integer feature and a categorical string feature
    demo_df = pd.DataFrame({'Integer Feature': [0, 1, 2, 1],
                            'Categorical Feature': ['socks', 'fox', 'socks', 'box']})
    print(demo_df)
    #    Integer Feature Categorical Feature
    # 0                0               socks
    # 1                1                 fox
    # 2                2               socks
    # 3                1                 box
    print(pd.get_dummies(demo_df))
    #    Integer Feature  Categorical Feature_box  Categorical Feature_fox  Categorical Feature_socks
    # 0                0                        0                        0                          1
    # 1                1                        0                        1                          0
    # 2                2                        0                        0                          1
    # 3                1                        1                        0                          0

Use the columns parameter to list explicitly the columns you want to encode (the integer column is also converted to strings here):

    import pandas as pd

    pd.set_option('display.max_columns', None)
    pd.set_option('display.max_rows', None)
    demo_df = pd.DataFrame({'Integer Feature': [0, 1, 2, 1],
                            'Categorical Feature': ['socks', 'fox', 'socks', 'box']})
    demo_df['Integer Feature'] = demo_df['Integer Feature'].astype(str)
    print(pd.get_dummies(demo_df, columns=['Integer Feature', 'Categorical Feature']))
    #    Integer Feature_0  Integer Feature_1  Integer Feature_2  Categorical Feature_box  Categorical Feature_fox  Categorical Feature_socks
    # 0                  1                  0                  0                        0                        0                          1
    # 1                  0                  1                  0                        0                        1                          0
    # 2                  0                  0                  1                        0                        0                          1
    # 3                  0                  1                  0                        1                        0                          0
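The other route mentioned in this section, scikit-learn's OneHotEncoder, is not shown in these notes. The following is a minimal sketch of one way to do it, assuming ColumnTransformer is used to name the columns that should be treated as categorical; the transformer name "onehot" is an arbitrary label chosen for illustration.

```python
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.preprocessing import OneHotEncoder

demo_df = pd.DataFrame({'Integer Feature': [0, 1, 2, 1],
                        'Categorical Feature': ['socks', 'fox', 'socks', 'box']})

# Treat BOTH columns as categorical, including the integer one.
ct = ColumnTransformer(
    [("onehot", OneHotEncoder(), ['Integer Feature', 'Categorical Feature'])])
encoded = ct.fit_transform(demo_df)
# Depending on sparsity the result may be a sparse matrix; make it dense.
encoded = encoded.toarray() if hasattr(encoded, "toarray") else encoded
print(encoded.shape)
```

Unlike get_dummies, the fitted transformer remembers the categories seen during fit, so the same encoding can later be applied to a test set with transform.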

2. Binning, discretization, linear models, and trees

Comparing a linear regression model and a decision tree regressor on the wave dataset:

    import numpy as np
    from matplotlib import pyplot as plt
    import mglearn
    from sklearn.linear_model import LinearRegression
    from sklearn.tree import DecisionTreeRegressor

    X, y = mglearn.datasets.make_wave(n_samples=100)
    line = np.linspace(-3, 3, 1000, endpoint=False).reshape(-1, 1)

    reg = DecisionTreeRegressor(min_samples_split=3).fit(X, y)
    plt.plot(line, reg.predict(line), label="decision tree")

    reg = LinearRegression().fit(X, y)
    plt.plot(line, reg.predict(line), label="linear regression")

    plt.plot(X[:, 0], y, 'o', c='k')
    plt.ylabel("Regression output")
    plt.xlabel("Input feature")
    plt.legend(loc="best")
    plt.tight_layout()
    plt.show()

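- Linear models: can only model linear relationships, which are straight lines in the case of a single feature

- Decision trees: can build a much more complex model of the data, but this depends strongly on the representation of the data

- Binning (discretization): split a continuous feature into multiple features, which makes a linear model more powerful on continuous data

- Partition the input range of the feature into a fixed number of bins
  - a data point is then represented by the bin it falls into
  - here we define 10 bins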

    import numpy as np

    bins = np.linspace(-3, 3, 11)
    print("bins: {}".format(bins))
    # bins: [-3.  -2.4 -1.8 -1.2 -0.6  0.   0.6  1.2  1.8  2.4  3. ]

Record which bin each data point falls into, using the np.digitize function:

    import numpy as np
    import mglearn

    X, y = mglearn.datasets.make_wave(n_samples=100)
    bins = np.linspace(-3, 3, 11)
    which_bin = np.digitize(X, bins=bins)
    print("\nData points:\n", X[:5])
    # Data points:
    # [[-0.75275929]
    #  [ 2.70428584]
    #  [ 1.39196365]
    #  [ 0.59195091]
    #  [-2.06388816]]
    print("\nBin membership for data points:\n", which_bin[:5])
    # Bin membership for data points:
    # [[ 4]
    #  [10]
    #  [ 8]
    #  [ 6]
    #  [ 2]]

Use OneHotEncoder from the preprocessing module to transform this discrete feature into a one-hot encoding:

    import numpy as np
    import mglearn
    from sklearn.preprocessing import OneHotEncoder

    X, y = mglearn.datasets.make_wave(n_samples=100)
    bins = np.linspace(-3, 3, 11)
    which_bin = np.digitize(X, bins=bins)
    # Transform using the OneHotEncoder
    # (scikit-learn 1.2 renamed this parameter to sparse_output)
    encoder = OneHotEncoder(sparse=False)
    # encoder.fit finds the unique values that appear in which_bin
    encoder.fit(which_bin)
    # transform creates the one-hot encoding
    X_binned = encoder.transform(which_bin)
    print(X_binned[:5])
    # [[0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]
    #  [0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]
    #  [0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]
    #  [0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]
    #  [0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]]
    print("X_binned.shape: {}".format(X_binned.shape))
    # X_binned.shape: (100, 10)

Build a new linear model and a new decision tree model on the one-hot encoded data:

    import numpy as np
    from matplotlib import pyplot as plt
    import mglearn
    from sklearn.linear_model import LinearRegression
    from sklearn.tree import DecisionTreeRegressor
    from sklearn.preprocessing import OneHotEncoder

    X, y = mglearn.datasets.make_wave(n_samples=100)
    line = np.linspace(-3, 3, 1000, endpoint=False).reshape(-1, 1)
    bins = np.linspace(-3, 3, 11)
    which_bin = np.digitize(X, bins=bins)
    encoder = OneHotEncoder(sparse=False)
    encoder.fit(which_bin)
    X_binned = encoder.transform(which_bin)
    line_binned = encoder.transform(np.digitize(line, bins=bins))

    reg = LinearRegression().fit(X_binned, y)
    plt.plot(line, reg.predict(line_binned), label='linear regression binned')

    reg = DecisionTreeRegressor(min_samples_split=3).fit(X_binned, y)
    plt.plot(line, reg.predict(line_binned), label='decision tree binned')

    plt.plot(X[:, 0], y, 'o', c='k')
    plt.vlines(bins, -3, 3, linewidth=1, alpha=.2)
    plt.legend(loc="best")
    plt.ylabel("Regression output")
    plt.xlabel("Input feature")
    plt.tight_layout()
    plt.show()

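- The linear model becomes more flexible: it now predicts a separate constant value for each bin

- The decision tree becomes less flexible
  - trees can already learn whichever binning is most useful for predicting on this data

- For a particular dataset, if there are good reasons to use a linear model (the dataset is very large and high-dimensional, but some features have a nonlinear relation with the output), binning can be a great way to increase modeling power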
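The digitize-then-encode steps above can also be done in a single transformer. The sketch below swaps in scikit-learn's KBinsDiscretizer, which these notes do not use, and runs on synthetic uniform data rather than the wave dataset; both choices are assumptions made to keep the example self-contained.

```python
import numpy as np
from sklearn.preprocessing import KBinsDiscretizer

# Synthetic single feature in roughly the same range as the wave data.
rng = np.random.RandomState(0)
X = rng.uniform(-3, 3, size=(100, 1))

# strategy="uniform" gives equal-width bins; "onehot-dense" returns a
# dense one-hot array with one column per bin.
kb = KBinsDiscretizer(n_bins=10, encode="onehot-dense", strategy="uniform")
X_binned = kb.fit_transform(X)

print(X_binned.shape)
print(kb.bin_edges_[0])
```

One difference from the manual version: the bin edges are derived from the minimum and maximum of the training data, not from a fixed range such as -3 to 3.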

3. Interactions and polynomials

Adding a slope to the binned data by adding the original feature back in:

    import numpy as np
    from matplotlib import pyplot as plt
    import mglearn
    from sklearn.linear_model import LinearRegression
    from sklearn.preprocessing import OneHotEncoder

    X, y = mglearn.datasets.make_wave(n_samples=100)
    line = np.linspace(-3, 3, 1000, endpoint=False).reshape(-1, 1)
    bins = np.linspace(-3, 3, 11)
    which_bin = np.digitize(X, bins=bins)
    encoder = OneHotEncoder(sparse=False)
    encoder.fit(which_bin)
    X_binned = encoder.transform(which_bin)
    X_combined = np.hstack([X, X_binned])
    print(X_combined.shape)
    # (100, 11)
    line_binned = encoder.transform(np.digitize(line, bins=bins))

    reg = LinearRegression().fit(X_combined, y)
    line_combined = np.hstack([line, line_binned])
    plt.plot(line, reg.predict(line_combined), label='linear regression combined')

    for bin in bins:
        plt.plot([bin, bin], [-3, 3], ':', c='k')
    plt.legend(loc="best")
    plt.ylabel("Regression output")
    plt.xlabel("Input feature")
    plt.plot(X[:, 0], y, 'o', c='k')
    plt.tight_layout()
    plt.show()

Giving each bin its own slope by multiplying the bin indicators with the original feature:

    import numpy as np
    from matplotlib import pyplot as plt
    import mglearn
    from sklearn.linear_model import LinearRegression
    from sklearn.preprocessing import OneHotEncoder

    X, y = mglearn.datasets.make_wave(n_samples=100)
    line = np.linspace(-3, 3, 1000, endpoint=False).reshape(-1, 1)
    bins = np.linspace(-3, 3, 11)
    which_bin = np.digitize(X, bins=bins)
    encoder = OneHotEncoder(sparse=False)
    encoder.fit(which_bin)
    X_binned = encoder.transform(which_bin)
    # Bin indicators plus the product of each indicator with the feature
    X_product = np.hstack([X_binned, X * X_binned])
    print(X_product.shape)
    # (100, 20)
    line_binned = encoder.transform(np.digitize(line, bins=bins))
    line_product = np.hstack([line_binned, line * line_binned])

    reg = LinearRegression().fit(X_product, y)
    plt.plot(line, reg.predict(line_product), label='linear regression combined')

    for bin in bins:
        plt.plot([bin, bin], [-3, 3], ':', c='k')
    plt.legend(loc="best")
    plt.ylabel("Regression output")
    plt.xlabel("Input feature")
    plt.plot(X[:, 0], y, 'o', c='k')
    plt.tight_layout()
    plt.show()

Using polynomials of the original feature, implemented in PolynomialFeatures in the preprocessing module:

    import mglearn
    from sklearn.preprocessing import PolynomialFeatures

    X, y = mglearn.datasets.make_wave(n_samples=100)
    # Include polynomials up to x ** 10:
    # the default include_bias=True would add a feature that is constantly 1
    poly = PolynomialFeatures(degree=10, include_bias=False)
    poly.fit(X)
    X_poly = poly.transform(X)
    print("X_poly.shape: {}".format(X_poly.shape))
    # X_poly.shape: (100, 10)
    print("Entries of X:\n{}".format(X[:5]))
    # Entries of X:
    # [[-0.75275929]
    #  [ 2.70428584]
    #  [ 1.39196365]
    #  [ 0.59195091]
    #  [-2.06388816]]
    print("Entries of X poly:\n{}".format(X_poly[:5]))
    # Entries of X poly:
    # [[-7.52759287e-01  5.66646544e-01 -4.26548448e-01  3.21088306e-01
    #   -2.41702204e-01  1.81943579e-01 -1.36959719e-01  1.03097700e-01
    #   -7.76077513e-02  5.84199555e-02]
    #  [ 2.70428584e+00  7.31316190e+00  1.97768801e+01  5.34823369e+01
    #    1.44631526e+02  3.91124988e+02  1.05771377e+03  2.86036036e+03
    #    7.73523202e+03  2.09182784e+04]
    #  [ 1.39196365e+00  1.93756281e+00  2.69701700e+00  3.75414962e+00
    #    5.22563982e+00  7.27390068e+00  1.01250053e+01  1.40936394e+01
    #    1.96178338e+01  2.73073115e+01]
    #  [ 5.91950905e-01  3.50405874e-01  2.07423074e-01  1.22784277e-01
    #    7.26822637e-02  4.30243318e-02  2.54682921e-02  1.50759786e-02
    #    8.92423917e-03  5.28271146e-03]
    #  [-2.06388816e+00  4.25963433e+00 -8.79140884e+00  1.81444846e+01
    #   -3.74481869e+01  7.72888694e+01 -1.59515582e+02  3.29222321e+02
    #   -6.79478050e+02  1.40236670e+03]]
    print("Polynomial feature names:\n{}".format(poly.get_feature_names_out()))
    # Polynomial feature names:
    # ['x0' 'x0^2' 'x0^3' 'x0^4' 'x0^5' 'x0^6' 'x0^7' 'x0^8' 'x0^9' 'x0^10']

Polynomial regression: using polynomial features together with a linear regression model:

    import numpy as np
    from matplotlib import pyplot as plt
    import mglearn
    from sklearn.linear_model import LinearRegression
    from sklearn.preprocessing import PolynomialFeatures

    X, y = mglearn.datasets.make_wave(n_samples=100)
    line = np.linspace(-3, 3, 1000, endpoint=False).reshape(-1, 1)
    poly = PolynomialFeatures(degree=10, include_bias=False)
    poly.fit(X)
    X_poly = poly.transform(X)

    reg = LinearRegression().fit(X_poly, y)
    line_poly = poly.transform(line)
    plt.plot(line, reg.predict(line_poly), label='polynomial linear regression')
    plt.plot(X[:, 0], y, 'o', c='k')
    plt.ylabel("Regression output")
    plt.xlabel("Input feature")
    plt.legend(loc="best")
    plt.tight_layout()
    plt.show()

For comparison, a kernel SVM model learned on the original data, without any transformation:

    import numpy as np
    from matplotlib import pyplot as plt
    import mglearn
    from sklearn.svm import SVR

    X, y = mglearn.datasets.make_wave(n_samples=100)
    line = np.linspace(-3, 3, 1000, endpoint=False).reshape(-1, 1)

    for gamma in [1, 10]:
        svr = SVR(gamma=gamma).fit(X, y)
        plt.plot(line, svr.predict(line), label='SVR gamma={}'.format(gamma))

    plt.plot(X[:, 0], y, 'o', c='k')
    plt.ylabel("Regression output")
    plt.xlabel("Input feature")
    plt.legend(loc="best")
    plt.tight_layout()
    plt.show()

A more realistic application of interaction and polynomial features:

    # Note: load_boston was removed in scikit-learn 1.2,
    # so this example needs an older version
    from sklearn.datasets import load_boston
    from sklearn.model_selection import train_test_split
    from sklearn.preprocessing import MinMaxScaler, PolynomialFeatures
    from sklearn.linear_model import Ridge
    from sklearn.ensemble import RandomForestRegressor

    boston = load_boston()
    X_train, X_test, y_train, y_test = train_test_split(
        boston.data, boston.target, random_state=0)
    # Rescale the data
    scaler = MinMaxScaler()
    X_train_scaled = scaler.fit_transform(X_train)
    X_test_scaled = scaler.transform(X_test)
    # degree=2 yields all features that are products of up to two original features
    poly = PolynomialFeatures(degree=2).fit(X_train_scaled)
    X_train_poly = poly.transform(X_train_scaled)
    X_test_poly = poly.transform(X_test_scaled)
    print("X_train.shape: {}".format(X_train.shape))
    # X_train.shape: (379, 13)
    print("X_train_poly.shape: {}".format(X_train_poly.shape))
    # X_train_poly.shape: (379, 105)

    ridge = Ridge().fit(X_train_scaled, y_train)
    print("Score without interactions: {:.3f}".format(ridge.score(X_test_scaled, y_test)))
    # Score without interactions: 0.621
    ridge = Ridge().fit(X_train_poly, y_train)
    print("Score with interactions: {:.3f}".format(ridge.score(X_test_poly, y_test)))
    # Score with interactions: 0.753

    rf = RandomForestRegressor(n_estimators=100).fit(X_train_scaled, y_train)
    print("Score without interactions: {:.3f}".format(rf.score(X_test_scaled, y_test)))
    # Score without interactions: 0.799
    # The forest must be trained on the polynomial features to be scored on them
    rf = RandomForestRegressor(n_estimators=100).fit(X_train_poly, y_train)
    print("Score with interactions: {:.3f}".format(rf.score(X_test_poly, y_test)))
    # Score with interactions: 0.763

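4. Univariate nonlinear transformations

- Tree-based models only care about the ordering of the feature values

- Linear models and neural networks are tied to the scale and distribution of each feature
  - functions such as log and exp can help by adjusting the relative scales in the data

- Most models work best when each feature is loosely Gaussian distributed
  - a histogram of each feature should have something resembling the familiar "bell curve" shape

Creating a synthetic dataset of counts:

    import numpy as np

    rnd = np.random.RandomState(0)
    X_org = rnd.normal(size=(1000, 3))
    w = rnd.normal(size=3)
    X = rnd.poisson(10 * np.exp(X_org))
    y = np.dot(X_org, w)
    print("Number of feature appearances:\n{}".format(np.bincount(X[:, 0])))
    # Number of feature appearances:
    # [28 38 68 48 61 59 45 56 37 40 35 34 36 26 23 26 27 21 23 23 18 21 10  9
    #  17  9  7 14 12  7  3  8  4  5  5  3  4  2  4  1  1  3  2  5  3  8  2  5
    #   2  1  2  3  3  2  2  3  3  0  1  2  1  0  0  3  1  0  0  0  1  3  0  1
    #   0  2  0  1  1  0  0  0  0  1  0  0  2  2  0  1  1  0  0  0  0  1  1  0
    #   0  0  0  0  0  0  1  0  0  0  0  0  1  1  0  0  1  0  0  0  0  0  0  0
    #   1  0  0  0  0  1  0  0  0  0  0  0  0  0  0  0  0  0  0  0  1]

- bincount always starts counting at 0, so the first entry is the number of times the value 0 appears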

Visualizing the counts:

    import numpy as np
    from matplotlib import pyplot as plt

    rnd = np.random.RandomState(0)
    X_org = rnd.normal(size=(1000, 3))
    w = rnd.normal(size=3)
    X = rnd.poisson(10 * np.exp(X_org))
    y = np.dot(X_org, w)
    bins = np.bincount(X[:, 0])
    plt.bar(range(len(bins)), bins)
    plt.ylabel("Number of appearances")
    plt.xlabel("Value")
    plt.tight_layout()
    plt.show()

![Histogram of the values of feature X[:, 0]](https://img-blog.csdnimg.cn/direct/6a53f4b83b4a4789b9fefd32b806db5b.png#pic_center)

Fitting a ridge regression (Ridge) to the raw counts:

    import numpy as np
    from sklearn.model_selection import train_test_split
    from sklearn.linear_model import Ridge

    rnd = np.random.RandomState(0)
    X_org = rnd.normal(size=(1000, 3))
    w = rnd.normal(size=3)
    X = rnd.poisson(10 * np.exp(X_org))
    y = np.dot(X_org, w)
    X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)
    score = Ridge().fit(X_train, y_train).score(X_test, y_test)
    print("Test score: {:.3f}".format(score))
    # Test score: 0.622

Applying a logarithmic transformation (log(X + 1), because the counts contain zeros):

    import numpy as np
    from matplotlib import pyplot as plt
    from sklearn.model_selection import train_test_split

    rnd = np.random.RandomState(0)
    X_org = rnd.normal(size=(1000, 3))
    w = rnd.normal(size=3)
    X = rnd.poisson(10 * np.exp(X_org))
    y = np.dot(X_org, w)
    X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)
    X_train_log = np.log(X_train + 1)
    X_test_log = np.log(X_test + 1)
    plt.hist(X_train_log[:, 0], bins=25)
    plt.ylabel("Number of appearances")
    plt.xlabel("Value")
    plt.tight_layout()
    plt.show()

![Histogram of the values of feature X[:, 0] after the logarithmic transformation](https://img-blog.csdnimg.cn/direct/2527b9f733514394ad87b1564e8c2544.png#pic_center)

Fitting a ridge regression to the transformed data:

    import numpy as np
    from sklearn.linear_model import Ridge
    from sklearn.model_selection import train_test_split

    rnd = np.random.RandomState(0)
    X_org = rnd.normal(size=(1000, 3))
    w = rnd.normal(size=3)
    X = rnd.poisson(10 * np.exp(X_org))
    y = np.dot(X_org, w)
    X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)
    X_train_log = np.log(X_train + 1)
    X_test_log = np.log(X_test + 1)
    score = Ridge().fit(X_train_log, y_train).score(X_test_log, y_test)
    print("Test score: {:.3f}".format(score))
    # Test score: 0.875

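Summary (sections 2 to 4)

- Linear models and naive Bayes models: binning, polynomials, and interactions can have a large influence on how they perform on a given dataset
  - this is particularly true for less complex models

- Tree-based models: can often discover important interactions themselves and in most cases do not require the data to be transformed explicitly

- SVMs, nearest neighbors, and neural networks: might sometimes benefit from binning, interactions, or polynomials, but the effect is usually much less clear than for linear models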

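5. Automatic feature selection

5.1 Univariate statistics

- Compute whether there is a statistically significant relationship between each feature and the target, and select the features that are related with the highest confidence

- For classification, this is also known as analysis of variance (ANOVA)

- Key property of these tests: they are univariate
  - each feature is considered individually
  - a feature is discarded if it is only informative when combined with another feature

- Very fast to compute, and no model needs to be built

- Completely independent of the model that might be applied after the feature selection

- Steps for univariate feature selection
  - choose a test
    - classification: f_classif
    - regression: f_regression
  - choose a method for discarding features based on the p-values determined in the test
    - all methods for discarding features use a threshold to discard every feature with too high a p-value
    - methods for computing the threshold
      - SelectKBest: selects a fixed number k of features
      - SelectPercentile: selects a fixed percentage of features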
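The two steps listed above (pick a test, then pick a thresholding method) can be sketched on a tiny synthetic problem. The data below is an assumption made for illustration: feature 0 depends on the class label and the other two features are pure noise.

```python
import numpy as np
from sklearn.feature_selection import SelectKBest, f_classif

rng = np.random.RandomState(0)
y = np.repeat([0, 1], 100)
# Feature 0 shifts with the class; features 1 and 2 are noise.
informative = y * 3.0 + rng.normal(size=200)
noise = rng.normal(size=(200, 2))
X = np.column_stack([informative, noise])

# Step 1: the test. f_classif returns one F-score and one p-value per feature.
F, p = f_classif(X, y)
# Step 2: the thresholding method. Keep only the single best-scoring feature.
select = SelectKBest(score_func=f_classif, k=1).fit(X, y)

print(p)
print(select.get_support())
```

Because each feature is tested on its own, a feature that only matters in combination with another one would receive a high p-value here and be dropped.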

Applying univariate feature selection to the cancer dataset:

    import numpy as np
    from matplotlib import pyplot as plt
    from sklearn.datasets import load_breast_cancer
    from sklearn.feature_selection import SelectPercentile
    from sklearn.model_selection import train_test_split

    cancer = load_breast_cancer()
    # Get deterministic random numbers
    rng = np.random.RandomState(42)
    noise = rng.normal(size=(len(cancer.data), 50))
    # Add noise features to the data:
    # the first 30 features are from the dataset, the next 50 are noise
    X_w_noise = np.hstack([cancer.data, noise])
    X_train, X_test, y_train, y_test = train_test_split(
        X_w_noise, cancer.target, random_state=0, test_size=.5)
    # Use f_classif (the default) and SelectPercentile to select 50% of the features
    select = SelectPercentile(percentile=50)
    select.fit(X_train, y_train)
    # Transform the training set
    X_train_selected = select.transform(X_train)
    print("X_train.shape: {}".format(X_train.shape))
    # X_train.shape: (284, 80)
    print("X_train_selected.shape: {}".format(X_train_selected.shape))
    # X_train_selected.shape: (284, 40)
    mask = select.get_support()
    print(mask)
    # [ True  True  True  True  True  True  True  True  True False  True False
    #   True  True  True  True  True  True False False  True  True  True  True
    #   True  True  True  True  True  True False False False  True False  True
    #  False False  True False False False False  True False False  True False
    #  False  True False  True False False False False False False  True False
    #   True False False False False  True False  True False False False False
    #   True  True False  True False False False False]
    # Visualize the mask: black is True, white is False
    plt.matshow(mask.reshape(1, -1), cmap='gray_r')
    plt.xlabel("Sample index")
    plt.tight_layout()
    plt.show()

- Most of the selected features are original features, and most of the noise features were removed

Comparing the performance of logistic regression on all features with its performance on only the selected features:

    import numpy as np
    from sklearn.datasets import load_breast_cancer
    from sklearn.feature_selection import SelectPercentile
    from sklearn.model_selection import train_test_split
    from sklearn.linear_model import LogisticRegression

    cancer = load_breast_cancer()
    rng = np.random.RandomState(42)
    noise = rng.normal(size=(len(cancer.data), 50))
    X_w_noise = np.hstack([cancer.data, noise])
    X_train, X_test, y_train, y_test = train_test_split(
        X_w_noise, cancer.target, random_state=0, test_size=.5)
    select = SelectPercentile(percentile=50)
    select.fit(X_train, y_train)
    X_train_selected = select.transform(X_train)
    # Transform the test data
    X_test_selected = select.transform(X_test)

    lr = LogisticRegression(max_iter=1000)
    lr.fit(X_train, y_train)
    print("Score with all features: {:.3f}".format(lr.score(X_test, y_test)))
    # Score with all features: 0.933
    lr.fit(X_train_selected, y_train)
    print("Score with only selected features: {:.3f}".format(
        lr.score(X_test_selected, y_test)))
    # Score with only selected features: 0.937

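5.2 Model-based feature selection

- Uses a supervised machine learning model to judge the importance of each feature, and keeps only the most important ones

- The supervised model used for feature selection does not need to be the same model that is used for the final supervised modeling

- The feature selection model needs to provide some measure of importance for each feature
  - decision trees and decision tree-based models: the feature_importances_ attribute, which directly encodes the importance of each feature
  - linear models: the absolute values of the coefficients

- Considers all features at once
  - so it can capture interactions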
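To make the role of the importance measure concrete, here is a minimal NumPy-only sketch of what a median threshold does with a vector of importances. The importance values are hypothetical; in practice they would come from a fitted model's feature_importances_ or from the absolute values of its coefficients.

```python
import numpy as np

# Hypothetical importance scores for six features.
importances = np.array([0.30, 0.02, 0.25, 0.01, 0.40, 0.02])

# Keep every feature whose importance reaches the median importance,
# which retains roughly half of the features.
threshold = np.median(importances)
mask = importances >= threshold

X = np.arange(18, dtype=float).reshape(3, 6)   # made-up data, 3 samples
X_selected = X[:, mask]
print(threshold, mask, X_selected.shape)
```

The boolean mask plays the same role as the array returned by get_support() on a fitted selector.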

Using model-based feature selection with the SelectFromModel transformer:

    import numpy as np
    from matplotlib import pyplot as plt
    from sklearn.datasets import load_breast_cancer
    from sklearn.model_selection import train_test_split
    from sklearn.feature_selection import SelectFromModel
    from sklearn.ensemble import RandomForestClassifier

    cancer = load_breast_cancer()
    rng = np.random.RandomState(42)
    noise = rng.normal(size=(len(cancer.data), 50))
    X_w_noise = np.hstack([cancer.data, noise])
    X_train, X_test, y_train, y_test = train_test_split(
        X_w_noise, cancer.target, random_state=0, test_size=.5)
    select = SelectFromModel(
        RandomForestClassifier(n_estimators=100, random_state=42),
        threshold="median")
    select.fit(X_train, y_train)
    X_train_l1 = select.transform(X_train)
    print("X_train.shape: {}".format(X_train.shape))
    # X_train.shape: (284, 80)
    print("X_train_l1.shape: {}".format(X_train_l1.shape))
    # X_train_l1.shape: (284, 40)
    mask = select.get_support()
    # Visualize the mask: black is True, white is False
    plt.matshow(mask.reshape(1, -1), cmap='gray_r')
    plt.xlabel("Sample index")
    plt.tight_layout()
    plt.show()

- All but two of the original features were selected

Performance of logistic regression on the selected features:

    import numpy as np
    from sklearn.datasets import load_breast_cancer
    from sklearn.linear_model import LogisticRegression
    from sklearn.model_selection import train_test_split
    from sklearn.feature_selection import SelectFromModel
    from sklearn.ensemble import RandomForestClassifier

    cancer = load_breast_cancer()
    rng = np.random.RandomState(42)
    noise = rng.normal(size=(len(cancer.data), 50))
    X_w_noise = np.hstack([cancer.data, noise])
    X_train, X_test, y_train, y_test = train_test_split(
        X_w_noise, cancer.target, random_state=0, test_size=.5)
    select = SelectFromModel(
        RandomForestClassifier(n_estimators=100, random_state=42),
        threshold="median")
    select.fit(X_train, y_train)
    X_train_l1 = select.transform(X_train)
    X_test_l1 = select.transform(X_test)
    score = LogisticRegression(max_iter=1000).fit(X_train_l1, y_train).score(
        X_test_l1, y_test)
    print("Test score: {:.3f}".format(score))
    # Test score: 0.944

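5.3 Iterative feature selection

- Builds a series of models, each with a different number of features

- Two basic approaches
  - start with no features and add features one by one until some stopping criterion is reached
  - start with all features and remove features one by one until some stopping criterion is reached

- Computationally more expensive than the previous methods, because many models are built

- One particular method: recursive feature elimination (RFE)
  - starts with all features, builds a model, and discards the least important feature according to the model; then a new model is built using all but the discarded feature, and so on until only a prespecified number of features are left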
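The elimination loop described above can be sketched in plain NumPy. This is an illustration of the idea under simplifying assumptions, not scikit-learn's RFE implementation: it uses ordinary least squares as the model and the absolute coefficient as the importance measure, and the helper name recursive_elimination is made up for this example.

```python
import numpy as np

def recursive_elimination(X, y, n_keep):
    """Drop the weakest feature one at a time until n_keep remain."""
    remaining = list(range(X.shape[1]))
    while len(remaining) > n_keep:
        # Fit least squares on the features still in play.
        coef, *_ = np.linalg.lstsq(X[:, remaining], y, rcond=None)
        # Importance measure: absolute value of each coefficient.
        weakest = int(np.argmin(np.abs(coef)))
        remaining.pop(weakest)
    return remaining

rng = np.random.RandomState(0)
X = rng.normal(size=(200, 5))
# Only features 0 and 3 influence the target.
y = 4.0 * X[:, 0] - 3.0 * X[:, 3] + 0.1 * rng.normal(size=200)

selected = recursive_elimination(X, y, n_keep=2)
print(selected)
```

The model is refit after every removal, which is why this family of methods costs more than a single pass over the importances.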

Using a random forest inside RFE to determine feature importance:

    import numpy as np
    from matplotlib import pyplot as plt
    from sklearn.datasets import load_breast_cancer
    from sklearn.model_selection import train_test_split
    from sklearn.ensemble import RandomForestClassifier
    from sklearn.feature_selection import RFE

    cancer = load_breast_cancer()
    rng = np.random.RandomState(42)
    noise = rng.normal(size=(len(cancer.data), 50))
    X_w_noise = np.hstack([cancer.data, noise])
    X_train, X_test, y_train, y_test = train_test_split(
        X_w_noise, cancer.target, random_state=0, test_size=.5)
    select = RFE(RandomForestClassifier(n_estimators=100, random_state=42),
                 n_features_to_select=40)
    select.fit(X_train, y_train)
    # Visualize the selected features
    mask = select.get_support()
    plt.matshow(mask.reshape(1, -1), cmap='gray_r')
    plt.xlabel("Sample index")
    plt.tight_layout()
    plt.show()

Testing the performance:

    import numpy as np
    from sklearn.datasets import load_breast_cancer
    from sklearn.linear_model import LogisticRegression
    from sklearn.model_selection import train_test_split
    from sklearn.ensemble import RandomForestClassifier
    from sklearn.feature_selection import RFE

    cancer = load_breast_cancer()
    rng = np.random.RandomState(42)
    noise = rng.normal(size=(len(cancer.data), 50))
    X_w_noise = np.hstack([cancer.data, noise])
    X_train, X_test, y_train, y_test = train_test_split(
        X_w_noise, cancer.target, random_state=0, test_size=.5)
    select = RFE(RandomForestClassifier(n_estimators=100, random_state=42),
                 n_features_to_select=40)
    select.fit(X_train, y_train)
    X_train_rfe = select.transform(X_train)
    X_test_rfe = select.transform(X_test)
    # Accuracy of logistic regression when RFE is used for feature selection
    score = LogisticRegression(max_iter=1000).fit(X_train_rfe, y_train).score(
        X_test_rfe, y_test)
    print("Test score: {:.3f}".format(score))
    # Test score: 0.951
    # Accuracy when predicting with the model used inside the RFE
    print("Test score: {:.3f}".format(select.score(X_test, y_test)))
    # Test score: 0.951

- Once the right features have been selected, the linear model performs as well as the random forest

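6. Utilizing expert knowledge

- Prior knowledge about the nature of the task can be encoded in the features to aid a machine learning algorithm

- Adding a feature does not force a machine learning algorithm to use it

Task: predicting whether shared bikes will still be available

Loading the data

- The data has been resampled to one data point every 3 hours

    import mglearn

    citibike = mglearn.datasets.load_citibike()
    print("Citi Bike data:\n{}".format(citibike.head()))
    # Citi Bike data:
    # starttime
    # 2015-08-01 00:00:00     3
    # 2015-08-01 03:00:00     0
    # 2015-08-01 06:00:00     9
    # 2015-08-01 09:00:00    41
    # 2015-08-01 12:00:00    39
    # Freq: 3H, Name: one, dtype: int64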

Visualizing the data

    import pandas as pd
    from matplotlib import pyplot as plt
    import mglearn

    citibike = mglearn.datasets.load_citibike()
    xticks = pd.date_range(start=citibike.index.min(), end=citibike.index.max(), freq='D')
    plt.figure(figsize=(10, 3))
    plt.xticks(xticks, xticks.strftime("%a %m-%d"), rotation=90, ha="left")
    plt.plot(citibike, linewidth=1)
    plt.xlabel("Date")
    plt.ylabel("Rentals")
    plt.tight_layout()
    plt.show()

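Observing and analyzing the data

- Goal when evaluating a prediction task on a time series: learn from the past and predict the future

- Splitting the data
  - training set: the first 23 days (184 data points)
  - test set: the remaining 8 days (64 data points)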
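The counts follow directly from the 3-hour resampling: 8 data points per day. A minimal sketch of this chronological split on a stand-in array (the array is a placeholder, not the real rental counts):

```python
import numpy as np

# Stand-in for 31 days of 3-hourly counts: 8 values per day, 248 in total.
y = np.arange(31 * 8)

n_train = 23 * 8              # first 23 days, i.e. 184 points
# No shuffling: everything before the cut is "past", everything after "future".
y_train, y_test = y[:n_train], y[n_train:]
print(len(y_train), len(y_test))
```

A random split such as train_test_split would mix future points into the training set, which is why a simple slice is used for time series.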

Determining the input features and the output

- The only input feature: the date and time

- Output: the number of rentals in the following 3 hours

Trying a single integer feature as the data representation

    import mglearn

    citibike = mglearn.datasets.load_citibike()
    # Convert the index to POSIX time (seconds since the epoch):
    # astype("int64") yields nanoseconds, so divide by 10**9
    X = citibike.index.astype("int64").values.reshape(-1, 1) // 10**9
    # Extract the target values (number of rentals)
    y = citibike.values

Defining a function (split the data, build the model, and visualize the result)

    import pandas as pd
    from matplotlib import pyplot as plt
    import mglearn

    citibike = mglearn.datasets.load_citibike()
    xticks = pd.date_range(start=citibike.index.min(), end=citibike.index.max(), freq='D')
    # Use the first 184 data points for training and the rest for testing
    n_train = 184

    # Function to evaluate and plot a regressor on a given feature set
    def eval_on_features(features, target, regressor):
        # Split the given features into a training and a test set
        X_train, X_test = features[:n_train], features[n_train:]
        # Also split the target array
        y_train, y_test = target[:n_train], target[n_train:]
        regressor.fit(X_train, y_train)
        print("Test-set R^2: {:.2f}".format(regressor.score(X_test, y_test)))
        y_pred = regressor.predict(X_test)
        y_pred_train = regressor.predict(X_train)
        plt.figure(figsize=(10, 3))
        plt.xticks(range(0, len(features), 8), xticks.strftime("%a %m-%d"),
                   rotation=90, ha="left")
        plt.plot(range(n_train), y_train, label="train")
        plt.plot(range(n_train, len(y_test) + n_train), y_test, '-', label="test")
        plt.plot(range(n_train), y_pred_train, '--', label="prediction train")
        plt.plot(range(n_train, len(y_test) + n_train), y_pred, '--',
                 label="prediction test")
        plt.legend(loc=(1.01, 0))
        plt.xlabel("Date")
        plt.ylabel("Rentals")
        plt.tight_layout()
        plt.show()

    X = citibike.index.astype("int64").values.reshape(-1, 1) // 10**9
    y = citibike.values

Using a random forest as the first model

- Random forests require very little preprocessing of the data

    import pandas as pd
    from matplotlib import pyplot as plt
    import mglearn
    from sklearn.ensemble import RandomForestRegressor

    citibike = mglearn.datasets.load_citibike()
    xticks = pd.date_range(start=citibike.index.min(), end=citibike.index.max(), freq='D')
    n_train = 184

    def eval_on_features(features, target, regressor):
        X_train, X_test = features[:n_train], features[n_train:]
        y_train, y_test = target[:n_train], target[n_train:]
        regressor.fit(X_train, y_train)
        print("Test-set R^2: {:.2f}".format(regressor.score(X_test, y_test)))
        y_pred = regressor.predict(X_test)
        y_pred_train = regressor.predict(X_train)
        plt.figure(figsize=(10, 3))
        plt.xticks(range(0, len(features), 8), xticks.strftime("%a %m-%d"),
                   rotation=90, ha="left")
        plt.plot(range(n_train), y_train, label="train")
        plt.plot(range(n_train, len(y_test) + n_train), y_test, '-', label="test")
        plt.plot(range(n_train), y_pred_train, '--', label="prediction train")
        plt.plot(range(n_train, len(y_test) + n_train), y_pred, '--',
                 label="prediction test")
        plt.legend(loc=(1.01, 0))
        plt.xlabel("Date")
        plt.ylabel("Rentals")
        plt.tight_layout()
        plt.show()

    X = citibike.index.astype("int64").values.reshape(-1, 1) // 10**9
    y = citibike.values
    regressor = RandomForestRegressor(n_estimators=100, random_state=0)
    eval_on_features(X, y, regressor)
    # Test-set R^2: -0.04

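- Predictions on the training set are quite good

- Predictions on the test set are a flat line

Why the prediction is a flat line

- The timestamp values in the test set lie outside the range of feature values seen in the training set

  - Every timestamp in the test set is later than all data points in the training set

- Trees, and therefore random forests, cannot extrapolate to feature ranges outside the training set

  - The model can only predict the target value of the closest training point, that is, the last time for which data was observed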
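The extrapolation limit can be reproduced on a toy problem. The sketch below is not the Citi Bike data: it assumes a made-up linear target (`y = 2x`) and made-up query points, and only shows that every query beyond the training range receives the same prediction.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

# Toy data (hypothetical): the target grows linearly with the single feature
X_train = np.arange(0, 100).reshape(-1, 1)
y_train = 2.0 * X_train.ravel()

forest = RandomForestRegressor(n_estimators=50, random_state=0)
forest.fit(X_train, y_train)

# Every query lies beyond the largest training value (99)
X_future = np.array([[150], [500], [10_000]])
pred_future = forest.predict(X_future)

print(pred_future)    # three identical values
print(y_train.max())  # the predictions never exceed this value
```

Each tree routes all three queries into its right-most leaf, so the forest keeps repeating a value close to the last training targets no matter how far the feature moves.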

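Using expert knowledge

- Looking at the plot reveals two very important factors

  - The time of day

  - The day of the week

- Add these two features

- Drop the timestamp

  - The model cannot learn anything from it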
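Both features can be read directly off a pandas `DatetimeIndex`. The sketch below uses a small hypothetical index (16 three-hourly timestamps starting on Saturday 2015-08-01) instead of the real `citibike.index`; the variable names are illustrative only.

```python
import numpy as np
import pandas as pd

# Hypothetical 3-hourly timestamps covering a Saturday and a Sunday
index = pd.date_range("2015-08-01", periods=16, freq=pd.Timedelta(hours=3))

hour = index.hour.values            # 0, 3, ..., 21
dayofweek = index.dayofweek.values  # Monday=0, ..., Sunday=6

# One row per timestamp: [day of week, hour of day]
X_hour_week = np.column_stack([dayofweek, hour])
print(X_hour_week[:3])
print(X_hour_week[8])
```

Unlike the raw timestamp, these values repeat every day and every week, so the values in the test period have already been seen during training.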

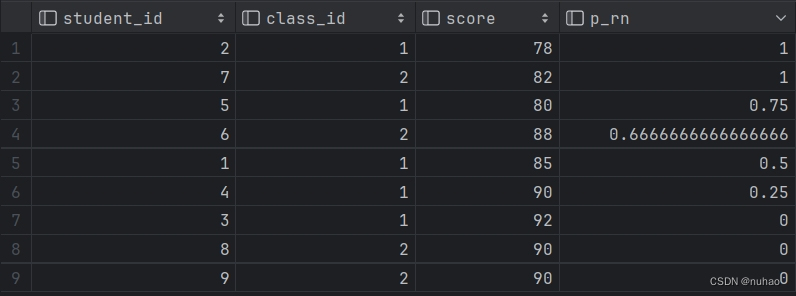

Using only the time of day as a feature

import pandas as pd
from matplotlib import pyplot as plt
import mglearn
from sklearn.ensemble import RandomForestRegressor

citibike = mglearn.datasets.load_citibike()
xticks = pd.date_range(start=citibike.index.min(), end=citibike.index.max(), freq='D')
# Use the first 184 data points (23 days x 8 three-hour bins) for training
n_train = 184


def eval_on_features(features, target, regressor):
    # Split chronologically: the first n_train points train, the rest test
    X_train, X_test = features[:n_train], features[n_train:]
    y_train, y_test = target[:n_train], target[n_train:]
    regressor.fit(X_train, y_train)
    print("Test-set R^2: {:.2f}".format(regressor.score(X_test, y_test)))
    y_pred = regressor.predict(X_test)
    y_pred_train = regressor.predict(X_train)
    # Plot the true values and the predictions for both parts of the data
    plt.figure(figsize=(10, 3))
    plt.xticks(range(0, len(features), 8), xticks.strftime("%a %m-%d"), rotation=90, ha="left")
    plt.plot(range(n_train), y_train, label="train")
    plt.plot(range(n_train, len(y_test) + n_train), y_test, '-', label="test")
    plt.plot(range(n_train), y_pred_train, '--', label="prediction train")
    plt.plot(range(n_train, len(y_test) + n_train), y_pred, '--', label="prediction test")
    plt.legend(loc=(1.01, 0))
    plt.xlabel("Date")
    plt.ylabel("Rentals")
    plt.tight_layout()
    plt.show()


# Use only the hour of the day as the single feature
X_hour = citibike.index.hour.values.reshape(-1, 1)
y = citibike.values
regressor = RandomForestRegressor(n_estimators=100, random_state=0)
eval_on_features(X_hour, y, regressor)
# Test-set R^2: 0.60

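- The predictions are identical for every day

- Reason: the only feature is the hour, so the model pools the data from all days by hour of the day and learns from these groups

Adding the day of the week as a feature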

import numpy as np
import pandas as pd
from matplotlib import pyplot as plt
import mglearn
from sklearn.ensemble import RandomForestRegressor

citibike = mglearn.datasets.load_citibike()
xticks = pd.date_range(start=citibike.index.min(), end=citibike.index.max(), freq='D')
# Use the first 184 data points (23 days x 8 three-hour bins) for training
n_train = 184


def eval_on_features(features, target, regressor):
    # Split chronologically: the first n_train points train, the rest test
    X_train, X_test = features[:n_train], features[n_train:]
    y_train, y_test = target[:n_train], target[n_train:]
    regressor.fit(X_train, y_train)
    print("Test-set R^2: {:.2f}".format(regressor.score(X_test, y_test)))
    y_pred = regressor.predict(X_test)
    y_pred_train = regressor.predict(X_train)
    # Plot the true values and the predictions for both parts of the data
    plt.figure(figsize=(10, 3))
    plt.xticks(range(0, len(features), 8), xticks.strftime("%a %m-%d"), rotation=90, ha="left")
    plt.plot(range(n_train), y_train, label="train")
    plt.plot(range(n_train, len(y_test) + n_train), y_test, '-', label="test")
    plt.plot(range(n_train), y_pred_train, '--', label="prediction train")
    plt.plot(range(n_train, len(y_test) + n_train), y_pred, '--', label="prediction test")
    plt.legend(loc=(1.01, 0))
    plt.xlabel("Date")
    plt.ylabel("Rentals")
    plt.tight_layout()
    plt.show()


# Two integer features per data point: day of week (Monday=0) and hour of day
X_hour_week = np.hstack([citibike.index.dayofweek.values.reshape(-1, 1),
                         citibike.index.hour.values.reshape(-1, 1)])
y = citibike.values
regressor = RandomForestRegressor(n_estimators=100, random_state=0)
eval_on_features(X_hour_week, y, regressor)
# Test-set R^2: 0.84

- What the model learns: the mean rental count for each combination of day of week and time of day over the first 23 days of August
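What "the mean for each combination" amounts to can be checked on synthetic data. The sketch below makes two assumptions for illustration: the counts are random Poisson draws rather than real rentals, and a single unpruned `DecisionTreeRegressor` stands in for the forest. A forest averages many such trees grown on bootstrap samples, so it only approximates these means.

```python
import numpy as np
import pandas as pd
from sklearn.tree import DecisionTreeRegressor

# Hypothetical toy data: three weeks of 3-hourly counts starting on a Monday
index = pd.date_range("2015-08-03", periods=3 * 7 * 8, freq=pd.Timedelta(hours=3))
rng = np.random.RandomState(0)
y = rng.poisson(10, size=len(index)).astype(float)
X = np.column_stack([index.dayofweek.values, index.hour.values])

# A fully grown tree ends up with one leaf per distinct (day, hour) pair
tree = DecisionTreeRegressor(random_state=0).fit(X, y)
tree_pred = tree.predict(X)

# Mean count of every (day of week, hour) group, repeated for each row
df = pd.DataFrame({"dow": X[:, 0], "hour": X[:, 1], "y": y})
group_mean = df.groupby(["dow", "hour"])["y"].transform("mean").to_numpy()

print(np.allclose(tree_pred, group_mean))
```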

Using linear regression as the model

import numpy as np
import pandas as pd
from matplotlib import pyplot as plt
from sklearn.linear_model import LinearRegression
import mglearn

citibike = mglearn.datasets.load_citibike()
xticks = pd.date_range(start=citibike.index.min(), end=citibike.index.max(), freq='D')
# Use the first 184 data points (23 days x 8 three-hour bins) for training
n_train = 184


def eval_on_features(features, target, regressor):
    # Split chronologically: the first n_train points train, the rest test
    X_train, X_test = features[:n_train], features[n_train:]
    y_train, y_test = target[:n_train], target[n_train:]
    regressor.fit(X_train, y_train)
    print("Test-set R^2: {:.2f}".format(regressor.score(X_test, y_test)))
    y_pred = regressor.predict(X_test)
    y_pred_train = regressor.predict(X_train)
    # Plot the true values and the predictions for both parts of the data
    plt.figure(figsize=(10, 3))
    plt.xticks(range(0, len(features), 8), xticks.strftime("%a %m-%d"), rotation=90, ha="left")
    plt.plot(range(n_train), y_train, label="train")
    plt.plot(range(n_train, len(y_test) + n_train), y_test, '-', label="test")
    plt.plot(range(n_train), y_pred_train, '--', label="prediction train")
    plt.plot(range(n_train, len(y_test) + n_train), y_pred, '--', label="prediction test")
    plt.legend(loc=(1.01, 0))
    plt.xlabel("Date")
    plt.ylabel("Rentals")
    plt.tight_layout()
    plt.show()


# Same two integer features as before, now fed to a linear model
X_hour_week = np.hstack([citibike.index.dayofweek.values.reshape(-1, 1),
                         citibike.index.hour.values.reshape(-1, 1)])
y = citibike.values
regressor = LinearRegression()
eval_on_features(X_hour_week, y, regressor)
# Test-set R^2: 0.13

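- The predictions are very poor

  - Reason: the day of the week and the time of day are both encoded as integers, which the model interprets as continuous variables

    - A linear model can therefore only learn a linear function of the time of day

      - It learns that the later in the day, the more rentals there are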
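The effect of the encoding can be isolated with plain least squares. In the sketch below the daily `pattern` is invented for illustration (it is not taken from the Citi Bike data); the same target is fitted once with the hour as an integer and once with the hour one-hot encoded.

```python
import numpy as np

# Hypothetical daily pattern over eight 3-hour bins, repeated for four days
bins = np.arange(0, 24, 3)
pattern = np.array([1.0, 1.0, 5.0, 9.0, 6.0, 8.0, 10.0, 3.0])
hours = np.tile(bins, 4)
y = np.tile(pattern, 4)


def r2(y_true, y_pred):
    # Coefficient of determination
    return 1 - ((y_true - y_pred) ** 2).sum() / ((y_true - y_true.mean()) ** 2).sum()


# Integer-coded hour: the model is a single straight line w * hour + b
A_int = np.column_stack([hours, np.ones_like(hours)])
w_int, *_ = np.linalg.lstsq(A_int, y, rcond=None)
r2_int = r2(y, A_int @ w_int)

# One-hot encoded hour: one free coefficient per time of day
A_hot = (hours[:, None] == bins).astype(float)
w_hot, *_ = np.linalg.lstsq(A_hot, y, rcond=None)
r2_hot = r2(y, A_hot @ w_hot)

print("integer-coded R^2: {:.2f}".format(r2_int))
print("one-hot R^2: {:.2f}".format(r2_hot))
```

The straight line can only capture an overall upward or downward trend across the day, while the one-hot model reproduces the repeating pattern exactly.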

Interpreting the integers as categorical variables and predicting with ridge regression

- Use OneHotEncoder

import numpy as np
import pandas as pd
from matplotlib import pyplot as plt
from sklearn.linear_model import Ridge
from sklearn.preprocessing import OneHotEncoder
import mglearn

citibike = mglearn.datasets.load_citibike()
xticks = pd.date_range(start=citibike.index.min(), end=citibike.index.max(), freq='D')
# Use the first 184 data points (23 days x 8 three-hour bins) for training
n_train = 184


def eval_on_features(features, target, regressor):
    # Split chronologically: the first n_train points train, the rest test
    X_train, X_test = features[:n_train], features[n_train:]
    y_train, y_test = target[:n_train], target[n_train:]
    regressor.fit(X_train, y_train)
    print("Test-set R^2: {:.2f}".format(regressor.score(X_test, y_test)))
    y_pred = regressor.predict(X_test)
    y_pred_train = regressor.predict(X_train)
    # Plot the true values and the predictions for both parts of the data
    plt.figure(figsize=(10, 3))
    plt.xticks(range(0, len(features), 8), xticks.strftime("%a %m-%d"), rotation=90, ha="left")
    plt.plot(range(n_train), y_train, label="train")
    plt.plot(range(n_train, len(y_test) + n_train), y_test, '-', label="test")
    plt.plot(range(n_train), y_pred_train, '--', label="prediction train")
    plt.plot(range(n_train, len(y_test) + n_train), y_pred, '--', label="prediction test")
    plt.legend(loc=(1.01, 0))
    plt.xlabel("Date")
    plt.ylabel("Rentals")
    plt.tight_layout()
    plt.show()


X_hour_week = np.hstack([citibike.index.dayofweek.values.reshape(-1, 1),
                         citibike.index.hour.values.reshape(-1, 1)])
# One-hot encode both integer features so that they are treated as categorical
enc = OneHotEncoder()
X_hour_week_onehot = enc.fit_transform(X_hour_week).toarray()
y = citibike.values
regressor = Ridge()
eval_on_features(X_hour_week_onehot, y, regressor)
# Test-set R^2: 0.62

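- The linear model learns one coefficient for each day of the week and one coefficient for each time of day

- The time-of-day pattern is therefore shared across all seven days of the week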
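The shared pattern follows from the additive form of the model: a prediction is the intercept plus one day coefficient plus one hour coefficient. The sketch below checks this on a synthetic grid; the Poisson counts are invented, and only the shapes and the constant offset between days matter.

```python
import numpy as np
from sklearn.linear_model import Ridge
from sklearn.preprocessing import OneHotEncoder

# Full grid of (day of week, hour) pairs: 7 days x 8 three-hour bins
days, hours = np.meshgrid(np.arange(7), np.arange(0, 24, 3), indexing="ij")
X = np.column_stack([days.ravel(), hours.ravel()])
rng = np.random.RandomState(0)
y = rng.poisson(10, size=len(X)).astype(float)  # hypothetical rental counts

# 7 day columns + 8 hour columns = 15 binary features
X_onehot = OneHotEncoder().fit_transform(X).toarray()
print(X_onehot.shape)

model = Ridge().fit(X_onehot, y)
pred = model.predict(X_onehot).reshape(7, 8)  # rows: days, columns: hours

# Monday minus Saturday, evaluated at each of the eight times of day
diff = pred[0] - pred[5]
print(diff)
```

The difference between two days is the same at every hour, so the model cannot express that weekdays peak at different times than weekends.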

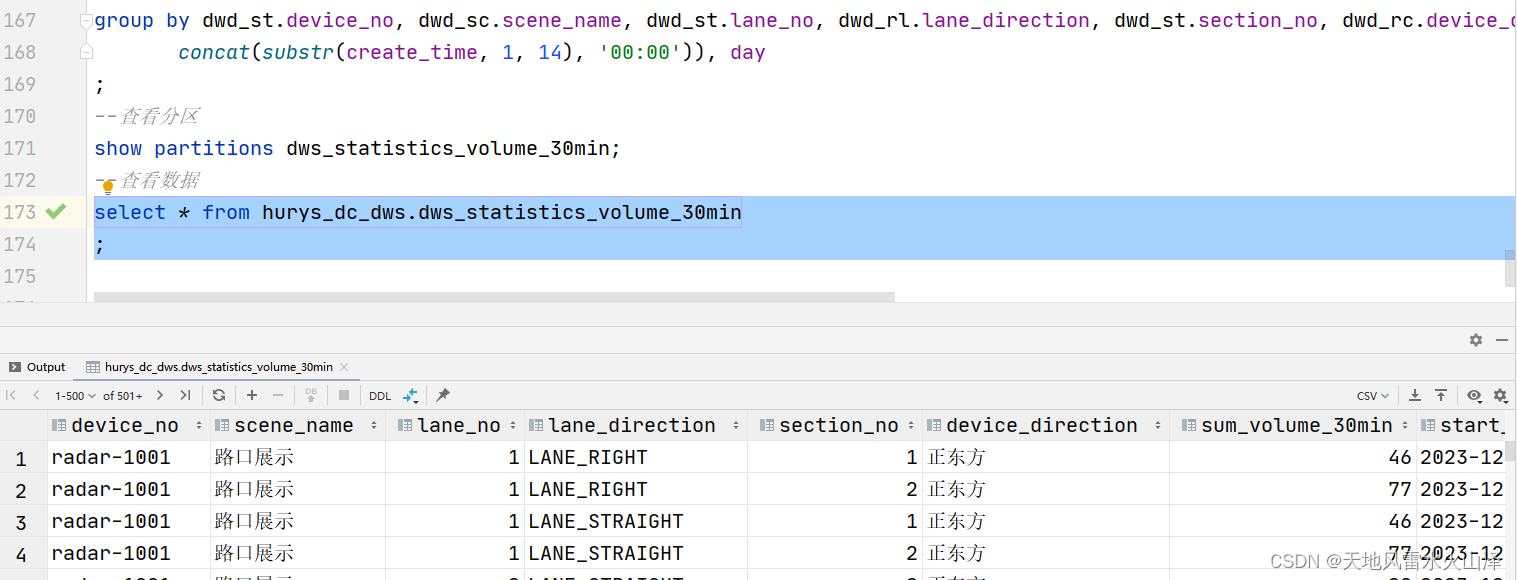

Letting the model learn one coefficient for each combination of day of week and time of day

import numpy as np
import pandas as pd
from matplotlib import pyplot as plt
from sklearn.linear_model import Ridge
from sklearn.preprocessing import OneHotEncoder, PolynomialFeatures
import mglearn

citibike = mglearn.datasets.load_citibike()
xticks = pd.date_range(start=citibike.index.min(), end=citibike.index.max(), freq='D')
# Use the first 184 data points (23 days x 8 three-hour bins) for training
n_train = 184


def eval_on_features(features, target, regressor):
    # Split chronologically: the first n_train points train, the rest test
    X_train, X_test = features[:n_train], features[n_train:]
    y_train, y_test = target[:n_train], target[n_train:]
    regressor.fit(X_train, y_train)
    print("Test-set R^2: {:.2f}".format(regressor.score(X_test, y_test)))
    y_pred = regressor.predict(X_test)
    y_pred_train = regressor.predict(X_train)
    # Plot the true values and the predictions for both parts of the data
    plt.figure(figsize=(10, 3))
    plt.xticks(range(0, len(features), 8), xticks.strftime("%a %m-%d"), rotation=90, ha="left")
    plt.plot(range(n_train), y_train, label="train")
    plt.plot(range(n_train, len(y_test) + n_train), y_test, '-', label="test")
    plt.plot(range(n_train), y_pred_train, '--', label="prediction train")
    plt.plot(range(n_train, len(y_test) + n_train), y_pred, '--', label="prediction test")
    plt.legend(loc=(1.01, 0))
    plt.xlabel("Date")
    plt.ylabel("Rentals")
    plt.tight_layout()
    plt.show()


X_hour_week = np.hstack([citibike.index.dayofweek.values.reshape(-1, 1),
                         citibike.index.hour.values.reshape(-1, 1)])
enc = OneHotEncoder()
X_hour_week_onehot = enc.fit_transform(X_hour_week).toarray()
# Add the pairwise products of the one-hot columns (interaction terms only)
poly_transformer = PolynomialFeatures(degree=2, interaction_only=True, include_bias=False)
X_hour_week_onehot_poly = poly_transformer.fit_transform(X_hour_week_onehot)
y = citibike.values
regressor = Ridge()
eval_on_features(X_hour_week_onehot_poly, y, regressor)
# Test-set R^2: 0.85

- Advantage

  - What the model has learned is easy to read off

    - It learns one coefficient for each interaction of day of week and time of day
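It can help to count the columns that the interaction step produces. The sketch below applies the same two transformers to a synthetic grid that contains every (day, hour) pair exactly once; the grid is an assumption for illustration, not the Citi Bike data.

```python
import numpy as np
from sklearn.preprocessing import OneHotEncoder, PolynomialFeatures

# Full grid of (day of week, hour) pairs: 7 days x 8 three-hour bins
days, hours = np.meshgrid(np.arange(7), np.arange(0, 24, 3), indexing="ij")
X = np.column_stack([days.ravel(), hours.ravel()])

X_onehot = OneHotEncoder().fit_transform(X).toarray()
poly = PolynomialFeatures(degree=2, interaction_only=True, include_bias=False)
X_poly = poly.fit_transform(X_onehot)

# 15 one-hot columns plus all 15 * 14 / 2 = 105 pairwise products
n_total = X_poly.shape[1]

# A day x day or hour x hour product is always zero, because a row has exactly
# one active day and one active hour; only the 7 * 8 day x hour products fire
n_active = int((X_poly != 0).any(axis=0).sum())

print(n_total, n_active)
```

The columns that are zero everywhere carry no information, which is why the plotting code keeps only the features with nonzero coefficients.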

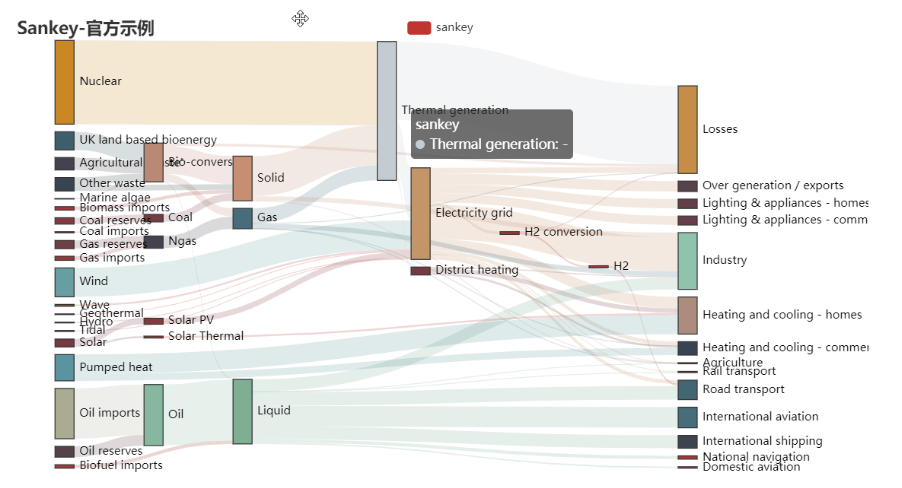

Plotting the coefficients learned by the model

Creating feature names for the hour and day-of-week features

hour = ["%02d:00" % i for i in range(0, 24, 3)]

day = ["Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun"]

features = day + hour

Naming all interaction features and keeping only those with nonzero coefficients

features_poly = poly_transformer.get_feature_names_out(features)

features_nonzero = np.array(features_poly)[regressor.coef_ != 0]

coef_nonzero = regressor.coef_[regressor.coef_ != 0]

Visualizing the coefficients

import numpy as np
from matplotlib import pyplot as plt
from sklearn.linear_model import Ridge
from sklearn.preprocessing import OneHotEncoder, PolynomialFeatures
import mglearn

citibike = mglearn.datasets.load_citibike()
# Use the first 184 data points (23 days x 8 three-hour bins) for training
n_train = 184


def eval_on_features(features, target, regressor):
    # Only fit on the training part here; the coefficients are plotted below
    X_train, X_test = features[:n_train], features[n_train:]
    y_train, y_test = target[:n_train], target[n_train:]
    regressor.fit(X_train, y_train)


X_hour_week = np.hstack([citibike.index.dayofweek.values.reshape(-1, 1),
                         citibike.index.hour.values.reshape(-1, 1)])
enc = OneHotEncoder()
X_hour_week_onehot = enc.fit_transform(X_hour_week).toarray()
poly_transformer = PolynomialFeatures(degree=2, interaction_only=True, include_bias=False)
X_hour_week_onehot_poly = poly_transformer.fit_transform(X_hour_week_onehot)
y = citibike.values
regressor = Ridge()
eval_on_features(X_hour_week_onehot_poly, y, regressor)

# Names for the 7 day columns followed by the 8 hour columns (encoder order)
hour = ["%02d:00" % i for i in range(0, 24, 3)]
day = ["Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun"]
features = day + hour

# Name every interaction feature and keep only those with nonzero coefficients
features_poly = poly_transformer.get_feature_names_out(features)
features_nonzero = np.array(features_poly)[regressor.coef_ != 0]
coef_nonzero = regressor.coef_[regressor.coef_ != 0]

plt.figure(figsize=(15, 2))
plt.plot(coef_nonzero, 'o')
plt.xticks(np.arange(len(coef_nonzero)), features_nonzero, rotation=90)
plt.xlabel("Feature name")
plt.ylabel("Feature magnitude")
plt.tight_layout()
plt.show()
