Setting Up a Kubernetes Cluster Environment

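Table of Contents

- Setting Up a Kubernetes Cluster Environment
  - I. Environment initialization
    1. Check the operating system version
    2. Hostname resolution
    3. Time synchronization
    4. Disable swap
    5. Enable IP forwarding and adjust kernel parameters (on all three nodes)
    6. Configure IPVS (on all three nodes)
  - II. Install Docker
    1. Switch to the Aliyun mirrors (on all three nodes)
    2. Install docker-ce (on all three nodes)
    3. Add a configuration file with a Docker registry mirror (on all three nodes)
  - III. Install the Kubernetes components
    1. Switch to a domestic package mirror
    2. Install kubeadm, kubelet and kubectl (on all three nodes)
    3. Configure containerd (on all three nodes)
    4. Deploy the Kubernetes master node (run on the master)
    5. Install the pod network add-on
    6. Join the worker nodes to the cluster
    7. Create a pod running nginx as a test
    8. Modify the default web page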

Environment

| Host | IP address | Components |
|---|---|---|
| master | 192.168.89.151 | docker, kubectl, kubeadm, kubelet |
| node1 | 192.168.89.10 | docker, kubectl, kubeadm, kubelet |
| node2 | 192.168.89.20 | docker, kubectl, kubeadm, kubelet |

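This setup uses three Linux hosts: one master and two workers. They run CentOS 7.5 or later, and the hosts shown below actually run CentOS Stream 8. Each host gets Docker plus kubeadm (1.25.4), kubelet (1.25.4) and kubectl (1.25.4).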

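Preparation: host installation

When installing the virtual machines, pay attention to the following settings:

1. Hardware and OS: 2 CPUs, 2 GB RAM, 50 GB disk, CentOS 7 or later

2. Language: Simplified Chinese or English

3. Software selection: Infrastructure Server

4. Partitioning: automatic or manual

5. Network: configure the addresses as follows

IP address: 192.168.89.151 (master), 192.168.89.10 (node1), 192.168.89.20 (node2)

Subnet mask: 255.255.255.0

Default gateway: 192.168.89.2

DNS: 8.8.8.8

6. Hostnames:

Master node: master

Worker node: node1

Worker node: node2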

I. Environment initialization

1. Check the operating system version

[root@master ~]# cat /etc/redhat-release //installing Kubernetes this way requires CentOS 7.5 or later

CentOS Stream release 8

[root@master ~]# systemctl stop postfix //run on all three hosts (postfix is not present here, so the command fails harmlessly)

Failed to stop postfix.service: Unit postfix.service not loaded.

2. Hostname resolution

[root@master ~]# vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.89.151 master.example.com master

192.168.89.10 node1.example.com node1

192.168.89.20 node2.example.com node2

[root@master ~]# scp /etc/hosts root@192.168.89.10:/etc/hosts

root@192.168.89.10's password:

hosts 100% 294 192.5KB/s 00:00

[root@master ~]# scp /etc/hosts root@192.168.89.20:/etc/hosts

root@192.168.89.20's password:

hosts 100% 294 164.0KB/s 00:00
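You can also append the same three entries on each node instead of editing /etc/hosts on the master and copying it with scp. This is a minimal sketch that assumes bash and root access. The function name `add_cluster_hosts` is hypothetical and is not part of any tool. It only appends lines that are not already present, so running it again does no harm.

```shell
#!/usr/bin/env bash
# Append the cluster's name-resolution entries to a hosts file.
# Lines already present are skipped, so the function is safe to re-run.
# IPs and names come from the environment table at the top of this article.
add_cluster_hosts() {
  local hosts_file="$1"
  local entries=(
    "192.168.89.151 master.example.com master"
    "192.168.89.10 node1.example.com node1"
    "192.168.89.20 node2.example.com node2"
  )
  local line
  for line in "${entries[@]}"; do
    # -x: match the whole line, -F: fixed string (dots are literal)
    grep -qxF "$line" "$hosts_file" 2>/dev/null || echo "$line" >> "$hosts_file"
  done
}

# Usage on each node (as root):
#   add_cluster_hosts /etc/hosts
```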

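//Disable the firewall on all three hosts; here firewalld is already inactive and SELinux is already in permissive mode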

[root@master ~]# systemctl status firewalld

● firewalld.service - firewalld - dynamic firewall daemon

Loaded: loaded (/usr/lib/systemd/system/firewalld.service; disabled; vendor preset: enabled)

Active: inactive (dead) since Thu 2022-11-17 15:04:46 CST; 1min 12s ago

Docs: man:firewalld(1)

[root@master ~]# getenforce

Permissive
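The output above only checks the current state. If firewalld is still running or SELinux is still enforcing on a host, the sketch below turns both off and keeps them off after a reboot. It assumes the stock /etc/selinux/config layout. `set_selinux_permissive` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Persistently switch SELinux to permissive mode by rewriting the
# SELINUX= line of the given config file (normally /etc/selinux/config).
# SELINUXTYPE= is not touched because the pattern requires "=" right after "SELINUX".
set_selinux_permissive() {
  local cfg="$1"
  sed -i 's/^SELINUX=.*/SELINUX=permissive/' "$cfg"
}

# Usage on each node (as root):
#   systemctl disable --now firewalld          # stop firewalld and keep it off after reboot
#   setenforce 0                               # permissive for the running system
#   set_selinux_permissive /etc/selinux/config # permissive after reboot
```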

3. Time synchronization

[root@master ~]# vim /etc/chrony.conf

# Serve time even if not synchronized to a time source.

local stratum 10 //remove the leading # from this line

[root@master ~]# systemctl restart chronyd.service

[root@master ~]# systemctl enable chronyd.service

Created symlink /etc/systemd/system/multi-user.target.wants/chronyd.service → /usr/lib/systemd/system/chronyd.service.

[root@master ~]# hwclock -w

[root@node1 ~]# vim /etc/chrony.conf

#pool 2.centos.pool.ntp.org iburst

server master.example.com iburst

[root@node1 ~]# systemctl restart chronyd

[root@node1 ~]# systemctl enable chronyd

[root@node1 ~]# hwclock -w

[root@node2 ~]# vim /etc/chrony.conf

#pool 2.centos.pool.ntp.org iburst

server master.example.com iburst

[root@node2 ~]# systemctl restart chronyd

[root@node2 ~]# systemctl enable chronyd

[root@node2 ~]# hwclock -w
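The chrony edits above can be scripted as shown below. One caveat: chronyd does not answer NTP requests from other hosts unless an `allow` directive is present. The master therefore most likely also needs a line such as `allow 192.168.89.0/24` before node1 and node2 can sync from it, and the original steps do not show this. The helper names and the subnet argument are assumptions for illustration.

```shell
#!/usr/bin/env bash
# Master: uncomment "local stratum 10" so chronyd serves time even when it is
# not synchronized itself, and allow the cluster subnet to query it.
configure_chrony_server() {
  local cfg="$1" subnet="$2"
  sed -i 's/^#[[:space:]]*local stratum 10/local stratum 10/' "$cfg"
  grep -qxF "allow $subnet" "$cfg" || echo "allow $subnet" >> "$cfg"
}

# Nodes: comment out the default pool lines and point chronyd at the master.
configure_chrony_client() {
  local cfg="$1" server="$2"
  sed -i 's/^pool /#pool /' "$cfg"
  grep -qxF "server $server iburst" "$cfg" || echo "server $server iburst" >> "$cfg"
}

# Usage (as root), followed by: systemctl restart chronyd && chronyc sources
#   master: configure_chrony_server /etc/chrony.conf 192.168.89.0/24
#   nodes:  configure_chrony_client /etc/chrony.conf master.example.com
```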

4. Disable swap

[root@master ~]# vim /etc/fstab

#/dev/mapper/cs-swap none swap defaults 0 0 //comment out the swap line

[root@master ~]# swapoff -a

[root@node1 ~]# vim /etc/fstab

#/dev/mapper/cs-swap none swap defaults 0 0

[root@node1 ~]# swapoff -a

[root@node2 ~]# vim /etc/fstab

#/dev/mapper/cs-swap none swap defaults 0 0

[root@node2 ~]# swapoff -a
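You can comment out the swap entry without opening an editor. Below is a minimal awk sketch. It assumes the standard fstab layout, in which the third field is the filesystem type. `disable_swap_in_fstab` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Prefix every active swap entry in an fstab-style file with "#" so swap
# stays off after reboot; lines that are already commented are left alone.
disable_swap_in_fstab() {
  local fstab="$1" tmp
  tmp=$(mktemp) || return 1
  awk '$1 !~ /^#/ && $3 == "swap" { print "#" $0; next } { print }' "$fstab" > "$tmp" \
    && cat "$tmp" > "$fstab"   # cat keeps the original file's owner and mode
  rm -f "$tmp"
}

# Usage on each node (as root):
#   swapoff -a && disable_swap_in_fstab /etc/fstab
#   free -h    # the Swap line should show 0B
```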

5. Enable IP forwarding and adjust kernel parameters (on all three nodes)

[root@master ~]# vim /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

[root@master ~]# modprobe br_netfilter

[root@master ~]# sysctl -p /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

[root@node1 ~]# vim /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

[root@node1 ~]# modprobe br_netfilter

[root@node1 ~]# sysctl -p /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

[root@node2 ~]# vim /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

[root@node2 ~]# modprobe br_netfilter

[root@node2 ~]# sysctl -p /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1
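`modprobe br_netfilter` only loads the module until the next reboot, and the `net.bridge.*` keys do not exist without it. The sketch below writes the same sysctl file. It also lists br_netfilter under /etc/modules-load.d so that systemd loads the module at boot. The helper name and the `k8s.conf` file name under modules-load.d are assumptions.

```shell
#!/usr/bin/env bash
# Write this step's kernel settings to <sysctl_dir>/k8s.conf and list
# br_netfilter in <modules_dir>/k8s.conf for systemd-modules-load at boot.
write_k8s_kernel_conf() {
  local sysctl_dir="$1" modules_dir="$2"
  mkdir -p "$sysctl_dir" "$modules_dir"
  cat > "$sysctl_dir/k8s.conf" <<'EOF'
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
  echo br_netfilter > "$modules_dir/k8s.conf"
}

# Usage on each node (as root):
#   write_k8s_kernel_conf /etc/sysctl.d /etc/modules-load.d
#   modprobe br_netfilter && sysctl -p /etc/sysctl.d/k8s.conf
```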

6. Configure IPVS (on all three nodes)

[root@master ~]# vim /etc/sysconfig/modules/ipvs.modules

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

[root@master ~]# chmod +x /etc/sysconfig/modules/ipvs.modules

[root@master ~]# bash /etc/sysconfig/modules/ipvs.modules

[root@master ~]# lsmod | grep -e ip_vs

ip_vs_sh 16384 0

ip_vs_wrr 16384 0

ip_vs_rr 16384 0

ip_vs 172032 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr

nf_conntrack 172032 2 nf_nat,ip_vs

nf_defrag_ipv6 20480 2 nf_conntrack,ip_vs

libcrc32c 16384 5 nf_conntrack,nf_nat,nf_tables,xfs,ip_vs

[root@master ~]# reboot

[root@node1 ~]# vim /etc/sysconfig/modules/ipvs.modules

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

[root@node1 ~]# chmod +x /etc/sysconfig/modules/ipvs.modules

[root@node1 ~]# bash /etc/sysconfig/modules/ipvs.modules

[root@node1 ~]# lsmod | grep -e ip_vs

ip_vs_sh 16384 0

ip_vs_wrr 16384 0

ip_vs_rr 16384 0

ip_vs 172032 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr

nf_conntrack 172032 3 nf_nat,nft_ct,ip_vs

nf_defrag_ipv6 20480 2 nf_conntrack,ip_vs

libcrc32c 16384 5 nf_conntrack,nf_nat,nf_tables,xfs,ip_vs

[root@node1 ~]# reboot

[root@node2 ~]# vim /etc/sysconfig/modules/ipvs.modules

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

[root@node2 ~]# chmod +x /etc/sysconfig/modules/ipvs.modules

[root@node2 ~]# bash /etc/sysconfig/modules/ipvs.modules

[root@node2 ~]# lsmod | grep -e ip_vs

ip_vs_sh 16384 0

ip_vs_wrr 16384 0

ip_vs_rr 16384 0

ip_vs 172032 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr

nf_conntrack 172032 3 nf_nat,nft_ct,ip_vs

nf_defrag_ipv6 20480 2 nf_conntrack,ip_vs

libcrc32c 16384 5 nf_conntrack,nf_nat,nf_tables,xfs,ip_vs

[root@node2 ~]# reboot
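After the reboot, check that the four modules are still loaded. Below is a small sketch that reads `lsmod` output and prints any missing module; `missing_ipvs_modules` is a hypothetical name. Note that kube-proxy only uses these modules when it runs in IPVS mode. Its default on Linux is iptables mode.

```shell
#!/usr/bin/env bash
# Read `lsmod` output on stdin and print each IPVS module required by this
# guide that is not loaded; print nothing when all of them are present.
missing_ipvs_modules() {
  local loaded mod
  loaded=$(awk '$1 != "Module" { print $1 }')   # skip the lsmod header row
  for mod in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh; do
    grep -qxF "$mod" <<< "$loaded" || echo "$mod"
  done
}

# Usage on each node after reboot:
#   lsmod | missing_ipvs_modules
```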

II. Install Docker

1. Switch to the Aliyun mirrors (on all three nodes)

[root@master yum.repos.d]# wget -O /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-vault-8.5.2111.repo

[root@master yum.repos.d]# dnf -y install epel-release

[root@master yum.repos.d]# wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo //download this repo file on all three nodes

2. Install docker-ce (on all three nodes)

[root@master yum.repos.d]# dnf -y install docker-ce --allowerasing

[root@master ~]# systemctl restart docker

[root@master ~]# systemctl enable docker

Created symlink /etc/systemd/system/multi-user.target.wants/docker.service → /usr/lib/systemd/system/docker.service.

[root@master ~]#

3. Add a configuration file with a Docker registry mirror (on all three nodes)

[root@master ~]# cat > /etc/docker/daemon.json << EOF

> {

> "registry-mirrors": ["https://14lrk6zd.mirror.aliyuncs.com"],

> "exec-opts": ["native.cgroupdriver=systemd"],

> "log-driver": "json-file",

> "log-opts": {

> "max-size": "100m"

> },

> "storage-driver": "overlay2"

> }

> EOF

[root@master ~]# systemctl daemon-reload

[root@master ~]# systemctl restart docker
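The heredoc above can be wrapped in a function that takes the mirror URL as a parameter, because `https://14lrk6zd.mirror.aliyuncs.com` is a personal Aliyun accelerator address. This is a sketch; `write_docker_daemon_json` and the `<your-id>` placeholder are hypothetical. Kubernetes 1.24 removed dockershim, so in this v1.25.4 cluster the kubelet talks to containerd directly. This Docker setting therefore does not affect pods, and the containerd configuration later in this guide is the part that matters.

```shell
#!/usr/bin/env bash
# Write the Docker daemon configuration from this step to the given path,
# substituting your own registry mirror URL.
write_docker_daemon_json() {
  local path="$1" mirror="$2"
  mkdir -p "$(dirname "$path")"
  cat > "$path" <<EOF
{
  "registry-mirrors": ["$mirror"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": { "max-size": "100m" },
  "storage-driver": "overlay2"
}
EOF
}

# Usage on each node (as root):
#   write_docker_daemon_json /etc/docker/daemon.json https://<your-id>.mirror.aliyuncs.com
#   systemctl daemon-reload && systemctl restart docker
#   docker info | grep -i 'cgroup driver'   # should report systemd
```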

III. Install the Kubernetes components

1. Switch to a domestic package mirror

[root@master ~]# cat > /etc/yum.repos.d/kubernetes.repo << EOF

> [kubernetes]

> name=Kubernetes

> baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

> enabled=1

> gpgcheck=0

> repo_gpgcheck=0

> gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

> EOF

[root@master ~]#

2. Install kubeadm, kubelet and kubectl (on all three nodes)

[root@master ~]# dnf -y install kubeadm kubelet kubectl

[root@master ~]# systemctl restart kubelet

[root@master ~]# systemctl enable kubelet

Created symlink /etc/systemd/system/multi-user.target.wants/kubelet.service → /usr/lib/systemd/system/kubelet.service.

[root@master ~]#
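`dnf -y install kubeadm kubelet kubectl` installs the newest version in the repository, which may be newer than the v1.25.4 passed to `kubeadm init` below. The sketch below pins the version instead. `k8s_packages` is a hypothetical helper that only builds the `name-version` strings that dnf accepts.

```shell
#!/usr/bin/env bash
# Build versioned package names so kubeadm, kubelet and kubectl match the
# --kubernetes-version used by `kubeadm init` (v1.25.4 in this guide).
k8s_packages() {
  local version="$1" pkg out=()
  for pkg in kubeadm kubelet kubectl; do
    out+=("$pkg-$version")
  done
  echo "${out[*]}"
}

# Usage on each node (as root):
#   dnf -y install $(k8s_packages 1.25.4)
```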

3. Configure containerd (on all three nodes)

[root@master ~]# containerd config default > /etc/containerd/config.toml

[root@master ~]# vim /etc/containerd/config.toml

sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.6"

[root@master ~]# systemctl restart containerd

[root@master ~]# systemctl enable containerd

Created symlink /etc/systemd/system/multi-user.target.wants/containerd.service → /usr/lib/systemd/system/containerd.service.

[root@master ~]#
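The containerd.io package pulled in by docker-ce ships a config.toml that disables the CRI plugin. That is why the file is regenerated here with `containerd config default`. The `vim` edit can also be done with sed. The sketch also sets `SystemdCgroup = true`. The Kubernetes container-runtime documentation recommends this setting when the kubelet uses the systemd cgroup driver, which kubeadm configures by default since v1.22. The original step does not change it, so treat it as an addition. `patch_containerd_config` is a hypothetical name.

```shell
#!/usr/bin/env bash
# In a config.toml produced by `containerd config default`:
#  - point sandbox_image (the pause image) at the given mirror image
#  - switch runc to the systemd cgroup driver
patch_containerd_config() {
  local cfg="$1" pause_image="$2"
  sed -i \
    -e "s|^\([[:space:]]*sandbox_image = \).*|\1\"$pause_image\"|" \
    -e 's/^\([[:space:]]*SystemdCgroup = \)false/\1true/' \
    "$cfg"
}

# Usage on each node (as root):
#   containerd config default > /etc/containerd/config.toml
#   patch_containerd_config /etc/containerd/config.toml registry.aliyuncs.com/google_containers/pause:3.6
#   systemctl restart containerd
```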

4. Deploy the Kubernetes master node (run on the master)

[root@master ~]# kubeadm init \

> --apiserver-advertise-address=192.168.89.151 \

> --image-repository registry.aliyuncs.com/google_containers \

> --kubernetes-version v1.25.4 \

> --service-cidr=10.96.0.0/12 \

> --pod-network-cidr=10.244.0.0/16

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.89.151:6443 --token jpgc9j.w22gtd12wedzp3km \

--discovery-token-ca-cert-hash sha256:b4d942684d920d03d4f5646f9876117bfeeee2c06f90b871a35980ab7fefa6fa

[root@master ~]#

[root@master ~]# vim /etc/profile.d/kuber.sh

export KUBECONFIG=/etc/kubernetes/admin.conf

[root@master ~]# source /etc/profile.d/kuber.sh
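The same `kubeadm init` options can also be kept in a configuration file. This sketch assumes the `kubeadm.k8s.io/v1beta3` config API, which kubeadm 1.25 uses. The last YAML document sets kube-proxy to IPVS mode, which the original command does not do; without it, the IPVS modules loaded earlier go unused. `write_kubeadm_config` and the output path are hypothetical.

```shell
#!/usr/bin/env bash
# Write a kubeadm config equivalent to the init flags above, plus the systemd
# cgroup driver for the kubelet and IPVS mode for kube-proxy.
write_kubeadm_config() {
  local path="$1"
  cat > "$path" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.89.151
  bindPort: 6443
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: v1.25.4
imageRepository: registry.aliyuncs.com/google_containers
networking:
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
EOF
}

# Usage on the master (as root), instead of the long flag list:
#   write_kubeadm_config /root/kubeadm-config.yaml
#   kubeadm init --config /root/kubeadm-config.yaml
```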

5. Install the pod network add-on

[root@master ~]# wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

[root@master ~]# kubectl apply -f kube-flannel.yml

namespace/kube-flannel created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created
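Flannel's manifest hard-codes its pod network in the `net-conf.json` section of its ConfigMap. That value must match the `--pod-network-cidr=10.244.0.0/16` given to `kubeadm init`. The sketch below checks this before applying. The helper names are hypothetical, and the sketch assumes the `"Network": "..."` line format used in kube-flannel.yml.

```shell
#!/usr/bin/env bash
# Print the "Network" value from the net-conf.json section of a flannel manifest.
flannel_network() {
  sed -n 's/.*"Network":[[:space:]]*"\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# Return success only if the manifest's Network equals the given pod CIDR.
check_flannel_cidr() {
  local manifest="$1" pod_cidr="$2" net
  net=$(flannel_network "$manifest")
  if [ "$net" != "$pod_cidr" ]; then
    echo "flannel Network is '$net', expected '$pod_cidr'" >&2
    return 1
  fi
}

# Usage on the master, before `kubectl apply -f kube-flannel.yml`:
#   check_flannel_cidr kube-flannel.yml 10.244.0.0/16
# Afterwards: kubectl -n kube-flannel get pods
```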

6. Join the worker nodes to the cluster

[root@node1 ~]# kubeadm join 192.168.89.151:6443 --token jpgc9j.w22gtd12wedzp3km \

> --discovery-token-ca-cert-hash sha256:b4d942684d920d03d4f5646f9876117bfeeee2c06f90b871a35980ab7fefa6fa

[preflight] Running pre-flight checks

[WARNING FileExisting-tc]: tc not found in system path

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

[root@node1 ~]#

[root@node2 ~]# kubeadm join 192.168.89.151:6443 --token jpgc9j.w22gtd12wedzp3km \

> --discovery-token-ca-cert-hash sha256:b4d942684d920d03d4f5646f9876117bfeeee2c06f90b871a35980ab7fefa6fa

[preflight] Running pre-flight checks

[WARNING FileExisting-tc]: tc not found in system path

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

[root@node2 ~]#

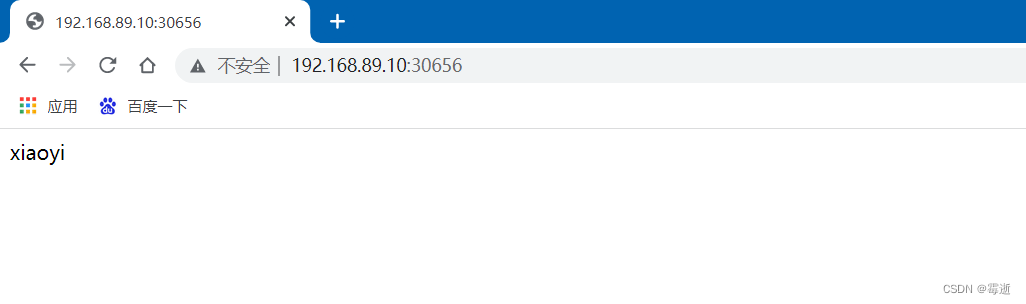
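The join token printed by `kubeadm init` expires after 24 hours by default. Running `kubeadm token create --print-join-command` on the master prints a fresh join command. The `tc not found` warning does not block the join; on CentOS 8, `tc` should come from the `iproute-tc` package. If you saved the init output to a file, the sketch below pulls the join command out as a single line. `extract_join_command` and the log file are hypothetical, and the sketch assumes the two-line layout shown above.

```shell
#!/usr/bin/env bash
# Extract the two-line `kubeadm join ... \` command from a saved
# `kubeadm init` log and print it as one line, ready to paste on a worker.
extract_join_command() {
  local log="$1"
  grep -A1 'kubeadm join' "$log" | head -n 2 \
    | sed 's/\\$//' \
    | tr -s '[:space:]' ' ' \
    | sed 's/^ //; s/ $//'
}

# Usage on the master:
#   kubeadm init ... | tee /root/kubeadm-init.log
#   extract_join_command /root/kubeadm-init.log
```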

7. Create a pod running nginx as a test

[root@master ~]# kubectl create deployment nginx --image nginx

deployment.apps/nginx created

[root@master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-76d6c9b8c-sfb7x 0/1 Pending 0 13s

[root@master ~]# kubectl expose deployment nginx --port 80 --type NodePort

service/nginx exposed

[root@master ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-76d6c9b8c-sfb7x 1/1 Running 0 4m53s 10.244.1.3 node1.example.com <none> <none>

[root@master ~]# kubectl get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 122m

nginx NodePort 10.109.89.19 <none> 80:30656/TCP 5m13s

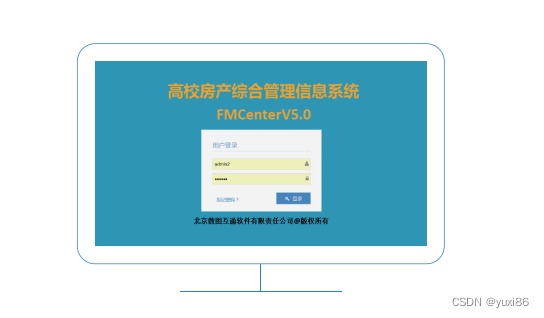

Access from a browser (http://<node IP>:30656, using the NodePort shown above)
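The NodePort (30656 here) is assigned from the default 30000-32767 range, so it will differ on other clusters. `kubectl get service nginx -o jsonpath='{.spec.ports[0].nodePort}'` prints it directly. The sketch below instead parses the table output shown above. `node_port_of` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Read `kubectl get services` output on stdin and print the NodePort of the
# named service, e.g. 30656 from the PORT(S) column value "80:30656/TCP".
node_port_of() {
  local svc="$1"
  awk -v svc="$svc" '$1 == svc { split($5, p, "[:/]"); print p[2] }'
}

# Usage on the master:
#   port=$(kubectl get services | node_port_of nginx)
#   curl "http://192.168.89.10:$port"
```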

8. Modify the default web page

[root@master ~]# kubectl exec -it pod/nginx-76d6c9b8c-sfb7x -- /bin/bash

root@nginx-76d6c9b8c-sfb7x:/# cd /usr/share/nginx/html/

root@nginx-76d6c9b8c-sfb7x:/usr/share/nginx/html# echo "xiaoyi" > index.html

root@nginx-76d6c9b8c-sfb7x:/usr/share/nginx/html#