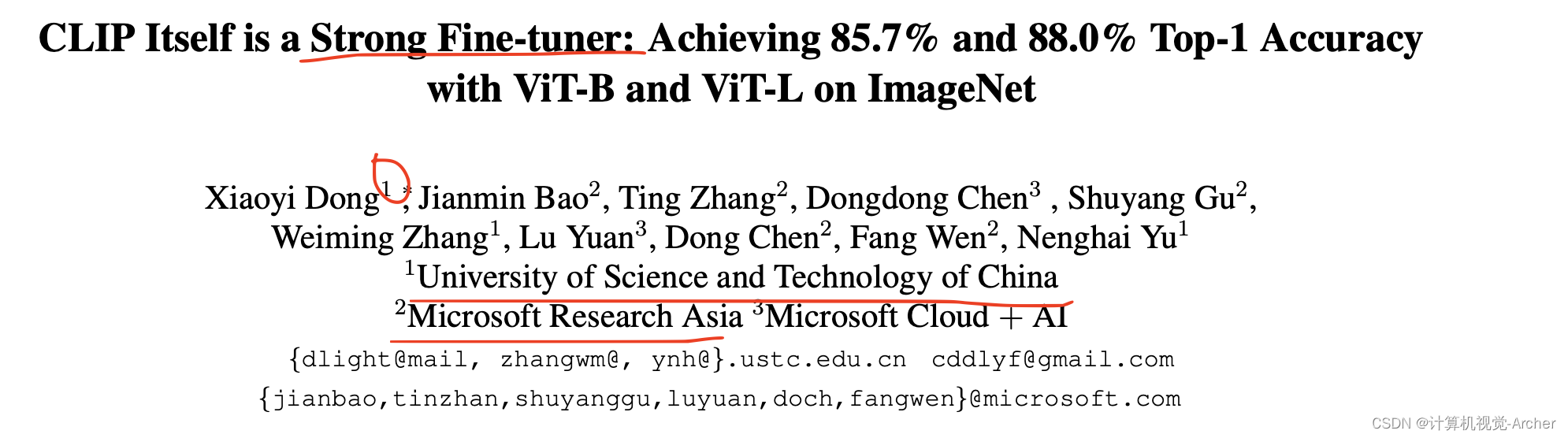

Title

Abstract

Recent studies have shown that CLIP achieves remarkable success in zero-shot inference, while its fine-tuning performance is unsatisfactory.

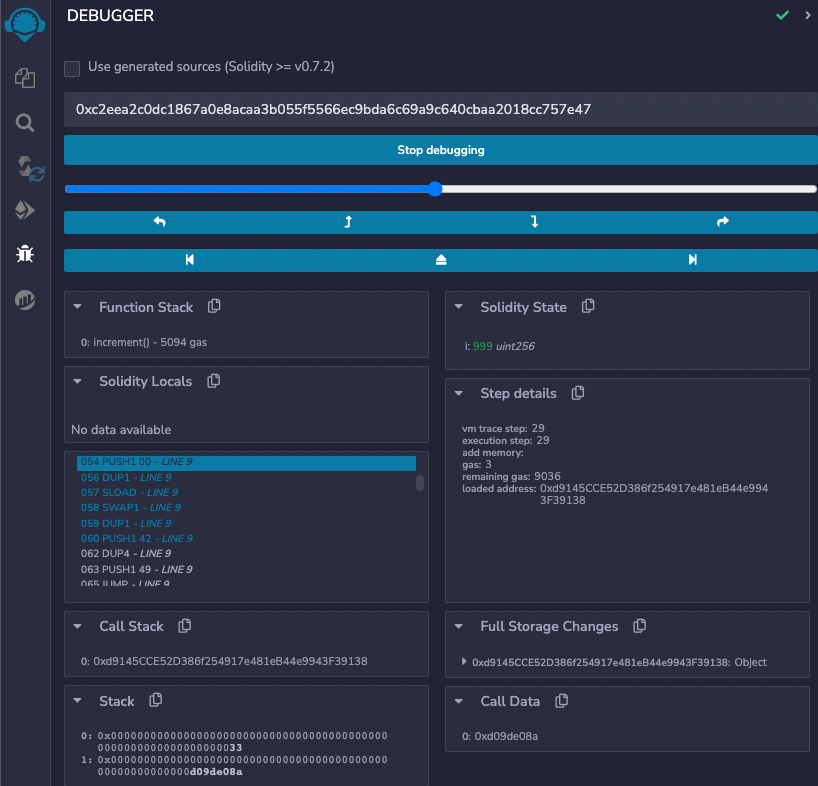

In this paper, we identify that fine-tuning performance is significantly impacted by hyper-parameter choices.

We examine various key hyper-parameters and empirically evaluate their impact in fine-tuning CLIP for classification tasks through a comprehensive study.

We find that the fine-tuning performance of CLIP is substantially underestimated.

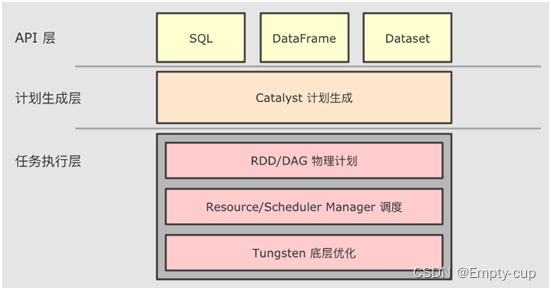

Equipped with hyper-parameter refinement, we demonstrate that CLIP itself is better than, or at least competitive with, large-scale supervised pre-training approaches and the latest works that use CLIP as the prediction target in Masked Image Modeling when fine-tuned.

Specifically, CLIP ViT-Base/16 and CLIP ViT-Large/