参考:2021-06-23 基于AprilTag的位姿估计,原理,完整代码(相机坐标系、世界坐标系) - 简书

Apriltag使用之二:方位估计(定位)_arczee的博客-CSDN博客_apriltag位姿估计

1.AprilTag概述

AprilTag是一种视觉基准系统,类似的视觉基准标签还有:ArUco、ARTag、TopoTag等。而AprilTag因其对旋转、光照、模糊具有良好的鲁棒性,因此被广泛应用于目标跟踪、室内定位、增强现实等领域。本文主要展示使用AprilTag标签,分别获取AprilTag标签在相机坐标系下和世界坐标系下位置。

生成AprilTag不同家族标签图像教程

2.AprilTag 相关资料

AprilTag论文三部曲:

-

AprilTag: A robust and flexible visual fiducial system. --2011

-

AprilTag 2: Efficient and robust fiducial detection. --2016

-

Flexible Layouts for Fiducial Tags. --2019(AprilTag3)

一些应用AprilTag的论文:

- UAV Autonomous Landing Technology Based on AprilTags Vision Positioning Algorithm.

- Pose Estimation for Multicopters Based on Monocular Vision and AprilTag.

- Image Digital Zoom Based Single Target Apriltag Recognition Algorithm in Large Scale Changes on the Distance.

上述资料打包下载地址:https://cloud.189.cn/web/share?code=JRJRJbNfe2ay(访问码:7l96)

AprilTag论文源码地址:https://github.com/AprilRobotics/apriltag

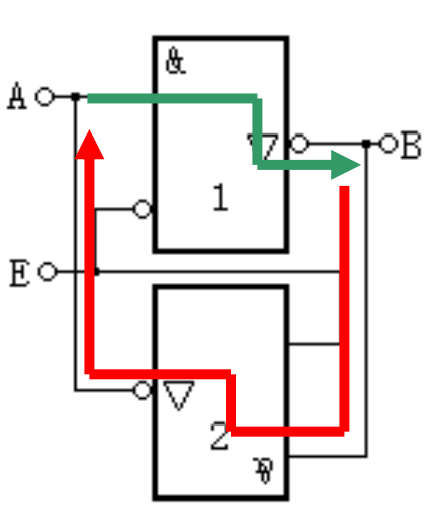

3.AprilTag 原理

检测标签

-

检测线

-

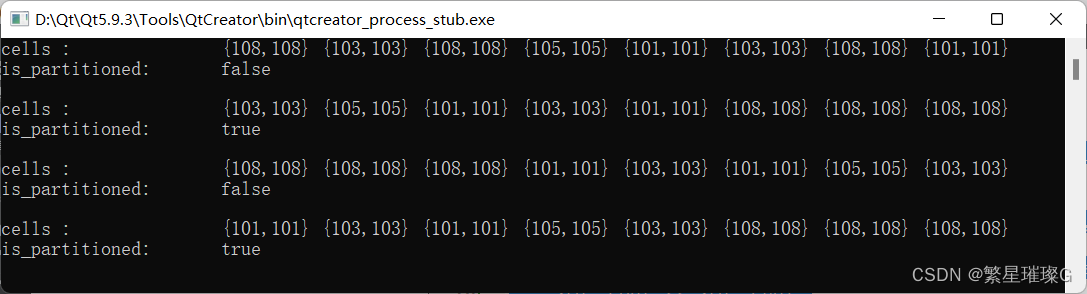

检测矩形(基于深度优先搜索

-

单应性变换与外参估计

-

外参估计

-

建立两种阈值模型(超过该阈值即认为”1“,少于该阈值即认为是”0“),一种基于“黑色”像素强度的空间变化,一种基于“白色“像素强度的空间变化模型:

-

该模型有四个参数,能用最小二乘回归求解。最后阈值大小由两种模型预测的平均值取得。根据不同黑白色块解码标签得到不同的码型,将解码得到的码型与储存在本地的码型库相对比,排除其它码型的干扰,判断是否为正确的标签。

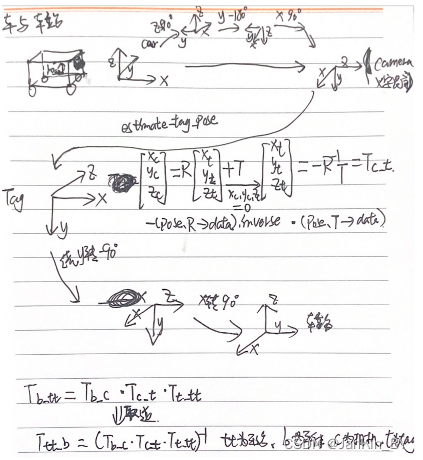

解算位姿

现在我们已知了由世界坐标系至相机坐标系的旋转矩阵和平移矩阵,现在可以根据这两个矩阵,估计相机在世界坐标系下的位置和相机坐标系下标签的位置。

1. 相机在世界坐标系下的位置

根据相机坐标系与世界坐标系之间的关系可知:

因为相机在相机坐标系下的坐标为,因此可得:

4.为ApriTag论文源码 opencv_demo.cc 添加位姿估计的代码

运行环境

Ubuntu 18.04

Opencv 3.14

IDE: Clion

推荐在Linux环境下,先安装基于C++的OpenCV lib,再编译执行下列代码。

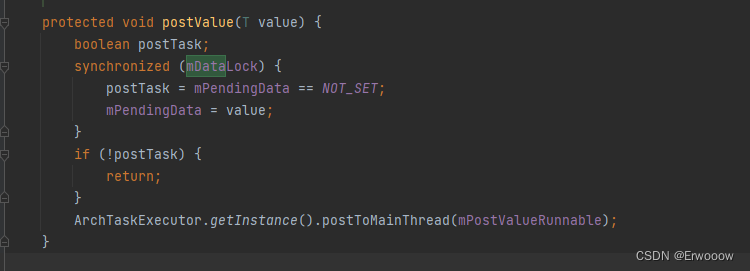

/*调试经验:

1.相机位姿的精度主要取决于tag四个角点的检测像素精度。根据以前测试经验,存在角点误检,导致位姿误差大。主要有两种情况:

一、运动(特别是转弯)过程中照片有点模糊

二、相机与tag存在较大的倾角(30度以外误差比较大)

三、距离越远,误差越大,2m以外谨慎使用。

可能还要其他情况导致误检。

另外相机的内参也会影响计算的位姿,一定要标定好相机内参(重投影误差<0.15),做好畸变校正。

故需要加入严格的判断,最好让相机是正对mark。

*/

#include "tag_station_detection.hpp"

CREATE_LOGex(TagStationDetectionTask);

TagStationDetection::TagStationDetection()

{

td_ = apriltag_detector_create();

tf_ = tag36h11_create();

// apriltag_detector_add_family(td_, tf_);

apriltag_detector_add_family_bits(td_, tf_, 3);

td_->quad_decimate = 1.0; // Decimate input image by factor //降低图片分辨率,经实验该参数可大幅提升检测时间且不影响检测结果

td_->quad_sigma = 0; // No blur //高斯降噪,如果噪声较大的图片,可使用该参数

td_->nthreads = 2; // 2 treads provided //使用几个线程来运行

td_->debug = 0; // No debuging output

td_->refine_edges = 1;

info_.tagsize = APRILTAG_DETECTED_SIZE;

cv::Mat K, D;

if (HIGH_RESOLUTION)

{

K = (cv::Mat_<double>(3, 3) << 471.33715, 0.0, 437.19435, 0.0, 472.0701, 275.1862, 0.0, 0.0, 1.0);

D = (cv::Mat_<double>(5, 1) << -0.305921, 0.060122, -0.000640, 0.001716, 0.000000);

}

else

{

// K = (cv::Mat_<double>(3, 3) << 343.029387, 0.0, 320.552669, 0.0, 342.238068, 200.402539, 0.0, 0.0, 1.0);

// D = (cv::Mat_<double>(5, 1) << -0.343152, 0.095372, -0.003143, -0.001278, 0.000000);

K = (cv::Mat_<double>(3, 3) << 319.1774094207188, 0.0, 315.9695951747343, 0.0, 319.5137994758127, 199.07306955419622, 0.0, 0.0, 1.0);

D = (cv::Mat_<double>(5, 1) << -0.3204769598908819, 0.07196729379479753, -0.0019994529282904004, 0.0008377275431221735, 0.0);

}

cv::Size img_size(img_width_, img_height_);

initUndistortRectifyMap(K, D, cv::Mat(), K, img_size, CV_16SC2, map1_, map2_);//图片去畸变

cv::Mat new_camera_matric = cv::getOptimalNewCameraMatrix(K, D, img_size, 1);

cv::initUndistortRectifyMap(K, D, cv::Mat(), new_camera_matric, img_size, CV_16SC2, map1_, map2_);

if (TAG_IMG_UNDISTORT)

K = new_camera_matric;

info_.fx = K.at<double>(0, 0);

info_.fy = K.at<double>(1, 1);

info_.cx = K.at<double>(0, 2);

info_.cy = K.at<double>(1, 2);

Eigen::Vector3d ea_cam_slope(0, 0, -37.0 / 180 * CV_PI);

// Eigen::Vector3d ea_cam_slope(0, 0, 0.0 / 180 * CV_PI);

//绕不同轴进行旋转 旋转相机 ZYX 相机变换

rot_cam_slope_ = Eigen::AngleAxisd(ea_cam_slope[0], Eigen::Vector3d::UnitZ()) * //默认为单位矩阵,绕z轴旋转0度,结果为单位矩阵

Eigen::AngleAxisd(ea_cam_slope[1], Eigen::Vector3d::UnitY()) * //绕y轴旋转0弧度,结果不变

Eigen::AngleAxisd(ea_cam_slope[2], Eigen::Vector3d::UnitX()); //绕x轴旋转-37/180×pi弧度 绕x轴右乘

//绕z轴旋转90度,绕y轴旋转-180 绕x轴旋转90,在z方向向上平移 0.1m

Eigen::Quaterniond quat_b_c(0.5, -0.5, 0.5, -0.5); //w,x,y,z,四元数4×1 90 -180 90 -0.5i+0.5j-0.5k+0.5

Eigen::Matrix3d rot_mat_b_c;

rot_mat_b_c = quat_b_c.toRotationMatrix();//四元数转矩阵

Eigen::Translation3d trans_b_c(0.0, 0.0, 0.1);

Tb_c_ = trans_b_c * rot_mat_b_c;

//绕z轴旋转0度,绕y轴旋转-90度,绕x轴旋转90度,平移0

Eigen::Quaterniond quat_t_tt(0.5, 0.5, -0.5, 0.5); // w,x,y,z

Eigen::Matrix3d rot_mat_t_tt = quat_t_tt.toRotationMatrix();

Eigen::Translation3d trans_t_tt(0, 0, 0);

Tt_tt_ = trans_t_tt * rot_mat_t_tt;

//不进行旋转,在x负方向平移-0.21米

Eigen::Quaterniond quat_car(1, 0, 0, 0); // w,x,y,z

Eigen::Matrix3d rot_car = quat_car.toRotationMatrix();

Eigen::Translation3d trans_car(-0.21, 0, 0);

T_car_ = trans_car * rot_car;

}

TagStationDetection::~TagStationDetection()

{

}

bool TagStationDetection::OpenCam(int cam_id)

{

LINFO(TagStationDetectionTask, "Enabling video capture");

cap_ = cv::VideoCapture(cam_id);

cap_.set(cv::CAP_PROP_FRAME_WIDTH, img_width_);

cap_.set(cv::CAP_PROP_FRAME_HEIGHT, img_height_);

cv::TickMeter meter;

meter.start();

if (!cap_.isOpened())

{

LERROR(TagStationDetectionTask, "Couldn't open video capture device");

return false;

}

else

{

meter.stop();

LINFO(TagStationDetectionTask,

"Detector initialized in " << meter.getTimeSec() << "s"

<< ", " << cap_.get(cv::CAP_PROP_FRAME_WIDTH)

<< "x" << cap_.get(cv::CAP_PROP_FRAME_HEIGHT)

<< " @" << cap_.get(cv::CAP_PROP_FPS) << "FPS");

meter.reset();

return true;

}

}

void TagStationDetection::ReleaseCam()

{

cap_.release();

cv::destroyAllWindows();

}

bool TagStationDetection::OpenVideo(const std::string &video_name)

{

cap_.open(video_name.c_str());

if (!cap_.isOpened())

{

std::cerr << "Couldn't open video " << video_name.c_str() << std::endl;

return false;

}

else

return true;

}

bool TagStationDetection::DetectCamFrameTag(TagPose &tag_pose)

{

bool if_found = false;

cap_ >> frame_;

if (frame_.empty())

return if_found;

if (TAG_IMG_UNDISTORT)

{

cv::Mat UndistortImage;

remap(frame_, UndistortImage, map1_, map2_, cv::INTER_LINEAR);//去畸变

frame_ = UndistortImage;

}

cvtColor(frame_, gray_, cv::COLOR_BGR2GRAY);

image_u8_t im = {.width = gray_.cols,

.height = gray_.rows,

.stride = gray_.cols,

.buf = gray_.data};//转为apriltag库的格式

zarray_t *detections = apriltag_detector_detect(td_, &im);//检测tag

/*typedef struct apriltag_detection apriltag_detection_t;

struct apriltag_detection

{

apriltag_family_t *family;

int id;//tag的id

int hamming;

float decision_margin;

matd_t *H; //代表从(-1,1), (1,1), (1,-1),(-1,-1)转到像素坐标

double c[2]; //tag中心点在像素坐标下的坐标

double p[4][2]; //像素坐标下的坐标,4个点从左下角开始逆时针旋转

};*/

for (int i = 0; i < zarray_size(detections); i++)

{

apriltag_detection_t *det;

zarray_get(detections, i, &det);

if (det->id == 0)//第0个tag

{

if (det->decision_margin > 50.0)

{

if_found = true;

info_.det = det;

apriltag_pose_t pose;

// pose origin : center of tag

//使用apriltag自带的库求解。这就会涉及库的坐标系和我们的坐标系转换

/*apriltag_detection_info_t tag_info;

tag_info.cx=cameraParam.m_cx;

tag_info.cy=cameraParam.m_cy;

tag_info.fx=cameraParam.m_fx;

tag_info.fy=cameraParam.m_fy;

tag_info.tagsize=find_mark.length;

tag_info.det=det;

apriltag_pose_t pose;

estimate_tag_pose(&tag_info, &pose);

Vector3d ori_relative_P;

Matrix3d ori_rotation_matrix3d;

memcpy(&ori_relative_P, pose.t->data, sizeof(Vector3d));

memcpy(&ori_rotation_matrix3d, pose.R->data, sizeof(Matrix3d));*/

double err = estimate_tag_pose(&info_, &pose);

CalculatePose(pose, tag_pose);

if (APRILTAG_DETECTED_IMG_VISUALIZATION)

DetectedVisualization(det);

}

break;

}

else

continue;

}

if (not if_found)

{

if (APRILTAG_DETECTED_IMG_VISUALIZATION)

DetectedVisualization();

}

apriltag_detections_destroy(detections);

return if_found;

}

bool TagStationDetection::DetectImg(const std::string &img_name, TagPose &tag_pose)

{

bool if_found = false;

cv::Mat imge_raw = cv::imread(img_name.c_str());

if (TAG_IMG_UNDISTORT)

{

cv::Mat UndistortImage;

remap(imge_raw, UndistortImage, map1_, map2_, cv::INTER_LINEAR);

// cv::imwrite("UndistortImage.png", UndistortImage);

frame_ = UndistortImage;

}

else

frame_ = imge_raw;

cvtColor(frame_, gray_, cv::COLOR_BGR2GRAY);

image_u8_t im = {.width = gray_.cols,

.height = gray_.rows,

.stride = gray_.cols,

.buf = gray_.data};

zarray_t *detections = apriltag_detector_detect(td_, &im);

for (int i = 0; i < zarray_size(detections); i++)

{

apriltag_detection_t *det;

zarray_get(detections, i, &det);

if (det->id == 0)

{

if_found = true;

info_.det = det;

apriltag_pose_t pose;

double err = estimate_tag_pose(&info_, &pose);

CalculatePose(pose, tag_pose);

if (APRILTAG_DETECTED_IMG_VISUALIZATION)

DetectedVisualization(det, 1);

break;

}

else

continue;

}

if (not if_found)

LWARN(TagStationDetectionTask, "No effective tag detected");

apriltag_detections_destroy(detections);

return if_found;

}

void TagStationDetection::DetectedVisualization(apriltag_detection_t *det, int d)

{

line(frame_, cv::Point(det->p[0][0], det->p[0][1]),

cv::Point(det->p[1][0], det->p[1][1]),

cv::Scalar(0, 0xff, 0), 2);

line(frame_, cv::Point(det->p[0][0], det->p[0][1]),

cv::Point(det->p[3][0], det->p[3][1]),

cv::Scalar(0, 0, 0xff), 2);

line(frame_, cv::Point(det->p[1][0], det->p[1][1]),

cv::Point(det->p[2][0], det->p[2][1]),

cv::Scalar(0xff, 0, 0), 2);

line(frame_, cv::Point(det->p[2][0], det->p[2][1]),

cv::Point(det->p[3][0], det->p[3][1]),

cv::Scalar(0xff, 0, 0), 2);

std::cout << "pixel coord: (" << det->p[0][0] << ", " << det->p[0][1] << "); "

<< " (" << det->p[1][0] << ", " << det->p[1][1] << ")" << std::endl;

std::stringstream ss;

ss << det->id;

cv::String text = ss.str();

int fontface = cv::FONT_HERSHEY_SCRIPT_SIMPLEX;

double fontscale = 1.0;

int baseline;

cv::Size textsize = cv::getTextSize(text, fontface, fontscale, 2,

&baseline);

putText(frame_, text, cv::Point(det->c[0] - textsize.width / 2, det->c[1] + textsize.height / 2),

fontface, fontscale, cv::Scalar(0xff, 0x99, 0), 2);

imshow("Tag Detections", frame_);

cv::waitKey(d);

}

void TagStationDetection::DetectedVisualization()

{

imshow("Tag Detections", frame_);

cv::waitKey(1);

}

void TagStationDetection::CalculatePose(const apriltag_pose_t &pose, TagPose &tag_pose)

{

// pose.R->data a matrix structure for holding double-precision values with

// data in row-major order (i.e. index = row*ncols + col).

Eigen::Matrix3d rot_mat_cam_tag = rot_cam_slope_ * (Eigen::Map<Eigen::Matrix3d>(pose.R->data).inverse());//转置

//pose.R->data tag系下camera位置 (pose.R->data).inverse()=(pose.R->data).transpose() Tt_c(-1)=Tc_t

Eigen::Vector3d trans_vector = -Eigen::Map<Eigen::Vector3d>(pose.t->data);

Eigen::Translation3d translation(trans_vector[0], trans_vector[1], trans_vector[2]);

//Translation3d是表示平移的一种形式,构造函数为Translation3d(x,y,z),它可以直接和旋转向量、四元数、旋转矩阵相乘,得到的结果为Affine3d类型

Eigen::Affine3d Tc_t = translation * rot_mat_cam_tag;//相机坐标系下tag位置 -R(-1)*T

Eigen::Affine3d T_target;

T_target.matrix() = (Tb_c_.matrix() * Tc_t.matrix() * Tt_tt_.matrix()).inverse();//计算tag坐标系下相机的位置 Tb_tt(-1)=Ttt_b

Eigen::Vector3d rpy_target = R2ypr(T_target.rotation());

tag_pose.x = T_target.matrix()(0, 3);

tag_pose.y = T_target.matrix()(1, 3);

tag_pose.yaw = rpy_target(0);

Eigen::Affine3d T_target_car;

T_target_car.matrix() = T_target.matrix() * T_car_.matrix();

tag_pose.y_car = T_target_car.matrix()(1, 3);

std::cout << "pose x,y,z,yaw: " << tag_pose.x << ", " << tag_pose.y << ", " << T_target.matrix()(2, 3) << std::endl;

}

Eigen::Vector3d TagStationDetection::R2ypr(const Eigen::Matrix3d &R)

{

Eigen::Vector3d n = R.col(0);

Eigen::Vector3d o = R.col(1);

Eigen::Vector3d a = R.col(2);

Eigen::Vector3d ypr(3);

double y = atan2(n(1), n(0));

double p = atan2(-n(2), n(0) * cos(y) + n(1) * sin(y));

double r = atan2(a(0) * sin(y) - a(1) * cos(y), -o(0) * sin(y) + o(1) * cos(y));

ypr(0) = y;

ypr(1) = p;

ypr(2) = r;

return ypr;

}

void TagStationPub::init()

{

ctx_ = zmq::context_t{1};

publisher_ = zmq::socket_t(ctx_, zmq::socket_type::pub);

publisher_.connect("tcp://127.0.0.1:5555");

}

void TagStationPub::TagPosePublish(const TagPose &tag_pose)

{

pose_2d_.data = tag_pose;

Pose2dPublish();

LINFO(TagStationDetectionTask,

"pub tag pose (x, y, yaw, y_car): " << pose_2d_.data.x << ", " << pose_2d_.data.y

<< ", " << pose_2d_.data.yaw << ". " << pose_2d_.data.y_car);

}

void TagStationPub::TagPosePublish()

{

pose_2d_.data.x = 15;

pose_2d_.data.y = 15;

pose_2d_.data.yaw = 15;

pose_2d_.data.y_car = 15;

Pose2dPublish();

LWARN(TagStationDetectionTask,

"no detection, pub: " << pose_2d_.data.x << ", " << pose_2d_.data.y

<< ", " << pose_2d_.data.yaw << ". " << pose_2d_.data.y_car);

}

void TagStationPub::Pose2dPublish()

{

publisher_.send(zmq::str_buffer("/charge_station_detection "), zmq::send_flags::sndmore);

zmq::message_t msg(sizeof(pose_2d_));

memcpy(msg.data(), pose_2d_.buffer, sizeof(pose_2d_));

publisher_.send(msg);

}apritag_vision: 充电站与apritag之间进行定位,确定车站与车之间的位置关系

![[附源码]java毕业设计咖啡销售管理系统-](https://img-blog.csdnimg.cn/8a415ff63ffb4f6cb33997f86a72fe4d.png)