一 XPath的概念

1 XPath是对XML进行查询的表达式

① Axes(路径) / 及 //;

② 第几个子节点[1] 等;

③ 属性@

④ 条件 []

⑤ 例如

/books/book/@title

//price

para[@type=“warning”][5]

2 使用XPath

① XmlDocument doc=new XmlDocument();

② doc.LoadXml(strXml);

③ XmlElement root=doc.DocumentElement;

④ XmlNodeList nodes=

root.SelectNodes(strXPath);

XmlNode node=root.SelectSingleNode(strXPath);

node的.NodeType .InnerXml及.Value;

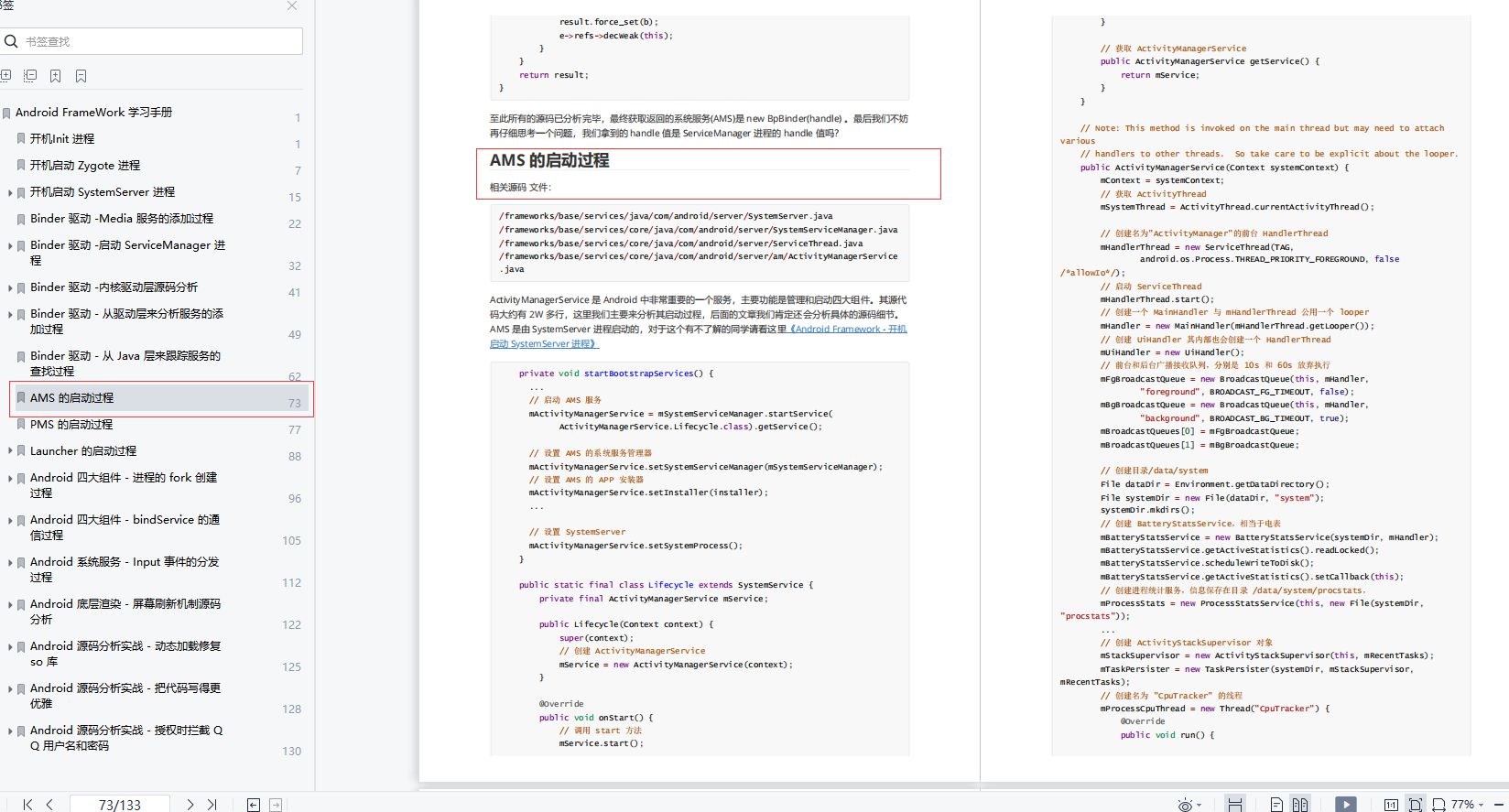

3 使用Xlst进行转换

XMLDocument doc=new XMLDocument();

doc.Load(@".\BookList.xml");

XPathNavigator nav=doc.Createnavigator();

nav.MoveToRoot();

XslTransform xt=new XslTransform();

xt.Load(@".\BookList.xslt");

XmlTextWriter writer=new XmlTextWriter(Console.Out);

xt.Transform(nav,null,writer);

using System;

using System.Collections;

using System.Collections.Generic;

using System.IO;

using System.Linq;

using System.Net;

using System.Text;

using System.Text.RegularExpressions;

using System.Threading;

using System.Threading.Tasks;

namespace 网络爬虫

{

public class Crawler

{

private WebClient webClient = new WebClient();

private Hashtable urls = new Hashtable();

private int count = 0;

static void Main(string[] args)

{

Crawler myCrawler = new Crawler();

string startUrl = "http://www.cnblogs.com/dstang2000";

if (args.Length >= 1)

startUrl = args[0];

myCrawler.urls.Add(startUrl, false);//加入初始页面

new Thread(new ThreadStart(myCrawler.Crawl)).Start();//开始爬行

Console.ReadKey();

}

private void Crawl()

{

Console.WriteLine("开始爬行了.....");

while(true)

{

string current = null;

foreach(string url in urls.Keys)//找到一个还没有下载过的链接

{

if ((bool)urls[url])

continue;//已经下载过的,不再下载

current = url;

}

if (current == null || count > 10)

break;

Console.WriteLine("爬行" + current + "页面!");

string html = DownLoad(current);//下载

urls[current] = true;

count++;

Parse(html);//解析,并加入新的链接

}

Console.WriteLine("爬行结束");

}

public string DownLoad(string url)

{

try

{

HttpWebRequest req = (HttpWebRequest)WebRequest.Create(url);

req.Timeout = 30000;

HttpWebResponse response = (HttpWebResponse)req.GetResponse();

byte[] buffer = ReadInstreamIntoMemory(response.GetResponseStream());

string fileName = count.ToString();

FileStream fs = new FileStream(fileName, FileMode.OpenOrCreate);

fs.Write(buffer, 0, buffer.Length);

fs.Close();

string html = Encoding.UTF8.GetString(buffer);

return html;

}

catch

{ }

return "";

}

public void Parse(string html)

{

string strRef = @"(href|HREF|src|SRC)[ ]*=[ ]*[""'][^""'#>]+[""']";

MatchCollection matches = new Regex(strRef).Matches(html);

foreach(Match match in matches)

{

strRef = match.Value.Substring(match.Value.IndexOf('=') + 1).Trim('"', '\'', '#', ' ', '>');

if (strRef.Length == 0)

continue;

if (urls[strRef] == null)

urls[strRef] = false;

}

}

private static byte[] ReadInstreamIntoMemory(Stream stream)

{

int bufferSize = 16384;

byte[] buffer = new byte[bufferSize];

MemoryStream ms = new MemoryStream();

while(true)

{

int numBytesRead = stream.Read(buffer, 0, bufferSize);

if (numBytesRead <= 0)

break;

ms.Write(buffer, 0, numBytesRead);

}

return ms.ToArray();

}

}

}