阳阳的一周,算是挺过来了,现在只剩感冒了,迷迷糊糊的干了一周,混口饭吃不容易呀!简单记录一下遇到的问题吧!

连接hive(星环)数据库失败

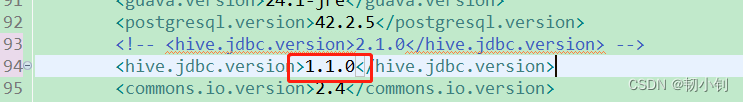

- 方案一 : 海豚调度2.0.5使用的hive包是2.0版本,星环库包装的是hive 1.0版本,因此连接不上,将hive包降为1.0(

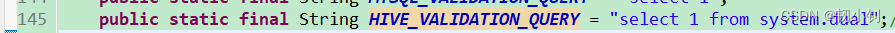

<hive.jdbc.version>1.1.0</hive.jdbc.version>),同时修改VALIDATION_QUERY为"select 1 from system.dual"即可

org.apache.dolphinscheduler.spi.utils.Constants

- 方案二(推荐) : 删除

hive-jdbcjar包,引入星环驱动包inceptor-driver-4.8.3.jar(下了半天没成功,如果连接星环库肯定项目里面有这个包,直接拿过来吧),连接过程中若是包某类或方法不存在,则为jar包冲突,需要继续删除hive相关包(目前遇到的只有hive1.0的service包冲突,2.0只删除了jdbc包,其它没报冲突),同样也需要修改VALIDATION_QUERY为"select 1 from system.dual",同上

推荐理由:hive1.0 不支持存储过程调用方法,会报错,详情如下<dependency> <groupId>inceptor.hive</groupId> <artifactId>inceptor.driver</artifactId> <version>4.8.3</version> </dependency>[ERROR] 2022-12-12 17:54:44.313 TaskLogLogger-class org.apache.dolphinscheduler.plugin.task.procedure.ProcedureTask:[123] - procedure task error java.sql.SQLException: Method not supported at org.apache.hive.jdbc.HiveConnection.prepareCall(HiveConnection.java:922) at com.zaxxer.hikari.pool.ProxyConnection.prepareCall(ProxyConnection.java:316) at com.zaxxer.hikari.pool.HikariProxyConnection.prepareCall(HikariProxyConnection.java) at org.apache.dolphinscheduler.plugin.task.procedure.ProcedureTask.handle(ProcedureTask.java:107) at org.apache.dolphinscheduler.server.worker.runner.TaskExecuteThread.run(TaskExecuteThread.java:191) at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) at com.google.common.util.concurrent.TrustedListenableFutureTask$TrustedFutureInterruptibleTask.runInterruptibly(TrustedListenableFutureTask.java:125) at com.google.common.util.concurrent.InterruptibleTask.run(InterruptibleTask.java:57) at com.google.common.util.concurrent.TrustedListenableFutureTask.run(TrustedListenableFutureTask.java:78) at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142) at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617) at java.lang.Thread.run(Thread.java:745)

执行星环(hive) sql节点,工作流实例一周执行中,任务实例则为提交状态,一直不执行

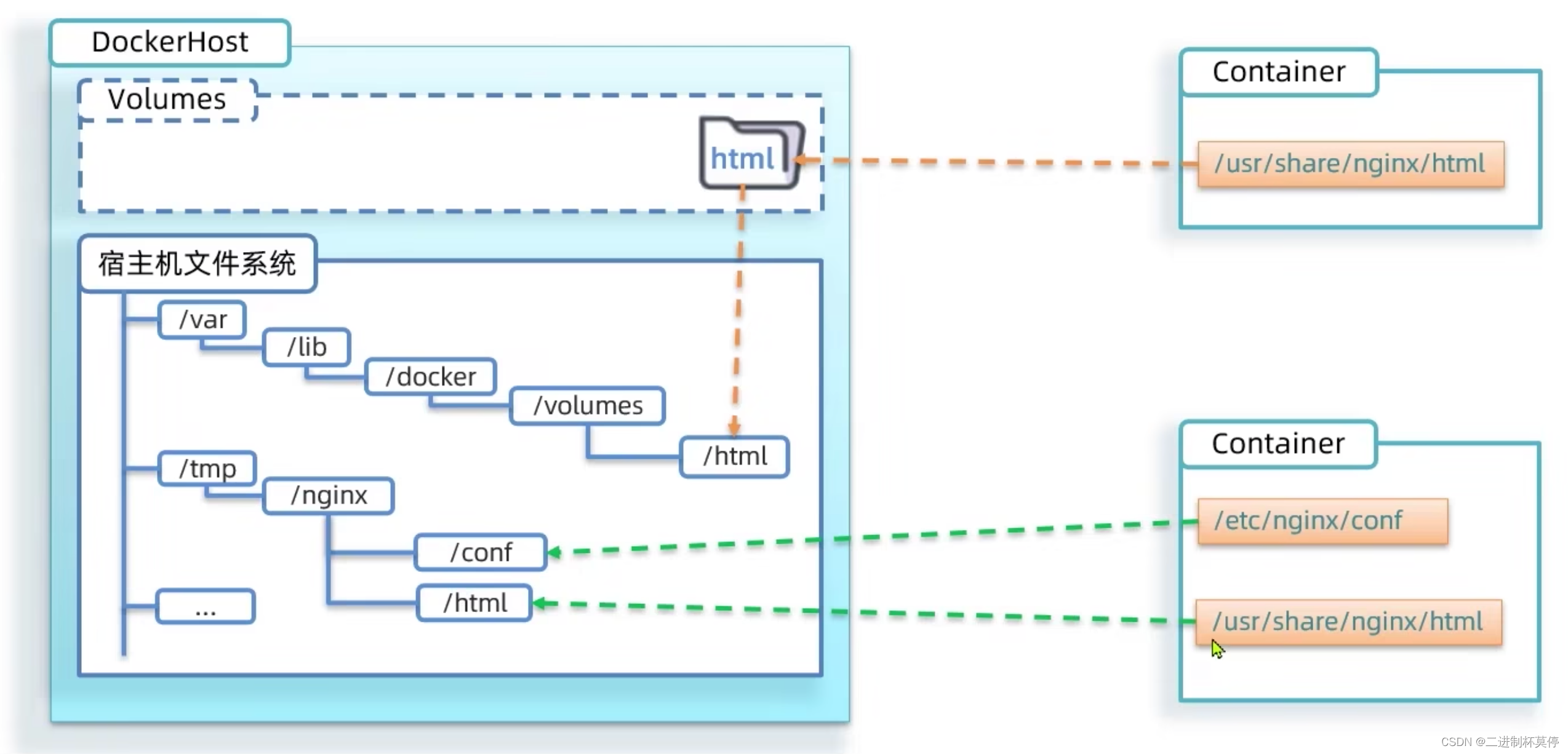

原因是common.properties 配置文件中的resource.storage.type=HDFS,配置了HDFS存储,但是并未按照hadoop集群,master一直尝试连接hdfs服务器,因此出现上述情况。将resource.storage.type=HDFS改为resource.storage.type=NONE,重启服务即可

执行星环(hive) 存储过程节点,超过30秒变报超时错

-

错误详情

org.apache.dolphinscheduler.plugin.task.procedure.ProcedureTask [ERROR] 2022-12-22 20:49:10.275 TaskLogLogger-class org.apache.dolphinscheduler.plugin.task.procedure.ProcedureTask:[123] - procedure task error java.sql.SQLException: org.apache.thrift.transport.TTransportException: java.net.SocketTimeoutException: Read timed out at org.apache.hive.jdbc.HivePreparedStatement2.executeInternal(HivePreparedStatement2.java:158) at org.apache.hive.jdbc.HiveStatement.execute(HiveStatement.java:419) at org.apache.hive.jdbc.HivePreparedStatement2.execute(HivePreparedStatement2.java:165) at org.apache.hive.jdbc.HiveCallableStatement.execute(HiveCallableStatement.java:41) at com.zaxxer.hikari.pool.ProxyPreparedStatement.execute(ProxyPreparedStatement.java:44) at com.zaxxer.hikari.pool.HikariProxyCallableStatement.execute(HikariProxyCallableStatement.java) at org.apache.dolphinscheduler.plugin.task.procedure.ProcedureTask.handle(ProcedureTask.java:116) at org.apache.dolphinscheduler.server.worker.runner.TaskExecuteThread.run(TaskExecuteThread.java:191) at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) at com.google.common.util.concurrent.TrustedListenableFutureTask$TrustedFutureInterruptibleTask.runInterruptibly(TrustedListenableFutureTask.java:125) at com.google.common.util.concurrent.InterruptibleTask.run(InterruptibleTask.java:57) at com.google.common.util.concurrent.TrustedListenableFutureTask.run(TrustedListenableFutureTask.java:78) at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142) at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617) at java.lang.Thread.run(Thread.java:745) Caused by: org.apache.thrift.transport.TTransportException: java.net.SocketTimeoutException: Read timed out at org.apache.thrift.transport.TIOStreamTransport.read(TIOStreamTransport.java:129) at org.apache.thrift.transport.TTransport.readAll(TTransport.java:86) at org.apache.thrift.transport.TSaslTransport.readLength(TSaslTransport.java:376) at org.apache.thrift.transport.TSaslTransport.readFrame(TSaslTransport.java:453) at org.apache.thrift.transport.TSaslTransport.read(TSaslTransport.java:435) at org.apache.thrift.transport.TSaslClientTransport.read(TSaslClientTransport.java:37) at org.apache.thrift.transport.TTransport.readAll(TTransport.java:86) at org.apache.thrift.protocol.TBinaryProtocol.readAll(TBinaryProtocol.java:429) at org.apache.thrift.protocol.TBinaryProtocol.readI32(TBinaryProtocol.java:318) at org.apache.thrift.protocol.TBinaryProtocol.readMessageBegin(TBinaryProtocol.java:219) at org.apache.thrift.TServiceClient.receiveBase(TServiceClient.java:69) at org.apache.hive.service.cli.thrift.TCLIService$Client.recv_ExecutePreCompiledStatement(TCLIService.java:763) at org.apache.hive.service.cli.thrift.TCLIService$Client.ExecutePreCompiledStatement(TCLIService.java:750) at org.apache.hive.jdbc.HivePreparedStatement2.executeInternal(HivePreparedStatement2.java:141) ... 14 common frames omitted -

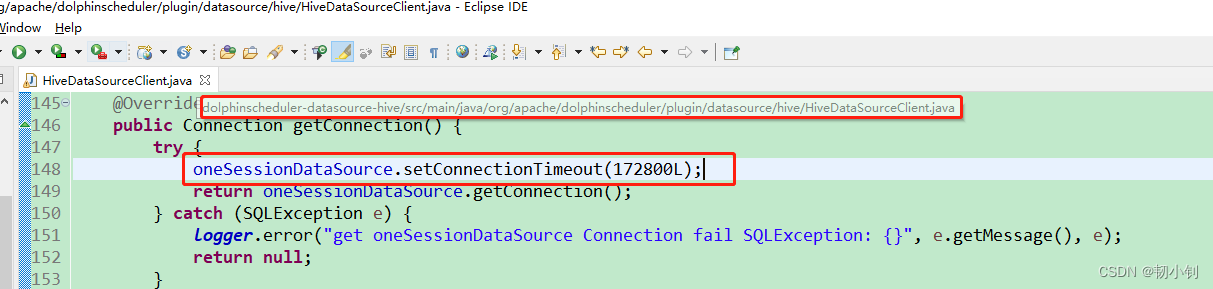

解决办法:修改

HiveDataSourceClient类中的hive连接超时时间

-

HiveDataSourceClient

/* * Licensed to the Apache Software Foundation (ASF) under one or more * contributor license agreements. See the NOTICE file distributed with * this work for additional information regarding copyright ownership. * The ASF licenses this file to You under the Apache License, Version 2.0 * (the "License"); you may not use this file except in compliance with * the License. You may obtain a copy of the License at * * http://www.apache.org/licenses/LICENSE-2.0 * * Unless required by applicable law or agreed to in writing, software * distributed under the License is distributed on an "AS IS" BASIS, * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. * See the License for the specific language governing permissions and * limitations under the License. */ package org.apache.dolphinscheduler.plugin.datasource.hive; import static org.apache.dolphinscheduler.spi.task.TaskConstants.HADOOP_SECURITY_AUTHENTICATION_STARTUP_STATE; import static org.apache.dolphinscheduler.spi.task.TaskConstants.JAVA_SECURITY_KRB5_CONF; import static org.apache.dolphinscheduler.spi.task.TaskConstants.JAVA_SECURITY_KRB5_CONF_PATH; import java.io.IOException; import java.lang.reflect.Field; import java.sql.Connection; import java.sql.SQLException; import java.util.concurrent.Executors; import java.util.concurrent.ScheduledExecutorService; import java.util.concurrent.TimeUnit; import org.apache.dolphinscheduler.plugin.datasource.api.client.CommonDataSourceClient; import org.apache.dolphinscheduler.plugin.datasource.api.provider.JdbcDataSourceProvider; import org.apache.dolphinscheduler.plugin.datasource.utils.CommonUtil; import org.apache.dolphinscheduler.spi.datasource.BaseConnectionParam; import org.apache.dolphinscheduler.spi.enums.DbType; import org.apache.dolphinscheduler.spi.utils.Constants; import org.apache.dolphinscheduler.spi.utils.PropertyUtils; import org.apache.dolphinscheduler.spi.utils.StringUtils; import org.apache.hadoop.conf.Configuration; import org.apache.hadoop.security.UserGroupInformation; import org.slf4j.Logger; import org.slf4j.LoggerFactory; import com.zaxxer.hikari.HikariDataSource; import sun.security.krb5.Config; public class HiveDataSourceClient extends CommonDataSourceClient { private static final Logger logger = LoggerFactory.getLogger(HiveDataSourceClient.class); private ScheduledExecutorService kerberosRenewalService; private Configuration hadoopConf; protected HikariDataSource oneSessionDataSource; private UserGroupInformation ugi; public HiveDataSourceClient(BaseConnectionParam baseConnectionParam, DbType dbType) { super(baseConnectionParam, dbType); } @Override protected void preInit() { logger.info("PreInit in {}", getClass().getName()); this.kerberosRenewalService = Executors.newSingleThreadScheduledExecutor(); } @Override protected void initClient(BaseConnectionParam baseConnectionParam, DbType dbType) { logger.info("Create Configuration for hive configuration."); this.hadoopConf = createHadoopConf(); logger.info("Create Configuration success."); logger.info("Create UserGroupInformation."); this.ugi = createUserGroupInformation(baseConnectionParam.getUser()); logger.info("Create ugi success."); super.initClient(baseConnectionParam, dbType); this.oneSessionDataSource = JdbcDataSourceProvider.createOneSessionJdbcDataSource(baseConnectionParam, dbType); logger.info("Init {} success.", getClass().getName()); } @Override protected void checkEnv(BaseConnectionParam baseConnectionParam) { super.checkEnv(baseConnectionParam); checkKerberosEnv(); } private void checkKerberosEnv() { String krb5File = PropertyUtils.getString(JAVA_SECURITY_KRB5_CONF_PATH); Boolean kerberosStartupState = PropertyUtils.getBoolean(HADOOP_SECURITY_AUTHENTICATION_STARTUP_STATE, false); if (kerberosStartupState && StringUtils.isNotBlank(krb5File)) { System.setProperty(JAVA_SECURITY_KRB5_CONF, krb5File); try { Config.refresh(); Class<?> kerberosName = Class.forName("org.apache.hadoop.security.authentication.util.KerberosName"); Field field = kerberosName.getDeclaredField("defaultRealm"); field.setAccessible(true); field.set(null, Config.getInstance().getDefaultRealm()); } catch (Exception e) { throw new RuntimeException("Update Kerberos environment failed.", e); } } } private UserGroupInformation createUserGroupInformation(String username) { String krb5File = PropertyUtils.getString(Constants.JAVA_SECURITY_KRB5_CONF_PATH); String keytab = PropertyUtils.getString(Constants.LOGIN_USER_KEY_TAB_PATH); String principal = PropertyUtils.getString(Constants.LOGIN_USER_KEY_TAB_USERNAME); try { UserGroupInformation ugi = CommonUtil.createUGI(getHadoopConf(), principal, keytab, krb5File, username); try { Field isKeytabField = ugi.getClass().getDeclaredField("isKeytab"); isKeytabField.setAccessible(true); isKeytabField.set(ugi, true); } catch (NoSuchFieldException | IllegalAccessException e) { logger.warn(e.getMessage()); } kerberosRenewalService.scheduleWithFixedDelay(() -> { try { ugi.checkTGTAndReloginFromKeytab(); } catch (IOException e) { logger.error("Check TGT and Renewal from Keytab error", e); } }, 5, 5, TimeUnit.MINUTES); return ugi; } catch (IOException e) { throw new RuntimeException("createUserGroupInformation fail. ", e); } } protected Configuration createHadoopConf() { Configuration hadoopConf = new Configuration(); hadoopConf.setBoolean("ipc.client.fallback-to-simple-auth-allowed", true); return hadoopConf; } protected Configuration getHadoopConf() { return this.hadoopConf; } @Override public Connection getConnection() { try { oneSessionDataSource.setConnectionTimeout(172800L);//设置连接超时时间 2天 //oneSessionDataSource.setIdleTimeout(60000L);// 非必须(空闲超时时间),保持默认值就行 //oneSessionDataSource.setMaxLifetime(600000L);// 非必须(最大生命周期),保持默认值就行 return oneSessionDataSource.getConnection(); } catch (SQLException e) { logger.error("get oneSessionDataSource Connection fail SQLException: {}", e.getMessage(), e); return null; } } @Override public void close() { super.close(); logger.info("close HiveDataSourceClient."); kerberosRenewalService.shutdown(); this.ugi = null; this.oneSessionDataSource.close(); this.oneSessionDataSource = null; } }