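Redis is commonly clustered in three ways: master-replica replication, Sentinel mode, and Cluster mode. This section is a detailed, hands-on record of deploying a Redis Cluster. Cluster is the most mainstream of the three; its pros and cons are easy to look up online.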

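Redis Cluster has a decentralized design: every node stores data plus the state of the whole cluster, and every node is connected to all the other nodes. Cluster distributes keys with hash slots: the key space is pre-divided into 16384 slots, and when a key-value pair is written, the value of CRC16(key) mod 16384 decides which slot the key goes into.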
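To make the slot rule concrete, here is a minimal Python sketch of CRC16(key) mod 16384. It assumes the XMODEM variant of CRC16 (polynomial 0x1021, initial value 0) and ignores hash tags (the `{...}` syntax), so treat it as an illustration rather than a replacement for `CLUSTER KEYSLOT`. The function names are my own.

```python
HASH_SLOTS = 16384  # total number of hash slots in a Redis Cluster


def crc16_xmodem(data: bytes) -> int:
    """CRC16, XMODEM variant: polynomial 0x1021, initial value 0."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_slot(key: str) -> int:
    """Slot for a key; hash tags are deliberately not handled in this sketch."""
    return crc16_xmodem(key.encode("utf-8")) % HASH_SLOTS


if __name__ == "__main__":
    # key1 is the key used later in this post
    print("key1 ->", key_slot("key1"))
```

The slot printed for `key1` can be compared with the slot number in the MOVED error shown in section 1.3.1.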

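1.1 Create six Redis instances

A cluster should have an odd number of master nodes, and at least three, so that a majority can still be reached when one master fails. Each master should have at least one replica, which is why six nodes are used below (redis-cluster itself decides which become masters and which become replicas). I don't have that many machines, so I'll build everything on a single machine for this demo.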

docker run -d --name redis-node-1 --net host --privileged=true -v /data/redis/share/redis-node-1:/data redis --cluster-enabled yes --appendonly yes --port 6381

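# A few notes on this command:

# --cluster-enabled yes  enables Redis Cluster mode

# --net host  uses the host's network stack directly, so the container shares the host's IP and ports

# --appendonly yes  enables AOF (append-only file) persistence

# --privileged=true  gives the container extended privileges, to avoid permission problems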
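The other five nodes use the same command with only the container name, data directory, and port changed. As a rough sketch, the full set of commands can be generated instead of typed by hand. The helper name `docker_run_command` and the `base_port` parameter are my own; the command text is copied from the one above.

```python
def docker_run_command(index: int, base_port: int = 6380) -> str:
    """Build the docker run command for redis-node-<index> on port base_port + index."""
    name = f"redis-node-{index}"
    port = base_port + index
    return (
        f"docker run -d --name {name} --net host --privileged=true "
        f"-v /data/redis/share/{name}:/data redis "
        f"--cluster-enabled yes --appendonly yes --port {port}"
    )


if __name__ == "__main__":
    # Nodes 1..6 map to ports 6381..6386
    for i in range(1, 7):
        print(docker_run_command(i))
```

The script only prints the commands, so they can be reviewed before being pasted into a shell.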

docker run -d --name redis-node-2 --net host --privileged=true -v /data/redis/share/redis-node-2:/data redis --cluster-enabled yes --appendonly yes --port 6382

docker run -d --name redis-node-3 --net host --privileged=true -v /data/redis/share/redis-node-3:/data redis --cluster-enabled yes --appendonly yes --port 6383

docker run -d --name redis-node-4 --net host --privileged=true -v /data/redis/share/redis-node-4:/data redis --cluster-enabled yes --appendonly yes --port 6384

docker run -d --name redis-node-5 --net host --privileged=true -v /data/redis/share/redis-node-5:/data redis --cluster-enabled yes --appendonly yes --port 6385

docker run -d --name redis-node-6 --net host --privileged=true -v /data/redis/share/redis-node-6:/data redis --cluster-enabled yes --appendonly yes --port 6386

1.2 Build the cluster

With the six Redis instances above up and running, the next step is to join them into a cluster.

1.2.1 Enter one of the Redis containers

docker exec -it redis-node-1 /bin/bash

1.2.2 Create the cluster

redis-cli --cluster create 10.0.16.15:6381 10.0.16.15:6382 10.0.16.15:6383 10.0.16.15:6384 10.0.16.15:6385 10.0.16.15:6386 --cluster-replicas 1

--cluster create # the cluster creation command

--cluster-replicas 1 # create one replica (slave) for each master

[root@VM-16-15-centos ~]# docker exec -it redis-node-1 /bin/bash

root@VM-16-15-centos:/data# redis-cli --cluster create 10.0.16.15:6381 10.0.16.15:6382 10.0.16.15:6383 10.0.16.15:6384 10.0.16.15:6385 10.0.16.15:6386 --cluster-replicas 1

>>> Performing hash slots allocation on 6 nodes...

Master[0] -> Slots 0 - 5460

Master[1] -> Slots 5461 - 10922

Master[2] -> Slots 10923 - 16383

Adding replica 10.0.16.15:6385 to 10.0.16.15:6381

Adding replica 10.0.16.15:6386 to 10.0.16.15:6382

Adding replica 10.0.16.15:6384 to 10.0.16.15:6383

>>> Trying to optimize slaves allocation for anti-affinity

[WARNING] Some slaves are in the same host as their master

M: dc8db285420c6745340ef6844a27b57819be72d0 10.0.16.15:6381

slots:[0-5460] (5461 slots) master

M: 8b01ad849a90a83e6195a7158f5aaa312c386d53 10.0.16.15:6382

slots:[5461-10922] (5462 slots) master

M: 27cb66abc15e4f17dfc4e1fbb9ae18dcf3289b85 10.0.16.15:6383

slots:[10923-16383] (5461 slots) master

S: 8af142737cf096211cebfa384912b0b3b4482e51 10.0.16.15:6384

replicates 8b01ad849a90a83e6195a7158f5aaa312c386d53

S: 26c491cbf52ef2bc240d173d09e06e48d89d1817 10.0.16.15:6385

replicates 27cb66abc15e4f17dfc4e1fbb9ae18dcf3289b85

S: 5f48a6b99d0cfc5b18491916cf6c58b33dc485e0 10.0.16.15:6386

replicates dc8db285420c6745340ef6844a27b57819be72d0

Can I set the above configuration? (type 'yes' to accept): yes # typing yes accepts the configuration above

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join

.

>>> Performing Cluster Check (using node 10.0.16.15:6381)

M: dc8db285420c6745340ef6844a27b57819be72d0 10.0.16.15:6381

slots:[0-5460] (5461 slots) master

1 additional replica(s)

S: 26c491cbf52ef2bc240d173d09e06e48d89d1817 10.0.16.15:6385

slots: (0 slots) slave

replicates 27cb66abc15e4f17dfc4e1fbb9ae18dcf3289b85

M: 27cb66abc15e4f17dfc4e1fbb9ae18dcf3289b85 10.0.16.15:6383

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

M: 8b01ad849a90a83e6195a7158f5aaa312c386d53 10.0.16.15:6382

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: 8af142737cf096211cebfa384912b0b3b4482e51 10.0.16.15:6384

slots: (0 slots) slave

replicates 8b01ad849a90a83e6195a7158f5aaa312c386d53

S: 5f48a6b99d0cfc5b18491916cf6c58b33dc485e0 10.0.16.15:6386

slots: (0 slots) slave

replicates dc8db285420c6745340ef6844a27b57819be72d0

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

root@VM-16-15-centos:/data#

1.2.3 Check the cluster status

redis-cli -p 6381 # connect to the Redis instance on port 6381

cluster info # cluster information

cluster nodes # list the cluster nodes

The cluster has now been created.

1.3 Master-replica failover

1.3.1 Logging in to a single node

First, log in to the cluster:

docker exec -it redis-node-1 redis-cli -p 6381 # logs in to the first Redis node

Once logged in, try saving a record:

set key1 value1 # fails with: (error) MOVED 9189 10.0.16.15:6382

[root@VM-16-15-centos ~]# docker exec -it redis-node-1 redis-cli -p 6381

127.0.0.1:6381> cluster nodes

26c491cbf52ef2bc240d173d09e06e48d89d1817 10.0.16.15:6385@16385 slave 27cb66abc15e4f17dfc4e1fbb9ae18dcf3289b85 0 1641606558393 3 connected

27cb66abc15e4f17dfc4e1fbb9ae18dcf3289b85 10.0.16.15:6383@16383 master - 0 1641606560000 3 connected 10923-16383 # slot range 3

8b01ad849a90a83e6195a7158f5aaa312c386d53 10.0.16.15:6382@16382 master - 0 1641606559000 2 connected 5461-10922 # slot range 2

dc8db285420c6745340ef6844a27b57819be72d0 10.0.16.15:6381@16381 myself,master - 0 1641606559000 1 connected 0-5460 # slot range 1

8af142737cf096211cebfa384912b0b3b4482e51 10.0.16.15:6384@16384 slave 8b01ad849a90a83e6195a7158f5aaa312c386d53 0 1641606560399 2 connected

5f48a6b99d0cfc5b18491916cf6c58b33dc485e0 10.0.16.15:6386@16386 slave dc8db285420c6745340ef6844a27b57819be72d0 0 1641606557000 1 connected

127.0.0.1:6381> set key1 value1 # this unexpectedly returns an error

(error) MOVED 9189 10.0.16.15:6382

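127.0.0.1:6381>

Why the error: the slot is computed as CRC16(key) mod 16384, and the 16384 slots are split evenly among the three masters. The slot for key1 does not fall within 0-5460. The message (error) MOVED 9189 10.0.16.15:6382 shows that key1 maps to slot 9189, which belongs to the second master. The set was issued on the first node, so that node rejects the write and points to the node that owns the slot.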
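The lookup the cluster performs here can be reproduced from the `cluster nodes` output. The sketch below parses the slot ranges of each master and finds the owner of slot 9189. The sample text is copied from the output above (annotations removed, one replica line kept to show that replicas are skipped); the function names are my own, and migrating or importing slot markers are simply ignored.

```python
CLUSTER_NODES = """\
26c491cbf52ef2bc240d173d09e06e48d89d1817 10.0.16.15:6385@16385 slave 27cb66abc15e4f17dfc4e1fbb9ae18dcf3289b85 0 1641606558393 3 connected
27cb66abc15e4f17dfc4e1fbb9ae18dcf3289b85 10.0.16.15:6383@16383 master - 0 1641606560000 3 connected 10923-16383
8b01ad849a90a83e6195a7158f5aaa312c386d53 10.0.16.15:6382@16382 master - 0 1641606559000 2 connected 5461-10922
dc8db285420c6745340ef6844a27b57819be72d0 10.0.16.15:6381@16381 myself,master - 0 1641606559000 1 connected 0-5460
"""


def slot_owners(cluster_nodes_output: str):
    """Return (first_slot, last_slot, address) for every range held by a master."""
    owners = []
    for line in cluster_nodes_output.strip().splitlines():
        fields = line.split()
        if "master" not in fields[2].split(","):
            continue  # replicas hold no slots
        address = fields[1].split("@")[0]  # drop the cluster bus port
        for token in fields[8:]:
            if token.startswith("["):
                continue  # migrating/importing marker, ignored in this sketch
            start, _, end = token.partition("-")
            owners.append((int(start), int(end or start), address))
    return owners


def owner_of(slot: int, owners):
    """Address of the master whose range contains the slot, or None."""
    for first, last, address in owners:
        if first <= slot <= last:
            return address
    return None


if __name__ == "__main__":
    print(owner_of(9189, slot_owners(CLUSTER_NODES)))
```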

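1.3.2 Cluster routing

With the single-node login from the previous section (docker exec -it redis-node-1 redis-cli -p 6381), a key whose slot belongs to another node cannot be saved. Adding the -c flag puts redis-cli into cluster mode, so it follows the redirect and the key is saved automatically on the node that owns its slot.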
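Conceptually, -c makes the client retry the command on the node named in the MOVED reply. The sketch below imitates that loop with in-memory stand-ins instead of real connections: `SLOT_RANGES`, `fake_set`, and `set_following_redirects` are hypothetical names for illustration, and the slot number is passed in rather than computed.

```python
import re

MOVED_PATTERN = re.compile(r"^MOVED (\d+) (\S+):(\d+)$")

# Slot ranges of the three masters, as assigned when the cluster was created.
SLOT_RANGES = {
    "10.0.16.15:6381": (0, 5460),
    "10.0.16.15:6382": (5461, 10922),
    "10.0.16.15:6383": (10923, 16383),
}


def fake_set(address, slot, key, value, stores):
    """Imitate a master: store the key if it owns the slot, otherwise reply MOVED."""
    first, last = SLOT_RANGES[address]
    if first <= slot <= last:
        stores.setdefault(address, {})[key] = value
        return "OK"
    for other, (lo, hi) in SLOT_RANGES.items():
        if lo <= slot <= hi:
            return f"MOVED {slot} {other}"
    raise ValueError(f"slot {slot} is not covered")


def set_following_redirects(address, slot, key, value, stores, max_redirects=5):
    """Retry on the node named in each MOVED reply, roughly as a cluster-aware client would."""
    for _ in range(max_redirects):
        reply = fake_set(address, slot, key, value, stores)
        match = MOVED_PATTERN.match(reply)
        if match is None:
            return address, reply
        address = f"{match.group(2)}:{match.group(3)}"
    raise RuntimeError("too many redirects")


if __name__ == "__main__":
    stores = {}
    print(set_following_redirects("10.0.16.15:6381", 9189, "key1", "value1", stores))
```

A real client would also cache the slot-to-node mapping so that later commands go to the right node directly.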

[root@VM-16-15-centos ~]# docker exec -it redis-node-1 redis-cli -c -p 6381 # note the -c flag

127.0.0.1:6381> flushall # clears the data on this node; use with caution

OK

127.0.0.1:6381> set key1 value1 # set it again: this time it is saved, after being redirected to the second master

-> Redirected to slot [9189] located at 10.0.16.15:6382

OK

10.0.16.15:6382>

Check the cluster:

docker exec -it redis-node-1 /bin/bash # enter one of the containers

redis-cli --cluster check 10.0.16.15:6381 # check the cluster

[root@VM-16-15-centos ~]# docker exec -it redis-node-1 /bin/bash

root@VM-16-15-centos:/data# redis-cli --cluster check 10.0.16.15:6381

10.0.16.15:6381 (dc8db285...) -> 2 keys | 5461 slots | 1 slaves.

10.0.16.15:6383 (27cb66ab...) -> 1 keys | 5461 slots | 1 slaves.

10.0.16.15:6382 (8b01ad84...) -> 1 keys | 5462 slots | 1 slaves.

[OK] 4 keys in 3 masters.

0.00 keys per slot on average.

>>> Performing Cluster Check (using node 10.0.16.15:6381)

M: dc8db285420c6745340ef6844a27b57819be72d0 10.0.16.15:6381

slots:[0-5460] (5461 slots) master

1 additional replica(s)

S: 26c491cbf52ef2bc240d173d09e06e48d89d1817 10.0.16.15:6385

slots: (0 slots) slave

replicates 27cb66abc15e4f17dfc4e1fbb9ae18dcf3289b85

M: 27cb66abc15e4f17dfc4e1fbb9ae18dcf3289b85 10.0.16.15:6383

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

M: 8b01ad849a90a83e6195a7158f5aaa312c386d53 10.0.16.15:6382

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: 8af142737cf096211cebfa384912b0b3b4482e51 10.0.16.15:6384

slots: (0 slots) slave

replicates 8b01ad849a90a83e6195a7158f5aaa312c386d53

S: 5f48a6b99d0cfc5b18491916cf6c58b33dc485e0 10.0.16.15:6386

slots: (0 slots) slave

replicates dc8db285420c6745340ef6844a27b57819be72d0

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

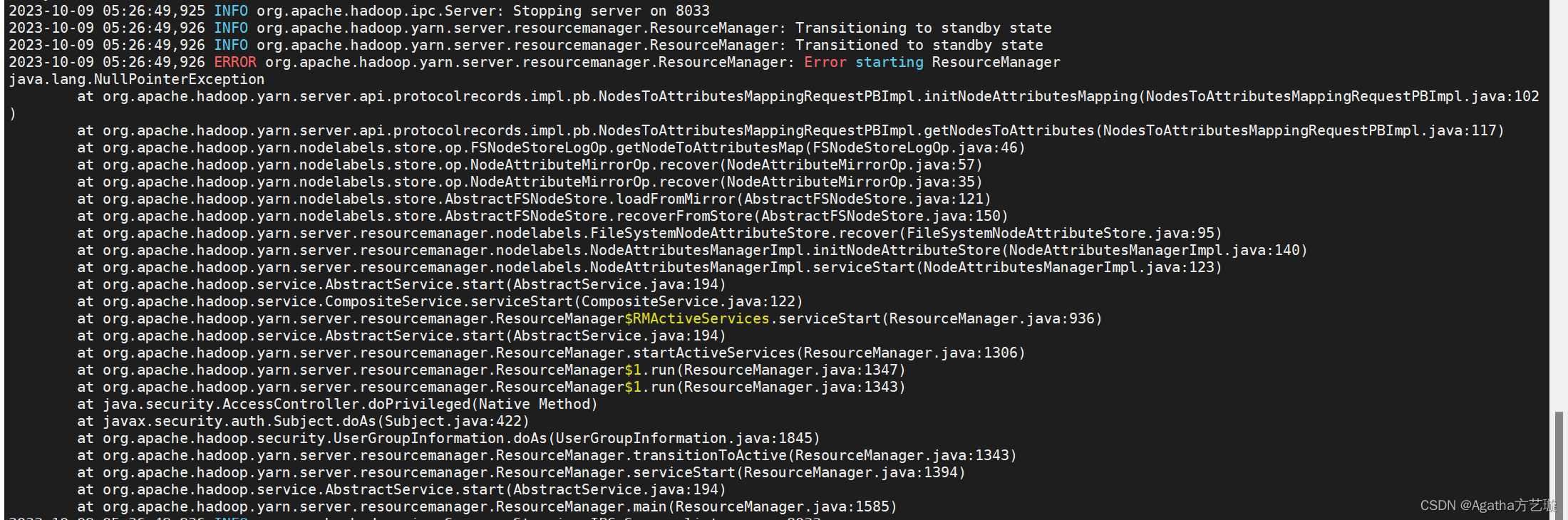

[OK] All 16384 slots covered.1.3.3 机停从机上位

先来停掉第一台6381这台机器:docker stop redis-node-1

docker exec -it redis-node-2 bash

可以看到我停掉了一台master之后,原来的从机就变成主机了。上图中有4个master,但是其中一个是fail,disconnected,所以运行的是5台机器,3主,2从。

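Now start the first node again: docker restart redis-node-1

Running cluster nodes again shows the six nodes back at three masters and three replicas. Note, however, that the first node used to be a master and comes back as a replica; it does not regain its old role automatically. To restore it, stop and restart the current master so that another failover happens, or run a manual CLUSTER FAILOVER on the replica. So the cluster is quite robust.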
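Counting roles by eye in `cluster nodes` output gets tedious, so here is a small sketch that tallies them from the flags column. The sample lines are hypothetical and abbreviated: they are modelled on the state described above (6381 stopped, 6386 promoted), with shortened node IDs and placeholder timestamps and epochs, not copied from a real run.

```python
from collections import Counter

# Hypothetical, abbreviated `cluster nodes` lines (see the note above).
SAMPLE_AFTER_STOP = """\
dc8db285 10.0.16.15:6381@16381 master,fail - 0 0 1 disconnected
5f48a6b9 10.0.16.15:6386@16386 master - 0 0 7 connected 0-5460
8b01ad84 10.0.16.15:6382@16382 myself,master - 0 0 2 connected 5461-10922
27cb66ab 10.0.16.15:6383@16383 master - 0 0 3 connected 10923-16383
8af14273 10.0.16.15:6384@16384 slave 8b01ad84 0 0 2 connected
26c491cb 10.0.16.15:6385@16385 slave 27cb66ab 0 0 3 connected
"""


def summarize_roles(cluster_nodes_output: str) -> dict:
    """Count failed nodes, healthy masters, and healthy replicas by their flags."""
    counts = Counter()
    for line in cluster_nodes_output.strip().splitlines():
        flags = line.split()[2].split(",")
        if "fail" in flags:
            counts["failed"] += 1
        elif "master" in flags:
            counts["master"] += 1
        elif "slave" in flags:
            counts["replica"] += 1
    return dict(counts)


if __name__ == "__main__":
    print(summarize_roles(SAMPLE_AFTER_STOP))
```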

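1.4 Scaling out

Suppose traffic is expected to surge soon, so we need to add one more group of Redis nodes: one master and one replica. This requires reallocating hash slots.

1.4.1 Add new instances

Assume that in real work the two new nodes would be two new machines.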

docker run -d --name redis-node-7 --net host --privileged=true -v /data/redis/share/redis-node-7:/data redis --cluster-enabled yes --appendonly yes --port 6387

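docker run -d --name redis-node-8 --net host --privileged=true -v /data/redis/share/redis-node-8:/data redis --cluster-enabled yes --appendonly yes --port 6388

1.4.2 Join the new node to the cluster

Add the new 6387 instance to the cluster as a master node.

redis-cli --cluster add-node ip:6387 ip:6381 # 6387 is the new node to be added as a master; 6381 is an existing cluster node that acts as its guide, i.e. 6387 contacts 6381 to be introduced into the cluster.

Note that after joining the cluster, the new node has not been given any slots: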

root@VM-16-15-centos:/data# redis-cli --cluster check 10.0.16.15:6381

10.0.16.15:6387 (ce79c8b8...) -> 0 keys | 0 slots | 0 slaves. # note: no slots and no replicas

10.0.16.15:6383 (27cb66ab...) -> 1 keys | 5461 slots | 1 slaves.

10.0.16.15:6382 (8b01ad84...) -> 1 keys | 5462 slots | 1 slaves.

10.0.16.15:6386 (5f48a6b9...) -> 2 keys | 5461 slots | 1 slaves.

[OK] 4 keys in 4 masters.

0.00 keys per slot on average.

1.4.3 Reallocate the slots

Command:

redis-cli --cluster reshard 10.0.16.15:6381 # start resharding

How many slots do you want to move (from 1 to 16384)? 4096 # 16384/4 = 4096

What is the receiving node ID? ce79c8b8072d79bd16ad79ecc65c6c0949be2a79

Please enter all the source node IDs.

Type 'all' to use all the nodes as source nodes for the hash slots.

Type 'done' once you entered all the source nodes IDs.

Source node #1: all # note: all here

Then check the result again:

root@VM-16-15-centos:/data# redis-cli --cluster check 10.0.16.15:6387

10.0.16.15:6387 (ce79c8b8...) -> 1 keys | 4096 slots | 0 slaves. # slots are now assigned

10.0.16.15:6383 (27cb66ab...) -> 1 keys | 4096 slots | 1 slaves.

10.0.16.15:6382 (8b01ad84...) -> 1 keys | 4096 slots | 1 slaves.

10.0.16.15:6386 (5f48a6b9...) -> 1 keys | 4096 slots | 1 slaves.

[OK] 4 keys in 4 masters.

0.00 keys per slot on average.

>>> Performing Cluster Check (using node 10.0.16.15:6387)

M: ce79c8b8072d79bd16ad79ecc65c6c0949be2a79 10.0.16.15:6387

slots:[0-1364],[5461-6826],[10923-12287] (4096 slots) master

M: 27cb66abc15e4f17dfc4e1fbb9ae18dcf3289b85 10.0.16.15:6383

slots:[12288-16383] (4096 slots) master

1 additional replica(s)

M: 8b01ad849a90a83e6195a7158f5aaa312c386d53 10.0.16.15:6382

slots:[6827-10922] (4096 slots) master

1 additional replica(s)

S: 8af142737cf096211cebfa384912b0b3b4482e51 10.0.16.15:6384

slots: (0 slots) slave

replicates 8b01ad849a90a83e6195a7158f5aaa312c386d53

S: 26c491cbf52ef2bc240d173d09e06e48d89d1817 10.0.16.15:6385

slots: (0 slots) slave

replicates 27cb66abc15e4f17dfc4e1fbb9ae18dcf3289b85

S: dc8db285420c6745340ef6844a27b57819be72d0 10.0.16.15:6381

slots: (0 slots) slave

replicates 5f48a6b99d0cfc5b18491916cf6c58b33dc485e0

M: 5f48a6b99d0cfc5b18491916cf6c58b33dc485e0 10.0.16.15:6386

slots:[1365-5460] (4096 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

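[OK] All 16384 slots covered.

Note, however, that although the new node was given 4096 slots, its slots are not contiguous:

slots:[0-1364],[5461-6826],[10923-12287] (4096 slots) master

They come in three segments. Each of the three previous masters gave up a slice, and the slices add up to 4096 slots; the slots were not redistributed from 0 across the four masters.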
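The sizes of the three segments (1365, 1366, and 1365 slots) follow from taking a proportional share from each existing master. The sketch below reproduces those numbers. The rounding rule (round up for the largest source, down for the others) is an assumption chosen to match the output above, and `reshard_plan` is a name of my own; in general the shares may not add up exactly to the requested total.

```python
import math


def reshard_plan(source_slot_counts: dict, slots_to_move: int) -> dict:
    """Proportional number of slots to take from each source master."""
    total = sum(source_slot_counts.values())
    # Largest source first; ties broken by address to keep the result deterministic.
    ordered = sorted(source_slot_counts.items(), key=lambda item: (-item[1], item[0]))
    plan = {}
    for position, (node, count) in enumerate(ordered):
        share = slots_to_move * count / total
        # Assumed rounding: up for the largest source, down for the rest.
        plan[node] = math.ceil(share) if position == 0 else math.floor(share)
    return plan


if __name__ == "__main__":
    # Slot counts of the three masters before resharding (from the check output).
    before = {
        "10.0.16.15:6386": 5461,
        "10.0.16.15:6382": 5462,
        "10.0.16.15:6383": 5461,
    }
    plan = reshard_plan(before, 16384 // 4)
    for node, moved in plan.items():
        print(node, "gives up", moved, "and keeps", before[node] - moved)
```

Each source ends up with 4096 slots, which matches the check output after resharding.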

1.4.4 Add a replica under the new master

Command: redis-cli --cluster add-node ip:new-replica-port ip:new-master-port --cluster-slave --cluster-master-id new-master-node-ID

redis-cli --cluster add-node 10.0.16.15:6388 10.0.16.15:6387 --cluster-slave --cluster-master-id ce79c8b8072d79bd16ad79ecc65c6c0949be2a79

This command adds the new node 6388 as a replica of 6387. --cluster-master-id specifies exactly which master the node should be attached to; every master has a node ID.

root@VM-16-15-centos:/data# redis-cli --cluster add-node 10.0.16.15:6388 10.0.16.15:6387 --cluster-slave --cluster-master-id ce79c8b8072d79bd16ad79ecc65c6c0949be2a79

>>> Adding node 10.0.16.15:6388 to cluster 10.0.16.15:6387

>>> Performing Cluster Check (using node 10.0.16.15:6387)

M: ce79c8b8072d79bd16ad79ecc65c6c0949be2a79 10.0.16.15:6387

slots:[0-1364],[5461-6826],[10923-12287] (4096 slots) master

M: 27cb66abc15e4f17dfc4e1fbb9ae18dcf3289b85 10.0.16.15:6383

slots:[12288-16383] (4096 slots) master

1 additional replica(s)

M: 8b01ad849a90a83e6195a7158f5aaa312c386d53 10.0.16.15:6382

slots:[6827-10922] (4096 slots) master

1 additional replica(s)

S: 8af142737cf096211cebfa384912b0b3b4482e51 10.0.16.15:6384

slots: (0 slots) slave

replicates 8b01ad849a90a83e6195a7158f5aaa312c386d53

S: 26c491cbf52ef2bc240d173d09e06e48d89d1817 10.0.16.15:6385

slots: (0 slots) slave

replicates 27cb66abc15e4f17dfc4e1fbb9ae18dcf3289b85

S: dc8db285420c6745340ef6844a27b57819be72d0 10.0.16.15:6381

slots: (0 slots) slave

replicates 5f48a6b99d0cfc5b18491916cf6c58b33dc485e0

M: 5f48a6b99d0cfc5b18491916cf6c58b33dc485e0 10.0.16.15:6386

slots:[1365-5460] (4096 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Send CLUSTER MEET to node 10.0.16.15:6388 to make it join the cluster.

Waiting for the cluster to join

>>> Configure node as replica of 10.0.16.15:6387.

[OK] New node added correctly.

After adding the replica, the check shows:

root@VM-16-15-centos:/data# redis-cli --cluster check 10.0.16.15:6381

10.0.16.15:6387 (ce79c8b8...) -> 1 keys | 4096 slots | 1 slaves.

10.0.16.15:6383 (27cb66ab...) -> 1 keys | 4096 slots | 1 slaves.

10.0.16.15:6382 (8b01ad84...) -> 1 keys | 4096 slots | 1 slaves.

10.0.16.15:6386 (5f48a6b9...) -> 1 keys | 4096 slots | 1 slaves.

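[OK] 4 keys in 4 masters.

1.5 Scaling in

The traffic peak has passed, so to save resources we remove the two nodes 6387 and 6388.

First delete the replica, 6388.

Command: redis-cli --cluster del-node ip:replica-port node-ID-of-replica-6388

redis-cli --cluster del-node 10.0.16.15:6388 e330f45d41eed0676139f22e72fec428ca891f46

The node ID can be looked up with cluster nodes, for example:

docker exec -it c3c956862138 /bin/bash

redis-cli -p 6388 -c

cluster nodes # shows the node IDs, here e330f45d41eed0676139f22e72fec428ca891f46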

root@VM-16-15-centos:/data# redis-cli --cluster del-node 10.0.16.15:6388 e330f45d41eed0676139f22e72fec428ca891f46

>>> Removing node e330f45d41eed0676139f22e72fec428ca891f46 from cluster 10.0.16.15:6388

>>> Sending CLUSTER FORGET messages to the cluster...

>>> Sending CLUSTER RESET SOFT to the deleted node.

root@VM-16-15-centos:/data#

# After the deletion, reallocate the slots

redis-cli --cluster reshard 10.0.16.15:6381

root@VM-16-15-centos:/data# redis-cli --cluster reshard 10.0.16.15:6381

>>> Performing Cluster Check (using node 10.0.16.15:6381)

S: dc8db285420c6745340ef6844a27b57819be72d0 10.0.16.15:6381

slots: (0 slots) slave

replicates 5f48a6b99d0cfc5b18491916cf6c58b33dc485e0

M: ce79c8b8072d79bd16ad79ecc65c6c0949be2a79 10.0.16.15:6387

slots:[0-1364],[5461-6826],[10923-12287] (4096 slots) master

M: 27cb66abc15e4f17dfc4e1fbb9ae18dcf3289b85 10.0.16.15:6383

slots:[12288-16383] (4096 slots) master

1 additional replica(s)

M: 8b01ad849a90a83e6195a7158f5aaa312c386d53 10.0.16.15:6382

slots:[6827-10922] (4096 slots) master

1 additional replica(s)

S: 26c491cbf52ef2bc240d173d09e06e48d89d1817 10.0.16.15:6385

slots: (0 slots) slave

replicates 27cb66abc15e4f17dfc4e1fbb9ae18dcf3289b85

M: 5f48a6b99d0cfc5b18491916cf6c58b33dc485e0 10.0.16.15:6386

slots:[1365-5460] (4096 slots) master

1 additional replica(s)

S: 8af142737cf096211cebfa384912b0b3b4482e51 10.0.16.15:6384

slots: (0 slots) slave

replicates 8b01ad849a90a83e6195a7158f5aaa312c386d53

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

How many slots do you want to move (from 1 to 16384)? 4096

What is the receiving node ID? 8b01ad849a90a83e6195a7158f5aaa312c386d53 # the node ID of 6382

Please enter all the source node IDs.

Type 'all' to use all the nodes as source nodes for the hash slots.

Type 'done' once you entered all the source nodes IDs.

Source node #1: ce79c8b8072d79bd16ad79ecc65c6c0949be2a79 # this is 6387, the node to be removed, not 6388
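Before a master is removed, it is worth confirming that it no longer holds any slots. As a sketch, the summary lines of `redis-cli --cluster check` can be parsed for that. The sample text is copied from section 1.4.2, where 6387 had no slots yet; `slots_by_node` and `safe_to_delete` are hypothetical helper names, and the regular expression only targets the summary line format shown in this post.

```python
import re

SUMMARY_PATTERN = re.compile(
    r"^(?P<address>\S+) \((?P<id>\w+)\.\.\.\) -> (?P<keys>\d+) keys \| "
    r"(?P<slots>\d+) slots \| (?P<replicas>\d+) slaves\.$"
)

# Summary lines copied from the check output in section 1.4.2.
CHECK_OUTPUT = """\
10.0.16.15:6387 (ce79c8b8...) -> 0 keys | 0 slots | 0 slaves.
10.0.16.15:6383 (27cb66ab...) -> 1 keys | 5461 slots | 1 slaves.
10.0.16.15:6382 (8b01ad84...) -> 1 keys | 5462 slots | 1 slaves.
10.0.16.15:6386 (5f48a6b9...) -> 2 keys | 5461 slots | 1 slaves.
[OK] 4 keys in 4 masters.
"""


def slots_by_node(check_output: str) -> dict:
    """Map each master address to its slot count; other lines are skipped."""
    result = {}
    for line in check_output.splitlines():
        match = SUMMARY_PATTERN.match(line.strip())
        if match:
            result[match.group("address")] = int(match.group("slots"))
    return result


def safe_to_delete(address: str, check_output: str) -> bool:
    """True only if the node is listed and holds zero slots."""
    return slots_by_node(check_output).get(address) == 0


if __name__ == "__main__":
    print(safe_to_delete("10.0.16.15:6387", CHECK_OUTPUT))
    print(safe_to_delete("10.0.16.15:6382", CHECK_OUTPUT))
```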

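# Then delete the seventh node, 6387. Command: redis-cli --cluster del-node ip:port node-ID-of-6387

redis-cli --cluster del-node 10.0.16.15:6387 ce79c8b8072d79bd16ad79ecc65c6c0949be2a79

That is how to set up a Redis Cluster. Following the steps above should get you a working cluster. Likes and bookmarks are welcome, so the post is easy to find when you need it later.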
