大模型tokenizer词句连贯性问题

现象

from transformers import LlamaTokenizerFast

import numpy as np

tokenizer = LlamaTokenizerFast.from_pretrained("heilerich/llama-tokenizer-fast")

origin_prompt = "Hi, I'm Minwoo Park from seoul, korea."

ids = tokenizer.encode(origin_prompt)

print("转换token ids", ids)

def run(num=7):

print("=" * 20)

print(f"此时token 被分为 {num}组")

ids_list = np.array_split(ids, num)

result_str = ""

for ids_ in ids_list:

id_str = tokenizer.decode(ids_)

print("id: str ", ids_, id_str)

result_str += id_str

print("最终结果", result_str)

print("原始数据", origin_prompt)

run(4)

run(7)

run(10)

转换token ids [1, 6324, 29892, 306, 29915, 29885, 3080, 827, 29877, 4815, 515, 409, 5059, 29892, 413, 487, 29874, 29889]

====================

此时token 被分为 4组

id: str [ 1 6324 29892 306 29915] <s> Hi, I'

id: str [29885 3080 827 29877 4815] m Minwoo Park

id: str [ 515 409 5059 29892] from seoul,

id: str [ 413 487 29874 29889] korea.

最终结果 <s> Hi, I'm Minwoo Parkfrom seoul,korea.

原始数据 Hi, I'm Minwoo Park from seoul, korea.

====================

此时token 被分为 7组

id: str [ 1 6324 29892] <s> Hi,

id: str [ 306 29915 29885] I'm

id: str [ 3080 827 29877] Minwoo

id: str [4815 515 409] Park from se

id: str [ 5059 29892] oul,

id: str [413 487] kore

id: str [29874 29889] a.

最终结果 <s> Hi,I'mMinwooPark from seoul,korea.

原始数据 Hi, I'm Minwoo Park from seoul, korea.

====================

此时token 被分为 10组

id: str [ 1 6324] <s> Hi

id: str [29892 306] , I

id: str [29915 29885] 'm

id: str [3080 827] Minwo

id: str [29877 4815] o Park

id: str [515 409] from se

id: str [ 5059 29892] oul,

id: str [413 487] kore

id: str [29874] a

id: str [29889] .

最终结果 <s> Hi, I'mMinwoo Parkfrom seoul,korea.

原始数据 Hi, I'm Minwoo Park from seoul, korea.

解决办法

from transformers import LlamaTokenizerFast

import numpy as np

tokenizer = LlamaTokenizerFast.from_pretrained("heilerich/llama-tokenizer-fast")

origin_prompt = "Hi, I'm Minwoo Park from seoul, korea."

ids = tokenizer.encode(origin_prompt)[1:]

print("转换token ids", ids)

def run1(num=7):

print("=" * 20)

print(f"此时token 被分为 {num}组")

ids_list = np.array_split(ids, num)

result_str = ""

tmp_ids = []

for ids_ in ids_list:

tmp_ids.extend(ids_)

id_str = tokenizer.decode(tmp_ids).replace(result_str, "")

print("id: str ", ids_, id_str)

result_str += id_str

print("最终结果", result_str)

print("原始数据", origin_prompt)

run1(4)

run1(7)

run1(10)

转换token ids [6324, 29892, 306, 29915, 29885, 3080, 827, 29877, 4815, 515, 409, 5059, 29892, 413, 487, 29874, 29889]

====================

此时token 被分为 4组

id: str [ 6324 29892 306 29915 29885] Hi, I'm

id: str [ 3080 827 29877 4815] Minwoo Park

id: str [ 515 409 5059 29892] from seoul,

id: str [ 413 487 29874 29889] korea.

最终结果 Hi, I'm Minwoo Park from seoul, korea.

原始数据 Hi, I'm Minwoo Park from seoul, korea.

====================

此时token 被分为 7组

id: str [ 6324 29892 306] Hi, I

id: str [29915 29885 3080] 'm Min

id: str [ 827 29877 4815] woo Park

id: str [515 409] from se

id: str [ 5059 29892] oul,

id: str [413 487] kore

id: str [29874 29889] a.

最终结果 Hi, I'm Minwoo Park from seoul, korea.

原始数据 Hi, I'm Minwoo Park from seoul, korea.

====================

此时token 被分为 10组

id: str [ 6324 29892] Hi,

id: str [ 306 29915] I'

id: str [29885 3080] m Min

id: str [ 827 29877] woo

id: str [4815 515] Park from

id: str [ 409 5059] seoul

id: str [29892 413] , k

id: str [487] ore

id: str [29874] a

id: str [29889] .

最终结果 Hi, I'm Minwoo Park from seoul, korea.

原始数据 Hi, I'm Minwoo Park from seoul, korea.

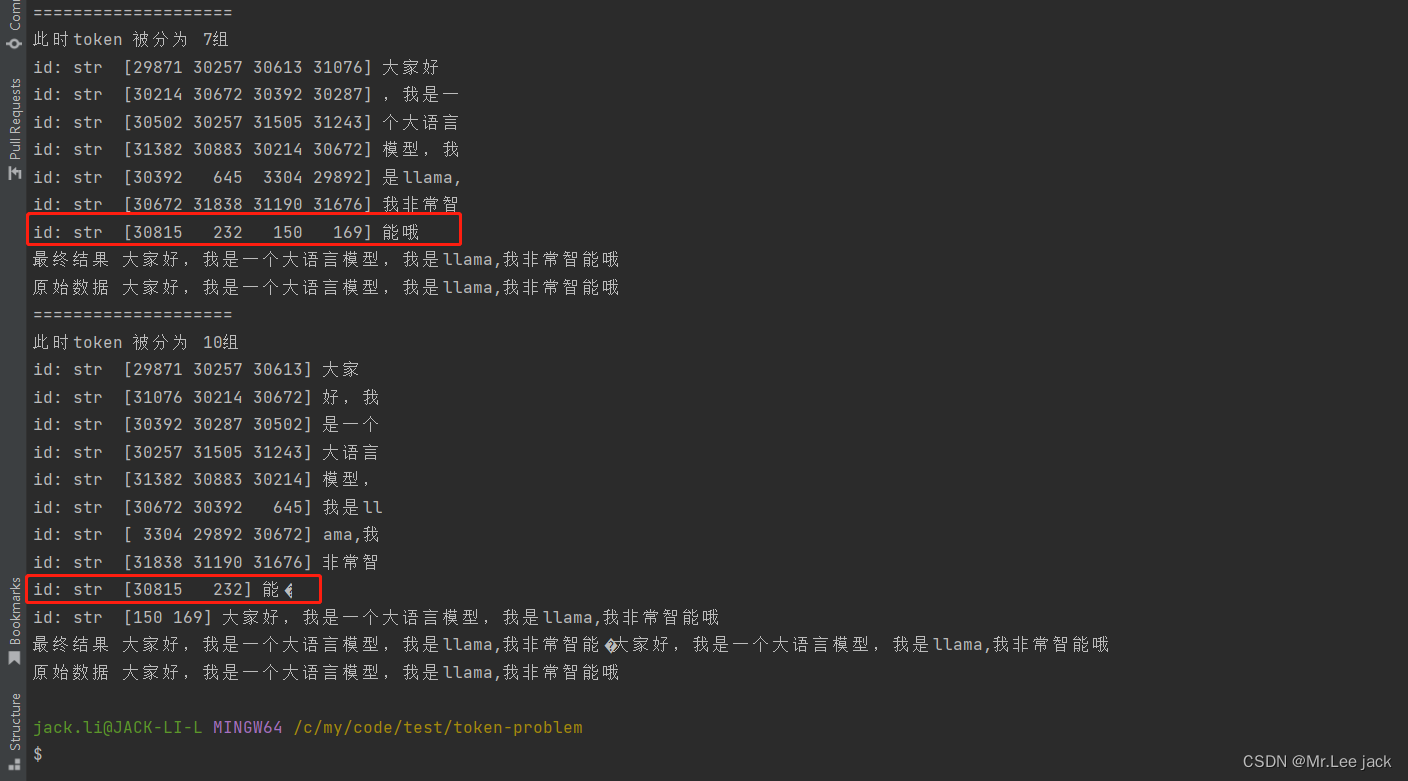

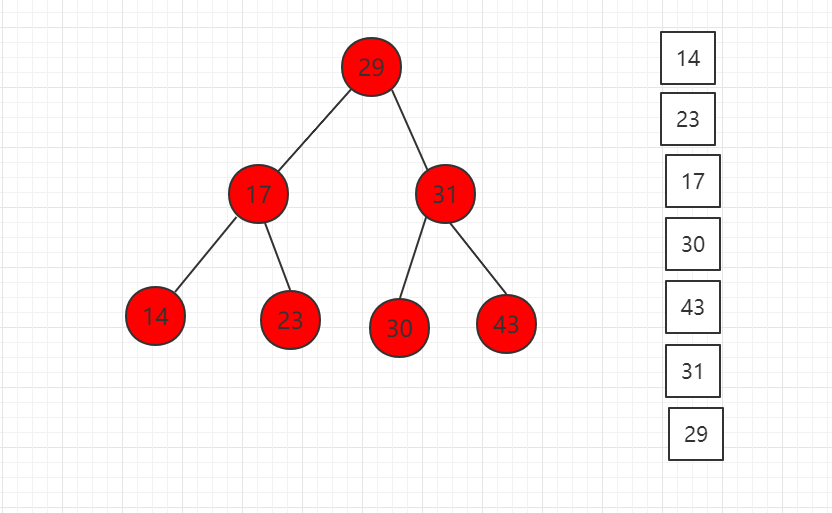

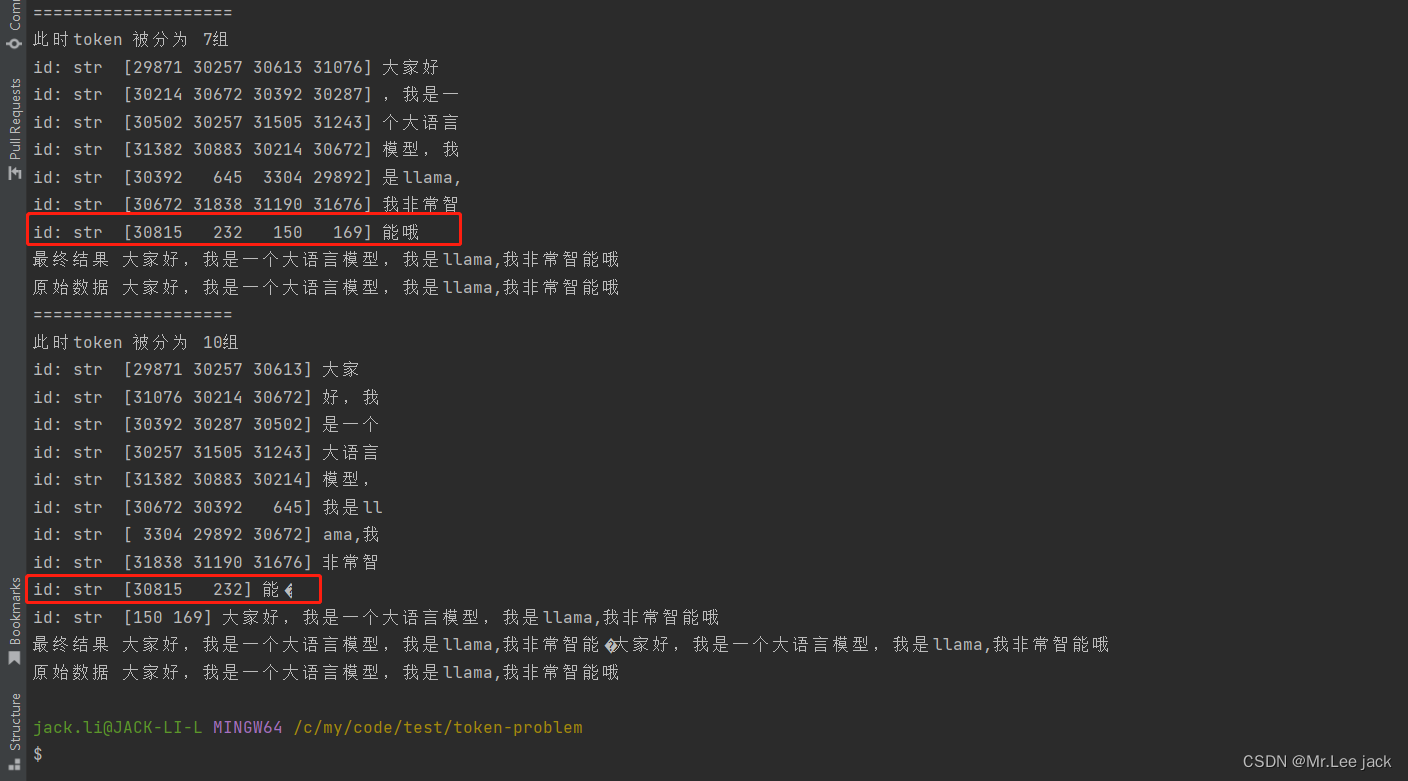

- 中文效果

- 一个哦字对应3个token,但是被拆开了,所以为了不影响后面数据转换,需要对这部分之前的数据进行修复,修复完成后即可保证后面的数据