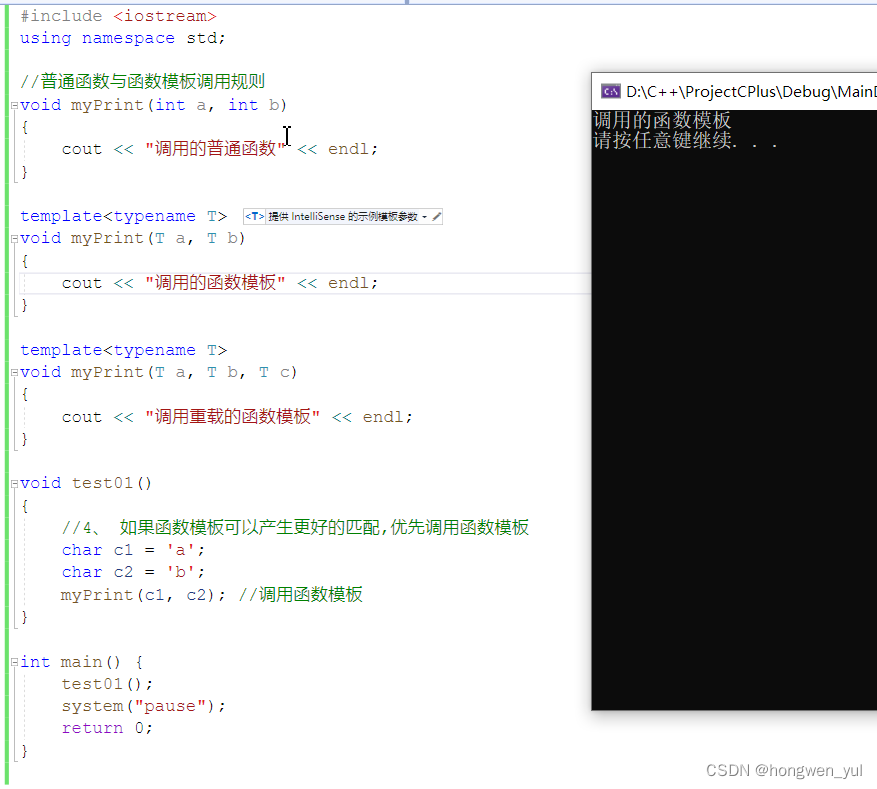

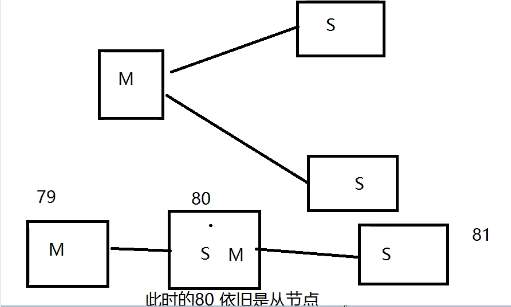

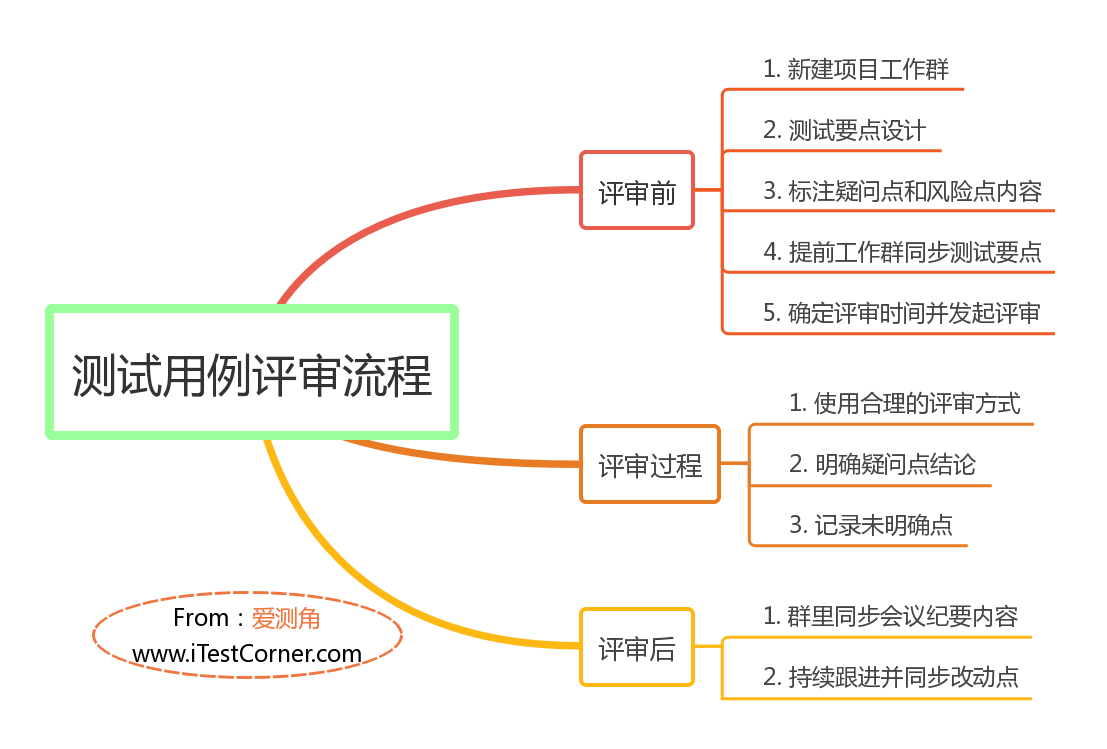

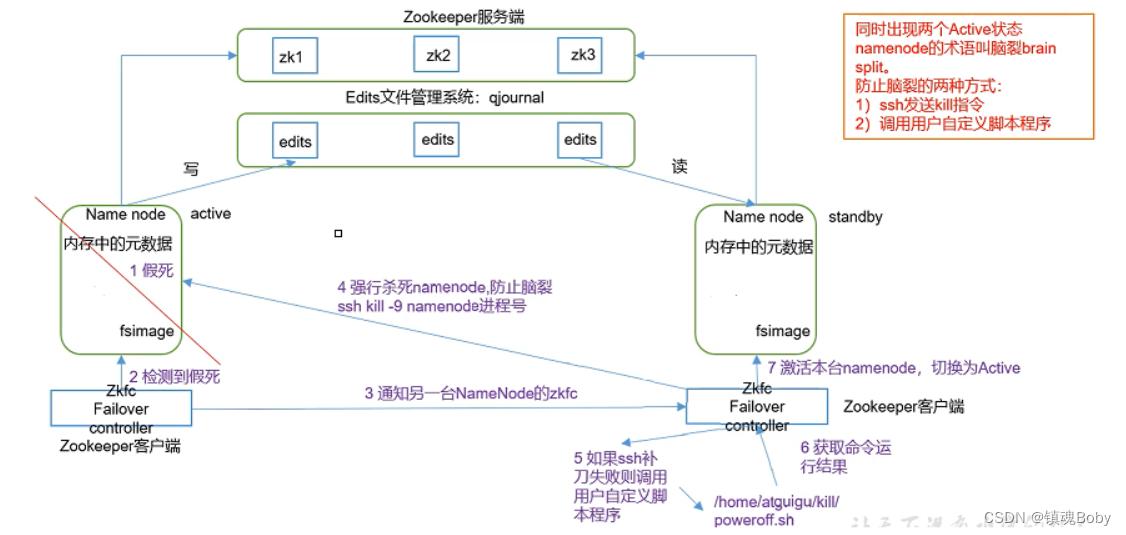

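6-zookeeper-hadoop-ha: failover mechanism, brief overview of the principle:

HA overview (2.x architecture).

1) HA (High Availability) means the service stays up around the clock (24/7, uninterrupted).

1. ZooKeeper provides the coordination service and the notifications.

2. zkfc is a ZooKeeper client that connects the NameNode to ZooKeeper and manages the NameNode's state.

3. In step 3, the other client is notified through ZooKeeper.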
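The three points above can be pictured with a toy analogy. This is only a sketch and not how Hadoop implements it: a local directory stands in for the lock znode that the zkfc processes compete for, and the function names `try_become_active` and `session_lost` are made up for illustration.

```shell
#!/bin/sh
# Toy analogy only: a directory plays the role of the lock znode in ZooKeeper.

# try_become_active LOCK_DIR NN_ID
# mkdir is atomic, so only one caller can create the lock and become active.
try_become_active() {
    if mkdir "$1" 2>/dev/null; then
        echo "$2" > "$1/owner"
        echo "$2: active"
    else
        echo "$2: standby (lock held by $(cat "$1/owner"))"
        return 1
    fi
}

# session_lost LOCK_DIR
# Simulates the active side losing its ZooKeeper session: the lock disappears.
session_lost() {
    rm -rf "$1"
}

LOCK="${TMPDIR:-/tmp}/ha-lock-demo.$$"
try_become_active "$LOCK" nn1            # nn1 gets the lock
try_become_active "$LOCK" nn2 || true    # nn2 stays standby
session_lost "$LOCK"                     # the zkfc of nn1 "dies"
try_become_active "$LOCK" nn2            # nn2 takes over
session_lost "$LOCK"
```

In the real cluster the lock lives in ZooKeeper and the standby side is notified when it goes away, instead of retrying by hand as in this demo.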

HDFS-HA cluster configuration

Planning

| hadoop102 | hadoop103 | hadoop104 |
|---|---|---|
| NameNode | NameNode | |
| JournalNode | JournalNode | JournalNode |
| DataNode | DataNode | DataNode |
| ZK | ZK | ZK |
| zkfc | zkfc | |
| ResourceManager | | |
| NodeManager | NodeManager | NodeManager |

1. Copy the existing Hadoop installation.

[root@hadoop102 module]# mkdir ha

[root@hadoop102 module]# cp -r hadoop-3.1.4/ ha/

[root@hadoop102 hadoop]# pwd

/opt/module/ha/hadoop-3.1.4/etc/hadoop

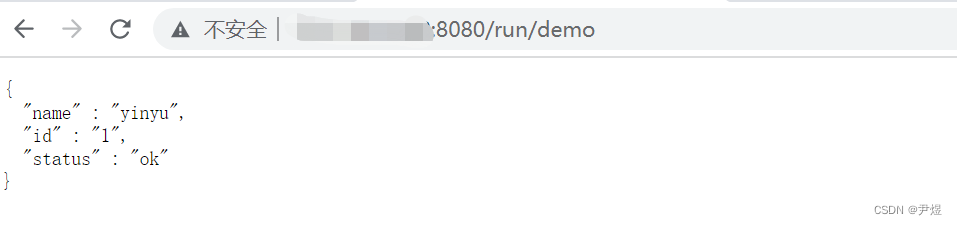

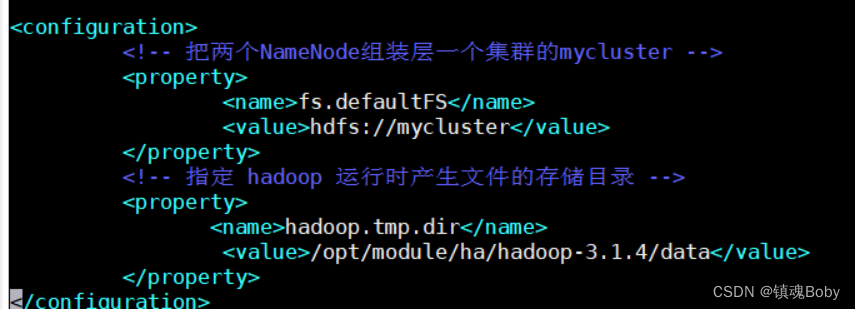

2. Configure core-site.xml

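core-site.xml

<property>
<name>fs.defaultFS</name>
<value>hdfs://mycluster</value>
</property>
<property>
<name>hadoop.tmp.dir</name>
<value>/opt/module/ha/hadoop-3.1.4/data</value>
</property>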

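3. Configure hdfs-site.xml

hdfs-site.xml

<property>
<name>dfs.nameservices</name>
<value>mycluster</value>
</property>
<property>
<name>dfs.ha.namenodes.mycluster</name>
<value>nn1,nn2</value>
</property>
<property>
<name>dfs.namenode.rpc-address.mycluster.nn1</name>
<value>hadoop102:9000</value>
</property>
<property>
<name>dfs.namenode.rpc-address.mycluster.nn2</name>
<value>hadoop103:9000</value>
</property>
<property>
<name>dfs.namenode.http-address.mycluster.nn1</name>
<value>hadoop102:50070</value>
</property>
<property>
<name>dfs.namenode.http-address.mycluster.nn2</name>
<value>hadoop103:50070</value>
</property>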

<!-- Location on the JournalNodes where the NameNode metadata (edit log) is stored -->

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://hadoop102:8485;hadoop103:8485;hadoop104:8485/mycluster</value>

</property>

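<property>
<name>dfs.ha.fencing.methods</name>
<value>sshfence</value>
</property>
<property>
<name>dfs.ha.fencing.ssh.private-key-files</name>
<value>/root/.ssh/id_rsa</value>
</property>
<property>
<name>dfs.journalnode.edits.dir</name>
<value>/opt/module/ha/hadoop-3.1.4/data/jn</value>
</property>
<property>
<name>dfs.permissions.enabled</name>
<value>false</value>
</property>
<property>
<name>dfs.client.failover.proxy.provider.mycluster</name>
<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
</property>
<property>
<name>dfs.ha.automatic-failover.enabled</name>
<value>true</value>
</property>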
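The `dfs.namenode.shared.edits.dir` value is easy to mistype because the JournalNode hosts are separated by semicolons. A small helper can assemble it from the host list. This is a minimal sketch: the function name `build_qjournal_uri` is made up here, and port 8485 is simply the one used in these notes.

```shell
#!/bin/sh
# build_qjournal_uri JOURNAL_ID HOST...
# Prints qjournal://host1:8485;host2:8485;.../JOURNAL_ID
build_qjournal_uri() {
    journal_id=$1
    shift
    hosts=""
    for h in "$@"; do
        # Prefix with the accumulated list plus ";" only when it is non-empty.
        hosts="${hosts:+$hosts;}$h:8485"
    done
    echo "qjournal://$hosts/$journal_id"
}

build_qjournal_uri mycluster hadoop102 hadoop103 hadoop104
```

The printed string can be pasted into the `<value>` of `dfs.namenode.shared.edits.dir`.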

4. Distribute ha to the other nodes

[root@hadoop102 module]# xsync ha

5. Start the QJM cluster (the JournalNodes), which is where the NameNode edit log is stored.

[root@hadoop102 hadoop-3.1.4]# sbin/hadoop-daemons.sh start journalnode

WARNING: Use of this script to start HDFS daemons is deprecated.

WARNING: Attempting to execute replacement "hdfs --workers --daemon start" instead.

[root@hadoop102 hadoop-3.1.4]# hdfs --workers --daemon start journalnode

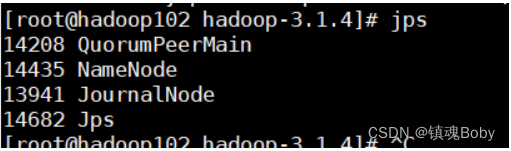

[root@hadoop102 zookeeper-3.4.10]# jpsall

=============== 192.168.1.102 ===============

14208 QuorumPeerMain

13941 JournalNode

14255 Jps

=============== 192.168.1.103 ===============

13249 QuorumPeerMain

13174 JournalNode

13287 Jps

=============== 192.168.1.104 ===============

13280 Jps

13251 QuorumPeerMain

13175 JournalNode
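Before formatting, it helps to confirm that a majority of the JournalNodes is really up, since the quorum journal needs more than half of them. A minimal sketch that counts them in the output above (`jpsall` is the custom script used in these notes, and the function names are made up for illustration):

```shell
#!/bin/sh
# count_journalnodes: reads jpsall-style output on stdin,
# prints how many lines report a JournalNode process.
count_journalnodes() {
    awk '$2 == "JournalNode" { n++ } END { print n + 0 }'
}

# has_quorum RUNNING TOTAL: succeeds when more than half of TOTAL are running.
has_quorum() {
    [ "$1" -gt $(( $2 / 2 )) ]
}

# Sample input copied from the jpsall output shown above.
sample() {
cat <<'EOF'
=============== 192.168.1.102 ===============
14208 QuorumPeerMain
13941 JournalNode
14255 Jps
=============== 192.168.1.103 ===============
13249 QuorumPeerMain
13174 JournalNode
13287 Jps
=============== 192.168.1.104 ===============
13280 Jps
13251 QuorumPeerMain
13175 JournalNode
EOF
}

running=$(sample | count_journalnodes)
if has_quorum "$running" 3; then
    echo "JournalNode quorum OK ($running/3)"
else
    echo "JournalNode quorum NOT reached ($running/3)"
fi
```

On the cluster the sample would be replaced by `jpsall | count_journalnodes`.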

6. Format the NameNode

[root@hadoop102 hadoop-3.1.4]# bin/hdfs namenode -format

7. Start the NameNode

[root@hadoop102 hadoop-3.1.4]# hdfs --daemon start namenode

An error occurred here, so I paused (this needs to be resumed later):

[root@hadoop102 hadoop-3.1.4]# hdfs --workers --daemon stop

8. On hadoop103, pull the NameNode metadata

[root@hadoop103 hadoop-3.1.4]# bin/hdfs namenode -bootstrapStandby

9. Start the daemons on hadoop103

[root@hadoop103 hadoop-3.1.4]# sbin/hadoop-daemon.sh start namenode

[root@hadoop103 hadoop-3.1.4]# sbin/hadoop-daemon.sh start datanode

10. Manual switch out of standby: transition nn1 to active

[root@hadoop102 hadoop-3.1.4]# bin/hdfs haadmin -transitionToActive nn1
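After the transition, the state of each NameNode can be checked with `hdfs haadmin -getServiceState`. A minimal sketch that loops over the NameNode IDs and prints the active one; the function names are made up for illustration, and `hdfs` is assumed to be on the PATH of a configured node.

```shell
#!/bin/sh
# service_state NN_ID: asks the NameNode for its HA state.
service_state() {
    hdfs haadmin -getServiceState "$1" 2>/dev/null
}

# find_active NN_ID...: prints the first NameNode ID that reports "active".
# Returns non-zero when none of them is active.
find_active() {
    for nn in "$@"; do
        if [ "$(service_state "$nn")" = "active" ]; then
            echo "$nn"
            return 0
        fi
    done
    return 1
}

# Usage on a configured cluster node (not executed here):
#   find_active nn1 nn2 || echo "no active NameNode"
```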

Automatic failover test

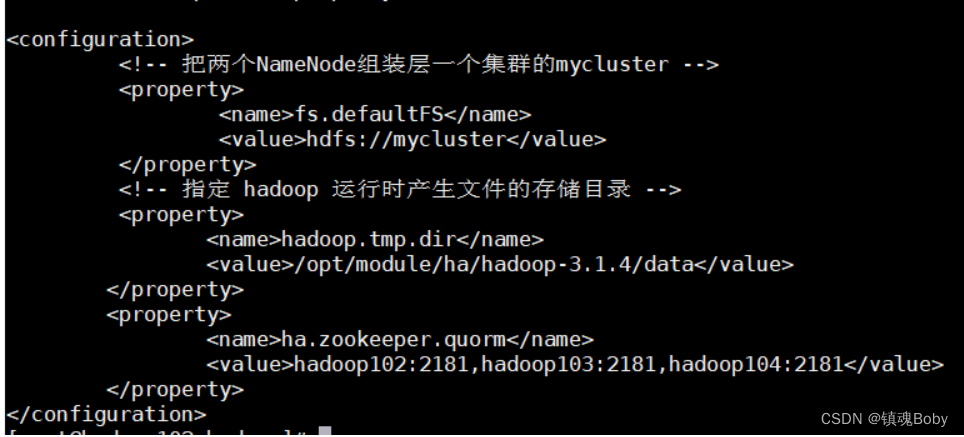

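Modify core-site.xml

<property>
<name>fs.defaultFS</name>
<value>hdfs://mycluster</value>
</property>
<property>
<name>hadoop.tmp.dir</name>
<value>/opt/module/ha/hadoop-3.1.4/data</value>
</property>
<property>
<name>ha.zookeeper.quorum</name>
<value>hadoop102:2181,hadoop103:2181,hadoop104:2181</value>
</property>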

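Modify hdfs-site.xml

<property>
<name>dfs.nameservices</name>
<value>mycluster</value>
</property>
<property>
<name>dfs.ha.namenodes.mycluster</name>
<value>nn1,nn2</value>
</property>
<property>
<name>dfs.namenode.rpc-address.mycluster.nn1</name>
<value>hadoop102:9000</value>
</property>
<property>
<name>dfs.namenode.rpc-address.mycluster.nn2</name>
<value>hadoop103:9000</value>
</property>
<property>
<name>dfs.namenode.http-address.mycluster.nn1</name>
<value>hadoop102:50070</value>
</property>
<property>
<name>dfs.namenode.http-address.mycluster.nn2</name>
<value>hadoop103:50070</value>
</property>

<!-- Location on the JournalNodes where the NameNode metadata (edit log) is stored -->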

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://hadoop102:8485;hadoop103:8485;hadoop104:8485/mycluster</value>

</property>

<!-- Fencing: only one server may respond to clients at any moment, so there are never two active NameNodes and no split-brain -->

<property>

<name>dfs.ha.fencing.methods</name>

<value>sshfence</value>

</property>

<!-- The fencing mechanism needs passwordless (key-based) SSH login -->

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/root/.ssh/id_rsa</value>

</property>

<!-- Storage directory of the JournalNode servers -->

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/opt/module/ha/hadoop-3.1.4/data/jn</value>

</property>

<!-- Disable permission checking -->

<property>

<name>dfs.permissions.enabled</name>

<value>false</value>

</property>

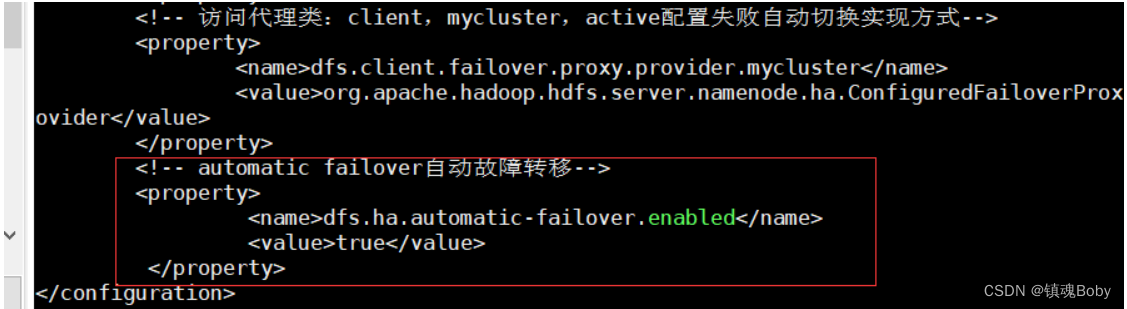

<!-- Client failover proxy provider: the class clients use to find the active NameNode of mycluster and to switch automatically when it fails -->

<property>

<name>dfs.client.failover.proxy.provider.mycluster</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<!-- Enable automatic failover -->

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>
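With this many properties, a copy-paste slip such as giving nn1 and nn2 the same address is easy to miss. A minimal sketch of a sanity check that reads values out of a site file with awk; `get_prop` and `check_distinct_rpc` are names made up here, and the parser only handles the simple `<name>`/`<value>` layout used in these notes.

```shell
#!/bin/sh
# get_prop FILE NAME: prints the <value> that follows <name>NAME</name>.
get_prop() {
    awk -v want="$2" '
        match($0, /<name>[^<]*<\/name>/) {
            name = substr($0, RSTART + 6, RLENGTH - 13)
            found = (name == want)
        }
        found && match($0, /<value>[^<]*<\/value>/) {
            print substr($0, RSTART + 7, RLENGTH - 15)
            exit
        }
    ' "$1"
}

# check_distinct_rpc FILE NAMESERVICE
# Succeeds only when nn1 and nn2 both have an RPC address and the two differ.
check_distinct_rpc() {
    a=$(get_prop "$1" "dfs.namenode.rpc-address.$2.nn1")
    b=$(get_prop "$1" "dfs.namenode.rpc-address.$2.nn2")
    [ -n "$a" ] && [ -n "$b" ] && [ "$a" != "$b" ]
}

# Demo on a throwaway file; on the cluster, point it at etc/hadoop/hdfs-site.xml.
conf=$(mktemp)
cat > "$conf" <<'EOF'
<property>
<name>dfs.namenode.rpc-address.mycluster.nn1</name>
<value>hadoop102:9000</value>
</property>
<property>
<name>dfs.namenode.rpc-address.mycluster.nn2</name>
<value>hadoop103:9000</value>
</property>
EOF
if check_distinct_rpc "$conf" mycluster; then
    echo "nn1 and nn2 use different RPC addresses"
fi
rm -f "$conf"
```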

Distribute etc to the other nodes

[root@hadoop102 hadoop-3.1.4]# xsync etc/

Start the QJM cluster

[root@hadoop102 hadoop-3.1.4]# hdfs --workers --daemon start journalnode

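Format; remember to delete data and logs first. If the old directories are still in place, the format command asks for confirmation:

Re-format filesystem in Storage Directory root= /opt/module/hadoop-3.1.4/data/dfs/name; location= null ? (Y or N)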

[root@hadoop102 hadoop-3.1.4]# bin/hdfs namenode -format

Initialize the HA state in ZooKeeper

[root@hadoop102 hadoop-3.1.4]# bin/hdfs zkfc -formatZK

Sync the standby NameNode

[root@hadoop103 hadoop-3.1.4]# bin/hdfs namenode -bootstrapStandby

Start the HDFS services

[root@hadoop102 hadoop-3.1.4]# sbin/start-dfs.sh

Start the NameNode

[root@hadoop103 hadoop-3.1.4]# sbin/hadoop-daemon.sh start namenode
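Once both NameNodes are running, one common way to exercise automatic failover is to kill the active NameNode and then check the state of the other one. The sketch below only shows how to pick the NameNode PID out of `jps` output. The function name is made up for illustration, and the destructive part is left as comments.

```shell
#!/bin/sh
# namenode_pid: reads `jps` output on stdin and prints the PID of the
# NameNode process, if there is one (SecondaryNameNode does not match).
namenode_pid() {
    awk '$2 == "NameNode" { print $1; exit }'
}

# Demo with made-up PIDs in the same format as jps output.
printf '2101 DataNode\n2045 NameNode\n2311 Jps\n' | namenode_pid

# On the host of the active NameNode (destructive, so not executed here):
#   pid=$(jps | namenode_pid)
#   kill -9 "$pid"
#   bin/hdfs haadmin -getServiceState nn2   # should report active if failover worked
```

If the other NameNode stays in standby, the zkfc log on that host is the first place to look, since the fencing step has to succeed before the switch happens.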

Learning source: https://space.bilibili.com/302417610/. If this infringes any rights, please contact QQ 3623472230 to have it removed.