1.背景和意义

由于地球的弹性结构,地球大尺度的质量迁移会导致地球产生负荷变形。地球的环境负载如大气、海洋、陆地水等的变化会使得固体地球产生明显的位移变化,为了准确研究有关地球物理信号,需要对弹性的负荷变形进行有效计算并扣除。通常利用格林函数法和球谐函数法,两者在数学上是等价的。

早期研究采用一维模型来表征地球内部结构及密度和速度等特征,当前进行负荷位移建模也普遍采用一维地球模型。

2.利用不同的地球模型计算love数和green函数

(1)地球模型的下载与处理

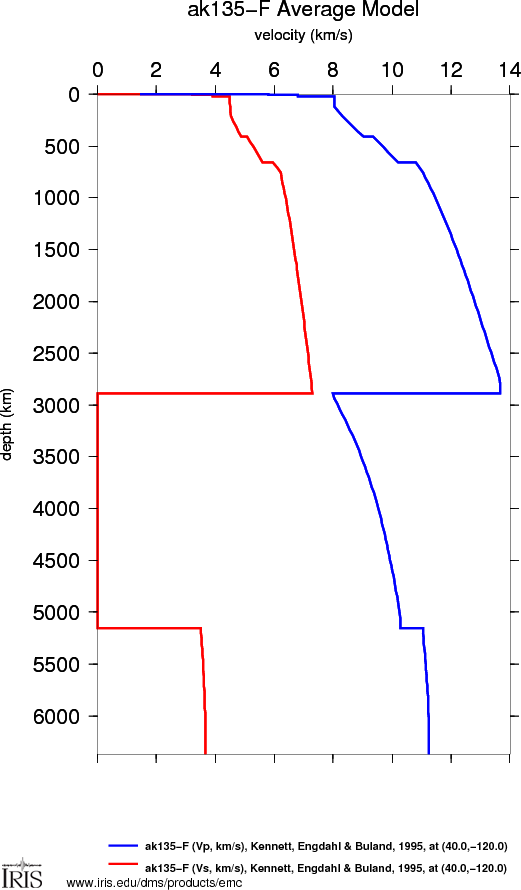

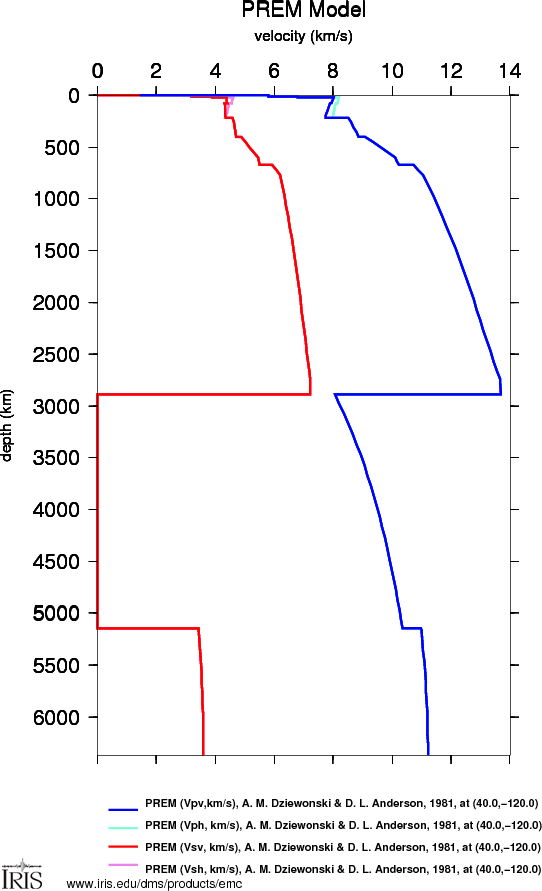

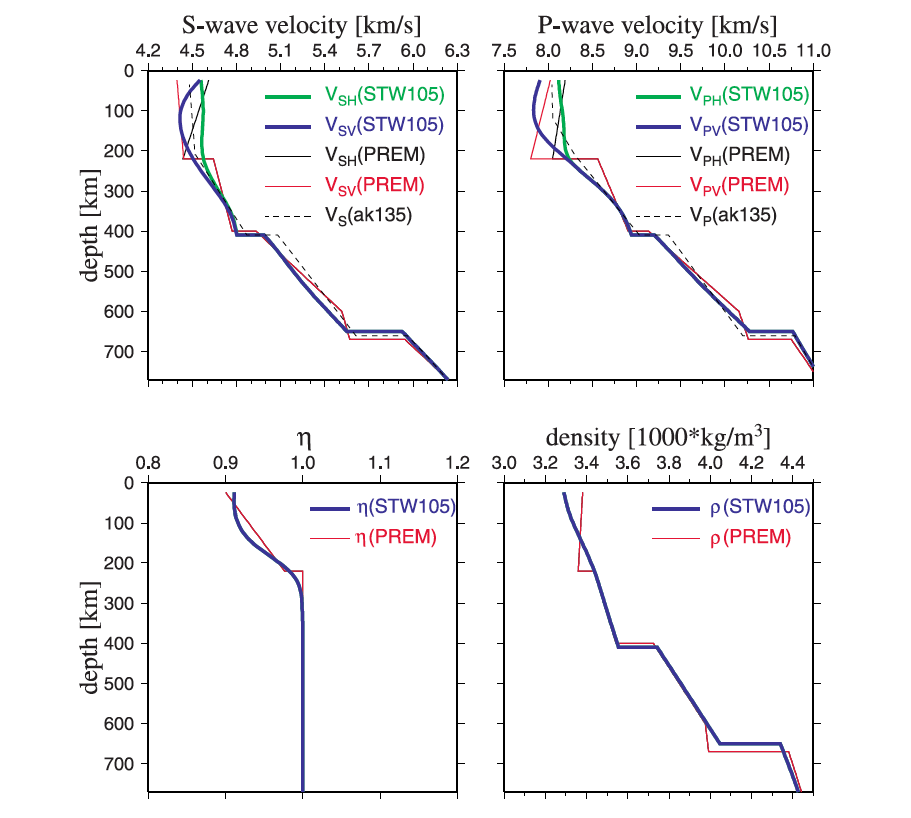

本文选择了PREM、STW105和AK135地球模型。地球模型的下载网址为

http://ds.iris.edu/ds/products/emc-ak135-f/不同地球模型的示意图

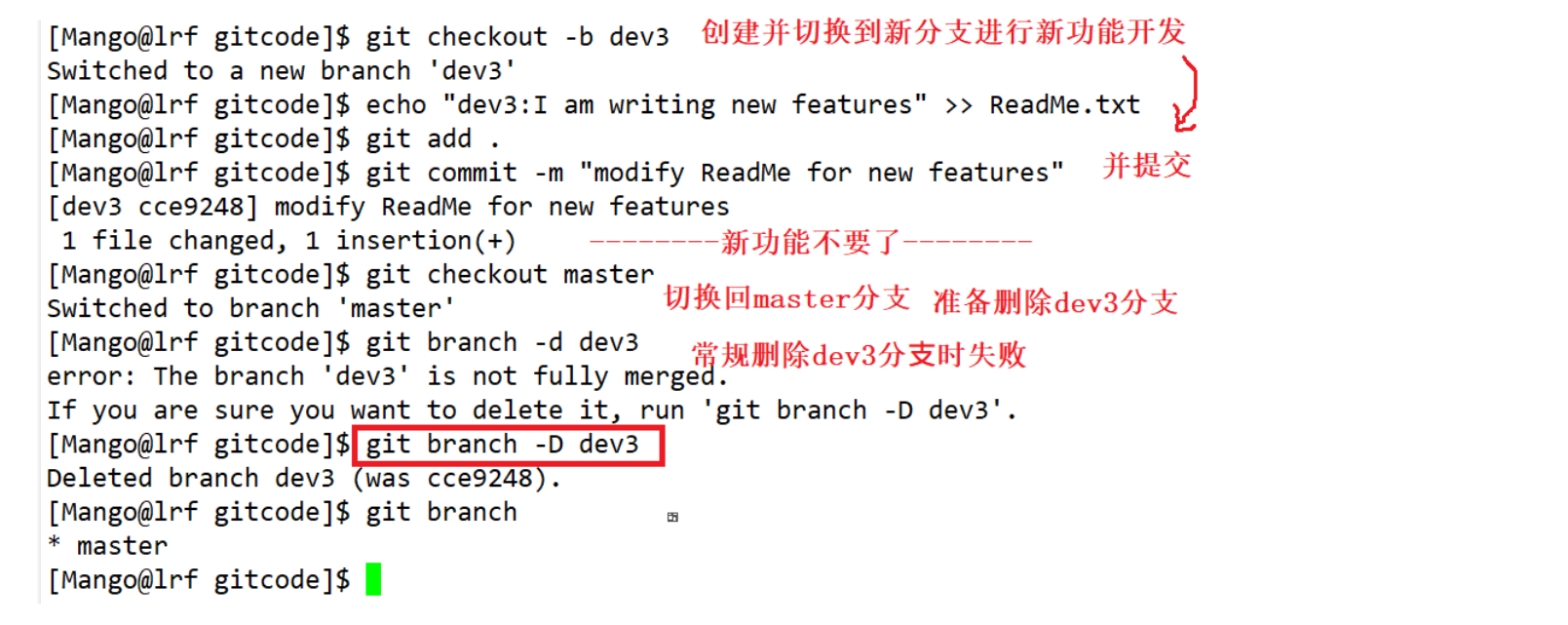

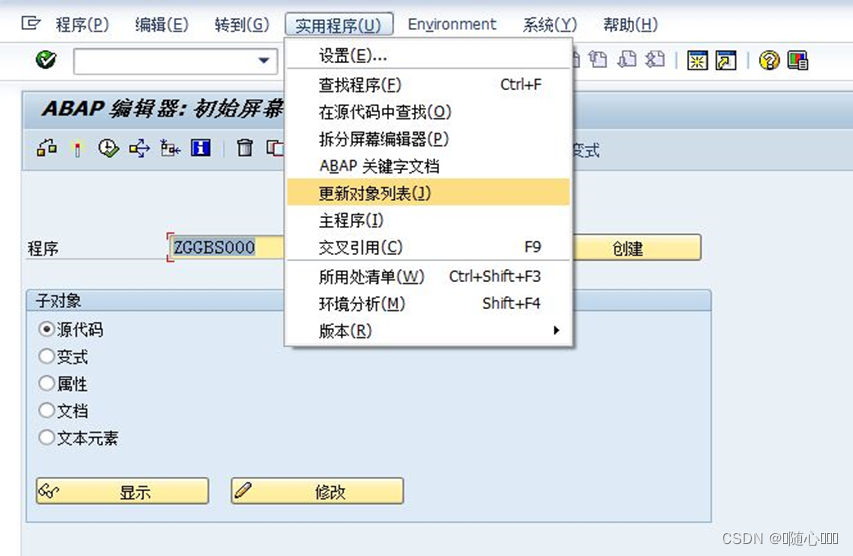

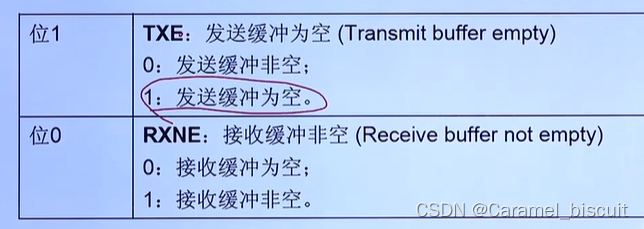

(2)计算love数(CF参考系)

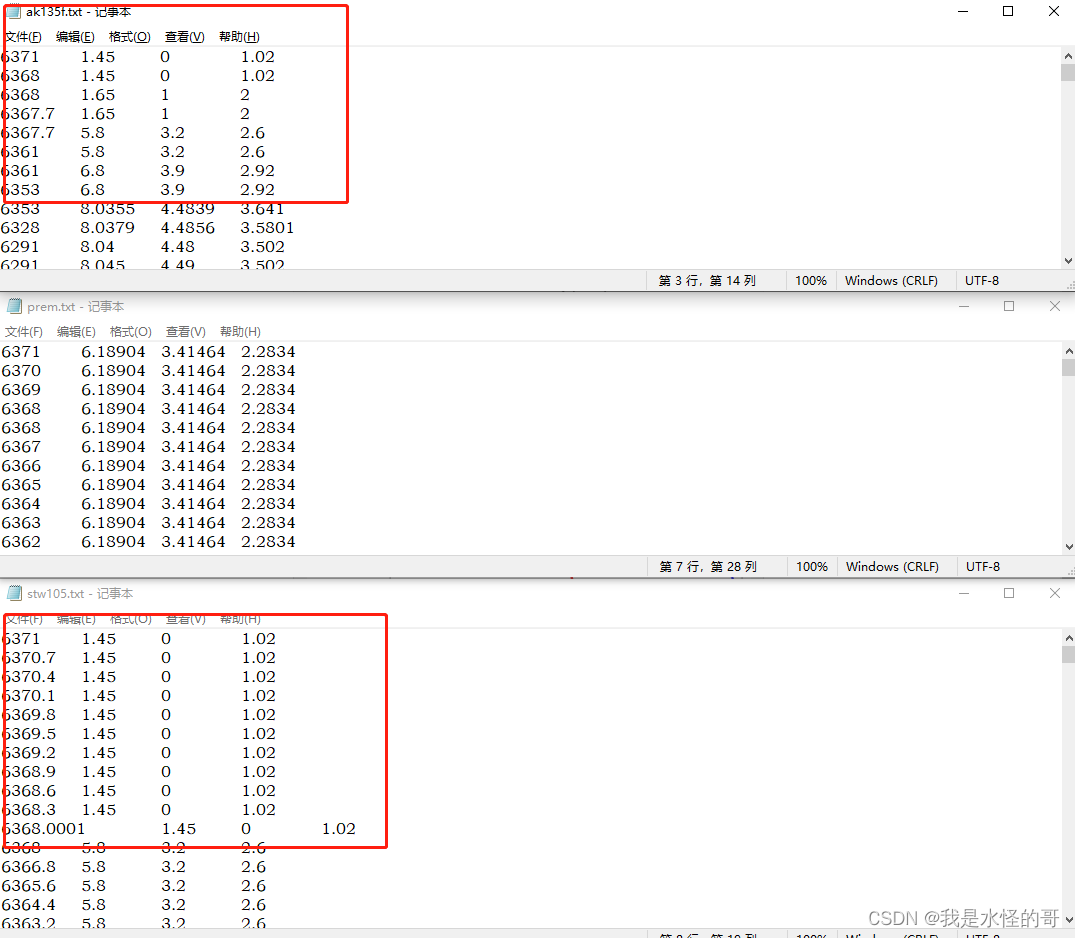

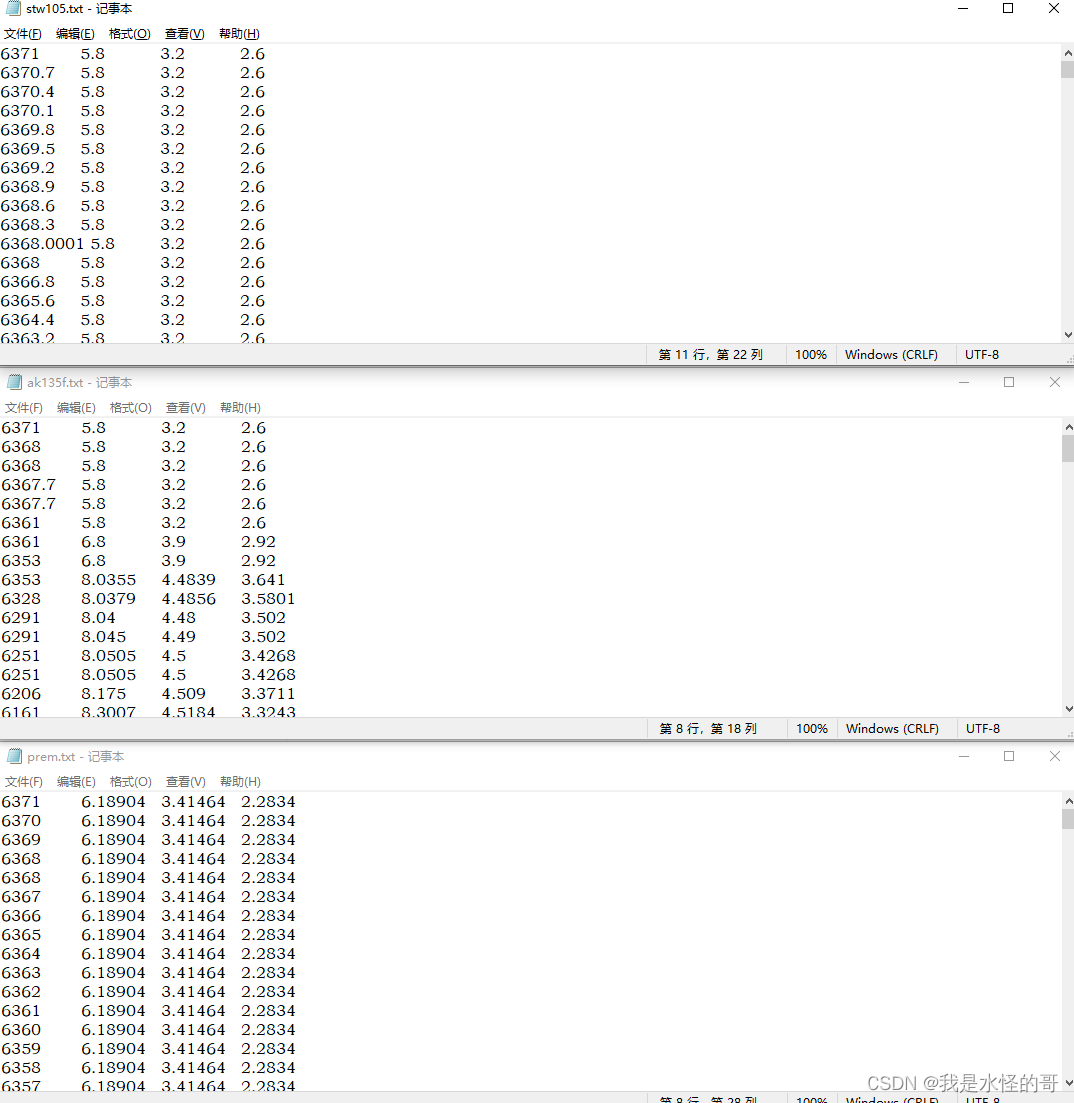

本文使用Martens et al. (2019)提供的LoadDef程序中的run_ln.py函数计算了PREM、STW105和AK135模型10000阶次的负荷勒夫 数,并将该结果作为输入数据,使用run_gf.py函数计算了 相应的负荷格林函数。所有的用于数据输入的地球模型包含地球半径(r)、Vp、Vs和密度p四个参数。前期的模型处理:如下图,由于部分的地球模型,比如ak135和是stw105,地表包含水体等,为了计算负荷love数,需要将这部分密度进行替换,通常是用地下5km部分的密度进行替换。

修改好的地球模型

采用LoadDef代码进行计算,得到不同地球模型的love数,可以看到三个地球模型得到的结果还是比较接近。

(3)计算位移格林函数(CF参考系)

使用run_gf.py函数计算了 相应的负荷格林函数

(4)准备负荷源

(4.1)GFZ提供的负荷产品

这里主要的负荷产品有:

- NTAL Non-Tidal Atmospheric Loading

- NTOL Non-Tidal Oceanic Loading

- HYDL Hydrological Loading

这里我以HYDL Hydrological Loading产品为例进行读取,其时间分辨率为24 h.

file = 'ESMGFZ_HYDL_cf_v1.3_24h_2023.nc';

ncdisp(file)

lon = ncread(file,'lon');

lat = ncread(file,'lat');

duV = ncread(file,'duV');

time = ncread(file,'time');

[lon,lat] = meshgrid(lon,lat);

O.lon = lon;

O.lat = lat;

O.rg = duV(:,:,12)';

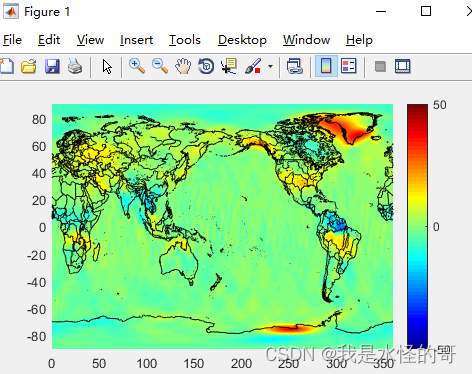

rg_plot(O)读取结果,单位:m。

(4.2)选择GRACE数据作为负荷源

将球谐系数转成格网值,代码:

clear;

%% envirionment setting

addpath functions

%% read in data

a = load('data/csr06_gsm_2004-12_2005-02.mat'); % SH

SH0 = cSH(a.SH); % convert to the data format used here.

SH0 = SH0(3); % only use the first month

%% filter

os_smooth.iGauss=300D3; %km

os_smooth.iPxMy=1;

[SH_filter] = CS_smooth (SH0,os_smooth);

%% check the results

% compare your figures with these in the check/ folder.

figure('position',[1,1,1000,600]);

imon = 1;

SH_t0 = SH0(imon);

SH_t1 = SH_filter(imon);

cran = [-50,50];

%%

[LLZ]=LLZ_forward_ns(SH_t1, 'to', 'ewh','resolution',1);

wzq_plot(LLZ)

caxis(cran);

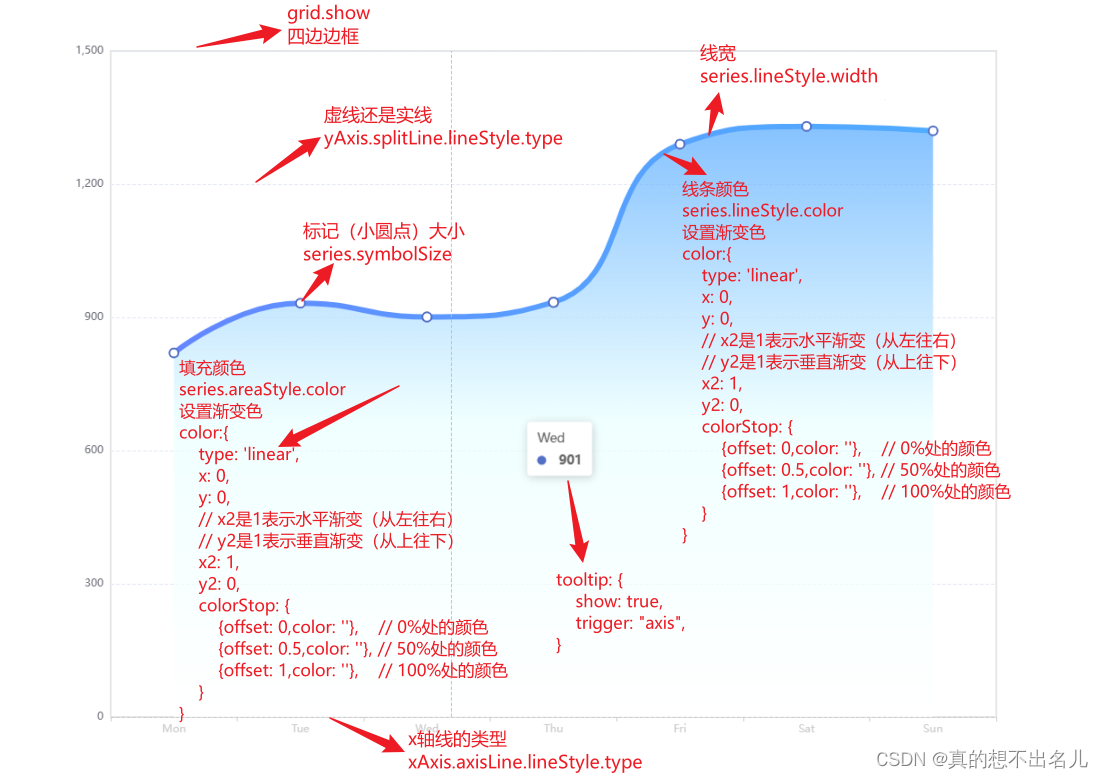

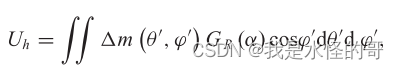

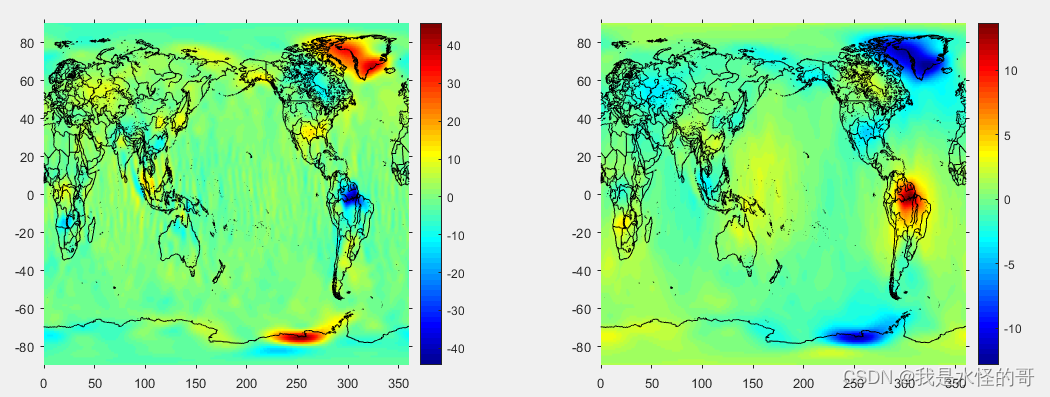

进行球谐函数积分可以得到以下的结果:左图:质量变化 ,cm;右图:垂直位移,mm

进行格林函数积分得到的结果:左图:质量变化,cm;右图:垂直位移,mm.

或者使用GRACE mascon数据,下载链接

下面是2005年10月的JPL GRACE mascon的质量变化信号

file = 'convgf_grace_rmTM1False_rmSM2False_20051001-20061001_GRACE_Tellus_RL06_JPL_ScalingFalse_20051016000000.nc';

ncdisp(file)

lon = ncread(file,'longitude');

lat = ncread(file,'latitude');

duV = ncread(file,'amplitude');

O.lon = reshape(lon,720,360);

O.lat = reshape(lat,720,360);

O.rg = reshape(duV,720,360).*100;

rg_plot(O)

caxis([-50,50])结果:单位:cm

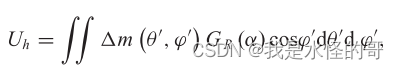

3. 进行格林函数卷积计算

原理:全球的格林函数,使用在地球的形状中心作为地球的参考系(CF)和地球的质心(CM)处计算的全球Green函数,基于弹性地球模型"AK135"(Kennett et al.,1995)给出的负荷 Love数值,由Wang(2012)提供,生成了以"cf"或"cm"命名的负荷love数。下面提供了基于ak135地球模型计算的负荷love数:

http://rz-vm115.gfz-potsdam.de:8080/repository/entry/show?entryid=2f0ff1d8-b0bb-4591-a5ea-44cea2d0baad一阶的负荷love数定义如下,下表给出了各个不同参考系下的转换关系:

| CE | CM | CF |

| h(1) = -0.28954527 | CE -1.0 | 2/3(h-l) = -0.26310049 |

| l(1) = 0.10510547 | CE -1.0 | -1/3(h-l) = 0.13155025 |

| k(1) = 0.0 | CE -1.0 | -1/3h-2/3l = 0.02644478 |

而且当阶趋于无穷时,有

h(inf) = -5.4630734

l(inf) = 0.000041954371

k(inf) = -0.000056284140计算垂直位移

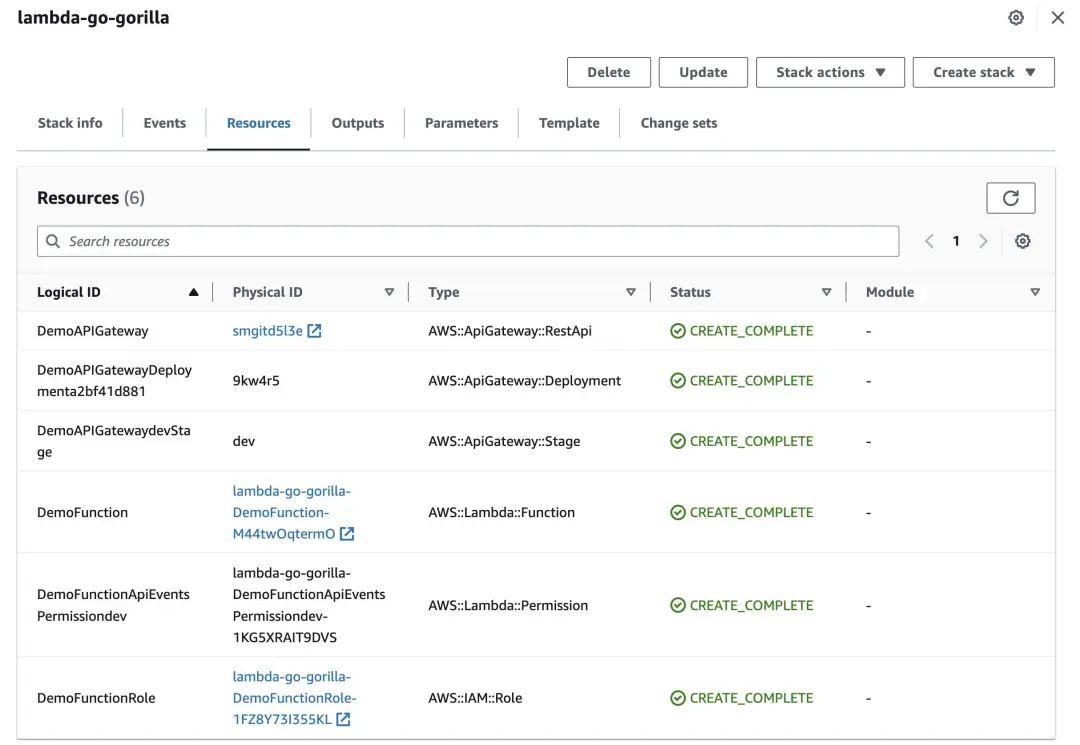

(1)利用Martens提供的LoadDef包,可以计算负荷变形,但是第一步需要准备负荷源。需要运行的函数为:gen_grace_tellus_rl06.py,位于【..\LoadDef-main\LoadDef-main\GRDGEN\load_files 】目录下,点击运行后,可以在目录【..\LoadDef-main\LoadDef-main\output\Grid_Files\nc\GRACE】得到每个月的负荷源,名称为【convgf_grace_rmTM1False_rmSM2False_20051001-20061001_GRACE_Tellus_RL06_JPL_ScalingFalse_20051016000000】

注意:使用的负荷源是GRACE mascon的质量变化,当然也可以根据需要换成其他的负荷源。

本文中计算的是全球的格网点,分辨率为181*361。为了计算所需要的计算点,可以将坐标点数据存放在一个txt文件中,并存放于文件目录下:【..\LoadDef-main\LoadDef-main\input\Station_Locations】

(2)进行全球的负荷卷积计算。下面本人经过修改的,可以计算全球区域负荷变形的python代码,计算垂直、东和北向的位移,需要添加到对应的目录下:【以下的计算使用的还是基于PREM地球模型计算得到的love数,如果需要比较不同地球模型的差异,可以替换程序读取的love数文件】

# -*- coding: utf-8 -*-

"""

Created on Mon Jul 31 14:36:40 2023

@author: 我是水怪的哥

"""

# IMPORT PRINT FUNCTION

from __future__ import print_function

# IMPORT MPI MODULE

from mpi4py import MPI

# MODIFY PYTHON PATH TO INCLUDE 'LoadDef' DIRECTORY

import sys

import os

sys.path.append(os.getcwd() + "/../")

# IMPORT PYTHON MODULES

import numpy as np

# import scipy as sc

import datetime

# 此处是添加函数的意思,即把需要的函数添加进来

from CONVGF.CN import load_convolution_region

from CONVGF.utility import read_region

# from CONVGF.utility import read_lsmask

# --------------- SPECIFY USER INPUTS --------------------- #

# Reference Frame (used for filenames) [Blewitt 2003]

rfm = "cf"

# Greens Function File

# :: May be load Green's function file output directly from run_gf.py (norm_flag = False)

# :: May be from a published table, normalized according to Farrell (1972) conventions [theta, u_norm, v_norm]

pmod = "PREM" # you can change Greens function based on PREM|AK135|STW105

grn_file = ("../output/Greens_Functions/" + rfm + "_" + pmod + ".txt")

norm_flag = False

# Full Path to Load Directory and Prefix of Filename

loadfile_directory = ("../output/Grid_Files/nc/GRACE/")

# Prefix for the Load Files (Load Directory will be Searched for all Files Starting with this Prefix)

# :: Note: For Load Files Organized by Date, the End of Filename Name Must be in the Format yyyymmddhhmnsc.txt

# :: Note: If not organized by date, files may be organized by tidal harmonic, for example (i.e. a unique filename ending)

# :: Note: Output names (within output files) will be determined by extension following last underscore character (e.g., date/harmonic/model)

loadfile_prefix = ("convgf_grace_rmTM1False_rmSM2False_20051001-20061001_GRACE_Tellus_RL06_JPL_ScalingFalse_20051016000000")

# LoadFile Format: ["nc", "txt"]

loadfile_format = "nc"

# Are the Load Files Organized by Datetime?

# :: If False, all Files that match the loadfile directory and prefix will be analyzed.

time_series = True

# Date Range for Computation (Year,Month,Day,Hour,Minute,Second)

# :: Note: Only used if 'time_series' is True

frst_date = [2005,10,1,0,0,0]

last_date = [2005,11,1,0,0,0]

# Are the load values on regular grids (speeds up interpolation); If unsure, leave as false.

regular = True

# Load Density

# Recommended: 1025-1035 for oceanic loads (e.g., FES2014, ECCO2); 1 for atmospheric loads (e.g. ECMWF)

ldens = 1000.0

# region for calculation

sta_file = ("../input/Station_Locations/region.txt")

# Optional: Additional string to include in output filenames (e.g. "_2019")

outstr = ("_" + pmod)

# ------------------ END USER INPUTS ----------------------- #

# -------------------- SETUP MPI --------------------------- #

# Get the Main MPI Communicator That Controls Communication Between Processors

comm = MPI.COMM_WORLD

# Get My "Rank", i.e. the Processor Number Assigned to Me

rank = comm.Get_rank()

# Get the Total Number of Other Processors Used

size = comm.Get_size()

# ---------------------------------------------------------- #

# -------------------- BEGIN CODE -------------------------- #

# Ensure that the Output Directories Exist

if (rank == 0):

if not (os.path.isdir("../output/Convolution/")):

os.makedirs("../output/Convolution/")

# Check format of load files

if not (loadfile_format == "nc"):

if not (loadfile_format == "txt"):

print(":: Error: Invalid format for load files. See scripts in the /GRDGEN/load_files/ folder. Acceptable formats: netCDF, txt.")

# Read global grid

lon,lat = read_region.main(sta_file)

# Determine Number of Stations Read In

if isinstance(lat,float) == True: # only 1 station

numel = 1

else:

numel = len(lat)

# Loop Through Each Station

for jj in range(0,numel):

# Remove Index If Only 1 Station

if (numel == 1): # only 1 station read in

my_lat = lat

my_lon = lon

else:

my_lat = lat[jj]

my_lon = lon[jj]

# Output File Name

cnv_out = loadfile_prefix + outstr + ".txt"

# Convert Start and End Dates to Datetimes

if (time_series == True):

frstdt = datetime.datetime(frst_date[0],frst_date[1],frst_date[2],frst_date[3],frst_date[4],frst_date[5])

lastdt = datetime.datetime(last_date[0],last_date[1],last_date[2],last_date[3],last_date[4],last_date[5])

# Determine Number of Matching Load Files

load_files = []

if os.path.isdir(loadfile_directory):

for mfile in os.listdir(loadfile_directory): # Filter by Load Directory

if mfile.startswith(loadfile_prefix): # Filter by File Prefix

if (time_series == True):

if (loadfile_format == "txt"):

mydt = datetime.datetime.strptime(mfile[-18:-4],'%Y%m%d%H%M%S') # Convert Filename String to Datetime

elif (loadfile_format == "nc"):

mydt = datetime.datetime.strptime(mfile[-17:-3],'%Y%m%d%H%M%S') # Convert Filename String to Datetime

else:

print(":: Error: Invalid format for load files. See scripts in the /GRDGEN/load_files/ folder. Acceptable formats: netCDF, txt.")

if ((mydt >= frstdt) & (mydt <= lastdt)): # Filter by Date Range

load_files.append(loadfile_directory + mfile) # Append File to List

else:

load_files.append(loadfile_directory + mfile) # Append File to List

else:

sys.exit('Error: The loadfile directory does not exist. You may need to create it. The /GRDGEN/load_files/ folder contains utility scripts to convert common models into LoadDef-compatible formats, and will automatically create a loadfile directory.')

# Test for Load Files

if not load_files:

sys.exit('Error: Could not find load files. You may need to generate them. The /GRDGEN/load_files/ folder contains utility scripts to convert common models into LoadDef-compatible formats.')

# Sort the Filenames

load_files = np.asarray(load_files)

fidx = np.argsort(load_files)

load_files = load_files[fidx]

# Set Lat/Lon/Name for Current Station

slat = my_lat

slon = my_lon

# Determine the Chunk Sizes for the Convolution

total_files = len(load_files)

nominal_load = total_files // size # Floor Divide

# Final Chunk Might Be Different in Size Than the Nominal Load

if rank == size - 1:

procN = total_files - rank * nominal_load

else:

procN = nominal_load

# Perform the Convolution for Each Station

if (rank == 0):

eamp,epha,namp,npha,vamp,vpha = load_convolution_region.main(grn_file,norm_flag,load_files,regular,\

slat,slon,cnv_out,loadfile_format,rank,procN,comm,load_density=ldens)

# For Worker Ranks, Run the Code But Don't Return Any Variables

else:

load_convolution_region.main(grn_file,norm_flag,load_files,regular,\

slat,slon,cnv_out,loadfile_format,rank,procN,comm,load_density=ldens)

# out data

cnv_file = ("../output/Convolution/GRACE_global" + cnv_out)

# write results

with open(cnv_file, "a", encoding="utf-8") as f:

np.savetxt(f, np.c_[my_lon,my_lat,eamp,epha,namp,npha,vamp,vpha], fmt='%.8f', delimiter=' ')# 以写的格式打开先打开文件

# Make Sure All Jobs Have Finished Before Continuing

comm.Barrier()

# --------------------- END CODE --------------------------- #

需要修改添加的函数如下,也可以在本人的资源处下载。

read_region.py [..\LoadDef-main\LoadDef-main\CONVGF\utility]

# *********************************************************************

# FUNCTION TO READ IN A STATION LOCATION FILE

#

# Copyright (c) 2014-2019: HILARY R. MARTENS, LUIS RIVERA, MARK SIMONS

#

# This file is part of LoadDef.

#

# LoadDef is free software: you can redistribute it and/or modify

# it under the terms of the GNU General Public License as published by

# the Free Software Foundation, either version 3 of the License, or

# any later version.

#

# LoadDef is distributed in the hope that it will be useful,

# but WITHOUT ANY WARRANTY; without even the implied warranty of

# MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

# GNU General Public License for more details.

#

# You should have received a copy of the GNU General Public License

# along with LoadDef. If not, see <https://www.gnu.org/licenses/>.

#

# *********************************************************************

import numpy as np

def main(filename):

lat,lon = np.loadtxt(filename,usecols=(0,1),unpack=True)

sta = np.loadtxt(filename,usecols=(2,),dtype='U',unpack=True)

return lat,lon,sta

perform_convolution_region.py [..\LoadDef-main\LoadDef-main\CONVGF\CN]# -*- coding: utf-8 -*-

"""

Created on Mon Jul 31 15:02:33 2023

@author: 我是水怪的哥

"""

# *********************************************************************

# FUNCTION TO CONVOLVE LOAD GREEN'S FUNCTIONS WITH A MASS-LOAD MODEL

#

# Copyright (c) 2014-2019: HILARY R. MARTENS, LUIS RIVERA, MARK SIMONS

#

# This file is part of LoadDef.

#

# LoadDef is free software: you can redistribute it and/or modify

# it under the terms of the GNU General Public License as published by

# the Free Software Foundation, either version 3 of the License, or

# any later version.

#

# LoadDef is distributed in the hope that it will be useful,

# but WITHOUT ANY WARRANTY; without even the implied warranty of

# MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

# GNU General Public License for more details.

#

# You should have received a copy of the GNU General Public License

# along with LoadDef. If not, see <https://www.gnu.org/licenses/>.

#

# *********************************************************************

import numpy as np

import scipy as sc

from scipy import interpolate

from CONVGF.utility import read_AmpPha

from CONVGF.CN import convolve_global_grid

from CONVGF.CN import interpolate_load

from CONVGF.CN import interpolate_lsmask

from CONVGF.CN import coef2amppha

from CONVGF.CN import mass_conservation

import sys

import os

from math import pi

import matplotlib.pyplot as plt

import matplotlib.cm as cm

def main(loadfile,ilat,ilon,iarea,load_density,ur,ue,un,mydt,regular,mass_cons,lf_format):

# Check load file format

if (lf_format == "bbox"): # list of cells, rather than traditional load files

# Select the Appropriate Cell ID

cslat = loadfile[0]

cnlat = loadfile[1]

cwlon = loadfile[2]

celon = loadfile[3]

yes_idx = np.where((ilat >= cslat) & (ilat <= cnlat) & (ilon >= cwlon) & (ilon <= celon)); yes_idx = yes_idx[0]

print(':: Number of convolution grid points within load cell: ', len(yes_idx))

# Find ilat and ilon within cell

ic1 = np.zeros(len(ilat),)

ic2 = np.zeros(len(ilat),)

ic1[yes_idx] = 1. # Amplitude of 1 (ic1 = 1 only inside cell), phase of zero (keep ic2 = 0 everywhere)

# Optionally plot the load cell

#### Set flag to "False" to turn off plotting of the load cell; "True" to turn it on

plot_fig = False

if plot_fig:

print(':: Plotting the load cell. [perform_convolution.py]')

cslat_plot = cslat - 0.5

cnlat_plot = cnlat + 0.5

cwlon_plot = cwlon - 0.5

celon_plot = celon + 0.5

idx_plot = np.where((ilon >= cwlon_plot) & (ilon <= celon_plot) & (ilat >= cslat_plot) & (ilat <= cnlat_plot)); idx_plot = idx_plot[0]

ilon_plot = ilon[idx_plot]

ilat_plot = ilat[idx_plot]

ic1_plot = ic1[idx_plot]

plt.scatter(ilon_plot,ilat_plot,c=ic1_plot,s=1,cmap=cm.BuPu)

plt.colorbar(orientation='horizontal')

fig_name = ("../output/Figures/" + "_" + str(cslat) + "_" + str(cnlat) + "_" + str(cwlon) + "_" + str(celon) + ".png")

plt.savefig(fig_name,format="png")

#plt.show()

plt.close()

else: # traditional load file

# Read the File

llat,llon,amp,pha,llat1dseq,llon1dseq,amp2darr,pha2darr = read_AmpPha.main(loadfile,lf_format,regular_grid=regular)

# Find Where Amplitude is NaN (if anywhere) and Set to Zero

nanidx = np.isnan(amp); amp[nanidx] = 0.; pha[nanidx] = 0.

# Convert Amp/Pha Arrays to Real/Imag

real = np.multiply(amp,np.cos(np.multiply(pha,pi/180.)))

imag = np.multiply(amp,np.sin(np.multiply(pha,pi/180.)))

# Interpolate Load at Each Grid Point onto the Integration Mesh

ic1,ic2 = interpolate_load.main(ilat,ilon,llat,llon,real,imag,regular)

# Multiply the Load Heights by the Load Density

ic1 = np.multiply(ic1,load_density)

ic2 = np.multiply(ic2,load_density)

# Enforce Mass Conservation

if (mass_cons == True):

print('here mass')

# For Land and Whole-Globe Models (like atmosphere and continental water)

print(':: Warning: Enforcing Mass Conservation Over Entire Globe.')

ic1,ic2 = mass_conservation.main(ic1,ic2,iarea)

# Perform the Convolution at Each Grid Point

c1e,c2e,c1n,c2n,c1v,c2v = convolve_global_grid.main(ic1,ic2,ur,ue,un)

# Sum Over All Grid Cells

ec1 = np.sum(c1e)

ec2 = np.sum(c2e)

nc1 = np.sum(c1n)

nc2 = np.sum(c2n)

vc1 = np.sum(c1v)

vc2 = np.sum(c2v)

# Convert Coefficients to Amplitude and Phase

# Note: Conversion to mm from meters also happens here!

eamp,epha,namp,npha,vamp,vpha = coef2amppha.main(ec1,ec2,nc1,nc2,vc1,vc2)

# Return Variables

return eamp,epha,namp,npha,vamp,vpha

load_convolution_region.py [..\LoadDef-main\LoadDef-main\CONVGF\CN]# -*- coding: utf-8 -*-

"""

Created on Mon Jul 31 15:01:13 2023

@author: 我是水怪的哥

"""

# Import Python Modules

from __future__ import print_function

from mpi4py import MPI

import numpy as np

import datetime

import scipy as sc

import netCDF4

from scipy import interpolate

from CONVGF.utility import read_greens_fcn_file

from CONVGF.utility import read_greens_fcn_file_norm

from CONVGF.utility import normalize_greens_fcns

from CONVGF.CN import compute_specific_greens_fcns

from CONVGF.CN import convolve_global_grid

from CONVGF.CN import generate_integration_mesh

from CONVGF.CN import intmesh2geogcoords

from CONVGF.CN import integrate_greens_fcns

from CONVGF.CN import interpolate_load

from CONVGF.CN import compute_angularDist_azimuth

from CONVGF.CN import coef2amppha

from CONVGF.CN import perform_convolution_region

from CONVGF.CN import interpolate_lsmask

from CONVGF.utility import read_lsmask

import sys

import os

from math import pi

"""

Compute predicted surface displacements caused by surface mass loading by convolving

displacement load Green's functions with a model for the surface mass load.

Parameters

----------

load_density : Density of the surface mass load (kg/m^3)

Default is 1030.0

rad : Mean Earth radius (m)

Default is 6371000.

# -- Mesh Paramters --

delinc1 : angular distance (degrees) increment for inner zone

Default is 0.0002

delinc2 : angular distance (degrees) increment for zone 2

Default is 0.001

delinc3 : angluar distance (degrees) increment for zone 3

Default is 0.01

delinc4 : angluar distance (degrees) increment for zone 4

Default is 0.1

delinc5 : angluar distance (degrees) increment for zone 5

Default is 0.5

delinc6 : angular distance (degrees) increment for outer zone

Default is 1.0

izb : inner zone boundary (degrees; 0<izb<z2b)

Default is 0.02

z2b : zone 2 boundary (degrees; izb<z2b<z3b)

Default is 0.1

z3b : zone 3 boundary (degrees; z2b<z3b<z4b)

Default is 1.0

z4b : zone 4 boundary (degrees; z3b<z4b<z5b)

Default is 10.0

z5b : zone 5 boundary (degrees; z4b<z5b<180)

Default is 90.0

azminc : azimuthal increment # NOTE: Maybe should match azminc with delinc5 (i.e., keep azminc consistent with theta increment at 90 degrees from station,

# where azimuth and theta increments are consistent in horizontal distance along planet's surface)

# :: azminc*sin(theta) ~ delinc

Default is 0.5

mass_cons : option to enforce mass conservation by removing the spatial mean from the load grid

Default is False

"""

def main(grn_file,norm_flag,load_files,regular,rlat,rlon,cnv_out,loadfile_format,rank,procN,comm,\

load_density=1030.0,rad=6371000.,delinc1=0.0002,delinc2=0.001,delinc3=0.01,delinc4=0.1,delinc5=0.5,delinc6=1.0,\

izb=0.02,z2b=0.1,z3b=1.0,z4b=10.0,z5b=90.0,azminc=0.5,mass_cons=False):

# Determine Number of Load Files

if isinstance(load_files,float) == True:

numel = 1

else:

numel = len(load_files)

# Only the Main Processor Will Do This:

if (rank == 0):

# Print Number of Files Read In

display = ':: Number of Files Read In: ' + repr(numel)

print(display)

print(" ")

# Determine whether load file was supplied as a list of cells, or as traditional load files

if (loadfile_format == "bbox"): # list of cells

# Ensure only one file is read in for this format

if (numel > 1):

sys.exit(":: Error: For load files in 'bbox' format (i.e., a list of bounding boxes), only one file can be read in at a time. [load_convolution.py]")

# Read in the file

loadgrid = load_files[0]

lcext = loadgrid[-2::]

if (lcext == 'xt'):

file_ids = np.loadtxt(loadgrid,usecols=(4,),unpack=True,dtype='U')

slat,nlat,wlon,elon = np.loadtxt(loadgrid,usecols=(0,1,2,3),unpack=True)

elif (lcext == 'nc'):

f = netCDF4.Dataset(loadgrid)

file_ids = f.variables['cell_ids'][:]

slat = f.variables['slatitude'][:]

nlat = f.variables['nlatitude'][:]

wlon = f.variables['wlongitude'][:]

elon = f.variables['elongitude'][:]

f.close()

# Ensure that Bounding Box Longitudes are in Range 0-360

for yy in range(0,len(wlon)):

if (wlon[yy] < 0.):

wlon[yy] += 360.

if (elon[yy] < 0.):

elon[yy] += 360.

# Generate an array of cell indices

file_idx = np.linspace(0,len(file_ids),num=(len(file_ids)),endpoint=False)

np.random.shuffle(file_idx)

else:

# Generate an Array of File Indices

file_idx = np.linspace(0,numel,num=numel,endpoint=False)

np.random.shuffle(file_idx)

# Create Array of Filename Extensions

file_ids = []

for qq in range(0,numel):

mfile = load_files[qq]

str_components = mfile.split('_')

cext = str_components[-1]

if (loadfile_format == "txt"):

file_ids.append(cext[0:-4])

elif (loadfile_format == "nc"):

file_ids.append(cext[0:-3])

else:

print(':: Error. Invalid file format for load models. [load_convolution.py]')

sys.exit()

# Initialize Arrays

eamp = np.empty((len(file_ids),))

epha = np.empty((len(file_ids),))

namp = np.empty((len(file_ids),))

npha = np.empty((len(file_ids),))

vamp = np.empty((len(file_ids),))

vpha = np.empty((len(file_ids),))

# Read Greens Function File

if norm_flag == True:

theta,u,v,unormFarrell,vnormFarrell = read_greens_fcn_file_norm.main(grn_file,rad)

else:

theta,u,v,unormFarrell,vnormFarrell = read_greens_fcn_file.main(grn_file)

# Normalize Greens Functions (Agnew Convention)

unorm,vnorm = normalize_greens_fcns.main(theta,u,v,rad)

# Interpolate Greens Functions

tck_gfu = interpolate.splrep(theta,unorm,k=3)

tck_gfv = interpolate.splrep(theta,vnorm,k=3)

xr = np.linspace(0.0001,3.,1000)

# Generate Integration Mesh

print(':: Generating the Integration Mesh. Please Wait...')

gldel,glazm,ldel,lazm,unit_area = generate_integration_mesh.main(delinc1,delinc2, \

delinc3,delinc4,delinc5,delinc6,izb,z2b,z3b,z4b,z5b,azminc)

# Integrate Greens Functions

uint,vint = integrate_greens_fcns.main(gldel,glazm,ldel,lazm,tck_gfu,tck_gfv)

# Compute Geographic Coordinates of Integration Mesh Cell Midpoints

ilat,ilon,iarea = intmesh2geogcoords.main(rlat,rlon,ldel,lazm,unit_area)

# Ensure that Station Location is in Range 0-360

if (rlon < 0.):

rlon += 360.

# Determine the Land-Sea Mask: Interpolate onto Mesh

# print(':: Interpolating the Land-Sea Mask. Please Wait...')

# print(':: Number of Grid Points: %s | Size of LSMask: %s' %(str(len(ilat)), str(lsmask.shape)))

# print(':: Finished LSMask Interpolation.')

# Compute Angular Distance and Azimuth at Receiver Due to Load

delta,haz = compute_angularDist_azimuth.main(rlat,rlon,ilat,ilon)

# Compute Greens Functions Specific to Receiver and Grid (Geographic Coordinates)

ur,ue,un = compute_specific_greens_fcns.main(haz,uint,vint)

# If I'm a Worker, I Know Nothing About the Data

else:

file_idx = file_ids = eamp = epha = namp = npha = vamp = vpha = None

ldel = lazm = uint = vint = ilat = ilon = iarea = delta = haz = ur = ue = un = None

slat = nlat = wlon = elon = None

# Create a Data Type for the Convolution Results

cntype = MPI.DOUBLE.Create_contiguous(1)

cntype.Commit()

# Gather the Processor Workloads for All Processors

sendcounts = comm.gather(procN, root=0)

# Scatter the File Locations (By Index)

d_sub = np.empty((procN,))

comm.Scatterv([file_idx, (sendcounts, None), cntype], d_sub, root=0)

# All Processors Get Certain Arrays and Parameters; Broadcast Them

ilat = comm.bcast(ilat, root=0)

ilon = comm.bcast(ilon, root=0)

iarea = comm.bcast(iarea, root=0)

ur = comm.bcast(ur, root=0)

ue = comm.bcast(ue, root=0)

un = comm.bcast(un, root=0)

load_density = comm.bcast(load_density, root=0)

file_ids = comm.bcast(file_ids, root=0)

file_idx = comm.bcast(file_idx, root=0)

if (loadfile_format == "bbox"):

slat = comm.bcast(slat, root=0)

nlat = comm.bcast(nlat, root=0)

wlon = comm.bcast(wlon, root=0)

elon = comm.bcast(elon, root=0)

# Loop Through the Files

eamp_sub = np.empty((len(d_sub),))

epha_sub = np.empty((len(d_sub),))

namp_sub = np.empty((len(d_sub),))

npha_sub = np.empty((len(d_sub),))

vamp_sub = np.empty((len(d_sub),))

vpha_sub = np.empty((len(d_sub),))

for ii in range(0,len(d_sub)):

current_d = int(d_sub[ii]) # Index

if (loadfile_format == "bbox"):

cslat = slat[current_d]

cnlat = nlat[current_d]

cwlon = wlon[current_d]

celon = elon[current_d]

mfile = [cslat,cnlat,cwlon,celon]

file_id = file_ids[current_d] # File Extension

print(':: Working on Cell: %s | Number: %6d of %6d | Rank: %6d' %(file_id, (ii+1), len(d_sub), rank))

else:

mfile = load_files[current_d] # Vector-Format

file_id = file_ids[current_d] # File Extension

print(':: Working on File: %s | Number: %6d of %6d | Rank: %6d' %(file_id, (ii+1), len(d_sub), rank))

# Check if Loadfile Exists

if not (os.path.isfile(mfile)): # file does not exist

eamp_sub[ii] = np.nan

epha_sub[ii] = np.nan

namp_sub[ii] = np.nan

npha_sub[ii] = np.nan

vamp_sub[ii] = np.nan

vpha_sub[ii] = np.nan

continue

# Compute Convolution for Current File

eamp_sub[ii],epha_sub[ii],namp_sub[ii],npha_sub[ii],vamp_sub[ii],vpha_sub[ii] = perform_convolution_region.main(\

mfile,ilat,ilon,iarea,load_density,ur,ue,un,file_id,regular,mass_cons,loadfile_format)

return eamp_sub,epha_sub,namp_sub,npha_sub,vamp_sub,vpha_sub

else:

# For Worker Ranks, Return Nothing

return

# # Gather Results

# comm.Gatherv(eamp_sub, [eamp, (sendcounts, None), MPI.DOUBLE], root=0)

# comm.Gatherv(epha_sub, [epha, (sendcounts, None), MPI.DOUBLE], root=0)

# comm.Gatherv(namp_sub, [namp, (sendcounts, None), MPI.DOUBLE], root=0)

# comm.Gatherv(npha_sub, [npha, (sendcounts, None), MPI.DOUBLE], root=0)

# comm.Gatherv(vamp_sub, [vamp, (sendcounts, None), MPI.DOUBLE], root=0)

# comm.Gatherv(vpha_sub, [vpha, (sendcounts, None), MPI.DOUBLE], root=0)

# # Make Sure Everyone Has Reported Back Before Moving On

# comm.Barrier()

# # Free Data Type

# cntype.Free()

# # Print Output to Files and Return Variables

# if (rank == 0):

# # Re-organize Files

# narr,nidx = np.unique(file_idx,return_index=True)

# eamp = eamp[nidx]; namp = namp[nidx]; vamp = vamp[nidx]

# epha = epha[nidx]; npha = npha[nidx]; vpha = vpha[nidx]

# # Prepare Output Files

# cnv_file = ("../output/Convolution/GRACE_global" + cnv_out)

# cnv_head = ("../output/Convolution/"+str(np.random.randint(500))+"cn_head.txt")

# cnv_body = ("../output/Convolution/"+str(np.random.randint(500))+"cn_body.txt")

# # Prepare Data for Output (Create a Structured Array)

# rlat_arr = np.ones((len(eamp),)) * rlat

# rlon_arr = np.ones((len(eamp),)) * rlon

# if (loadfile_format == "bbox"):

# all_cnv_data = np.array(list(zip(file_ids,rlat_arr,rlon_arr,eamp,epha,namp,npha,vamp,vpha,slat,nlat,wlon,elon)), dtype=[('file_ids','U25'), \

# ('rlat_arr',float),('rlon_arr',float),('eamp',float),('epha',float),('namp',float),('npha',float), \

# ('vamp',float),('vpha',float),('slat',float),('nlat',float),('wlon',float),('elon',float)])

# else:

# all_cnv_data = np.array(list(zip(file_ids,rlat_arr,rlon_arr,eamp,epha,namp,npha,vamp,vpha)), dtype=[('file_ids','U25'), \

# ('rlat_arr',float),('rlon_arr',float),('eamp',float),('epha',float),('namp',float),('npha',float), \

# ('vamp',float),('vpha',float)])

# # Write Header Info to File

# hf = open(cnv_head,'w')

# if (loadfile_format == "bbox"):

# cnv_str = 'Extension/Epoch Lat(+N,deg) Lon(+E,deg) E-Amp(mm) E-Pha(deg) N-Amp(mm) N-Pha(deg) V-Amp(mm) V-Pha(deg) S-Lat(deg) N-Lat(deg) W-Lon(deg) E-Lon(deg) \n'

# else:

# cnv_str = 'Extension/Epoch Lat(+N,deg) Lon(+E,deg) E-Amp(mm) E-Pha(deg) N-Amp(mm) N-Pha(deg) V-Amp(mm) V-Pha(deg) \n'

# hf.write(cnv_str)

# hf.close()

# # Write Convolution Results to File

# if (loadfile_format == "bbox"):

# np.savetxt(cnv_body,all_cnv_data,fmt=["%s"] + ["%.8f",]*12,delimiter=" ")

# else:

# np.savetxt(cnv_body,all_cnv_data,fmt=["%s"] + ["%.8f",]*8,delimiter=" ")

# # Combine Header and Body Files

# filenames_cnv = [cnv_head, cnv_body]

# with open(cnv_file,'w') as outfile:

# for fname in filenames_cnv:

# with open(fname) as infile:

# outfile.write(infile.read())

# # Remove Header and Body Files

# os.remove(cnv_head)

# os.remove(cnv_body)

# Return Amplitude and Phase Response Values

# return eamp,epha,namp,npha,vamp,vpha,comm

# else:

# # For Worker Ranks, Return Nothing

# return

下面计算的2005年10月的GRACE mascon作为负荷源得到的三维地球弹性变形结果【三维位移的振幅图】:东西向水平位移最大振幅、南北向水平位移最大振幅、垂直位移最大振幅:单位:mm

目前还存在的问题:

(1)该计算过程非常地耗时,后续可以添加并行计算,提高运行效率;

(2)计算的结果仅仅是三维位移的最大振幅,后续可计算其余的量。

参考资料

SAGE: Data Services Products: EMC-STW105

Preliminary Reference Earth Model (PREM)

SAGE: Data Services Products: EMC-ak135-f

Home

3.1 Earth’s Layers: Crust, Mantle, and Core – Physical Geology, First University of Saskatchewan Edition (usask.ca)

李长海, et al. (2023). "不同地球模型对中国陆区大气负荷位移建模的影响." 地球物理学报.

Martens, H.R., Rivera, L., & Simons, M. (2019). LoadDef: A Python-based toolkit to model elastic deformation caused by surface mass loading on spherically symmetric bodies. Earth and Space Science, 6. https://doi.org/10.1029/2018ea000462.

Yi, S. and N. Sneeuw (2022). "A novel spatial filter to reduce north–south striping noise in GRACE spherical harmonic coefficients." Journal of Geodesy 96(4).

W.E Farrell. Deformation of the earth by surface loads. Rev. Geophys. and Spac. Phys., 10(3):751-797, 1972

H. Wang et al. Load Love numbers and Green's functions for elastic Earth models PREM, iasp91, ak135, and modified models with refined crustal structure from Crust 2.0. Computers and Geosciences, 49, 190-199, 2012

B.L.N. Kennett et al. Constraints on seismic velocities in the Earth from travel times. Geophys. J. Int. 122, 108-124.

Wen, Z., et al. (2023). "Contribution of loading deformation to the GNSS vertical velocity field in the Chinese mainland." Geophysical Journal International.

Martens, H. R. (2016). "USING EARTH DEFORMATION CAUSED BY SURFACE MASS LOADING TO CONSTRAIN THE ELASTIC STRUCTURE OF THE CRUST AND MANTLE." California Institute of Technology.

Kennett B.L.N., E.R. Engdahl and R. Buland. 1995. “Constraints on seismic velocities in the earth from travel times” Geophys. J. Int. 122, 108–124. https://doi.org/10.1111/j.1365-246X.1995.tb03540.x