I. Prepare two physical machines, master-1 and master-2, plus the target virtual IP (VIP):

VIP:172.50.2.139

master-1:172.50.2.41

master-2:172.50.2.42

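II. On each of the two physical machines, master-1 and master-2, install MySQL 8 with docker-compose and configure the two servers as masters of each other (dual-master replication).

1) Configure master-1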

```shell
cd /
mkdir docker
cd /docker
mkdir mysql8
cd mysql8
mkdir mysql8data
# content of master_1_init.sql: see file [master-1] below
touch master_1_init.sql
# content of Dockerfile_master_1: see file [master-1] below
touch Dockerfile_master_1
# content of docker-compose.yml: see file [master-1] below
touch docker-compose.yml
```

[master-1] file master_1_init.sql

```sql
CREATE USER 'repl'@'%' IDENTIFIED WITH caching_sha2_password BY 'repl';
grant replication slave, replication client on *.* to 'repl'@'%';
flush privileges;
```

[master-1] file Dockerfile_master_1

```dockerfile
FROM mysql:8.0.31
MAINTAINER "yh<573968217@qq.com>"
ENV TZ=Asia/Shanghai
RUN ln -snf /usr/share/zoneinfo/$TZ /etc/localtime && echo $TZ > /etc/timezone
COPY ./master_1_init.sql /docker-entrypoint-initdb.d
```

[master-1] file docker-compose.yml

```yaml
version: "3.9"
services:
  mysql8-master-1:
    build:
      context: ./
      dockerfile: ./Dockerfile_master_1
    image: mysql8-master-1
    restart: always
    container_name: mysql8-master-1
    volumes:
      - /docker/mysql8/mysql8data/conf/my.cnf:/etc/mysql/my.cnf
      - /docker/mysql8/mysql8data/data:/var/lib/mysql
      - /docker/mysql8/mysql8data/logs:/var/log/mysql
    ports:
      - 3306:3306
    environment:
      - MYSQL_ROOT_PASSWORD=123456
      - MYSQL_ROOT_HOST=%
    privileged: true
    network_mode: "host"
    extra_hosts:
      # lets the master-1 container reach master-2 by hostname
      - mysql8-master-2:172.50.2.42
    command: ['--server-id=1',
              '--default-authentication-plugin=mysql_native_password',
              '--sync_binlog=1',
              '--log-bin=mysql8-master-1-bin',
              '--binlog-ignore-db=mysql,information_schema,performance_schema,sys',
              '--binlog_cache_size=256M',
              '--binlog_format=ROW',
              '--relay_log=mysql8-master-1-relay',
              '--lower_case_table_names=1',
              '--character-set-server=utf8mb4',
              '--collation-server=utf8mb4_general_ci',
              '--sql_mode=STRICT_TRANS_TABLES,NO_ZERO_IN_DATE,NO_ZERO_DATE,ERROR_FOR_DIVISION_BY_ZERO,NO_ENGINE_SUBSTITUTION']
```
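The compose file bind-mounts three host paths under `/docker/mysql8/mysql8data` (`conf/my.cnf`, `data`, `logs`), but the setup steps above only create `mysql8data` itself. If the `my.cnf` path does not exist, Docker creates a directory at that path instead of a file. The following is a minimal sketch that prepares the layout in advance. `prepare_layout` is a hypothetical helper name. The one-line `[mysqld]` my.cnf is an assumed placeholder, not the author's configuration.

```shell
#!/usr/bin/env bash
# Sketch: create the host paths that docker-compose.yml bind-mounts.
# BASE defaults to the path used in the compose file above.
prepare_layout() {
  local base="${1:-/docker/mysql8/mysql8data}"
  # data/ -> /var/lib/mysql, logs/ -> /var/log/mysql, conf/ holds my.cnf
  mkdir -p "$base/conf" "$base/data" "$base/logs"
  # Without an existing file, Docker would create a directory named my.cnf.
  # Write a minimal placeholder (assumption), but never overwrite a real one.
  if [ ! -f "$base/conf/my.cnf" ]; then
    printf '[mysqld]\n' > "$base/conf/my.cnf"
  fi
}
```

Run it on each machine before `docker-compose up -d`, for example `prepare_layout /docker/mysql8/mysql8data`. An existing my.cnf is left untouched, so running it again is safe.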

If you only have one machine and want to simulate the cluster on it, you need to create your own Docker network:

```yaml
version: "3.9"
services:
  mysql8-master-1:
    build:
      context: ./
      dockerfile: ./Dockerfile_master_1
    image: mysql8-master-1
    restart: always
    container_name: mysql8-master-1
    volumes:
      - /yhcloud/docker_compose_keep_alive_mysql8_both_master/mysql8data/conf/my.cnf:/etc/mysql/my.cnf
      - /yhcloud/docker_compose_keep_alive_mysql8_both_master/mysql8data/data:/var/lib/mysql
      - /yhcloud/docker_compose_keep_alive_mysql8_both_master/mysql8data/logs:/var/log/mysql
    ports:
      - 3308:3306
    networks:
      proxy:
        ipv4_address: 172.50.2.42
    environment:
      - MYSQL_ROOT_PASSWORD=123456
      - MYSQL_ROOT_HOST=%
    privileged: true
    extra_hosts:
      # lets the master-1 container reach master-2 by hostname
      - mysql8-master-2:169.17.0.11
    command: ['--server-id=1',
              '--default-authentication-plugin=mysql_native_password',
              '--sync_binlog=1',
              '--log-bin=mysql8-master-1-bin',
              '--binlog-ignore-db=mysql,information_schema,performance_schema,sys',
              '--binlog_cache_size=256M',
              '--binlog_format=ROW',
              '--relay_log=mysql8-master-1-relay',
              '--lower_case_table_names=1',
              '--character-set-server=utf8mb4',
              '--collation-server=utf8mb4_general_ci',
              '--sql_mode=STRICT_TRANS_TABLES,NO_ZERO_IN_DATE,NO_ZERO_DATE,ERROR_FOR_DIVISION_BY_ZERO,NO_ENGINE_SUBSTITUTION']
networks:
  proxy:
    ipam:
      config:
        - subnet: 172.50.0.0/24 # subnet the containers are started in
```
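In the single-machine variant, the container's `ipv4_address` must fall inside the `subnet` declared under `networks.proxy.ipam`. As written, 172.50.2.42 is not inside 172.50.0.0/24, so Docker cannot assign that address. The `extra_hosts` entry for master-2 (169.17.0.11) should likewise point at master-2's address on that network. The following is a small pure-bash sketch for checking an address against a CIDR block before starting the stack. `ip_to_int` and `in_cidr` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Convert a dotted IPv4 address to a 32-bit integer.
# 10# forces base 10 so octets such as "08" are not read as octal.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (10#$a << 24) | (10#$b << 16) | (10#$c << 8) | 10#$d ))
}

# Succeed (exit 0) if IP lies inside CIDR, e.g. in_cidr 172.50.0.5 172.50.0.0/24
in_cidr() {
  local ip="$1" net="${2%/*}" bits="${2#*/}"
  # Build the netmask; a /0 prefix matches everything.
  local mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}
```

For example, `in_cidr 172.50.2.42 172.50.0.0/24 || echo 'ipv4_address is outside the subnet'` prints the warning for the values above. Adjust either the address or the subnet until the check passes.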

2) Configure master-2

```shell
cd /
mkdir docker
cd /docker
mkdir mysql8
cd mysql8
mkdir mysql8data
# content of master_2_init.sql: see file [master-2] below
touch master_2_init.sql
# content of Dockerfile_master_2: see file [master-2] below
touch Dockerfile_master_2
# content of docker-compose.yml: see file [master-2] below
touch docker-compose.yml
```

[master-2] file master_2_init.sql

```sql
CREATE USER 'repl'@'%' IDENTIFIED WITH caching_sha2_password BY 'repl';
grant replication slave, replication client on *.* to 'repl'@'%';
flush privileges;
```

[master-2] file Dockerfile_master_2

```dockerfile
FROM mysql:8.0.31
MAINTAINER "yh<573968217@qq.com>"
ENV TZ=Asia/Shanghai
RUN ln -snf /usr/share/zoneinfo/$TZ /etc/localtime && echo $TZ > /etc/timezone
COPY ./master_2_init.sql /docker-entrypoint-initdb.d
```

[master-2] file docker-compose.yml

```yaml
version: "3.9"
services:
  mysql8-master-2:
    build:
      context: ./
      dockerfile: ./Dockerfile_master_2
    image: mysql8-master-2
    restart: always
    container_name: mysql8-master-2
    volumes:
      - /docker/mysql8/mysql8data/conf/my.cnf:/etc/mysql/my.cnf
      - /docker/mysql8/mysql8data/data:/var/lib/mysql
      - /docker/mysql8/mysql8data/logs:/var/log/mysql
    ports:
      - 3306:3306
    environment:
      - MYSQL_ROOT_PASSWORD=123456
      - MYSQL_ROOT_HOST=%
    privileged: true
    network_mode: "host"
    extra_hosts:
      # lets the master-2 container reach master-1 by hostname
      - mysql8-master-1:172.50.2.41
    command: ['--server-id=2',
              '--default-authentication-plugin=mysql_native_password',
              '--sync_binlog=1',
              '--log-bin=mysql8-master-2-bin',
              '--binlog-ignore-db=mysql,information_schema,performance_schema,sys',
              '--binlog_cache_size=256M',
              '--binlog_format=ROW',
              '--relay_log=mysql8-master-2-relay',
              '--lower_case_table_names=1',
              '--character-set-server=utf8mb4',
              '--collation-server=utf8mb4_general_ci',
              '--sql_mode=STRICT_TRANS_TABLES,NO_ZERO_IN_DATE,NO_ZERO_DATE,ERROR_FOR_DIVISION_BY_ZERO,NO_ENGINE_SUBSTITUTION']
```

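3) Start the database services on master-1 and master-2

Run on both [master-1] and [master-2]:

```shell
# MySQL 8 needs some time to finish starting; once Navicat can connect, startup has succeeded
docker-compose up -d
```

Note: on a server or a virtual machine, port 3306 must be opened: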

```shell
# check the firewall status
systemctl status firewalld
# check whether port 3306 is open (no/yes)
firewall-cmd --zone=public --query-port=3306/tcp
# open port 3306
firewall-cmd --zone=public --add-port=3306/tcp --permanent
# reload the firewall
firewall-cmd --reload
# check port 3306 again (expect: yes)
firewall-cmd --zone=public --query-port=3306/tcp
```

Then connect with Navicat.
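Instead of waiting for Navicat to connect, you can also poll the port from a shell. The following is a minimal sketch that uses bash's built-in `/dev/tcp` redirection plus the coreutils `timeout` command. `wait_for_port` is a hypothetical helper. An open port only shows that mysqld is listening, which is a weaker signal than a successful login.

```shell
#!/usr/bin/env bash
# Poll HOST:PORT once per second until it accepts a TCP connection,
# giving up after TRIES attempts (default 30). Returns 0 on success.
wait_for_port() {
  local host="$1" port="$2" tries="${3:-30}" i
  for (( i = 1; i <= tries; i++ )); do
    # timeout guards against hosts that silently drop packets
    if timeout 2 bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null; then
      return 0
    fi
    sleep 1
  done
  return 1
}
```

For example, `wait_for_port 172.50.2.41 3306 60 && echo 'master-1 is listening'`. Running it from the other machine also confirms that the firewall rule above took effect.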

4) Start dual-master replication between master-1 and master-2

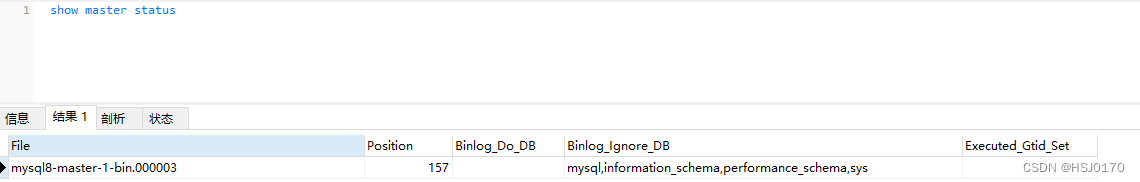

① Check the binlog file and position of [master-1] in its role as a master:

```sql
show master status;
```

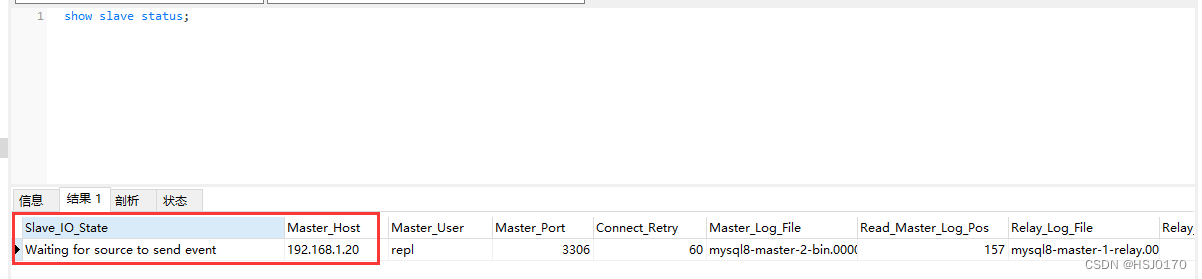

Configure [master-1] as a replica (slave), start it and check its status. This needs the binlog coordinates of master-2, the master it replicates from (note: master_log_file and master_log_pos must be the actual values from your own database):

```sql
change master to master_host='172.50.2.42', master_user='repl',master_password='repl',master_port=3306,GET_MASTER_PUBLIC_KEY=1,master_log_file='mysql8-master-2-bin.000003', master_log_pos= 157, master_connect_retry=60;
start slave;
show slave status;
```

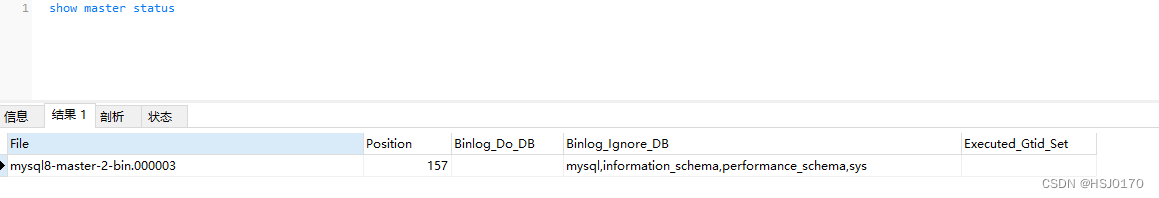
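Copying master_log_file and master_log_pos by hand is error-prone. The following sketch builds the CHANGE MASTER statement above from the other node's `SHOW MASTER STATUS` row. It assumes the row was fetched with the mysql client's `-N -B` options (no column names, tab-separated). `build_change_master` is a hypothetical name. The user, password and retry values are the ones used in this article.

```shell
#!/usr/bin/env bash
# Build a CHANGE MASTER statement from one tab-separated SHOW MASTER STATUS row
# (File, Position, Binlog_Do_DB, Binlog_Ignore_DB, Executed_Gtid_Set).
build_change_master() {
  local host="$1" status_row="$2" file pos
  # Only the first two columns (binlog file and position) are needed.
  IFS=$'\t' read -r file pos _ <<< "$status_row"
  printf "change master to master_host='%s', master_user='repl', master_password='repl', master_port=3306, GET_MASTER_PUBLIC_KEY=1, master_log_file='%s', master_log_pos=%s, master_connect_retry=60;\n" \
    "$host" "$file" "$pos"
}
```

On master-1, for example, fetch the row with `row=$(mysql -h 172.50.2.42 -uroot -p -N -B -e 'SHOW MASTER STATUS')`, then run the statement printed by `build_change_master 172.50.2.42 "$row"`. Reading the coordinates right before CHANGE MASTER keeps them from going stale.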

② Check the binlog file and position of [master-2] in its role as a master:

```sql
show master status;
```

Configure [master-2] as a replica (slave), start it and check its status. This needs the binlog coordinates of master-1, the master it replicates from (note: master_log_file and master_log_pos must be the actual values from your own database):

```sql
change master to master_host='172.50.2.41', master_user='repl',master_password='repl',master_port=3306,GET_MASTER_PUBLIC_KEY=1,master_log_file='mysql8-master-1-bin.000003', master_log_pos= 157, master_connect_retry=60;
start slave;
show slave status;
```

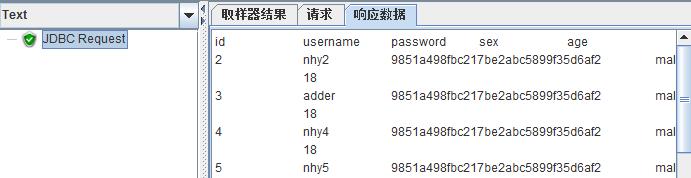
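In the `show slave status` output, the two fields that matter most are Slave_IO_Running and Slave_SQL_Running, and both must be `Yes`. The following sketch checks them from the vertical `\G` output. The `(Slave|Replica)` alternation is included because `SHOW REPLICA STATUS` (MySQL 8.0.22+) reports Replica_* names instead. `replication_ok` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Read "SHOW SLAVE STATUS\G" output on stdin; exit 0 only if both the
# IO thread and the SQL thread report "Yes".
replication_ok() {
  awk -F': ' '
    $1 ~ /^ *(Slave|Replica)_IO_Running$/  { io  = $2 }
    $1 ~ /^ *(Slave|Replica)_SQL_Running$/ { sql = $2 }
    END { exit !(io == "Yes" && sql == "Yes") }
  '
}
```

Run `mysql -uroot -p -e 'SHOW SLAVE STATUS\G' | replication_ok && echo 'replication OK'` on each of the two nodes.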

III. Verification

1) On [master-1], run:

```sql
# create a test database db_test
create database db_test;
# switch to db_test
USE db_test;
# create a test table tb_user
create table tb_user (id int,name varchar(20));
# insert a row
insert into tb_user(id,name) values (1, 'tomcat');
# query the data
select * from tb_user;
```

2) On [master-2], run:

#使用db_test

USE db_test;

#查看数据

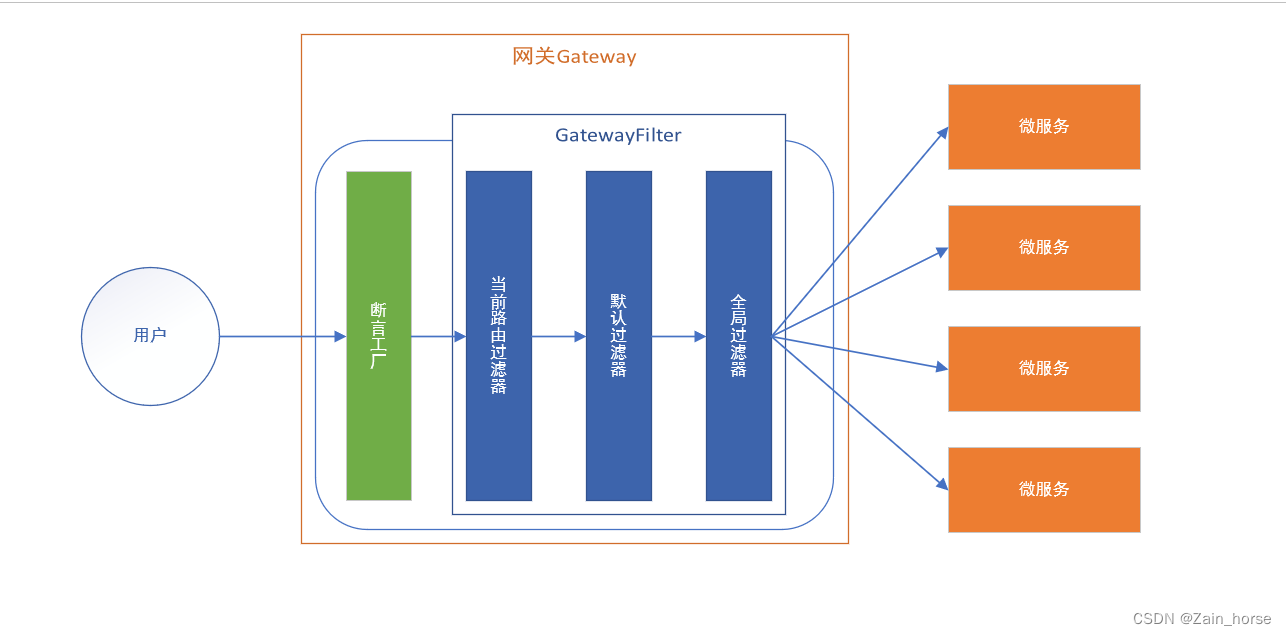

select * from tb_user;四、keep-alive 配置VIP

See also this related article:

https://blog.51cto.com/u_15906694/6274799

1) Install the dependency packages on master-1 and master-2

```shell
yum install -y curl gcc openssl-devel libnl3-devel net-snmp-devel
```

2) Install keepalived with yum on master-1 and master-2

```shell
yum install -y keepalived
```

3) Configure keepalived.conf on master-1

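`state` is either MASTER or BACKUP. By default, the node with the higher priority (here the MASTER, priority 100) preempts the VIP.

The plan in this setup: master-1 serves traffic. If master-1 goes down, the VIP floats to master-2, which continues serving.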

```shell
cd /etc/keepalived/
# back up the original file
mv keepalived.conf keepalived.conf.bak
# write a new keepalived.conf (content below)
vim keepalived.conf
```

keepalived.conf file:

```
global_defs {
    router_id MySQL-Master-1
}

vrrp_instance MAIN {
    state MASTER
    interface ens33
    virtual_router_id 1
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass hsj
    }
    virtual_ipaddress {
        172.50.2.139
    }
}

# virtual_server: the IP is the VIP configured above, the port is the MySQL port
virtual_server 172.50.2.139 3306 {
    delay_loop 6
    lb_algo rr
    lb_kind NAT
    persistence_timeout 50
    protocol TCP
    # real_server: the physical machine's actual IP and MySQL port
    real_server 172.50.2.41 3306 {
        # script to run when the connection to this IP/port fails
        notify_down /etc/keepalived/shutdown.sh
        TCP_CHECK {
            # the physical machine's IP
            connect_ip 172.50.2.41
            # the physical machine's port
            connect_port 3306
            connect_timeout 3
            nb_get_retry 3
            delay_before_retry 3
        }
    }
}
```

4) Configure keepalived.conf on master-2

```shell
cd /etc/keepalived/
# back up the original file
mv keepalived.conf keepalived.conf.bak
# write a new keepalived.conf (content below)
vim keepalived.conf
```

keepalived.conf file:

```
global_defs {
    router_id MySQL-Master-2
}

vrrp_instance MAIN {
    state BACKUP
    # run ifconfig to find your network interface name
    interface ens33
    virtual_router_id 1
    priority 90
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass hsj
    }
    virtual_ipaddress {
        172.50.2.139
    }
}

# virtual_server: the IP is the VIP configured above, the port is the MySQL port
virtual_server 172.50.2.139 3306 {
    delay_loop 6
    lb_algo rr
    lb_kind NAT
    persistence_timeout 50
    protocol TCP
    # real_server: the physical machine's actual IP and MySQL port
    real_server 172.50.2.42 3306 {
        # script to run when the connection to this IP/port fails
        notify_down /etc/keepalived/shutdown.sh
        TCP_CHECK {
            # the physical machine's IP
            connect_ip 172.50.2.42
            # the physical machine's port
            connect_port 3306
            connect_timeout 3
            nb_get_retry 3
            delay_before_retry 3
        }
    }
}
```

shutdown.sh file:

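```shell
#!/bin/bash
# Called when the MySQL service fails: stop keepalived so that the VIP moves to the other MySQL host
killall keepalived
```

Make the script executable with `chmod +x /etc/keepalived/shutdown.sh`, otherwise keepalived cannot run it.

Note: if firewalld is enabled, allow the VRRP protocol (keepalived relies on it for high availability):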

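```shell
# --in-interface must be the NIC keepalived uses (ens33 in keepalived.conf above);
# replace enp4s0 accordingly, otherwise the rule never matches
firewall-cmd --direct --permanent --add-rule ipv4 filter INPUT 0 --in-interface enp4s0 --destination 224.0.0.18 --protocol vrrp -j ACCEPT
firewall-cmd --reload
```

PS: In practice the command above did not help. Here is what I currently observe. With the firewall disabled on both nodes, manually killing keepalived on the master node promotes the backup node. If the master node is then restarted, the VIP floats back to it. That is not what I want. I want the VIP to stay on the backup node even after the old master restarts, and to move back to the master only when the backup node goes down. With the firewall enabled, the backup node does not receive the master's advertisements when it starts, so it grabs the VIP. The VIP then sits on the backup node while keepalived on both machines is in the MASTER state. From that point, even if the backup node goes down, the VIP does not move: the master node still believes it is MASTER, so it never tries to take the VIP over. The VIP becomes unreachable, and so does the MySQL service. I'll solve this later when I have time; recording it here for now!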
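The behavior the PS asks for, where the VIP stays on the surviving node when the old master comes back, is what keepalived calls non-preemptive mode. It is set with the `nopreempt` keyword in `vrrp_instance`, which keepalived only honors when the initial `state` is BACKUP. The following sketch renders such a block for either node; only the priority differs between the two. It has not been verified on the author's machines, and `render_keepalived_conf` is a hypothetical helper. It does not fix the split-brain case: for that, the two nodes must actually receive each other's VRRP advertisements (multicast 224.0.0.18), so the firewall rule has to match the real interface.

```shell
#!/usr/bin/env bash
# Print a non-preemptive vrrp_instance config. nopreempt only takes effect
# with state BACKUP, so both nodes use BACKUP and differ only in priority.
# Args: ROUTER_ID INTERFACE PRIORITY [VIP]
render_keepalived_conf() {
  local router_id="$1" iface="$2" priority="$3" vip="${4:-172.50.2.139}"
  cat <<EOF
global_defs {
    router_id ${router_id}
}

vrrp_instance MAIN {
    state BACKUP
    nopreempt
    interface ${iface}
    virtual_router_id 1
    priority ${priority}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass hsj
    }
    virtual_ipaddress {
        ${vip}
    }
}
EOF
}
```

For example, run `render_keepalived_conf MySQL-Master-1 ens33 100` on master-1 and `render_keepalived_conf MySQL-Master-2 ens33 90` on master-2. Then append the unchanged virtual_server section from the configs above.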

5) Start keepalived on both master-1 and master-2

```shell
systemctl start keepalived    # start the keepalived service
systemctl stop keepalived     # stop the keepalived service
systemctl enable keepalived   # start keepalived at boot
systemctl disable keepalived  # do not start keepalived at boot
```
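During the failover tests it helps to know which node currently holds the VIP. keepalived adds the VIP as an extra address on the interface, so it appears in `ip -4 addr show dev ens33`. The following sketch checks that output. It reads from stdin so it can be tested offline. `holds_vip` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Read "ip -4 addr show" output on stdin; exit 0 if VIP is listed as an address.
# -F matches the text literally, so the dots are not regex wildcards.
holds_vip() {
  local vip="$1"
  grep -Fq "inet ${vip}/"
}
```

On each node, `ip -4 addr show dev ens33 | holds_vip 172.50.2.139 && echo 'this node holds the VIP'`.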

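6) Test VIP failover when master-1 goes down

Shut down the master-1 host to simulate a master-1 failure.

Connected through the VIP 172.50.2.139, database operations still work. Run an insert:

```sql
insert into tb_user(id,name) values (9, '听妈妈的话');
```

7) Test VIP failover when master-2 goes down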

Shut down the master-2 host to simulate a master-2 failure.

Connected through the VIP 172.50.2.139, database operations still work. Run an insert:

```sql
insert into tb_user(id,name) values (10, '听爸爸的话');
```

Closing notes:

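1. When sync_binlog=0, each transaction commit only writes the binlog (into the OS cache) and never calls fsync; the OS decides when the data reaches disk.

2. When sync_binlog=1, every transaction commit is followed by an fsync.

3. When sync_binlog=N, every commit writes the binlog, but fsync is called only after N binlog commit groups (roughly, N transactions) have accumulated.

For most applications in a typical company, we recommend setting this parameter to 1, because it guarantees data safety. If replication lag becomes a problem, you can consider a value between 100 and 1000. Setting it to 0 is strongly discouraged, because there is then no way to bound how much binlog data may be lost. For business systems with strict data-safety requirements, relaxing this parameter is not really an option.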
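The trade-off can be made concrete by counting how many binlog fsync() calls each setting causes for a batch of commits. This sketch simplifies by assuming each transaction forms its own binlog commit group; with group commit, several transactions can share one fsync. `binlog_fsyncs` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Number of binlog fsync() calls MySQL issues for COMMITS commits under a given
# sync_binlog value, assuming one transaction per binlog commit group.
binlog_fsyncs() {
  local commits="$1" sync_binlog="$2"
  if (( sync_binlog == 0 )); then
    echo 0                              # MySQL never fsyncs; the OS flushes on its own
  else
    echo $(( commits / sync_binlog ))   # one fsync per sync_binlog commit groups
  fi
}
```

For 1000 commits this gives 1000 fsyncs with sync_binlog=1, 10 with sync_binlog=100, and none issued by MySQL with sync_binlog=0. Fewer fsyncs mean less I/O per commit, but more committed transactions whose binlog events may exist only in the OS cache when the machine loses power.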

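```sql
-- Check the number of parallel applier threads on the replica; 0 means single-threaded
-- (the default before MySQL 8.0.27, which changed the default to 4)
show global variables like 'slave_parallel_workers';
-- Choose the number of threads according to your actual workload
set global slave_parallel_workers = 4;
-- Check the parallelization mode: DATABASE (one thread per database, the default before 8.0.27) or LOGICAL_CLOCK
show global variables like '%slave_parallel_type%';
-- Stop replication
stop slave;
-- Set the value
set global slave_parallel_type='LOGICAL_CLOCK';
-- Start replication
start slave;
-- Check the threads
show full processlist;
```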

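Deploying on Alibaba Cloud requires a HaVip plus an Elastic IP (EIP).

High-Availability Virtual IP Address (HaVip): the HaVip feature is in public beta; you can apply for it yourself in the Alibaba Cloud Quota Center console.

(As of 2023-01-12)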

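HaVip has the following characteristics:

1. A HaVip is a floating private IP that is not tied to any specific ECS instance or elastic network interface (ENI). An ECS instance or ENI can change its binding to the HaVip through ARP announcements.

2. A HaVip belongs to a subnet and can only be bound to ECS instances or ENIs under the same vSwitch.

3. One HaVip can be bound to up to 10 ECS instances or up to 10 ENIs at the same time, but a single HaVip cannot be bound to both ECS instances and ENIs.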