Hive and DataX: collected record counts don't match

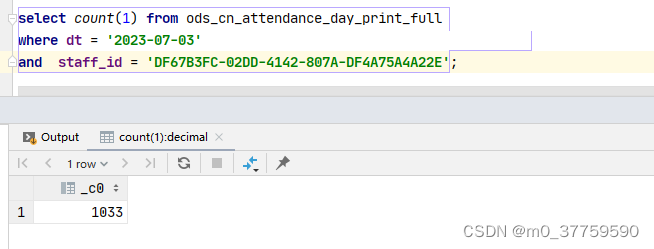

While reconciling the data I found some counts that didn't match. In Hive, there were only 1033 records with staff_id = 'DF67B3FC-02DD-4142-807A-DF4A75A4A22E'.

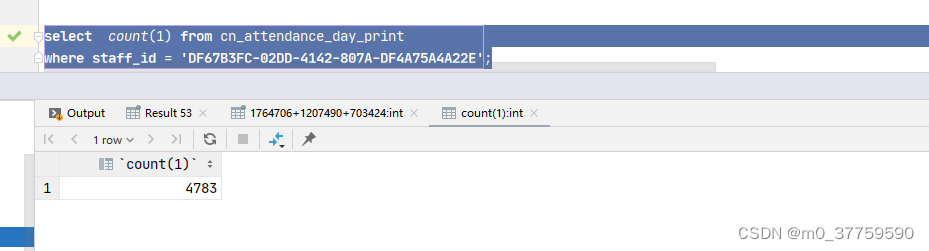

In MySQL, however, the same staff_id = 'DF67B3FC-02DD-4142-807A-DF4A75A4A22E' had 4783 records (4781 as of yesterday).

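Even allowing for the lag of offline (batch) collection, the numbers should not differ this much, so something had clearly gone wrong.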

Tracking down the cause:

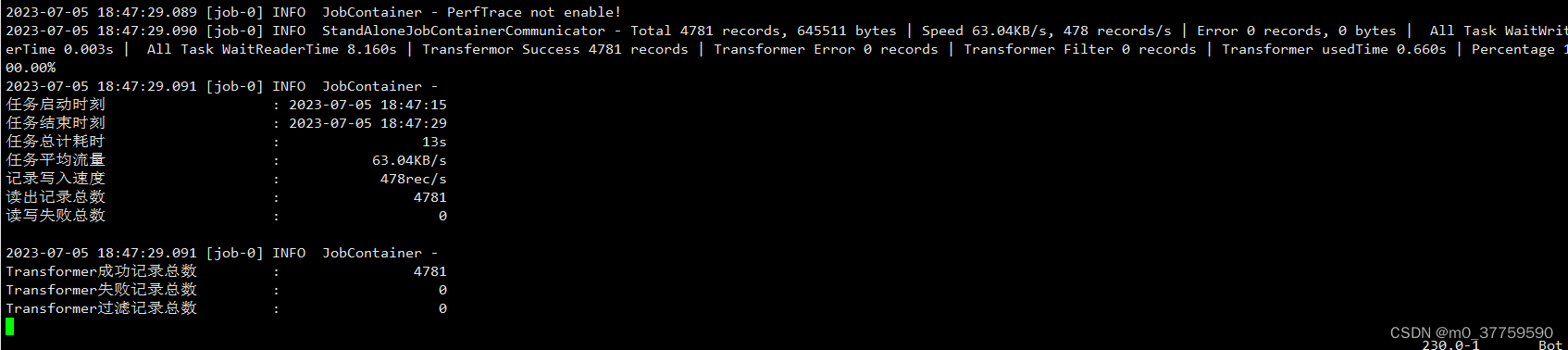

In DataX, I modified the job file for cn_attendance_day_print so that it only pulls records with staff_id = 'DF67B3FC-02DD-4142-807A-DF4A75A4A22E'.

The job did collect exactly 4781 records.

So the DataX-to-HDFS pipeline is working correctly.

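The next step was to inspect the data that had just been collected. Since I had already deleted those earlier records, I didn't bother reproducing the problem; below is just the final outcome.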
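A minimal Python sketch of that inspection, assuming the gzip-compressed text file written by hdfswriter has been copied to the local machine (for example with `hdfs dfs -get`) and that the position of the staff_id column is known. The helper names `read_gzip_lines` and `case_variants` are hypothetical. It groups the values of one column by their upper-cased form and reports only the keys that occur in more than one spelling.

```python
import gzip
from collections import defaultdict

# Matches "fieldDelimiter": "\u0001" in the hdfswriter configuration.
FIELD_DELIMITER = "\x01"


def read_gzip_lines(path):
    """Yield decoded text lines from a locally copied gzip file."""
    with gzip.open(path, "rt", encoding="utf-8") as f:
        yield from f


def case_variants(lines, key_index):
    """Return {UPPER_VALUE: {spelling: count}} for keys seen in more than one case."""
    groups = defaultdict(lambda: defaultdict(int))
    for line in lines:
        fields = line.rstrip("\r\n").split(FIELD_DELIMITER)
        if key_index >= len(fields):
            continue  # skip short or malformed rows
        value = fields[key_index]
        groups[value.upper()][value] += 1
    return {key: dict(spellings) for key, spellings in groups.items() if len(spellings) > 1}
```

For example, `case_variants(read_gzip_lines("cn_attendance_day_print.gz"), key_index=1)` would list every staff_id stored in mixed spellings; the file name and column index here are placeholders to adjust to the real table.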

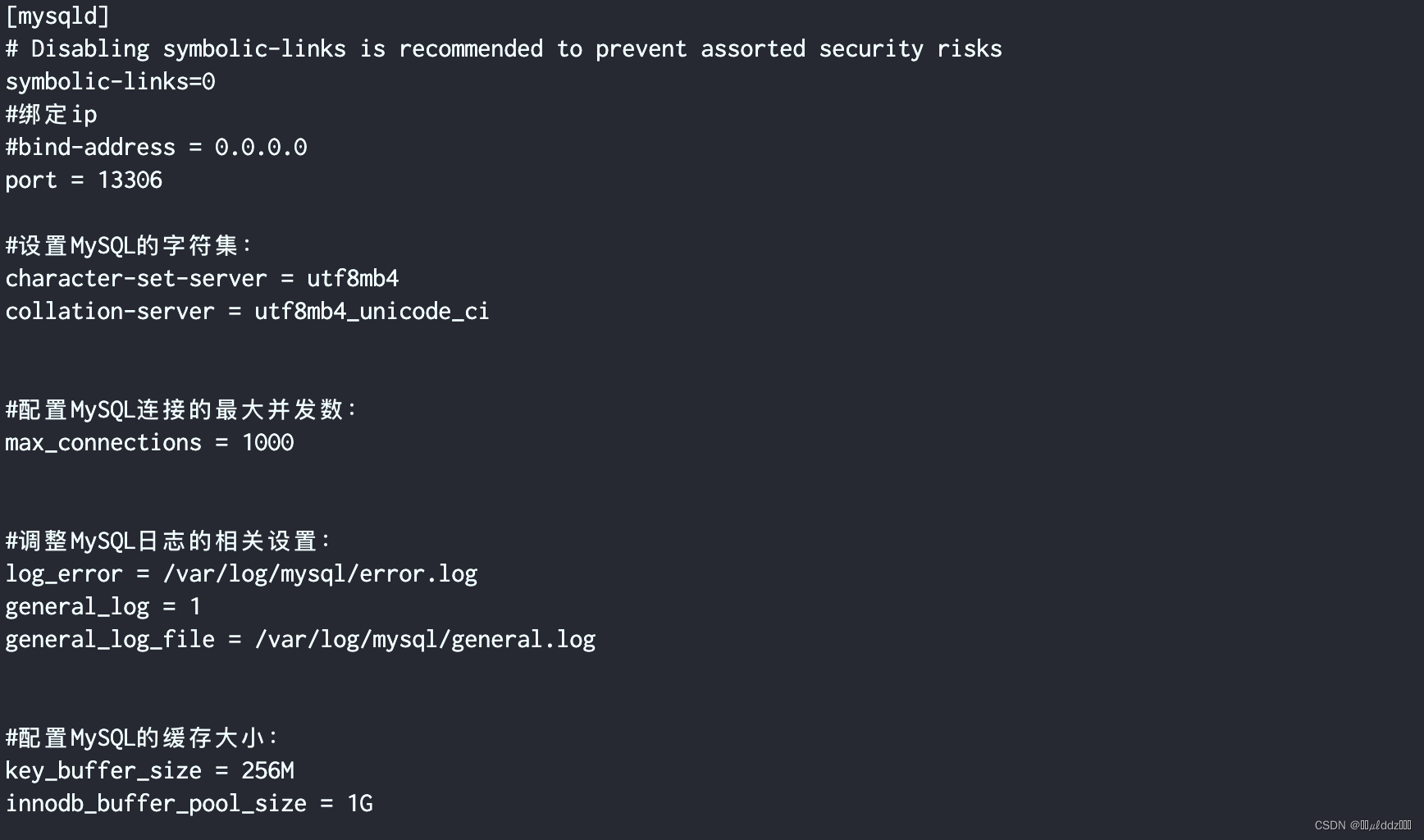

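It eventually turned out to be a letter-case issue: string comparison in Hive is case-sensitive, but the MySQL side here is configured to be case-insensitive globally. The MySQL query therefore matched every spelling of the staff_id regardless of case, while the Hive query matched only the exact spelling.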
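To see why the two counts diverge, here is a small Python simulation of the two comparison rules. It is only an approximation: `casefold()` stands in for a MySQL case-insensitive (`_ci`) collation, which is adequate for ASCII strings such as these UUIDs but does not reproduce every collation rule. The sample values are made up.

```python
def count_case_sensitive(values, target):
    """Hive-style `=` on STRING: an exact, case-sensitive match."""
    return sum(1 for v in values if v == target)


def count_case_insensitive(values, target):
    """Rough stand-in for a MySQL *_ci collation on ASCII values."""
    folded = target.casefold()
    return sum(1 for v in values if v.casefold() == folded)


# Hypothetical sample: the same staff_id stored in two different cases.
staff_ids = [
    "DF67B3FC-02DD-4142-807A-DF4A75A4A22E",
    "df67b3fc-02dd-4142-807a-df4a75a4a22e",
    "DF67B3FC-02DD-4142-807A-DF4A75A4A22E",
]
TARGET = "DF67B3FC-02DD-4142-807A-DF4A75A4A22E"

print(count_case_insensitive(staff_ids, TARGET))  # 3: what a case-insensitive MySQL reports
print(count_case_sensitive(staff_ids, TARGET))    # 2: what Hive reports
```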

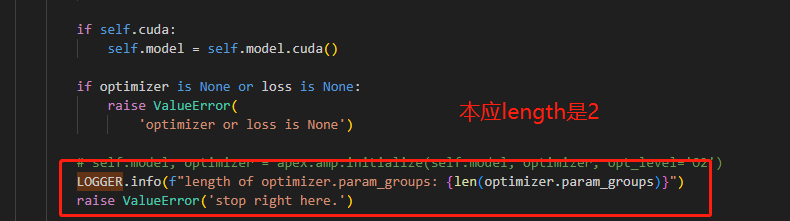

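Solution

In DataX, convert all string-type data to upper case (or all of it to lower case) during collection, so that values MySQL treats as equal are also equal in Hive.

Example: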

```json
{
  "job": {
    "content": [{
      "transformer": [{
        "parameter": {
          "code": "for(int i=0;i<record.getColumnNumber();i++){if(record.getColumn(i).getByteSize()!=0){Column column = record.getColumn(i); def str = column.asString(); def newStr=null; newStr=str.replaceAll(\"[\\r\\n]\",\"\").toUpperCase(); record.setColumn(i, new StringColumn(newStr)); };};return record;",
          "extraPackage": []
        },
        "name": "dx_groovy"
      }],
      "writer": {
        "parameter": {
          "writeMode": "append",
          "fieldDelimiter": "\u0001",
          "column": [
            {"type": "string", "name": "id"},
            {"type": "string", "name": "username"},
            {"type": "string", "name": "user_id"},
            {"type": "string", "name": "superior_id"},
            {"type": "string", "name": "finger_print_number"}
          ],
          "path": "${targetdir}",
          "fileType": "text",
          "defaultFS": "hdfs://mycluster:8020",
          "compress": "gzip",
          "fileName": "cn_staff"
        },
        "name": "hdfswriter"
      },
      "reader": {
        "parameter": {
          "username": "dw_readonly",
          "column": ["id", "username", "user_id", "superior_id", "finger_print_number"],
          "connection": [{
            "table": ["cn_staff"],
            "jdbcUrl": ["jdbc:mysql://*******"]
          }],
          "password": "******",
          "splitPk": ""
        },
        "name": "mysqlreader"
      }
    }],
    "setting": {
      "speed": {
        "channel": 3
      },
      "errorLimit": {
        "record": 0,
        "percentage": 0.02
      }
    }
  }
}
```
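Before putting a transformer into the job, it can help to check the per-column logic locally. The following is a minimal Python sketch, not DataX code: `normalize_record` is a hypothetical helper that applies the logic the `dx_groovy` transformer is meant to perform, removing `\r`/`\n` and upper-casing each non-empty column.

```python
import re

# Same character class as the Groovy regex "[\r\n]" in the job file.
_LINE_BREAKS = re.compile(r"[\r\n]")


def normalize_record(record):
    """Strip CR/LF from every non-empty column and upper-case it.

    Empty strings and None are passed through unchanged, similar to the
    Groovy code skipping columns whose byte size is 0.
    """
    normalized = []
    for value in record:
        if value is not None and value != "":
            value = _LINE_BREAKS.sub("", str(value)).upper()
        normalized.append(value)
    return normalized


print(normalize_record(["1", "df67b3fc-02dd-4142-807a-df4a75a4a22e\r\n", "", None]))
# ['1', 'DF67B3FC-02DD-4142-807A-DF4A75A4A22E', '', None]
```

Upper-casing every column also rewrites free-text fields such as `username`. If that is not wanted, one option is to apply the conversion only to identifier columns like `id`, `user_id`, and `superior_id`.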
