DM

- Data Migration

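- Architecture and principles

- Use cases

- Install the components

- Edit the initial configuration file

- Run the deploy command

- List DM clusters

- Check the DM cluster status

- Start the cluster

- DM configuration

- Overview

- Upstream database (data source) configuration

- Task configuration

- Filter configuration

- Merging sharded schemas and tables

- Performance tuning

- Common issues

- dmctl

- Check and start a task

- Pause a task

- Resume a task

- Query a task

- Stop a task

- Lab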

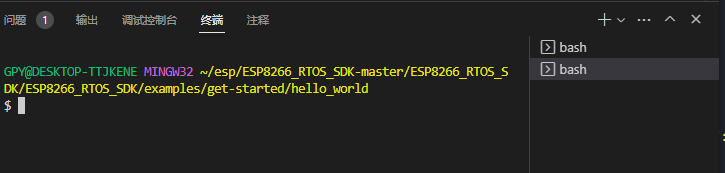

- Deploy a DM cluster

- Replicate data from MySQL to TiDB

Data Migration

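TiDB Data Migration (DM) is a convenient data migration tool. It supports full data migration and incremental data replication from MySQL-protocol-compatible databases (MySQL, MariaDB, Aurora MySQL) to TiDB. It can also filter tables during migration and merge sharded schemas and tables into TiDB.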

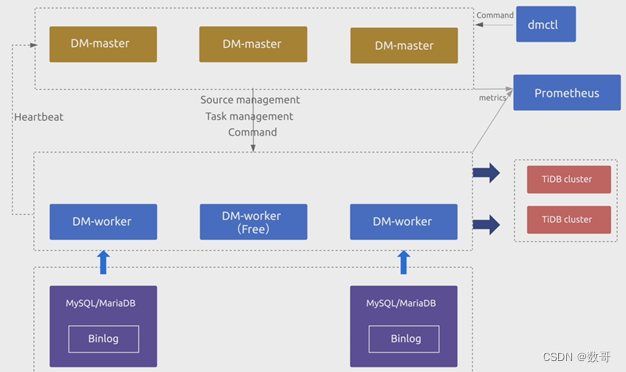

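Architecture and principles

- dmctl is the command-line tool used to control a DM cluster.

- dm-master manages and schedules the operations of data migration tasks. It supports a highly available deployment.

- dm-worker executes the actual data migration tasks. Each DM-worker serves only one upstream MySQL instance at a time; extra DM-worker nodes act as redundant standbys for high availability.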
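The binding rule above (one upstream source per DM-worker, spare workers on standby) can be modeled in a few lines. The following Python sketch is a simplified illustration, not DM's actual scheduling code; `bind_sources` and `failover` are hypothetical helpers, and the worker names only mimic the `dm-<host>-<port>` names that appear in the dmctl output later in these notes.

```python
def bind_sources(sources, workers):
    """Bind every upstream source to its own DM-worker.

    Returns (bindings, free_workers). Simplified model, not DM's scheduler.
    """
    if len(workers) < len(sources):
        raise ValueError("each upstream source needs its own DM-worker")
    bindings = dict(zip(sources, workers))  # one worker per source ("Bound")
    free_workers = list(workers[len(sources):])  # spare workers stay "Free"
    return bindings, free_workers


def failover(bindings, free_workers, failed_worker):
    """Move the source served by failed_worker to the first free worker.

    Returns the affected source-id, or None if failed_worker was not bound.
    """
    for source, worker in bindings.items():
        if worker == failed_worker:
            if not free_workers:
                raise RuntimeError(f"no free DM-worker can take over {source}")
            bindings[source] = free_workers.pop(0)
            return source
    return None


if __name__ == "__main__":
    bindings, free = bind_sources(
        ["mysql-replica-01", "mysql-replica-02"],
        ["dm-192.168.16.13-8262", "dm-192.168.16.13-8263", "dm-192.168.16.13-8264"],
    )
    print(bindings, free)
    print(failover(bindings, free, "dm-192.168.16.13-8262"), bindings)
```

Running it binds the two sources to the first two workers, keeps the third one free, and moves `mysql-replica-01` to the spare when its worker fails.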

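Use cases

1. Migrating data from MySQL to TiDB

2. Migrating tables whose structure differs between the source and the target (heterogeneous tables)

3. Merging and migrating sharded schemas and tables

Install the components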

tiup install dm dmctl

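Edit the initial configuration file (topology.yaml)

# Global variables apply to the other components in the configuration. If a specific value is missing from a component instance, the corresponding global variable is used as the default.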

global:

user: "tidb"

ssh_port: 22

deploy_dir: "/dm-deploy"

data_dir: "/dm-data"

server_configs:

master:

log-level: info

# rpc-timeout: "30s"

# rpc-rate-limit: 10.0

# rpc-rate-burst: 40

worker:

log-level: info

master_servers:

- host: 10.0.1.11

name: master1

ssh_port: 22

port: 8261

# peer_port: 8291

# deploy_dir: "/dm-deploy/dm-master-8261"

# data_dir: "/dm-data/dm-master-8261"

# log_dir: "/dm-deploy/dm-master-8261/log"

# numa_node: "0,1"

# The following settings override the values in `server_configs.master`.

config:

log-level: info

# rpc-timeout: "30s"

# rpc-rate-limit: 10.0

# rpc-rate-burst: 40

- host: 10.0.1.18

name: master2

ssh_port: 22

port: 8261

- host: 10.0.1.19

name: master3

ssh_port: 22

port: 8261

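# If DM cluster high availability is not required, a single DM-master node is enough, and the number of DM-worker nodes must be no less than the number of upstream MySQL/MariaDB instances to migrate.

# If DM cluster high availability is required, 3 DM-master nodes are recommended, and the number of DM-worker nodes should be greater than the number of upstream MySQL/MariaDB instances (for example, 2 more DM-workers than upstream instances).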

worker_servers:

- host: 10.0.1.12

ssh_port: 22

port: 8262

# deploy_dir: "/dm-deploy/dm-worker-8262"

# log_dir: "/dm-deploy/dm-worker-8262/log"

# numa_node: "0,1"

# The following settings override the values in `server_configs.worker`.

config:

log-level: info

- host: 10.0.1.19

ssh_port: 22

port: 8262

monitoring_servers:

- host: 10.0.1.13

ssh_port: 22

port: 9090

# deploy_dir: "/tidb-deploy/prometheus-8249"

# data_dir: "/tidb-data/prometheus-8249"

# log_dir: "/tidb-deploy/prometheus-8249/log"

grafana_servers:

- host: 10.0.1.14

port: 3000

# deploy_dir: /tidb-deploy/grafana-3000

alertmanager_servers:

- host: 10.0.1.15

ssh_port: 22

web_port: 9093

# cluster_port: 9094

# deploy_dir: "/tidb-deploy/alertmanager-9093"

# data_dir: "/tidb-data/alertmanager-9093"

# log_dir: "/tidb-deploy/alertmanager-9093/log"
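The sizing comments and the commented-out directory defaults in this topology follow simple rules, restated below as a Python sketch. `recommended_topology` and `default_dirs` are hypothetical helpers; the two spare workers come from the example in the comment, and the `<role>-<port>` directory pattern is inferred from commented defaults such as `/dm-deploy/dm-master-8261` rather than taken from TiUP's source.

```python
def recommended_topology(num_sources, high_availability, spare_workers=2):
    """Return (dm_master_count, dm_worker_count) following the sizing comments."""
    if num_sources < 1:
        raise ValueError("at least one upstream MySQL/MariaDB instance is required")
    if not high_availability:
        # One DM-master; at least one DM-worker per upstream instance.
        return 1, num_sources
    # Three DM-masters; more DM-workers than upstream instances.
    return 3, num_sources + spare_workers


def default_dirs(global_cfg, role, port, instance_cfg=None):
    """Resolve an instance's directories; instance values override the global ones."""
    instance_cfg = instance_cfg or {}
    name = f"{role}-{port}"  # e.g. "dm-master-8261"
    deploy_dir = instance_cfg.get("deploy_dir", f"{global_cfg['deploy_dir']}/{name}")
    data_dir = instance_cfg.get("data_dir", f"{global_cfg['data_dir']}/{name}")
    log_dir = instance_cfg.get("log_dir", f"{deploy_dir}/log")
    return {"deploy_dir": deploy_dir, "data_dir": data_dir, "log_dir": log_dir}


if __name__ == "__main__":
    print(recommended_topology(2, high_availability=True))  # (3, 4)
    print(default_dirs({"deploy_dir": "/dm-deploy", "data_dir": "/dm-data"}, "dm-master", 8261))
```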

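Run the deploy command

Using the latest version is recommended. Replace ${name} with the cluster name and ${version} with the DM version.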

tiup dm deploy ${name} ${version} ./topology.yaml --user root

查看DM集群

tiup dm list

检查DM集群情况

tiup dm display ${name}

启动集群

tiup dm start ${name}

DM配置

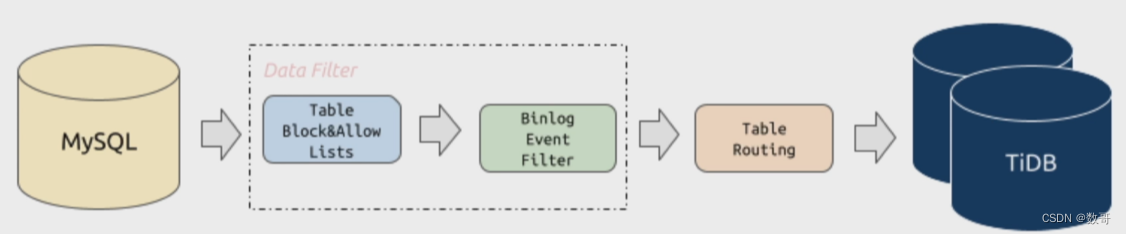

概览

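- block-allow-list (table block/allow lists): filters schemas and tables

- binlog event filter: filters SQL operations by binlog event type

- table routing: maps upstream tables to downstream tables, for example mapping table t1 in MySQL to table ti1 in TiDB; it is most useful for merging shards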

Upstream database (data source) configuration

1. Edit the configuration file

# MySQL1 Configuration.

source-id: "mysql-replica-01"

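# Whether DM-worker pulls binlog using global transaction identifiers (GTID). This requires GTID mode to be enabled on the upstream MySQL.

enable-gtid: false

# Whether to enable relay log

enable-relay: false

relay-binlog-name: '' # Name of the upstream binlog file to start pulling from

relay-binlog-gtid: '' # Upstream GTID to start pulling from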

from:

host: "172.16.10.81"

user: "root"

password: "VjX8cEeTX+qcvZ3bPaO4h0C80pe/1aU="

port: 3306

2. Load the configuration into DM

tiup dmctl --master-addr=192.168.16.13:8261 operate-source create mysql-source-conf1.yaml

tiup dmctl --master-addr=192.168.16.13:8261 operate-source create mysql-source-conf2.yaml

3. Verify

[root@tidb2 ~]# tiup dmctl --master-addr=192.168.16.13:8261 operate-source show

tiup is checking updates for component dmctl ...

Starting component `dmctl`: /root/.tiup/components/dmctl/v7.2.0/dmctl/dmctl --master-addr=192.168.16.13:8261 operate-source show

{

"result": true,

"msg": "",

"sources": [

{

"result": true,

"msg": "",

"source": "mysql-replica-01",

"worker": "dm-192.168.16.13-8263"

},

{

"result": true,

"msg": "",

"source": "mysql-replica-02",

"worker": "dm-192.168.16.13-8262"

}

]

}
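When scripting checks around `operate-source show`, the source-to-worker mapping can be pulled out of the JSON shown above. This Python sketch assumes the output has exactly that shape (`result`, `msg`, and a `sources` list with `source` and `worker` fields) and skips any banner lines printed before the JSON; `source_worker_map` is a hypothetical helper name.

```python
import json


def source_worker_map(output):
    """Return {source-id: worker} from `dmctl operate-source show` output.

    Non-JSON lines printed before the JSON body (for example the
    "tiup is checking updates ..." banner) are skipped.
    """
    body = output[output.index("{"):]  # start at the first JSON brace
    data = json.loads(body)
    if not data.get("result"):
        raise RuntimeError(data.get("msg") or "operate-source show failed")
    return {s["source"]: s["worker"] for s in data.get("sources", []) if s.get("result")}


SAMPLE = """{
  "result": true,
  "msg": "",
  "sources": [
    {"result": true, "msg": "", "source": "mysql-replica-01", "worker": "dm-192.168.16.13-8263"},
    {"result": true, "msg": "", "source": "mysql-replica-02", "worker": "dm-192.168.16.13-8262"}
  ]
}"""

if __name__ == "__main__":
    print(source_worker_map("tiup is checking updates for component dmctl ...\n" + SAMPLE))
```

For example, feed it the captured output of `tiup dmctl --master-addr=192.168.16.13:8261 operate-source show`; if the tiup banner ends up in the captured text, it is skipped.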

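Task configuration

# Task name. Tasks running at the same time must have different names.

name: "test"

# Migration mode: full + incremental (all).

task-mode: "all"

# Downstream TiDB configuration.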

target-database:

host: "172.16.10.83"

port: 4000

user: "root"

password: ""

# Configuration of all upstream MySQL instances required by this migration task.

mysql-instances:

-

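# Upstream instance or replication group ID. It must match the `source-id` in the data source configuration file (in DM 1.0, the `source_id` in `inventory.ini` or the `source-id` in `dm-master.toml`).

source-id: "mysql-replica-01"

# Name of the block/allow list rule for the schemas or tables to migrate. It references the global `block-allow-list` configuration below.

block-allow-list: "global" # Use black-white-list if the DM version is earlier than v2.0.0-beta.2.

# Name of the dump unit configuration. It references the global dump unit configuration.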

mydumper-config-name: "global"

-

source-id: "mysql-replica-02"

block-allow-list: "global" # Use black-white-list if the DM version is earlier than v2.0.0-beta.2.

mydumper-config-name: "global"

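# Global block/allow list configuration, referenced by each instance through its rule name.

block-allow-list: # Use black-white-list if the DM version is earlier than v2.0.0-beta.2.

global:

do-tables: # Allow list of upstream tables to migrate.

- db-name: "test_db" # Schema of the table to migrate.

tbl-name: "test_table" # Name of the table to migrate.

# Global dump unit configuration, referenced by each instance through its configuration name.

mydumpers:

global:

extra-args: ""

- block-allow-list: filters by schema and table

- filter-rules: filters by statement (binlog event) type

- route-rules: routing, that is, which downstream schema and table each upstream table goes to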

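Filter configuration

block-allow-list:

bw-rule-1: # Rule name

do-dbs: ["test.*", "user"] # Schemas to migrate. Wildcards "*" and "?" are supported. Configure only one of do-dbs and ignore-dbs; if both are set, only do-dbs takes effect.

# ignore-dbs: ["mysql", "account"] # Schemas to ignore. Wildcards "*" and "?" are supported.

do-tables: # Tables to migrate. Configure only one of do-tables and ignore-tables; if both are set, only do-tables takes effect.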

- db-name: "test.*"

tbl-name: "t.*"

- db-name: "user"

tbl-name: "information"

bw-rule-2: # Rule name

ignore-tables: # Tables to ignore

- db-name: "user"

tbl-name: "log"
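The block-allow-list rules above are evaluated schema first, then table. The Python sketch below follows that two-level order as described in DM's block/allow list documentation: `do-dbs` takes precedence over `ignore-dbs`, and `do-tables` over `ignore-tables`. It is an approximation: `fnmatch.fnmatchcase` stands in for DM's wildcard matcher, regex patterns (which DM marks with a leading `~`) are not modeled, and `is_migrated` is a hypothetical name. With plain wildcards the `.` in a pattern such as `test.*` is a literal dot, so the example uses `test*` to mean "starts with test".

```python
from fnmatch import fnmatchcase


def _any_match(name, patterns):
    return any(fnmatchcase(name, p) for p in patterns)


def is_migrated(db, table, rule):
    """Return True if db.table passes one block-allow-list rule."""
    # Schema level: do-dbs, if set, takes precedence over ignore-dbs.
    if rule.get("do-dbs"):
        if not _any_match(db, rule["do-dbs"]):
            return False
    elif rule.get("ignore-dbs") and _any_match(db, rule["ignore-dbs"]):
        return False

    # Table level: do-tables, if set, takes precedence over ignore-tables.
    def table_hit(entries):
        return any(
            fnmatchcase(db, e["db-name"]) and fnmatchcase(table, e["tbl-name"])
            for e in entries
        )

    if rule.get("do-tables"):
        return table_hit(rule["do-tables"])
    if rule.get("ignore-tables"):
        return not table_hit(rule["ignore-tables"])
    return True


if __name__ == "__main__":
    rule = {
        "do-dbs": ["test*", "user"],
        "do-tables": [
            {"db-name": "test*", "tbl-name": "t*"},
            {"db-name": "user", "tbl-name": "information"},
        ],
    }
    for db, table in [("user", "information"), ("user", "log"), ("test_a", "t1"), ("mysql", "user")]:
        print(f"{db}.{table}", is_migrated(db, table, rule))
```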

- filters:

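filters: # Rules that filter specific operations from the data source; multiple rules can be defined

filter-rule-1: # Rule name

schema-pattern: "test_*" # Matches schema names in the data source; wildcards "*" and "?" are supported

table-pattern: "t_*" # Matches table names in the data source; wildcards "*" and "?" are supported

events: ["truncate table", "drop table"] # Operation types to match on schemas/tables that match schema-pattern and table-pattern

action: Ignore # Whether to migrate (Do) or ignore (Ignore) the matched operations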

filter-rule-2:

schema-pattern: "test"

events: ["all dml"]

action: Do
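How a binlog event is judged against these rules can be sketched as follows. This Python model assumes two behaviors of DM's binlog event filter: a matching Ignore rule wins immediately, and a Do rule acts as an allow list, so an event type it does not list is dropped for the matching schema/table. It also expands `all dml` to insert, update, and delete. Verify these semantics against the DM documentation for your version; `filter_action` is a hypothetical name and `fnmatchcase` approximates DM's wildcard matching.

```python
from fnmatch import fnmatchcase

DML_EVENTS = {"insert", "update", "delete"}


def _expand(events):
    """Expand the "all dml" shorthand into the individual DML event types."""
    expanded = set()
    for event in events:
        if event == "all dml":
            expanded |= DML_EVENTS
        else:
            expanded.add(event)
    return expanded


def filter_action(schema, table, event, rules):
    """Return "Do" or "Ignore" for one binlog event under the given filter rules."""
    for rule in rules.values():
        if not fnmatchcase(schema, rule["schema-pattern"]):
            continue
        pattern = rule.get("table-pattern")
        if pattern and not fnmatchcase(table, pattern):
            continue
        listed = event in _expand(rule["events"])
        action = rule["action"].lower()  # the notes use both "Ignore" and "ignore"
        if listed and action == "ignore":
            return "Ignore"  # a matching Ignore rule wins immediately
        if not listed and action == "do":
            return "Ignore"  # a Do rule only lets its listed events through
    return "Do"  # no rule objected


if __name__ == "__main__":
    rules = {
        "filter-rule-1": {"schema-pattern": "test_*", "table-pattern": "t_*",
                          "events": ["truncate table", "drop table"], "action": "Ignore"},
        "filter-rule-2": {"schema-pattern": "test", "events": ["all dml"], "action": "Do"},
    }
    print(filter_action("test_1", "t_a", "drop table", rules))     # Ignore
    print(filter_action("test", "orders", "insert", rules))        # Do
    print(filter_action("test", "orders", "create table", rules))  # Ignore
```

With the two rules above, `DROP TABLE` on `test_1.t_a` is ignored, while in schema `test` only DML passes, because `filter-rule-2` is a Do rule that lists only `all dml`.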

- routes

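routes: # Routing rules from data source tables to target TiDB tables; multiple rules can be defined

route-rule-1: # Rule name

schema-pattern: "test_*" # Matches schema names in the data source; wildcards "*" and "?" are supported

table-pattern: "t_*" # Matches table names in the data source; wildcards "*" and "?" are supported

target-schema: "test" # Target TiDB schema name

target-table: "t" # Target TiDB table name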

route-rule-2:

schema-pattern: "test_*"

target-schema: "test"
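Route resolution for the two rules above can be sketched like this. The sketch assumes that table-level rules (those with a `table-pattern`) are tried before schema-level rules, and that a schema-level rule renames only the schema and keeps the table name; if no rule matches, the upstream name is kept. `route` is a hypothetical helper and `fnmatchcase` approximates DM's wildcards.

```python
from fnmatch import fnmatchcase


def route(schema, table, rules):
    """Map an upstream (schema, table) to its downstream (schema, table)."""
    # Table-level rules (those with a table-pattern) are tried first.
    for rule in rules.values():
        pattern = rule.get("table-pattern")
        if pattern and fnmatchcase(schema, rule["schema-pattern"]) and fnmatchcase(table, pattern):
            return rule["target-schema"], rule.get("target-table") or table
    # Schema-level rules rename the schema and keep the table name.
    for rule in rules.values():
        if not rule.get("table-pattern") and fnmatchcase(schema, rule["schema-pattern"]):
            return rule["target-schema"], table
    return schema, table  # no rule matched: keep the upstream name


if __name__ == "__main__":
    rules = {
        "route-rule-1": {"schema-pattern": "test_*", "table-pattern": "t_*",
                         "target-schema": "test", "target-table": "t"},
        "route-rule-2": {"schema-pattern": "test_*", "target-schema": "test"},
    }
    print(route("test_1", "t_2", rules))     # ('test', 't')
    print(route("test_1", "orders", rules))  # ('test', 'orders')
    print(route("prod", "t_1", rules))       # ('prod', 't_1')
```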

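Merging sharded schemas and tables

Suppose that in the source database, the sales schema contains a sharded table split into three shards: order_beijing, order_shanghai, and order_guangzhou, and you want to migrate all of them into the order table of the sales schema in the target database. You can configure it as follows:

routes:

sale-route-rule:

schema-pattern: "sales" # The sales schema in the source database

table-pattern: "order*" # Tables in the source sales schema whose names start with "order"

target-schema: "sales" # The sales schema in the target database

target-table: "order" # The order table in the target sales schema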
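Routing all three shards into one table is also where the auto-increment conflict described under Common issues comes from: each shard numbers its rows independently. The Python sketch below, using made-up rows and hypothetical helper names, merges the shard tables matched by the rule above and reports which `id` values collide.

```python
from collections import Counter
from fnmatch import fnmatchcase


def merge_shards(shards, schema_pattern, table_pattern):
    """Concatenate rows from every (schema, table) shard matched by the route rule."""
    merged = []
    for (schema, table), rows in shards.items():
        if fnmatchcase(schema, schema_pattern) and fnmatchcase(table, table_pattern):
            merged.extend(rows)
    return merged


def duplicate_ids(rows):
    """Return the id values that appear more than once after merging."""
    counts = Counter(row["id"] for row in rows)
    return sorted(value for value, n in counts.items() if n > 1)


# Made-up sample data: each shard has its own auto-increment counter.
SHARDS = {
    ("sales", "order_beijing"): [{"id": 1}, {"id": 2}],
    ("sales", "order_shanghai"): [{"id": 1}],
    ("sales", "order_guangzhou"): [{"id": 1}, {"id": 3}],
    ("sales", "refund"): [{"id": 9}],  # not matched by order*
}

if __name__ == "__main__":
    rows = merge_shards(SHARDS, "sales", "order*")  # sale-route-rule -> sales.order
    print(len(rows), duplicate_ids(rows))  # 5 [1]
```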

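Performance tuning

- chunk-filesize (`mydumpers`, dump unit): size of each exported data file, in MB; the default is 64

- rows (`mydumpers`): number of rows per chunk when a single table is split for concurrent export

- threads (`mydumpers`): number of dump threads; the default is 4

- pool-size (`loaders`, load unit): number of threads that import the dumped files concurrently; the default is 16

- worker-count (`syncers`, sync unit): number of threads that replicate binlog events concurrently; the default is 16

- batch (`syncers`): number of DML statements per transaction sent downstream; the default is 100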
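Two of these settings translate directly into simple arithmetic. The Python sketch below is a back-of-the-envelope model, not DM code: it estimates how many files the dump unit produces for a table of a given size under `chunk-filesize`, and groups replicated statements into transactions of at most `batch` statements. The helper names are hypothetical, and real file counts also depend on row sizes and the `rows` setting.

```python
import math


def dump_files(table_size_mb, chunk_filesize_mb=64):
    """Rough number of data files the dump unit writes for one table."""
    return max(1, math.ceil(table_size_mb / chunk_filesize_mb))


def sync_transactions(statements, batch=100):
    """Group replicated DML statements into transactions of at most `batch` statements."""
    return [statements[i:i + batch] for i in range(0, len(statements), batch)]


if __name__ == "__main__":
    print(dump_files(200))  # 4 files of up to 64 MB each
    txns = sync_transactions([f"stmt-{n}" for n in range(250)])
    print(len(txns), [len(t) for t in txns])  # 3 [100, 100, 50]
```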

Common issues

1. How to handle incompatible DDL statements

tiup dmctl --master-addr ip:port handle-error test skip

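2. Handling auto-increment primary key conflicts

When DM merges sharded schemas and tables, the auto-increment primary keys of different shards may conflict. Possible solutions:

- Remove the PRIMARY KEY attribute from the auto-increment column

- Use a composite primary key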
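The difference between the two fixes comes down to which columns must be unique in the merged table. The Python sketch below checks made-up merged rows against two candidate keys: a primary key on `id` alone, which fails, and a composite key that adds a column identifying the shard (called `shard` here purely for illustration), which holds. Removing the PRIMARY KEY attribute altogether simply means no uniqueness check is applied to `id`.

```python
def violates_unique(rows, key_columns):
    """Return True if two rows share the same values for key_columns."""
    seen = set()
    for row in rows:
        key = tuple(row[column] for column in key_columns)
        if key in seen:
            return True
        seen.add(key)
    return False


# Made-up merged rows; "shard" is a hypothetical column identifying the source shard.
MERGED = [
    {"shard": "order_beijing", "id": 1},
    {"shard": "order_beijing", "id": 2},
    {"shard": "order_shanghai", "id": 1},
]

if __name__ == "__main__":
    print(violates_unique(MERGED, ["id"]))           # True: PRIMARY KEY (id) conflicts
    print(violates_unique(MERGED, ["shard", "id"]))  # False: composite key (shard, id) holds
```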

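3. Version compatibility

| Data source | Version | Support level |
|---|---|---|
| MySQL | 5.6 | Officially supported |
| MySQL | 5.7 | Officially supported |
| MySQL | 8.0 | Experimental |
| MariaDB | < 10.1.2 | Incompatible (binlog of time types is incompatible) |
| MariaDB | 10.1.2 ~ 10.5.10 | Experimental |
| MariaDB | > 10.5.10 | Incompatible |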
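For scripted prechecks, the table can be turned into a lookup. This Python sketch encodes only the rows above; `support_level` is a hypothetical helper that accepts plain dotted version numbers, and versions not in the table return "not listed".

```python
def support_level(source, version):
    """Look up the support level for a plain dotted version such as "5.7.31"."""
    v = tuple(int(part) for part in version.split("."))
    if source == "MySQL":
        levels = {(5, 6): "official", (5, 7): "official", (8, 0): "experimental"}
        return levels.get(v[:2], "not listed")
    if source == "MariaDB":
        if v < (10, 1, 2):
            return "incompatible"  # binlog of time types is incompatible
        if v <= (10, 5, 10):
            return "experimental"
        return "incompatible"
    return "not listed"


if __name__ == "__main__":
    for source, version in [("MySQL", "5.7.31"), ("MySQL", "8.0.33"),
                            ("MariaDB", "10.4.12"), ("MariaDB", "10.6.1")]:
        print(source, version, support_level(source, version))
```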

dmctl

Check and start a task

tiup dmctl --master-addr 101.101.10.32:8261 check-task ./task.yaml

tiup dmctl --master-addr 101.101.10.32:8261 start-task ./task.yaml

Pause a task

tiup dmctl --master-addr 101.101.10.32:8261 pause-task ./task.yaml

Resume a task

tiup dmctl --master-addr 101.101.10.32:8261 resume-task test

Query a task

tiup dmctl --master-addr 101.101.10.32:8261 query-status test

Stop a task

tiup dmctl --master-addr 101.101.10.32:8261 stop-task test

Lab

Deploy a DM cluster

1. Install the DM components

tiup install dm dmctl

2. Generate the configuration file

[root@tidb2 ~]# tiup dm template >scale-out-dm.yaml

[root@tidb2 ~]# more scale-out-dm.yaml

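# Global variables apply to the other components in the configuration. If a specific value is missing from a component instance, the corresponding global variable is used as the default.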

global:

user: "root"

ssh_port: 22

deploy_dir: "/dm-deploy"

data_dir: "/dm-data"

server_configs:

master:

log-level: info

worker:

log-level: info

master_servers:

- host: 192.168.16.13

name: master1

ssh_port: 22

port: 8261

config:

log-level: info

worker_servers:

- host: 192.168.16.13

ssh_port: 22

port: 8262

deploy_dir: "/dm-deploy/dm-worker-8262"

log_dir: "/dm-deploy/dm-worker-8262/log"

config:

log-level: info

- host: 192.168.16.13

ssh_port: 22

port: 8263

deploy_dir: "/dm-deploy/dm-worker-8263"

log_dir: "/dm-deploy/dm-worker-8263/log"

config:

log-level: info

3. Deploy the cluster

[root@tidb2 ~]# tiup dm deploy dm-test v2.0.1 ./scale-out-dm.yaml --user=root

tiup is checking updates for component dm ...timeout(2s)!

Starting component `dm`: /root/.tiup/components/dm/v1.12.3/tiup-dm deploy dm-test v2.0.1 ./scale-out-dm.yaml --user=root

+ Detect CPU Arch Name

- Detecting node 192.168.16.13 Arch info ... Done

+ Detect CPU OS Name

- Detecting node 192.168.16.13 OS info ... Done

Please confirm your topology:

Cluster type: dm

Cluster name: dm-test

Cluster version: v2.0.1

Role Host Ports OS/Arch Directories

---- ---- ----- ------- -----------

dm-master 192.168.16.13 8261/8291 linux/x86_64 /dm-deploy/dm-master-8261,/dm-data/dm-master-8261

dm-worker 192.168.16.13 8262 linux/x86_64 /dm-deploy/dm-worker-8262,/dm-data/dm-worker-8262

dm-worker 192.168.16.13 8263 linux/x86_64 /dm-deploy/dm-worker-8263,/dm-data/dm-worker-8263

Attention:

1. If the topology is not what you expected, check your yaml file.

2. Please confirm there is no port/directory conflicts in same host.

Do you want to continue? [y/N]: (default=N) yes

+ Generate SSH keys ... Done

+ Download TiDB components

- Download dm-master:v2.0.1 (linux/amd64) ... Done

- Download dm-worker:v2.0.1 (linux/amd64) ... Done

+ Initialize target host environments

- Prepare 192.168.16.13:22 ... Done

+ Deploy TiDB instance

- Copy dm-master -> 192.168.16.13 ... Done

- Copy dm-worker -> 192.168.16.13 ... Done

- Copy dm-worker -> 192.168.16.13 ... Done

+ Copy certificate to remote host

+ Init instance configs

- Generate config dm-master -> 192.168.16.13:8261 ... Done

- Generate config dm-worker -> 192.168.16.13:8262 ... Done

- Generate config dm-worker -> 192.168.16.13:8263 ... Done

+ Init monitor configs

Enabling component dm-master

Enabling instance 192.168.16.13:8261

Enable instance 192.168.16.13:8261 success

Enabling component dm-worker

Enabling instance 192.168.16.13:8263

Enabling instance 192.168.16.13:8262

Enable instance 192.168.16.13:8263 success

Enable instance 192.168.16.13:8262 success

Cluster `dm-test` deployed successfully, you can start it with command: `tiup dm start dm-test`

4. List the clusters

[root@tidb2 ~]# tiup dm list

tiup is checking updates for component dm ...

Starting component `dm`: /root/.tiup/components/dm/v1.12.3/tiup-dm list

Name User Version Path PrivateKey

---- ---- ------- ---- ----------

dm-test root v2.0.1 /root/.tiup/storage/dm/clusters/dm-test /root/.tiup/storage/dm/clusters/dm-test/ssh/id_rsa

5. Start the cluster

[root@tidb2 ~]# tiup dm start dm-test

6. Check the dmctl version

[root@tidb2 ~]# tiup dmctl -V

tiup is checking updates for component dmctl ...

Starting component `dmctl`: /root/.tiup/components/dmctl/v7.2.0/dmctl/dmctl -V

Release Version: v7.2.0

Git Commit Hash: 33e21a7e12ec792ad6ffb53144c86b2872a6a216

Git Branch: heads/refs/tags/v7.2.0

UTC Build Time: 2023-06-21 14:48:02

Go Version: go version go1.20.5 linux/amd64

Failpoint Build: false

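Replicate data from MySQL to TiDB

Goals:

- All tables in the user schema of the 3306 instance are replicated to user_north in TiDB, and all tables in the user schema of the 3307 instance are replicated to user_east in TiDB.

- The tables in the store schema of 3306 and 3307 are replicated as-is to the store schema in TiDB, except store_sz in the store schema of 3307, which is replicated to the store_suzhou table in TiDB.

- The sales table in the salesdb schema is sharded across 3306 and 3307; the shards are merged into the sales table of the salesdb schema in TiDB.

- On 3306 and 3307, DROP DATABASE on the user schema is not replicated, and TRUNCATE, DROP, and DELETE on the user.trace table are not replicated; DROP DATABASE on the store schema is not replicated, and TRUNCATE, DROP, and DELETE on tables in the store schema are not replicated.

- The log schema on 3306 and 3307 does not take part in replication.

1. Create the user and grant privileges on the upstream MySQL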

mysql> create user 'dm'@'%' identified by '123456';

Query OK, 0 rows affected (0.02 sec)

mysql> grant all privileges on *.* to 'dm'@'%';

Query OK, 0 rows affected (0.01 sec)

2. Prepare test data

mysql> CREATE DATABASE `log` DEFAULT CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci; # Make sure the downstream TiDB supports this character set and collation

Query OK, 1 row affected (0.01 sec)

mysql> CREATE DATABASE `user` DEFAULT CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci;

Query OK, 1 row affected (0.01 sec)

mysql> CREATE DATABASE `store` DEFAULT CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci;

Query OK, 1 row affected (0.01 sec)

mysql> CREATE DATABASE `salesdb` DEFAULT CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci;

Query OK, 1 row affected (0.02 sec)

3. Create the user, schemas, and tables on TiDB

[root@tidb2 ~]# mysql -uroot -p -P4000 -h192.168.16.13

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> create user 'dm'@'%' identified by '123456';

Query OK, 0 rows affected (0.10 sec)

mysql> grant all privileges on *.* to 'dm'@'%';

Query OK, 0 rows affected (0.08 sec)

mysql> create database user_north;

Query OK, 0 rows affected (0.21 sec)

mysql> create database user_east;

Query OK, 0 rows affected (0.16 sec)

mysql> create database store;

Query OK, 0 rows affected (0.14 sec)

mysql> use user_north;

Database changed

mysql> create table information(id int primary key,info varchar(64));

Query OK, 0 rows affected (0.19 sec)

mysql> create table trace(id int primary key,content varchar(64));

Query OK, 0 rows affected (0.20 sec)

mysql> use user_east

Database changed

mysql> create table information(id int primary key,info varchar(64));

Query OK, 0 rows affected (0.21 sec)

mysql> create table trace(id int primary key,content varchar(64));

Query OK, 0 rows affected (0.13 sec)

mysql> use store;

Database changed

mysql> create table store_bj(id int primary key,pname varchar(64));

Query OK, 0 rows affected (0.18 sec)

mysql> create table store_tj(id int primary key,pname varchar(64));

Query OK, 0 rows affected (0.13 sec)

mysql> create table store_sh(id int primary key,pname varchar(64));

Query OK, 0 rows affected (0.12 sec)

mysql> create table store_suzhou(id int primary key,pname varchar(64));

Query OK, 0 rows affected (0.13 sec)

mysql> show tables;

+-----------------+

| Tables_in_store |

+-----------------+

| store_bj |

| store_sh |

| store_suzhou |

| store_tj |

+-----------------+

4 rows in set (0.00 sec)

mysql> create database salesdb;

Query OK, 0 rows affected (0.13 sec)

mysql> use salesdb;

Database changed

mysql> create table sales(id int primary key,pname varchar(20),cnt int);

Query OK, 0 rows affected (0.16 sec)

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| INFORMATION_SCHEMA |

| METRICS_SCHEMA |

| PERFORMANCE_SCHEMA |

| mysql |

| salesdb |

| store |

| test |

| user_east |

| user_north |

+--------------------+

9 rows in set (0.01 sec)

mysql> create database log;

Query OK, 0 rows affected (0.12 sec)

mysql> use log;

Database changed

mysql> create table messages(id int primary key,msg varchar(64));

Query OK, 0 rows affected (0.17 sec)

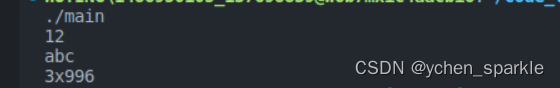

4. Generate the encrypted password

[root@tidb2 ~]# tiup dmctl -encrypt '123456'

tiup is checking updates for component dmctl ...

Starting component `dmctl`: /root/.tiup/components/dmctl/v7.2.0/dmctl/dmctl -encrypt 123456

VIbuIFL8wJlW0/x6id6Cy0Byj3YFJUY=

5. Edit the data source configuration files

[root@tidb2 ~]# more mysql-source-conf1.yaml

source-id: "mysql-replica-01"

from:

host: "192.168.16.13"

user: "dm"

password: "VIbuIFL8wJlW0/x6id6Cy0Byj3YFJUY="

port: 3306

[root@tidb2 ~]# more mysql-source-conf2.yaml

source-id: "mysql-replica-02"

from:

host: "192.168.16.13"

user: "dm"

password: "VIbuIFL8wJlW0/x6id6Cy0Byj3YFJUY="

port: 3307
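With more upstream instances, writing these files by hand gets repetitive. The Python sketch below renders the same content with proper YAML indentation; `source_config` is a hypothetical helper, and the encrypted password is the `dmctl -encrypt` output from step 4.

```python
def source_config(source_id, host, port, user, encrypted_password):
    """Render a DM data source configuration file with correct YAML indentation."""
    return (
        f'source-id: "{source_id}"\n'
        "from:\n"
        f'  host: "{host}"\n'
        f'  user: "{user}"\n'
        f'  password: "{encrypted_password}"\n'
        f"  port: {port}\n"
    )


if __name__ == "__main__":
    password = "VIbuIFL8wJlW0/x6id6Cy0Byj3YFJUY="  # `tiup dmctl -encrypt '123456'` output from step 4
    for n, port in enumerate([3306, 3307], start=1):
        print(f"# mysql-source-conf{n}.yaml")
        print(source_config(f"mysql-replica-{n:02d}", "192.168.16.13", port, "dm", password))
```

Save each rendered block as `mysql-source-conf1.yaml` and `mysql-source-conf2.yaml`, or write the strings to files directly.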

6. Load the configuration files into DM

[root@tidb2 ~]# tiup dm display dm-test

tiup is checking updates for component dm ...

Starting component `dm`: /root/.tiup/components/dm/v1.12.3/tiup-dm display dm-test

Cluster type: dm

Cluster name: dm-test

Cluster version: v2.0.1

Deploy user: root

SSH type: builtin

ID Role Host Ports OS/Arch Status Data Dir Deploy Dir

-- ---- ---- ----- ------- ------ -------- ----------

192.168.16.13:8261 dm-master 192.168.16.13 8261/8291 linux/x86_64 Healthy|L /dm-data/dm-master-8261 /dm-deploy/dm-master-8261

192.168.16.13:8262 dm-worker 192.168.16.13 8262 linux/x86_64 Free /dm-data/dm-worker-8262 /dm-deploy/dm-worker-8262

Total nodes: 2

[root@tidb2 ~]# tiup dmctl --master-addr=192.168.16.13:8261 operate-source create mysql-source-conf1.yaml

tiup is checking updates for component dmctl ...

Starting component `dmctl`: /root/.tiup/components/dmctl/v7.2.0/dmctl/dmctl --master-addr=192.168.16.13:8261 operate-source create mysql-source-conf1.yaml

{

"result": true,

"msg": "",

"sources": [

{

"result": true,

"msg": "",

"source": "mysql-replica-01",

"worker": "dm-192.168.16.13-8263"

}

]

}

[root@tidb2 ~]# tiup dmctl --master-addr=192.168.16.13:8261 operate-source create mysql-source-conf2.yaml

tiup is checking updates for component dmctl ...

Starting component `dmctl`: /root/.tiup/components/dmctl/v7.2.0/dmctl/dmctl --master-addr=192.168.16.13:8261 operate-source create mysql-source-conf2.yaml

{

"result": true,

"msg": "",

"sources": [

{

"result": true,

"msg": "",

"source": "mysql-replica-02",

"worker": "dm-192.168.16.13-8262"

}

]

}

[root@tidb2 ~]# tiup dm display dm-test

tiup is checking updates for component dm ...timeout(2s)!

Starting component `dm`: /root/.tiup/components/dm/v1.12.3/tiup-dm display dm-test

Cluster type: dm

Cluster name: dm-test

Cluster version: v2.0.1

Deploy user: root

SSH type: builtin

ID Role Host Ports OS/Arch Status Data Dir Deploy Dir

-- ---- ---- ----- ------- ------ -------- ----------

192.168.16.13:8261 dm-master 192.168.16.13 8261/8291 linux/x86_64 Healthy|L /dm-data/dm-master-8261 /dm-deploy/dm-master-8261

192.168.16.13:8262 dm-worker 192.168.16.13 8262 linux/x86_64 Bound /dm-data/dm-worker-8262 /dm-deploy/dm-worker-8262

192.168.16.13:8263 dm-worker 192.168.16.13 8263 linux/x86_64 Bound /dm-data/dm-worker-8263 /dm-deploy/dm-worker-8263

Total nodes: 3

# After a data source is bound, the worker status changes to Bound. Each dm-worker can serve only one data source.

7. View the loaded data sources

[root@tidb2 ~]# tiup dmctl --master-addr=192.168.16.13:8261 get-config source mysql-replica-01

[root@tidb2 ~]# tiup dmctl --master-addr=192.168.16.13:8261 get-config source mysql-replica-02

8. View the mapping between data sources and dm-workers

[root@tidb2 ~]# tiup dmctl --master-addr=192.168.16.13:8261 operate-source show

tiup is checking updates for component dmctl ...timeout(2s)!

Starting component `dmctl`: /root/.tiup/components/dmctl/v7.2.0/dmctl/dmctl --master-addr=192.168.16.13:8261 operate-source show

{

"result": true,

"msg": "",

"sources": [

{

"result": true,

"msg": "",

"source": "mysql-replica-01",

"worker": "dm-192.168.16.13-8263"

},

{

"result": true,

"msg": "",

"source": "mysql-replica-02",

"worker": "dm-192.168.16.13-8262"

}

]

}

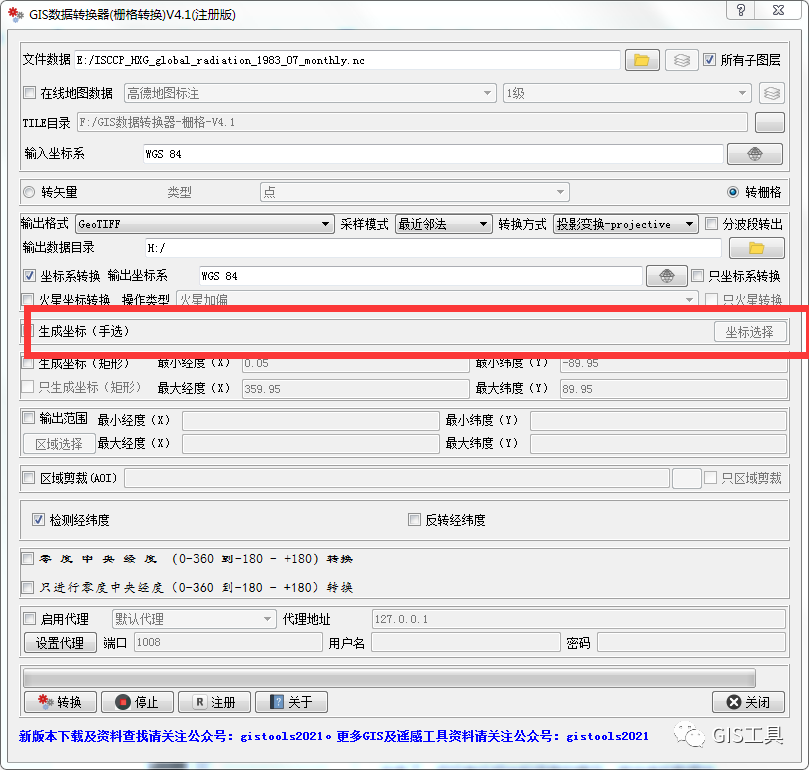

9. Configure the DM task according to the rules

9.1 Task information

Configured in the DM task file:

name: "dm-taskX"

task-mode: all

ignore-checking-items: ["auto_increment_ID"]

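name: the task name

task-mode: the replication mode; all means full + incremental

ignore-checking-items: ["auto_increment_ID"] skips the auto-increment primary key check

9.2 Target TiDB database configuration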

target-database:

host: "192.168.16.13"

port: 4000

user: "dm"

password: "123456"

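9.3 Rule 1

Rule 1: all tables in the user schema of 3306 are replicated to user_north in TiDB, and all tables in the user schema of 3307 are replicated to user_east in TiDB. This is implemented with table routing: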

routes:

instance-1-user-rule:

schema-pattern: "user"

target-schema: "user_north"

instance-2-user-rule:

schema-pattern: "user"

target-schema: "user_east"

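9.4 Rule 2

Rule 2: the tables in the store schema of 3306 and 3307 are replicated as-is to the store schema in TiDB, except store_sz on 3307, which is replicated to store_suzhou in TiDB. This is implemented with table routing: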

routes:

instance-2-store-rule:

schema-pattern: "store"

table-pattern: "store_sz"

target-schema: "store"

target-table: "store_suzhou"

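9.5 Rule 3

Rule 3: the sales table in the salesdb schema is sharded across 3306 and 3307; the shards are merged into the sales table of the salesdb schema in TiDB. This is implemented with table routing (table names are kept, so both sales shards land in salesdb.sales):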

sale-route-rule:

schema-pattern: "salesdb"

target-schema: "salesdb"

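9.6 Rule 4

Rule 4: on 3306 and 3307, DROP DATABASE on the user schema is not replicated, and TRUNCATE, DROP, and DELETE on the user.trace table are not replicated; DROP DATABASE on the store schema is not replicated, and TRUNCATE, DROP, and DELETE on tables in the store schema are not replicated. This is implemented with binlog event filters: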

filters:

trace-filter-rule: # The trace table in the user schema does not replicate truncate, drop, or delete

schema-pattern: "user"

table-pattern: "trace"

events: ["truncate table","drop table","delete"]

action: ignore

user-filter-rule: # The user schema on 3306 and 3307 does not replicate drop database

schema-pattern: "user"

events: ["drop database"]

action: ignore

store-filter-rule: # The store schema does not replicate drop database, or truncate, drop, and delete on its tables

schema-pattern: "store"

events: ["drop database","truncate table","drop table","delete"]

action: ignore

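9.7 Rule 5

Rule 5: the log schema on the 3306 and 3307 instances does not take part in replication. This is implemented with a block-allow list:

block-allow-list:

log-ignored:

ignore-dbs: ["log"]

9.8 Associate the rules with the instances

Associate the rules above with the 3306 and 3307 instances:

mysql-instances: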

-

source-id: "mysql-replica-01"

route-rules: ["instance-1-user-rule","sale-route-rule"]

filter-rules: ["trace-filter-rule","user-filter-rule","store-filter-rule"]

block-allow-list: "log-ignored"

mydumper-config-name: "global"

loader-config-name: "global"

syncer-config-name: "global"

-

source-id: "mysql-replica-02"

route-rules: ["instance-2-user-rule","instance-2-store-rule","sale-route-rule"]

filter-rules: ["trace-filter-rule","user-filter-rule","store-filter-rule"]

block-allow-list: "log-ignored"

mydumper-config-name: "global"

loader-config-name: "global"

syncer-config-name: "global"

10. The final configuration is as follows:

[root@tidb2 ~]# more dm_sync.yaml

name: "dm-taskX"

task-mode: all

ignore-checking-items: ["auto_increment_ID"]

target-database:

host: "192.168.16.13"

port: 4000

user: "dm"

password: "123456"

mysql-instances:

-

source-id: "mysql-replica-01"

route-rules: ["instance-1-user-rule","sale-route-rule"]

filter-rules: ["trace-filter-rule","user-filter-rule","store-filter-rule"]

block-allow-list: "log-ignored"

mydumper-config-name: "global"

loader-config-name: "global"

syncer-config-name: "global"

-

source-id: "mysql-replica-02"

route-rules: ["instance-2-user-rule","instance-2-store-rule","sale-route-rule"]

filter-rules: ["trace-filter-rule","user-filter-rule","store-filter-rule"]

block-allow-list: "log-ignored"

mydumper-config-name: "global"

loader-config-name: "global"

syncer-config-name: "global"

# Configuration shared by all instances

routes:

instance-1-user-rule:

schema-pattern: "user"

target-schema: "user_north"

instance-2-user-rule:

schema-pattern: "user"

target-schema: "user_east"

instance-2-store-rule:

schema-pattern: "store"

table-pattern: "store_sz"

target-schema: "store"

target-table: "store_suzhou"

sale-route-rule:

schema-pattern: "salesdb"

target-schema: "salesdb"

filters:

trace-filter-rule:

schema-pattern: "user"

table-pattern: "trace"

events: ["truncate table","drop table","delete"]

action: ignore

user-filter-rule:

schema-pattern: "user"

events: ["drop database"]

action: ignore

store-filter-rule:

schema-pattern: "store"

events: ["drop database","truncate table","drop table","delete"]

action: ignore

# Block/allow list

block-allow-list:

log-ignored:

ignore-dbs: ["log"]

mydumpers:

global:

threads: 2

chunk-filesize: 64

loaders:

global:

pool-size: 16

dir: "./dumped_data"

syncers:

global:

worker-count: 2

batch: 100
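Before running `check-task`, it can help to dry-run the rules by hand. The Python sketch below hard-codes the routes, filters, and block-allow list from `dm_sync.yaml` (it does not parse the file) and reports where an upstream event would land, or `None` if it is not replicated. It assumes that table-level routes are tried before schema-level ones, and it handles only `action: ignore` filters because that is all this task uses; `dry_run` is a hypothetical helper and `fnmatchcase` approximates DM's wildcards.

```python
from fnmatch import fnmatchcase

# Rules hand-copied from dm_sync.yaml above (this sketch does not parse the file).
ROUTES = {
    "instance-1-user-rule": {"schema-pattern": "user", "target-schema": "user_north"},
    "instance-2-user-rule": {"schema-pattern": "user", "target-schema": "user_east"},
    "instance-2-store-rule": {"schema-pattern": "store", "table-pattern": "store_sz",
                              "target-schema": "store", "target-table": "store_suzhou"},
    "sale-route-rule": {"schema-pattern": "salesdb", "target-schema": "salesdb"},
}
# All three filter rules in the task use action: ignore.
IGNORE_FILTERS = {
    "trace-filter-rule": {"schema-pattern": "user", "table-pattern": "trace",
                          "events": ["truncate table", "drop table", "delete"]},
    "user-filter-rule": {"schema-pattern": "user", "events": ["drop database"]},
    "store-filter-rule": {"schema-pattern": "store",
                          "events": ["drop database", "truncate table", "drop table", "delete"]},
}
INSTANCE_ROUTES = {
    "mysql-replica-01": ["instance-1-user-rule", "sale-route-rule"],
    "mysql-replica-02": ["instance-2-user-rule", "instance-2-store-rule", "sale-route-rule"],
}
IGNORED_DBS = ["log"]  # block-allow-list "log-ignored"


def _matches(rule, schema, table):
    pattern = rule.get("table-pattern")
    return fnmatchcase(schema, rule["schema-pattern"]) and (not pattern or fnmatchcase(table, pattern))


def dry_run(source_id, schema, table, event):
    """Return the downstream (schema, table) for an event, or None if it is not replicated."""
    if any(fnmatchcase(schema, p) for p in IGNORED_DBS):
        return None  # blocked by the block-allow list
    if any(_matches(r, schema, table) and event in r["events"] for r in IGNORE_FILTERS.values()):
        return None  # dropped by a binlog event filter
    rules = [ROUTES[name] for name in INSTANCE_ROUTES[source_id]]
    # Table-level routes (with a table-pattern) are tried before schema-level ones.
    for rule in sorted(rules, key=lambda r: "table-pattern" not in r):
        if _matches(rule, schema, table):
            return rule["target-schema"], rule.get("target-table", table)
    return schema, table  # no route: keep the upstream name


if __name__ == "__main__":
    print(dry_run("mysql-replica-01", "user", "information", "insert"))  # ('user_north', 'information')
    print(dry_run("mysql-replica-02", "store", "store_sz", "insert"))     # ('store', 'store_suzhou')
    print(dry_run("mysql-replica-01", "user", "trace", "delete"))         # None
```

For example, an INSERT into `store.store_sz` from `mysql-replica-02` lands in `store.store_suzhou`, while a DELETE on `user.trace` from either instance is dropped.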

11. Check

Run a precheck against the upstream MySQL source databases; the expected result is as follows:

[root@tidb2 ~]# tiup dmctl --master-addr=192.168.16.13:8261 check-task dm_sync.yaml

tiup is checking updates for component dmctl ...

Starting component `dmctl`: /root/.tiup/components/dmctl/v7.2.0/dmctl/dmctl --master-addr=192.168.16.13:8261 check-task dm_sync.yaml.bak

{

"result": true,

"msg": "check pass!!!"

}

12. Create the replication task (it starts running by default)

[root@tidb2 ~]# tiup dmctl --master-addr=192.168.16.13:8261 start-task dm_sync.yaml

13. Query the task status to confirm it is running normally

tiup dmctl --master-addr=192.168.16.13:8261 query-status dm_sync.yaml

14. Pause the task

tiup dmctl --master-addr=192.168.16.13:8261 pause-task dm_sync.yaml

15. Resume the task

tiup dmctl --master-addr=192.168.16.13:8261 resume-task dm_sync.yaml

16. Stop the task

tiup dmctl --master-addr=192.168.16.13:8261 stop-task dm_sync.yaml