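Table of Contents

- 1. ActiveMQ Message Queue Master/Slave Cluster Mode
  - 1.1. Master/Slave Cluster Architecture
  - 1.2. Environment Plan
- 2. Deploying a Highly Available ActiveMQ Master/Slave Cluster
  - 2.1. Deploying the ZooKeeper Cluster
    - 2.1.1. Setting Up Three ZooKeeper Nodes
    - 2.1.2. Configuring the Three ZooKeeper Nodes
    - 2.1.3. Configuring the myid of Each ZooKeeper Node
    - 2.1.4. Starting the ZooKeeper Cluster
  - 2.2. Deploying the ActiveMQ Master/Slave Cluster
    - 2.2.1. Deploying Three ActiveMQ Nodes
    - 2.2.2. Configuring Each ActiveMQ Node's TCP Port and Message Persistence Type
    - 2.2.3. Configuring the Web Console Port of Each ActiveMQ Node
    - 2.2.4. Starting the ActiveMQ Cluster
- 3. Finding the ActiveMQ Master Node in ZooKeeper
- 4. Accessing the ActiveMQ Cluster
- 5. How Applications Connect to the ActiveMQ Cluster
- 6. Simulating a Master Node Failure to Verify Failover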

1. ActiveMQ Message Queue Master/Slave Cluster Mode

1.1. Master/Slave Cluster Architecture

Official ActiveMQ clustering documentation: https://activemq.apache.org/clustering

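An ActiveMQ master/slave cluster combines master/slave replication with high availability. The master node serves clients while the standby nodes replicate its data. When the master goes down, a standby node takes over message delivery.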

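For message replication, the official documentation offers several approaches. These include RAID disks, SANs, shared storage, master/slave clusters and JDBC databases.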

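A highly available ActiveMQ master/slave cluster registers every ActiveMQ node in a ZooKeeper cluster, and ZooKeeper handles the election when the master changes. Only one ActiveMQ node is active at a time; it acts as the Master, and the other nodes stay on standby. When the Master goes down, ZooKeeper's election mechanism promotes one of the remaining ActiveMQ nodes to Master, and that node continues serving clients.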

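In an ActiveMQ cluster, clients can connect only to the Master, never to a Slave. Each Slave connects to the Master and synchronizes its store state (that is, the message data). Slaves do not accept client connections. Every store operation on the Master is replicated to the Slaves connected to it, so each Slave keeps a copy of the Master's data.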

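When the Master goes down, the Slave with the most recent update is promoted to Master. When the failed former Master recovers and rejoins the cluster, it connects to the current Master as a Slave. It does not take over from the current Master.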

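Because the cluster relies on ZooKeeper for the election, it needs at least 3 nodes: a new Master can only be elected while a majority of the nodes are online. With only 2 nodes (one Master and one Slave), losing the Master leaves a single node, which is not a majority, so the election cannot succeed. With 3 nodes, the 2 remaining Slaves still form a majority when the Master goes down, and ZooKeeper elects one of them as the new Master.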
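The majority rule can be checked with a little arithmetic. The official Replicated LevelDB documentation states that at least (replicas/2)+1 nodes must be online. The Bash sketch below uses hypothetical function names (quorum, tolerated_failures). It prints the quorum and the number of node failures that a cluster of a given size can survive.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for the majority (quorum) arithmetic.

# Minimum number of nodes that must be online: n/2 + 1 (integer division)
quorum() {
  echo $(( $1 / 2 + 1 ))
}

# Number of nodes that may fail while a majority is still online
tolerated_failures() {
  echo $(( $1 - ($1 / 2 + 1) ))
}

for n in 2 3 5; do
  echo "nodes=$n quorum=$(quorum "$n") tolerated_failures=$(tolerated_failures "$n")"
done
```

For 2 nodes the script prints quorum=2 tolerated_failures=0, which is why a single Slave is not enough. For 3 nodes it prints quorum=2 tolerated_failures=1.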

1.2. Environment Plan

| ZooKeeper cluster address | ActiveMQ web console address | ActiveMQ TCP port address |
|---|---|---|
| 192.168.81.210:2181 | 192.168.81.210:8161 | 192.168.81.210:61616 |
| 192.168.81.210:2182 | 192.168.81.210:8162 | 192.168.81.210:61617 |
| 192.168.81.210:2183 | 192.168.81.210:8163 | 192.168.81.210:61618 |

2. Deploying a Highly Available ActiveMQ Master/Slave Cluster

2.1. Deploying the ZooKeeper Cluster

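The ZooKeeper cluster is mainly responsible for electing among the ActiveMQ nodes. ZooKeeper first elects its own leader. One of the ActiveMQ nodes is then elected as the ActiveMQ Master and provides the messaging service, while the remaining Slave nodes stay on standby.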

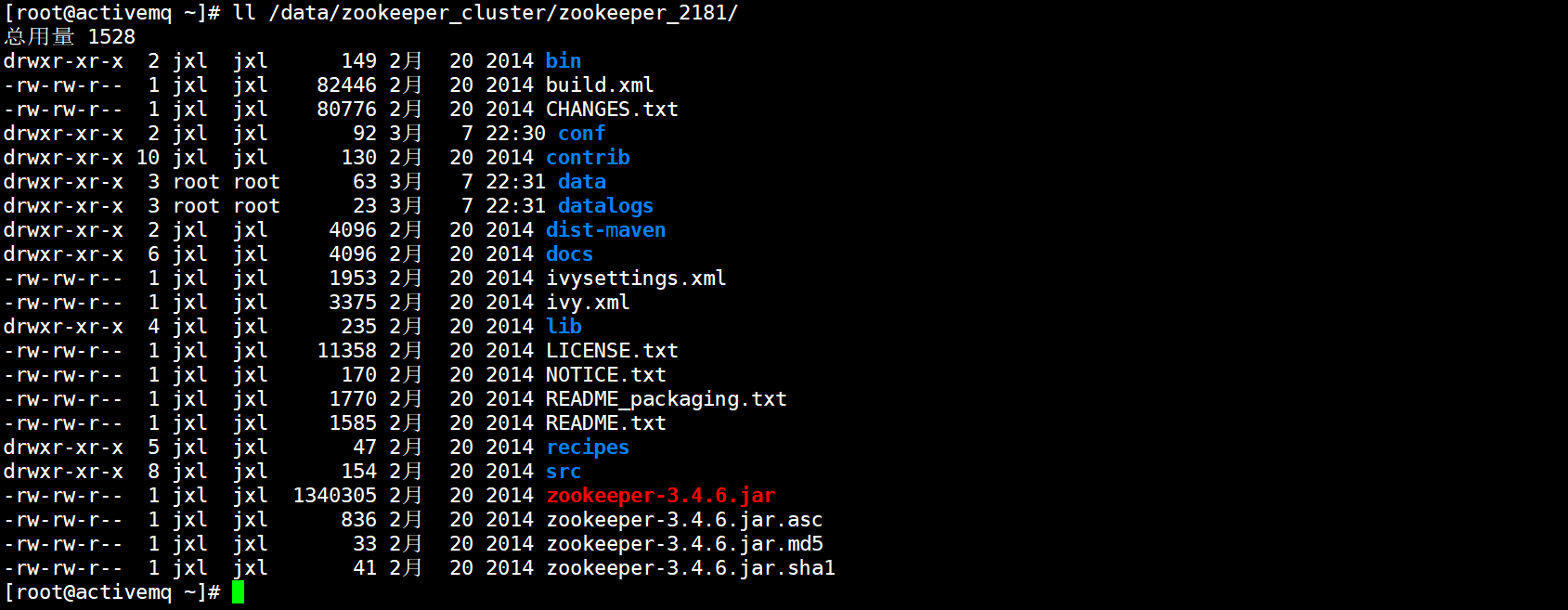

2.1.1. Setting Up Three ZooKeeper Nodes

1. Extract the ZooKeeper binary package

[root@activemq ~]# tar xf zookeeper-3.4.6.tar.gz

2. Plan the ZooKeeper installation directories

[root@activemq ~]# mkdir /data/zookeeper_cluster/{zookeeper_2181,zookeeper_2182,zookeeper_2183}/{data,datalogs} -p

[root@activemq ~]# tree /data/zookeeper_cluster/

/data/zookeeper_cluster/

├── zookeeper_2181

│ ├── data

│ └── datalogs

├── zookeeper_2182

│ ├── data

│ └── datalogs

└── zookeeper_2183

├── data

└── datalogs

3. Deploy the three ZooKeeper nodes

[root@activemq ~]# cp -rp zookeeper-3.4.6/* /data/zookeeper_cluster/zookeeper_2181/

[root@activemq ~]# cp -rp zookeeper-3.4.6/* /data/zookeeper_cluster/zookeeper_2182/

[root@activemq ~]# cp -rp zookeeper-3.4.6/* /data/zookeeper_cluster/zookeeper_2183/
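The mkdir and cp steps above can also be scripted as one loop. This is a minimal Bash sketch that assumes the unpacked zookeeper-3.4.6 directory and the /data/zookeeper_cluster layout used in this article. The function name setup_zk_nodes is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: create the three ZooKeeper node directories and copy the
# unpacked distribution into each one.
# $1 = unpacked dir (e.g. zookeeper-3.4.6), $2 = base dir (e.g. /data/zookeeper_cluster)
setup_zk_nodes() {
  local src="$1" base="$2" port node
  for port in 2181 2182 2183; do
    node="$base/zookeeper_$port"
    # Same layout as the mkdir -p above: data/ and datalogs/ per node
    mkdir -p "$node/data" "$node/datalogs"
    # "/." copies the directory contents, including hidden files that src/* would skip
    cp -rp "$src"/. "$node"/
  done
}

# Usage: setup_zk_nodes zookeeper-3.4.6 /data/zookeeper_cluster
```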

2.1.2. Configuring the Three ZooKeeper Nodes

1. zookeeper-2181 node

[root@activemq ~]# cd /data/zookeeper_cluster/zookeeper_2181/conf

[root@activemq conf]# cp zoo_sample.cfg zoo.cfg

[root@activemq conf]# vim zoo.cfg

tickTime=2000

initLimit=10

syncLimit=5

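# ZooKeeper client port (comments must sit on their own line; zoo.cfg does not support trailing comments)
clientPort=2181

# data directory
dataDir=/data/zookeeper_cluster/zookeeper_2181/data

# transaction log directory
dataLogDir=/data/zookeeper_cluster/zookeeper_2181/datalogs

# cluster communication addresses: server.N=host:peerPort:electionPort
server.1=192.168.81.210:2881:3881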

server.2=192.168.81.210:2882:3882

server.3=192.168.81.210:2883:3883

2. zookeeper-2182 node

[root@activemq ~]# cp /data/zookeeper_cluster/zookeeper_2181/conf/zoo.cfg /data/zookeeper_cluster/zookeeper_2182/conf/

[root@activemq ~]# vim /data/zookeeper_cluster/zookeeper_2182/conf/zoo.cfg

tickTime=2000

initLimit=10

syncLimit=5

clientPort=2182

dataDir=/data/zookeeper_cluster/zookeeper_2182/data

dataLogDir=/data/zookeeper_cluster/zookeeper_2182/datalogs

server.1=192.168.81.210:2881:3881

server.2=192.168.81.210:2882:3882

server.3=192.168.81.210:2883:3883

3. zookeeper-2183 node

[root@activemq ~]# cp /data/zookeeper_cluster/zookeeper_2181/conf/zoo.cfg /data/zookeeper_cluster/zookeeper_2183/conf/

[root@activemq ~]# vim /data/zookeeper_cluster/zookeeper_2183/conf/zoo.cfg

tickTime=2000

initLimit=10

syncLimit=5

clientPort=2183

dataDir=/data/zookeeper_cluster/zookeeper_2183/data

dataLogDir=/data/zookeeper_cluster/zookeeper_2183/datalogs

server.1=192.168.81.210:2881:3881

server.2=192.168.81.210:2882:3882

server.3=192.168.81.210:2883:3883
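The three zoo.cfg files differ only in clientPort, dataDir and dataLogDir, so they can be generated from one template. The sketch below works under that assumption. write_zoo_cfgs is a hypothetical name, and the host argument is the address used in this article. The comments are placed on their own lines because ZooKeeper reads zoo.cfg as a Java properties file, where a trailing "# ..." becomes part of the value.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write zoo.cfg for the three ZooKeeper nodes.
# $1 = base directory (e.g. /data/zookeeper_cluster), $2 = host IP.
write_zoo_cfgs() {
  local base="$1" host="$2" port node
  for port in 2181 2182 2183; do
    node="$base/zookeeper_$port"
    mkdir -p "$node/conf"
    # Comments sit on their own lines: a trailing "# ..." would become part of the value
    cat > "$node/conf/zoo.cfg" <<EOF
tickTime=2000
initLimit=10
syncLimit=5
# client port of this node
clientPort=$port
# data directory (holds myid)
dataDir=$node/data
# transaction log directory
dataLogDir=$node/datalogs
# server.N=host:peerPort:electionPort, identical on every node
server.1=$host:2881:3881
server.2=$host:2882:3882
server.3=$host:2883:3883
EOF
  done
}

# Usage: write_zoo_cfgs /data/zookeeper_cluster 192.168.81.210
```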

2.1.3. Configuring the myid of Each ZooKeeper Node

[root@activemq ~]# echo 1 > /data/zookeeper_cluster/zookeeper_2181/data/myid

[root@activemq ~]# echo 2 > /data/zookeeper_cluster/zookeeper_2182/data/myid

[root@activemq ~]# echo 3 > /data/zookeeper_cluster/zookeeper_2183/data/myid

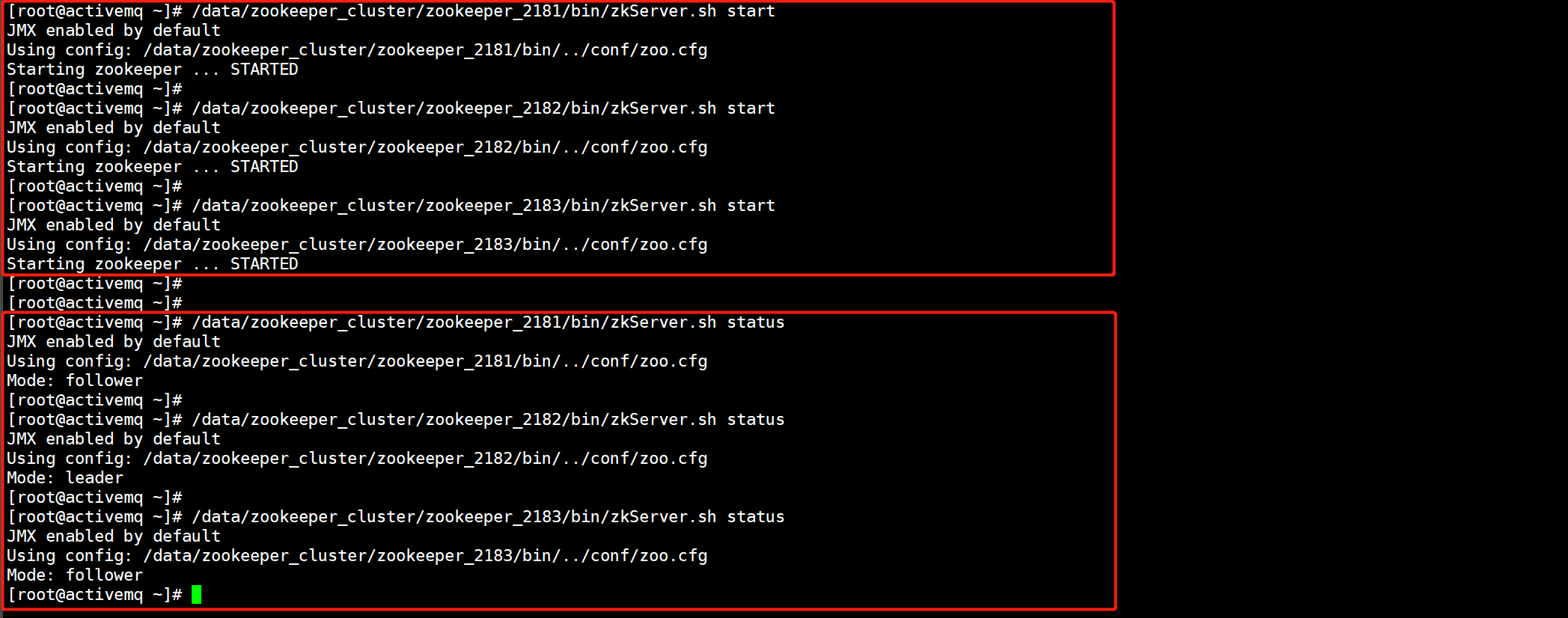
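Each myid file lives in the node's dataDir. It must contain the N from that node's own server.N line in zoo.cfg. Here is a sketch of the same three echo commands as a loop, with the hypothetical function name write_myids and the directory layout from this article.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write myid 1..3 into each node's dataDir.
# The id must match the N of that node's server.N line in zoo.cfg.
write_myids() {
  local base="$1" id=1 port
  for port in 2181 2182 2183; do
    mkdir -p "$base/zookeeper_$port/data"
    echo "$id" > "$base/zookeeper_$port/data/myid"
    id=$((id + 1))
  done
}

# Usage: write_myids /data/zookeeper_cluster
```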

2.1.4. Starting the ZooKeeper Cluster

1. Start each ZooKeeper node

[root@activemq ~]# /data/zookeeper_cluster/zookeeper_2181/bin/zkServer.sh start

JMX enabled by default

Using config: /data/zookeeper_cluster/zookeeper_2181/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[root@activemq ~]# /data/zookeeper_cluster/zookeeper_2182/bin/zkServer.sh start

JMX enabled by default

Using config: /data/zookeeper_cluster/zookeeper_2182/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[root@activemq ~]# /data/zookeeper_cluster/zookeeper_2183/bin/zkServer.sh start

JMX enabled by default

Using config: /data/zookeeper_cluster/zookeeper_2183/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

2. Check the status of each node

[root@activemq ~]# /data/zookeeper_cluster/zookeeper_2181/bin/zkServer.sh status

JMX enabled by default

Using config: /data/zookeeper_cluster/zookeeper_2181/bin/../conf/zoo.cfg

Mode: follower

[root@activemq ~]# /data/zookeeper_cluster/zookeeper_2182/bin/zkServer.sh status

JMX enabled by default

Using config: /data/zookeeper_cluster/zookeeper_2182/bin/../conf/zoo.cfg

Mode: leader #leader is the ZooKeeper master node

[root@activemq ~]# /data/zookeeper_cluster/zookeeper_2183/bin/zkServer.sh status

JMX enabled by default

Using config: /data/zookeeper_cluster/zookeeper_2183/bin/../conf/zoo.cfg

Mode: follower
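You can find the leader without reading three outputs by looping over the status calls and keeping only their "Mode:" line. The sketch assumes the directory layout above, and zk_mode and zk_cluster_status are hypothetical names. It merges stderr into the pipe (2>&1) so it does not depend on which stream zkServer.sh uses for each line.

```bash
#!/usr/bin/env bash
# Extract the value after "Mode: " (leader, follower or standalone) from stdin
zk_mode() {
  sed -n 's/^Mode: *//p'
}

# Print "<clientPort> <mode>" for each node; $1 = base dir, e.g. /data/zookeeper_cluster
zk_cluster_status() {
  local base="$1" port
  for port in 2181 2182 2183; do
    printf '%s %s\n' "$port" \
      "$("$base/zookeeper_$port/bin/zkServer.sh" status 2>&1 | zk_mode)"
  done
}

# Usage: zk_cluster_status /data/zookeeper_cluster
```

Given the status output shown above, this would list 2182 as the leader and the other two nodes as followers.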

2.2. Deploying the ActiveMQ Master/Slave Cluster

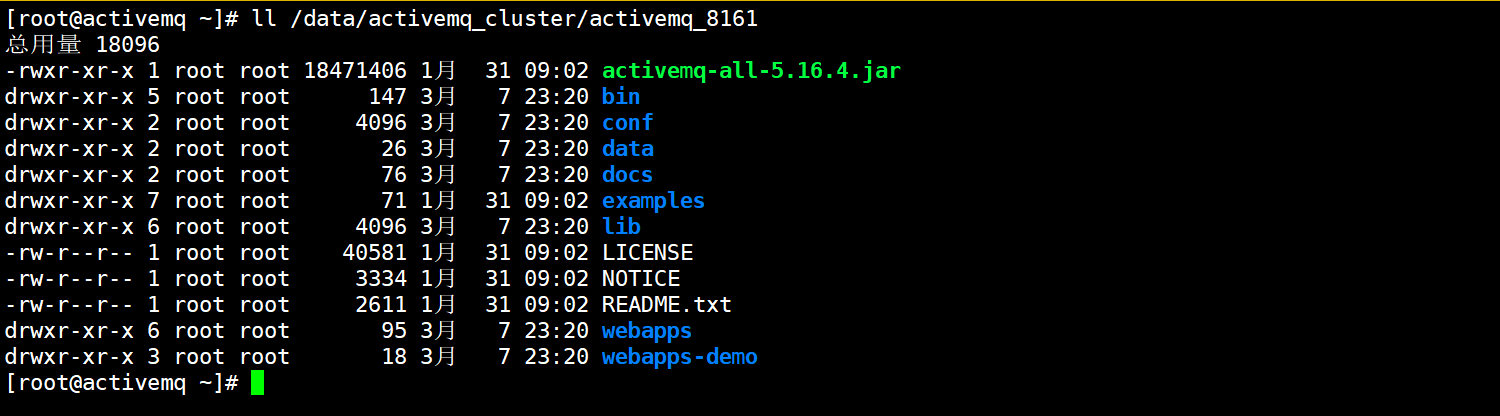

2.2.1. Deploying Three ActiveMQ Nodes

1. Extract the ActiveMQ binary package

[root@activemq ~]# tar xf apache-activemq-5.16.4-bin.tar.gz

2. Plan the ActiveMQ installation directories

[root@activemq ~]# mkdir /data/activemq_cluster/{activemq_8161,activemq_8162,activemq_8163} -p

3. Deploy the three ActiveMQ nodes

[root@activemq ~]# cp -rp apache-activemq-5.16.4/* /data/activemq_cluster/activemq_8161/

[root@activemq ~]# cp -rp apache-activemq-5.16.4/* /data/activemq_cluster/activemq_8162/

[root@activemq ~]# cp -rp apache-activemq-5.16.4/* /data/activemq_cluster/activemq_8163/

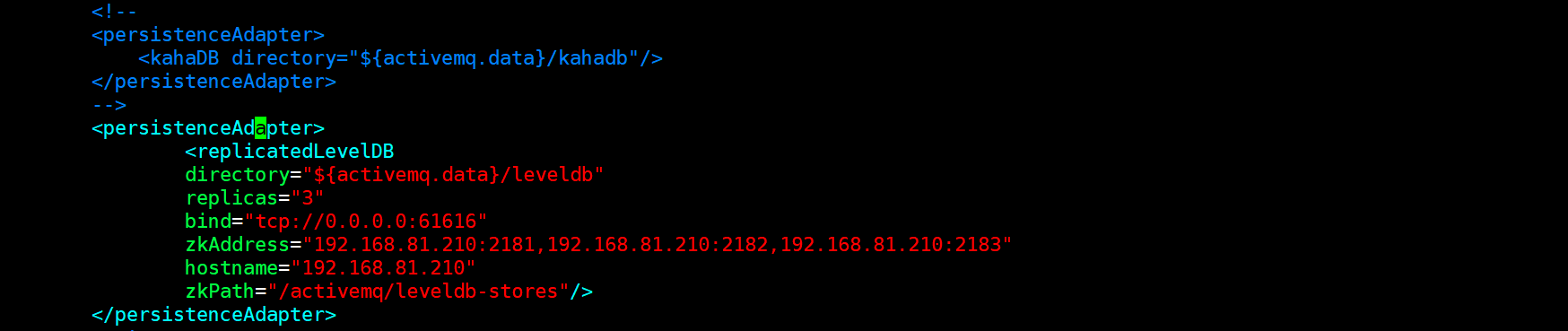
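As with ZooKeeper, the directory creation and copies can be done in one loop. This is a sketch that assumes the unpacked apache-activemq-5.16.4 directory and the /data/activemq_cluster layout above. The function name setup_amq_nodes is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: copy the unpacked ActiveMQ distribution into three node dirs.
# $1 = unpacked dir (e.g. apache-activemq-5.16.4), $2 = base dir (e.g. /data/activemq_cluster)
setup_amq_nodes() {
  local src="$1" base="$2" port
  for port in 8161 8162 8163; do
    mkdir -p "$base/activemq_$port"
    # "/." copies the directory contents, including hidden files that src/* would skip
    cp -rp "$src"/. "$base/activemq_$port"/
  done
}

# Usage: setup_amq_nodes apache-activemq-5.16.4 /data/activemq_cluster
```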

2.2.2. Configuring Each ActiveMQ Node's TCP Port and Message Persistence Type

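Each node gets its own address through the bind attribute below, so a different node can take over directly after a failover. Per the official Replicated LevelDB documentation, bind is the address on which a node serves the replication protocol once it becomes Master, and the Slaves connect to it. Client connections go through the transportConnectors section of activemq.xml, where the openwire connector defaults to port 61616. Since all three brokers here run on one host, make sure these ports do not overlap.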

1. ActiveMQ-8161 node

[root@activemq ~]# vim /data/activemq_cluster/activemq_8161/conf/activemq.xml

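<broker xmlns="http://activemq.apache.org/schema/core" brokerName="prod-activemq-cluster" dataDirectory="${activemq.data}"> <!-- line 40: change brokerName; it must be identical on every node in the same cluster -->

<persistenceAdapter> <!-- line 86: add this block and comment out the default kahaDB persistence adapter -->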

<replicatedLevelDB

directory="${activemq.data}/leveldb"

replicas="3"

bind="tcp://0.0.0.0:61616"

zkAddress="192.168.81.210:2181,192.168.81.210:2182,192.168.81.210:2183"

hostname="192.168.81.210"

zkPath="/activemq/leveldb-stores"/>

</persistenceAdapter>

2. ActiveMQ-8162 node

[root@activemq ~]# cp /data/activemq_cluster/activemq_8161/conf/activemq.xml /data/activemq_cluster/activemq_8162/conf/activemq.xml

[root@activemq ~]# vim /data/activemq_cluster/activemq_8162/conf/activemq.xml

<broker xmlns="http://activemq.apache.org/schema/core" brokerName="prod-activemq-cluster" dataDirectory="${activemq.data}">

<persistenceAdapter>

<replicatedLevelDB

directory="${activemq.data}/leveldb"

replicas="3"

bind="tcp://0.0.0.0:61617"

zkAddress="192.168.81.210:2181,192.168.81.210:2182,192.168.81.210:2183"

hostname="192.168.81.210"

zkPath="/activemq/leveldb-stores"/>

</persistenceAdapter>

3. ActiveMQ-8163 node

[root@activemq ~]# cp /data/activemq_cluster/activemq_8161/conf/activemq.xml /data/activemq_cluster/activemq_8163/conf/activemq.xml

[root@activemq ~]# vim /data/activemq_cluster/activemq_8163/conf/activemq.xml

<broker xmlns="http://activemq.apache.org/schema/core" brokerName="prod-activemq-cluster" dataDirectory="${activemq.data}">

<persistenceAdapter>

<replicatedLevelDB

directory="${activemq.data}/leveldb"

replicas="3"

bind="tcp://0.0.0.0:61618"

zkAddress="192.168.81.210:2181,192.168.81.210:2182,192.168.81.210:2183"

hostname="192.168.81.210"

zkPath="/activemq/leveldb-stores"/>

</persistenceAdapter>
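The 8162 and 8163 configurations are copies of the 8161 file that differ only in the bind port, so sed can make the edit instead of vim. This sketch assumes GNU sed (-i with no suffix argument) and the paths above. set_replication_bind and copy_broker_configs are hypothetical names. brokerName, zkAddress and zkPath are copied unchanged, which keeps them identical across the cluster.

```bash
#!/usr/bin/env bash
# Rewrite the port in bind="tcp://HOST:PORT" of one activemq.xml (GNU sed -i)
set_replication_bind() {
  local conf="$1" port="$2"
  sed -i -E "s#bind=\"tcp://([^:\"]+):[0-9]+\"#bind=\"tcp://\\1:${port}\"#" "$conf"
}

# Copy node 8161's activemq.xml to the other nodes and give each its own bind port.
# $1 = base dir, e.g. /data/activemq_cluster
copy_broker_configs() {
  local base="$1" pair web bind
  for pair in 8162:61617 8163:61618; do
    web="${pair%%:*}"   # node directory suffix (web console port)
    bind="${pair##*:}"  # bind port from the environment plan
    mkdir -p "$base/activemq_$web/conf"
    cp "$base/activemq_8161/conf/activemq.xml" "$base/activemq_$web/conf/activemq.xml"
    set_replication_bind "$base/activemq_$web/conf/activemq.xml" "$bind"
  done
}

# Usage: copy_broker_configs /data/activemq_cluster
```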

2.2.3. Configuring the Web Console Port of Each ActiveMQ Node

Each node has its own web console. The Slave nodes do not serve clients; they only synchronize data.

1. ActiveMQ-8161 node

[root@activemq ~]# vim /data/activemq_cluster/activemq_8161/conf/jetty.xml

<bean id="jettyPort" class="org.apache.activemq.web.WebConsolePort" init-method="start">

<!-- the default port number for the web console -->

<property name="host" value="0.0.0.0"/>

<property name="port" value="8161"/>

</bean>

2. ActiveMQ-8162 node

[root@activemq ~]# vim /data/activemq_cluster/activemq_8162/conf/jetty.xml

<bean id="jettyPort" class="org.apache.activemq.web.WebConsolePort" init-method="start">

<!-- the default port number for the web console -->

<property name="host" value="0.0.0.0"/>

<property name="port" value="8162"/>

</bean>

3. ActiveMQ-8163 node

[root@activemq ~]# vim /data/activemq_cluster/activemq_8163/conf/jetty.xml

<bean id="jettyPort" class="org.apache.activemq.web.WebConsolePort" init-method="start">

<!-- the default port number for the web console -->

<property name="host" value="0.0.0.0"/>

<property name="port" value="8163"/>

</bean>

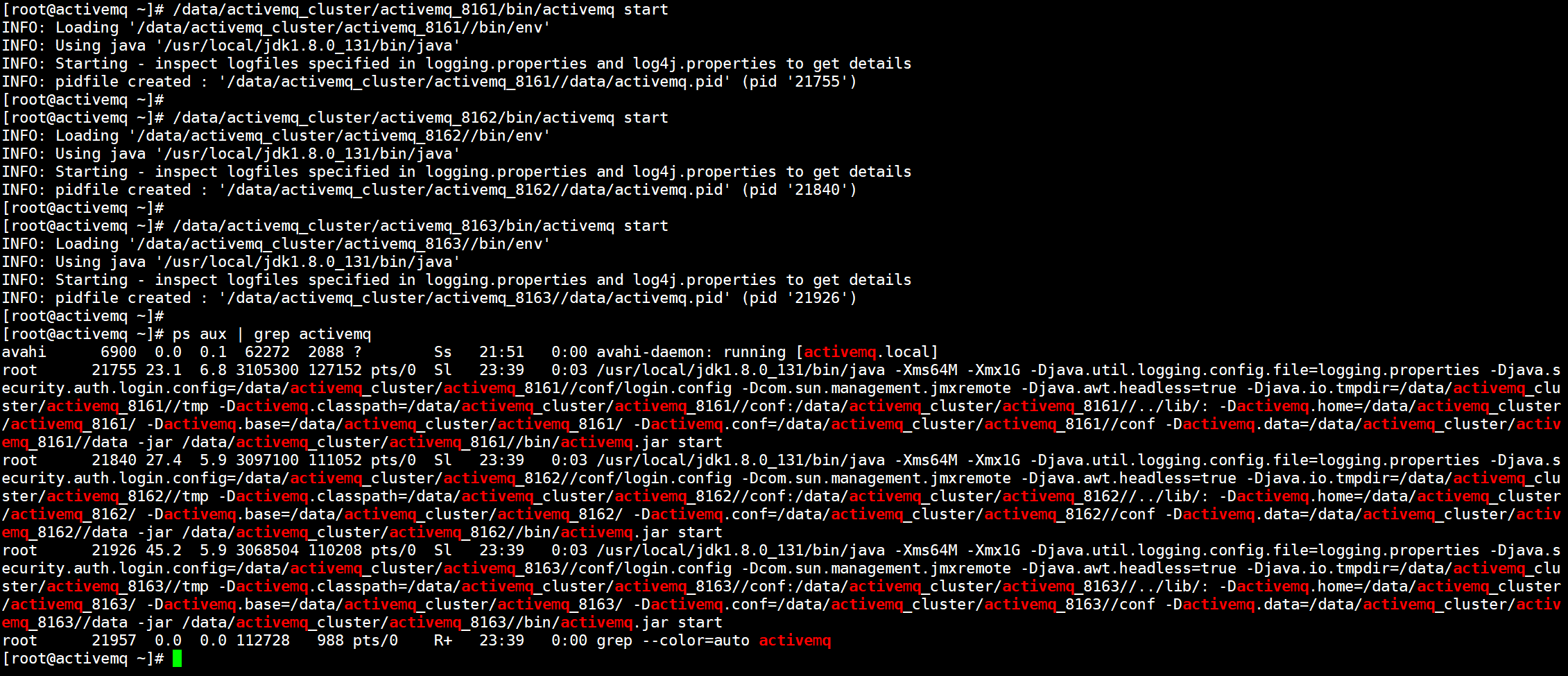
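The web console port change can also be made with sed. This is a sketch assuming GNU sed and a port property written exactly as `<property name="port" value="NNNN"/>`, as shown above. jetty.xml may contain other port settings, so check with grep before running it. set_jetty_port and set_all_jetty_ports are hypothetical names.

```bash
#!/usr/bin/env bash
# Hypothetical helper: set the web console port in one jetty.xml (GNU sed -i).
# Only lines written exactly as <property name="port" value="NNNN"/> are changed.
set_jetty_port() {
  local conf="$1" port="$2"
  sed -i -E "s#<property name=\"port\" value=\"[0-9]+\"/>#<property name=\"port\" value=\"${port}\"/>#" "$conf"
}

# Give each node the web console port its directory is named after
set_all_jetty_ports() {
  local base="$1" port
  for port in 8161 8162 8163; do
    set_jetty_port "$base/activemq_$port/conf/jetty.xml" "$port"
  done
}

# Usage: grep -n 'name="port"' /data/activemq_cluster/activemq_8161/conf/jetty.xml
#        set_all_jetty_ports /data/activemq_cluster
```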

2.2.4. Starting the ActiveMQ Cluster

[root@activemq ~]# /data/activemq_cluster/activemq_8161/bin/activemq start

INFO: Loading '/data/activemq_cluster/activemq_8161//bin/env'

INFO: Using java '/usr/local/jdk1.8.0_131/bin/java'

INFO: Starting - inspect logfiles specified in logging.properties and log4j.properties to get details

INFO: pidfile created : '/data/activemq_cluster/activemq_8161//data/activemq.pid' (pid '21002')

[root@activemq ~]# /data/activemq_cluster/activemq_8162/bin/activemq start

INFO: Loading '/data/activemq_cluster/activemq_8162//bin/env'

INFO: Using java '/usr/local/jdk1.8.0_131/bin/java'

INFO: Starting - inspect logfiles specified in logging.properties and log4j.properties to get details

INFO: pidfile created : '/data/activemq_cluster/activemq_8162//data/activemq.pid' (pid '21085')

[root@activemq ~]# /data/activemq_cluster/activemq_8163/bin/activemq start

INFO: Loading '/data/activemq_cluster/activemq_8163//bin/env'

INFO: Using java '/usr/local/jdk1.8.0_131/bin/java'

INFO: Starting - inspect logfiles specified in logging.properties and log4j.properties to get details

INFO: pidfile created : '/data/activemq_cluster/activemq_8163//data/activemq.pid' (pid '21176')

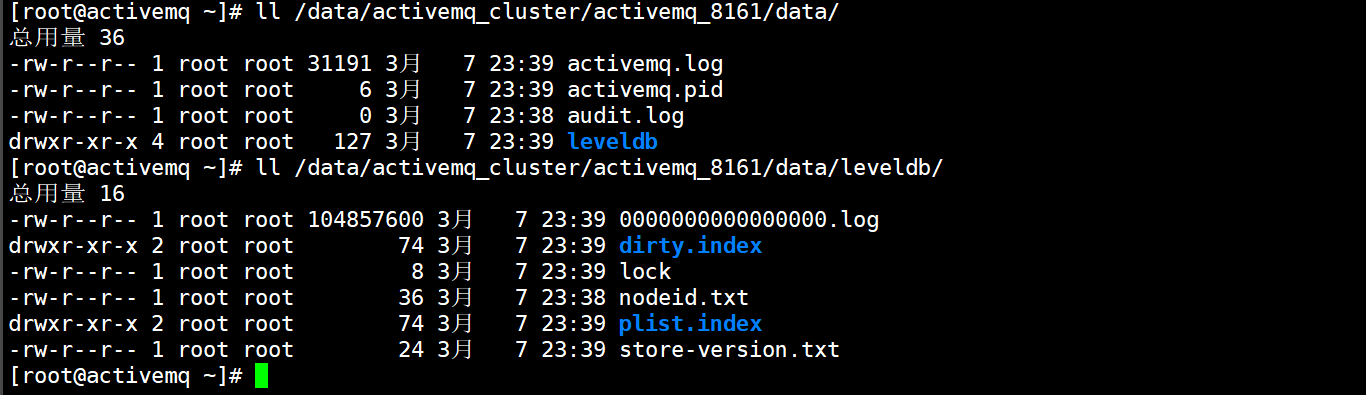

Once the cluster is up, a leveldb directory appears under the data directory of each ActiveMQ installation. It holds the persisted message data.

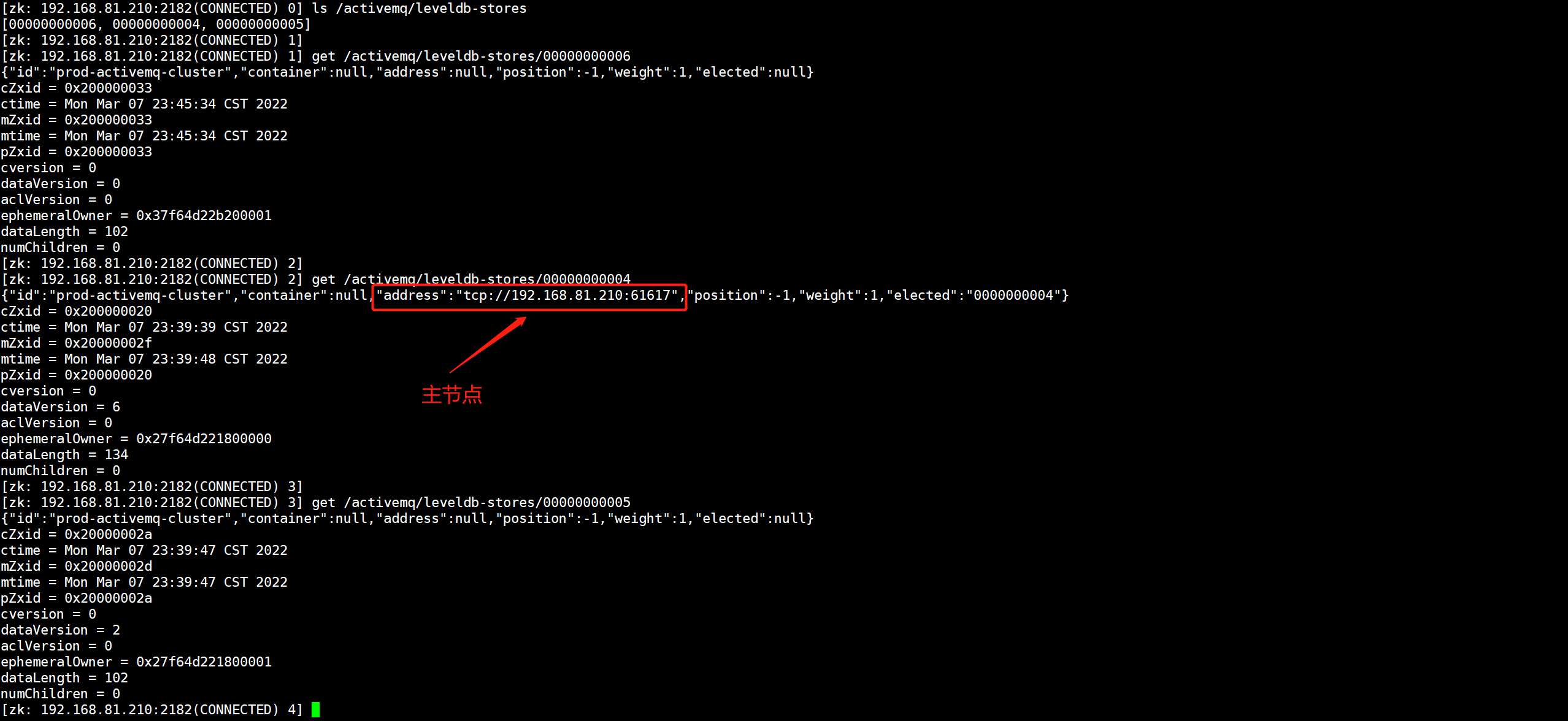
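You can combine starting the three brokers with checking for the leveldb directory described above. This sketch assumes the /data/activemq_cluster/activemq_<port> layout from this article and the default ${activemq.data}, which is the installation's data directory. start_amq_cluster and check_leveldb_dirs are hypothetical names.

```bash
#!/usr/bin/env bash
# Hypothetical helper: start the three brokers in order. $1 = base dir
start_amq_cluster() {
  local base="$1" port
  for port in 8161 8162 8163; do
    "$base/activemq_$port/bin/activemq" start
  done
}

# Report which nodes have data/leveldb; returns 1 if any node is missing it
check_leveldb_dirs() {
  local base="$1" port missing=0
  for port in 8161 8162 8163; do
    if [ -d "$base/activemq_$port/data/leveldb" ]; then
      echo "activemq_$port: leveldb directory present"
    else
      echo "activemq_$port: leveldb directory missing" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage: start_amq_cluster /data/activemq_cluster
#        check_leveldb_dirs /data/activemq_cluster
```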

3. Finding the ActiveMQ Master Node in ZooKeeper

Once deployed, the ActiveMQ cluster registers each node in ZooKeeper, including which node is the Master. Usually, but not always, the first ActiveMQ node to start becomes the Master.

1. Open the ZooKeeper CLI

[root@activemq ~]# /data/zookeeper_cluster/zookeeper_2181/bin/zkCli.sh -server 192.168.81.210:2182

2. List the keys created by ActiveMQ

[zk: 192.168.81.210:2182(CONNECTED) 0] ls /activemq/leveldb-stores

[00000000006, 00000000004, 00000000005]

#three keys were created, one per ActiveMQ node

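3. Inspect the content of each key. The key whose address field is non-null belongs to the Master of the ActiveMQ cluster, and applications must use that node to send and receive messages.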

[zk: 192.168.81.210:2182(CONNECTED) 1] get /activemq/leveldb-stores/00000000006

{"id":"prod-activemq-cluster","container":null,"address":null,"position":-1,"weight":1,"elected":null}

cZxid = 0x200000033

ctime = Mon Mar 07 23:45:34 CST 2022

mZxid = 0x200000033

mtime = Mon Mar 07 23:45:34 CST 2022

pZxid = 0x200000033

cversion = 0

dataVersion = 0

aclVersion = 0

ephemeralOwner = 0x37f64d22b200001

dataLength = 102

numChildren = 0

[zk: 192.168.81.210:2182(CONNECTED) 2] get /activemq/leveldb-stores/00000000004

{"id":"prod-activemq-cluster","container":null,"address":"tcp://192.168.81.210:61617","position":-1,"weight":1,"elected":"0000000004"}

cZxid = 0x200000020

ctime = Mon Mar 07 23:39:39 CST 2022

mZxid = 0x20000002f

mtime = Mon Mar 07 23:39:48 CST 2022

pZxid = 0x200000020

cversion = 0

dataVersion = 6

aclVersion = 0

ephemeralOwner = 0x27f64d221800000

dataLength = 134

numChildren = 0

[zk: 192.168.81.210:2182(CONNECTED) 3] get /activemq/leveldb-stores/00000000005

{"id":"prod-activemq-cluster","container":null,"address":null,"position":-1,"weight":1,"elected":null}

cZxid = 0x20000002a

ctime = Mon Mar 07 23:39:47 CST 2022

mZxid = 0x20000002d

mtime = Mon Mar 07 23:39:47 CST 2022

pZxid = 0x20000002a

cversion = 0

dataVersion = 2

aclVersion = 0

ephemeralOwner = 0x27f64d221800001

dataLength = 102

numChildren = 0

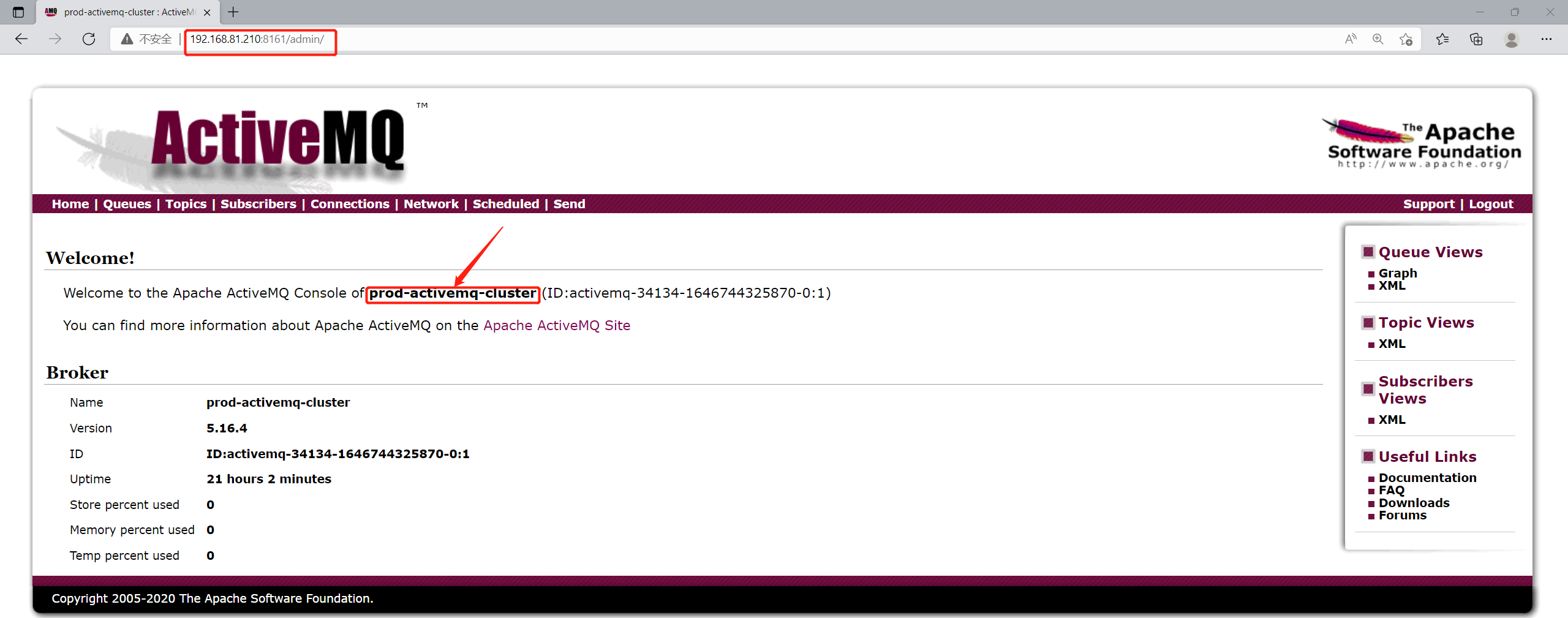

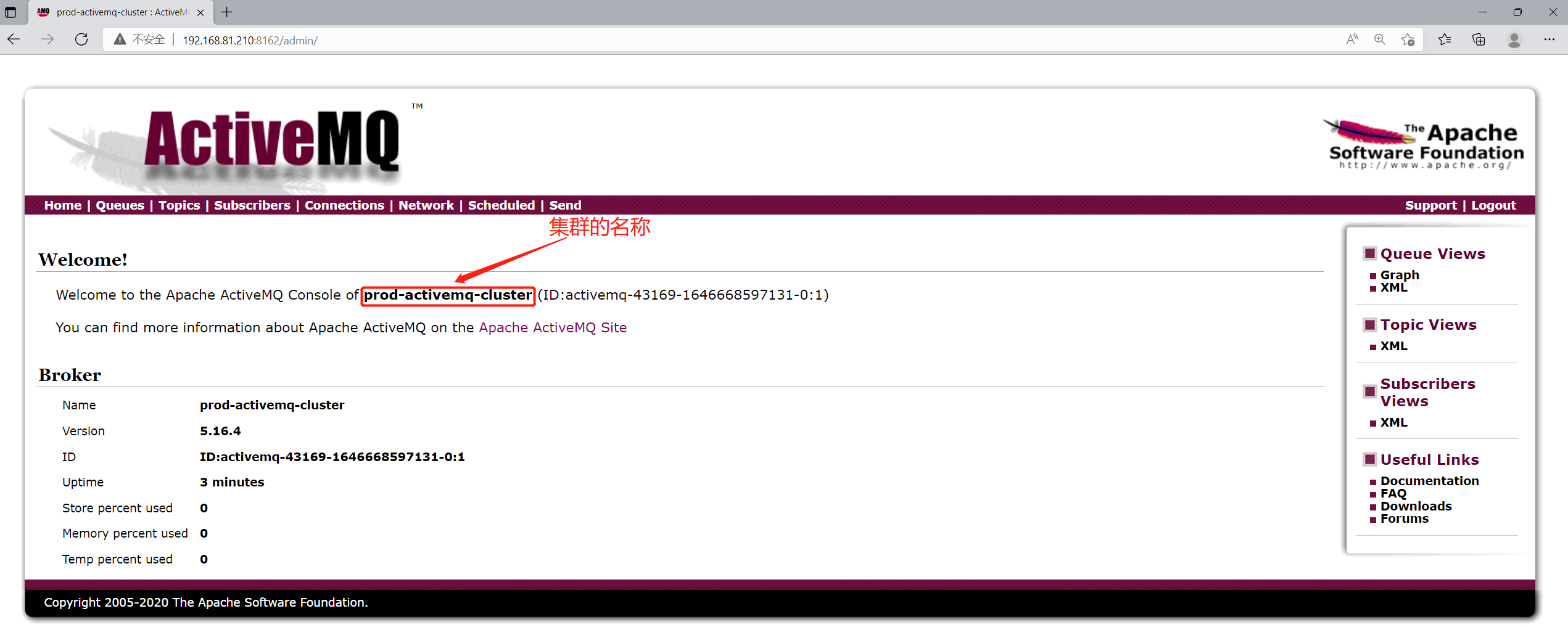
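Finding the non-null address field can be automated with a text filter over the get output. This sketch assumes the JSON payload format shown above. master_address and console_port_for are hypothetical names, and the port mapping simply follows the environment plan table of this article.

```bash
#!/usr/bin/env bash
# Print the "address" of every ActiveMQ registration whose address is not null,
# i.e. the current Master. Reads zkCli.sh "get" output (or bare JSON) on stdin.
master_address() {
  grep -o '"address":"[^"]*"' | cut -d'"' -f4
}

# Map a Master address to its web console port, following the environment plan table
console_port_for() {
  case "${1##*:}" in
    61616) echo 8161 ;;
    61617) echo 8162 ;;
    61618) echo 8163 ;;
    *) return 1 ;;
  esac
}

# Usage: save the get output of each key to payloads.txt, then
#   addr=$(master_address < payloads.txt); console_port_for "$addr"
```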

4. Accessing the ActiveMQ Cluster

The ZooKeeper query shows that the ActiveMQ Master is on port 61617, which is the second MQ node. Its web console is on port 8162 (http://192.168.81.210:8162).

5. How Applications Connect to the ActiveMQ Cluster

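A Spring Boot application can list all three nodes in a failover URL. The failover transport connects to a broker that accepts the connection, and it reconnects automatically when the Master changes.

server:
  port: 9001
spring:
  activemq:
    broker-url: failover:(tcp://192.168.81.210:61616,tcp://192.168.81.210:61617,tcp://192.168.81.210:61618)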
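If the node list changes, you can build the failover URL from a host and a list of ports instead of typing it by hand. Below is a small hypothetical helper; failover_url is not part of ActiveMQ or Spring Boot. Called with the host and ports from the environment plan, it reproduces the broker-url above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: failover_url HOST PORT... -> failover:(tcp://HOST:PORT,...)
failover_url() {
  local host="$1" port
  shift
  local parts=()
  for port in "$@"; do
    parts+=("tcp://$host:$port")
  done
  local IFS=,   # "${parts[*]}" joins elements with the first character of IFS
  echo "failover:(${parts[*]})"
}

# Usage: failover_url 192.168.81.210 61616 61617 61618
```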

6. Simulating a Master Node Failure to Verify Failover

Simulate a crash of the ActiveMQ Master and check whether another node in the cluster is promoted from Slave to Master.

1) Stop the Master ActiveMQ node (8162)

[root@activemq ~]# /data/activemq_cluster/activemq_8162/bin/activemq stop

2) Check whether failover occurred

The node on port 8161 has become the new Master.