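The design of the Growth Optimizer (GO) is inspired mainly by the learning and reflection mechanisms that individuals rely on as they grow up in society. Learning is the process by which an individual grows by acquiring knowledge from the outside world. Reflection is the process of examining one's own shortcomings and adjusting one's learning strategy to support that growth. The reference is: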

Zhang, Qingke, et al. “Growth Optimizer: A Powerful Metaheuristic Algorithm for Solving Continuous and Discrete Global Optimization Problems.” Knowledge-Based Systems, vol. 261, Elsevier BV, Feb. 2023, p. 110206, DOI: https://doi.org/10.1016/j.knosys.2022.110206
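For readers who prefer formulas, the two phases can be written out as follows. This is a transcription of the update rules in the code listing in this post, not a quotation from the paper. The symbols follow the variable names in the code, $f_i$ denotes the fitness of individual $i$, and $r_1, r_2$ are assumed names for independent uniform random numbers in $[0,1]$.

Learning phase, for each individual $i$:

$$
\begin{aligned}
\mathrm{Gap}_1 &= x_{\text{best}} - x_{\text{better}}, & \mathrm{Gap}_2 &= x_{\text{best}} - x_{\text{worst}},\\
\mathrm{Gap}_3 &= x_{\text{better}} - x_{\text{worst}}, & \mathrm{Gap}_4 &= x_{L_1} - x_{L_2},\\
LF_k &= \frac{\lVert \mathrm{Gap}_k \rVert}{\sum_{m=1}^{4} \lVert \mathrm{Gap}_m \rVert}, & SF_i &= \frac{f_i}{\max_m f_m},\\
KA_k &= LF_k \, SF_i \, \mathrm{Gap}_k, & x_i^{\text{new}} &= x_i + \sum_{k=1}^{4} KA_k .
\end{aligned}
$$

Reflection phase, for each dimension $j$ selected with probability $P_3$, where $R$ is a random individual drawn from the top $P_1$:

$$
x_{i,j}^{\text{new}} = x_{i,j} + r_1 \,(R_j - x_{i,j}), \qquad AF = 0.01 + (0.1 - 0.01)\left(1 - \frac{FEs}{MaxFEs}\right)
$$

With a further probability $AF$ the coordinate is redrawn uniformly instead: $x_{i,j}^{\text{new}} = x_{\min} + r_2 \,(x_{\max} - x_{\min})$.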

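The source code comes in two versions. The regular one runs the search until the maximum number of iterations is reached. The other sets a flag: once the fitness value has stopped improving and the loop has run past the maximum flag value, the search terminates.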
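The second stopping rule can be sketched roughly as below. This is only an illustration of the idea, not the post's actual implementation: the names `maxStall`, `stall` and `tol`, the toy objective, and the random-step search are all assumptions made for this sketch.

```matlab
% Hypothetical sketch of a stagnation-based stopping rule (all names assumed).
rng(0);                          % fixed seed so the run is repeatable
maxIter  = 1000;                 % hard cap on iterations (first stopping mode)
maxStall = 50;                   % assumed limit on iterations without improvement
tol      = 1e-12;                % smallest decrease that counts as an improvement

objective = @(v) sum(v.^2);      % toy objective standing in for the real one
x     = 5*ones(1,3);             % starting point
best  = objective(x);
stall = 0;                       % stagnation counter (the "flag")

for iter = 1:maxIter
    candidate = x + 0.1*randn(size(x));   % placeholder search step, not GO itself
    f = objective(candidate);
    if f < best - tol
        best  = f;                        % improvement: keep it and reset the counter
        x     = candidate;
        stall = 0;
    else
        stall = stall + 1;                % no improvement this iteration
    end
    if stall >= maxStall
        break;                            % second stopping mode: search has stagnated
    end
end
fprintf('stopped at iteration %d, best = %e\n', iter, best);
```

The loop ends either because `iter` reaches `maxIter` or because `stall` reaches `maxStall`, whichever happens first.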

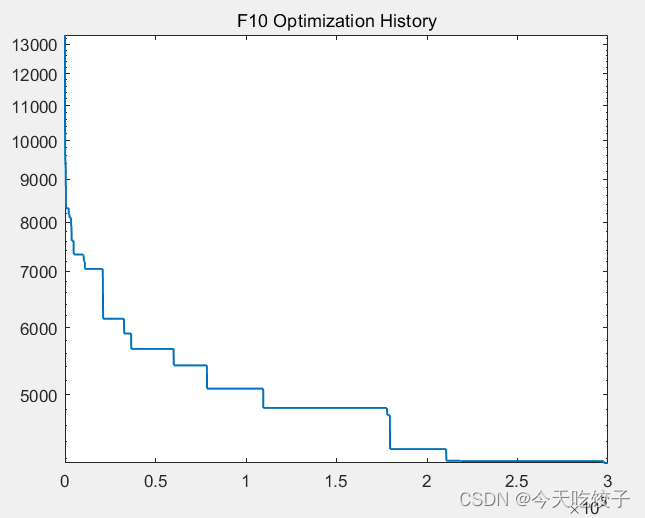

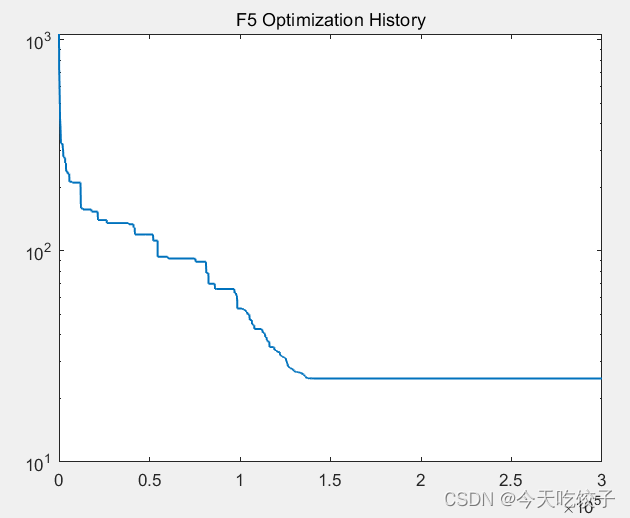

Results on the CEC2017 functions are shown as an example:

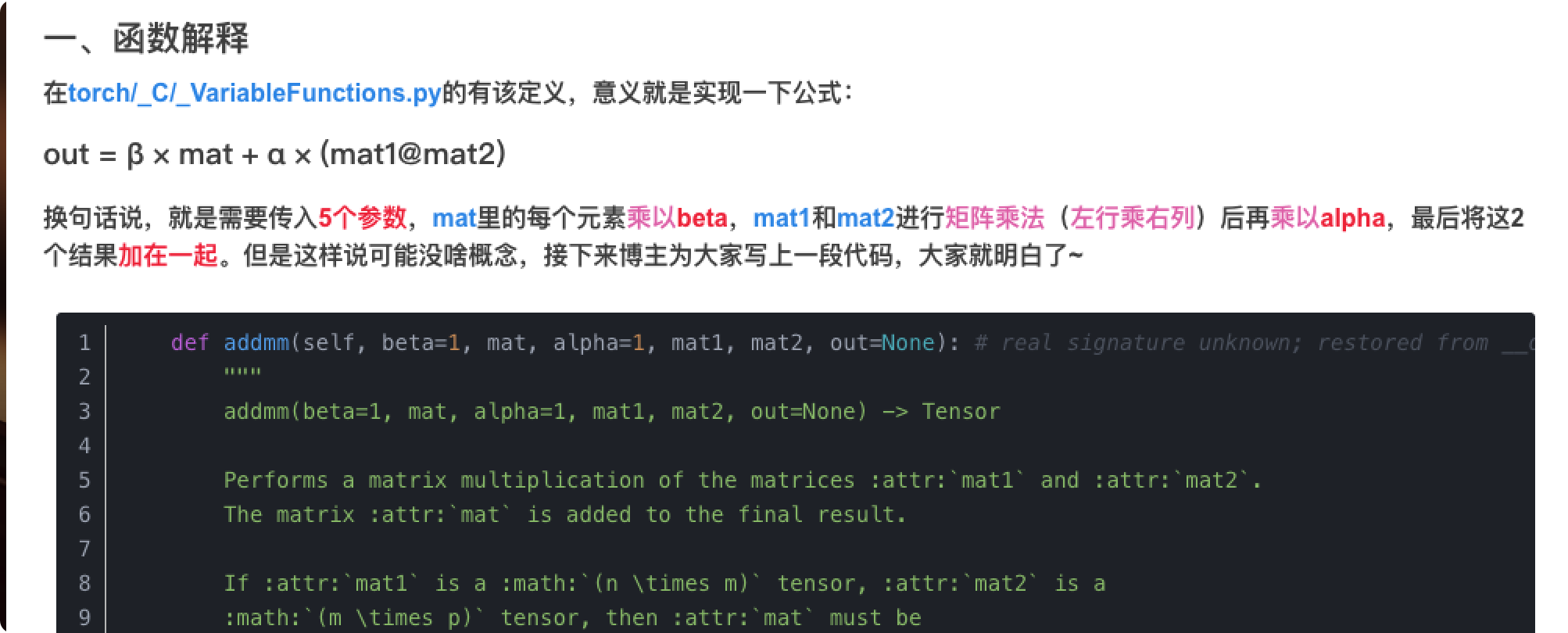

Next, the key code:

    function [gbestX,gbestfitness,gbesthistory]= GO_FEs(popsize,dimension,xmax,xmin,MaxFEs,Func,FuncId)
    % This version is an evaluation version of GO
    %% Parameter setting
    % P1: size of the top and bottom groups, P2: probability of accepting a
    % worse solution, P3: per-dimension reflection probability
    FEs=0;P1=5;P2=0.001;P3=0.3;
    %% Initialization
    x=unifrnd(xmin,xmax,popsize,dimension); % unifrnd needs the Statistics and Machine Learning Toolbox
    newx=x;
    fitness=inf(1,popsize);
    gbesthistory=inf(1,MaxFEs);
    gbestfitness=inf;
    for i=1:popsize
        fitness(i)=Func(x(i,:)',FuncId); % Func receives a column vector and the function id
        FEs=FEs+1;
        if gbestfitness>fitness(i)
            gbestfitness=fitness(i);
            gbestX=x(i,:);
        end
        gbesthistory(FEs)=gbestfitness;
    end
    %% Enter iteration
    while 1
        [~, ind]=sort(fitness); % ascending, so ind(1) is the current best
        Best_X=x(ind(1),:); % Note the difference between Best_X and gbestX
        %% Learning phase
        for i=1:popsize
            Worst_X=x(ind(randi([popsize-P1+1,popsize],1)),:); % one of the P1 worst
            Better_X=x(ind(randi([2,P1],1)),:);                % ranked 2..P1
            % Pick two distinct random individuals other than i
            random=randperm(popsize,3);
            random(random==i)=[];
            L1=random(1);L2=random(2);
            Gap1=(Best_X-Better_X); Gap2=(Best_X-Worst_X); Gap3=(Better_X-Worst_X); Gap4=(x(L1,:)-x(L2,:));
            Distance1=norm(Gap1); Distance2=norm(Gap2); Distance3=norm(Gap3); Distance4=norm(Gap4);
            SumDistance=Distance1+Distance2+Distance3+Distance4;
            % Learning factors: each gap weighted by its share of the total distance
            LF1=Distance1/SumDistance; LF2=Distance2/SumDistance; LF3=Distance3/SumDistance; LF4=Distance4/SumDistance;
            SF=fitness(i)/max(fitness);
            KA1=LF1*SF*Gap1; KA2=LF2*SF*Gap2; KA3=LF3*SF*Gap3; KA4=LF4*SF*Gap4;
            newx(i,:)=x(i,:)+KA1+KA2+KA3+KA4;
            % Clipping
            newx(i,:)=max(newx(i,:),xmin);
            newx(i,:)=min(newx(i,:),xmax);
            newfitness=Func(newx(i,:)',FuncId);
            FEs=FEs+1;
            % Real time update
            % Note: ind is a permutation, so ind(i)~=ind(1) holds exactly when i~=1.
            % If the aim is to protect the current best individual, i~=ind(1)
            % may be what was meant.
            if fitness(i)>newfitness
                fitness(i)=newfitness;
                x(i,:)=newx(i,:);
            elseif rand<P2&&ind(i)~=ind(1)
                fitness(i)=newfitness;
                x(i,:)=newx(i,:);
            end
            if gbestfitness>fitness(i)
                gbestfitness=fitness(i);
                gbestX=x(i,:);
            end
            gbesthistory(FEs)=gbestfitness;
        end
        fprintf("FEs: %d, fitness error: %e\n",FEs,gbestfitness);
        if FEs>=MaxFEs
            break;
        end
        %% Reflection phase
        for i=1:popsize
            newx(i,:)=x(i,:);
            for j=1:dimension
                if rand<P3
                    R=x(ind(randi(P1)),:); % random individual from the top P1
                    newx(i,j) = x(i,j)+(R(:,j)-x(i,j))*rand;
                    % AF decays linearly from 0.1 to 0.01 as evaluations are used up
                    AF=(0.01+(0.1-0.01)*(1-FEs/MaxFEs));
                    if rand<AF
                        newx(i,j)=xmin+(xmax-xmin)*rand; % re-initialize this dimension
                    end
                end
            end
            % Clipping
            newx(i,:)=max(newx(i,:),xmin);
            newx(i,:)=min(newx(i,:),xmax);
            newfitness=Func(newx(i,:)',FuncId);
            FEs=FEs+1;
            % Real time update
            if fitness(i)>newfitness
                fitness(i)=newfitness;
                x(i,:)=newx(i,:);
            elseif rand<P2&&ind(i)~=ind(1)
                fitness(i)=newfitness;
                x(i,:)=newx(i,:);
            end
            if gbestfitness>fitness(i)
                gbestfitness=fitness(i);
                gbestX=x(i,:);
            end
            gbesthistory(FEs)=gbestfitness;
        end
        fprintf("FEs: %d, fitness error: %e\n",FEs,gbestfitness);
        if FEs>=MaxFEs
            break;
        end
    end
    %% Deal with the situation of too little or too much evaluation
    if FEs<MaxFEs
        gbesthistory(FEs+1:MaxFEs)=gbestfitness;
    elseif FEs>MaxFEs
        gbesthistory(MaxFEs+1:end)=[];
    end
    end

Reply with the keyword GO in the card below to get the code for free.
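As a usage sketch, the function could be called along the following lines. It assumes the listing is saved as `GO_FEs.m` on the MATLAB path and that `unifrnd` is available. The sphere objective and all parameter values are placeholders chosen for illustration, not the CEC2017 setup used for the figures.

```matlab
% Usage sketch (assumed setup, not the post's experiment script).

% Hypothetical objective with the (column vector, function id) signature that
% GO_FEs passes to Func; the id is ignored by this toy sphere function.
Func = @(x, FuncId) sum(x.^2);

popsize   = 30;      % must be at least P1 = 5 for the index ranges in the listing
dimension = 10;
xmax      = 100;     % scalar bounds, as the listing assumes
xmin      = -100;
MaxFEs    = 3000;    % budget of function evaluations

if exist('GO_FEs', 'file') == 2
    % Run the optimizer only when the listing is actually available
    [gbestX, gbestfitness, gbesthistory] = ...
        GO_FEs(popsize, dimension, xmax, xmin, MaxFEs, Func, 1);
    fprintf('best fitness after %d evaluations: %e\n', numel(gbesthistory), gbestfitness);
else
    disp('GO_FEs.m not found on the path; skipping the run.');
end
```

To run a CEC2017 function instead, `Func` would be replaced by a handle to the benchmark routine and the last argument by the function number.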

More of the latest optimization algorithms from 2023 will be published in follow-up posts, so stay tuned.