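Contents

- 1. Introduction
- 2. Maven dependencies
- 3. Database
- 3.1 Creating the databases
- 3.2 Creating the tables
- 4. Configuration (choose one)
- 4.1 properties configuration
- 4.2 yml configuration
- 5. Precise sharding algorithm
- 5.1 Precise database sharding algorithm
- 5.2 Precise table sharding algorithm
- 6. Implementation
- 6.1 Entity layer
- 6.2 Persistence layer
- 6.3 Service layer
- 6.4 Test class
- 6.4.1 Saving order data
- 6.4.2 Querying an order by order id
- 6.4.3 Querying an order by order id and user id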

一、简介

在我之前的文章里,数据的分库分表都是基于行表达式的方式来实现的,看起来也蛮好用,也挺简单的,但是有时会有些复杂的规则,可能使用行表达式策略会很复杂或者实现不了,我们就讲另外一种分片策略,精确分片算法,通常用来处理=或者in条件的情况比较多。

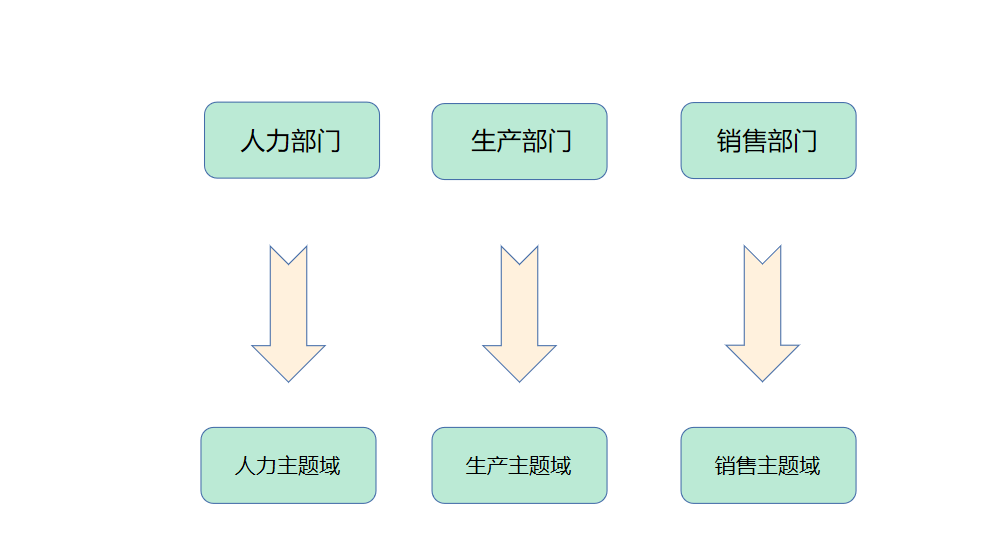

本文示例大概架构如下图:

2. Maven dependencies

pom.xml

<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>2.6.0</version>
        <relativePath/> <!-- lookup parent from repository -->
    </parent>

    <groupId>com.alian</groupId>
    <artifactId>sharding-jdbc</artifactId>
    <version>0.0.1-SNAPSHOT</version>
    <name>sharding-jdbc</name>
    <description>sharding-jdbc</description>

    <properties>
        <java.version>1.8</java.version>
    </properties>

    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-data-jpa</artifactId>
        </dependency>
        <dependency>
            <groupId>org.apache.shardingsphere</groupId>
            <artifactId>sharding-jdbc-spring-boot-starter</artifactId>
            <version>4.1.1</version>
        </dependency>
        <dependency>
            <groupId>com.alibaba</groupId>
            <artifactId>druid</artifactId>
            <version>1.2.15</version>
        </dependency>
        <dependency>
            <groupId>mysql</groupId>
            <artifactId>mysql-connector-java</artifactId>
            <version>8.0.26</version>
            <scope>runtime</scope>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-test</artifactId>
            <scope>test</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.commons</groupId>
            <artifactId>commons-lang3</artifactId>
            <version>3.12.0</version>
        </dependency>
        <dependency>
            <groupId>org.projectlombok</groupId>
            <artifactId>lombok</artifactId>
            <version>1.18.20</version>
        </dependency>
        <dependency>
            <groupId>junit</groupId>
            <artifactId>junit</artifactId>
            <version>4.12</version>
            <scope>test</scope>
        </dependency>
    </dependencies>

    <build>
        <plugins>
            <plugin>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-maven-plugin</artifactId>
            </plugin>
        </plugins>
    </build>
</project>

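Some readers may be using druid-spring-boot-starter instead of the plain druid artifact:

<dependency>
    <groupId>com.alibaba</groupId>
    <artifactId>druid-spring-boot-starter</artifactId>
    <version>1.2.6</version>
</dependency>

With that starter you may run into various problems that stop the setup from working, typically because Druid's auto-configuration tries to create its own data source. In that case, simply exclude it on the main class with @SpringBootApplication(exclude = {DruidDataSourceAutoConfigure.class}):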

package com.alian.shardingjdbc;

import com.alibaba.druid.spring.boot.autoconfigure.DruidDataSourceAutoConfigure;
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;

// With druid-spring-boot-starter, exclude Druid's auto-configuration.
// With the plain druid artifact, use a bare @SpringBootApplication and drop the Druid import.
@SpringBootApplication(exclude = {DruidDataSourceAutoConfigure.class})
public class ShardingJdbcApplication {

    public static void main(String[] args) {
        SpringApplication.run(ShardingJdbcApplication.class, args);
    }

}

3. Database

3.1 Creating the databases

CREATE DATABASE `sharding_9` DEFAULT CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci;

CREATE DATABASE `sharding_10` DEFAULT CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci;

CREATE DATABASE `sharding_11` DEFAULT CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci;

3.2 Creating the tables

Create two tables, tb_order_1 and tb_order_2, in each of the databases sharding_9, sharding_10 and sharding_11. Both tables have the same structure.

tb_order_1

CREATE TABLE `tb_order_1` (
  `order_id` bigint(20) NOT NULL COMMENT 'primary key',
  `user_id` int unsigned NOT NULL DEFAULT '0' COMMENT 'user id',
  `price` int unsigned NOT NULL DEFAULT '0' COMMENT 'price (unit: fen)',
  `order_status` tinyint unsigned NOT NULL DEFAULT '1' COMMENT 'order status (1: pending payment, 2: paid, 3: cancelled)',
  `order_time` datetime NOT NULL DEFAULT CURRENT_TIMESTAMP COMMENT 'creation time',
  `title` varchar(100) NOT NULL DEFAULT '' COMMENT 'order title',
  PRIMARY KEY (`order_id`),
  KEY `idx_user_id` (`user_id`),
  KEY `idx_order_time` (`order_time`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4 COMMENT='order table';

tb_order_2

CREATE TABLE `tb_order_2` (
  `order_id` bigint(20) NOT NULL COMMENT 'primary key',
  `user_id` int unsigned NOT NULL DEFAULT '0' COMMENT 'user id',
  `price` int unsigned NOT NULL DEFAULT '0' COMMENT 'price (unit: fen)',
  `order_status` tinyint unsigned NOT NULL DEFAULT '1' COMMENT 'order status (1: pending payment, 2: paid, 3: cancelled)',
  `order_time` datetime NOT NULL DEFAULT CURRENT_TIMESTAMP COMMENT 'creation time',
  `title` varchar(100) NOT NULL DEFAULT '' COMMENT 'order title',
  PRIMARY KEY (`order_id`),
  KEY `idx_user_id` (`user_id`),
  KEY `idx_order_time` (`order_time`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4 COMMENT='order table';

4. Configuration (choose one)

4.1 properties configuration

application.properties

server.port=8899
server.servlet.context-path=/sharding-jdbc

# Allow a bean definition to override an existing one with the same name
spring.main.allow-bean-definition-overriding=true

# Data source names, comma-separated
spring.shardingsphere.datasource.names=ds1,ds2,ds3
# Tables without sharding rules are located through the default data source
spring.shardingsphere.sharding.default-data-source-name=ds1

# sharding_9: connection pool class
spring.shardingsphere.datasource.ds1.type=com.alibaba.druid.pool.DruidDataSource
# sharding_9: JDBC driver class
spring.shardingsphere.datasource.ds1.driver-class-name=com.mysql.cj.jdbc.Driver
# sharding_9: JDBC url
spring.shardingsphere.datasource.ds1.url=jdbc:mysql://192.168.0.129:3306/sharding_9?serverTimezone=GMT%2B8&characterEncoding=utf8&useUnicode=true&useSSL=false&zeroDateTimeBehavior=CONVERT_TO_NULL&autoReconnect=true&allowMultiQueries=true&failOverReadOnly=false&connectTimeout=6000&maxReconnects=5
# sharding_9: user name
spring.shardingsphere.datasource.ds1.username=alian
# sharding_9: password
spring.shardingsphere.datasource.ds1.password=123456

# sharding_10: connection pool class
spring.shardingsphere.datasource.ds2.type=com.alibaba.druid.pool.DruidDataSource
# sharding_10: JDBC driver class
spring.shardingsphere.datasource.ds2.driver-class-name=com.mysql.cj.jdbc.Driver
# sharding_10: JDBC url
spring.shardingsphere.datasource.ds2.url=jdbc:mysql://192.168.0.129:3306/sharding_10?serverTimezone=GMT%2B8&characterEncoding=utf8&useUnicode=true&useSSL=false&zeroDateTimeBehavior=CONVERT_TO_NULL&autoReconnect=true&allowMultiQueries=true&failOverReadOnly=false&connectTimeout=6000&maxReconnects=5
# sharding_10: user name
spring.shardingsphere.datasource.ds2.username=alian
# sharding_10: password
spring.shardingsphere.datasource.ds2.password=123456

# sharding_11: connection pool class
spring.shardingsphere.datasource.ds3.type=com.alibaba.druid.pool.DruidDataSource
# sharding_11: JDBC driver class
spring.shardingsphere.datasource.ds3.driver-class-name=com.mysql.cj.jdbc.Driver
# sharding_11: JDBC url
spring.shardingsphere.datasource.ds3.url=jdbc:mysql://192.168.0.129:3306/sharding_11?serverTimezone=GMT%2B8&characterEncoding=utf8&useUnicode=true&useSSL=false&zeroDateTimeBehavior=CONVERT_TO_NULL&autoReconnect=true&allowMultiQueries=true&failOverReadOnly=false&connectTimeout=6000&maxReconnects=5
# sharding_11: user name
spring.shardingsphere.datasource.ds3.username=alian
# sharding_11: password
spring.shardingsphere.datasource.ds3.password=123456

# Database strategy: standard strategy with a precise sharding algorithm,
# which picks the database from the last digit of user_id
spring.shardingsphere.sharding.tables.tb_order.database-strategy.standard.sharding-column=user_id
spring.shardingsphere.sharding.tables.tb_order.database-strategy.standard.precise-algorithm-class-name=com.alian.shardingjdbc.algorithm.DatabasePreciseShardingAlgorithm

# Data nodes of tb_order, written as a Groovy inline expression. The logical table tb_order maps to:
# ds1.tb_order_1, ds1.tb_order_2, ds2.tb_order_1, ds2.tb_order_2, ds3.tb_order_1, ds3.tb_order_2
spring.shardingsphere.sharding.tables.tb_order.actual-data-nodes=ds$->{1..3}.tb_order_$->{1..2}

# Table strategy: standard strategy with a precise sharding algorithm
# Sharding key of the tb_order table strategy
spring.shardingsphere.sharding.tables.tb_order.table-strategy.standard.sharding-column=order_id
# Custom precise sharding algorithm class of the tb_order table strategy (a Java class, not an inline expression)
spring.shardingsphere.sharding.tables.tb_order.table-strategy.standard.precise-algorithm-class-name=com.alian.shardingjdbc.algorithm.OrderTablePreciseShardingAlgorithm

# The generated key column of tb_order is order_id
spring.shardingsphere.sharding.tables.tb_order.key-generator.column=order_id
# Key generation strategy of tb_order: SNOWFLAKE
spring.shardingsphere.sharding.tables.tb_order.key-generator.type=SNOWFLAKE
# worker.id of the snowflake algorithm
spring.shardingsphere.sharding.tables.tb_order.key-generator.props.worker.id=100
# max.tolerate.time.difference.milliseconds of the snowflake algorithm
spring.shardingsphere.sharding.tables.tb_order.key-generator.props.max.tolerate.time.difference.milliseconds=20

# Print SQL to the log
spring.shardingsphere.props.sql.show=true

4.2 yml configuration

application.yml

server:
  port: 8899
  servlet:
    context-path: /sharding-jdbc

spring:
  main:
    # Allow a bean definition to override an existing one with the same name
    allow-bean-definition-overriding: true
  shardingsphere:
    props:
      sql:
        # Print SQL to the log
        show: true
    datasource:
      # Data source names, comma-separated
      names: ds1,ds2,ds3
      ds1:
        # Connection pool class
        type: com.alibaba.druid.pool.DruidDataSource
        # JDBC driver class
        driver-class-name: com.mysql.cj.jdbc.Driver
        # JDBC url
        url: jdbc:mysql://192.168.0.129:3306/sharding_9?serverTimezone=GMT%2B8&characterEncoding=utf8&useUnicode=true&useSSL=false&zeroDateTimeBehavior=CONVERT_TO_NULL&autoReconnect=true&allowMultiQueries=true&failOverReadOnly=false&connectTimeout=6000&maxReconnects=5
        # User name
        username: alian
        # Password
        password: 123456
      ds2:
        # Connection pool class
        type: com.alibaba.druid.pool.DruidDataSource
        # JDBC driver class
        driver-class-name: com.mysql.cj.jdbc.Driver
        # JDBC url
        url: jdbc:mysql://192.168.0.129:3306/sharding_10?serverTimezone=GMT%2B8&characterEncoding=utf8&useUnicode=true&useSSL=false&zeroDateTimeBehavior=CONVERT_TO_NULL&autoReconnect=true&allowMultiQueries=true&failOverReadOnly=false&connectTimeout=6000&maxReconnects=5
        # User name
        username: alian
        # Password
        password: 123456
      ds3:
        # Connection pool class
        type: com.alibaba.druid.pool.DruidDataSource
        # JDBC driver class
        driver-class-name: com.mysql.cj.jdbc.Driver
        # JDBC url
        url: jdbc:mysql://192.168.0.129:3306/sharding_11?serverTimezone=GMT%2B8&characterEncoding=utf8&useUnicode=true&useSSL=false&zeroDateTimeBehavior=CONVERT_TO_NULL&autoReconnect=true&allowMultiQueries=true&failOverReadOnly=false&connectTimeout=6000&maxReconnects=5
        # User name
        username: alian
        # Password
        password: 123456
    sharding:
      # Tables without sharding rules are located through the default data source
      default-data-source-name: ds1
      tables:
        tb_order:
          # Data source name + table name, separated by a dot; multiple nodes are comma-separated; inline expressions are supported
          actual-data-nodes: ds$->{1..3}.tb_order_$->{1..2}
          # Database sharding strategy
          database-strategy:
            # Standard strategy with a precise sharding algorithm
            standard:
              # Sharding key
              sharding-column: user_id
              # Precise sharding algorithm class, used for = and IN
              precise-algorithm-class-name: com.alian.shardingjdbc.algorithm.DatabasePreciseShardingAlgorithm
          # Table sharding strategy
          table-strategy:
            # Standard strategy with a precise sharding algorithm
            standard:
              # Sharding key
              sharding-column: order_id
              # Precise sharding algorithm class, used for = and IN
              precise-algorithm-class-name: com.alian.shardingjdbc.algorithm.OrderTablePreciseShardingAlgorithm
          # Key generator
          key-generator:
            # Generated key column; if omitted, no key generator is used
            column: order_id
            # Key generator type; if omitted, the default generator is used (SNOWFLAKE/UUID)
            type: SNOWFLAKE
            # Properties for SnowflakeShardingKeyGenerator
            props:
              # worker.id of the SNOWFLAKE algorithm
              worker:
                id: 100
              # max.tolerate.time.difference.milliseconds of the SNOWFLAKE algorithm
              max:
                tolerate:
                  time:
                    difference:
                      milliseconds: 20

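- Both database and table sharding are done with precise sharding algorithms.
- database-strategy uses the standard strategy with a precise sharding algorithm; the implementation is our custom class com.alian.shardingjdbc.algorithm.DatabasePreciseShardingAlgorithm
- table-strategy uses the standard strategy with a precise sharding algorithm; the implementation is our custom class com.alian.shardingjdbc.algorithm.OrderTablePreciseShardingAlgorithm
- actual-data-nodes uses the Groovy inline expression ds$->{1..3}.tb_order_$->{1..2}. The data sources are ds1, ds2 and ds3, and the physical tables are tb_order_1 and tb_order_2, which gives six data nodes in total.
- key-generator: the key generator needs a column and a type. With SNOWFLAKE it is best to also set the two props entries worker.id and max.tolerate.time.difference.milliseconds.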
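To make the actual-data-nodes expansion concrete, here is a minimal Java sketch that enumerates the six data nodes. The class DataNodeExpansion and its expand method are hypothetical helpers written only for illustration. They are not ShardingSphere APIs; ShardingSphere evaluates the inline expression internally.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper (not part of ShardingSphere): mimics what the inline
// expression ds$->{1..3}.tb_order_$->{1..2} expands to.
class DataNodeExpansion {

    // Builds "ds<N>.tb_order_<M>" for every data source / table combination.
    static List<String> expand(int dataSources, int tablesPerSource) {
        List<String> nodes = new ArrayList<>();
        for (int ds = 1; ds <= dataSources; ds++) {
            for (int table = 1; table <= tablesPerSource; table++) {
                nodes.add("ds" + ds + ".tb_order_" + table);
            }
        }
        return nodes;
    }

    public static void main(String[] args) {
        // Prints six lines, from ds1.tb_order_1 to ds3.tb_order_2
        expand(3, 2).forEach(System.out::println);
    }
}
```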

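5. Precise sharding algorithm

With the inline expression strategy you usually only need to configure an expression and write no Java code. Some requirements, however, lead to a very complex expression or cannot be expressed at all. Suppose the database must be chosen from userId according to the following rule:

| Last digit of user id | Target data source |
|---|---|
| 0, 8 | ds1 |
| 1, 3, 6, 9 | ds2 |
| 2, 4, 5, 7 | ds3 |

An inline expression for this rule would be very complicated, so we use a custom sharding algorithm instead, in this case a precise sharding algorithm.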

5.1 Precise database sharding algorithm

DatabasePreciseShardingAlgorithm.java

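package com.alian.shardingjdbc.algorithm;

import lombok.extern.slf4j.Slf4j;
import org.apache.commons.lang3.ArrayUtils;
import org.apache.shardingsphere.api.sharding.standard.PreciseShardingAlgorithm;
import org.apache.shardingsphere.api.sharding.standard.PreciseShardingValue;

import java.util.Collection;

@Slf4j
public class DatabasePreciseShardingAlgorithm implements PreciseShardingAlgorithm<Integer> {

    public DatabasePreciseShardingAlgorithm() {
    }

    @Override
    public String doSharding(Collection<String> dataSourceCollection, PreciseShardingValue<Integer> preciseShardingValue) {
        // Value of the sharding key (user_id)
        Integer shardingValue = preciseShardingValue.getValue();
        // Logical table name
        String logicTableName = preciseShardingValue.getLogicTableName();
        // Log message reads: "sharding key value: {}, logical table: {}"
        log.info("分片键的值:{},逻辑表:{}", shardingValue, logicTableName);
        // Take the sharding key modulo 10 to get its last digit (0-9).
        // Only three databases are configured here; adjust to your needs.
        // 0, 8       -> ds1
        // 1, 3, 6, 9 -> ds2
        // 2, 4, 5, 7 -> ds3
        int index = shardingValue % 10;
        int sourceTarget;
        if (ArrayUtils.contains(new int[]{0, 8}, index)) {
            sourceTarget = 1;
        } else if (ArrayUtils.contains(new int[]{1, 3, 6, 9}, index)) {
            sourceTarget = 2;
        } else {
            sourceTarget = 3;
        }
        // Walk through the available data sources
        for (String databaseSource : dataSourceCollection) {
            // Return the data source whose name ends with the target number
            if (databaseSource.endsWith(sourceTarget + "")) {
                return databaseSource;
            }
        }
        // No matching data source: fail loudly
        throw new UnsupportedOperationException("No data source ending with " + sourceTarget + " for user_id " + shardingValue);
    }

}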

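In practice this is straightforward: implement the interface PreciseShardingAlgorithm<Integer>. Note that the type parameter Integer is the type of the sharding key userId. Then override doSharding, which takes two parameters. The first is the collection of data source names; the second is the sharding value object, from which we can read the sharding key value and the logical table name, as in the code above.

For database sharding, the job of the custom algorithm is to work out which data source (databaseSource) to use.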
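The mapping can be checked without starting Spring or ShardingSphere. The sketch below is a standalone illustration under stated assumptions: the class UserIdRouting and the method dataSourceFor are hypothetical names, and it uses Math.floorMod where the algorithm above uses %, which only makes a difference for negative ids.

```java
// Hypothetical standalone check of the routing table; not the production algorithm.
class UserIdRouting {

    // Maps the last digit of user_id to a data source name, following the table above.
    static String dataSourceFor(int userId) {
        int lastDigit = Math.floorMod(userId, 10);
        switch (lastDigit) {
            case 0:
            case 8:
                return "ds1";
            case 1:
            case 3:
            case 6:
            case 9:
                return "ds2";
            default: // 2, 4, 5, 7
                return "ds3";
        }
    }

    public static void main(String[] args) {
        // The test data in this article uses user ids 1000 to 1009
        for (int userId = 1000; userId <= 1009; userId++) {
            System.out.println(userId + " -> " + dataSourceFor(userId));
        }
    }
}
```

For example, user id 1009 ends in 9 and therefore maps to ds2.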

5.2 Precise table sharding algorithm

OrderTablePreciseShardingAlgorithm.java

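package com.alian.shardingjdbc.algorithm;

import lombok.extern.slf4j.Slf4j;
import org.apache.shardingsphere.api.sharding.standard.PreciseShardingAlgorithm;
import org.apache.shardingsphere.api.sharding.standard.PreciseShardingValue;

import java.util.Collection;

@Slf4j
public class OrderTablePreciseShardingAlgorithm implements PreciseShardingAlgorithm<Long> {

    public OrderTablePreciseShardingAlgorithm() {
    }

    @Override
    public String doSharding(Collection<String> tableCollection, PreciseShardingValue<Long> preciseShardingValue) {
        // Value of the sharding key (order_id)
        Long shardingValue = preciseShardingValue.getValue();
        // Shard by modulo; the result ranges from 0 to tableCollection.size() - 1
        long index = shardingValue % tableCollection.size();
        // Logical table name
        String logicTableName = preciseShardingValue.getLogicTableName();
        // Physical table name; table suffixes start at 1, hence index + 1
        String physicalTableName = logicTableName + "_" + (index + 1);
        // Log message reads: "sharding key value: {}, physical table name: {}"
        log.info("分片键的值:{},物理表名:{}", shardingValue, physicalTableName);
        // Return the table only if it actually exists
        if (tableCollection.contains(physicalTableName)) {
            return physicalTableName;
        }
        // No matching table: fail loudly
        throw new UnsupportedOperationException("No physical table " + physicalTableName + " for order_id " + shardingValue);
    }

}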

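Precise table sharding also implements the interface PreciseShardingAlgorithm<Long>. Note that Long is the type of the sharding key orderId. Then override doSharding, which takes two parameters. The first is the collection of physical table names; the second is the sharding value object, from which we can read the sharding key value and the logical table name, as in the code above.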
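As a small worked example of the table rule, the sketch below reproduces the index + 1 naming from the algorithm above as a pure function. The class OrderTableRouting, the method tableFor, and the ids 10 and 11 are made up for illustration. It uses Math.floorMod instead of %, an assumption added here so that a negative id could never produce a negative index.

```java
// Hypothetical standalone version of the table rule; not the production algorithm.
class OrderTableRouting {

    // order_id modulo the table count gives 0 .. tableCount - 1; table suffixes start at 1.
    static String tableFor(long orderId, int tableCount) {
        long index = Math.floorMod(orderId, (long) tableCount);
        return "tb_order_" + (index + 1);
    }

    public static void main(String[] args) {
        System.out.println(tableFor(10L, 2)); // 10 % 2 = 0 -> tb_order_1
        System.out.println(tableFor(11L, 2)); // 11 % 2 = 1 -> tb_order_2
    }
}
```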

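Here we simply shard by modulo, but through our own method rather than an inline expression. This leaves you free to design whatever sharding algorithm you need; for example, you could use the gene method. The modulo here is only for ease of demonstration.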
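The gene method mentioned above is not implemented in this article. As a rough sketch of the idea only: part of user_id (the "gene") is embedded into order_id when the id is created, so that the database can later be derived from order_id alone. Everything below is an assumption made for illustration: the class GeneIdSketch, the decimal layout (base id times 10 plus the last digit of user_id), and the small base id. Real implementations usually reserve bits inside the id instead, and a full-size snowflake id multiplied by 10 would eventually overflow a long, which is why the sketch uses the exact arithmetic methods.

```java
// Hypothetical sketch of the "gene" idea; not ShardingSphere's key generator.
class GeneIdSketch {

    // Appends the last digit of userId to baseId as the final decimal digit.
    // Math.multiplyExact / addExact throw ArithmeticException on overflow.
    static long withGene(long baseId, int userId) {
        long gene = Math.floorMod(userId, 10);
        return Math.addExact(Math.multiplyExact(baseId, 10L), gene);
    }

    // Recovers the gene (the user's last digit) from an order id built by withGene.
    static int geneOf(long orderId) {
        return (int) Math.floorMod(orderId, 10L);
    }

    public static void main(String[] args) {
        long orderId = withGene(12345L, 1009);
        System.out.println(orderId);         // 123459
        System.out.println(geneOf(orderId)); // 9, the same last digit as user 1009
    }
}
```

Feeding geneOf(orderId) into the same last-digit mapping used for user_id would select the same data source, so a query by order_id alone would no longer need to visit every database. Note that with this layout the last digit of order_id is fixed by the user, so the table rule would have to use a different part of the id.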

6. Implementation

6.1 Entity layer

Order.java

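import lombok.Data;

import javax.persistence.Column;
import javax.persistence.Entity;
import javax.persistence.GeneratedValue;
import javax.persistence.GenerationType;
import javax.persistence.Id;
import javax.persistence.Table;
import java.io.Serializable;
import java.util.Date;

@Data
@Entity
@Table(name = "tb_order")
public class Order implements Serializable {

    @Id
    @GeneratedValue(strategy = GenerationType.IDENTITY)
    @Column(name = "order_id")
    private Long orderId;

    @Column(name = "user_id")
    private Integer userId;

    @Column(name = "price")
    private Integer price;

    @Column(name = "order_status")
    private Integer orderStatus;

    @Column(name = "title")
    private String title;

    @Column(name = "order_time")
    private Date orderTime;

}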

6.2 Persistence layer

OrderRepository.java

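import org.springframework.data.repository.PagingAndSortingRepository;
// Also import Order from your own entity package

public interface OrderRepository extends PagingAndSortingRepository<Order, Long> {

    /**
     * Finds an order by order id.
     *
     * @param orderId the order id
     * @return the matching order, or null if there is none
     */
    Order findOrderByOrderId(Long orderId);

    /**
     * Finds an order by order id and user id.
     *
     * @param orderId the order id
     * @param userId  the user id
     * @return the matching order, or null if there is none
     */
    Order findOrderByOrderIdAndUserId(Long orderId, Integer userId);

}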

6.3 Service layer

OrderService.java

import lombok.extern.slf4j.Slf4j;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Service;

@Slf4j
@Service
public class OrderService {

    @Autowired
    private OrderRepository orderRepository;

    public void saveOrder(Order order) {
        orderRepository.save(order);
    }

    public Order queryOrder(Long orderId) {
        return orderRepository.findOrderByOrderId(orderId);
    }

    public Order findOrderByOrderIdAndUserId(Long orderId, Integer userId) {
        return orderRepository.findOrderByOrderIdAndUserId(orderId, userId);
    }

}

6.4 Test class

OrderTests.java

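import lombok.extern.slf4j.Slf4j;
import org.junit.Test;
import org.junit.runner.RunWith;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.test.context.SpringBootTest;
import org.springframework.test.context.junit4.SpringJUnit4ClassRunner;

import java.util.Date;

@Slf4j
@RunWith(SpringJUnit4ClassRunner.class)
@SpringBootTest
public class OrderTests {

    @Autowired
    private OrderService orderService;

    @Test
    public void saveOrder() {
        for (int i = 0; i < 20; i++) {
            Order order = new Order();
            // Random user id between 1000 and 1009
            int userId = (int) Math.round(Math.random() * (1009 - 1000) + 1000);
            order.setUserId(userId);
            // Random price between 5000 and 10000 fen (50 to 100 yuan)
            int price = (int) Math.round(Math.random() * (10000 - 5000) + 5000);
            order.setPrice(price);
            order.setOrderStatus(2);
            order.setOrderTime(new Date());
            order.setTitle("");
            orderService.saveOrder(order);
        }
    }

    @Test
    public void queryOrder() {
        // Use an order_id that exists in your own data
        Long orderId = 875112578379300864L;
        Order order = orderService.queryOrder(orderId);
        // Log message reads: "query result: {}"
        log.info("查询的结果:{}", order);
    }

    @Test
    public void findOrderByOrderIdAndUserId() {
        // Use an order_id and user_id that exist in your own data
        Long orderId = 875112578379300864L;
        Integer userId = 1009;
        Order order = orderService.findOrderByOrderIdAndUserId(orderId, userId);
        log.info("查询的结果:{}", order);
    }

}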

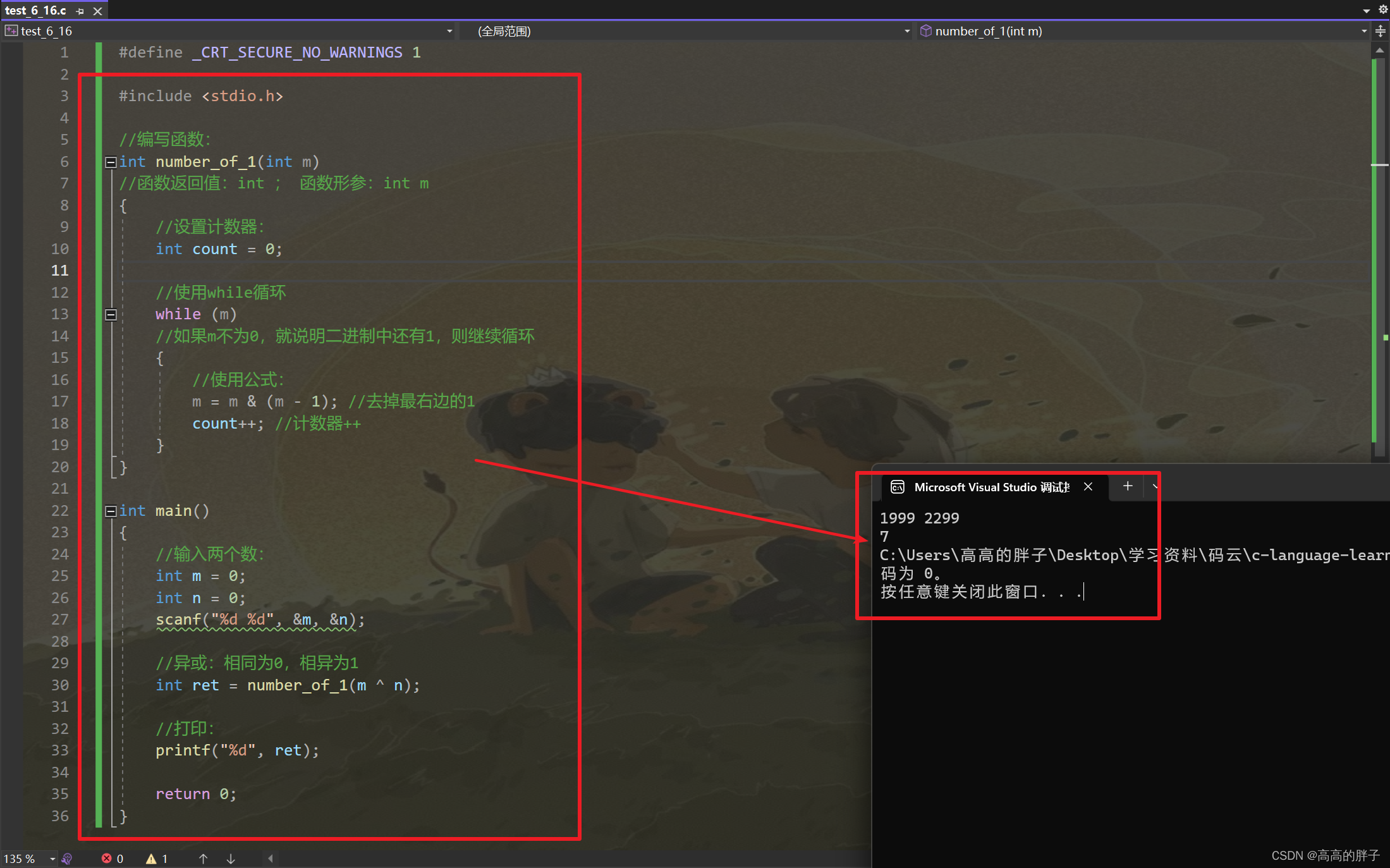

6.4.1、保存订单数据

效果图:

从上面的数据来看,满足我们分库分表的要求的,实现都是基于我们自定义的算法实现。

6.4.2 Querying an order by order id

@Test
public void queryOrder() {
    Long orderId = 875112578379300864L;
    Order order = orderService.queryOrder(orderId);
    log.info("查询的结果:{}", order);
}

20:37:23 575 INFO [main]:分片键的值:875112578379300864,物理表名:tb_order_2

20:37:23 575 INFO [main]:分片键的值:875112578379300864,物理表名:tb_order_2

20:37:23 575 INFO [main]:分片键的值:875112578379300864,物理表名:tb_order_2

20:37:23 595 INFO [main]:Logic SQL: select order0_.order_id as order_id1_0_, order0_.order_status as order_st2_0_, order0_.order_time as order_ti3_0_, order0_.price as price4_0_, order0_.title as title5_0_, order0_.user_id as user_id6_0_ from tb_order order0_ where order0_.order_id=?

20:37:23 595 INFO [main]:SQLStatement: SelectStatementContext(super=CommonSQLStatementContext(sqlStatement=org.apache.shardingsphere.sql.parser.sql.statement.dml.SelectStatement@28b68067, tablesContext=org.apache.shardingsphere.sql.parser.binder.segment.table.TablesContext@19540247), tablesContext=org.apache.shardingsphere.sql.parser.binder.segment.table.TablesContext@19540247, projectionsContext=ProjectionsContext(startIndex=7, stopIndex=200, distinctRow=false, projections=[ColumnProjection(owner=order0_, name=order_id, alias=Optional[order_id1_0_]), ColumnProjection(owner=order0_, name=order_status, alias=Optional[order_st2_0_]), ColumnProjection(owner=order0_, name=order_time, alias=Optional[order_ti3_0_]), ColumnProjection(owner=order0_, name=price, alias=Optional[price4_0_]), ColumnProjection(owner=order0_, name=title, alias=Optional[title5_0_]), ColumnProjection(owner=order0_, name=user_id, alias=Optional[user_id6_0_])]), groupByContext=org.apache.shardingsphere.sql.parser.binder.segment.select.groupby.GroupByContext@acb1c9c, orderByContext=org.apache.shardingsphere.sql.parser.binder.segment.select.orderby.OrderByContext@1c681761, paginationContext=org.apache.shardingsphere.sql.parser.binder.segment.select.pagination.PaginationContext@411933, containsSubquery=false)

20:37:23 595 INFO [main]:Actual SQL: ds1 ::: select order0_.order_id as order_id1_0_, order0_.order_status as order_st2_0_, order0_.order_time as order_ti3_0_, order0_.price as price4_0_, order0_.title as title5_0_, order0_.user_id as user_id6_0_ from tb_order_2 order0_ where order0_.order_id=? ::: [875112578379300864]

20:37:23 595 INFO [main]:Actual SQL: ds2 ::: select order0_.order_id as order_id1_0_, order0_.order_status as order_st2_0_, order0_.order_time as order_ti3_0_, order0_.price as price4_0_, order0_.title as title5_0_, order0_.user_id as user_id6_0_ from tb_order_2 order0_ where order0_.order_id=? ::: [875112578379300864]

20:37:23 595 INFO [main]:Actual SQL: ds3 ::: select order0_.order_id as order_id1_0_, order0_.order_status as order_st2_0_, order0_.order_time as order_ti3_0_, order0_.price as price4_0_, order0_.title as title5_0_, order0_.user_id as user_id6_0_ from tb_order_2 order0_ where order0_.order_id=? ::: [875112578379300864]

20:37:23 640 INFO [main]:查询的结果:Order(orderId=875112578379300864, userId=1009, price=7811, orderStatus=2, title=, orderTime=2023-06-12 20:24:57.0)

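From the output above we can see that when we query the record whose order_id is 875112578379300864, the table is resolved to tb_order_2, because tables are sharded by order_id modulo. The query carries no information about which database holds the row, however, so ds1, ds2 and ds3 are all queried. Is there a way to improve this?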

6.4.3 Querying an order by order id and user id

@Test
public void findOrderByOrderIdAndUserId() {
    Long orderId = 875112578379300864L;
    Integer userId = 1009;
    Order order = orderService.findOrderByOrderIdAndUserId(orderId, userId);
    log.info("查询的结果:{}", order);
}

20:41:09 242 INFO [main]:分片键的值:1009,逻辑表:tb_order

20:41:09 246 INFO [main]:分片键的值:875112578379300864,物理表名:tb_order_2

20:41:09 264 INFO [main]:Logic SQL: select order0_.order_id as order_id1_0_, order0_.order_status as order_st2_0_, order0_.order_time as order_ti3_0_, order0_.price as price4_0_, order0_.title as title5_0_, order0_.user_id as user_id6_0_ from tb_order order0_ where order0_.order_id=? and order0_.user_id=?

20:41:09 264 INFO [main]:SQLStatement: SelectStatementContext(super=CommonSQLStatementContext(sqlStatement=org.apache.shardingsphere.sql.parser.sql.statement.dml.SelectStatement@58d79479, tablesContext=org.apache.shardingsphere.sql.parser.binder.segment.table.TablesContext@102c24d1), tablesContext=org.apache.shardingsphere.sql.parser.binder.segment.table.TablesContext@102c24d1, projectionsContext=ProjectionsContext(startIndex=7, stopIndex=200, distinctRow=false, projections=[ColumnProjection(owner=order0_, name=order_id, alias=Optional[order_id1_0_]), ColumnProjection(owner=order0_, name=order_status, alias=Optional[order_st2_0_]), ColumnProjection(owner=order0_, name=order_time, alias=Optional[order_ti3_0_]), ColumnProjection(owner=order0_, name=price, alias=Optional[price4_0_]), ColumnProjection(owner=order0_, name=title, alias=Optional[title5_0_]), ColumnProjection(owner=order0_, name=user_id, alias=Optional[user_id6_0_])]), groupByContext=org.apache.shardingsphere.sql.parser.binder.segment.select.groupby.GroupByContext@495f7ca4, orderByContext=org.apache.shardingsphere.sql.parser.binder.segment.select.orderby.OrderByContext@700202fa, paginationContext=org.apache.shardingsphere.sql.parser.binder.segment.select.pagination.PaginationContext@141234df, containsSubquery=false)

20:41:09 264 INFO [main]:Actual SQL: ds2 ::: select order0_.order_id as order_id1_0_, order0_.order_status as order_st2_0_, order0_.order_time as order_ti3_0_, order0_.price as price4_0_, order0_.title as title5_0_, order0_.user_id as user_id6_0_ from tb_order_2 order0_ where order0_.order_id=? and order0_.user_id=? ::: [875112578379300864, 1009]

20:41:09 318 INFO [main]:查询的结果:Order(orderId=875112578379300864, userId=1009, price=7811, orderStatus=2, title=, orderTime=2023-06-12 20:24:57.0)

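From the output above we can see that when we query with order_id 875112578379300864 and user id 1009, the query goes straight to ds2.tb_order_2 instead of visiting every database. Because the condition includes userId, the data source is computed from it, while the order_id modulo determines the table. When one table is sharded across databases and tables by different columns, keep this in mind: including every sharding key in the query condition avoids the fan-out and makes the query more efficient.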
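The difference between the two queries can be modelled in a few lines. The sketch below is a simplified model of the routing behaviour seen in the logs, not ShardingSphere's actual router. The class RouteFanOutSketch and its methods are hypothetical, and the order id 11 is a made-up value.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified, hypothetical model of route fan-out; not ShardingSphere code.
class RouteFanOutSketch {

    static final String[] DATA_SOURCES = {"ds1", "ds2", "ds3"};

    // Last digit of user_id -> data source, following the table in section 5.
    static String dataSourceFor(int userId) {
        int lastDigit = Math.floorMod(userId, 10);
        if (lastDigit == 0 || lastDigit == 8) {
            return "ds1";
        }
        if (lastDigit == 1 || lastDigit == 3 || lastDigit == 6 || lastDigit == 9) {
            return "ds2";
        }
        return "ds3";
    }

    // order_id modulo 2, plus 1, gives the table suffix.
    static String tableFor(long orderId) {
        return "tb_order_" + (Math.floorMod(orderId, 2L) + 1);
    }

    // userId is null when the query has no user_id condition.
    static List<String> route(Integer userId, long orderId) {
        List<String> nodes = new ArrayList<>();
        String table = tableFor(orderId);
        if (userId == null) {
            // Database unknown: every data source has to be queried
            for (String ds : DATA_SOURCES) {
                nodes.add(ds + "." + table);
            }
        } else {
            nodes.add(dataSourceFor(userId) + "." + table);
        }
        return nodes;
    }

    public static void main(String[] args) {
        System.out.println(route(null, 11L)); // [ds1.tb_order_2, ds2.tb_order_2, ds3.tb_order_2]
        System.out.println(route(1009, 11L)); // [ds2.tb_order_2]
    }
}
```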
