Table of Contents

- 21 RBM (Restricted Boltzmann Machine)

- 21.1 Background

- 21.2 RBM Model Representation

- 21.3 The Inference Problem

- 21.4 The Marginal Problem

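21 RBM (Restricted Boltzmann Machine)

21.1 Background

What is a Boltzmann machine?

- Put simply, it is a Markov Random Field (an undirected graphical model) with one added condition.

- The condition is the introduction of hidden states, which splits the nodes of the undirected graph into two classes: observed variables and hidden variables.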

For an undirected graph, the most important property is its factorization, and a Boltzmann machine inherits it:

- The factorization of a Markov Random Field follows from the Hammersley-Clifford theorem, which decomposes the graph over its maximal cliques:

  $$P(X) = \frac{1}{Z} \prod_{i=1}^K \varphi_i(X_{C_i})$$

  Here $C_i$ is the $i$-th maximal clique, $\varphi_i(X_{C_i})$ is its potential function, and $Z$ is the normalization factor (the partition function). They satisfy:

  $$\begin{cases} \varphi_i(X_{C_i}) = \exp{\lbrace -E(X_{C_i}) \rbrace} > 0 \\ Z = \sum_X \prod_{i=1}^K \varphi_i(X_{C_i}) = \sum_{x_1} \sum_{x_2} \dots \sum_{x_p} \prod_{i=1}^K \varphi_i(X_{C_i}) \end{cases}$$

  where $E$ is the energy function, so the factorization can also be written as:

  $$P(X) = \frac{1}{Z} \exp{\lbrace - \sum_{i=1}^K E(X_{C_i}) \rbrace}$$

- Collecting $\sum_{i=1}^K E(X_{C_i})$ into a single energy $E(X)$ gives:

  $$P(X) = \frac{1}{Z} \exp{\lbrace - E(X) \rbrace}$$

  This is known as the Boltzmann distribution, or the Gibbs distribution.
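As a small numerical illustration of the Boltzmann distribution, the sketch below enumerates every state of a tiny binary system, evaluates an energy for each state, and normalizes $\exp\lbrace -E(X) \rbrace$ by the partition function $Z$. The quadratic energy, the coupling matrix `J`, and the function names are assumptions chosen for illustration; none of them come from the text above.

```python
import itertools
import numpy as np

def boltzmann_distribution(energy, num_vars):
    """Enumerate all binary states and return (states, probabilities).

    `energy` is any function mapping a 0/1 vector to a real number.
    Brute force: the cost grows as 2**num_vars, so this only suits tiny models.
    """
    states = np.array(list(itertools.product([0, 1], repeat=num_vars)))
    energies = np.array([energy(x) for x in states])
    unnormalized = np.exp(-energies)   # exp{-E(X)} is positive for every state
    Z = unnormalized.sum()             # partition function
    return states, unnormalized / Z

# Hypothetical pairwise couplings on 3 binary variables (illustrative only).
J = np.array([[0.0, 1.0, -0.5],
              [0.0, 0.0, 2.0],
              [0.0, 0.0, 0.0]])

def example_energy(x):
    # Assumed energy E(X) = -X^T J X; lower energy means higher probability.
    return -float(x @ J @ x)

states, probs = boltzmann_distribution(example_energy, num_vars=3)
```

Enumerating all $2^p$ states is only feasible for very small $p$, which is the practical reason the partition function is usually the bottleneck.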

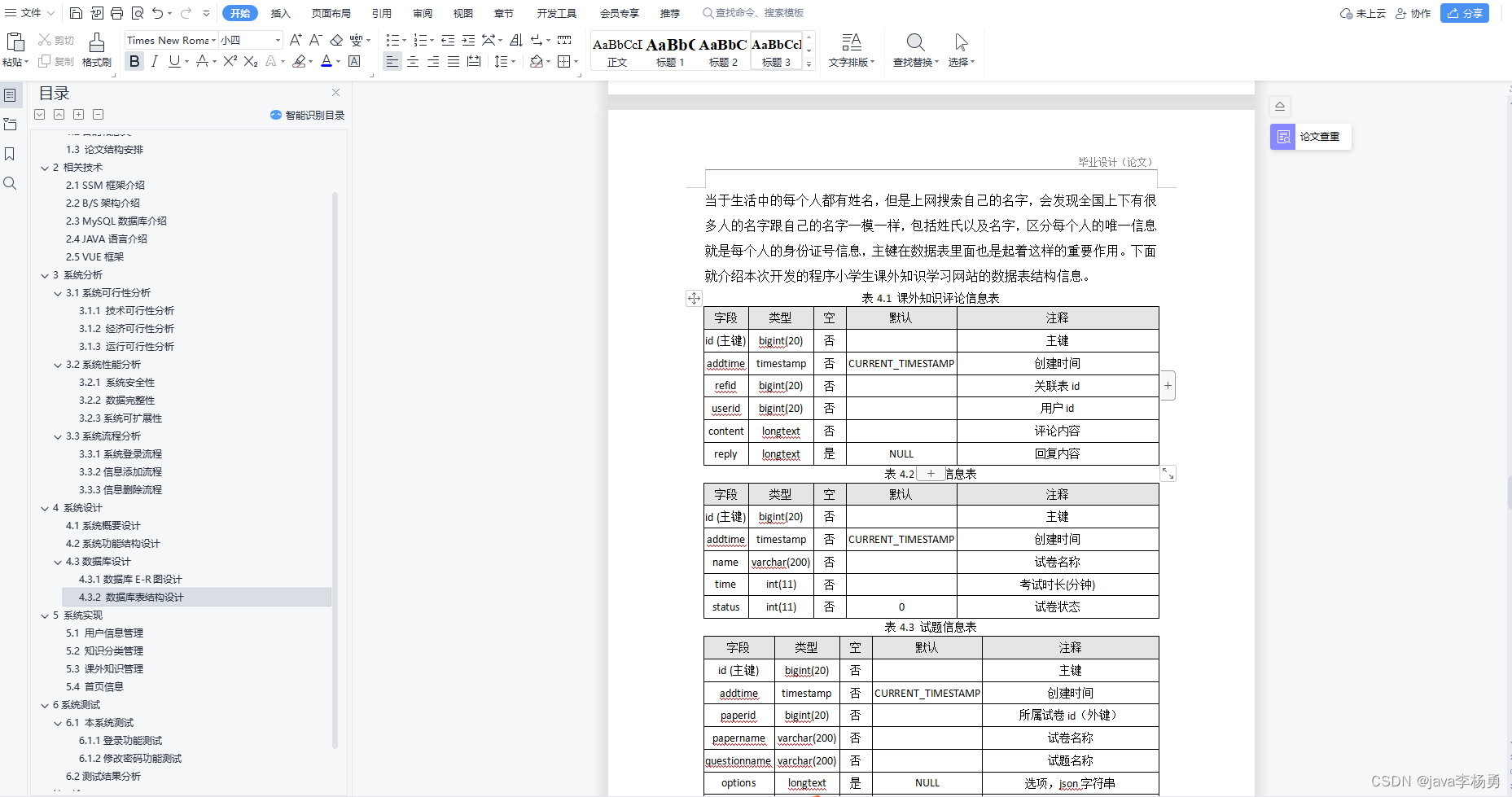

21.2 RBM Model Representation

First, some notation for the Boltzmann machine:

- The set of nodes (random variables) is $X = \lbrace x_1, x_2, \dots, x_p \rbrace = \lbrace h, v \rbrace$

- The hidden nodes are $H = \lbrace h_1, h_2, \dots, h_m \rbrace$ and the observed nodes are $V = \lbrace v_1, v_2, \dots, v_n \rbrace$, with $m+n=p$

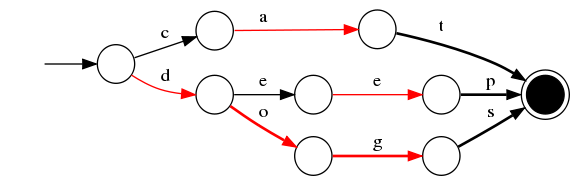

我们先要知道玻尔兹曼机是什么,然后知道为什么要引入受限玻尔兹曼机:

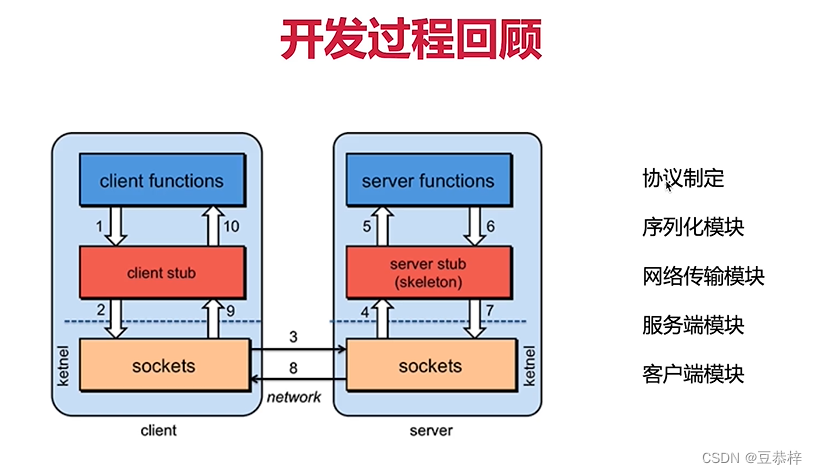

- 玻尔兹曼机就是一个有两种节点的无向图,但他有个问题,就是很难计算Inference。精确推断是intractable问题无法计算,近似推断通过MC的计算量也非常大。

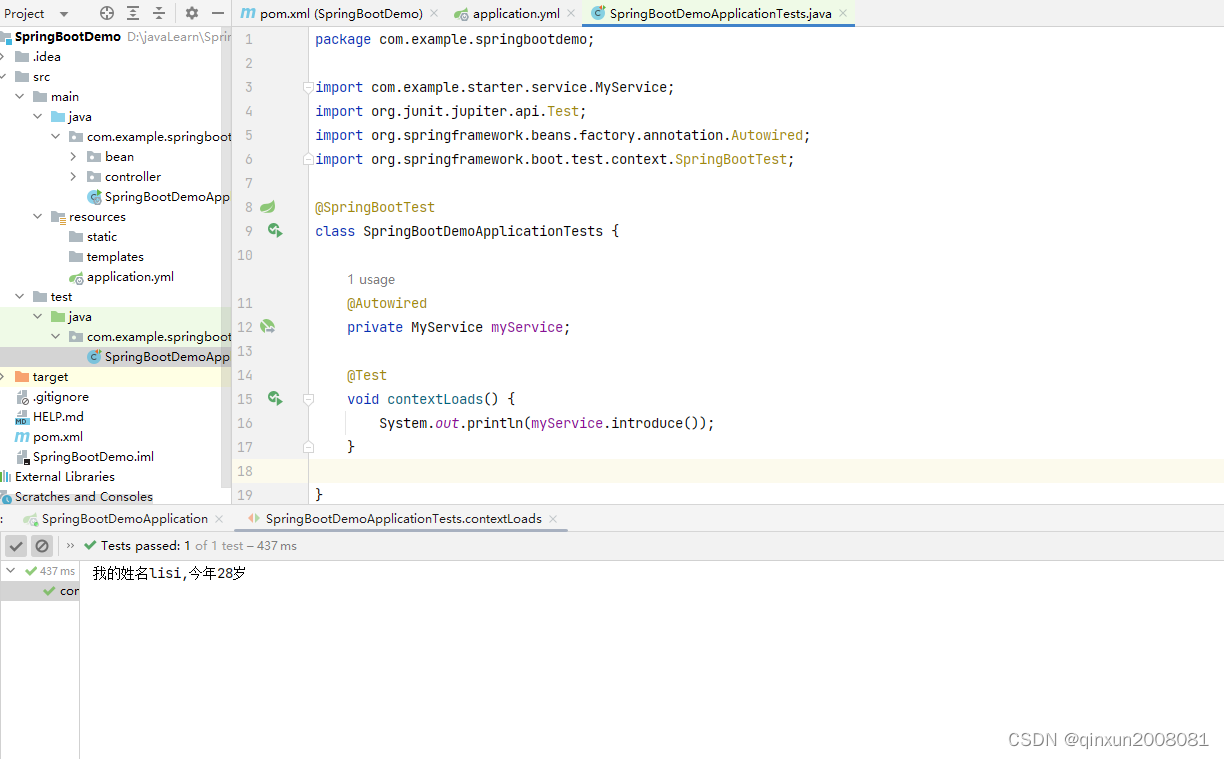

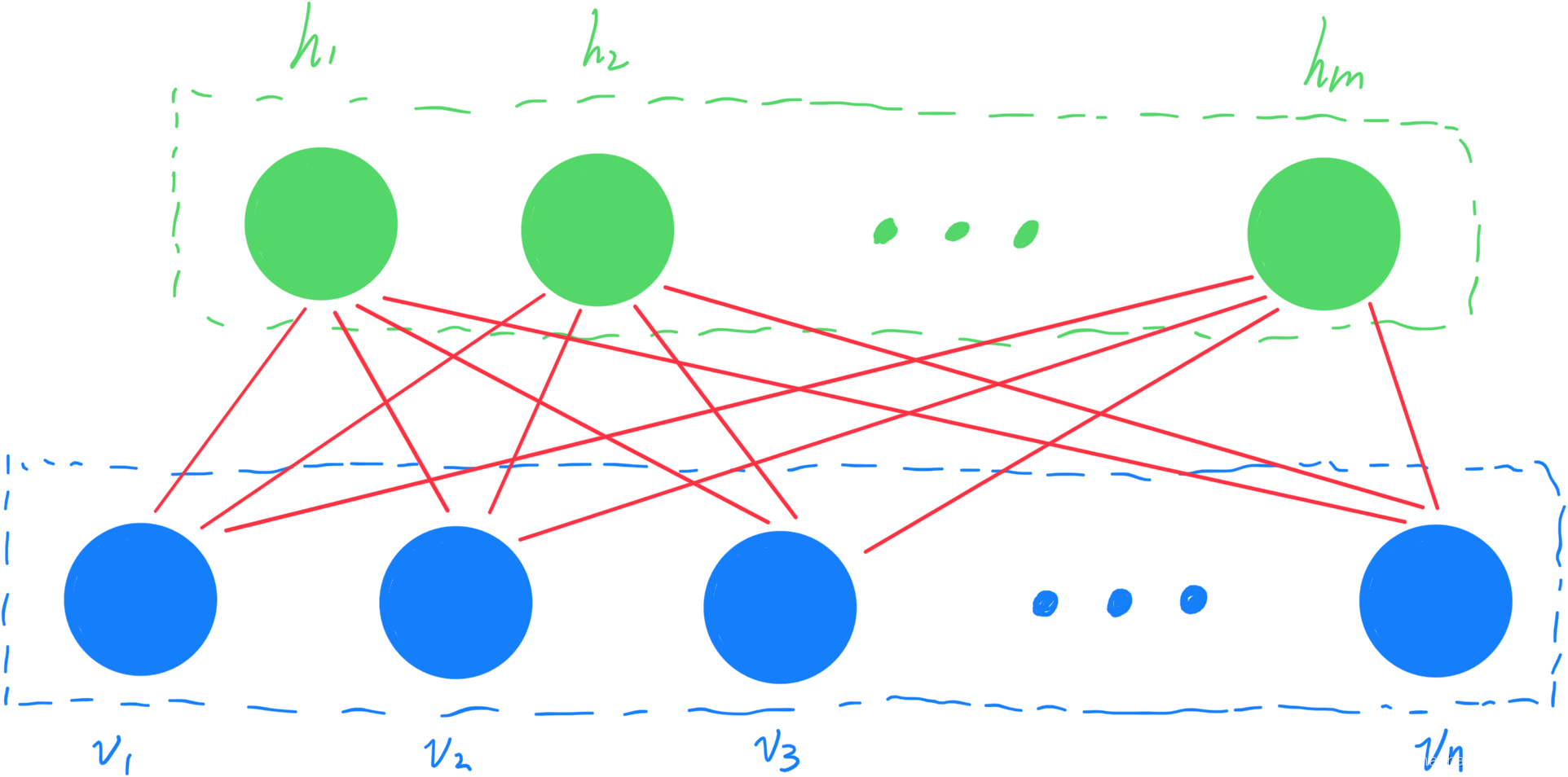

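- To simplify matters, the Restricted Boltzmann Machine is defined by one structural constraint on the graph: there are connections between $h$ and $v$, but no connections within $h$ or within $v$. As the figures show, this removes a large amount of computation.

Now that we know what a restricted Boltzmann machine looks like, we can derive its formulas: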

- Following the idea of a factor graph, the relations in the graph split into three parts: terms involving the observed nodes (parameter $\alpha$), terms involving the hidden nodes (parameter $\beta$), and terms for the edges joining the two kinds of nodes (parameter $W$).

- Write the known quantities in matrix form:

  $$X = \begin{pmatrix} x_1 \\ x_2 \\ \dots \\ x_p \end{pmatrix} = \begin{pmatrix} H \\ V \end{pmatrix}, \quad H = \begin{pmatrix} h_1 \\ h_2 \\ \dots \\ h_m \end{pmatrix}, \quad V = \begin{pmatrix} v_1 \\ v_2 \\ \dots \\ v_n \end{pmatrix}, \quad p=m+n$$

- Combining the two points above gives:

  $$\begin{cases} P(X) = P(V, H) = \frac{1}{Z} \exp{\lbrace - E(V, H) \rbrace} = \frac{1}{Z} \exp{\lbrace {H^T W V} + {\alpha^T V} + {\beta^T H} \rbrace} \\ E(V, H) = - {({H^T W V} + {\alpha^T V} + {\beta^T H})} \end{cases}$$

  That is, the formula comes from the three kinds of relations above, each with its own parameter.

- The expression can be expanded further:

  $$\begin{aligned} P(V, H) &= \frac{1}{Z} \exp{\lbrace {H^T W V} + {\alpha^T V} + {\beta^T H} \rbrace} \\ &= \frac{1}{Z} \exp{\lbrace {H^T W V} \rbrace} \cdot \exp{\lbrace {\alpha^T V} \rbrace} \cdot \exp{\lbrace {\beta^T H} \rbrace} \\ &= \frac{1}{Z} \prod_{i=1}^m \prod_{j=1}^n \exp{\lbrace {h_i w_{ij} v_j} \rbrace} \cdot \prod_{j=1}^n \exp{\lbrace {\alpha_j v_j} \rbrace} \cdot \prod_{i=1}^m \exp{\lbrace {\beta_i h_i} \rbrace} \end{aligned}$$

So the joint distribution of the RBM can finally be written as:

$$P(V, H) = \frac{1}{Z} \prod_{i=1}^m \prod_{j=1}^n \exp{\lbrace {h_i w_{ij} v_j} \rbrace} \cdot \prod_{j=1}^n \exp{\lbrace {\alpha_j v_j} \rbrace} \cdot \prod_{i=1}^m \exp{\lbrace {\beta_i h_i} \rbrace}$$
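The factorized form can be checked numerically on a tiny model. The sketch below evaluates $E(V, H)$ in matrix form, builds the full joint table by brute force, and compares the product-of-exponentials form with $\exp\lbrace -E(V, H) \rbrace$. Treating $v$ as binary, the random parameter values, and all function names are assumptions made for this illustration only.

```python
import itertools
import numpy as np

def rbm_energy(v, h, W, alpha, beta):
    """E(V, H) = -(H^T W V + alpha^T V + beta^T H), with W of shape (m, n)."""
    return -(h @ W @ v + alpha @ v + beta @ h)

def rbm_joint_table(W, alpha, beta):
    """Brute-force P(V, H) for binary v and h, keyed by (v, h) tuples."""
    m, n = W.shape
    weights = {}
    for v in itertools.product([0, 1], repeat=n):
        for h in itertools.product([0, 1], repeat=m):
            e = rbm_energy(np.array(v), np.array(h), W, alpha, beta)
            weights[(v, h)] = np.exp(-e)
    Z = sum(weights.values())          # partition function over all (v, h)
    return {k: w / Z for k, w in weights.items()}

def unnormalized_product_form(v, h, W, alpha, beta):
    """Product of exp{h_i w_ij v_j}, exp{alpha_j v_j} and exp{beta_i h_i}."""
    m, n = W.shape
    edge = np.prod([np.exp(h[i] * W[i, j] * v[j])
                    for i in range(m) for j in range(n)])
    visible = np.prod([np.exp(alpha[j] * v[j]) for j in range(n)])
    hidden = np.prod([np.exp(beta[i] * h[i]) for i in range(m)])
    return edge * visible * hidden

rng = np.random.default_rng(0)
m, n = 2, 3                            # 2 hidden and 3 visible units (arbitrary)
W = rng.normal(size=(m, n))
alpha = rng.normal(size=n)
beta = rng.normal(size=m)
joint = rbm_joint_table(W, alpha, beta)
```

For any pair `(v, h)`, `unnormalized_product_form(v, h, W, alpha, beta)` should agree with `np.exp(-rbm_energy(v, h, W, alpha, beta))` up to floating-point error, since the two are the same expression written in different forms.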

21.3 The Inference Problem

Inference in an RBM means computing the posteriors $P(H|V)$ and $P(V|H)$. Both follow from the conditional independence built into the model.

First, a summary of what we have so far:

- Data:

  $$X = \begin{pmatrix} x_1 \\ x_2 \\ \dots \\ x_p \end{pmatrix} = \begin{pmatrix} H \\ V \end{pmatrix}, \quad H = \begin{pmatrix} h_1 \\ h_2 \\ \dots \\ h_m \end{pmatrix}, \quad V = \begin{pmatrix} v_1 \\ v_2 \\ \dots \\ v_n \end{pmatrix}, \quad p=m+n$$

- Graph: edges exist only between $h$ and $v$ nodes, with none inside either group.

- Formulas:

  $$\begin{cases} P(V, H) = \frac{1}{Z} \exp{\lbrace - E(V, H) \rbrace} \\ E(V, H) = - {(\sum_{i=1}^m \sum_{j=1}^n {h_i w_{ij} v_j} + \sum_{j=1}^n {\alpha_j v_j} + \sum_{i=1}^m {\beta_i h_i})} \end{cases}$$

- Assumption: each $h_i$ is a 0/1 (binary) variable.

We now solve for $P(H|V)$; $P(V|H)$ follows in the same way:

- By the conditional independence of the RBM (connections between $h$ and $v$, none within $h$ or within $v$), we have $h_i \perp h_j \mid V$ for $i \neq j$. Writing $h_{-l}$ for all hidden units except $h_l$ and applying Bayes' theorem gives:

  $$\begin{aligned} P(H|V) &= \prod_{l=1}^m P(h_l|V) = \prod_{l=1}^m P(h_l|h_{-l}, V) = \prod_{l=1}^m \frac{P(h_l, h_{-l}, V)}{P(h_{-l}, V)} \\ &= \prod_{l=1}^m \frac{P(h_l, h_{-l}, V)}{\sum_{h_l} P(h_l, h_{-l}, V)} = \prod_{l=1}^m \frac{P(h_l, h_{-l}, V)}{P(h_l = 0, h_{-l}, V) + P(h_l = 1, h_{-l}, V)} \end{aligned}$$

- Here $h_l$ takes a given value, and both the numerator and the denominator are evaluated with $P(V, H) = \frac{1}{Z} \exp{\lbrace - E(V, H) \rbrace}$. We therefore rearrange $E(V, H)$, collecting the terms that contain $h_l$ into $h_l H_l(v)$ and the terms that do not into ${\bar H}_l(h_{-l}, v)$:

  $$\begin{aligned} E(V, H) &= - {(\sum_{i=1}^m \sum_{j=1}^n {h_i w_{ij} v_j} + \sum_{j=1}^n {\alpha_j v_j} + \sum_{i=1}^m {\beta_i h_i})} \\ &= - {(\sum_{i=1, i \neq l}^m \sum_{j=1}^n {h_i w_{ij} v_j} + \underline{h_l \sum_{j=1}^n {w_{lj} v_j}} + \sum_{j=1}^n {\alpha_j v_j} + \sum_{i=1, i \neq l}^m {\beta_i h_i} + \underline{\beta_l h_l})} \end{aligned}$$

- After this rearrangement, only the underlined terms depend on $h_l$; everything else is independent of it. The formula can therefore be organized as:

  $$\begin{cases} E(V, H) = - {\big( {h_l H_l(v)} + {{\bar H}_l(h_{-l}, v)} \big)} \\ H_l(v) = \sum_{j=1}^n {w_{lj} v_j} + \beta_l \\ {{\bar H}_l(h_{-l}, v)} = \sum_{i=1, i \neq l}^m \sum_{j=1}^n {h_i w_{ij} v_j} + \sum_{j=1}^n {\alpha_j v_j} + \sum_{i=1, i \neq l}^m {\beta_i h_i} \end{cases}$$

- The original goal is $P(H|V)$. Since it factorizes over the hidden units, it is enough to compute $P(h_l|V)$. Taking the case $P(h_l = 1|V)$, the results so far reduce to:

  $$\begin{cases} P(h_l = 1|V) = \frac{P(h_l = 1, h_{-l}, V)}{P(h_l = 0, h_{-l}, V) + P(h_l = 1, h_{-l}, V)} \\ E(V, H) = - {\big( {h_l H_l(v)} + {{\bar H}_l(h_{-l}, v)} \big)} \end{cases}$$

- Substituting and simplifying finally gives:

  $$\begin{aligned} P(h_l = 1|V) &= \frac{P(h_l = 1, h_{-l}, V)}{P(h_l = 0, h_{-l}, V) + P(h_l = 1, h_{-l}, V)} \\ &= \frac{\frac{1}{Z} \exp{\lbrace {1 \cdot H_l(v)} + {{\bar H}_l(h_{-l}, v)} \rbrace}}{\frac{1}{Z} \exp{\lbrace {0 \cdot H_l(v)} + {{\bar H}_l(h_{-l}, v)} \rbrace} + \frac{1}{Z} \exp{\lbrace {1 \cdot H_l(v)} + {{\bar H}_l(h_{-l}, v)} \rbrace}} \\ &= \frac{\exp{\lbrace {H_l(v)} + {{\bar H}_l(h_{-l}, v)} \rbrace}}{\exp{\lbrace {{\bar H}_l(h_{-l}, v)} \rbrace} + \exp{\lbrace {H_l(v)} + {{\bar H}_l(h_{-l}, v)} \rbrace}} \\ &= \frac{1}{1 + \exp{\lbrace - {H_l(v)} \rbrace}} = \mathrm{sigmoid}(H_l(v)) = \mathrm{sigmoid}\Big(\sum_{j=1}^n {w_{lj} v_j} + \beta_l\Big) \end{aligned}$$
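The sigmoid result is easy to sanity-check on a small model. The sketch below computes $P(h_l = 1|V)$ in closed form and compares it with a brute-force computation that enumerates every hidden configuration. Treating $v$ as binary, the random parameters, and the function names are assumptions made for this illustration.

```python
import itertools
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def p_h_given_v(v, W, beta):
    """Vector of P(h_l = 1 | V) for l = 1..m, i.e. sigmoid(W v + beta)."""
    return sigmoid(W @ v + beta)

def p_h_given_v_bruteforce(v, W, alpha, beta):
    """Same quantity obtained by enumerating all 2**m hidden configurations."""
    m = W.shape[0]
    hs = np.array(list(itertools.product([0, 1], repeat=m)))
    # Unnormalized P(V, H) for each row h of hs; Z cancels in the conditional.
    weights = np.exp(hs @ W @ v + alpha @ v + hs @ beta)
    posterior = weights / weights.sum()    # P(H | V) over all configurations
    return posterior @ hs                  # marginal P(h_l = 1 | V) for each l

rng = np.random.default_rng(1)
m, n = 3, 4                                # arbitrary small sizes
W = rng.normal(size=(m, n))
alpha = rng.normal(size=n)
beta = rng.normal(size=m)
v = rng.integers(0, 2, size=n)             # one binary visible vector

closed_form = p_h_given_v(v, W, beta)
brute_force = p_h_given_v_bruteforce(v, W, alpha, beta)
```

The two vectors should match up to floating-point error. Note that `alpha` does not appear in the closed form: the $\alpha^T V$ term belongs to ${\bar H}_l$ and cancels between numerator and denominator.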

21.4 The Marginal Problem

Computing the marginal distribution means computing $P(V)$ ($P(H)$ is also a marginal, but the marginal over hidden states is of little interest).

As before, we first collect the setup:

- Data:

  $$H = \begin{pmatrix} h_1 \\ h_2 \\ \dots \\ h_m \end{pmatrix}, \; h_i \in {\lbrace 0, 1 \rbrace}, \quad V = \begin{pmatrix} v_1 \\ v_2 \\ \dots \\ v_n \end{pmatrix}, \quad p=m+n, \quad W = {[w_{ij}]}_{m \times n} = \begin{pmatrix} w_1 \\ w_2 \\ \dots \\ w_m \end{pmatrix}$$

  where $w_i$ denotes the $i$-th row of $W$.

- Formulas:

  $$\begin{cases} P(V, H) = \frac{1}{Z} \exp{\lbrace - E(V, H) \rbrace} \\ E(V, H) = - {({H^T W V} + {\alpha^T V} + {\beta^T H})} \end{cases}$$

From the formulas we know $P(V, H)$, and we want $P(V)$. Summing over $H$ and substituting gives:

$$\begin{aligned} P(V) &= \sum_{h} P(H, V) \\ &= \frac{1}{Z} \sum_h \exp{\lbrace {H^T W V} + {\alpha^T V} + {\beta^T H} \rbrace} \\ &= \frac{1}{Z} \exp{\lbrace {\alpha^T V} \rbrace} \cdot \sum_{h_1} \dots \sum_{h_m} \exp{\lbrace {H^T W V} + {\beta^T H} \rbrace} \\ &= \frac{1}{Z} \exp{\lbrace {\alpha^T V} \rbrace} \cdot \sum_{h_1} \dots \sum_{h_m} \exp{\lbrace \sum_{i=1}^m ({h_i w_i V} + {\beta_i h_i}) \rbrace} \\ &= \frac{1}{Z} \exp{\lbrace {\alpha^T V} \rbrace} \cdot \sum_{h_1} \exp{\lbrace {h_1 w_1 V} + {\beta_1 h_1} \rbrace} \dots \sum_{h_m} \exp{\lbrace {h_m w_m V} + {\beta_m h_m} \rbrace} \\ &= \frac{1}{Z} \exp{\lbrace {\alpha^T V} \rbrace} \cdot (1 + \exp{\lbrace {w_1 V} + {\beta_1} \rbrace}) \dots (1 + \exp{\lbrace {w_m V} + {\beta_m} \rbrace}) \\ &= \frac{1}{Z} \exp{\lbrace {\alpha^T V} \rbrace} \cdot \exp{\lbrace \log {(1 + \exp{\lbrace {w_1 V} + {\beta_1} \rbrace})} \rbrace} \dots \exp{\lbrace \log {(1 + \exp{\lbrace {w_m V} + {\beta_m} \rbrace})} \rbrace} \\ &= \frac{1}{Z} \exp{\lbrace {\alpha^T V} + \underbrace{\sum_{i=1}^m \log {(1 + \exp{\lbrace {w_i V} + {\beta_i} \rbrace})}}_{\text{softplus: } \log(1+e^x)} \rbrace} \\ &= \frac{1}{Z} \exp{\lbrace {\alpha^T V} + {\sum_{i=1}^m \mathrm{softplus}({w_i V} + {\beta_i})} \rbrace} \end{aligned}$$
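The softplus form of the marginal can be verified the same way. The sketch below computes the unnormalized log-marginal $\alpha^T V + \sum_i \mathrm{softplus}(w_i V + \beta_i)$ and compares it with an explicit sum of $\exp\lbrace -E(V, H) \rbrace$ over all hidden states. It then normalizes over all visible vectors, which assumes $v$ is binary; that assumption, the random parameters, and the function names are illustrative and not taken from the derivation.

```python
import itertools
import numpy as np

def softplus(x):
    # log(1 + e^x), computed stably as logaddexp(0, x)
    return np.logaddexp(0.0, x)

def log_unnormalized_p_v(v, W, alpha, beta):
    """log(Z * P(V)) = alpha^T V + sum_i softplus(w_i V + beta_i)."""
    return alpha @ v + softplus(W @ v + beta).sum()

def unnormalized_p_v_bruteforce(v, W, alpha, beta):
    """Z * P(V), summing exp{-E(V, H)} over all 2**m hidden states."""
    m = W.shape[0]
    hs = np.array(list(itertools.product([0, 1], repeat=m)))
    return np.exp(hs @ W @ v + alpha @ v + hs @ beta).sum()

rng = np.random.default_rng(2)
m, n = 3, 4                                # arbitrary small sizes
W = rng.normal(size=(m, n))
alpha = rng.normal(size=n)
beta = rng.normal(size=m)

# Normalize over every binary visible vector (binary v is an assumption here).
vs = np.array(list(itertools.product([0, 1], repeat=n)))
log_p_tilde = np.array([log_unnormalized_p_v(v, W, alpha, beta) for v in vs])
Z = np.exp(log_p_tilde).sum()
p_v = np.exp(log_p_tilde) / Z
```

The closed form replaces a sum over $2^m$ hidden states with $m$ softplus evaluations. The partition function $Z$ still requires a sum over all visible configurations, which is why it is only computed exactly here for a toy-sized model.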