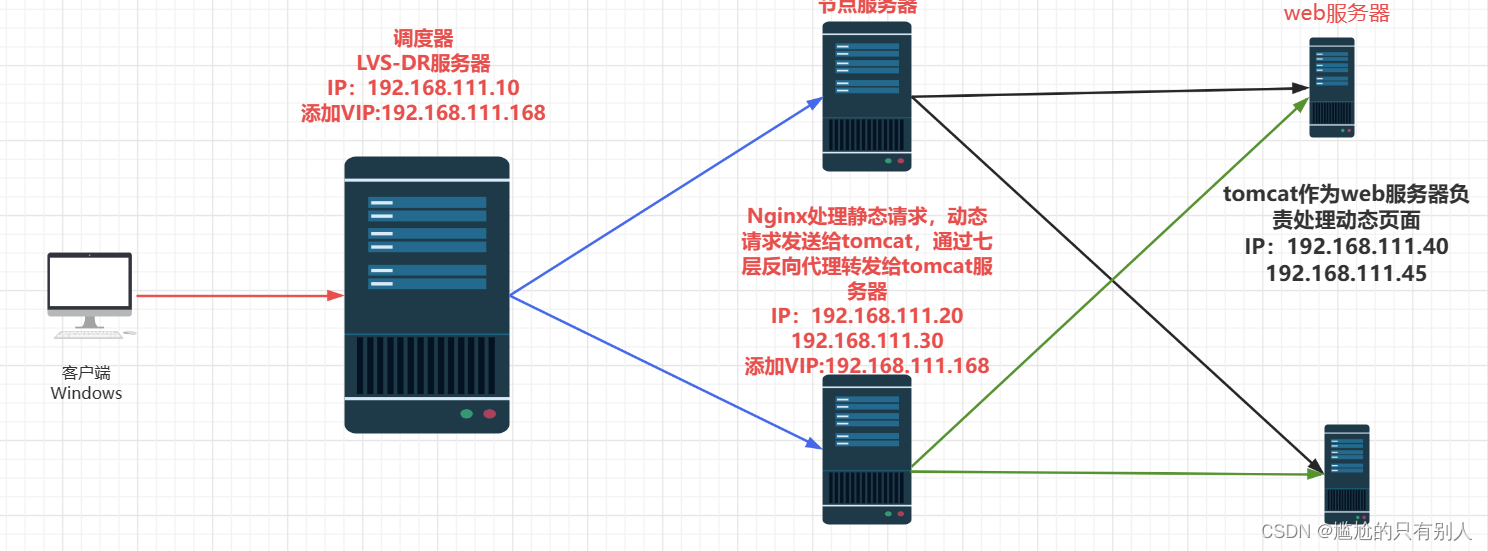

LVS-DR Cluster

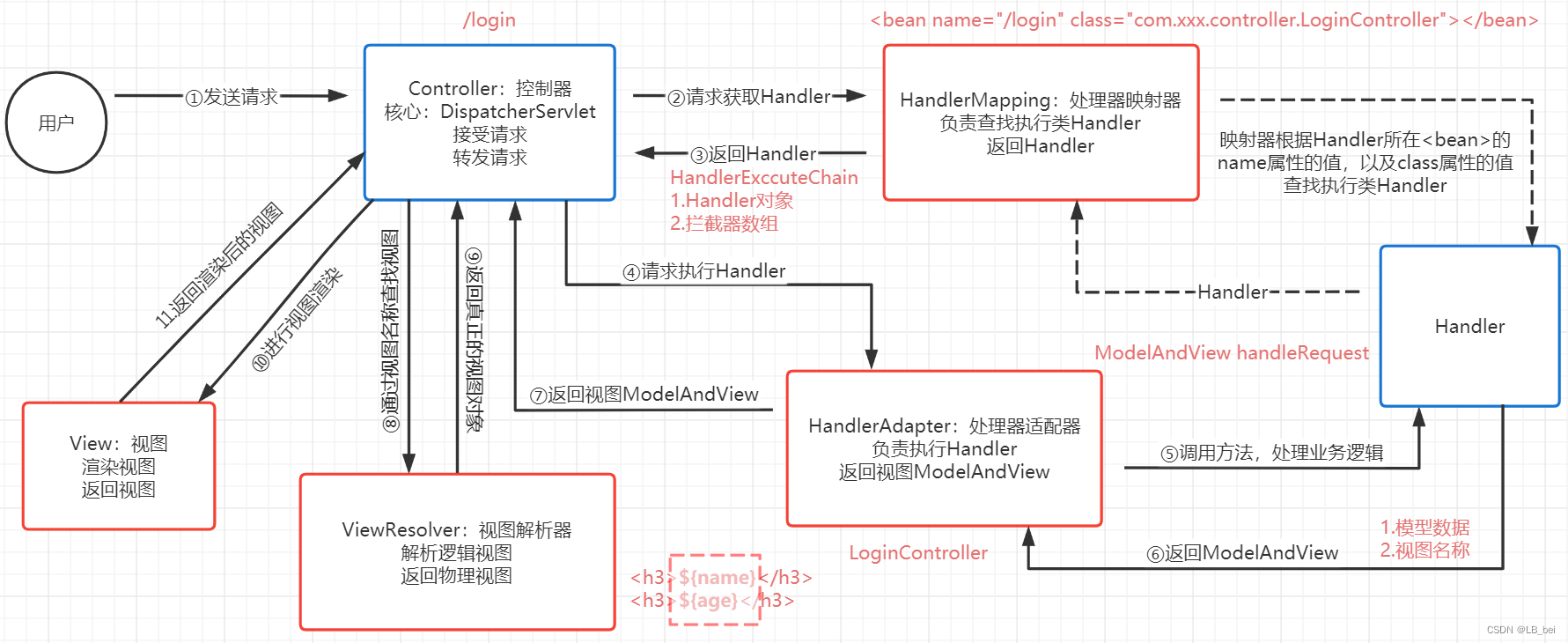

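I. How LVS-DR Works

1. Packet flow

Packet flow analysis:

(1) The client sends a request to the Director Server (the load balancer). The request packet (source IP is the CIP, destination IP is the VIP) reaches kernel space on the Director Server.

(2) The Director Server and the Real Servers are on the same network, so traffic between them is carried at the data link layer (layer 2).

(3) The kernel sees that the packet's destination IP is the local VIP. IPVS (IP Virtual Server) then checks whether the requested service is a cluster service. If it is, IPVS re-encapsulates the frame: the source MAC address becomes the Director Server's MAC address and the destination MAC address becomes the selected Real Server's MAC address. The source and destination IP addresses are left unchanged. The frame is then sent to the Real Server.

(4) The request frame that reaches the Real Server carries the Real Server's own MAC address as its destination, so the Real Server accepts it. The Real Server builds the response packet (source IP is the VIP, destination IP is the CIP), passes it from the lo interface to the physical NIC, and sends it out.

(5) The Real Server delivers the response directly to the client.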
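One way to observe steps (3) and (4) is to capture the request frames on a Real Server with the Ethernet header printed. The sketch below assumes tcpdump is installed and is run as root. The function name `watch_vip_frames` is made up for this example, and the defaults are the interface name and VIP used later in this walkthrough.

```shell
#!/usr/bin/env bash
# Capture HTTP traffic to or from the VIP and print the link-level header (-e)
# without resolving names or ports (-nn).
# $1: interface to listen on (default: ens33)
# $2: VIP (default: 192.168.111.168)
watch_vip_frames() {
    local iface=${1:-ens33} vip=${2:-192.168.111.168}
    tcpdump -e -nn -i "$iface" host "$vip" and tcp port 80
}
```

In DR mode the captured request frames should show the Director Server's MAC as source and the Real Server's MAC as destination, while the IP header still reads CIP to VIP.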

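2. Characteristics of DR mode

(1) The Director Server and the Real Servers must be on the same physical network.

(2) Real Servers may use private or public addresses. With public addresses, the RIP can be reached directly from the Internet.

(3) The Director Server is the entry point to the cluster but does not act as a gateway.

(4) All request packets pass through the Director Server, but response packets must not pass through it.

(5) The Real Servers' gateway must not point to the Director Server's IP, so that packets sent by a Real Server never pass through the Director Server.

(6) The VIP is configured on the lo interface of each Real Server.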

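3. Problems in LVS-DR mode

3.1 ARP confusion on the node servers

3.2 MAC address confusion

Both problems arise because the Director Server and every Real Server hold the same VIP. The following kernel parameters on the Real Servers keep them from answering or announcing ARP for the VIP:

net.ipv4.conf.lo.arp_ignore = 1 # reply to an ARP request only if the target IP is configured on the interface that received the request

net.ipv4.conf.lo.arp_announce = 2 # ignore the source address of the IP packet when building an ARP request and use the best local address of the outgoing interface instead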

net.ipv4.conf.all.arp_ignore = 1

net.ipv4.conf.all.arp_announce = 2
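A minimal sketch of applying these four settings on a Real Server follows. The helper name `write_arp_sysctl` and the drop-in file name in the usage note are assumptions for illustration; the walkthrough itself does not say which file the settings are kept in.

```shell
#!/usr/bin/env bash
# Write the Real Server ARP settings to the file given as $1.
write_arp_sysctl() {
    local target=$1
    cat > "$target" <<'EOF'
net.ipv4.conf.lo.arp_ignore = 1
net.ipv4.conf.lo.arp_announce = 2
net.ipv4.conf.all.arp_ignore = 1
net.ipv4.conf.all.arp_announce = 2
EOF
}
```

For example, `write_arp_sysctl /etc/sysctl.d/90-lvs-dr.conf` followed by `sysctl -p /etc/sysctl.d/90-lvs-dr.conf` (both as root) would write and load the settings.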

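4. Kernel parameters on the Director Server

# The LVS load scheduler and the nodes share the VIP address, so ICMP redirects must be turned off and the Director Server must not act as a router.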

vim /etc/sysctl.conf

net.ipv4.ip_forward = 0

net.ipv4.conf.all.send_redirects = 0

net.ipv4.conf.default.send_redirects = 0

net.ipv4.conf.ens33.send_redirects = 0

sysctl -p
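After `sysctl -p`, it can be worth confirming that no interface still has redirects enabled. The check below is a sketch: `check_redirects_off` is a hypothetical helper, and its optional argument (default `/proc/sys`) exists only so the function can be pointed at a test directory.

```shell
#!/usr/bin/env bash
# Report every interface whose send_redirects flag is not 0.
# Returns 0 when all flags are off, 1 otherwise.
check_redirects_off() {
    local root=${1:-/proc/sys} f rc=0
    for f in "$root"/net/ipv4/conf/*/send_redirects; do
        [ -e "$f" ] || continue
        if [ "$(cat "$f")" != "0" ]; then
            echo "still enabled: $f"
            rc=1
        fi
    done
    return "$rc"
}
```

Calling `check_redirects_off` with no argument reads the live kernel values.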

II. LVS-DR Deployment

2.1 Lab deployment analysis

2.2 Lab procedure

2.2.1 Configure the Tomcat servers

Set up the JDK environment

[root@localhost ~]# rpm -ivh jdk-8u201-linux-x64.rpm

warning: jdk-8u201-linux-x64.rpm: Header V3 RSA/SHA256 Signature, key ID ec551f03: NOKEY

Preparing... ################################# [100%]

Updating / installing...

1:jdk1.8-2000:1.8.0_201-fcs ################################# [100%]

Unpacking JAR files...

tools.jar...

plugin.jar...

javaws.jar...

deploy.jar...

rt.jar...

jsse.jar...

charsets.jar...

localedata.jar...

[root@localhost ~]# vim /etc/profile.d/java.sh

export JAVA_HOME=/usr/java/jdk1.8.0_201-amd64

export JRE_HOME=$JAVA_HOME/jre

export CLASSPATH=.:$JAVA_HOME/lib:$JRE_HOME/lib

export PATH=$JAVA_HOME/bin:$JRE_HOME/bin:$PATH

[root@localhost ~]# source /etc/profile.d/java.sh

[root@localhost ~]# java -version

java version "1.8.0_201"

Java(TM) SE Runtime Environment (build 1.8.0_201-b09)

Java HotSpot(TM) 64-Bit Server VM (build 25.201-b09, mixed mode)

Install Tomcat and manage it with systemctl

[root@localhost ~]# cd /opt

[root@localhost opt]# tar -xf apache-tomcat-9.0.16.tar.gz

[root@localhost opt]# mv apache-tomcat-9.0.16 /usr/local/tomcat

[root@localhost opt]# vim /usr/lib/systemd/system/tomcat.service

[Unit]

Description=tomcat server

Wants=network-online.target

After=network-online.target

[Service]

Type=forking

Environment="JAVA_HOME=/usr/java/jdk1.8.0_201-amd64"

Environment="PATH=/usr/java/jdk1.8.0_201-amd64/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/root/bin"

Environment="CLASSPATH=.:/usr/java/jdk1.8.0_201-amd64/lib/dt.jar:/usr/java/jdk1.8.0_201-amd64/lib/tools.jar"

ExecStart=/usr/local/tomcat/bin/startup.sh

ExecStop=/usr/local/tomcat/bin/shutdown.sh

Restart=on-failure

[Install]

WantedBy=multi-user.target
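systemd does not perform shell-style variable expansion inside `Environment=` values, so any path there has to be written out in full. The following sketch lists `Environment=` lines that still contain a `$` reference; `find_env_dollar_refs` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Print (with line numbers) the Environment= lines of a unit file that
# contain a literal "$". Exit status 0 means at least one was found.
find_env_dollar_refs() {
    grep -n '^Environment=.*\$' "$1"
}
```

For example, run `find_env_dollar_refs /usr/lib/systemd/system/tomcat.service`. Once the unit file is in place, `systemctl daemon-reload`, `systemctl enable tomcat.service` and `systemctl start tomcat.service` register and start the service.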

Prepare a dynamic Tomcat page

[root@localhost opt]# cd /usr/local/tomcat/webapps/

[root@localhost webapps]# mkdir test

[root@localhost webapps]# cd test/

[root@localhost test]# ls

[root@localhost test]# vim index.jsp

[root@localhost test]# systemctl restart tomcat.service

[root@localhost test]# netstat -lntp | grep java

tcp6 0 0 :::8080 :::* LISTEN 13074/java

tcp6 0 0 127.0.0.1:8005 :::* LISTEN 13074/java

tcp6 0 0 :::8009 :::* LISTEN 13074/java

[root@localhost test]# systemctl stop firewalld

[root@localhost test]# setenforce 0

setenforce: SELinux is disabled

[root@localhost test]# cat index.jsp

<%@ page language="java" import="java.util.*" pageEncoding="UTF-8"%>

<html>

<head>

<title>JSP test2 page</title>

</head>

<body>

<% out.println("动态页面 2 192.168.111.45,this is second tomcat web ");%>

</body>

</html>

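Configure the other Tomcat server the same way.

2.2.2 Configure the node servers

nginx layer-7 proxy

1. Turn off the firewall

2. Install nginx (the transcript below installs it with yum)

3. Add an upstream{} block above the server block and list the backend servers

4. Set the location match rule and the forwarding protocol

5. Restart the service

Add the route rule

Configure the VIP on a loopback alias (lo:0) on the node server and add the host route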

[root@localhost test]# cd /etc/sysconfig/network-scripts/

[root@localhost network-scripts]# ls

ifcfg-ens33 ifdown-ippp ifdown-sit ifup-bnep ifup-plip ifup-Team network-functions-ipv6

ifcfg-lo ifdown-ipv6 ifdown-Team ifup-eth ifup-plusb ifup-TeamPort

ifdown ifdown-isdn ifdown-TeamPort ifup-ib ifup-post ifup-tunnel

ifdown-bnep ifdown-post ifdown-tunnel ifup-ippp ifup-ppp ifup-wireless

ifdown-eth ifdown-ppp ifup ifup-ipv6 ifup-routes init.ipv6-global

ifdown-ib ifdown-routes ifup-aliases ifup-isdn ifup-sit network-functions

[root@localhost network-scripts]# cp ifcfg-lo ifcfg-lo:0

[root@localhost network-scripts]# vim ifcfg-lo:0

[root@localhost network-scripts]# ifup lo:0

[root@localhost network-scripts]# ifconfig lo:0

lo:0: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 192.168.111.168 netmask 255.255.255.255

loop txqueuelen 1000 (Local Loopback)

[root@localhost network-scripts]# route add -host 192.168.111.168 dev lo:0
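The transcript does not show what was written into `ifcfg-lo:0`. Judging from the `ifconfig lo:0` output, the file presumably looks like the sketch below. The helper name `write_lo_alias` and the exact set of keys are assumptions.

```shell
#!/usr/bin/env bash
# Write a loopback alias definition that carries the VIP with a /32 mask.
# $1: target file, $2: VIP
write_lo_alias() {
    local target=$1 vip=$2
    cat > "$target" <<EOF
DEVICE=lo:0
IPADDR=$vip
NETMASK=255.255.255.255
ONBOOT=yes
EOF
}
```

A call such as `write_lo_alias /etc/sysconfig/network-scripts/ifcfg-lo:0 192.168.111.168` would produce the file. The 255.255.255.255 mask keeps the VIP a host address on lo, so the Real Server does not treat the whole subnet as local to the loopback.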

Modify the kernel parameters on the node server

[root@localhost network-scripts]# vim /etc/rc.local

[root@localhost network-scripts]# chmod +x /etc/rc.d/rc.local

[root@localhost network-scripts]# sysctl -p

net.ipv4.conf.lo.arp_ignore = 1

net.ipv4.conf.lo.arp_announce = 2

net.ipv4.conf.all.arp_ignore = 1

net.ipv4.conf.all.arp_announce = 2
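The edit made to `/etc/rc.local` is not shown. A plausible reason for it is to re-add the host route at boot, since a route added with `route add` does not survive a restart. The sketch below makes that assumption explicit; `persist_vip_route` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Append the VIP host route to an rc.local style file, once.
# $1: file to edit, $2: VIP
persist_vip_route() {
    local rc_file=$1 vip=$2
    local line="/sbin/route add -host $vip dev lo:0"
    # Append only if the exact line is not already present.
    grep -qxF -- "$line" "$rc_file" 2>/dev/null || echo "$line" >> "$rc_file"
}
```

For example, `persist_vip_route /etc/rc.d/rc.local 192.168.111.168`. The `chmod +x` in the transcript is still needed, because the file must be executable to run at boot.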

Install nginx for static/dynamic separation and load balancing

Configure the other nginx server the same way

[root@localhost opt]# cd /etc/yum.repos.d/

[root@localhost yum.repos.d]# ls

local.repo local.sh repo.bak

[root@localhost yum.repos.d]# vim nginx.repo
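The contents of `nginx.repo` are not shown. The yum output refers to a repository called `nginx-stable`, which matches the stanza nginx.org publishes for RHEL/CentOS, so the file was probably close to the sketch below. Treat it as an assumption; `write_nginx_repo` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Write an nginx-stable repository definition to the file given as $1.
# The heredoc is quoted so $releasever and $basearch stay literal for yum.
write_nginx_repo() {
    cat > "$1" <<'EOF'
[nginx-stable]
name=nginx stable repo
baseurl=http://nginx.org/packages/centos/$releasever/$basearch/
gpgcheck=1
enabled=1
gpgkey=https://nginx.org/keys/nginx_signing.key
module_hotfixes=true
EOF
}
```

For example, `write_nginx_repo /etc/yum.repos.d/nginx.repo`.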

[root@localhost yum.repos.d]# yum install -y nginx

Loaded plugins: fastestmirror, langpacks

Loading mirror speeds from cached hostfile

nginx-stable | 2.9 kB 00:00:00

nginx-stable/x86_64/primary_db | 85 kB 00:00:00

Resolving Dependencies

--> Running transaction check

---> Package nginx.x86_64 1:1.24.0-1.el7.ngx will be installed

--> Finished Dependency Resolution

Dependencies Resolved

=================================================================================================================

Package Arch Version Repository Size

=================================================================================================================

Installing:

nginx x86_64 1:1.24.0-1.el7.ngx nginx-stable 804 k

Transaction Summary

=================================================================================================================

Install 1 Package

Total download size: 804 k

Installed size: 2.8 M

Downloading packages:

nginx-1.24.0-1.el7.ngx.x86_64.rpm | 804 kB 00:00:01

Running transaction check

Running transaction test

Transaction test succeeded

Running transaction

Installing : 1:nginx-1.24.0-1.el7.ngx.x86_64 1/1

----------------------------------------------------------------------

Thanks for using nginx!

Please find the official documentation for nginx here:

* https://nginx.org/en/docs/

Please subscribe to nginx-announce mailing list to get

the most important news about nginx:

* https://nginx.org/en/support.html

Commercial subscriptions for nginx are available on:

* https://nginx.com/products/

----------------------------------------------------------------------

Verifying : 1:nginx-1.24.0-1.el7.ngx.x86_64 1/1

Installed:

nginx.x86_64 1:1.24.0-1.el7.ngx

Complete!

[root@localhost yum.repos.d]# cd /etc/nginx/

[root@localhost nginx]# cd conf.d/

[root@localhost conf.d]# vim default.conf

Add an upstream block above the server block in the configuration file

upstream backend_server {

server 192.168.111.45:8080 weight=1;

server 192.168.111.40:8080 weight=1;

}

location rule that matches dynamic pages

location ~* .*\.jsp$ {

proxy_pass http://backend_server;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

}
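To show where the two fragments sit relative to each other, here is a sketch that writes a complete `default.conf`. The `server` block around the JSP rule (listen 80, server_name localhost, static root under /usr/share/nginx/html) is an assumption modelled on the packaged default, and `write_default_conf` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Write a default.conf with the upstream pool above the server block.
# The heredoc is quoted so nginx variables such as $host stay literal.
write_default_conf() {
    cat > "$1" <<'EOF'
upstream backend_server {
    server 192.168.111.45:8080 weight=1;
    server 192.168.111.40:8080 weight=1;
}

server {
    listen       80;
    server_name  localhost;

    # Static content is served by nginx itself.
    location / {
        root   /usr/share/nginx/html;
        index  index.html index.htm;
    }

    # Requests ending in .jsp are proxied to the Tomcat pool.
    location ~* .*\.jsp$ {
        proxy_pass http://backend_server;
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    }
}
EOF
}
```

Writing to a scratch path first, for example `write_default_conf /tmp/default.conf`, allows a review before the file is copied to `/etc/nginx/conf.d/` and checked with `nginx -t`.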

[root@localhost conf.d]# nginx -t

nginx: the configuration file /usr/local/nginx/conf/nginx.conf syntax is ok

nginx: configuration file /usr/local/nginx/conf/nginx.conf test is successful

[root@localhost conf.d]# systemctl restart nginx

[root@localhost conf.d]# systemctl stop firewalld

[root@localhost conf.d]# setenforce 0

[root@localhost conf.d]# cd /usr/share/nginx/html/

[root@localhost html]# mkdir test

[root@localhost html]# cd test/

[root@localhost test]# echo '<h1>this is 192.168.30 nginx static web</h1>' > index.html

[root@localhost test]# ls

index.html

[root@localhost test]# systemctl restart nginx

[root@localhost test]#

[root@localhost test]# systemctl stop firewalld

[root@localhost test]# systemctl disable firewalld

[root@localhost test]# setenforce 0

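2.2.3 Load scheduler (Director Server)

Add a virtual interface carrying the VIP to the physical NIC

Modify the kernel parameters

Configure the load distribution policy

Add a virtual interface carrying the VIP to the physical NIC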

[root@localhost ~]# cd /etc/sysconfig/network-scripts/

[root@localhost network-scripts]# cp ifcfg-ens33 ifcfg-ens33:0

[root@localhost network-scripts]# vim ifcfg-ens33:0

DEVICE=ens33:0

ONBOOT=yes

IPADDR=192.168.111.168

NETMASK=255.255.255.255

[root@localhost network-scripts]# systemctl restart network

[root@localhost network-scripts]# ifconfig

ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.111.10 netmask 255.255.255.0 broadcast 192.168.111.255

inet6 fe80::76bd:2b0e:debf:af01 prefixlen 64 scopeid 0x20<link>

inet6 fe80::7ee0:eac8:3f9d:ccd8 prefixlen 64 scopeid 0x20<link>

inet6 fe80::b565:5b84:d85b:3c3 prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:10:ff:ef txqueuelen 1000 (Ethernet)

RX packets 6604 bytes 668640 (652.9 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 1155 bytes 181323 (177.0 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens33:0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.111.168 netmask 255.255.255.255 broadcast 192.168.111.168

ether 00:0c:29:10:ff:ef txqueuelen 1000 (Ethernet)

Modify the kernel parameters

[root@localhost network-scripts]# vim /etc/sysctl.conf

net.ipv4.ip_forward = 0

net.ipv4.conf.all.send_redirects = 0

net.ipv4.conf.default.send_redirects = 0

net.ipv4.conf.ens33.send_redirects = 0

[root@localhost network-scripts]# sysctl -p

net.ipv4.ip_forward = 0

net.ipv4.conf.all.send_redirects = 0

net.ipv4.conf.default.send_redirects = 0

net.ipv4.conf.ens33.send_redirects = 0

Configure the IPVS distribution policy

Install ipvsadm with yum

[root@localhost network-scripts]# systemctl stop firewalld.service

[root@localhost network-scripts]# systemctl disable firewalld.service

[root@localhost network-scripts]# setenforce 0

[root@localhost network-scripts]# ipvsadm -C

[root@localhost network-scripts]# ipvsadm -A -t 192.168.111.168:80 -s rr

[root@localhost network-scripts]# ipvsadm -a -t 192.168.111.168:80 -r 192.168.111.20:80 -g

[root@localhost network-scripts]# ipvsadm -a -t 192.168.111.168:80 -r 192.168.111.30:80 -g

[root@localhost network-scripts]# ipvsadm

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP localhost.localdomain:http rr

-> 192.168.111.20:http Route 1 0 0

-> 192.168.111.30:http Route 1 0 0

[root@localhost network-scripts]# systemctl restart ipvsadm.service
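The rule set can also be generated as text in the format that `ipvsadm-save -n` prints and `ipvsadm-restore` reads. The sketch below does that for one virtual service in DR mode (`-g`). The function name `gen_dr_rules` is made up for this example, and it assumes every Real Server listens on the same port as the VIP.

```shell
#!/usr/bin/env bash
# Print ipvsadm-restore compatible rules for one DR-mode virtual service.
# $1: VIP, $2: port, $3: scheduler, remaining arguments: Real Server IPs
gen_dr_rules() {
    local vip=$1 port=$2 sched=$3
    shift 3
    # Define the virtual service first, then attach each Real Server to it.
    echo "-A -t $vip:$port -s $sched"
    local rs
    for rs in "$@"; do
        echo "-a -t $vip:$port -r $rs:$port -g -w 1"
    done
}
```

For example, `gen_dr_rules 192.168.111.168 80 rr 192.168.111.20 192.168.111.30 | ipvsadm-restore` loads the rules, and `ipvsadm -Ln` lists them numerically. On CentOS 7 the packaged `ipvsadm.service` is understood to restore its rules from `/etc/sysconfig/ipvsadm` on start, so redirecting the same output into that file before restarting the service should keep the rules across restarts. Check the unit file on your system to confirm.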

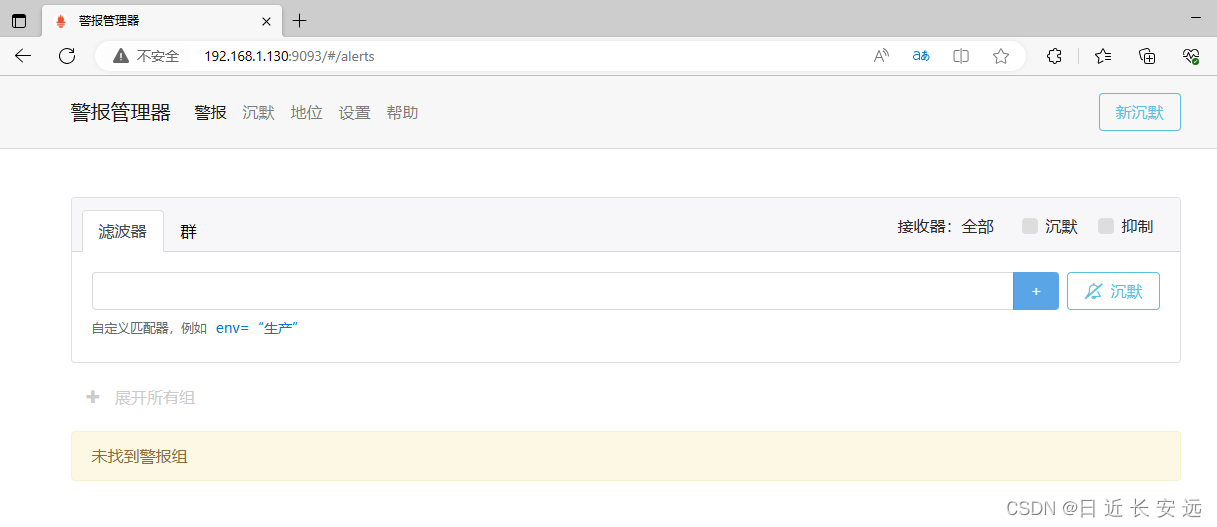

2.3 Results